by community-syndication | Sep 20, 2012 | BizTalk Community Blogs via Syndication

For debugging, it is necessary to attach Visual Studio to the BizTalk host instance process. However, if you have multiple host instances, you can’t tell which one is which. I have found a PS script which gives you the process IDs of multiple host instances. TASKLIST /FI "USERNAME ne NT AUTHORITY\SYSTEM" /FI "IMAGENAME eq BTSNTSvc.exe" […]

Blog Post by: DipeshA

by community-syndication | Sep 20, 2012 | BizTalk Community Blogs via Syndication

Published by: Bill Chesnut

Recently, at our Mexia Code Camp in the two days before TechEd Australia on the Gold Coast, I had a chance to look at the recently released Service Bus 1.0 Beta for Windows Server. Having spent a fair bit of time working with the Azure Service Bus, I was interested in how the on-premises version compares, and to my relief it is a very close implementation.

The differences I found were around authentication and addressing. The Windows Server version ships with its own STS for authentication using your Windows credentials, so it has no dependency on the Azure ACS. The addressing of the queues differs because you are talking to a Windows server in your own infrastructure rather than to Azure: Azure uses addresses of the form .servicebus.windows.net, whereas on-premises you end up with .<your domain (options)/. These differences are supported by a modified Microsoft.ServiceBus.dll, version 1.6, that is part of the beta install.

Now on to a performance comparison. I typically don’t put much stock in performance testing of beta versions, but I was interested in a rough estimate of the performance. One of the first issues I ran into was that my previous Azure Service Bus performance testing was done with BizTalk, but the WCF netMessagingTransport, and specifically the transportClientEndpointBehavior, does not support the Windows STS authentication. To overcome this missing authentication support in the beta, I modified the BizTalk Service Bus adapter that I am currently working on to support the on-premises beta. The changes were minimal and really only affected the connection establishment part of the adapter.

So what kind of numbers did I get? My previous testing with Azure Service Bus topics was based on sending 600 messages to a topic with 600 subscriptions, so I replicated the same configuration in my on-premises test. Luckily, the utility code I had written for creating the 600 subscriptions worked with minor changes to the connection code. One of the first things I tested was whether the Azure Service Bus limit of 100 concurrent connections also applies to the Windows Server beta. It does not: there seems to be no limit on the number of connections to an individual queue, topic or subscription. Running the same test as with Azure, I found that Azure took 15 seconds to send the 600 messages to a topic with 600 subscriptions and the on-premises beta took 30 seconds. That is not as bad as it seems, since my on-premises implementation runs on a single virtual machine with SQL and BizTalk on the same machine. It also indicates that latency to the Azure data centre, which most people think is the biggest limiting factor for Azure, is not the limiting factor here; processing the filters for 600 subscriptions on a single topic seems to be more limiting than the connection latency.

Next steps: the overall design of the Windows Server implementation of Service Bus is based on a farm model. My test environment had a single-server farm, so I am planning to create a four-virtual-machine on-premises Service Bus farm to see how spreading the load across multiple virtual machines helps the overall throughput.
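As a rough back-of-the-envelope check on those figures, the sketch below converts the two timings into rates. It assumes that every message is evaluated against every subscription's filter (600 x 600 evaluations per run); that is an assumption made for illustration, not something measured in the test, and the function name is hypothetical.

```python
# Figures taken from the test described above.
MESSAGES = 600
SUBSCRIPTIONS = 600
ELAPSED_SECONDS = {
    "Azure Service Bus": 15,
    "Service Bus for Windows Server beta": 30,
}


def rates(elapsed_seconds):
    """Return (messages per second, filter evaluations per second)."""
    # Assumption: each message is checked against every subscription filter.
    evaluations = MESSAGES * SUBSCRIPTIONS
    return MESSAGES / elapsed_seconds, evaluations / elapsed_seconds


for name, seconds in ELAPSED_SECONDS.items():
    msg_rate, eval_rate = rates(seconds)
    print(f"{name}: {msg_rate:.0f} msg/s, {eval_rate:.0f} filter evaluations/s")
```

Seen this way, the on-premises beta on one shared virtual machine runs at half the rate of Azure, which is the comparison the paragraph above is making.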

I think the on-premises implementation of service bus offers a great addition to our integration toolkit and can fill the gaps when the cloud is not the right solution for your integration solutions.


Tags: Service Bus,Azure,BizTalk

by stephen-w-thomas | Sep 19, 2012 | Stephen's BizTalk and Integration Blog

I have been spending a lot of time the past few weeks working with the Windows Azure Virtual Machines that are currently available as a Preview Feature.

The ability to be up and running with a custom hosted Virtual Machine in a matter of minutes without any extra onsite hardware costs still amazes me, although this concept has been around for a while now. It is even better that I can use the Virtual Machine for a few hours and then throw it away at a net cost to me of only a few cents.

As part of creating and removing over 50 Windows Azure Virtual Machines I ran into two main issues. I wanted to share those issues and the workarounds I have found for them.

Issue 1: Orphaned Windows Azure Virtual Machine Disks. These are listed under the Virtual Machines area of the preview portal, under Disks. These are Disks that say they are attached to a Virtual Machine that has already been deleted. I see this happen in about 1 in 15 Virtual Machines that I create and then delete.

FIX: The best way to deal with orphaned disks is to use PowerShell to delete them.

Install and configure Azure PowerShell according to this article. At a high level, you will need to download Azure PowerShell, create a management certificate, upload the certificate, download your account publishing settings, and install them into Azure PowerShell. It might sound like a lot of work but it takes less than 10 minutes.

Once complete, open PowerShell and run Get-AzureDisk. This will list all the Virtual Machine Disks in your account. It uses your account details from the publishing settings file you imported, and authentication is done via the management certificate.

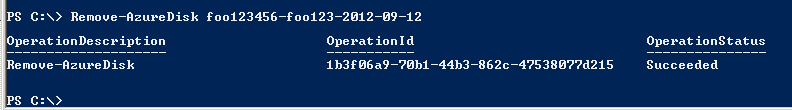

To remove an orphaned disk, run Remove-AzureDisk yourDiskName.

Issue 2: When you try to delete a vhd blob you receive the following error: “A lease conflict occurred with the blob https://StorageName.blob.core.windows.net/vhds/YourVHDName.vhd”.

FIX: This is talked about in the forums, where they list a PowerShell script for taking care of this issue. I was unable to get the PowerShell script to work but was able to get the lease broken using the Azure SDK 1.7.1 (on GitHub).

Once you have a reference to the Azure SDK 1.7.1, it has a method called BreakLease on the CloudBlob class. The code to break the lease in C# is shown below. See the forum post above for more details on VB and how to use the Azure SDK 1.7.1.

// Parse the storage account from the connection details in App.Config

CloudStorageAccount sourceStorageAccount = CloudStorageAccount.Parse(CloudConfigurationManager.GetSetting("SourceStorageConnection"));

// Create the blob client using the Accounts above

CloudBlobClient sourceBlobClient = sourceStorageAccount.CreateCloudBlobClient();

// Retrieve reference to a previously created container

// Rename "vhds" as needed. Can be used to read from any container.

CloudBlobContainer sourceContainer = sourceBlobClient.GetContainerReference("vhds");

// Replace "VHDNAME.vhd" with the name of the blob whose lease you want to break

CloudBlob sourceBlob = sourceContainer.GetBlobReference("VHDNAME.vhd");

// Create Timespan to allow the Lease to remain, in this case 1 second

TimeSpan breakTime = new TimeSpan(0, 0, 1);

// Call BreakLease (Available in 1.7.1)

sourceBlob.BreakLease(breakTime);

Use caution, though: make sure you remove the lease on the right blob, otherwise bad and unexpected things will happen. At the very least, you will need to re-create the Virtual Machine from scratch.

I am working on a simple tool that will help copy, move, and delete VHDs as well as break the lease if you wish. This tool will be available in the next few days.

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

ACS support for JWT still shows as “beta”, but it meets the spec and it works nicely, so it’s becoming the preferred option as SWT is losing favour. (Note that ACS doesn’t currently support JWT encryption; if you want encrypted tokens you need to go SAML.)

In my last post I covered pulling claims from an ACS token without WIF, using the SWT format. The JWT format is a little more complex, but you can still inspect claims just with string manipulation.

The incoming token from ACS is still presented in the BinarySecurityToken element of the XML payload, with a TokenType of urn:ietf:params:oauth:token-type:jwt:

<t:RequestSecurityTokenResponse xmlns:t="http://schemas.xmlsoap.org/ws/2005/02/trust">

<t:Lifetime>

<wsu:Created xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2012-08-31T07:39:55.337Z</wsu:Created>

<wsu:Expires xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2012-08-31T09:19:55.337Z</wsu:Expires>

</t:Lifetime>

<wsp:AppliesTo xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy">

<EndpointReference xmlns="http://www.w3.org/2005/08/addressing">

<Address>http://localhost/x.y.z</Address>

</EndpointReference>

</wsp:AppliesTo>

<t:RequestedSecurityToken>

<wsse:BinarySecurityToken wsu:Id="_1eeb5cf4-b40b-40f2-89e0-a3343f6bd985-6A15D1EED0CDB0D8FA48C7D566232154" ValueType="urn:ietf:params:oauth:token-type:jwt" EncodingType="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-soap-message-security-1.0#Base64Binary" xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd" xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd">[ base64string ] </wsse:BinarySecurityToken>

</t:RequestedSecurityToken>

<t:TokenType>urn:ietf:params:oauth:token-type:jwt</t:TokenType>

<t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType>

<t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType>

</t:RequestSecurityTokenResponse>

The token as a whole needs to be base-64 decoded. The decoded value contains a header, payload and signature, dot-separated; the parts are also base-64, but they need to be decoded using a no-padding algorithm (implementation and more details in this MSDN article on validating an Exchange 2013 identity token). The values are then in JSON; the header contains the token type and the hashing algorithm:

{"typ":"JWT","alg":"HS256"}

The payload contains the same data as in the SWT, but JSON rather than querystring format:

{"aud":"http://localhost/x.y.z",

"iss":"https://adfstest-bhw.accesscontrol.windows.net/",

"nbf":1346398795,

"exp":1346404795,

"http://schemas.microsoft.com/ws/2008/06/identity/claims/authenticationinstant":"2012-08-31T07:39:53.652Z",

"http://schemas.microsoft.com/ws/2008/06/identity/claims/authenticationmethod":"http://schemas.microsoft.com/ws/2008/06/identity/authenticationmethod/windows",

"http://schemas.microsoft.com/ws/2008/06/identity/claims/windowsaccountname":"xyz",

"http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress":"xyz@abc.com",

"http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn":"xyz@abc.com",

"identityprovider":"http://fs.svc.x.y.z.com/adfs/services/trust"}

The signature is in the third part of the token. Unlike SWT, which is fixed to HMAC-SHA-256, JWT can support other signing algorithms (the one in use is specified as the “alg” value in the header).

How to: Validate an Exchange 2013 identity token contains an implementation of a JWT parser and validator; apart from the custom base-64 decoding part, it’s very similar to SWT extraction.
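The decoding steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than the parser from the MSDN article: it starts from the dot-separated string you get after base-64 decoding the BinarySecurityToken value, it only handles the HS256 case, and the key, claims and helper names (`b64url_decode`, `split_jwt`, `verify_hs256`, `make_demo_jwt`) are all made up for the demo.

```python
import base64
import hashlib
import hmac
import json


def b64url_decode(segment: str) -> bytes:
    """Decode URL-safe base-64 text whose '=' padding has been stripped."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(raw: bytes) -> str:
    """Encode bytes as URL-safe base-64 without padding."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def split_jwt(jwt: str):
    """Return (header dict, payload dict, signature bytes)."""
    header_b64, payload_b64, signature_b64 = jwt.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    return header, payload, b64url_decode(signature_b64)


def verify_hs256(jwt: str, key: bytes) -> bool:
    """Recompute the HMAC-SHA-256 over 'header.payload' and compare."""
    signing_input, _, signature_b64 = jwt.rpartition(".")
    expected = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return hmac.compare_digest(expected, b64url_decode(signature_b64))


def make_demo_jwt(claims: dict, key: bytes) -> str:
    """Build a throwaway HS256 token so the demo needs no real ACS token."""
    header = {"typ": "JWT", "alg": "HS256"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url_encode(signature)


# Demo values only: the key and claims are invented for illustration.
demo_key = b"not-a-real-signing-key"
demo_token = make_demo_jwt({"aud": "http://localhost/x.y.z", "exp": 1346404795}, demo_key)
header, payload, _ = split_jwt(demo_token)
print(header["alg"], payload["aud"], verify_hs256(demo_token, demo_key))
```

Checking the “alg” header before choosing a verification routine, and comparing digests with a constant-time function, are the two habits worth carrying over to a real validator.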

I’ve wrapped the basic SWT and JWT handling in a ClaimInspector.aspx page on GitHub here: SWT and JWT claim inspector. You can drop it into any ASP.NET site and set the URL to be your redirect page in ACS. Swap ACS to issue SWT or JWT, and using the same page you can inspect the claims that come out.

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

WIF with SAML is solid and flexible, but unless you need the power, it can be overkill for simple claim assertion, and in the REST world WIF doesn’t have support for the latest token formats. Simple Web Token (SWT) may not be around forever, but while it’s here it’s a nice easy format which you can manipulate in .NET without having to go down the WIF route.

Assuming you have set up a Relying Party in ACS, specifying SWT as the token format:

When ACS redirects to your login page, it will POST the SWT in the first form variable. It comes through in the BinarySecurityToken element of a RequestSecurityTokenResponse XML payload; the SWT type is specified with a TokenType of http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0:

<t:RequestSecurityTokenResponse xmlns:t="http://schemas.xmlsoap.org/ws/2005/02/trust">

<t:Lifetime>

<wsu:Created xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2012-08-31T07:31:18.655Z</wsu:Created>

<wsu:Expires xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2012-08-31T09:11:18.655Z</wsu:Expires>

</t:Lifetime>

<wsp:AppliesTo xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy">

<EndpointReference xmlns="http://www.w3.org/2005/08/addressing">

<Address>http://localhost/x.y.z</Address>

</EndpointReference>

</wsp:AppliesTo>

<t:RequestedSecurityToken>

<wsse:BinarySecurityToken wsu:Id="uuid:fc8d3332-d501-4bb0-84ba-d31aa95a1a6c" ValueType="http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0" EncodingType="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-soap-message-security-1.0#Base64Binary" xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd" xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd"> [ base64string ] </wsse:BinarySecurityToken>

</t:RequestedSecurityToken>

<t:TokenType>http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0</t:TokenType>

<t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType>

<t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType>

</t:RequestSecurityTokenResponse>

Reading the SWT is as simple as base-64 decoding, then URL-decoding the element value:

var wrappedToken = XDocument.Parse(HttpContext.Current.Request.Form[1]);

var binaryToken = wrappedToken.Root.Descendants("{http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd}BinarySecurityToken").First();

var tokenBytes = Convert.FromBase64String(binaryToken.Value);

var token = Encoding.UTF8.GetString(tokenBytes);

var tokenType = wrappedToken.Root.Descendants("{http://schemas.xmlsoap.org/ws/2005/02/trust}TokenType").First().Value;

The decoded token contains the claims as key/value pairs, along with the issuer, audience (ACS realm), expiry date and an HMAC hash, which are in query string format. Separate them on the ampersand, and you can write out the claim values in your logged-in page:

var decoded = HttpUtility.UrlDecode(token);

foreach (var part in decoded.Split('&'))

{

Response.Write("<pre>" + part + "</pre><br/>");

}

– which will produce something like this:

http://schemas.microsoft.com/ws/2008/06/identity/claims/authenticationinstant=2012-08-31T06:57:01.855Z

http://schemas.microsoft.com/ws/2008/06/identity/claims/authenticationmethod=http://schemas.microsoft.com/ws/2008/06/identity/authenticationmethod/windows

http://schemas.microsoft.com/ws/2008/06/identity/claims/windowsaccountname=XYZ

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress=xyz@abc.com

http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn=xyz@abc.com

http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider=http://fs.svc.xyz.com/adfs/services/trust

Audience=http://localhost/x.y.z

ExpiresOn=1346402225

Issuer=https://x-y-z.accesscontrol.windows.net/

HMACSHA256=oDCeEDDAWEC8x+yBnTaCLnzp4L6jI0Z/xNK95PdZTts=

The HMAC hash lets you validate the token to ensure it hasn’t been tampered with. You’ll need the token signing key from ACS, then you can re-sign the token and compare hashes. There’s a full implementation of an SWT parser and validator here: How To Request SWT Token From ACS And How To Validate It At The REST WCF Service Hosted In Windows Azure, and a cut-down claim inspector on my github code gallery: ACS Claim Inspector.
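To make the "re-sign and compare" step concrete, here is a minimal Python sketch. It assumes the usual SWT convention that the signature is an HMAC-SHA-256 over everything before the final `&HMACSHA256=` in the still URL-encoded token, keyed with the base-64-decoded signing key; check that against the full implementation linked above before relying on it. The key and token in the demo are invented, and `sign_swt`, `validate_swt` and `parse_swt` are hypothetical helper names.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qsl, quote, unquote

SIGNATURE_MARKER = "&HMACSHA256="


def sign_swt(unsigned: str, signing_key_b64: str) -> str:
    """Append an HMACSHA256 signature to an unsigned, URL-encoded SWT."""
    key = base64.b64decode(signing_key_b64)
    digest = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return unsigned + SIGNATURE_MARKER + quote(signature, safe="")


def validate_swt(token: str, signing_key_b64: str) -> bool:
    """Recompute the signature over the unsigned part and compare."""
    unsigned, marker, signature_enc = token.rpartition(SIGNATURE_MARKER)
    if not marker:
        return False  # no signature present
    key = base64.b64decode(signing_key_b64)
    expected = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    try:
        actual = base64.b64decode(unquote(signature_enc))
    except ValueError:
        return False  # signature is not valid base-64
    return hmac.compare_digest(expected, actual)


def parse_swt(token: str) -> dict:
    """Split the token on '&' and URL-decode each key/value pair."""
    return dict(parse_qsl(token, keep_blank_values=True))


# Demo values only: this key and token are invented for illustration.
demo_key = base64.b64encode(b"not-a-real-acs-key").decode("ascii")
demo_unsigned = (
    "Audience=http%3a%2f%2flocalhost%2fx.y.z"
    "&ExpiresOn=1346402225"
    "&Issuer=https%3a%2f%2fx-y-z.accesscontrol.windows.net%2f"
)
demo_token = sign_swt(demo_unsigned, demo_key)
print(validate_swt(demo_token, demo_key), parse_swt(demo_token)["Audience"])
```

A real validator would also check that ExpiresOn is in the future and that Issuer and Audience match the values you expect, since a correct signature alone does not prove the token was meant for your application.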

Interestingly, ACS lets you have a value for your logged-in page which has no relation to the realm for authentication, so you can put this code into a generic claim inspector page, and set that to be your logged-in page for any relying party where you want to check what’s being sent through. Particularly handy with ADFS, when you’re modifying the claims provided, and want to quickly see the results.

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

We’re in the process of delivering an enabling project to expose on-premise WCF services securely to Internet consumers. The Azure Service Bus Relay is doing the clever stuff: we register our on-premise service with Azure, consumers call into our .servicebus.windows.net namespace, and their requests are relayed and serviced on-premise. In theory it’s all wonderfully simple; by using the relay we get lots of protocol options, free HTTPS and load balancing, and by integrating to ACS we get plenty of security options. Part of our delivery is a suite of sample consumers for the service – .NET, jQuery, PHP – and this set of posts will cover setting up the service and the consumers.

Part 1: Exposing the on-premise service

In theory, this is ultra-straightforward. In practice, and on a dev laptop it is – but in a corporate network with firewalls and proxies, it isn’t, so we’ll walk through some of the pitfalls. Note that I’m using the “old” Azure portal which will soon be out of date, but the new shiny portal should have the same steps available and be easier to use.

We start with a simple WCF service which takes a string as input, reverses the string and returns it. The Part 1 version of the code is on GitHub here: IPASBR Part 1.

Configuring Azure Service Bus

Start by logging into the Azure portal and registering a Service Bus namespace which will be our endpoint in the cloud. Give it a globally unique name, set it up somewhere near you (if you’re in Europe, remember Europe (North) is Ireland, and Europe (West) is the Netherlands), and enable ACS integration by ticking “Access Control” as a service:

Authenticating and authorizing to ACS

When we try to register our on-premise service as a listener for the Service Bus endpoint, we need to supply credentials, which means only trusted service providers can act as listeners. We can use the default “owner” credentials, but that has admin permissions so a dedicated service account is better (Neil Mackenzie has a good post On Not Using owner with the Azure AppFabric Service Bus with lots of permission details). Click on “Access Control Service” for the namespace, navigate to Service Identities and add a new one. Give the new account a sensible name and description:

Let ACS generate a symmetric key for you (this will be the shared secret we use in the on-premise service to authenticate as a listener), but be sure to set the expiration date to something usable. The portal defaults to expiring new identities after 1 year – but when your year is up *your identity will expire without warning* and everything will stop working. In production, you’ll need governance to manage identity expiration and a process to make sure you renew identities and roll new keys regularly.

The new service identity needs to be authorized to listen on the service bus endpoint. This is done through claim mapping in ACS – we’ll set up a rule that says if the nameidentifier in the input claims has the value serviceProvider, in the output we’ll have an action claim with the value Listen. In the ACS portal you’ll see that there is already a Relying Party Application set up for ServiceBus, which has a Default rule group. Edit the rule group and click Add to add this new rule:

The values to use are:

Issuer: Access Control Service

Input claim type: http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier

Input claim value: serviceProvider

Output claim type: net.windows.servicebus.action

Output claim value: Listen
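If the claim-mapping idea is unfamiliar, the rule above can be modelled as a simple lookup: an input claim of a given type and value produces an output claim. The sketch below is only a toy model of that behaviour, not how ACS is implemented, and the `Rule` class and `output_claims` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Claim = Tuple[str, str]  # (claim type, claim value)


@dataclass(frozen=True)
class Rule:
    input_type: str
    input_value: str
    output_type: str
    output_value: str

    def apply(self, claim: Claim) -> Optional[Claim]:
        """Return the output claim if the input claim matches, else None."""
        claim_type, claim_value = claim
        if claim_type == self.input_type and claim_value == self.input_value:
            return (self.output_type, self.output_value)
        return None


# The rule values listed above.
LISTEN_RULE = Rule(
    input_type="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier",
    input_value="serviceProvider",
    output_type="net.windows.servicebus.action",
    output_value="Listen",
)


def output_claims(input_claims: List[Claim], rules: List[Rule]) -> List[Claim]:
    """Run every rule over every input claim and collect the matches."""
    results = []
    for claim in input_claims:
        for rule in rules:
            mapped = rule.apply(claim)
            if mapped is not None:
                results.append(mapped)
    return results


print(output_claims([(LISTEN_RULE.input_type, "serviceProvider")], [LISTEN_RULE]))
```

An identity with any other name produces no output claims under this rule, which is why only the dedicated service account ends up authorized to listen.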

When your service namespace and identity are set up, open the Part 1 solution and put your own namespace, service identity name and secret key into the file AzureConnectionDetails.xml in Solution Items, e.g.:

<azure namespace="sixeyed-ipasbr">

<!-- ACS credentials for the listening service (Part1): -->

<service identityName="serviceProvider"

symmetricKey="nuR2tHhlrTCqf4YwjT2RA2BZ/+xa23euaRJNLh1a/V4="/>

</azure>

Build the solution, and the T4 template will generate the Web.config for the service project with your Azure details in the transportClientEndpointBehavior:

<behavior name="SharedSecret">

<transportClientEndpointBehavior credentialType="SharedSecret">

<clientCredentials>

<sharedSecret issuerName="serviceProvider"

issuerSecret="nuR2tHhlrTCqf4YwjT2RA2BZ/+xa23euaRJNLh1a/V4="/>

</clientCredentials>

</transportClientEndpointBehavior>

</behavior>

, and your service namespace in the Azure endpoint:

<!-- Azure Service Bus endpoints -->

<endpoint address="sb://sixeyed-ipasbr.servicebus.windows.net/net"

binding="netTcpRelayBinding"

contract="Sixeyed.Ipasbr.Services.IFormatService"

behaviorConfiguration="SharedSecret">

</endpoint>

The sample project is hosted in IIS, but it won’t register with Azure until the service is activated. Typically you’d install AppFabric 1.1 for Windows Server and set the service to auto-start in IIS, but for dev just navigate to the local REST URL, which will activate the service and register it with Azure.

Testing the service locally

As well as an Azure endpoint, the service has a WebHttpBinding for local REST access:

<!-- local REST endpoint for internal use -->

<endpoint address="rest"

binding="webHttpBinding"

behaviorConfiguration="RESTBehavior"

contract="Sixeyed.Ipasbr.Services.IFormatService" />

Build the service, then navigate to:

http://localhost/Sixeyed.Ipasbr.Services/FormatService.svc/rest/reverse?string=abc123

– and you should see the reversed string response:

If your network allows it, you’ll get the expected response as before, but in the background your service will also be listening in the cloud. Good stuff! Who needs network security? Onto the next post for consuming the service with the netTcpRelayBinding.

Setting up network access to Azure

But, if you get an error, it’s because your network is secured and it’s doing something to stop the relay working. The Service Bus relay bindings try to use direct TCP connections to Azure, so if ports 9350-9354 are available *outbound*, then the relay will run through them. If not, the binding steps down to standard HTTP, and issues a CONNECT across port 443 or 80 to set up a tunnel for the relay.
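Before starting those conversations it can help to know which of the two routes is actually open from the server. The sketch below is a rough outbound probe, not a reproduction of what the relay binding does: it treats direct TCP as usable only if every port in 9350-9354 accepts a connection (a deliberately conservative assumption), and the plain socket check on 443 and 80 does not exercise a web proxy, so a proxied network can still report "blocked". The function names are hypothetical.

```python
import socket

TCP_RELAY_PORTS = range(9350, 9355)  # 9350-9354, as described above
HTTP_FALLBACK_PORTS = (443, 80)


def can_connect(host, port, timeout=3.0):
    """Return True if an outbound TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def relay_mode(host, probe=can_connect):
    """Classify outbound access as 'tcp', 'http' or 'blocked'."""
    # Assumption: direct TCP needs every relay port to be reachable.
    if all(probe(host, port) for port in TCP_RELAY_PORTS):
        return "tcp"
    if any(probe(host, port) for port in HTTP_FALLBACK_PORTS):
        return "http"
    return "blocked"
```

Calling `relay_mode("sixeyed-ipasbr.servicebus.windows.net")` from the server that hosts the service gives a quick first answer; the `probe` parameter exists so the logic can be exercised without touching the network.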

If your network security guys are doing their job, the first option will be blocked by the firewall, and the second option will be blocked by the proxy, so you’ll get this error:

System.ServiceModel.CommunicationException: Unable to reach sixeyed-ipasbr.servicebus.windows.net via TCP (9351, 9352) or HTTP (80, 443)

– and that will probably be the start of lots of discussions. Network guys don’t really like giving servers special permissions for the web proxy, and they really don’t like opening ports, so they’ll need to be convinced about this. The resolution in our case was to put up a dedicated box in a DMZ, tinker with the firewall and the proxy until we got a relay connection working, then run some traffic which the network guys monitored to do a security assessment afterwards.

Along the way we hit a few more issues, diagnosed mainly with Fiddler and Wireshark:

System.Net.ProtocolViolationException: Chunked encoding upload is not supported on the HTTP/1.0 protocol

– this means the TCP ports are not available, so Azure tries to relay messaging traffic across HTTP. The service can access the endpoint, but the proxy is downgrading traffic to HTTP 1.0, which does not support tunneling, so Azure can’t make its connection. We were using the Squid proxy, version 2.6. The Squid project is incrementally adding HTTP 1.1 support, but there’s no definitive list of what’s supported in what version (here are some hints).

System.ServiceModel.Security.SecurityNegotiationException: The X.509 certificate CN=servicebus.windows.net chain building failed. The certificate that was used has a trust chain that cannot be verified. Replace the certificate or change the certificateValidationMode. The revocation function was unable to check revocation because the revocation server was offline.

– by this point we’d given up on the HTTP proxy and opened the TCP ports. We got this error when the relay binding does its authentication hop to ACS. The messaging traffic is TCP, but the control traffic still goes over HTTP, and as part of the ACS authentication the process checks with a revocation server to see if Microsoft’s ACS cert is still valid, so the proxy still needs some clearance. The service account (the IIS app pool identity) needs access to:

- www.public-trust.com

- mscrl.microsoft.com

We still got this error periodically with different accounts running the app pool. We fixed that by ensuring the machine-wide proxy settings are set up, so every account uses the correct proxy:

netsh winhttp set proxy proxy-server="http://proxy.x.y.z"

– and you might need to run this to clear out the cached certificate revocation data:

certutil -urlcache * delete

If your network guys end up grudgingly opening ports, they can restrict connections to the IP address range for your chosen Azure datacentre, which might make them happier – see Windows Azure Datacenter IP Ranges.

After all that you’ve hopefully got an on-premise service listening in the cloud, which you can consume from pretty much any technology.

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

HP Operations Manager (previously called HP OpenView OVO) is an enterprise-class monitoring solution used by organisations to monitor the health and performance of various IT assets. It is the equivalent of, and a competing product to, Microsoft System Center Operations Manager. There are a few more players in this area, but HPOM is one of the popular ones. […]

The post BizTalk Server monitoring using HP Operations Manager and BizTalk360 appeared first on BizTalk360 Blog.

Blog Post by: Saravana Kumar

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

It is always good practice to optimize your SQL query statements for better performance. Just making sure your query does what it needs to isn’t enough. You should consider the performance impact of your code and try to optimize it for the best performance.

Below is a short list of steps to take when optimizing your query statements.

More details on the steps can be found in this blog.

- Check if you’re missing any required table joins.

This could result in a Cartesian join.

- Check if you’re missing any required WHERE clause to prevent retrieving too much data.

- Check if statistics are being created & updated automatically.

- Check if the statistics are up-to-date.

The DBCC SHOW_STATISTICS command can be used to view the last updated date-time, total table rows and the number of rows sampled.

Outdated statistics can be renewed using the sp_updatestats stored procedure, or by running UPDATE STATISTICS with the FULLSCAN option to update all statistics of a table.

- Check for any table or index scans by using the execution plans.

You can use these DMVs to check for missing indexes: sys.dm_db_missing_index_details, sys.dm_db_missing_index_group_stats, sys.dm_db_missing_index_groups.

- Check for RID Lookups, also by using the execution plans.

These cannot always be eliminated, but by making use of covering indexes, RID Lookups can be reduced.

- Check for any sort operator, again by using the execution plans.

There are 3 options:

- Modify the underlying tables to create a CLUSTERED index on the required sort columns. It could be worth trying out creating the CLUSTERED index on another column which is not the Primary Key.

- Create an Indexed view on the underlying tables and sort the view by creating a CLUSTERED Index.

- Create a NON CLUSTERED Index on the specified columns and Include all other columns which will be returned.

- Check for excessive index fragmentation.

The DMV sys.dm_db_index_physical_stats can be used for this.

- Check table locks.

To prevent locking problems, follow these guidelines:

- Keep transactions as short as possible.

- Review the transaction isolation level, and consider minimizing locking contention, thus increasing concurrency by changing to ‘Read Committed using row versioning’ or ‘Snapshot’.

- Specify table hints such as READUNCOMMITTED or READPAST on the SELECT statements. Both hints increase concurrency, but both have disadvantages: READUNCOMMITTED allows ‘dirty reads’ and READPAST can return an incomplete data set, so they may not be acceptable in all circumstances.

Source: http://www.sqlservercentral.com/articles/Performance+Tuning/70647/

by community-syndication | Sep 19, 2012 | BizTalk Community Blogs via Syndication

I found a complete series on speeding up access to your SQL Server database.

So far, only 4 parts of the 8-part series have been published.

Here’s an overview of the content of all 8 parts (including links of already published parts):

- Part 1 Pinpointing missing indexes and expensive queries

- Find expensive queries (with trace log)

- Locate missing indexes with the query optimizer (or with Tuning Advisor)

- Find unused indexes

- Part 2 Pinpointing other bottlenecks

- Find Locks and Latches (via counters in perfmon)

- Execution plan reusability (using counters or dynamic management views (DMV))

- Fragmentation of data and indexes

- Memory check (using counters to verify if there’s a lack of memory)

- Disk usage (locate possible disk bottlenecks using counters)

- CPU (use counters to see if the CPU is stressed)

- Part 3 Fixing missing indexes

- Indexes explained (clustered and nonclustered indexes)

- Index usage (when / when not)

- Maintaining indexes

- Part 4 Fixing expensive queries

- Cache aggregation queries

- Keep records short

- Considering Denormalization

- Be careful with triggers

- Use table variables for small temporary result sets

- Use Full Text Search instead of LIKE

- Replacing cursors with set based code

- Minimise traffic from SQL Server to Web Server

- Object Naming

- Use SET NOCOUNT ON

- Use FILESTREAM for values over 1MB

- Avoid functions on columns in WHERE clauses

- Use UNION ALL instead of UNION

- Use EXISTS instead of COUNT to find existence of records

- Combine SELECT and UPDATE

- Part 5 Fixing locking issues

- Part 6 Fixing execution plan reuse

- Part 7 Fixing fragmentation

- Part 8 Fixing memory, disk and CPU issues

I’ll update this post when new parts are published.

by stephen-w-thomas | Sep 19, 2012 | Stephen's BizTalk and Integration Blog

I have been working with Windows Azure Virtual Machines for a few weeks now. As of this blog post, the preview feature is running in six locations: West US, East US, East Asia, Southeast Asia, North Europe, and West Europe.

One of the things I like about Windows Azure is the world-wide presence. When I select Quick Create for a Virtual Machine, I am able to decide what location to host my Virtual Machine so I can ensure this is some place close to me. Today I might be in Seattle but tomorrow I might be in London. I have the option to select what works best for me at any given time.

Here are a few items I think are worth pointing out.

I found that when using Quick Create to create a new Virtual Machine, I am not able to specify a storage location in the event that I have an existing location I would like to use to store the VHD. A new storage location in the format of portalvhds* will be created for me. The plus side is if you have an auto created location already, all other Quick Create Virtual Machines will use that location. The name of the auto created storage account is not the same across regions.

When using From Gallery, I have a few more options. For Microsoft supplied Images, I have the ability to use a pre-created storage account or have a new one created for me.

If I select Use Automatically Generated Storage Account, I then have the ability to select the Region (i.e. the datacenter) to host the Virtual Machine and Storage Account.

If I select an existing Storage Account, I no longer have the ability to select the Region.

I have been creating and using custom images for a while now and always wondered when I went to use a custom image, why I cannot change the Region I want the Virtual Machine to run at.

And now it all makes total sense!

You cannot create a Virtual Machine in a different Region than the storage container used for the Image or VHD. When you select an existing storage account in the Gallery, an existing custom image, or an existing VHD, the location of the Virtual Machine depends on the location of the underlying storage account.

The location of the Image or Disk is shown on the screen when selecting the image using the From Gallery creation option.

How to ensure you are running in the region / datacenter you want to be running in?

If you want your Virtual Machine to run in a different region, you will need to move the Image or VHD to that region before creating a Virtual Machine from it. Either download and re-upload it, or copy it via the APIs. Watch out: at 30 GB to 50 GB apiece this can get “expensive” quickly (for a 50 GB VHD in Zone 1, $6.00 US to download). Inside the same datacenter, even across accounts, moving VHDs currently incurs no bandwidth charge.

Watch for more to come related to moving VHDs from one location to another for use with Virtual Machines. I have a tool in the works that should help with this process.
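To put a number on other VHD sizes, the quoted figure of $6.00 US for 50 GB works out to $0.12 per GB. The sketch below simply scales that rate; it assumes a flat per-GB charge with no free allowance or tiering, which is a simplification, so check the current pricing before budgeting. The function name is hypothetical.

```python
QUOTED_COST_USD = 6.00   # quoted above for a 50 GB download from Zone 1
QUOTED_SIZE_GB = 50
RATE_PER_GB = QUOTED_COST_USD / QUOTED_SIZE_GB  # 0.12 USD per GB


def download_cost(size_gb, rate=RATE_PER_GB):
    """Estimate the download charge in USD, assuming a flat per-GB rate."""
    return round(size_gb * rate, 2)


for size in (30, 40, 50):
    print(f"{size} GB VHD: about ${download_cost(size):.2f} to download")
```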
