by Eldert Grootenboer | Sep 4, 2017 | BizTalk Community Blogs via Syndication

When implementing software, it’s always a good idea to follow existing patterns, as these allow us to use proven and reliable techniques. The same applies in integration, where we have been working with integration patterns in technologies like BizTalk, MSMQ, etc. These days we are working more and more with new technologies in Azure, giving us new tools like Service Bus, Logic Apps, and more recently Event Grid. But even though we are working with new tools, these integration patterns are still very useful and should be followed whenever possible. This post is the first in a series in which I will show how we can implement integration patterns using various services in Azure.

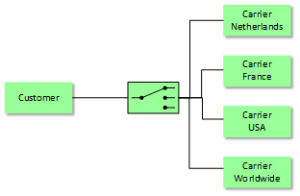

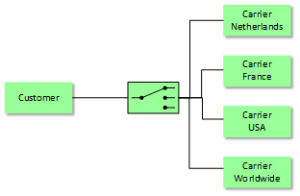

Message Router Pattern

The first pattern is the Message Router, which routes a message to different endpoints depending on a set of conditions that can be evaluated against the contents or metadata of the message. We will implement this pattern with different technologies, focusing on Logic Apps in this post. For this sample we implement a scenario where we receive orders and, depending on the city where the order should be delivered, route each order to a specific carrier.

Scenario

When using Logic Apps, we can easily route a message based on its contents to various endpoints. Using a Logic App in combination with the Message Router pattern is especially useful when we have the following requirements:

- Different types of endpoints; the power of Logic Apps lies in the many connectors we get out of the box, allowing us to easily integrate with various systems like SQL, Dynamics CRM, Salesforce, etc.

- A small number of endpoints; as we will be using a switch in our Logic App, managing the cases becomes cumbersome when we have many endpoints.

In this sample we write the messages to GitHub Gists, but you could easily replace this with other destinations. We use an HTTP trigger, meaning we receive the message on an HTTP endpoint, in the following format.

    {
      "Address": "Kings Cross 20",
      "City": "New York",
      "Name": "Eldert Grootenboer"
    }

We use a switch to determine the endpoint to which we send our message, based on the city inside the message body, and send the message to that endpoint, in this case using an HTTP action. Of course, we could send the message to any other type of endpoint from the cases inside the switch as well. Finally, we respond with the location of the Gist where the message was placed.
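The routing decision itself can be sketched outside of Logic Apps as a plain lookup from city to handler. This is only a minimal Python illustration of the Message Router idea, not the Logic App definition: the carrier names, the second city and the handler functions are hypothetical, and only the message shape and the "New York" value come from the sample message.

```python
from typing import Callable, Dict

Order = Dict[str, str]
Handler = Callable[[Order], str]


def send_to_carrier_ny(order: Order) -> str:
    # Hypothetical endpoint: stands in for the HTTP action inside one switch case.
    return f"carrier-ny accepted order for {order['Name']}"


def send_to_carrier_ams(order: Order) -> str:
    # Hypothetical second case, added only to show more than one route.
    return f"carrier-ams accepted order for {order['Name']}"


def send_to_default_carrier(order: Order) -> str:
    # Plays the role of the default case of the switch.
    return f"default-carrier accepted order for {order['Name']}"


# One entry per switch case: the key is the value of the City field.
ROUTES: Dict[str, Handler] = {
    "New York": send_to_carrier_ny,
    "Amsterdam": send_to_carrier_ams,
}


def route_order(order: Order) -> str:
    """Message Router: choose the endpoint from the message content (City)."""
    handler = ROUTES.get(order.get("City", ""), send_to_default_carrier)
    return handler(order)
```

The lookup table also shows why this approach suits a small number of endpoints: every new destination is another case that has to be maintained by hand.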

Logic App Implementation

You can easily deploy this solution from the Azure Quickstart Templates site, or use the below button to directly deploy this to your own Azure environment.

by Gautam | Sep 4, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

The Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

On-Premise Integration:

Cloud and Hybrid Integration:

Feedback

I hope this is helpful. Please feel free to let me know your feedback on the Integration weekly series.

by Sandro Pereira | Sep 3, 2017 | BizTalk Community Blogs via Syndication

Welcome back to a new post about BizTalk Server Tips and Tricks! And this time I would like to talk about a very important topic for BizTalk Administrators: BizTalk MarkLog tables.

All the BizTalk databases that are backed up by the ‘Backup BizTalk Server’ job, which means all the default BizTalk databases (SSODB, BizTalkRuleEngineDb, BizTalkMsgBoxDb, BizTalkMgmtDb, BizTalkDTADb, BAMPrimaryImport, BAMArchive and BAMAlertsApplication) with the exception of the BAM Star Schema database (BAMStarSchema), have a table called “MarkLog”.

The only thing these tables store is a timestamp in a string format (Log_<yyyy>_<MM>_<dd>_<HH>_<mm>_<ss>_<fff>) that records each time the ‘Backup BizTalk Server’ job performs a backup of the transaction log of that specific database.

Note: This task is performed by the 3rd step (MarkAndBackUpLog) of the ‘Backup BizTalk Server’ job

So, each time this step runs, by default every 15 minutes, a string is stored in that table. Unfortunately, BizTalk has no out-of-the-box option to clean up these tables. The normal procedure is to run the old Terminator tool to clean them up, which nowadays is integrated with the BizTalk Health Monitor.

Both of them (they are actually the same tool) have two major problems:

- Using these tools means that we need to stop our BizTalk Server environment, i.e., a few minutes of downtime for our entire integration platform.

- The description of the task these tools execute, “PURGE Marklog table”, says that the operation calls a SQL script that cleans up everything in the MarkLog table, which may not be the best practice for maintaining your environment.

This information is important and useful for the BizTalk Administration team, for example, to keep an eye on the backup/log shipping history records to see whether the backup is working correctly and data/logs are restored correctly in the standby environment.

As a best practice: you should respect the parameter “@DaysToKeep” present in the 4th step (Clear Backup History) of the ‘Backup BizTalk Server’ job, i.e., clean everything on that table older than the days specified in the “@DaysToKeep” parameter.

How to properly maintain BizTalk MarkLog tables?

To address and solve this problem, I ended up creating a custom stored procedure in the BizTalk Management database (BizTalkMgmtDb) that I called “sp_DeleteBackupHistoryAndMarkLogsHistory”. This stored procedure is basically a copy of the existing “sp_DeleteBackupHistory” stored procedure with extended functionality:

- It iterates over all the databases that are backed up by BizTalk and deletes all MarkLog data older than the number of days defined in the @DaysToKeep parameter

Script sample:

    /* Create a cursor */
    DECLARE BackupDB_Cursor insensitive cursor for
        SELECT ServerName, DatabaseName
        FROM admv_BackupDatabases
        ORDER BY ServerName

    open BackupDB_Cursor
    fetch next from BackupDB_Cursor into @BackupServer, @BackupDB

    WHILE (@@FETCH_STATUS = 0)
    BEGIN
        -- Get the proper server name
        EXEC @ret = sp_GetRemoteServerName @ServerName = @BackupServer, @DatabaseName = @BackupDB, @RemoteServerName = @RealServerName OUTPUT

        IF @@ERROR <> 0 OR @ret IS NULL OR @ret <> 0 OR @RealServerName IS NULL OR len(@RealServerName) <= 0
        BEGIN
            SET @errorDesc = replace( @localized_string_sp_DeleteBackupHistoryAndMarkLogsHistory_Failed_sp_GetRemoteServerNameFailed, N'%s', @BackupServer )
            RAISERROR( @errorDesc, 16, -1 )
            GOTO FAILED
        END

        /* Create the delete statement */
        select @tsql =
            'DELETE FROM [' + @RealServerName + '].[' + @BackupDB + '].[dbo].[MarkLog]
            WHERE DATEDIFF(day, REPLACE(SUBSTRING([MarkName],5,10),''_'',''''), GETDATE()) > ' + cast(@DaysToKeep as nvarchar(5) )

        /* Execute the delete statement */
        EXEC (@tsql)

        SELECT @error = @@ERROR

        IF @error <> 0 or @ret IS NULL or @ret <> 0
        BEGIN
            SELECT @errorDesc = replace( @localized_string_sp_DeleteBackupHistoryAndMarkLogsHistory_Failed_Deleting_Mark, '%s', @BackupServer + N'.' + @BackupDB )
            GOTO FAILED
        END

        /* Get the next DB. */
        fetch next from BackupDB_Cursor into @BackupServer, @BackupDB
    END

    close BackupDB_Cursor
    deallocate BackupDB_Cursor
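The date arithmetic in the DELETE statement is easy to misread, so here is a small Python sketch of the same rule, for illustration only. It assumes the mark names follow the Log_<yyyy>_<MM>_<dd>_... format described earlier; the function names are hypothetical and are not part of the stored procedure.

```python
from datetime import datetime


def mark_date(mark_name: str) -> datetime:
    """Extract the date part of a MarkLog name such as 'Log_2017_09_03_10_15_00_123'.

    T-SQL SUBSTRING(MarkName, 5, 10) is 1-based, so it takes characters 5..14,
    which is the Python slice [4:14] ('2017_09_03'). Removing the underscores
    gives '20170903'.
    """
    return datetime.strptime(mark_name[4:14].replace("_", ""), "%Y%m%d")


def is_expired(mark_name: str, days_to_keep: int, now: datetime) -> bool:
    """Mirror DATEDIFF(day, <mark date>, GETDATE()) > @DaysToKeep.

    DATEDIFF(day, ...) counts calendar-day boundaries, so only the date
    parts are compared.
    """
    age_in_days = (now.date() - mark_date(mark_name).date()).days
    return age_in_days > days_to_keep
```

With a @DaysToKeep of 14, a mark written on 3 September 2017 is kept on 10 September and removed from 18 September onwards.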

Steps required to install/configure:

- Download the SQL script from BizTalk Server: Cleaning MarkLog Tables According to Some of the Best Practices and create the sp_DeleteBackupHistoryAndMarkLogsHistory stored procedure against the BizTalk Management database (BizTalkMgmtDb)

- Change and configure the 4th step of the ‘Backup BizTalk Server’ job – “Clear Backup History” to call this new stored procedure: sp_DeleteBackupHistoryAndMarkLogsHistory

Note: Do not change or delete the “sp_DeleteBackupHistory”!

Credits: Tord Glad Nordahl, Rui Romano, Pedro Sousa, Mikael Sand and me

Stay tuned for new BizTalk Server Tips and Tricks!

Author: Sandro Pereira

Sandro Pereira is an Azure MVP and works as an Integration consultant at DevScope. In the past years, he has been working on implementing Integration scenarios both on-premises and cloud for various clients, each with different scenarios from a technical point of view, size, and criticality, using Microsoft Azure, Microsoft BizTalk Server and different technologies like AS2, EDI, RosettaNet, SAP, TIBCO etc.

by Dan Toomey | Sep 3, 2017 | BizTalk Community Blogs via Syndication

(This post was originally published on Mexia’s blog on 1st September 2017)

Microsoft recently released the public preview of Azure Event Grid – a hyper-scalable serverless platform for routing events with intelligent filtering. No more polling for events – Event Grid is a reactive programming platform for pushing events out to interested subscribers. This is an extremely significant innovation, for as veteran MVP Steef-Jan Wiggers points out in his blog post, it completes the existing serverless messaging capability in Azure:

- Azure Functions – Serverless compute

- Logic Apps – Serverless connectivity and workflows

- Service Bus – Serverless messaging

- Event Grid – Serverless Events

And as Tord Glad Nordahl says in his post From chaos to control in Azure, “With dynamic scale and consistent performance Azure Event grid lets you focus on your app logic rather than the infrastructure around it.”

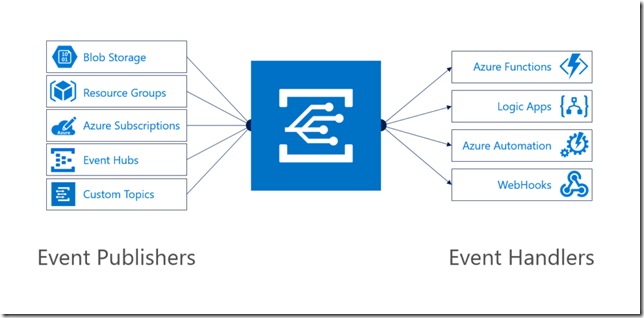

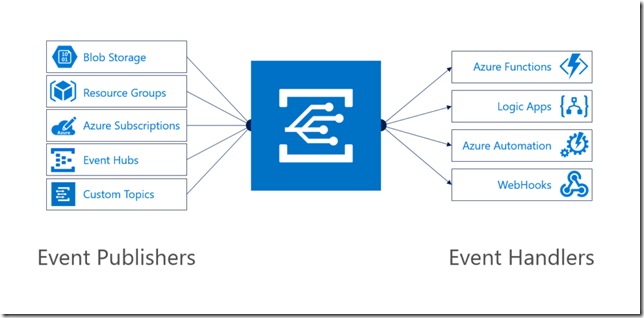

The preview version not only comes with several supported publishers and subscribers out of the box, but also supports custom publishers and (via WebHooks) custom subscribers:

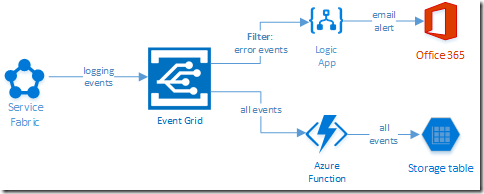

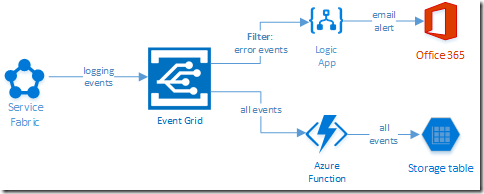

In this blog post, I’ll describe the experience in building a sample logging mechanism for a service hosted in Azure Service Fabric. The solution not only logs all events to table storage, but also sends alert emails for any error events:

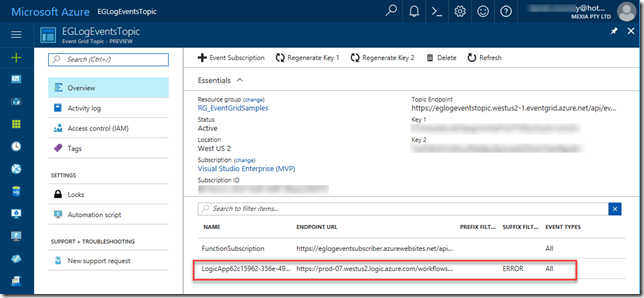

Creating the Event Grid Topic

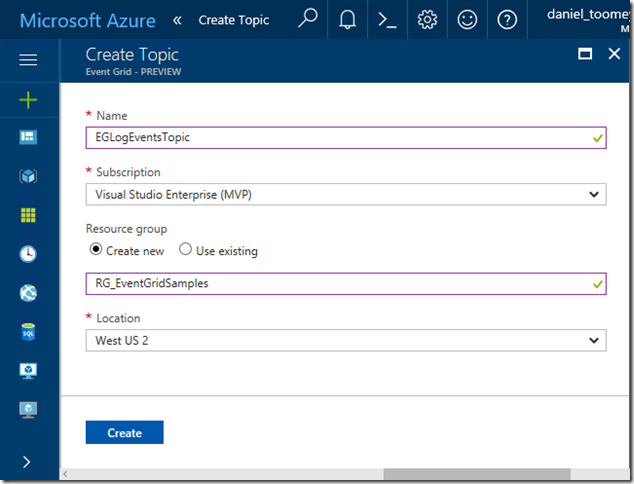

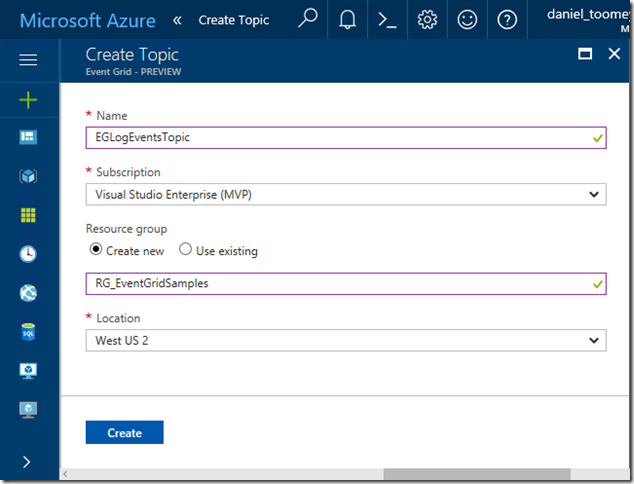

This was an extremely simple process executed in the Azure Portal. Create a new item by searching for “Event Grid Topic”, and then supply the requested basic information:

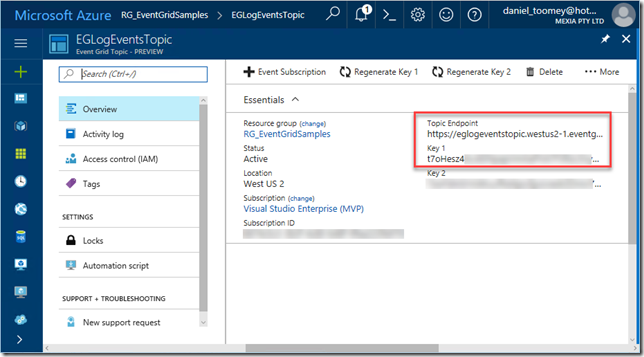

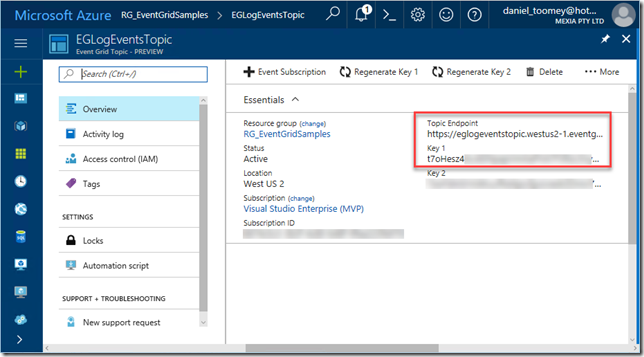

Once the topic is created, the key items you will need are the Topic Endpoint and the associated key:

Creating the Event Publisher

As mentioned previously, there are a number of existing Azure services that can publish events to Event Grid including Event Hubs, resource groups, subscriptions, etc. – and there will be more coming as the service moves toward general availability. However, in this case we create a custom publisher which is a service hosted in Azure Service Fabric. For this sample, I used an existing Voting App demo which I’ve written about in a previous blog post, modifying it slightly by adding code to publish logging events to Event Grid.

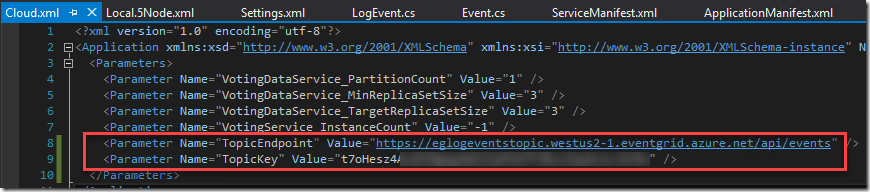

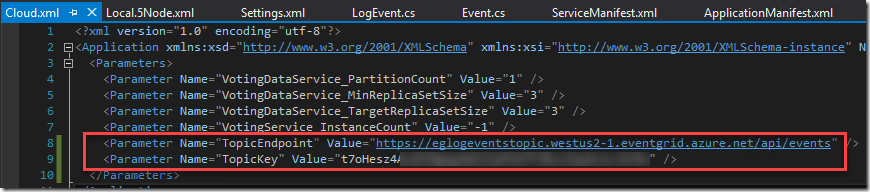

The first requirement was storing the topic endpoint and key in the parameter files, and of course creating the associated configuration items in the ServiceManifest.xml and ApplicationManifest.xml files (this article provides information about application configuration in Service Fabric):

Note that in a production situation the TopicKey should be encrypted within this file – but for the purposes of this example we will keep it simple.

Next step was creating a small class library in the solution to house the following items:

- The Event class which represents the Event Grid events schema

- A LogEvent class which represents the “Data” element in the Event schema

- A utility class which includes the static SendLogEvent method

- A LogEventType enum to define logging severity levels (ERROR|WARNING|INFO|VERBOSE)

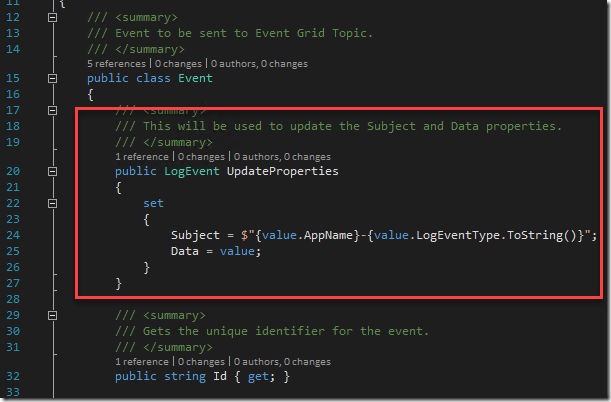

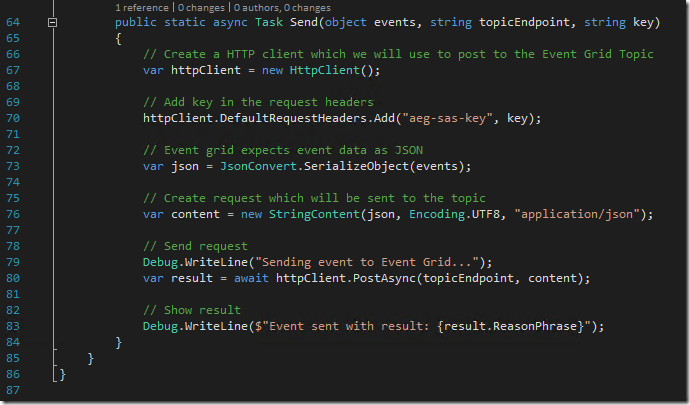

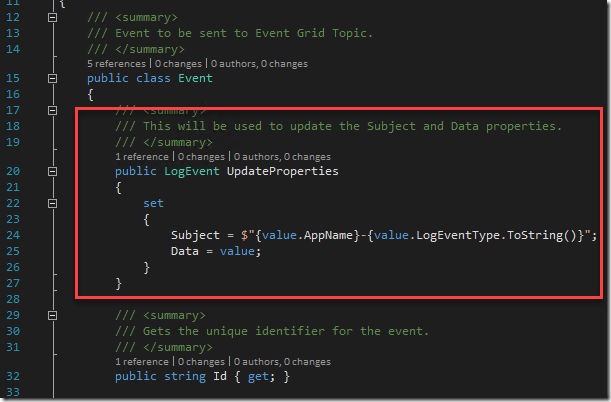

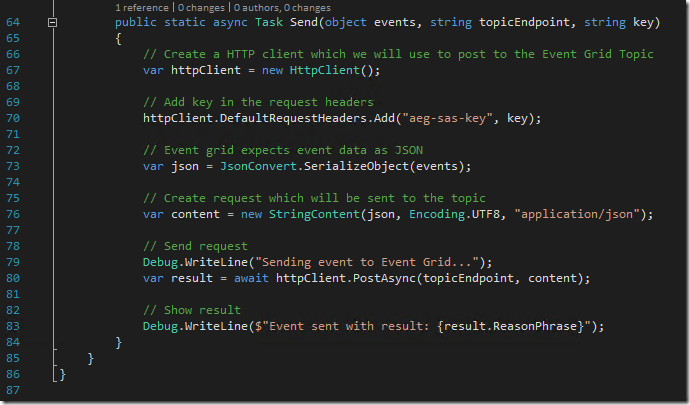

To see an example of how to create the Event class, refer to fellow Azure MVP Eldert Grootenboer’s excellent post. The only changes I made were to assign the properties for my custom LogEvent, and to add a static method for sending a collection of Event objects to Event Grid (notice how the Event.Subject field is a concatenation of the Application Name and the LogEventType – this will be important later on):
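To give an idea of what such an event looks like on the wire, here is a minimal Python sketch (the original solution is a .NET service, so this is an illustration rather than the actual code). It builds events with the Event Grid event fields (id, eventType, subject, eventTime, data) and prepares the JSON array body plus the aeg-sas-key header for a POST to the topic endpoint. The "LogEvent" event type name, the "-" separator in the subject and the contents of the data element are assumptions.

```python
import json
import uuid
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Tuple


class LogEventType(Enum):
    ERROR = "ERROR"
    WARNING = "WARNING"
    INFO = "INFO"
    VERBOSE = "VERBOSE"


def build_log_event(app_name: str, level: LogEventType, message: str) -> dict:
    """Build one event; the subject ends with the log level so that a
    suffix filter such as 'ERROR' can select it later."""
    return {
        "id": str(uuid.uuid4()),
        "eventType": "LogEvent",  # hypothetical event type name
        "subject": f"{app_name}-{level.value}",  # separator is an assumption
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": {"message": message, "level": level.value},  # assumed shape
    }


def build_request(events: List[dict], topic_key: str) -> Tuple[Dict[str, str], str]:
    """Return the headers and JSON body for a POST to the topic endpoint.

    Event Grid expects a JSON array of events and the topic key in the
    'aeg-sas-key' header. Sending the request is left out of this sketch.
    """
    headers = {"aeg-sas-key": topic_key, "Content-Type": "application/json"}
    return headers, json.dumps(events)
```

Keeping the construction of the request separate from sending it makes the payload easy to inspect and test without a live topic.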

The utility method that creates the collection and invokes this static method is pretty straightforward:

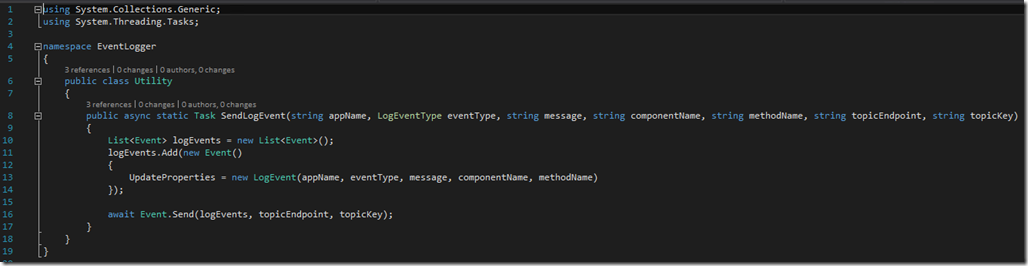

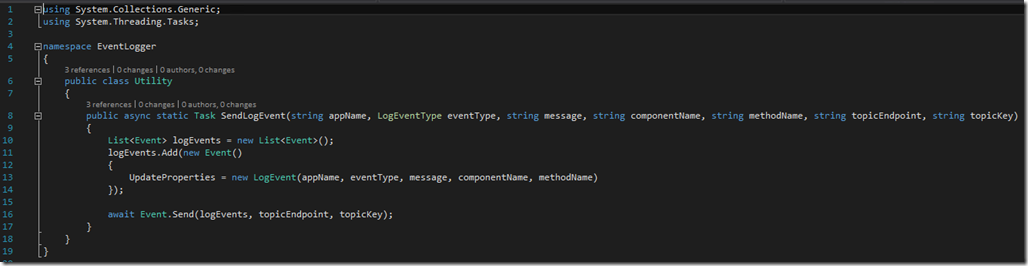

This all makes it simple to embed logging calls into the application code:

Creating the Event Subscribers

Capturing All Events

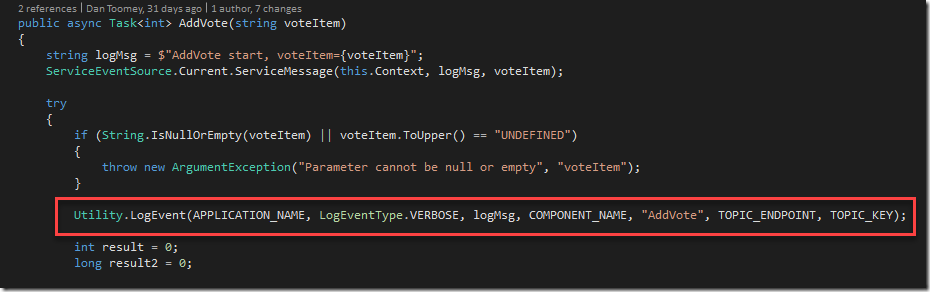

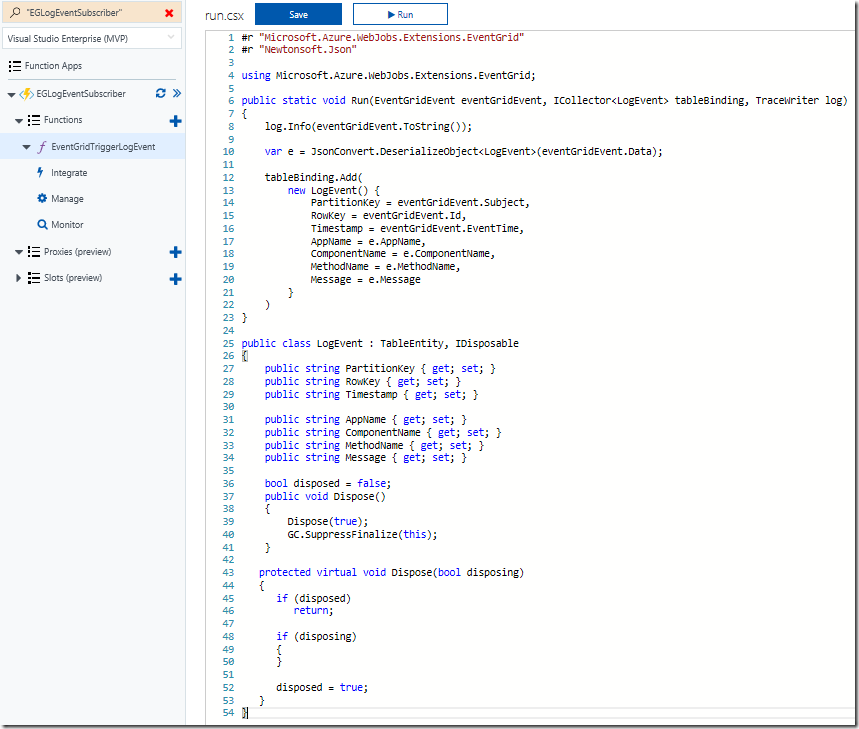

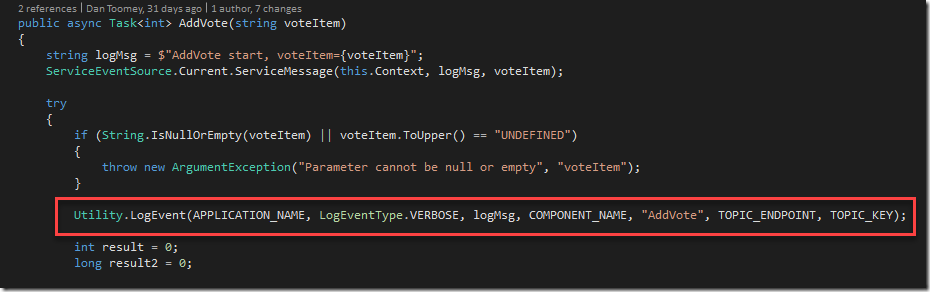

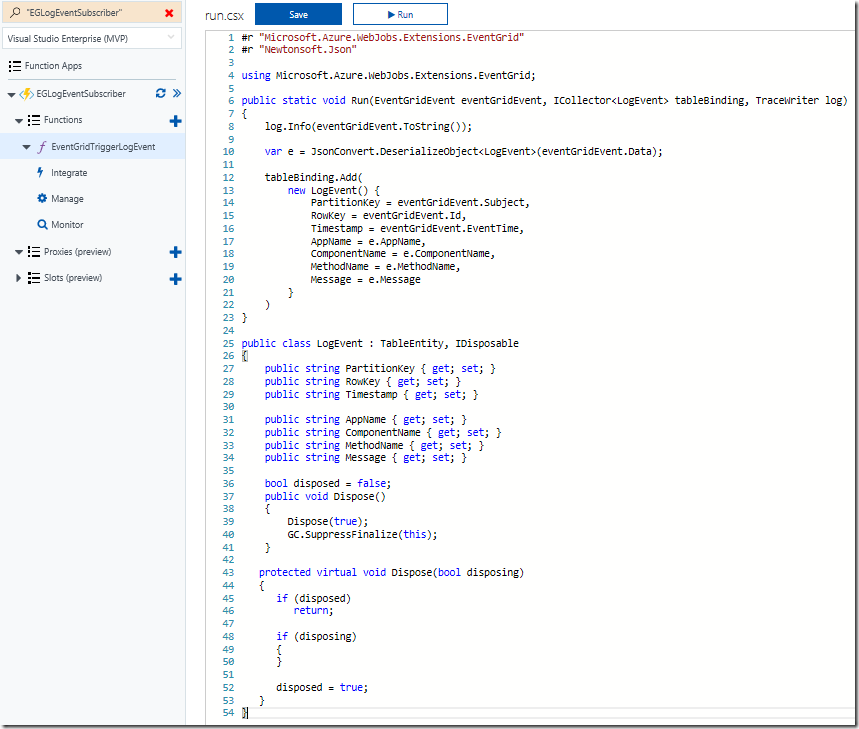

The first topic subscription will be an Azure Function that will write all events to Azure table storage. Provided you’ve created your Function App in a region that supports the Event Grid preview (I’ve just created everything aside from the Service Fabric solution within the same resource group and location), you will see that there is already an Event Grid Trigger available to choose. Here is my configured trigger:

As you can see, I’ve also configured a Table Storage output. The code within this function creates a record in the table using the Event.Subject as a partition and the Event.Id as the row key:
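The mapping from event to table record can be sketched as follows. This is a hedged Python illustration rather than the function's real code: PartitionKey and RowKey are the standard Azure Table storage key properties, while the remaining column names are hypothetical.

```python
import json
from typing import Any, Dict


def to_table_entity(event: Dict[str, Any]) -> Dict[str, str]:
    """Map an Event Grid event to a table entity.

    The subject becomes the partition key, so all events for one
    application and severity end up in the same partition; the event id
    is unique and serves as the row key.
    """
    return {
        "PartitionKey": event["subject"],
        "RowKey": event["id"],
        "EventType": event["eventType"],     # hypothetical column
        "EventTime": event["eventTime"],     # hypothetical column
        "Data": json.dumps(event["data"]),   # payload stored as a JSON string
    }
```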

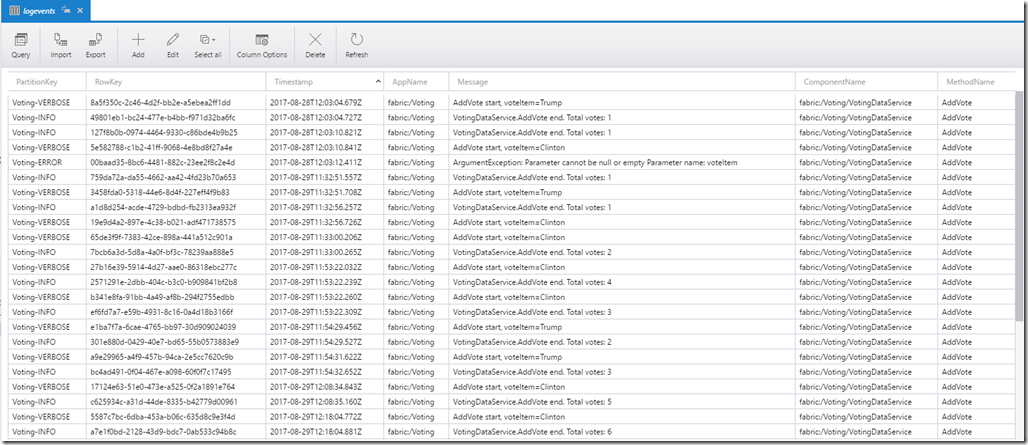

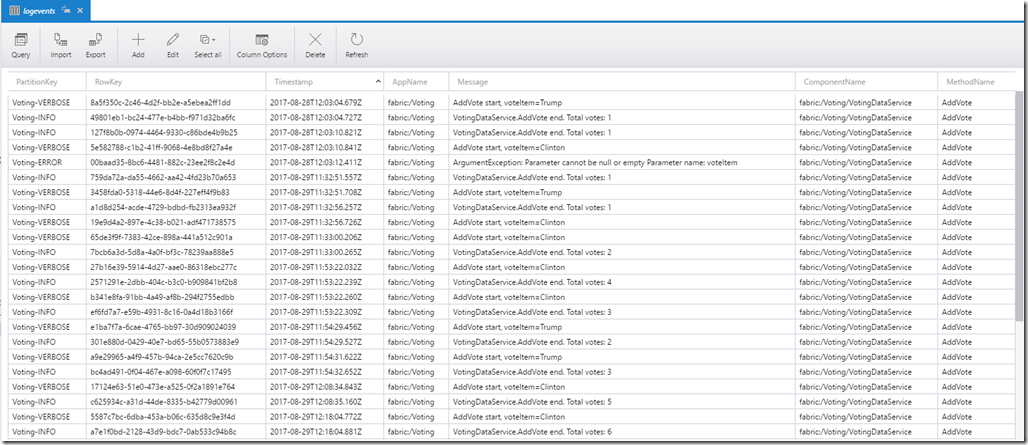

Using the free Azure Storage Explorer tool, we can see the output of our testing:

Alerting on ERROR Events

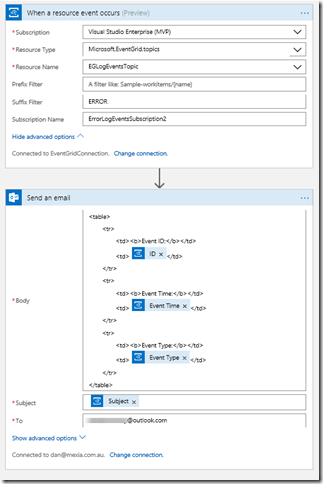

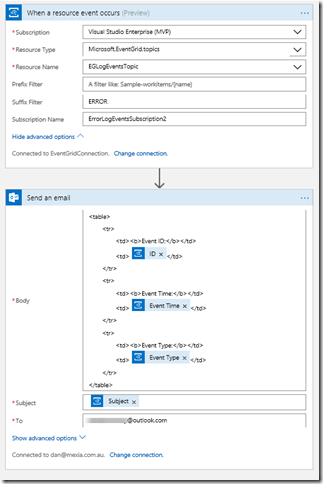

Now that we’ve completed one of the two subscriptions for our solution, we can create the other subscription which will use a filter on ERROR events and raise an alert via sending an email notification.

The first step is to create the Logic App (in the same region as the Event Grid) and add the Event Grid Trigger. There are a few things to watch out for here:

- When you are prompted to sign in, the account that your subscription belongs to may or may not work. If it doesn’t, try creating a Service Principal with contributor rights for the Event Grid topic (here is an excellent article on how to create a service principal)

- The Resource Type should be Microsoft.EventGrid.topics

- The Suffix field contains “ERROR” which will serve as the filter for our events

- If the Resource Name drop-down list does not display your Event Grid topic at first, type something in, save it and then click the “x”; the list should hopefully appear. It is important to select from the list as just typing the display name will not create the necessary resource ID in the topic field and the subscription will not be created.

You can then follow this with an Office365 Email action (or any other type of notification action you prefer). There are four dynamic properties that are available from the Event Grid Trigger action (Subject, ID, Event Type and Event Time):

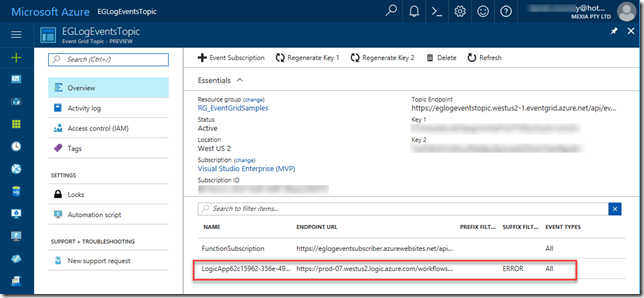

After saving the Logic App, check for any errors in the Overview blade, and then check the Overview blade for the Event Grid Topic – you should see the new subscription created there:

Finally, we can test the application. My Voting demo service generates an exception (and an ERROR logging event) when a vote is cast for a null/empty candidate (see the ERROR entry in the table screenshot above). This event now triggers an email notification:

Summary

So this example may not be the niftiest logging application on the market (especially considering all of the excellent logging tools that are available today), but it does demonstrate how easy it is to get up and running with Event Grid. You’ve seen an example of using a custom publisher and two built-in subscribers, including one with intelligent filtering. To see how to write a custom subscriber, have a look at Eldert’s post “Custom Subscribers in Event Grid” where he uses an API App subscriber to write shipping orders to table storage.

Event Grid is enormously scalable and its consumption pricing model is extremely competitive. I doubt there is anything else quite like this on offer today. Moreover, there will be additional connectors coming in the near future, including Azure AD, Service Bus, Azure Data Factory, API Management, Cosmos DB, and more.

For a broader overview of Event Grid’s features and the capabilities it brings to Azure, have a read of Tom Kerkhove’s post “Exploring Event Grid”. And to understand the differences between Event Hub, Service Bus and Event Grid, Saravana Kumar’s recent post sums it up quite nicely. Finally, if you want to get your hands dirty and have a play, Microsoft has provided a quickstart page to get you up and running.

Happy Eventing!

by Steef-Jan Wiggers | Sep 2, 2017 | BizTalk Community Blogs via Syndication

A few weeks ago the Azure Event Grid service became available in preview. This service enables centralized management of events in a uniform way. It scales with you as the number of events increases, which is made possible by the foundation Event Grid relies on: Service Fabric. Not only does it auto-scale, you also do not have to provision anything besides an Event Topic to support custom events (see my blog Routing an Event with a custom Event Topic). Event Grid is serverless: you only pay for each operation (ingress events, advanced matches, delivery attempts, management calls). The price is 30 cents per million operations in preview, and will be 60 cents once the service is GA.
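As a rough back-of-the-envelope aid, the per-million pricing above translates into the following Python sketch. It only uses the two prices quoted in this post and assumes every billable action (operation) is charged at the same rate; the function name and the example volume are hypothetical.

```python
PREVIEW_PRICE_PER_MILLION = 0.30  # USD, preview price quoted above
GA_PRICE_PER_MILLION = 0.60       # USD, announced price at general availability


def estimated_cost(operations: int, price_per_million: float) -> float:
    """Estimate the cost in USD for a number of billable operations."""
    return operations / 1_000_000 * price_per_million


# Example: five million operations in a month.
preview_cost = estimated_cost(5_000_000, PREVIEW_PRICE_PER_MILLION)
ga_cost = estimated_cost(5_000_000, GA_PRICE_PER_MILLION)
```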

Azure Event Grid can be described as an event broker that has one or more event publishers and subscribers. Event publishers are currently Azure Blob Storage, resource groups, subscriptions, Event Hubs and custom events. More will be added in the coming months, like IoT Hub, Service Bus, and Azure Active Directory. On the other side there are the consumers of events (subscribers), like Azure Functions, Logic Apps, and WebHooks. More will be added on the subscriber side too, with Azure Data Factory, Service Bus and Storage Queues for instance.

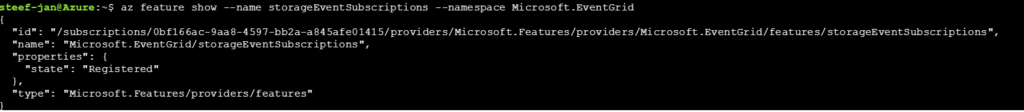

Azure Event Grid Storage registration

Currently, to capture Azure Blob Storage events you need to register your subscription through a preview program. Once your subscription is registered, which could take a day or two, you can leverage Event Grid with Azure Blob Storage, but only in West Central US!

The Microsoft documentation on Event Grid has a section “Reacting to Blob storage events”, which contains a walkthrough to try out the Azure Blob Storage as an event publisher.

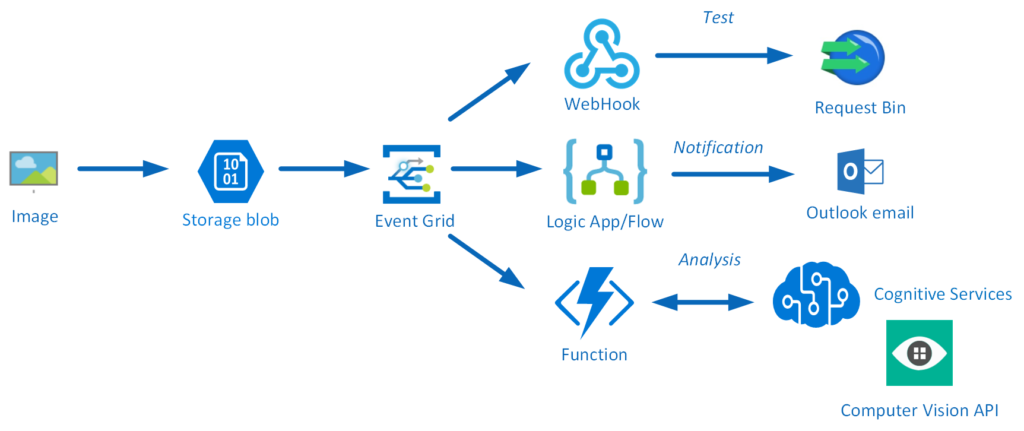

Azure Event Grid Storage Account Events Scenario

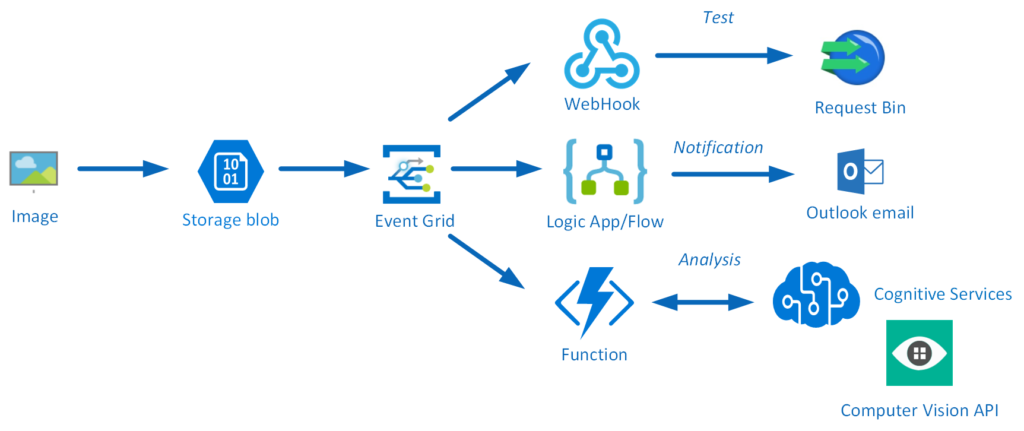

Having registered my subscription to the preview program, I started exploring its capabilities, as the Event Grid landing page explains several sample scenarios. I wanted to try out the serverless architecture sample, where one can use Event Grid to instantly trigger a serverless function to run image analysis each time a new photo is added to a blob storage container. Hence, I built a demo according to the diagram below.

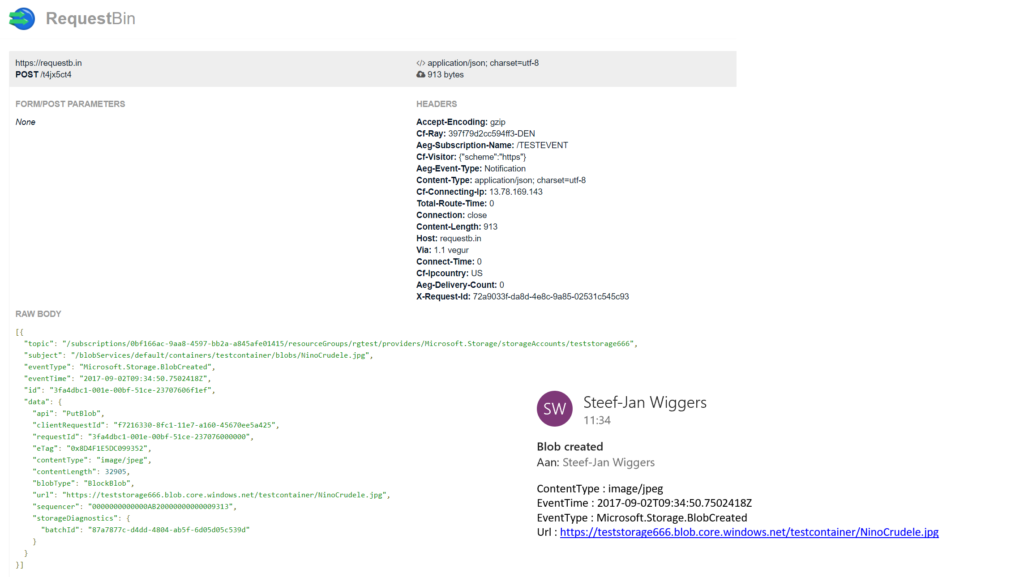

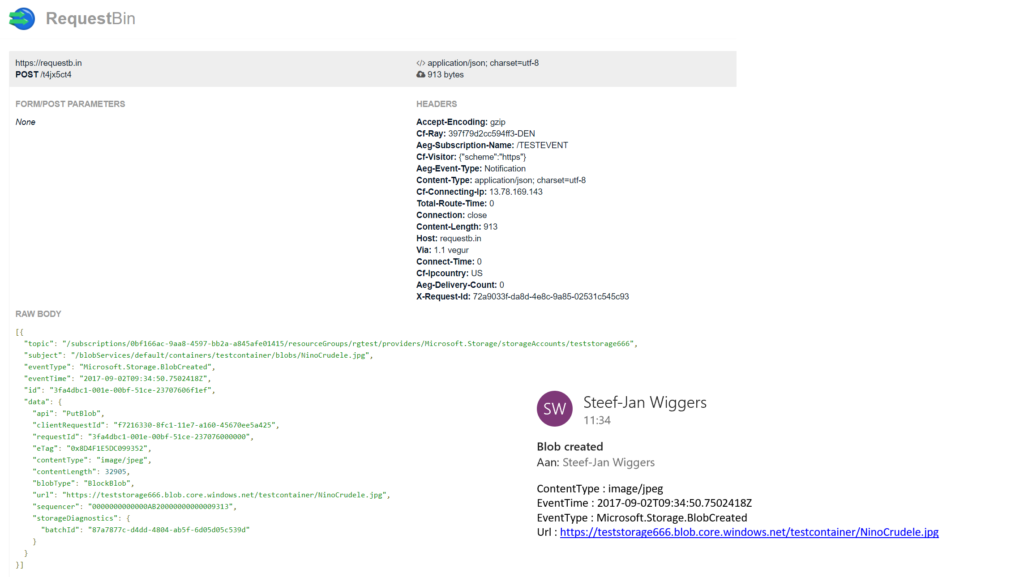

An image will be uploaded to a Storage blob container, which will be the event source (publisher). The Storage blob container belongs to a Storage Account that has the Event Grid capability. The Event Grid has three subscribers: a WebHook (Request Bin) to capture the output of the event, a Logic App to notify me that a blob has been created, and an Azure Function that analyzes the image created in blob storage by extracting the URL from the event and using it to analyze the actual image.

Intelligent routing

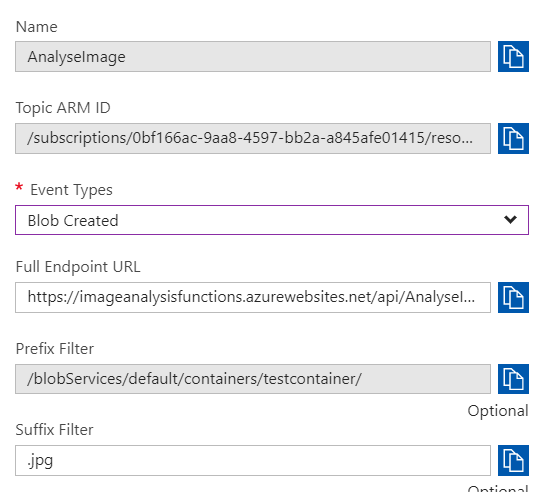

The screenshot below depicts the subscriptions on the events of the Blob Storage account. The WebHook subscribes to every event, while the Logic App and Azure Function are only interested in the BlobCreated event, in a particular container (prefix filter) and of a particular type (suffix filter).

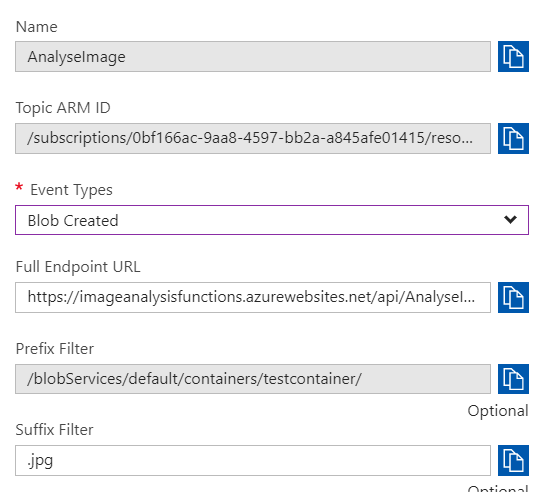

Besides being centrally managed, Event Grid offers intelligent routing, which is its core feature. You can use filters on event type or on subject pattern (prefix and suffix). The filters are the way for subscribers to indicate what type of event and/or subject they are interested in. In our scenario, the event subscription for the Azure Function is as follows.

- Event Type : Blob Created

- Prefix : /blobServices/default/containers/testcontainer/

- Suffix : .jpg

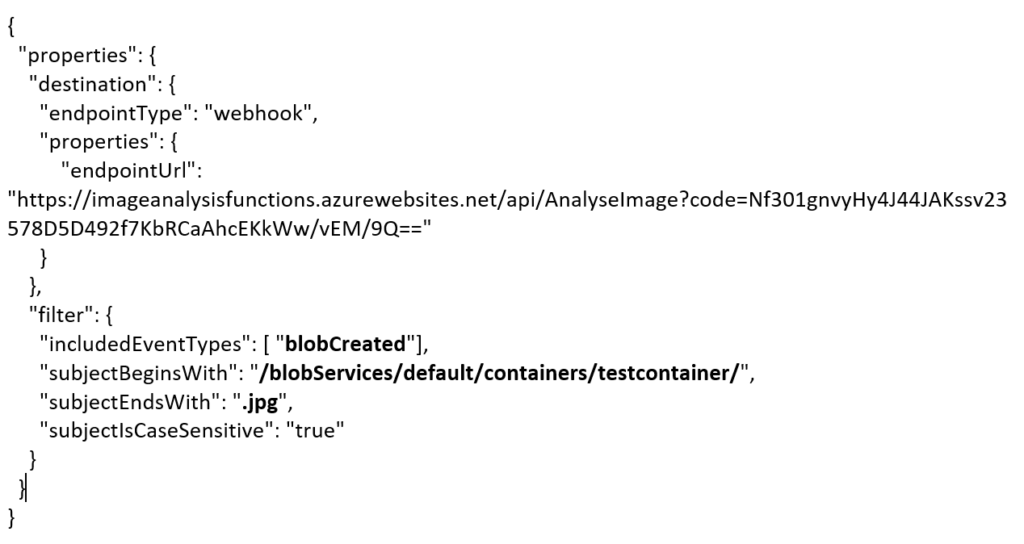

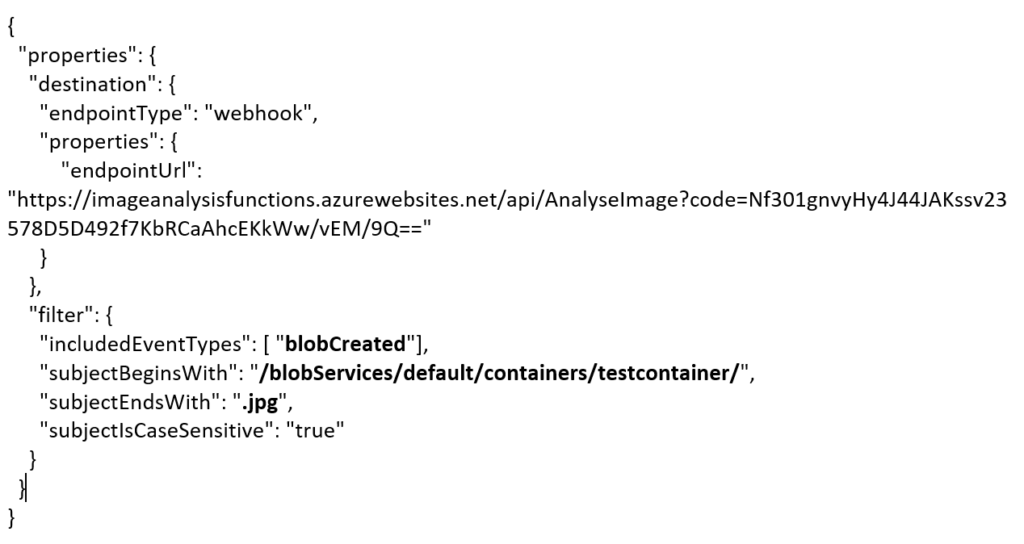

The prefix filter is matched against the beginning of the subject field in the event (subjectBeginsWith), and the suffix filter against the end of that same subject (subjectEndsWith). In the event above you can see that the subject has the specified prefix and suffix. See also the Event Grid subscription schema in the documentation, which explains the properties of the subscription schema. The subscription schema of the function is as follows:
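The effect of these filters can be illustrated with a small Python sketch. It is an approximation of what Event Grid does on the service side, not its implementation: the function name is hypothetical, and the comparison here is an exact, case-sensitive string match, which is an assumption.

```python
from typing import Any, Dict, Sequence


def matches_subscription(
    event: Dict[str, Any],
    included_event_types: Sequence[str] = (),
    subject_begins_with: str = "",
    subject_ends_with: str = "",
) -> bool:
    """Return True when the event passes the subscription filters.

    An empty list of event types and empty prefix/suffix strings mean
    'no filter', which is how the WebHook subscription behaves.
    """
    if included_event_types and event["eventType"] not in included_event_types:
        return False
    subject = event["subject"]
    return subject.startswith(subject_begins_with) and subject.endswith(subject_ends_with)


# The filter values used for the Azure Function subscription in this post.
FUNCTION_FILTER = {
    "included_event_types": ["Microsoft.Storage.BlobCreated"],
    "subject_begins_with": "/blobServices/default/containers/testcontainer/",
    "subject_ends_with": ".jpg",
}
```

A BlobCreated event for a .jpg in testcontainer passes FUNCTION_FILTER, while a BlobDeleted event for the same blob only reaches a subscriber without filters.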

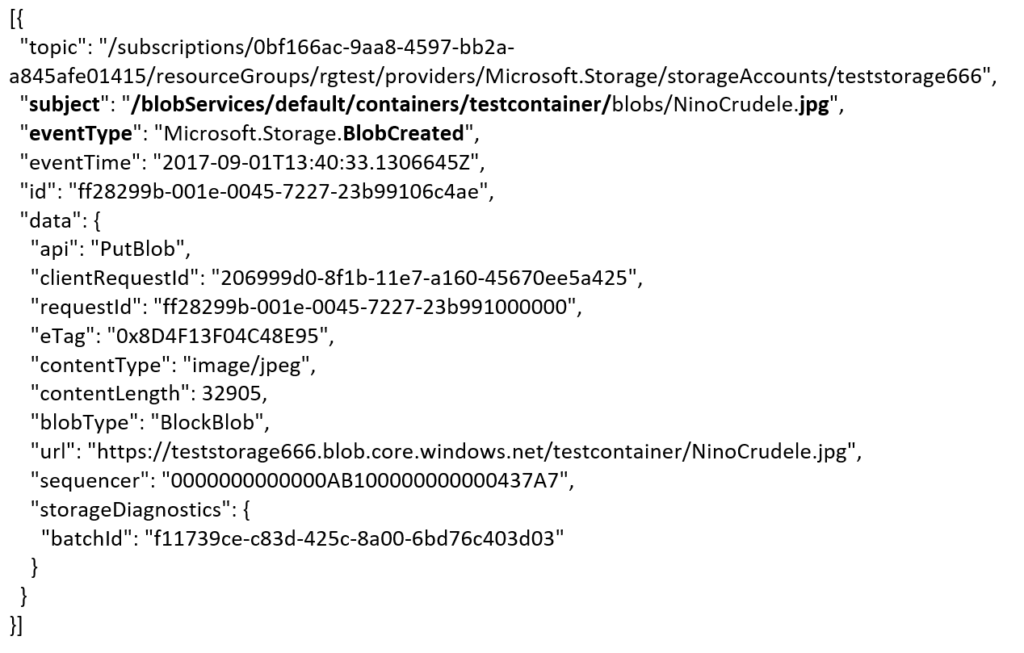

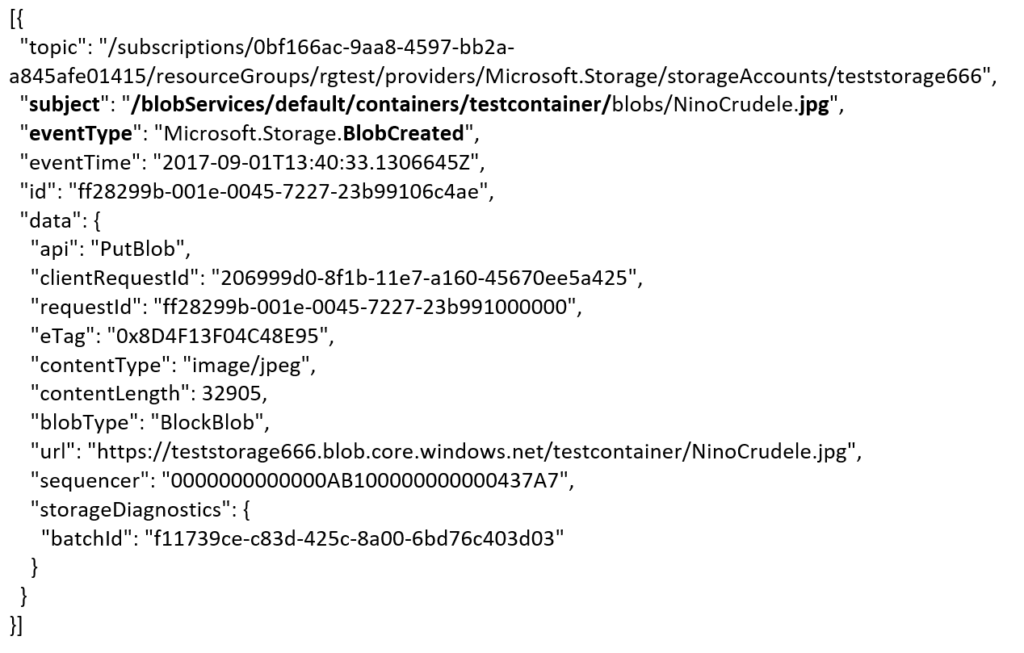

The Azure Function is only interested in a Blob Created event with a particular subject and content type (image .jpg). And this will be apparent once you inspect the incoming event to the function.

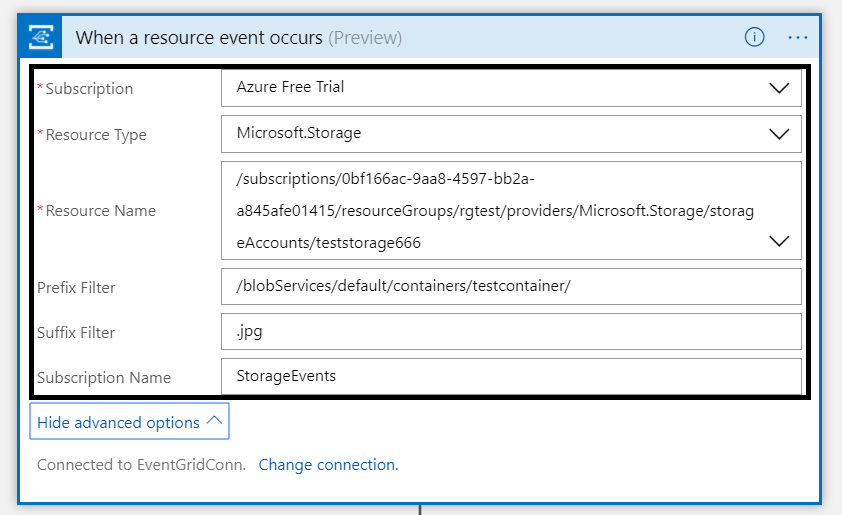

The same intelligence applies for the Logic App that is interested in the same event. The WebHook subscribes to all the events and lacks any filters.

The scenario solution

The solution consists of a storage account (blob), a registered subscription for Event Grid Azure Storage, a Request Bin (WebHook), a Logic App, and a Function App containing a function. The Logic App and Azure Function subscribe to the BlobCreated event with the filter settings.

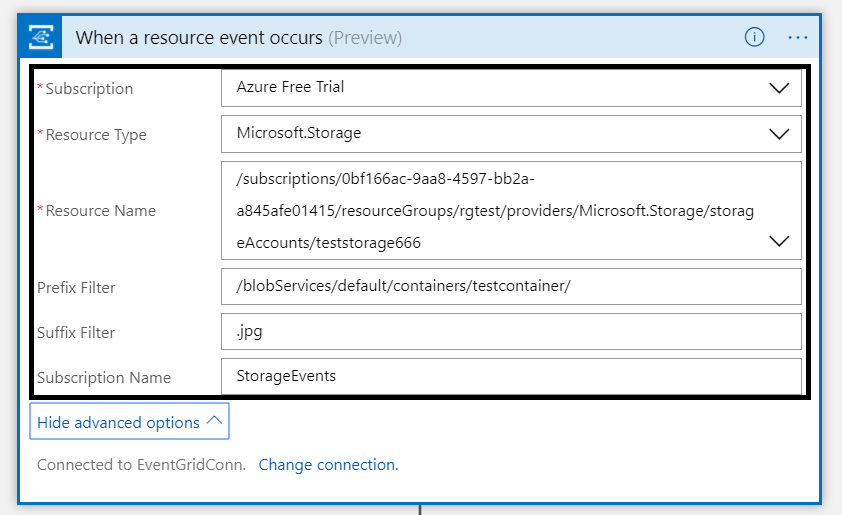

The Logic App subscribes to the event once the trigger action is defined. The definition is shown in the picture below.

Note that the resource name has to be specified explicitly (as a custom value), and the resource type Microsoft.Storage has to be set explicitly too. The resource types that are listed are Resource Groups, Subscriptions, Event Grid Topics and Event Hub Namespace, as Storage is still in a preview program. With this configuration the desired events can be evaluated and processed. In the case of the Logic App, it parses the event and sends an email notification.

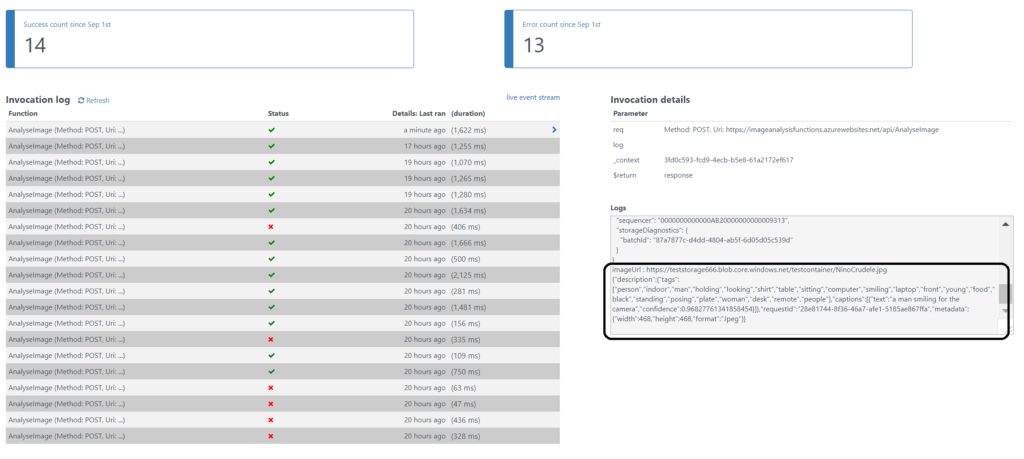

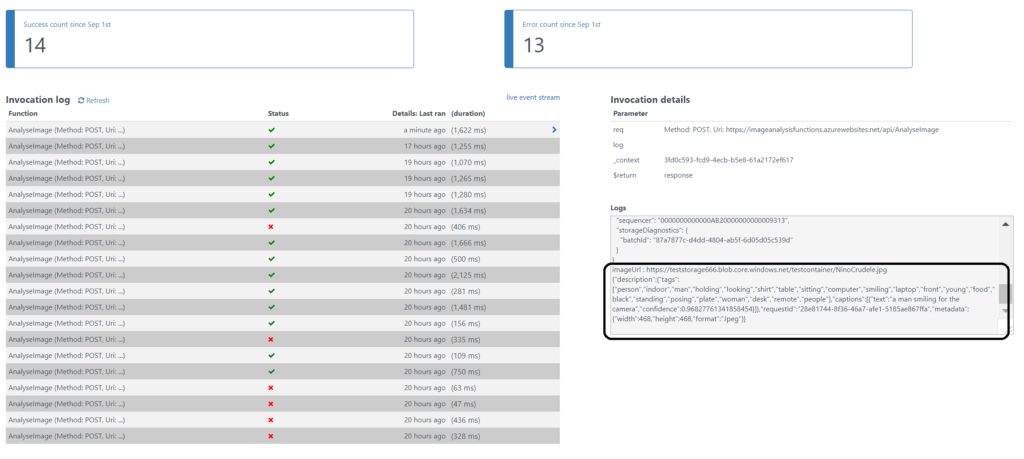

Azure Function Storage Event processing

The Azure Function is interested in the same event. As soon as a blob has been created and the event is pushed to Event Grid, the function processes it. The URL in the event, https://teststorage666.blob.core.windows.net/testcontainer/NinoCrudele.jpg, is used to analyze the image. The image is a picture of my good friend Nino Crudele.

This image will be streamed from the function to the Cognitive Services Computer Vision API. The result of the analysis can be viewed in the monitor tab of the Azure Function.
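The first step inside the function, getting the blob URL out of the incoming event, can be sketched as below. This is an illustrative Python fragment, not the function's actual code: it relies on the BlobCreated event carrying the blob address in data.url, the helper names are hypothetical, and the call to the Computer Vision API is deliberately left out.

```python
from typing import Any, Dict
from urllib.parse import urlparse


def blob_url_from_event(event: Dict[str, Any]) -> str:
    """Return the URL of the created blob from a BlobCreated event."""
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        raise ValueError(f"unexpected event type: {event.get('eventType')}")
    return event["data"]["url"]


def blob_name(url: str) -> str:
    """Return the last path segment of the blob URL, i.e. the file name."""
    return urlparse(url).path.rsplit("/", 1)[-1]
```

The returned URL is what would then be used to fetch the image and hand it to the image analysis service.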

The result of the analysis is that Nino is smiling for the camera, with confidence. The Logic App will parse the event and send an email. The Request Bin will show the raw event. And if I delete the blob, this will only be captured by the WebHook (Request Bin), as it is interested in any event on the Storage account.

Summary

Azure Event Grid is unique in its kind, as no other cloud vendor has this type of service that can handle events in a uniform and serverless way. It is still early days, as the service has only been in preview for a few weeks. However, with the expansion of event publishers and subscribers, management capabilities and other features, it will mature in the next couple of months. The service is currently only available in West Central US and West US, yet over the course of time it will become available in every region. And once it becomes GA, the price will increase.

Working with a Storage Account as the source (publisher) of events unlocked new insights into the Event Grid mechanisms. Moreover, it shows the benefits of having events handled by a service managed by Azure. The pub-sub model and the push of events are the key differentiators compared to the other two services, Service Bus and Event Hubs. No longer do you have to poll for events and/or develop a solution for it. To conclude, the Service Bus Team has completed the picture for messaging and event handling.

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field combining it with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 7 years.

by Bill Chesnut | Sep 1, 2017 | BizTalk Community Blogs via Syndication

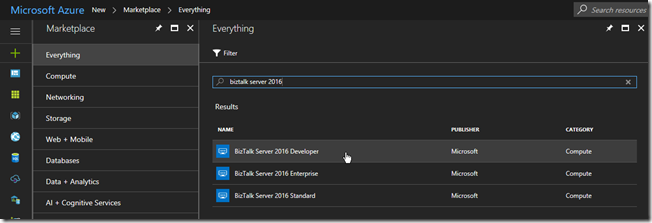

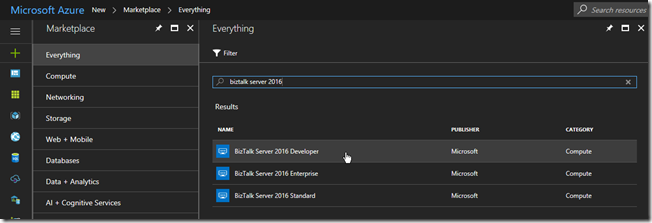

The process for creating a BizTalk 2016 Developer machine with 2016 is back to the way it was in the previous 2013R2 Azure Gallery Images: almost everything is installed and all you need to do is some configuration. As an update to my previous blog post, I will walk through the steps here:

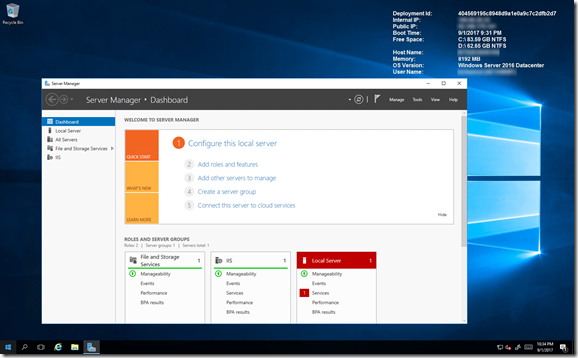

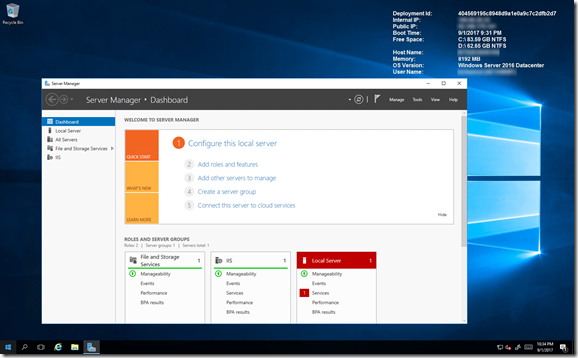

Start with the BizTalk Server 2016 Developer Azure Gallery Image

Create a new Virtual Machine from the Azure Gallery Image and log on to your newly created machine

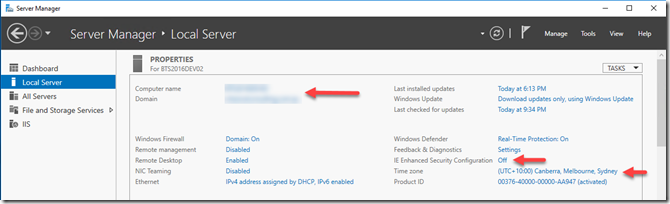

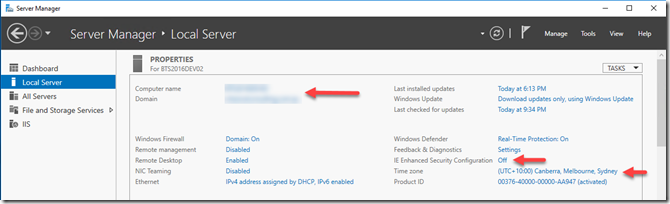

I then joined my machine to my Azure AD Domain Services Domain and updated some of the machine settings

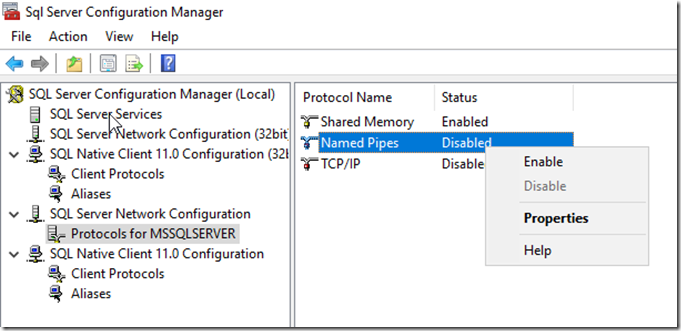

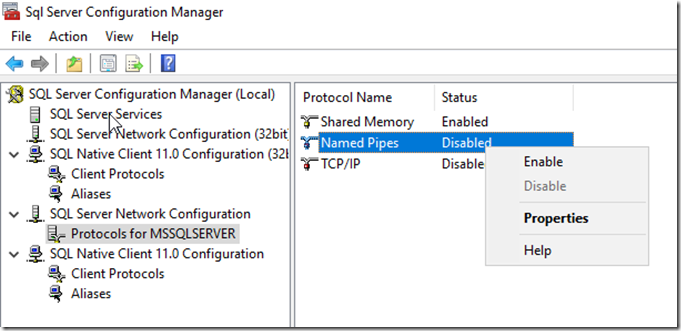

SQL 2016 is installed and configured. The only thing I found was around some of the enabled protocols, so open SQL Server Configuration Manager and enable Named Pipes and TCP/IP; this requires a restart of SQL Server to take effect

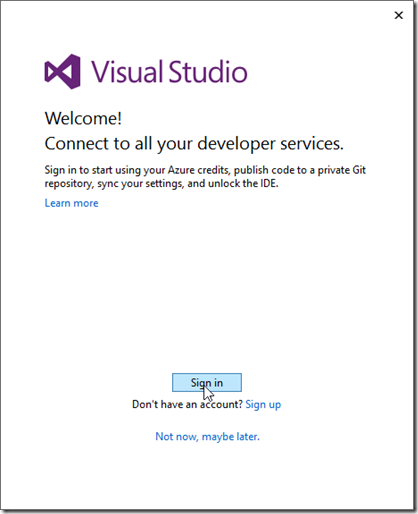

Visual Studio 2015 Professional is installed; you just need to sign in with your MSDN-linked email account to activate it

The remainder of building a BizTalk Server 2016 Developer machine is the same as in my previous blog post, starting from Configuring BizTalk Server 2016 Developer Edition – https://www.biztalkbill.com/2017/03/21/creating-biztalk-server-2016-developer-from-azure-gallery-image/