by Lex Hegt | Nov 30, 2017 | BizTalk Community Blogs via Syndication

| Application Health |

| Application Health |

|

|

|

All products deal with core Application Health scenarios very well. Nagios loses points for less visibility when determining the health of an application. |

| Platform Health |

| Host Instance Availability |

|

|

|

All products deal with Host Instance health scenarios. Good visibility exists when determining the health of a Host instance. The higher rank goes to BizTalk360, because of the Auto-correct feature, for which customization is needed with Nagios and SCOM. |

| Host Throttling |

|

|

|

All products can detect Host Throttling, although Nagios does so only by monitoring PerfMon counters. BizTalk360, however, provides a more comprehensive experience: besides monitoring Host Throttling, it lets you go back in history to determine exactly when and why BizTalk was throttling. |

| Single Sign On Service availability |

|

|

|

All products provide suitable functionality in this area. BizTalk360 gets the higher mark because it also has an Auto-correct feature, which tries to bring the SSO service back to the expected state after a failure. |

| Monitoring SQL Agent Jobs |

|

|

|

All applications provide this support. BizTalk360 provides a very friendly user experience, whereas SCOM provides deeper functionality through the SQL Server Management Pack.

Nagios takes the lower ranking as complex customization is needed. |

| Core Infrastructure Monitoring |

|

|

|

Nagios and SCOM provide more Core Infrastructure Monitoring, but since we are keeping this relevant to BizTalk, BizTalk360 provides enough visibility to support a BizTalk environment and earns a top score as well. |

| BizTalk Health Monitor Integration |

|

|

|

BizTalk Health Monitor (BHM) integration is part of BizTalk360’s core offering and is very easy to set up. No integration exists between BizTalk Health Monitor and either Nagios or SCOM; custom development is required to provide this functionality. |

| Log/Database Query Monitoring |

|

|

|

All products receive partial scores for these features. Currently BizTalk360 provides no capability around parsing log files. It does provide support for SQL Server databases, but not others. Nagios and SCOM both provide support for both Log and Database Monitoring. The downside is that these features often require custom scripts to be written in order to support the requirement. SCOM loses marks on the usability aspects of implementing these functions, while Nagios loses marks as a separate product will be needed for Log monitoring. |

| Analytics |

|

|

|

Nagios has no BizTalk oriented analytics.

SCOM uses a data warehouse for reporting and long-term data storage. Because of the extended reporting capabilities, though not easy to use, SCOM gets the higher grade.

BizTalk360 provides a customizable Analytics Dashboard, and its many widgets give insight into the performance and processing of messages through BizTalk. |

| Operating Environment |

| Process Monitoring |

|

|

|

This is a core feature in BizTalk360 that detects when something is supposed to happen but does not. An example is not receiving a file from a trading partner when you expect to. Your environment can be completely healthy, but if the trading partner does not provide the file, traditional Monitoring techniques used by SCOM will not detect this. BizTalk360 will detect this scenario and send the appropriate notifications. |

| Maintenance Mode/Negative Monitoring |

|

|

|

Nagios supports scheduling downtime, but has no Negative Monitoring for BizTalk Server resources.

Both SCOM and BizTalk360 provide functionality in these areas. BizTalk360 provides complete coverage whereas SCOM is only providing partial coverage.

SCOM is able to handle the Maintenance Mode requirements, but not the complete Negative Monitoring requirements. While a SCOM administrator can provide an override when it comes to a Host Instance or Receive Location, if someone starts or enables that BizTalk Service no notifications are generated. |

| Synthetic Transactions |

|

|

|

Nagios has support for Synthetic Transactions and other types of web site monitoring.

SCOM does provide additional features like running Synthetic Transactions from different servers within the environment. SCOM also provides deeper interrogation of these downstream applications by using .Net Application Performance Monitoring.

BizTalk360 provides good capabilities that will satisfy most requirements. Besides checking for expected HTTP return codes, it is also possible to fire custom requests and check for certain responses/response times. |

| Miscellaneous |

| Integration with other popular Monitoring Platforms |

|

|

|

SCOM provides broad integration with many other Monitoring Platforms and Service Desk applications.

BizTalk360 integrates with HP Operations Manager, Slack, ServiceNow, Microsoft Teams and provides a simple and elegant way of doing so. One could also develop custom Notification Channels to integrate with other Service Desk applications.

Nagios has slightly fewer features in this area and therefore gets the lower score. |

| Composite Dashboards |

|

|

|

All products provide this capability. BizTalk360 gets a higher grade for the simplicity of the tool.

To build these types of Dashboards within SCOM, you need to be an advanced user of the system. Nagios Dashboards are not BizTalk oriented. |

| Web based User Interfaces |

|

|

|

All products provide Web based interfaces. BizTalk360 gets the top grade because administrators can perform most of their BizTalk related activities with just BizTalk360, while with Nagios/SCOM they also need access to tools like BizTalk Admin Console, SQL Server, Event Viewer, Performance Monitor, portals, etc. |

| Summary Reports |

|

|

|

Nagios has all kinds of reports, but these are not BizTalk oriented.

SCOM does not provide a holistic report out of the box. To get this type of report out of SCOM, you need to build a custom report.

BizTalk360 provides a scheduled Summary Report that will give subscribers an indicator that their environment is Healthy. This gives users a confirmation that everything is operating as expected within the environment. |

| Governance |

|

|

|

Nagios and SCOM both provide user management, but no BizTalk operations can be performed by these users.

BizTalk360 provides a much finer grained approach to Governance and Auditing. |

| Knowledgebase |

|

|

|

Nagios doesn’t have a Knowledge Base, but both SCOM and BizTalk360 include the ability to build up a Company Knowledge base.

SCOM also includes a Product Knowledge base provided by the BizTalk Product Group so it gets the higher score in this area.

BizTalk360 provides the capability to associate KB articles to certain events, like error codes of suspended instances etc. and therefore also deserves the highest rank. |

by Sriram Hariharan | Nov 30, 2017 | BizTalk Community Blogs via Syndication

It’s a bit longer this time! Exactly two months after the previous Azure Logic Apps Monthly Update from #MSIgnite, the Logic Apps team were back for their webcast on November 29, 2017. As always, expectations were high for a look at the updates coming to the Logic Apps portal. There was no Jeff Hollan in this session, so it was up to Kevin Lam and Derek Li from the team to deliver the updates. So, buckle up! Here we take a look at the updates!

What’s New in Azure Logic Apps?

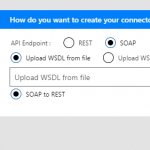

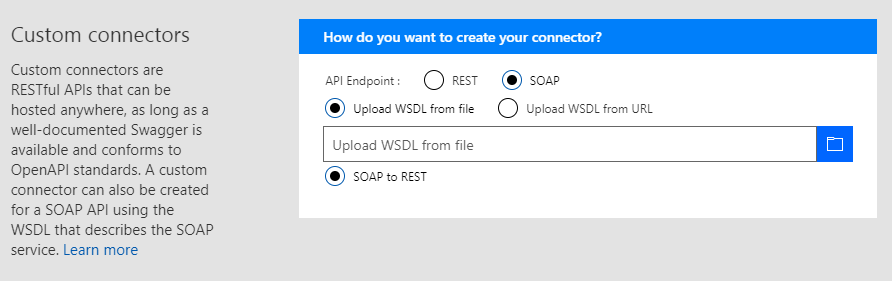

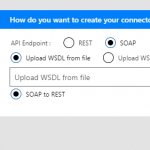

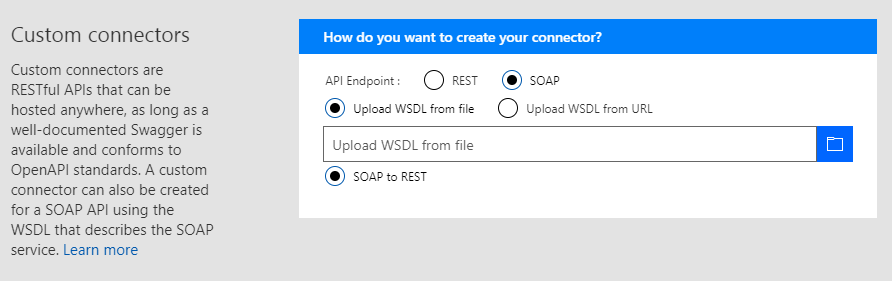

- SOAP!! Finally – The most requested and voted feature in UserVoice is now available in Azure Logic Apps. You can use the SOAP functionality over and above the already existing custom connector functionality.

- Azure Functions with Swagger – You can automatically render Azure Functions annotated with Swagger. The properties exposed by your Azure Function show up on the card, which makes the Azure Function look richer.

- HTTP OAuth with Certificates – OAuth2 is now supported for certificates

- Liquid Templates – This feature was released about a month ago. Liquid templates are used in Azure Logic Apps as an XSLT for JSON objects. You can create transformations on JSON objects (for example, JSON-to-JSON mapping, as well as mapping to other formats) without having to introduce a custom Azure Function. In addition, you can use Liquid templates to create a document/email template with replaceable parameters and produce output that fits your requirements. Liquid templates are part of the Integration Account.

- Monitoring View – The Logic Apps team have added some cool functionalities in the monitoring view such as –

- Expression Tracing – You can actually get to see the intermediate values for complex expressions

- Decode/render XML with syntax coloring

- Do-until loop iterations

- For-each failure navigation

- Bulk resubmit in OMS

- Portal workflow settings page – A new page where you can find all the workflow-level settings and make configuration changes in one place, so you no longer have to go to multiple places to make specific configuration changes.

- New URI expressions – trim, uriHost, uriPathAndQuery, uriPort, uriScheme, uriQuery

- New Object Expressions – propertySet, propertyAdd, propertyRemove. These expressions help you to manipulate the object throughout the life of the Logic App.

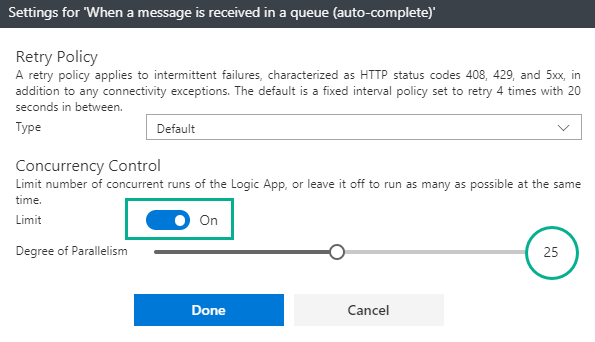

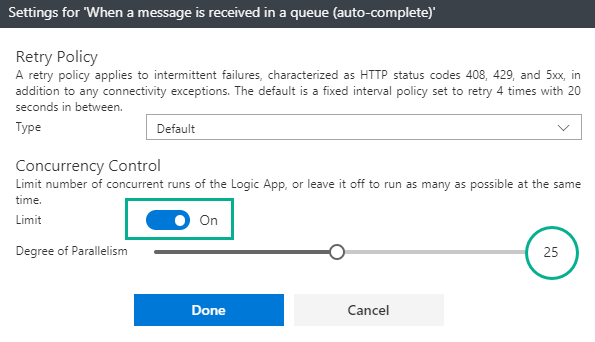

- Configurable parallelism for for-each loops and polling triggers – A toggle button to configure the degree of parallelism in a range of 1–50.

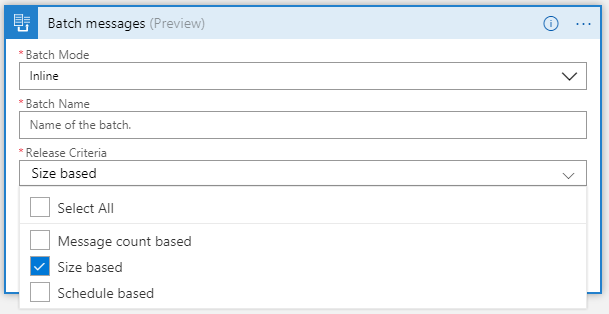

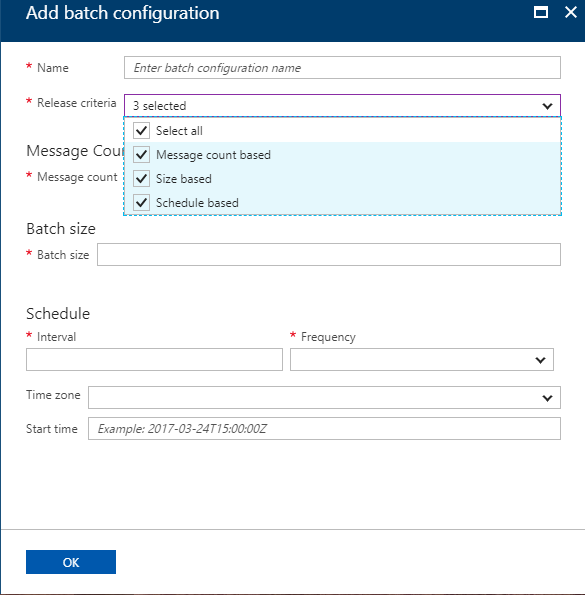

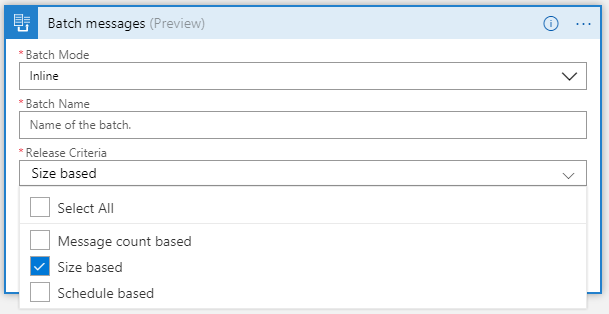

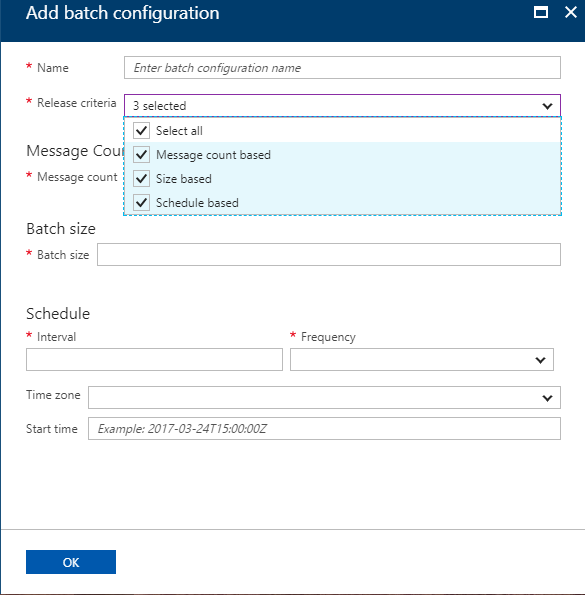

- Batching – a new trigger has been added to support the size based release. Furthermore, you can configure all the batches (size based, time based and count based) centrally in the Integration Account.
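To make the new URI expressions listed above more concrete, here is a rough Python analogy built on the standard library's `urllib.parse`. It only sketches which part of a URI each expression name refers to. It is not Logic Apps syntax, the sample URL is made up, and the exact return formats of the Logic Apps functions (for example, whether a leading `?` is part of the query) may differ.

```python
from urllib.parse import urlsplit


def uri_parts(uri: str) -> dict:
    """Split a URI into the pieces that the new expression names suggest."""
    parts = urlsplit(uri)
    # Re-join path and query the way a "path and query" value is usually written.
    path_and_query = parts.path + (f"?{parts.query}" if parts.query else "")
    return {
        "uriScheme": parts.scheme,
        "uriHost": parts.hostname,
        "uriPort": parts.port,
        "uriPathAndQuery": path_and_query,
        "uriQuery": parts.query,
    }


# Hypothetical sample URL, for illustration only.
sample_parts = uri_parts("https://example.com:8443/orders/list?status=open")
for name, value in sample_parts.items():
    print(f"{name}: {value}")
```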

New Connectors

- Cognitive Services

- Content Moderator

- Custom Vision

- QnA Maker

- Azure Kusto

- Azure Container Instances – Manage containers right from Azure Logic Apps (create, group, manage (run workloads) and delete them)

- Microsoft Kaizala

- Marketo

- Outlook – webhook trigger

- SQL – dynamic schema for stored procedures

- Blob – create block blob

- Workday Human Capital Management (HCM)

- Pitney Bowes Data Validation

- D&B Optimizer

- Docparser

- iAuditor SafetyCulture

- Enadoc

- Derdack Signl4

- Tago

- Metatask

- Teradata – write operations

Derek Li showed a cool demo of how the configurable degree of parallelism works for for-each loops. You can watch the demo from 14:28 in the video.

[embedded content]
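As a conceptual illustration of what a configurable degree of parallelism means for a for-each loop, the following Python sketch runs an action over a list with a fixed upper bound on concurrent iterations. It is an analogy only: the function names are made up, it uses a thread pool, and it makes no claim about how the Logic Apps runtime schedules iterations. The 1–50 range check mirrors the range mentioned in the update.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def for_each(items, action, degree_of_parallelism):
    """Apply action to every item, running at most degree_of_parallelism at once."""
    if not 1 <= degree_of_parallelism <= 50:
        raise ValueError("degree_of_parallelism must be between 1 and 50")
    with ThreadPoolExecutor(max_workers=degree_of_parallelism) as pool:
        # pool.map returns results in the same order as the input items.
        return list(pool.map(action, items))


# Demo: count how many iterations overlap at any moment.
lock = threading.Lock()
running = 0
peak = 0


def slow_double(n):
    global running, peak
    with lock:
        running += 1
        peak = max(peak, running)
    time.sleep(0.01)  # stand-in for a real action call
    with lock:
        running -= 1
    return n * 2


results = for_each(range(10), slow_double, degree_of_parallelism=3)
print(results, "peak concurrency:", peak)
```

Setting the degree to 1 makes the loop effectively sequential, which is the usual choice when iterations must not overlap.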

Logic Apps New Offerings (New Business Model)

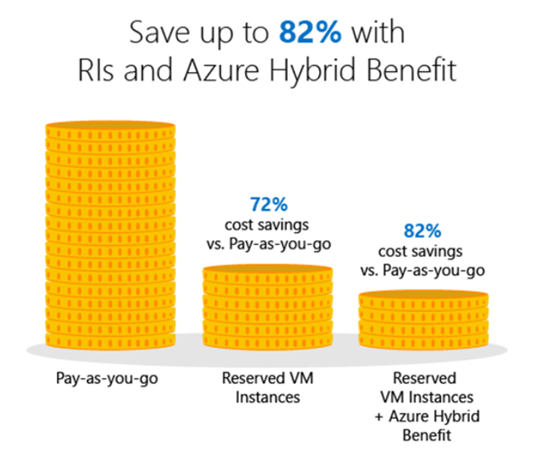

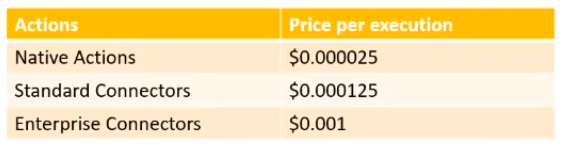

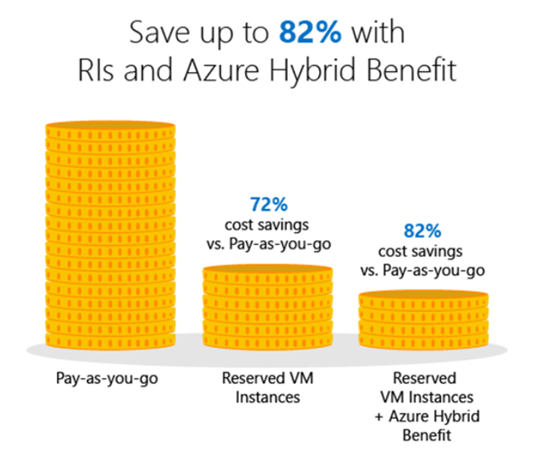

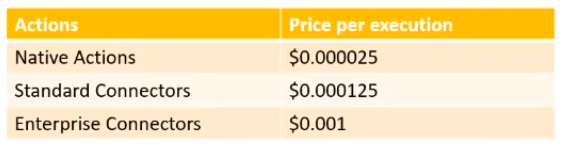

The cost of per-action calls to Azure Logic Apps has been reduced, and a clear distinction has been made between Native calls (reduced from $800 to $25 per million actions), Standard Connector calls (reduced from $800 to $125 per million actions) and Enterprise Connector calls.

| | Standard Integration Account | Basic Integration Account |
| --- | --- | --- |
| Schemas and maps | 500 schemas & 500 maps | 50 schemas & 50 maps |
| Partners | 500 | 2 |
| Agreements | 500 | 1 |
| Price | $1.35/hour | $0.404/hour (70% less than Standard) |
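The "70% less" figure can be checked with a few lines of arithmetic. The sketch below uses the two hourly prices from the comparison above. The 730 hours-per-month figure is an assumption used only to show a rough monthly cost; it is not an official billing rule.

```python
STANDARD_PER_HOUR = 1.35  # USD, from the comparison above
BASIC_PER_HOUR = 0.404    # USD, from the comparison above
HOURS_PER_MONTH = 730     # assumption: average number of hours in a month


def saving_percent(standard: float, basic: float) -> float:
    """Return how much cheaper basic is than standard, in percent."""
    return (1 - basic / standard) * 100


def monthly_cost(rate_per_hour: float, hours: int = HOURS_PER_MONTH) -> float:
    """Return the cost of running one account for the given number of hours."""
    return rate_per_hour * hours


saving = saving_percent(STANDARD_PER_HOUR, BASIC_PER_HOUR)
print(f"Basic is about {saving:.0f}% cheaper than Standard")
print(f"Standard: ${monthly_cost(STANDARD_PER_HOUR):.2f} per month")
print(f"Basic:    ${monthly_cost(BASIC_PER_HOUR):.2f} per month")
```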

What’s in Progress?

- Complex Conditions within the designer – A builder experience similar to the Visual Studio query builder, so that users can construct the conditions they want while building their applications

- Configurable lifetime – Currently the lifetime is set to 90 days. In future, this can be customized anywhere between 7 days and 365 days.

- Degrees of parallelism for split-on and request triggers

- Tracked properties in designer

- Snippets – patterns based approach (templates) to insert into a Logic App

- Updated Resource Blade

- On Premises Data Gateway

- Support for Custom Connectors (including SOAP)

- High Availability – create a gateway cluster with automatic failover capability

- Custom assemblies in Maps

- Support for XSLT 3

- New liquid actions – text/json

- Connectors

- SOAP Passthrough

- Office365 Excel

- Lithium

- Kronos

- K2

- Zoho

- Citrix ShareFile

- Netezza

- PostgreSQL

Watch the recording of the session here

[embedded content]

Community Events Logic Apps team are a part of

- Microsoft Tech Summit 2017-18 (happening Worldwide) – Logic Apps & API Management

- Gartner Application Strategies and Solutions Summit 2017 – December 4 — 6, 2017 at Las Vegas, NV

Feedback

If you are working on Logic Apps and have something interesting, feel free to share it with the Azure Logic Apps team via email, or tweet to them at @logicappsio. You can also vote for features that you feel are important and that you’d like to see in Logic Apps here.

The Logic Apps team are currently running a survey to know how the product/features are useful for you as a user. The team would like to understand your experiences with the product. You can take the survey here.

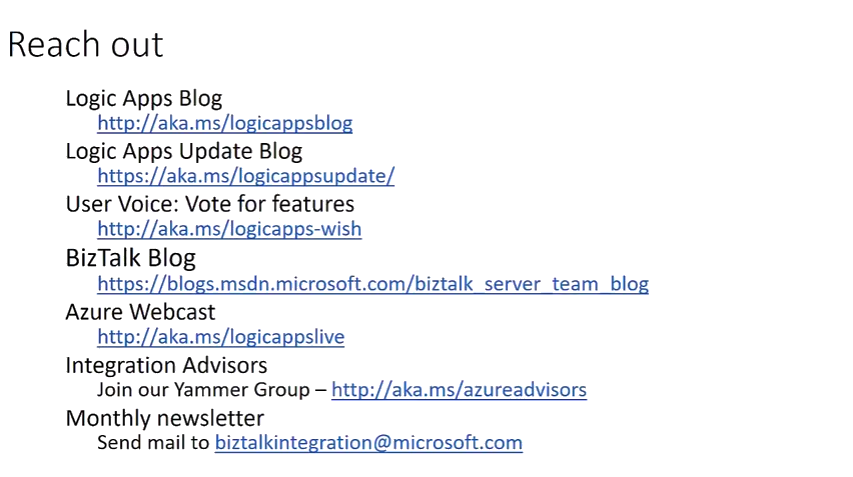

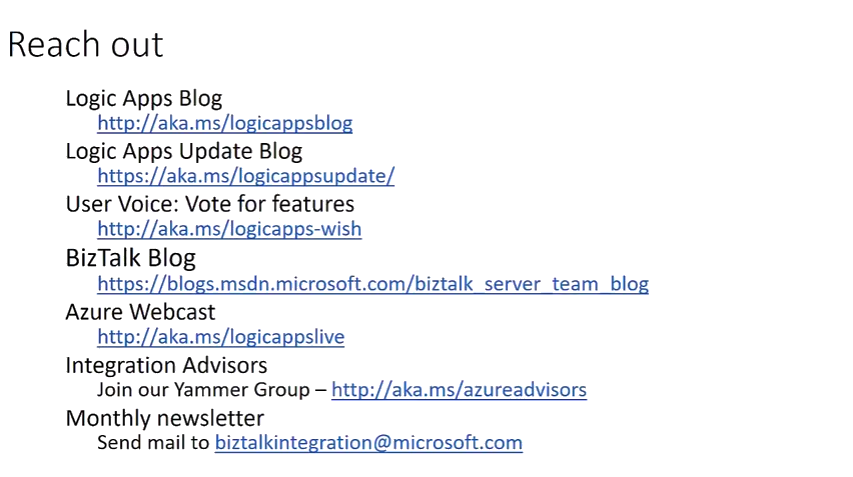

If you ever wanted to get in touch with the Azure Logic Apps team, here’s how you do it!

Previous Updates

In case you missed the earlier updates from the Logic Apps team, take a look at our recap blogs here –

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as documentation specialist for different products and domains. Writing is his passion and he believes in the following quote – “As wings are for an aircraft, a technical document is for a product, be it a product document, user guide, or release notes”.

by Sandro Pereira | Nov 27, 2017 | BizTalk Community Blogs via Syndication

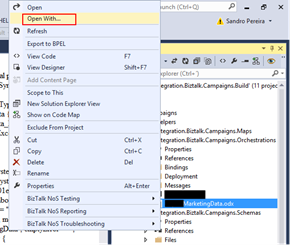

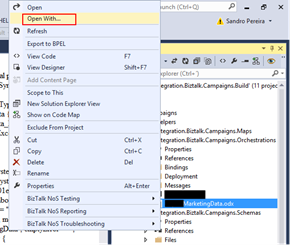

While trying to open a BizTalk Orchestration inside Visual Studio, normally a simple double-click operation that opens the BizTalk Orchestration Designer, I ran into a well-known behavior: the orchestration didn’t open in the BizTalk Orchestration Designer; instead, it opened in the XML (Text) Editor.

This behavior has been happening to me a lot these last days. At first, I simply didn’t care because I know how to quickly work around it; after a few times it just became annoying; and after a few days, several orchestrations and different projects, I got intrigued and entered “Sherlock Holmes” mode.

Cause

Well, I don’t know exactly what causes this problem, but I suspect that this behavior happens more often when we migrate projects, or when we open projects from previous BizTalk Server versions in recent versions of Visual Studio, especially if we skip one or more versions, for example from BizTalk Server 2010 to 2013 R2.

It may happen because of different configurations inside the structure of the “<BizTalk>.btproj” file.

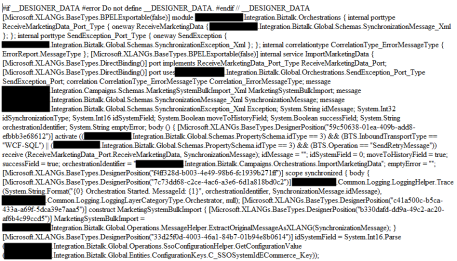

The cause of this strange behavior is, without a doubt, related to a mismatched setting inside the structure of the “<BizTalk>.btproj” file, in the XLang nodes. Each orchestration inside your project corresponds to one XLang node specifying the name of the file, type name and namespace. Normally it looks like this in recent versions of BizTalk Server:

    <ItemGroup>
      <XLang Include="MyOrchestrationName.odx">
        <TypeName>MyOrchestrationName</TypeName>
        <Namespace>MyProjectName.Orchestrations</Namespace>
      </XLang>
    </ItemGroup>

But sometimes we will find an additional element:

    <ItemGroup>
      <XLang Include="MyOrchestrationName.odx">
        <TypeName>MyOrchestrationName</TypeName>
        <Namespace>MyProjectName.Orchestrations</Namespace>
        <SubType>Designer</SubType>
      </XLang>
    </ItemGroup>

When the SubType element is present, this strange behavior of automatically opening the orchestration with the XML (Text) Editor occurs.

Solution

First, let’s describe the easy workaround to this annoying problem:

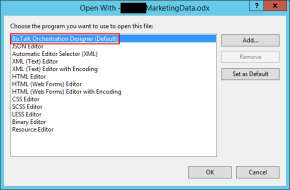

- On the solution explorer, right-click on the orchestration name and then select “Open With…” option

- On the “Open with …” window, select “BizTalk Orchestration Designer” option and click “OK”.

This will force Visual Studio to actually open the orchestration with the Orchestration Designer. But again, this is only a workaround, because the next time you try to open the orchestration inside Visual Studio it will open again with the XML (Text) Editor.

You may think that, inside the “Open with …” window, if we:

- Select the “BizTalk Orchestration Designer” option and click “Set as Default”

- And then click “OK”

it will solve the problem. It will not: if you look at the picture above, the designer is already configured as the default viewer.

So, to solve this annoying behavior once and for all, you need to:

- Open the “<BizTalk>.btproj” (or project) file(s) that contain the orchestration(s) with this behavior with Notepad, Notepad++ or another text editor of your preference.

- Remove the <SubType>Designer</SubType> line

- Save the file and reload the project inside Visual Studio

If you then try to open the orchestration it will open with the BizTalk Orchestration Designer.
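If several projects are affected, the manual edit described above can be scripted. The following is a minimal sketch, not an official tool: the function name, the sample project text and the file path in the usage comment are hypothetical. It assumes the project file looks like the snippets shown earlier and removes the `<SubType>Designer</SubType>` line only inside `XLang` items, leaving any other item that happens to carry a `SubType` untouched.

```python
import re

# One <XLang ...>...</XLang> item; the lookbehind skips self-closing <XLang ... /> items.
XLANG_ITEM = re.compile(r"<XLang\b[^>]*(?<!/)>.*?</XLang>", re.DOTALL)
# The offending element together with its indentation and line ending.
SUBTYPE_DESIGNER = re.compile(r"[ \t]*<SubType>\s*Designer\s*</SubType>[ \t]*\r?\n?")


def strip_designer_subtype(project_xml: str) -> str:
    """Remove <SubType>Designer</SubType> from XLang items only."""
    return XLANG_ITEM.sub(
        lambda match: SUBTYPE_DESIGNER.sub("", match.group(0)), project_xml
    )


# Hypothetical project fragment, for illustration only.
sample = """<ItemGroup>
  <XLang Include="MyOrchestrationName.odx">
    <TypeName>MyOrchestrationName</TypeName>
    <Namespace>MyProjectName.Orchestrations</Namespace>
    <SubType>Designer</SubType>
  </XLang>
  <Schema Include="Order.xsd">
    <SubType>Designer</SubType>
  </Schema>
</ItemGroup>
"""

cleaned = strip_designer_subtype(sample)
print(cleaned)

# Usage sketch (back up the project file first; the path is hypothetical):
# from pathlib import Path
# path = Path("MyProject.btproj")
# path.write_text(strip_designer_subtype(path.read_text(encoding="utf-8")), encoding="utf-8")
```

After running something like this, reload the project in Visual Studio as described in the last step.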

Author: Sandro Pereira

Sandro Pereira lives in Portugal and works as a consultant at DevScope. In the past years, he has been working on implementing Integration scenarios both on-premises and cloud for various clients, each with different scenarios from a technical point of view, size, and criticality, using Microsoft Azure, Microsoft BizTalk Server and different technologies like AS2, EDI, RosettaNet, SAP, TIBCO etc. He is a regular blogger, international speaker, and technical reviewer of several BizTalk books all focused on Integration. He is also the author of the book “BizTalk Mapping Patterns & Best Practices”. He has been awarded MVP since 2011 for his contributions to the integration community.

by Jeroen | Nov 27, 2017 | BizTalk Community Blogs via Syndication

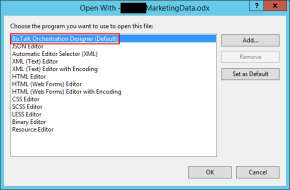

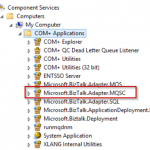

In a previous blogpost I wrote about how you could use HIS2016 together with BizTalk 2013R2 in order to use the MQSC adapter with IBM WebSphere MQ9.

This post is about upgrading an existing configured BizTalk 2013R2 environment without removing your current (port) configuration. By reading this description you should already know that this involves some “manual” actions…

These are the steps I followed to upgrade an already configured BizTalk 2013R2 with lots of MQSC SendPorts/ReceiveLocations where I didn’t want to remove all the current binding configuration (which is what you need to do if you want to remove an adapter and follow the normal installation path…).

Before you start

- Make sure everything is backed-up correctly

- Stop everything: host instances, SQL jobs, SSO, ...

Uninstall the old

- Uninstall the currently installed IBM MQ Client

- Uninstall HIS2013 (but don’t unconfigure anything, just leave the MQSC adapter untouched)

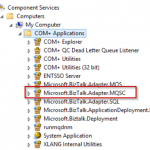

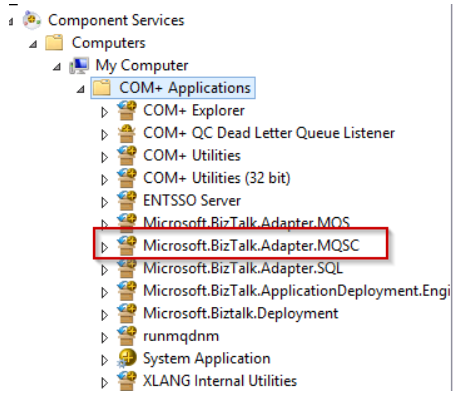

- Remove the MQSC COM+ Application

Uninstall MQSC COM+ Application:

    %windir%\Microsoft.NET\Framework64\v4.0.30319\RegSvcs.exe /u "%snaroot%\Microsoft.BizTalk.Adapter.MQSC.dll"

Install the new

- Install .NET 4.6.2 (.NET 4.6 is the minimum requirement for HIS 2016)

- Install HIS 2016 (no configuration, more details here)

- Install IBM MQ Client 8.0.0.7 (64 Bit)

- Install HIS 2016 CU1

The “manual” part

- Update the MQSC Adapter info in the BizTalkMgmtDb “Adapter” table

- Update the AssemblyVersion in InboundTypeName/OutboundTypeName columns to the newer version.

    SELECT * FROM [BizTalkMgmtDb].[dbo].[adm_Adapter] WHERE name LIKE 'MQSC'

New InboundTypeName:

    Microsoft.BizTalk.Adapter.Mqsc.MqscReceiver, Microsoft.BizTalk.Adapter.MQSC, Version=10.0.1000.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35, Custom=null

New OutboundTypeName:

    Microsoft.BizTalk.Adapter.Mqsc.MqscTransmitter, Microsoft.BizTalk.Adapter.MQSC, Version=10.0.1000.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35, Custom=null

- Register the MQSC COM+ Application

Install MQSC COM+ Application:

    %windir%\Microsoft.NET\Framework64\v4.0.30319\RegSvcs.exe "%snaroot%\Microsoft.BizTalk.Adapter.MQSC.dll"
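The type-name change in the manual step only touches the `Version=` token of the assembly-qualified name. As a hedged illustration, the small Python helper below performs that substitution on a string, so the new value can be prepared and reviewed before it is pasted into the database. The helper name is made up, and the "old" value with `Version=9.0.1000.0` is an assumption for demonstration; check the actual value returned by the SELECT statement in your own environment.

```python
import re

# Matches the four-part assembly version token, e.g. "Version=9.0.1000.0".
VERSION_TOKEN = re.compile(r"Version=\d+(?:\.\d+){3}")


def bump_assembly_version(type_name: str, new_version: str) -> str:
    """Return type_name with its Version=... token replaced by new_version."""
    updated, count = VERSION_TOKEN.subn(f"Version={new_version}", type_name, count=1)
    if count != 1:
        raise ValueError("type name has no Version=x.x.x.x token")
    return updated


# Assumed old value (hypothetical): same type name with an older version number.
old_inbound = (
    "Microsoft.BizTalk.Adapter.Mqsc.MqscReceiver, Microsoft.BizTalk.Adapter.MQSC, "
    "Version=9.0.1000.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35, Custom=null"
)

new_inbound = bump_assembly_version(old_inbound, "10.0.1000.0")
print(new_inbound)
```

Only the version changes; the type, assembly name, culture and public key token stay exactly as they were.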

Finished!

This worked for a running setup at my current customer. I cannot give you any guarantees that this will work in your environment! Always test this kind of upgrade before applying it in your production environment!

by Sriram Hariharan | Nov 22, 2017 | BizTalk Community Blogs via Syndication

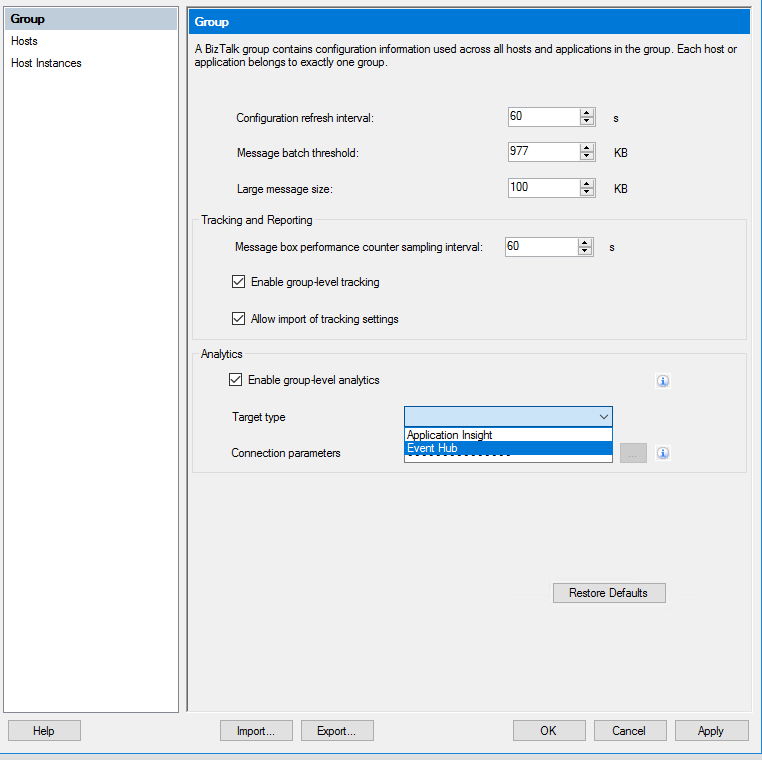

Earlier today, Microsoft released Feature Pack 2 for BizTalk Server 2016. This release comes 7 months after Microsoft announced Feature Pack 1 for BizTalk Server 2016.

The BizTalk Server 2016 Feature Pack 2 (FP2) contains all functionalities of Feature Pack 1 and all the fixes in the Cumulative Update 3. This FP2 can be installed on BizTalk Server 2016 Enterprise and Developer Edition. You can download the latest version from here.

What’s available in BizTalk Server 2016 Feature Pack 2

In BizTalk Server 2016 Feature Pack 2, Microsoft is adding the following capabilities –

- Deploy applications easily into multiple servers using Deployment Groups

- Backup to Azure Blob Storage account

- Azure Service Bus adapter now supports the Service Bus Premium capabilities

- Full support for Transport Layer Security 1.2 authentication and encryption

- Support for HL7 2.7.1

- Expose SOAP endpoints with API Management

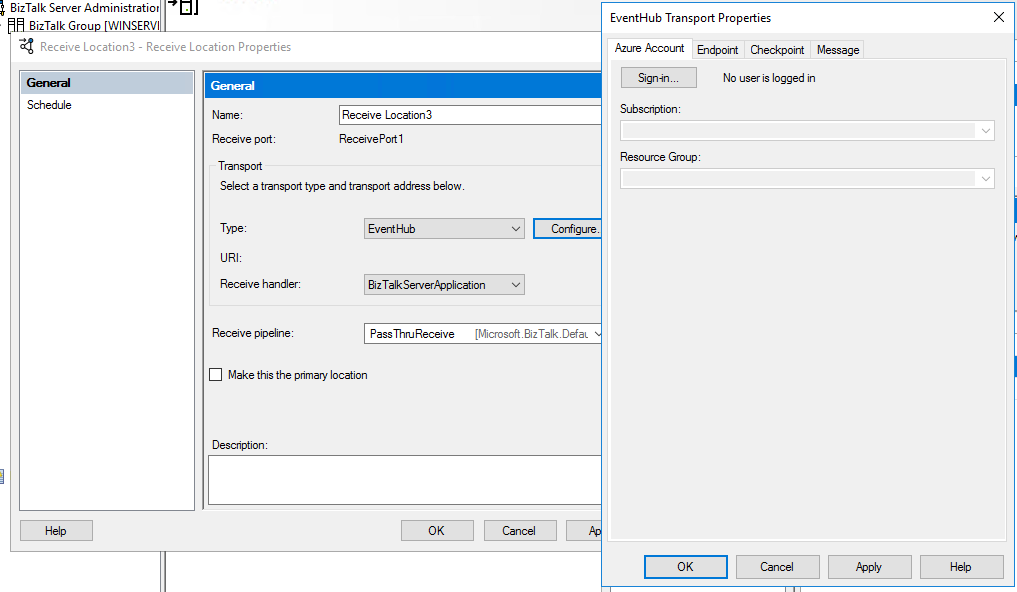

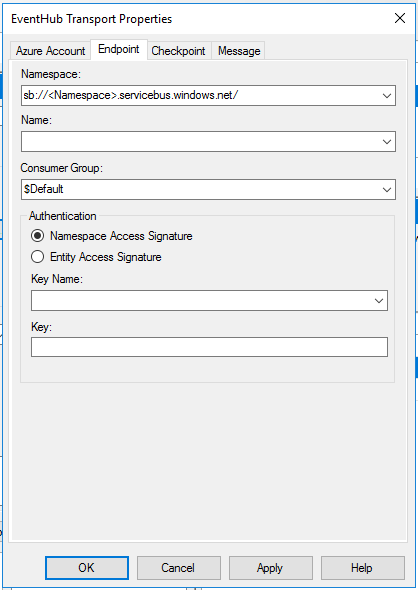

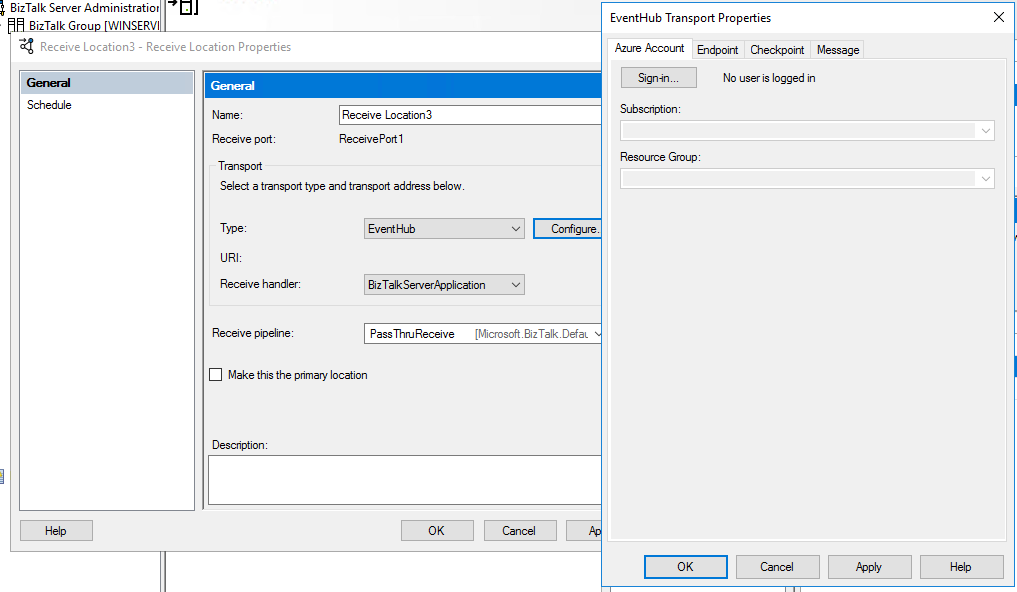

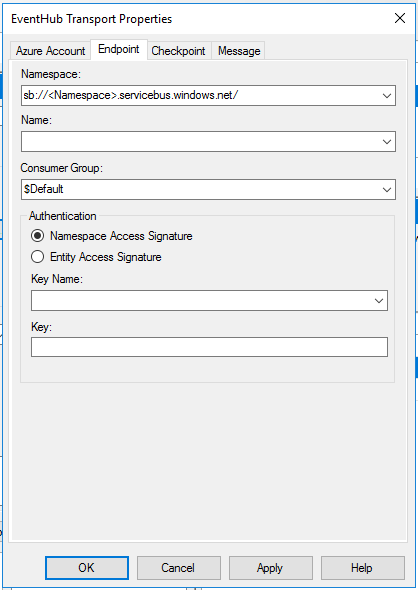

- Event Hub Adapter to send and receive messages from Azure Event Hubs

- Ability to use SQL default instances and SQL named instances with Application Insights

Application Lifecycle Management with VSTS

With BizTalk Server 2016 Feature Pack 1, Microsoft introduced the capability where users can perform continuous build and deployment seamlessly. Check out the detailed blog article that covers the ALM Continuous Deployment Support with VSTS via Visual Studio capability in detail.

In BizTalk Server 2016 Feature Pack 2, Microsoft has added improvements where users can use deployment groups to deploy BizTalk applications to multiple servers. This comes in addition to using the agent-based deployment.

Backup to Azure Blob Storage account

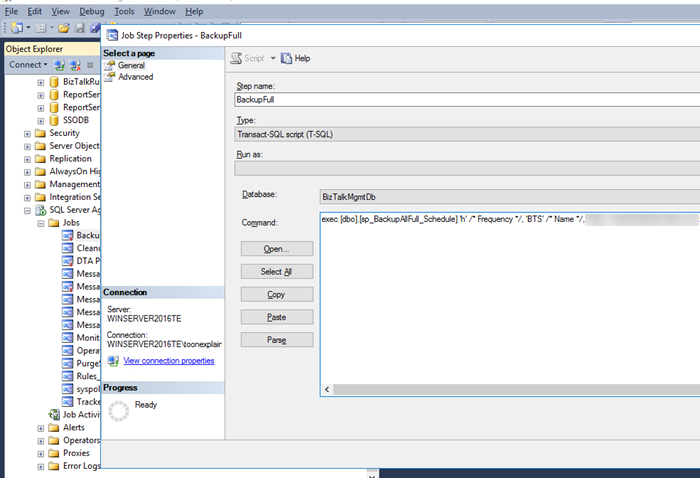

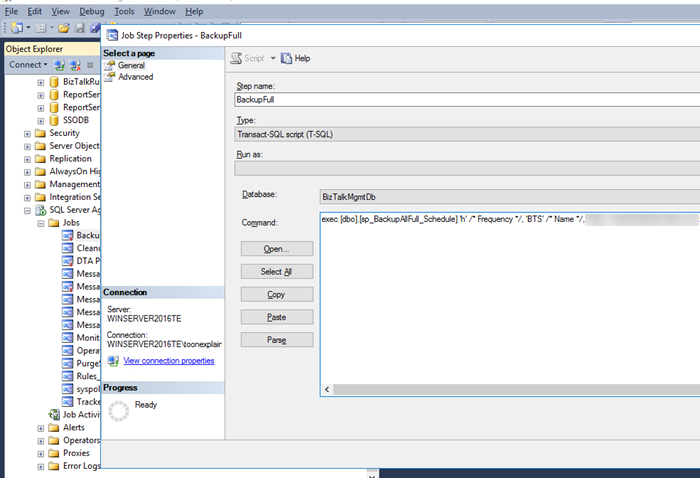

In BizTalk Server 2016 Feature Pack 2, once you have installed and configured BizTalk Server, you can configure the Backup BizTalk Server job to back up your BizTalk databases and log files to an Azure Blob storage account.

Event Hub Adapter in BizTalk Server 2016

With BizTalk Server 2016 Feature Pack 2, you can send and receive messages between Azure Event Hubs and BizTalk Server.

Azure Service Bus adapter now supports the Service Bus Premium capabilities

You can use the Service Bus adapter to send and receive messages from Service Bus queues, topics and relays. With this adapter, it becomes easy to connect the on-premise BizTalk server to Azure. In BizTalk Server 2016 Feature Pack 2, you can send messages to partitioned queues and topics. Additionally, FP2 supports Service Bus Premium capabilities for enterprise scale workloads.

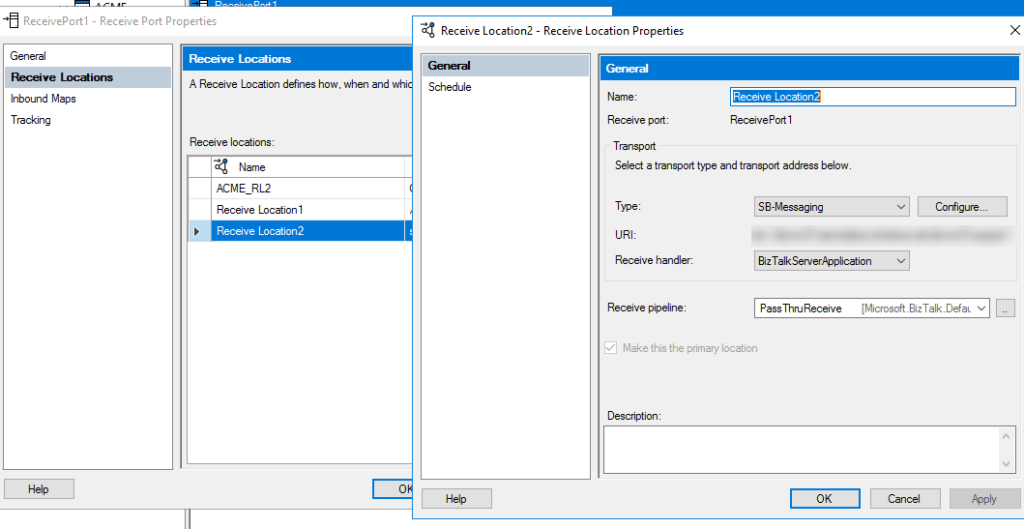

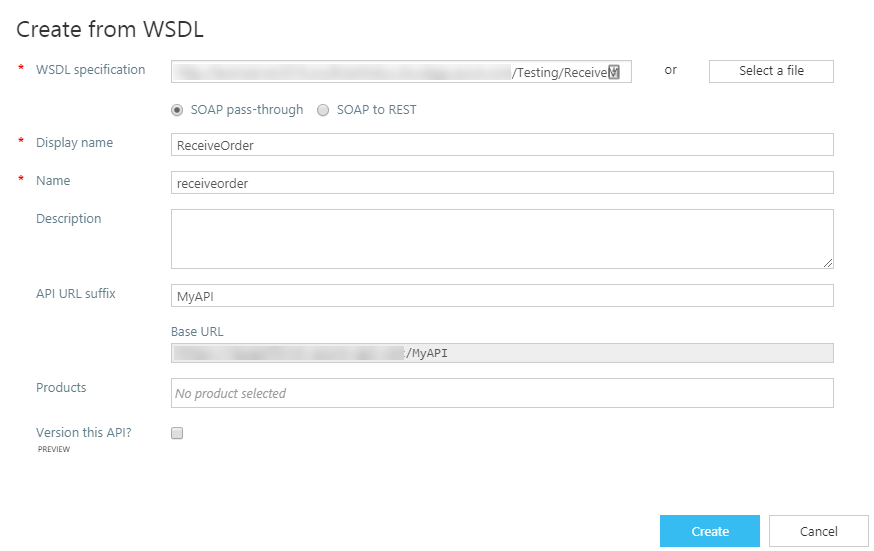

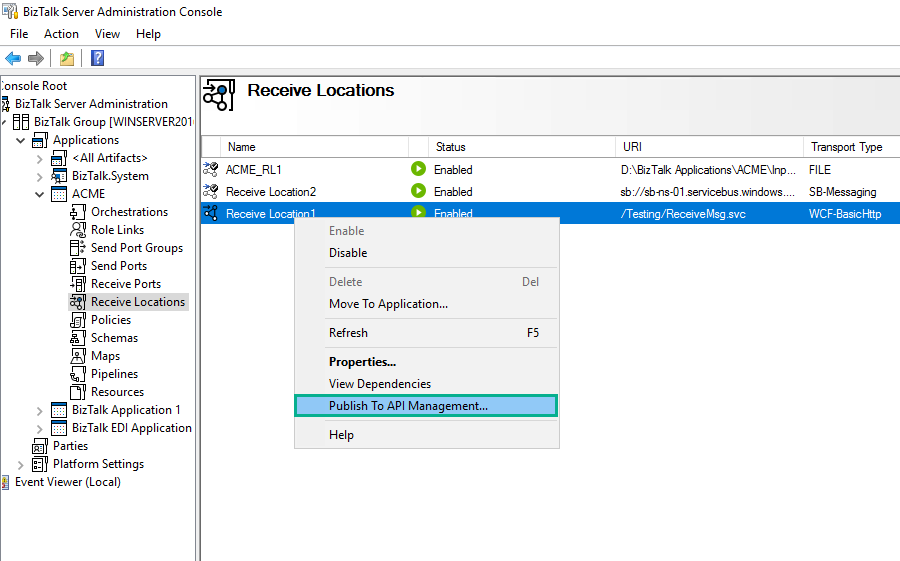

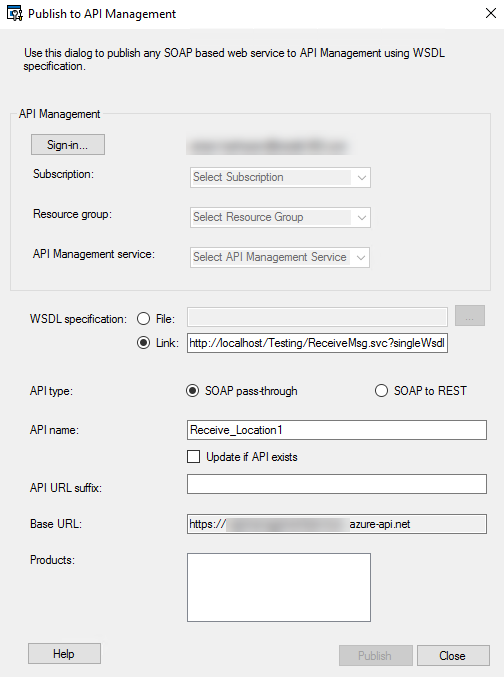

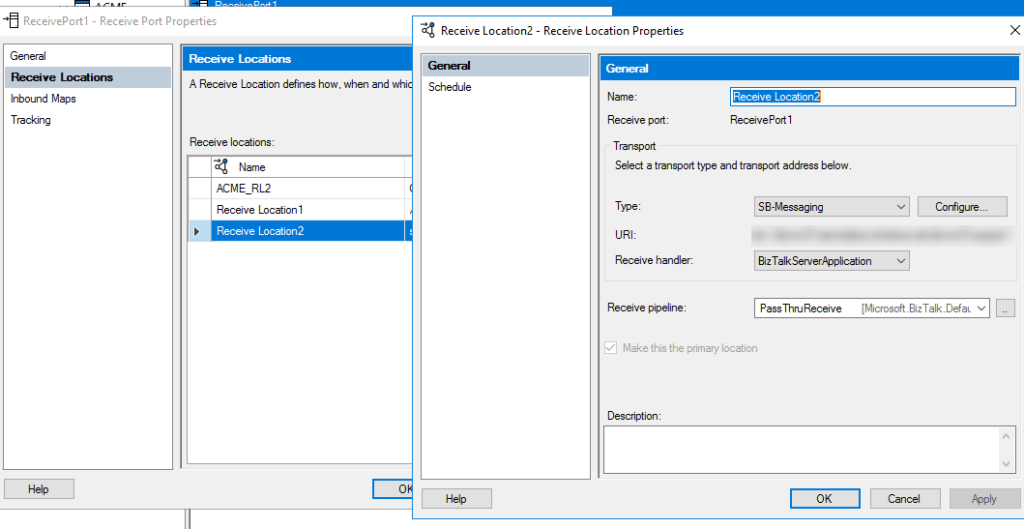

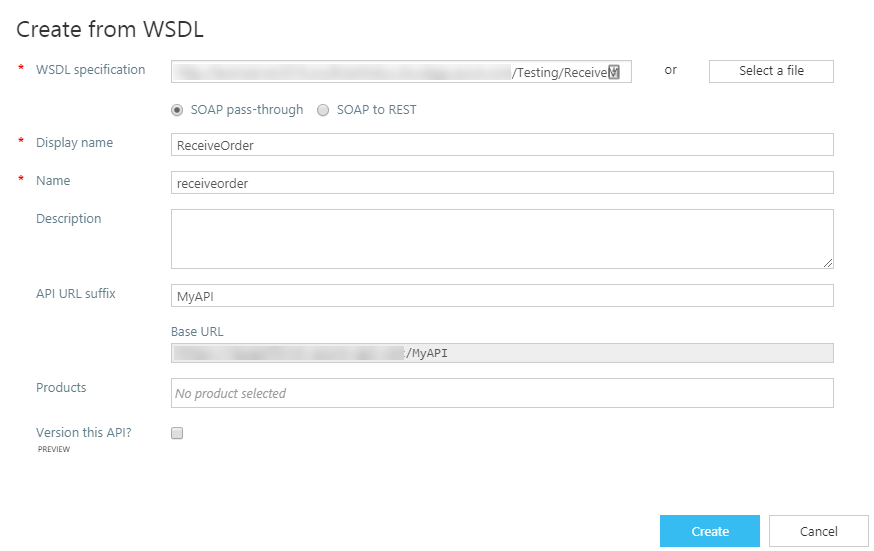

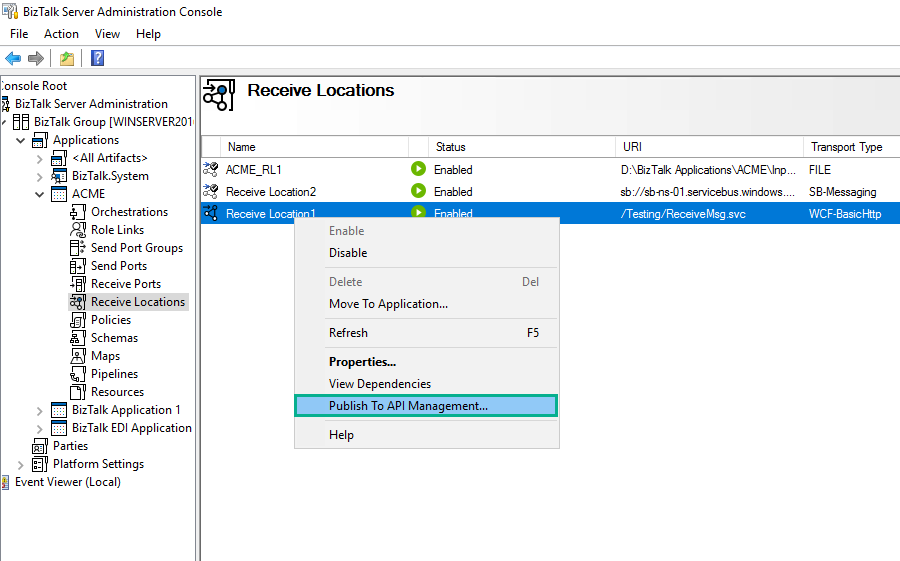

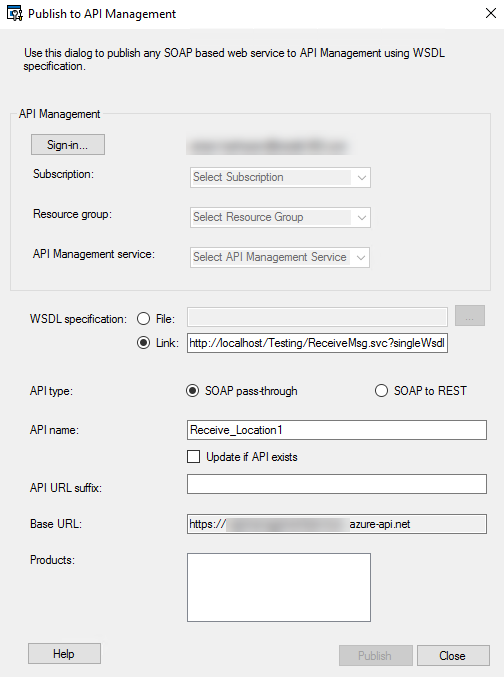

Expose SOAP endpoints with API Management

With the BizTalk Server 2016 Feature Pack 2 release, you can expose a WCF-BasicHTTP receive location as an endpoint (SOAP based) from the BizTalk Server Admin console. This enhancement comes in addition to the API Management integrations made in Feature Pack 1 where you can expose an endpoint through API Management from BizTalk.

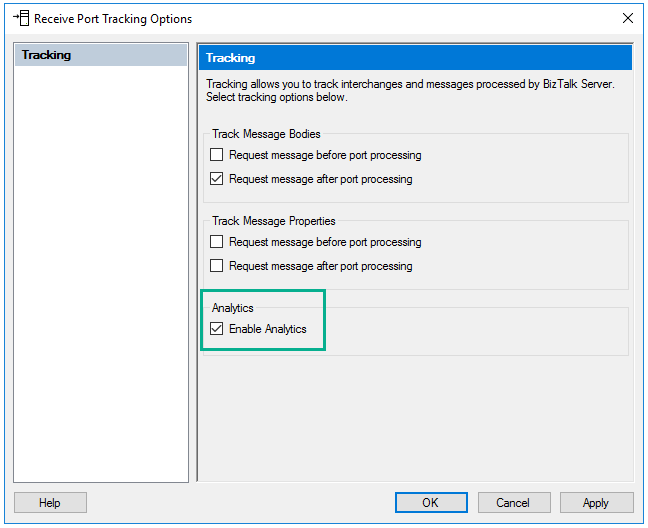

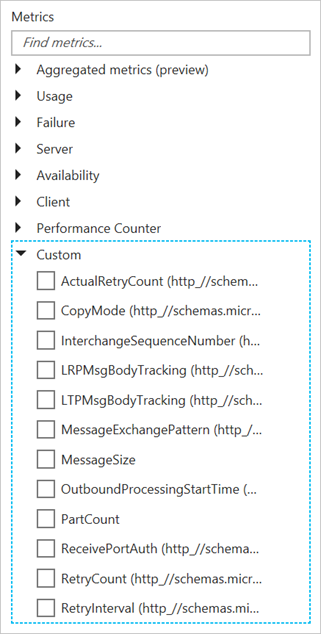

Ability to use SQL default instances and SQL named instances with Application Insights

In BizTalk Server 2016 Feature Pack 1, Microsoft introduced the capability for users to be able to send tracking data to Application Insights. The Feature Pack 2 supports additional capabilities such as support for SQL default instances and SQL named instances. In addition, users can also send tracking data to Azure Event Hubs.

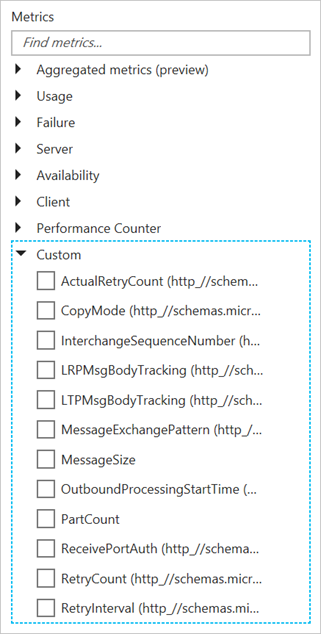

Within Application Insights, under the Metrics section, select Custom to view the available tracked properties.

Image Source – Microsoft Documentation


Download and Get Started with BizTalk Server 2016 Feature Pack 2

The BizTalk Server 2016 Feature Pack 2 can be installed on BizTalk Server 2016 Enterprise and Developer Edition (retail, CU1, CU2, CU3, FP1). You can download the latest version from here.

Summary

It is really exciting to see the Feature Pack updates being released by the Pro Integration team. This really shows their commitment to Microsoft BizTalk Server and their vision to integrate on-premise solutions with the cloud.


by BizTalk Team | Nov 21, 2017 | BizTalk Community Blogs via Syndication

Available now is Microsoft BizTalk Server 2016 Feature Pack 2. This update to BizTalk Server 2016 contains new and improved capabilities for modernizing BizTalk Server workloads in the areas of deployment, runtime and analytics.

Deployment and Administration

- Application Lifecycle Management with VSTS

- Using Visual Studio Team Services, you can define multi-server deployments of BizTalk Server 2016, and then maintain those systems throughout the application lifecycle.

- Backup to Azure Blob Storage

- When deploying BizTalk Server to Azure VMs, you can back up BizTalk Server databases to Azure blob storage.

Server Runtime

- Adapter for Service Bus v2

- When using the Service Bus Adapter, you can utilize Azure Service Bus Premium for enterprise-scale workloads.

- Transport Layer Security 1.2

- Securely deploy BizTalk Server using industry-standard TLS 1.2 authentication and encryption.

- API Management

- Publish Orchestration endpoints using Azure API Management, enabling organizations to publish APIs to external, partner and internal developers to unlock the potential of their data and services.

- Event Hubs

- Using the new Event Hub Adapter, BizTalk Server can send and receive messages with Azure Event Hubs, where BizTalk Server can function as both an event publisher and subscriber, as part of a new Azure cloud-based event-driven application.

Analytics and Reporting

- Event Hubs

- Send BizTalk Server tracking data to Azure Event Hubs, a hyper-scale telemetry ingestion service that collects, transforms, and stores millions of events.

- Application Insights

- When preparing BizTalk Server to send tracking data to Application Insights, released in FP1, you can use the new Azure sign-in dialog to simplify configuration, and you can use named instances of SQL Server.

Licensing

- Microsoft customers with Software Assurance or Azure Enterprise Agreements are licensed to use Feature Pack 2.

- BizTalk Server 2016 Feature Pack 2 can be installed on Enterprise and Developer editions only.

Software

- BizTalk Server 2016 Feature Pack 2 contains all the functionality of Feature Pack 1, plus all the fixes in Cumulative Update 3.

- You can install Feature Pack 2 on BizTalk Server 2016 Enterprise and Developer Edition (retail, CU1, CU2, CU3, FP1).

- Download the software now from the Microsoft Download Center.

Documentation

You can learn more about FP2 by reading the documentation articles. Please contribute to improving our documentation, by joining our BizTalk Server community on GitHub.

Feedback

Contribute to the community by participating in our forum, reading our blog, following us on Twitter (@BizTalk_Server), as well as providing product input using our BizTalk User Voice.

by Gautam | Nov 19, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date on all the frequent updates and announcements in the Microsoft Integration platform?

The Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

On-Premise Integration:

Cloud and Hybrid Integration:

Back to Cloud Services via The Azure podcast

Feedback

I hope this is helpful. Please feel free to send me your feedback on the Integration weekly series.

by Jeroen | Nov 17, 2017 | BizTalk Community Blogs via Syndication

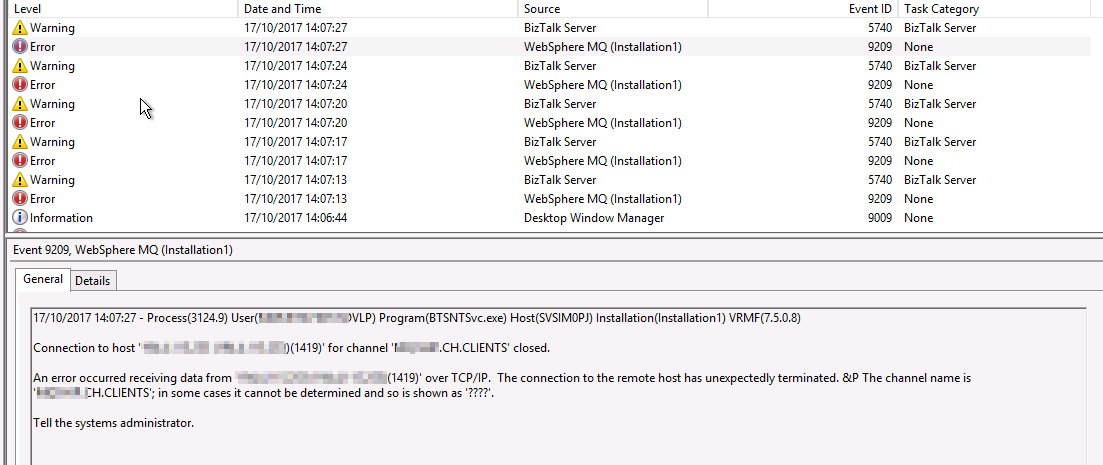

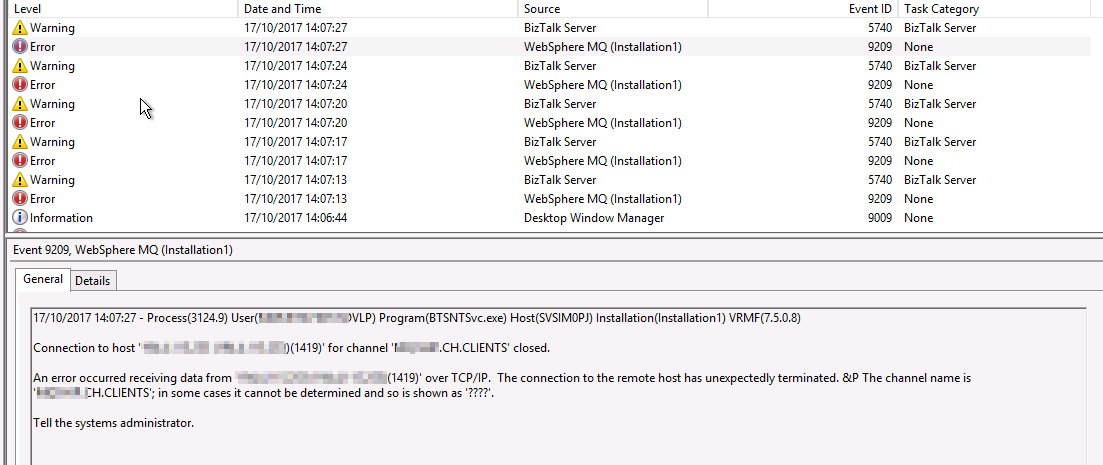

My current customer has an integration landscape with a lot of IBM WebSphere MQ. After an upgrade to IBM WebSphere MQ 9 of a certain queue manager, we were no longer able to receive messages from a queue that was working perfectly before the upgrade. Sending was still working as before.

At that moment in time we were running BizTalk 2013R2 CU7 with Host Integration Server (HIS) 2013 CU4 and using the IBM MQ Client 7.5.0.8.

Our eventlog was full of these:

This setup was still working perfectly with IBM WebSphere MQ 7 and 8 queue managers. I also tried to update the MQ client to a higher version (8.0.0.7), but this resulted in even more errors…

The Solution: Host Integration Server (HIS) 2016

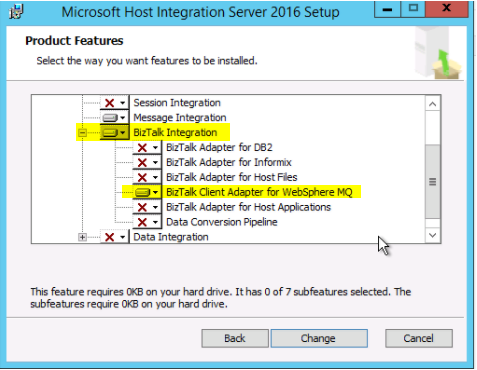

When you take a look at the System Requirements of HIS 2016 you see that it supports MQ 8. No mention of MQ 9, I know… But it also supports BizTalk Server 2013R2! At this point we really needed a solution, so we took it for a spin!

I installed and configured everything in the following order (the installation order is always very important!):

- Install BizTalk 2013 R2

- Install BizTalk Adapter Pack

- Configure BizTalk

- Install BizTalk 2013 R2 CU7

- Install .NET 4.6.2 (required for HIS 2016)

- Install HIS 2016 (no configuration)

- Install IBM MQ Client 8.0.0.7 (64 Bit)

- Add MQSC Adapter to BizTalk

- Install HIS 2016 CU1

- Reboot Servers

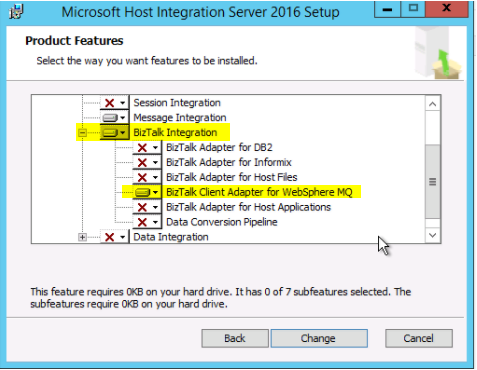

For HIS 2016 I used the following minimal installation (as we only require the MQSC Adapter):

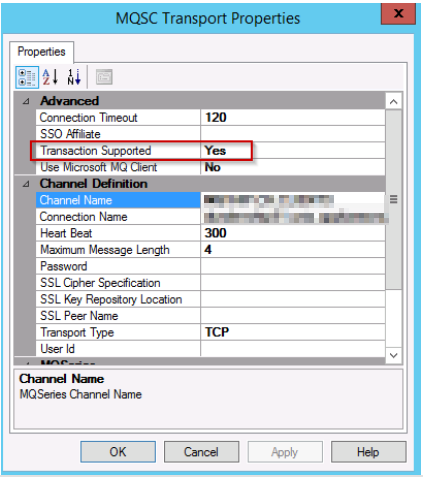

After all of this I was able to successfully do the following:

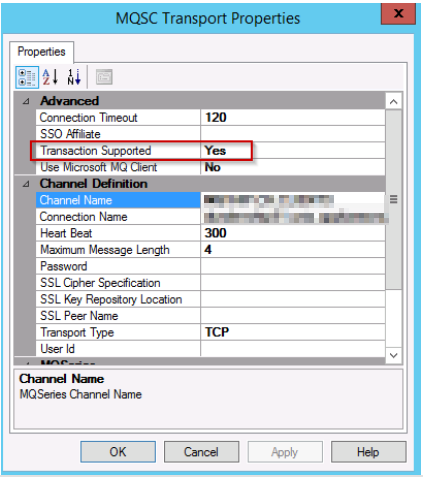

- Send and receive messages from IBM WebSphere MQ 9 queues with “Transactional Supported” by using a 64 Bit Host Instance

Conclusion

At the end we reached our goal and were able to send and receive messages from an IBM WebSphere MQ 9 queue with BizTalk Server 2013R2.

Some people may ask why I didn’t use the Microsoft MQ Client. Well, it didn’t work straight away, and we agreed not to research this further as we had already started our migration project to BizTalk Server 2016.

by Praveena Jayanarayanan | Nov 13, 2017 | BizTalk Community Blogs via Syndication

We, the product support team, often receive different types of support cases reported by customers. Some of them may be functional, others may be related to installation and so on. Every support case is a new learning experience, and we put in our best efforts to resolve the issues, thereby providing a better experience to the customers. As the quote below says,

“Customer success is simply ensuring that our customers achieve their desired outcome through their interactions with our company” – Lincoln Murphy

We must make sure that we are taking the customers in the right direction when they raise an issue and must give them confidence about the product as well as service.

As is well known, BizTalk360 is the one-stop monitoring tool for BizTalk Server. BizTalk360 not only contains monitoring options for BizTalk Server, it also contains other built-in tools such as BAM, BRE etc. Whenever a customer reports an issue, we start our investigation with some basic troubleshooting steps. In this blog, I will share information about the basic troubleshooting tools in BizTalk360 that help us resolve customer issues.

Installer Log Information:

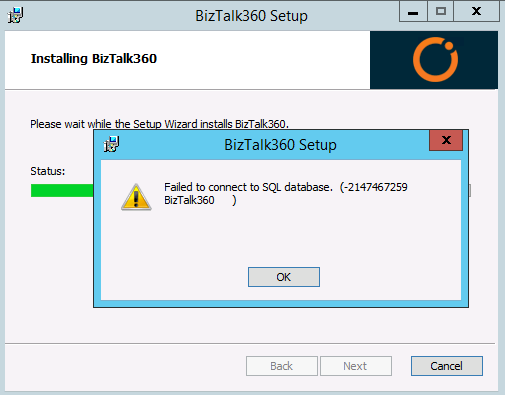

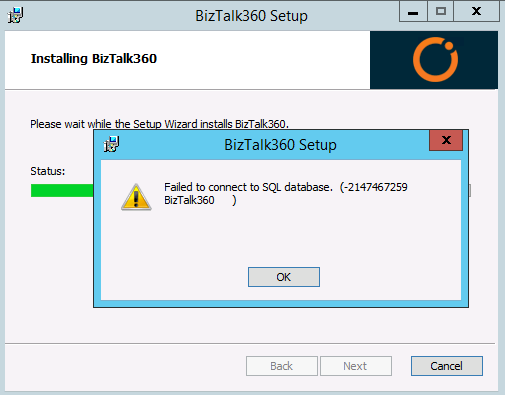

The first step in using BizTalk360 is the installation and configuration. Both installation and upgrade are seamless, with simple steps, and some of the permission checks are done in the installer. But there may be cases where the installer fails with an error such as the one shown in the screenshot.

There is not much information about the error on the screen. So how do we investigate it? This is where the installer logs come to our help. Generally, when we install BizTalk360, we just give the name of the MSI in the admin command prompt and run it. But to enable installer logging, we need to run the installer with the command below, adjusted for the BizTalk360 version being installed.

msiexec /i "BizTalk360.Setup.Enterprise.msi" /l*v install.log

The installer log location can also be provided in the command, else the log will be created in the same folder where the MSI file is located. The steps performed during the installation will be logged in the installer log. The log will also contain information about any exception thrown. So, for the above error, the logged information was:

MSI (s) (E0:D4) [13:57:50:776]: PROPERTY CHANGE: Modifying CONNECTION_ERROR property. Its current value is 'dummy'. Its new value: 'Cannot open database "BizTalk360" requested by the login. The login failed.

Login failed for user 'CORP\svcbiztalk360'.'.

The error clearly points to a permission issue. When BizTalk360 is installed, the BizTalk360 database is created on the SQL Server. BizTalk360 may be installed on the same machine as BizTalk Server or on a standalone machine, and the SQL database may be on a separate server. As a prerequisite for BizTalk360, we recommend granting the SYSADMIN permission to the service account on the SQL Server. Giving this permission to the service account resolves the above error. In the same way, any installation-related error can be identified from the installer logs and then resolved.
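A verbose installer log can run to thousands of lines, so it can help to pull out only the property changes that carry an error message. The following is a minimal Python sketch, assuming the log lines follow the format of the excerpt above. The function name `find_property_changes` and the default filter `"ERROR"` are illustrative choices, not part of BizTalk360 or Windows Installer.

```python
import re

# Matches lines such as:
#   MSI (s) (E0:D4) [13:57:50:776]: PROPERTY CHANGE: Modifying CONNECTION_ERROR property. ...
PROPERTY_CHANGE = re.compile(
    r"\[(?P<time>[\d:]+)\]: PROPERTY CHANGE: Modifying (?P<name>\w+) property\."
    r".*?Its new value: (?P<value>.*)$"
)


def find_property_changes(log_text, name_filter="ERROR"):
    """Return (time, property, new_value) for properties whose name contains name_filter."""
    results = []
    for line in log_text.splitlines():
        match = PROPERTY_CHANGE.search(line)
        if match and name_filter in match.group("name"):
            # Drop surrounding spaces and straight or typographic quotes.
            value = match.group("value").strip(" '‘’′")
            results.append((match.group("time"), match.group("name"), value))
    return results
```

To use it, read the log file into a string and pass it to the function. Verbose MSI logs are often written as UTF-16, so opening the file with `encoding="utf-16"` is a reasonable first attempt; adjust the encoding if your log differs. Only the first line of a multi-line value is captured, which is usually enough to see which property changed and why.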

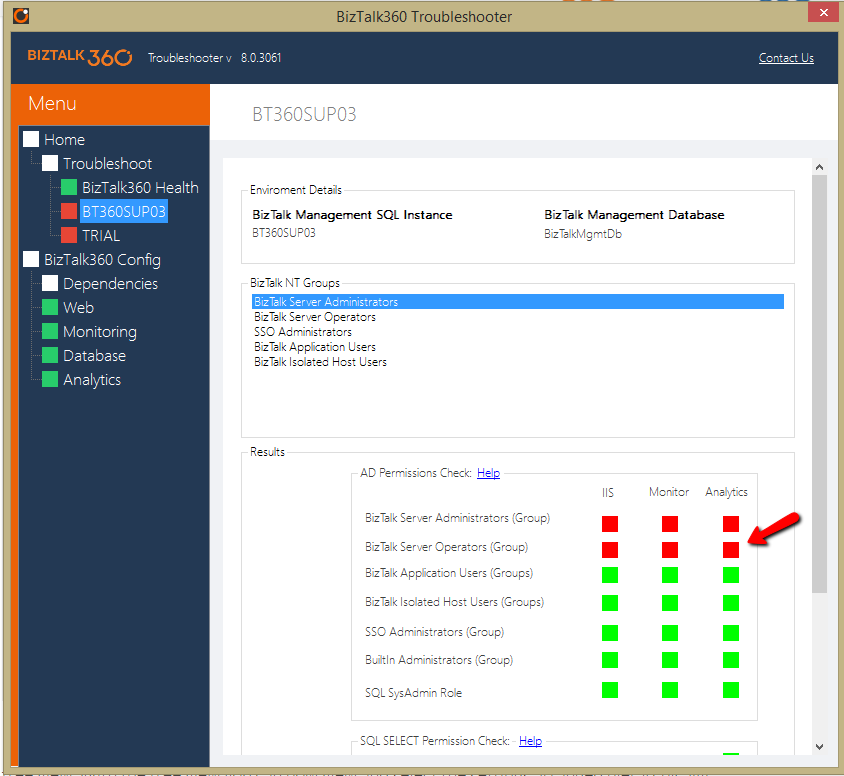

Troubleshooter:

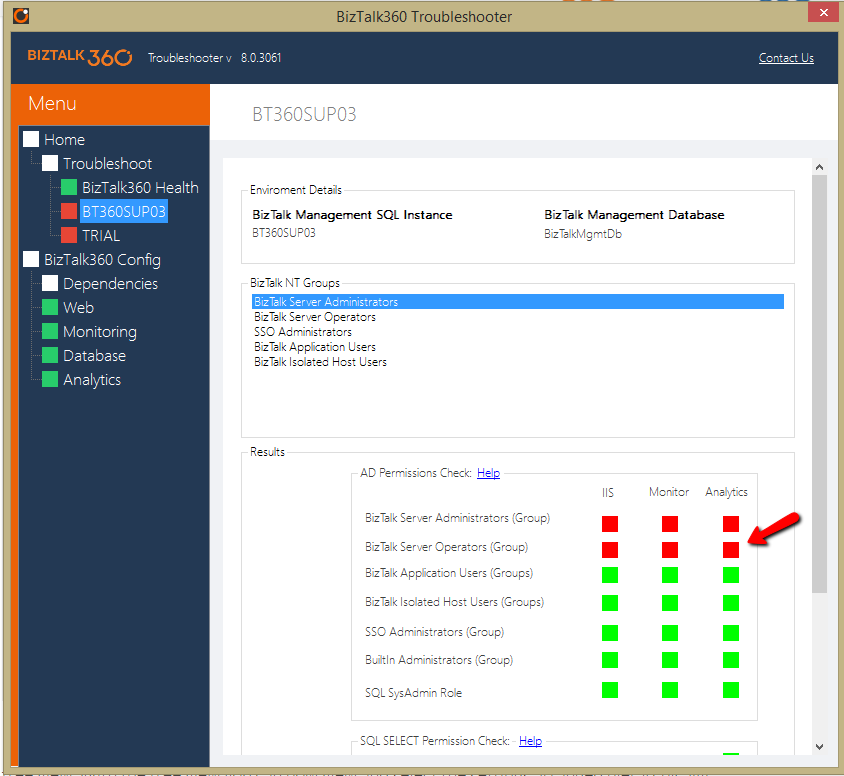

This is an interesting tool which is integrated within BizTalk360 and is also available as a separate Windows-based tool. It contains an extensive set of rules to verify all the prerequisite conditions needed to run BizTalk360 successfully. As you can see in the picture, the user just enters the passwords for the IIS application pool identity and the monitoring service account and clicks the “Troubleshoot BizTalk360” button. The rules are then verified and the results are indicated as RED/GREEN/ORANGE.

This way, we can check the missing permissions for the BizTalk360 service account and provide the same.

Apart from the permissions, the other checks done by the troubleshooter are:

- IIS Check

- SQL Select Permission check

- Configuration File check

- Database report

If the customer faces any issue during the initial launch of the application, then they can run the troubleshooter and check for the permissions. Once the errors are resolved and everything is green, they can start BizTalk360 and it should work.

Hence all the information regarding the service account permissions, BizTalk360 configuration and database can be obtained with the help of troubleshooter. The integrated troubleshooter can be accessed from BizTalk360 itself as seen below.

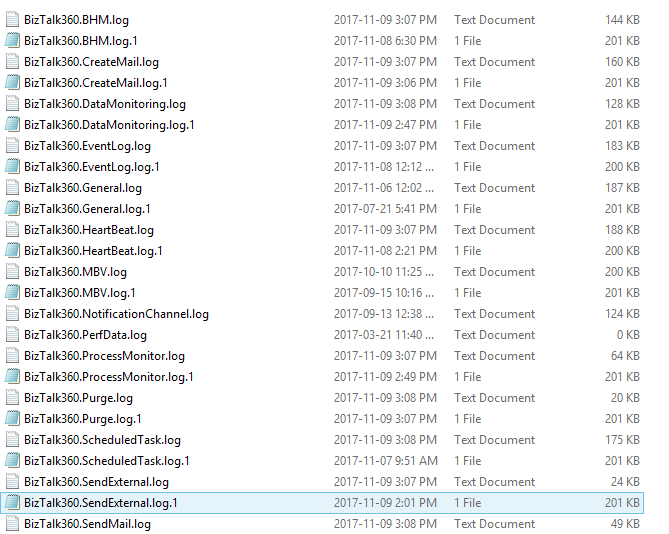

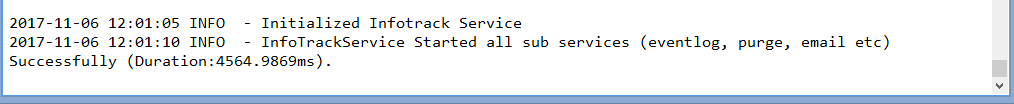

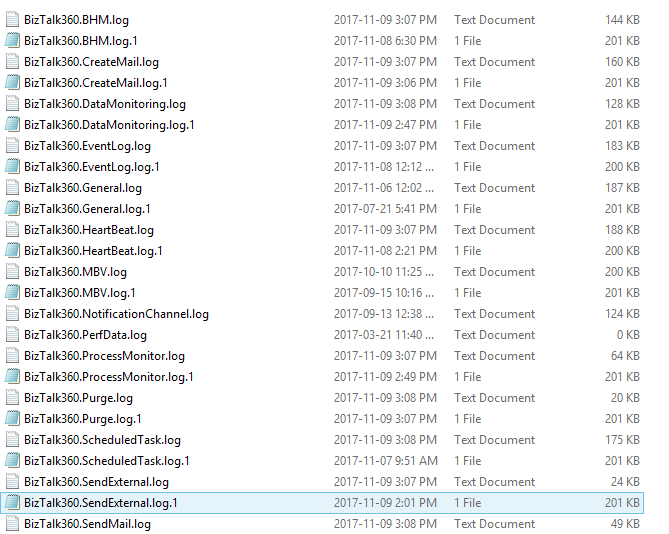

BizTalk360 Service Logs:

The details of the exceptions that occur in BizTalk360 are captured in the log files that are generated in the BizTalk360 folder. The log files not only contain information about the exceptions, but also about the alarm processing and the status of the subservices. There are different logs for each of them, which are described below.

Monitoring Logs

Monitoring is one of the most important tasks performed in BizTalk360. New features are added in every release of BizTalk360. The monitoring capability has also been extended to File Locations, Host Throttling monitoring, BizTalk server high availability and much more. The BizTalk360 monitoring service is installed along with the BizTalk360 web application. Once the artefacts are configured for monitoring, the service runs every 60 seconds and triggers alert emails according to the conditions configured.

What happens if an exception occurs during monitoring and alerts are not triggered? Where can we find information about these exceptions so we can take the necessary actions? This is where the service logs come in, located in the BizTalk360 installation folder/Service/Log folder. About 25 different logs are generated, one for each monitoring configuration, and they are updated whenever the monitoring service runs. For example, if alerts are created but not transmitted due to an exception, this information will be logged in the BizTalk360.SendMail.log file. So, when a customer raises an issue regarding the transmission of alerts, the support team starts the investigation from the logs. We ask the customer to share the logs from their environment and we check them. Let’s look at a customer scenario.

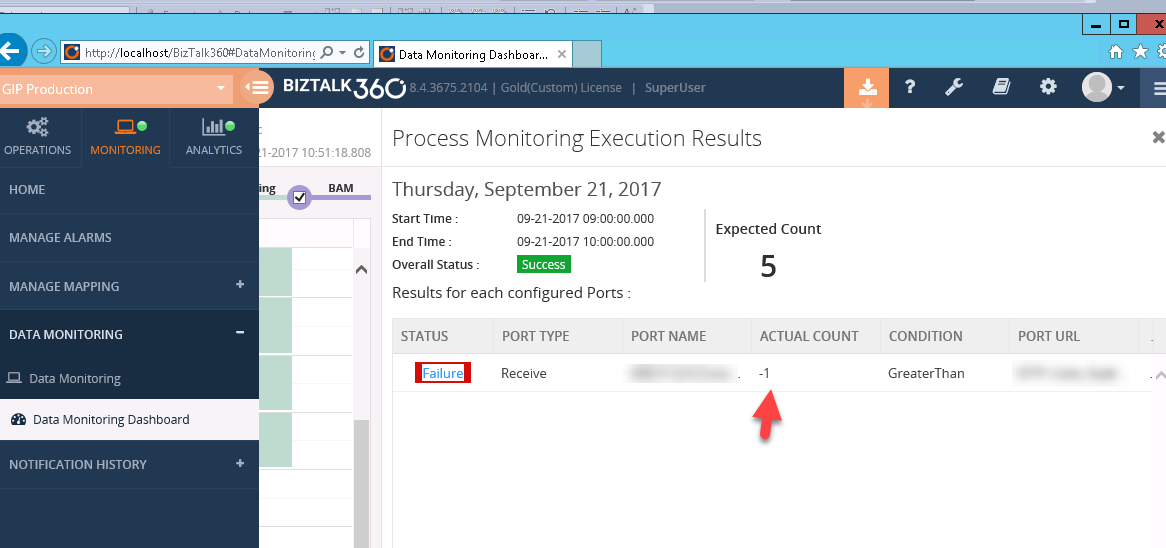

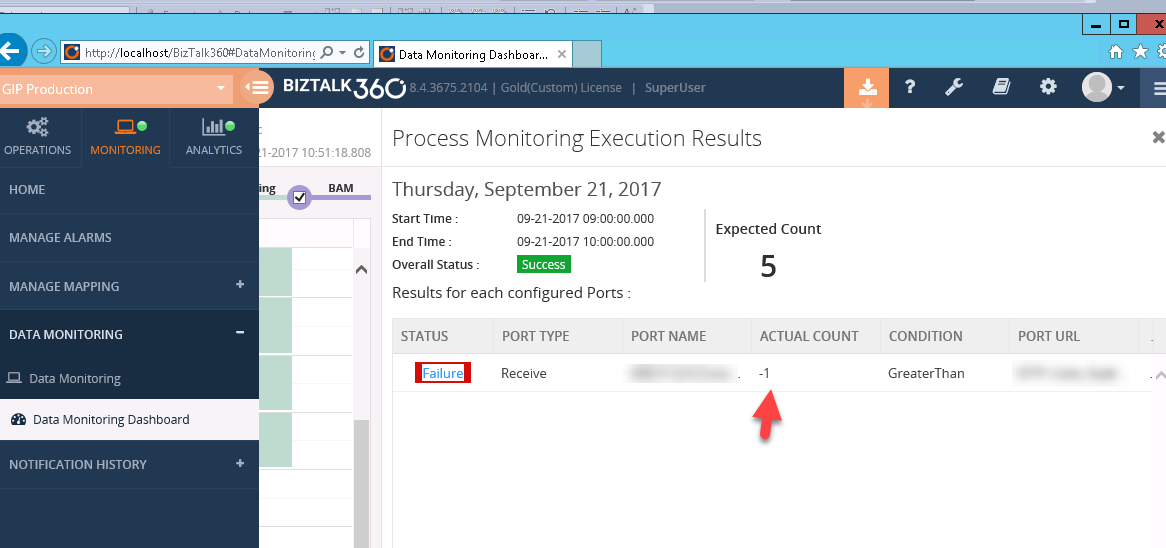

One of the issues reported was as follows:

- The customer has configured a receive port for process monitoring

- But they are getting Actual count = -1 even when there are messages processed via this port.

- They wanted to know the reason why the actual count was -1.

In BizTalk360, a negative value denotes that an exception has occurred, and the exception is logged in the service logs. Hence, we asked them to share the logs.

From the BizTalk360.ProcessMonitor.log, we could see the following exception:

2017-09-21 10:16:45 ERROR – ProcessMonitoringHelper:GetMonitoringStatus. Alarm Name:PROD_DataMonitor: Name: Application : Atleast 20 Messages from DHL per hour: Exception:System.Data.SqlClient.SqlException (0x80131904): Timeout expired. The timeout period elapsed prior to completion of the operation or the server is not responding. —> System.ComponentModel.Win32Exception (0x80004005): The wait operation timed out at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

The timeout exception generally happens when there is a huge volume of data in the BizTalkDTADb database, because for Process Monitoring we retrieve the results from this database and display them in BizTalk360. The database size was checked at their end and found to be 15 GB, which was greater than the expected size of 10 GB. For more information on the database size, you can refer here.
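When a customer shares several log files, a quick way to narrow things down is to list only the ERROR entries together with their alarm names. The following Python sketch assumes each entry starts with a timestamp and a level in the format of the excerpt above (`YYYY-MM-DD HH:MM:SS LEVEL`, a dash, then the message). The function name `error_entries` and the returned field names are illustrative and not part of BizTalk360.

```python
import re
from datetime import datetime

# Timestamp, level, a dash (hyphen or en dash), then the message.
ENTRY = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+(?P<level>[A-Z]+)\s+[-–]\s+(?P<msg>.*)$"
)
# The alarm name is the text between "Alarm Name:" and the next colon.
ALARM = re.compile(r"Alarm Name:\s*(?P<alarm>[^:]+):")


def error_entries(log_text):
    """Return a list of dicts, one for every ERROR entry found in log_text."""
    entries = []
    for line in log_text.splitlines():
        match = ENTRY.match(line.strip())
        # Lines without a timestamp (e.g. stack trace continuations) are skipped.
        if not match or match.group("level") != "ERROR":
            continue
        alarm = ALARM.search(match.group("msg"))
        entries.append({
            "time": datetime.strptime(match.group("ts"), "%Y-%m-%d %H:%M:%S"),
            "alarm": alarm.group("alarm").strip() if alarm else None,
            "message": match.group("msg"),
        })
    return entries
```

Applied to the contents of a file such as BizTalk360.ProcessMonitor.log, this gives a short list of failures that can be sorted by time or grouped by alarm before reading the full stack traces.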

Similarly, we have different log files generated from which we can get the information about the different sub services running for BizTalk360 Monitor and BizTalk360 Analytics services.

We can also check whether all the subservices have started properly. The log information is captured along with a timestamp, which makes it much easier for the support team to identify the cause and resolve issues in time, thereby keeping the customer happy. In the monitoring logs, the alarm name and configuration are also captured. There are separate logs for Process Monitoring and other Data Monitoring alarms. We also have separate logs for FTP and SFTP monitoring to capture any exceptions.

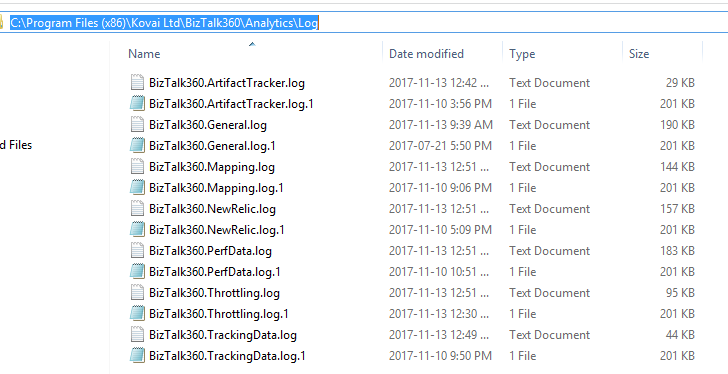

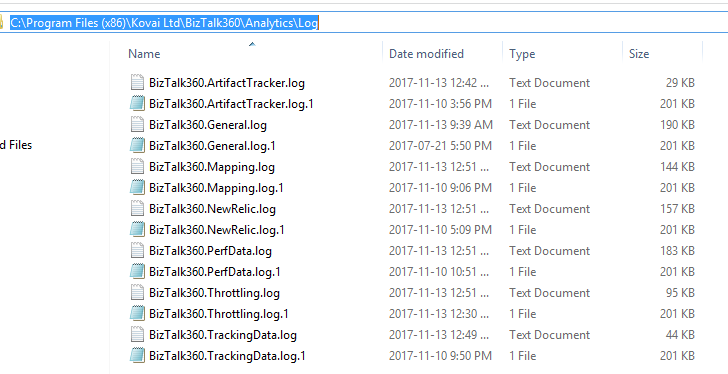

Analytics logs

Analytics is yet another important feature in BizTalk360, with which you can visualize a lot of interesting facts about your BizTalk environment, such as the number of messages processed, the failure rate at message type level, BizTalk server CPU/memory performance, BizTalk process (host instances, SSO, rules engine, EDI etc) CPU/memory utilization and lots more. The BizTalk360 Analytics service also runs different subservices, and any exception occurring in these services is captured in the logs under the C:\Program Files (x86)\Kovai Ltd\BizTalk360\Analytics\Log folder.

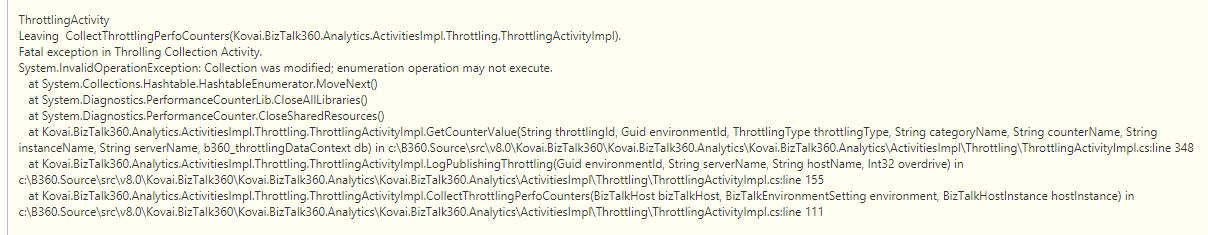

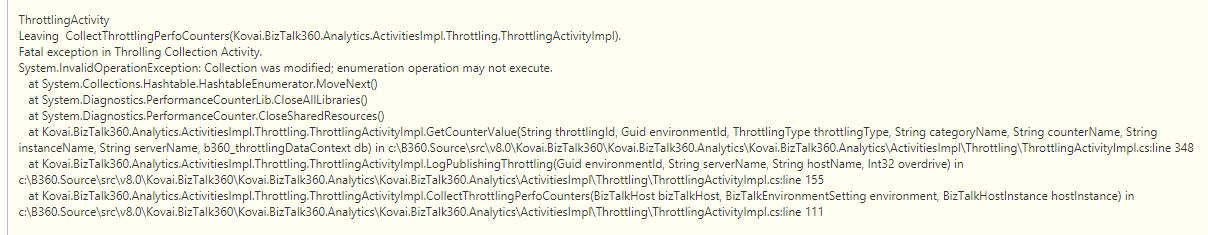

BizTalk360 Analytics is used to gather information about the performance counters on the server and display it in the form of widgets. BizTalk360 also shows, in a graphical format, whether the system is under a throttling condition. In one case, a customer reported that the Throttling Analyser was not displaying any information while the system was under a throttling condition. We then checked the logs and found the below error in the BizTalk360.Throttling.log.

From the logs, we could understand that the performance counters were corrupted and rebuilding the counters resolved the issue.

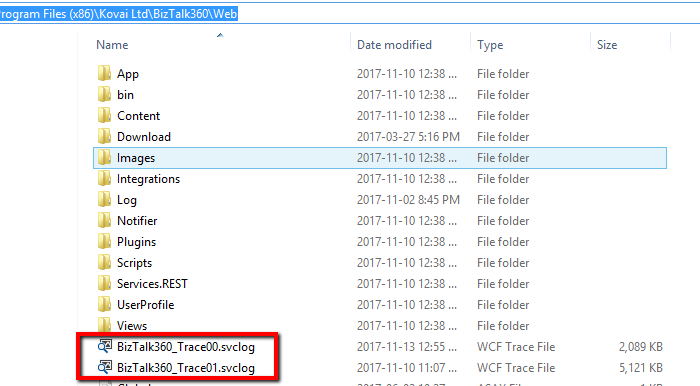

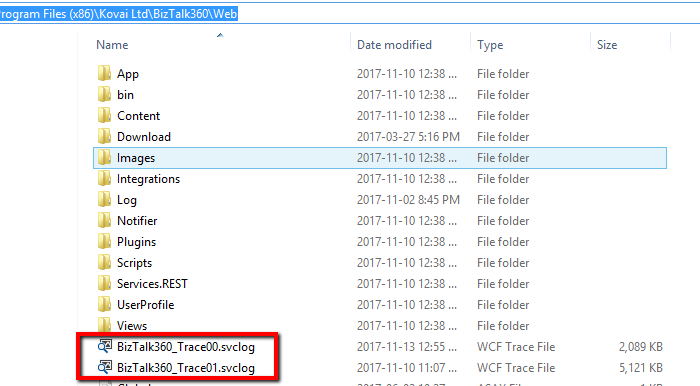

Web and Svc logs

At times, a page in BizTalk360 may take a long time to load, leading to performance issues. The time taken to load the page can be captured in the svc logs present in the C:\Program Files (x86)\Kovai Ltd\BizTalk360\Web folder.

A customer once reported latency in some of the BizTalk360 pages. We checked these trace logs and found that the service calls to the GetUserAccessPolicy & GetProfileInfo methods were taking more than 30 seconds to complete.

GetUserAccessPolicy -> Groups/users assigned to provide access to the features of BizTalk360.

GetUserProfile -> Fetches the user profile of the configured group/user.

These methods were then optimized for caching in the next BizTalk360 version release and hence the performance issue was resolved.

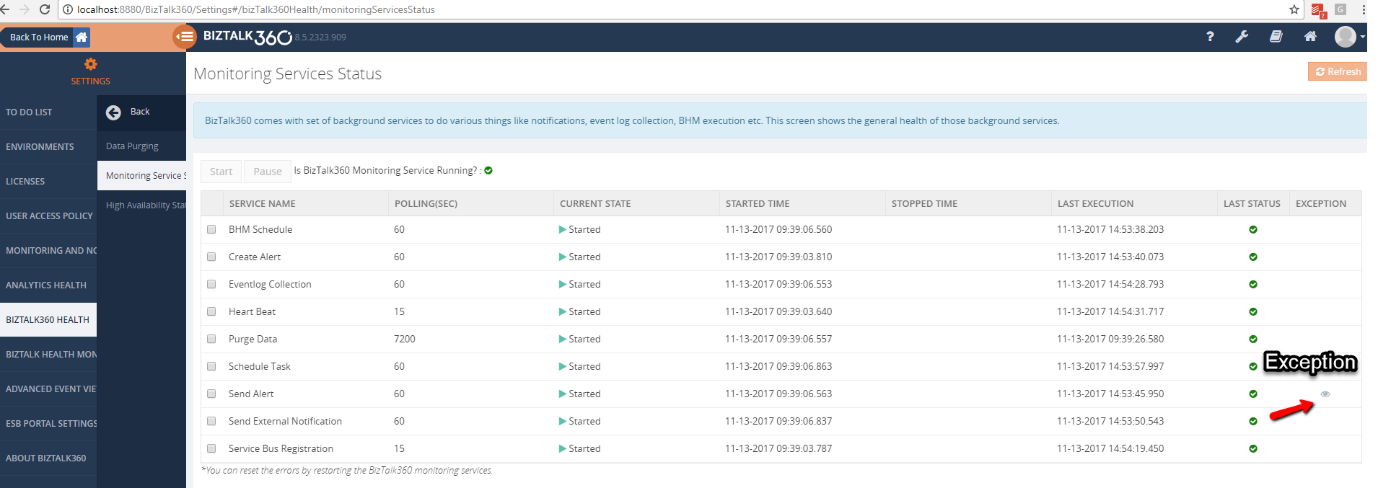

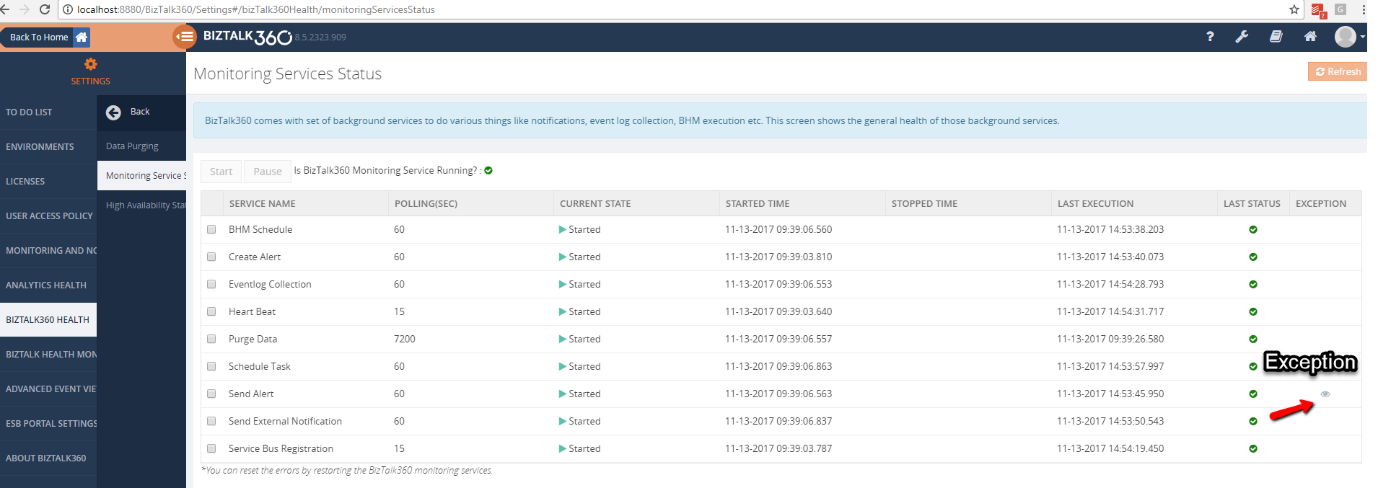

BizTalk360 subservices status

As mentioned before, we have different subservices running for the BizTalk360 Monitor and Analytics services. If there is any problem in receiving alerts, or if the service is not running, the first step is to check the status of the monitoring subservices for any exceptions. This can be found in BizTalk360 Settings -> BizTalk360 Health -> Monitoring Service Status. The complete information will also be captured in the logs.

Similarly, we have the check for the Analytics sub services under Settings -> Analytics Health.

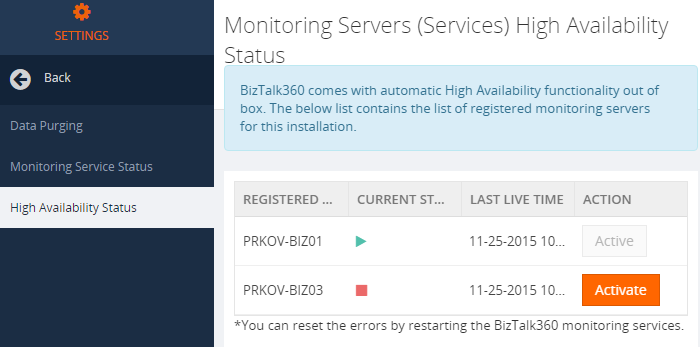

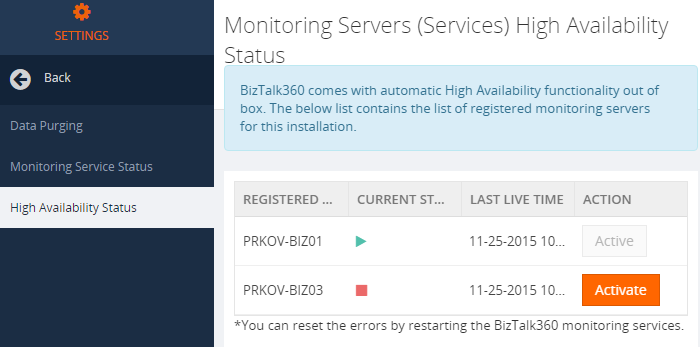

What if the customer has configured BizTalk360 for High Availability (HA)? High Availability is the scenario where BizTalk360 is installed on more than one server, all pointing to the same database. The BizTalk360 Monitor and Analytics services can also be configured for HA. So, when an issue is reported with these services, the logs from the active server must be investigated. The active server can be identified from BizTalk360 Settings -> BizTalk360 Health -> High Availability Status.

Conclusion:

These basic troubleshooting tools available in BizTalk360 make our support a little easier in resolving the customer issues. The first step analysis can be done with these tools which help us identify the root cause of the problem. We have our latest release BizTalk360 v8.6 coming up in a few weeks with more exciting features. In case of further queries, you can write to us at support@biztalk360.com.

Author: Praveena Jayanarayanan

I am working as Senior Support Engineer at BizTalk360. I always believe in team work leading to success because “We all cannot do everything or solve every issue. ‘It’s impossible’. However, if we each simply do our part, make our own contribution, regardless of how small we may think it is…. together it adds up and great things get accomplished.”

by Gautam | Nov 12, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date on all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on the topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to let me know your feedback on the Integration weekly series.

Image Source – Microsoft Documentation