by michaelstephensonuk | Feb 13, 2017 | BizTalk Community Blogs via Syndication

While chatting with Jeff Hollan on Integration Monday this evening, I mentioned some thinking I had been doing around how to articulate the various products within the Microsoft Integration Platform in terms of their codeless vs Serverless nature.

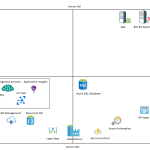

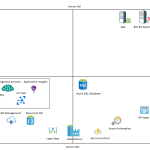

The rough diagram is me brain-dumping where I think each product would sit on these two axes.

(Note: This is just my rough approximation of where things could be positioned)

If you look at the definition of Serverless, there are some obvious things like Functions which are very Serverless. However, the characteristics that make something Serverless or not are a little grey in some areas, so I would argue that one service can be more Serverless than another. Let’s take Azure App Service Web Jobs and Azure Functions. These two services are very similar: they can both be event driven, and neither has an actual physical server that you touch, but they have some differences. A Web Job is paid for in increments based on the hosting plan size and number of instances, whereas a function is paid for per execution. Based on this, I think it’s fair to say that a lot of the PaaS services on Azure have some of the characteristics of Serverless.

When considering how codeless each service is, I have tried to take both code and configuration into account. What really differentiates one service from another is how much code I need to write and how complex it is. If we take web apps, either on Azure App Service or IIS, you get a codebase and a lot of code to write to create your app. Compare this with Functions, where you tend to write a specific piece of code for a specific job, and there are unlikely to be loads of NuGet packages and code not aimed at the function’s specific purpose.

Interesting Comparisons

If you look at the positioning of some things, there are some interesting comparisons. Let’s take BizTalk and Logic Apps. I would argue that a typical BizTalk app takes a lot more developer code to create than a Logic App does. I don’t think you could really disagree with that. BizTalk Server also clearly has one of the more complex server dependencies, whereas Logic Apps is very Serverless.

If you compare Flow with Logic Apps, you can see they are similar in terms of Serverless(ness). I would argue that Logic Apps requires more code and configuration knowledge, and this is reinforced by the target user of each product.

What does this mean?

In reality, the position of a product in terms of Serverless or codeless doesn’t really mean that much. It doesn’t necessarily mean one is better than the other, but it can mean that in certain conditions a product with certain characteristics will have benefits. For example, if you are working with a customer who wants to lock down their SFTP site so that only one or two IP addresses can access it, you might find that a server-based approach such as BizTalk makes this quite easy, whereas Logic Apps might not support that requirement.

The spectrum also doesn’t consider other factors such as complexity, cost, time to value, scale, etc. When making decisions on product selection don’t forget to include these things too.

How can I use this information?

OK, with the above said, this doesn’t mean that you can’t draw some useful conclusions from considering the Microsoft Integration Stack in terms of codeless vs Serverless. The main one I would look at is thinking about the people in my organisation and the aims of my organisation, and how to make technology choices that are aligned with them. As ever, good architectural decision-making is a key factor in a successful integration platform.

Here are some examples:

- If I am working with a company that wants to remove its on-premises data centre footprint, I can still use server technologies in IaaS. However, that company will potentially favour Serverless and PaaS type solutions, because they allow it to reduce the operational overhead of its solutions.

- If I am working with a company that has a lot of developers and wants to share the workload among a wider team and minimize niche skill sets, I would tend to look at solutions such as Functions, Web Jobs, Web Apps and Service Bus. This democratization-of-integration approach lets your wider team be involved in large portions of your integration development.

- If my customer wants to take that further and empower the Citizen Integrator, then Flow and PowerApps are good choices. There is no server-full equivalent to these in the Microsoft stack.

The few things you can certainly draw from the Microsoft stack are:

- There are lots of choices, which should suit organisations regardless of their attitudes and preferences. This reinforces the strength and breadth of the Microsoft Integration Platform through its core services and its relationships to other Azure services.

- Azure has a very strong PaaS platform covering both codeless and coding solutions. This means there are lots of choices with Serverless characteristics that will reduce your operational overheads.

It would be interesting to hear what others think.

by Dan Toomey | Feb 10, 2017 | BizTalk Community Blogs via Syndication

“When it rains, it pours.”

Well, I must say I’ve had a pretty remarkable run the past few weeks! I can’t remember any point in my professional life where I’ve enjoyed so much reward and recognition in such a short time span.

For starters, after six weeks and probably 50-60 hours of study, I managed to pass my MS 70-533 Implementing Azure Infrastructure Solutions exam. Since I’d already passed the MS 70-532 Developing Azure Solutions exam a couple of months earlier, this earned me the coveted Microsoft Certified Solutions Associate (MCSA) qualification in Cloud Platform. I now have just one more exam to pass to earn the Microsoft Certified Solutions Expert (MCSE) qualification.

Then just last week I was granted my first Microsoft Most Valuable Professional (MVP) Award in Azure! This is incredibly exciting, to say the least. Being recognised alongside so many accomplished leaders in the industry came as a surprise not only to me but also to many others, because of the timing. Microsoft has revamped the MVP program so that awards are now granted every month instead of every quarter, and everyone will have their review date on July 1st. I’m looking forward to using my newfound benefits to contribute more to the community.

And if that wasn’t enough, this week Mexia promoted me to the position of Principal Consultant! Aside from the CEO (Dean Robertson), I am the longest-serving employee, and the journey over the last seven and a half years has been nothing short of amazing. Seeing the company grow from just three of us into the current roster of nearly forty has been remarkable enough. Along the way, I’ve also watched us become a Microsoft Gold Partner and win a plethora of awards, including ranking #10 in Australia’s Great Place to Work competition. Working with such an awesome team has afforded me unparalleled opportunities to grow as an IT professional, and I will strive to serve both our team and our clients to the best of my ability in this role.

So what else is on the horizon? Well for starters, next week I join the speaker list at Ignite Australia for the first time delivering a Level 300 Instructor-Led Lab in Azure Service Fabric. I’m also organising and presenting for the first ever Global Integration Bootcamp in Brisbane on 25th March, and doing the same for the fifth Global Azure Bootcamp on 22nd April. And somewhere in there I need to fit in that third Azure exam… It’s going to be a busy year!

by michaelstephensonuk | Feb 7, 2017 | BizTalk Community Blogs via Syndication

Recently I have been thinking about a sample use case where you may want to expose legacy applications via a hybrid API. The challenge is that the underlying LOB applications are not really fit for purpose as the sub-systems behind a hybrid API. In this case we have some information held across 2 databases which are years old and clogged full of crappy technical debt that has festered over the years. Creating an API with a strong dependency on these would be like building on quicksand.

At the same time the organisation has a desire at some point (unknown timescale) to transition from the legacy applications to something new but we do not know what that will be yet.

I love these kinds of architecture challenges. This one emphasises that there isn’t really such a thing as a target architecture: things always change, so you need to think of architecture as always in transition, as a journey. If we are going to do something now, we should also think about what we might do later to make sure the journey is smooth.

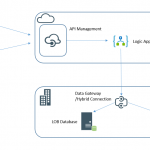

Expected Architecture

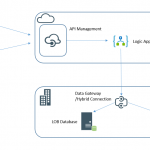

The diagram shows one of a number of ways you might expect to implement the typical hybrid API architecture using the Microsoft technology stack today. In normal circumstances, this would be one of the typical candidate architectures for this pattern.

The problem with this architecture is mainly the dependency on the LOB systems, which means we inherit some very risky stuff for our API. For example, if we suddenly had a large number of users on the API (or even a small number), you could easily see these legacy systems creaking.

Proposed Architecture

What I was considering instead was this. Suppose we can define a canonical data definition for data that we know doesn’t change very often. Then we can sync that data to the cloud and drive the API from the cloud copy. This means our API would be very robust and scalable. It also takes all of the load away from the LOB applications, so we do not risk breaking them.

Once the data is in a nice JSON-friendly format in the cloud, we have a distinct layer of separation between the API and the underlying LOB applications. For our future transition away from the LOB applications, this means we just need to keep the JSON files up to date to support the API, which should be relatively simple to do.

At present I think BizTalk is our best tool for bending the LOB data to the JSON formats as it does dirty LOB integration very well and can be scheduled to run when required. In the future we may or may not use BizTalk to do the sync process, that’s a decision we will make at the time.
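To make the idea of a canonical definition a little more concrete, here is a minimal C# sketch of what one JSON-friendly course document might look like. In the design described here, BizTalk does the actual transformation from the LOB databases. The `Course` fields, the `courses/{id}.json` blob naming and the use of modern .NET’s `System.Text.Json` are all assumptions for illustration only.

```csharp
using System.Text.Json;

// Hypothetical canonical model: a small, stable shape that hides the legacy LOB schemas.
public record Course(string CourseId, string Title, string Category, int DurationDays);

public static class CanonicalCourseWriter
{
    // camelCase property names are a common convention for JSON consumed by web clients.
    private static readonly JsonSerializerOptions Options = new JsonSerializerOptions
    {
        PropertyNamingPolicy = JsonNamingPolicy.CamelCase
    };

    // Serializes one course to a compact JSON document.
    public static string ToJson(Course course) => JsonSerializer.Serialize(course, Options);

    // Hypothetical blob naming scheme: one document per course, keyed by its id.
    public static string BlobName(Course course) => $"courses/{course.CourseId}.json";
}
```

The design point is that whatever eventually replaces the LOB applications only has to keep producing documents of this shape. The API layer in front of them does not need to change.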

API Management to Blob Storage

Following on from my previous post, using APIM and a well-structured data model for the files in blob storage made it very easy to expose those files as the API with zero code. We could simply use the URL template rewrite features to convert the client-friendly URL on the API proxy to the back-end Azure Blob Storage URL.

The net result is that our API would scale massively without too much trouble and have zero code. It could also use all of the APIM features and give client applications a great way to search the course library, without us worrying about the underlying LOB applications.
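In APIM this mapping is configured with policies such as `set-backend-service` and `rewrite-uri`, not with code. The C# sketch below only illustrates the kind of friendly-URL-to-blob-URL mapping such a policy performs. Several details are hypothetical: the `/courses/{courseId}` route, the storage account and container names, and the assumption that the blobs are readable by the gateway (for example through a public container or a SAS token).

```csharp
using System.Text.RegularExpressions;

// Illustrative only: the same friendly-to-blob mapping an APIM rewrite policy would be configured to do.
public static class BlobUrlMapper
{
    // Hypothetical client-facing route: /courses/{courseId}
    private static readonly Regex CourseRoute =
        new Regex(@"^/courses/(?<id>[A-Za-z0-9\-]+)$", RegexOptions.Compiled);

    // Returns the back-end blob URL for a friendly path, or null if the path does not match the route.
    public static string ToBlobUrl(string friendlyPath, string storageAccount, string container)
    {
        Match match = CourseRoute.Match(friendlyPath);
        if (!match.Success)
        {
            return null;
        }
        string courseId = match.Groups["id"].Value;
        return $"https://{storageAccount}.blob.core.windows.net/{container}/courses/{courseId}.json";
    }
}
```

For example, `/courses/C101` would map to `https://mystorage.blob.core.windows.net/catalog/courses/C101.json` for a hypothetical account `mystorage` and container `catalog`. The client never sees the storage URL.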

The below video will demonstrate a quick walk through of this.

[embedded content]

by Steef-Jan Wiggers | Jan 28, 2017 | BizTalk Community Blogs via Syndication

Usually, like most of my blogger friends, I do a write-up at the end of the year of what I have done and experienced. I write a year’s review, post a few pictures and publish it. During 2017 I want to do things differently: I’ll write a review post every month and look ahead a bit to the upcoming months.

Why Stef instead of my real name, Steef-Jan? Well, many of my international buddies call me Stef rather than by my full name, and that’s fine, I do not mind at all. So I have decided to label my monthly updates as Stef’s Monthly Update.

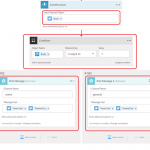

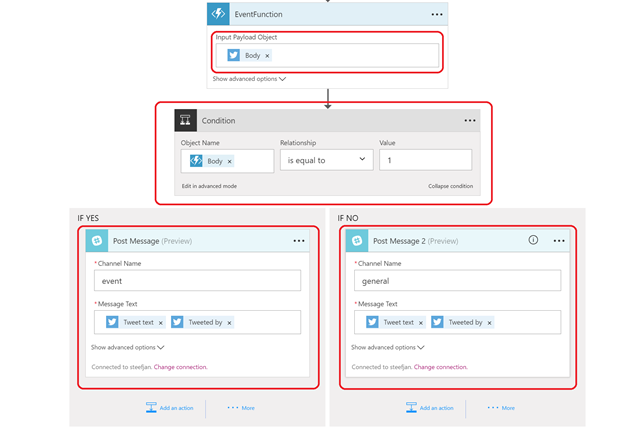

What have I been up to in January? I have written a few blog posts for BizTalk360’s blog. Last year at the MVP Summit in November, Saravana asked me if I was interested in writing a biweekly guest blog post with integration in mind. I said yes, let’s do that, and I started in December with two posts:

I followed up in January with two more, staying a bit in the integration space with BizTalk and Logic Apps:

Creating these blog posts is fun: you explore a new technology or get a bit more insight into building solutions with Logic Apps, a new cloud integration service. As a matter of fact, one of my latest Logic Apps projects, for one of Macaw’s customers, went live this month. So I have gained experience in building and deploying Logic Apps to production. Awesome!

What I like about BizTalk360 is that, besides providing a platform for blogs, it has sponsored and supported Integration Monday for over two years now. This has been revamped into the Integration User Group, which brings Integration Monday together with Middleware Friday. The latter is a weekly recorded session by Kent Weare and has had four episodes so far. As for Integration Monday, speakers are lined up all the way to the end of April, and I will be one of them in March.

What else have I been up to? I have written an article in Dutch for the Dutch SDN Magazine, something I had not done for quite a while (a couple of years, I believe). Thanks to both Marcel Meijer and Lex Hegt for reviewing the article. It is ready to be published in the upcoming issue, 131. The article is about Serverless integration using Logic Apps and Functions. As you might realize, that means no more VMs, just a browser and Azure.

A benefit of writing that article is that I will also present on the topic at the SDN Event in March. Super! And I’ll be on stage next month too, in New Zealand and Australia. Yes, this is cool, and it evolved from what happened last year. On the plane back from Gothenburg, where Eldert and I did some talks, we came up with the idea of going down under. A few weeks later, after we spoke with Mick Badran and Rene Brauwers, we booked the tickets. Rene has set up a Meetup in Sydney, and Mark Brimble, together with Craig Haiden, has set one up in Auckland. We’ll probably have one in Melbourne and Brisbane too. Cool, eh!

During my trip down under, there’s also an Ignite going on at the Gold Coast. Eldert will be going there, and meanwhile I’ll spend a few days in Auckland before I join him at the end of the event. We’ll be meeting up with Dan Toomey, Dean Robertson, some other Team Mexia guys and gals, the Microsoft PG (Jeff Hollan, Kevin Lam and Jon Fancey), and fellow MVP Martin Abbott. So I will be mixing work with leisure time while I am down there.

This month, I read a few books on various topics: entrepreneurship, social media and politics. The first one was a book by Gary Vaynerchuk, Ask GaryVee. Why the interest in entrepreneurship? My buddy Kent Weare mentioned him a few times as one of the hottest entrepreneurs at the moment and pointed to some of the things he stands for. So I watched a few of GaryVee’s YouTube videos, bought one of his books and read it. Besides this one, I read another which I stumbled on, “Your One Word” by Evan Carmichael. And through GaryVee I also got interested in “The Social Organism” and read that one too.

That is three books in total. On top of those, I read two other books which I picked up while reading the Correspondent website:

- Utopia for Realists. This book deals with an ideal world in which we all have enough money and can live happy lives. It discusses experiments with a basic income. However, all these experiments have been done on a small scale, and the idea has yet to prove itself on a large scale. Politically, it can be a hard sell.

- Je hebt wel iets te verbergen (You do have something to hide). A book that discusses our privacy in an age when we are online every day on all our devices. It is an interesting read, as everything we do these days is tracked and used for all kinds of purposes, like profiling, et cetera.

My favorite albums, which were released in January, are:

Besides writing, preparing content, reading and listening to music, have I been doing any workouts? Yes, I have done a few runs to prepare for the half marathon of The Hague in March and a full marathon in April (Rotterdam).

There you have it: Stef’s first Monthly Update. I can look back with joy. I accomplished quite a few things, and exciting moments are ahead of me.

Cheers,

Steef-Jan

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field combining it with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him a Microsoft MVP for the past 6 years. View all posts by Steef-Jan Wiggers

by BizTalk Team | Jan 23, 2017 | BizTalk Community Blogs via Syndication

BizTalk Server 2016 hit the price list on December 1st, 2016. With this we moved into a different era, and we are continuing our efforts to provide first-class services to our customers around the world. To help us, we want your feedback, suggestions and ideas. You can help by going to our UserVoice page and either writing new ideas or voting for ones that are already there.

You can also follow us on Twitter and Facebook.

by stephen-w-thomas | Jan 21, 2017 | BizTalk Community Blogs via Syndication

The purpose of this post is to talk about a side project that I have going on with Saravana Kumar and BizTalk360. The purpose of #MiddlewareFriday is to create a video blog of new and interesting developments in the industry. Each week we will publish a short video with new content. The content may feature news and demos, and will also highlight other activities going on in the community. From time to time we will also bring on guests to keep the content fresh and get some different perspectives.

For both Saravana and myself there is no direct commercial incentive in doing the show. It really comes down to participating in a community, learning by doing, improving communication skills and having some fun along the way.

I am going to keep this post updated with a running list of the shows, in part to aid search engine discovery.

| Episode |

Title |

Date |

Tags |

| 1 |

Protecting Azure Logic Apps with Azure API Management |

January 6, 2017 |

Azure API Management, Logic Apps, ServiceNow, API Apps |

| 2 |

Azure Logic Apps and Service Bus Peek-Lock

|

January 13, 2017 |

Logic Apps, Service Bus, Patterns |

| 3 |

Logic Apps and Cognitive Services Face API – Part 1

|

January 20, 2017 |

Logic Apps, Cognitive Service, Face API, Steef-Jan Wiggers |

| 4 |

Microsoft PowerApps and Cognitive Services Face API -Part 2 |

January 27, 2017 |

PowerApps, Cognitive Services, Face API |

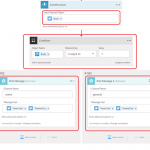

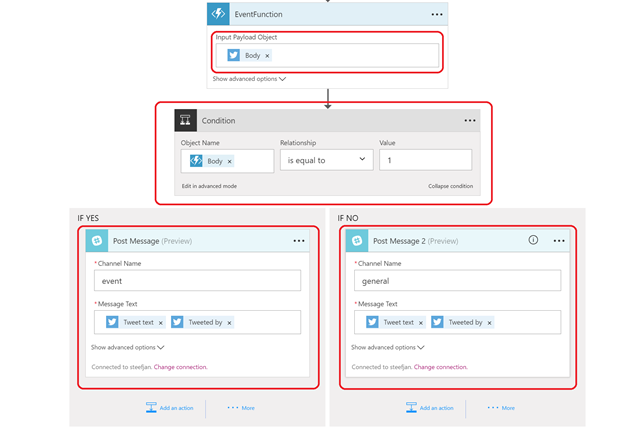

| 5 |

Serverless Integration with Steef-Jan Wiggers |

February 3rd, 2017 |

Logic Apps, Sentiment Analysis, Slack, Azure Functions, Steef-Jan Wiggers |

| 6 |

Azure Logic Apps and Power BI Real Time Data Sets |

February, 10, 2017 |

Logic Apps, Power BI connector, Sandro’s Integration stencils, Quicklearn, Global Integration Bootcamp |

| 7 |

Azure Monitoring, Azure Logic Apps and Creating ServiceNow Incidents |

February 17, 2017 |

Logic Apps, Azure Monitoring, API Apps, ServiceNow, Glen Colpaert SAP, Webhook Notification BizTalk360 |

| 8 |

Exploring ServiceBus360 Monitoring |

February 24, 2017 |

Service Bus, BizTalk360, Community Content: Team Flow + Luis, Exception handling for Logic App Web Services Toon Vanhoutte |

| 9 |

Coming Soon – SAP and Logic Apps |

March 3rd, 2017 |

TBD |

by Mark Brimble | Jan 9, 2017 | BizTalk Community Blogs via Syndication

I have been doing a proof of concept where an application connects to BizTalk Server over a TCP/IP stream connection: the application actively connects, and BizTalk Server listens for connections.

I have previously written a custom adapter called the GVCLC Adapter, which actively connects to a vending machine. This was based on the Acme.Wcf.Lob.Socket Adapter example by Michael Stephenson. Another alternative that was considered was the Codeplex TCP/IP adapter. When I met Michael for the first time in Sydney two years ago, I thanked him for his original post, and he asked me why I hadn’t used the BizTalk MLLP adapter instead. In this post I examine whether it is possible to use the MLLP adapter to connect to a socket.

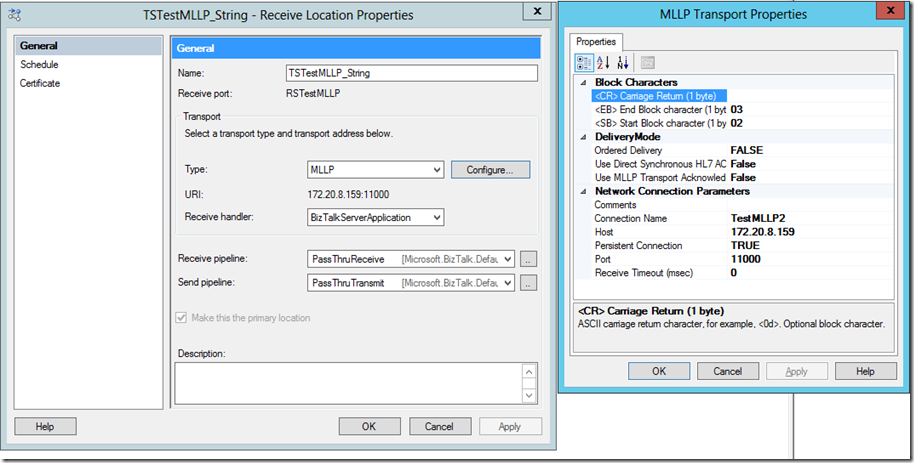

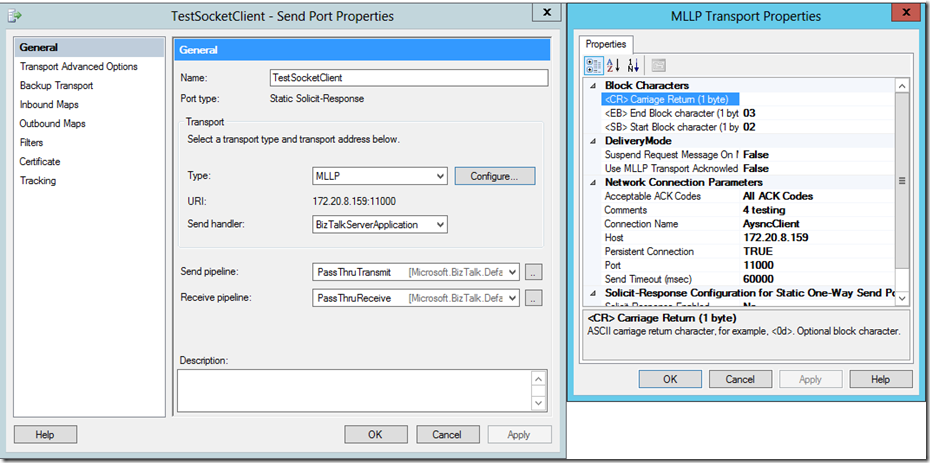

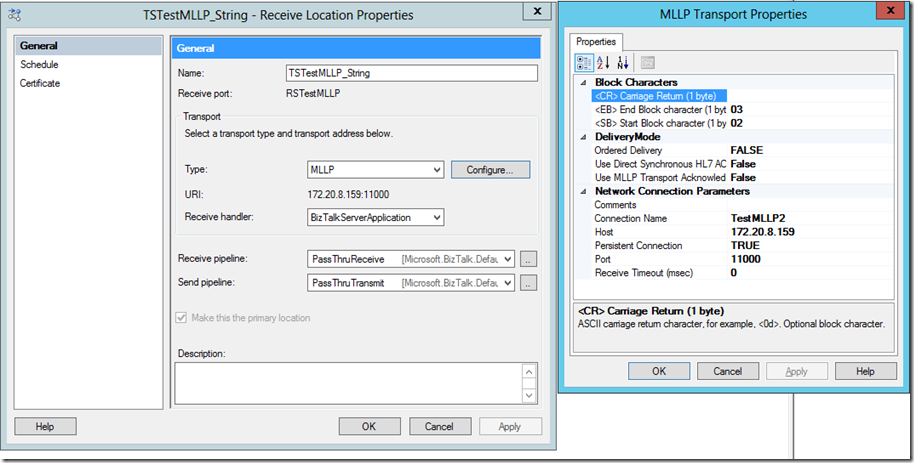

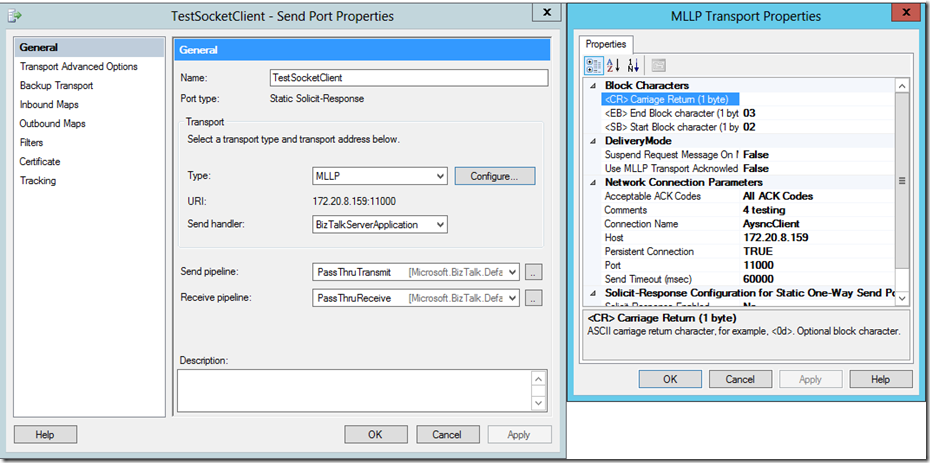

The BizTalk MLLP adapter is part of the BizTalk HL7 Accelerator, which is usually used with healthcare systems. At its essence, the MLLP adapter is a socket adapter, as the receive and send port configurations shown below make clear. Can it be used for non-healthcare systems?

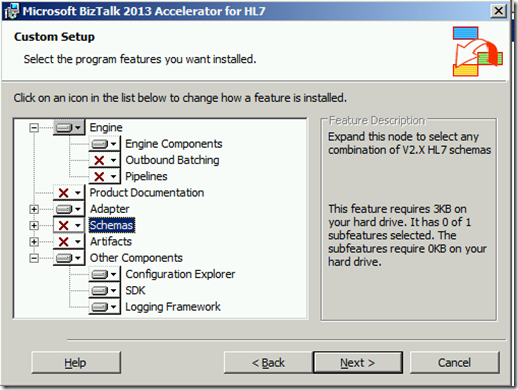

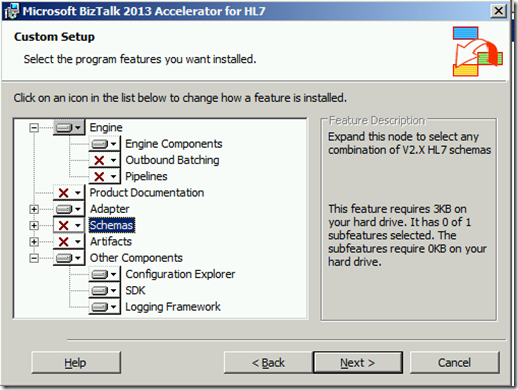

First I installed the BizTalk 2013 HL7 Accelerator with the minimal options needed to run the MLLP adapter. I had issues because you cannot install it once all the latest Windows Updates have been installed, but there is a workaround.

Once the MLLP adapter had been installed, I set up a receive location as shown above. Note that I am not using any of the HL7 pipelines and no carriage return character, and that I use a custom start character and end character. Next, I created an asynchronous socket test client very similar to the Microsoft asynchronous client socket example. I modified it so that it would send a heartbeat, adding the following lines in the right places:

```csharp
// Start, End and ENQ (heartbeat) control characters, as hex strings
protected const string STX = "02";
protected const string ETX = "03";
protected const string ENQ = "05";

//…………………………….

// Send test data to the remote device.
//Send(client, "This is a test<EOF>");

// Frame the payload as <STX>data<ENQ><ETX>; char + string concatenation yields a string
data = (char)Convert.ToInt32(STX, 16) + data + (char)Convert.ToInt32(ENQ, 16) + (char)Convert.ToInt32(ETX, 16);
```

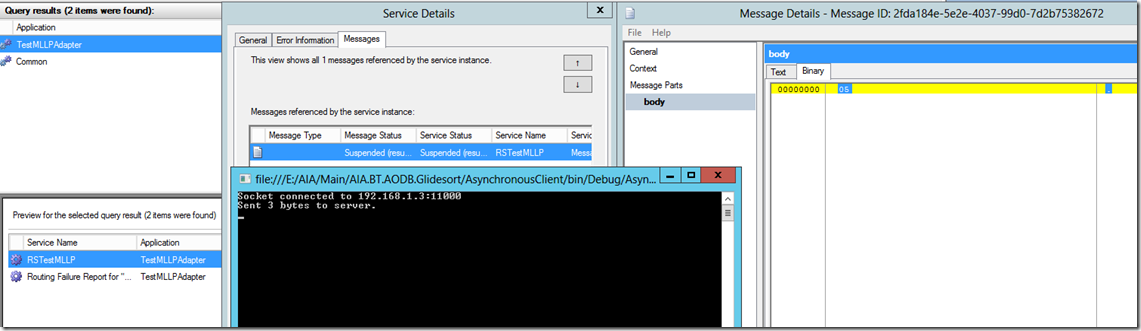

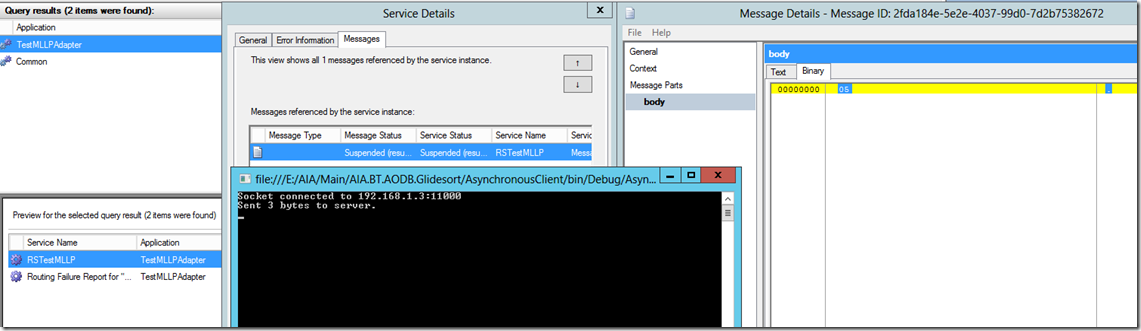

Next, I changed the host to 192.168.1.3, enabled the receive location and started the socket test client, and an ENQ message was received in BizTalk. This shows that the MLLP adapter can accept a message that is not an HL7 message from a plain socket client.

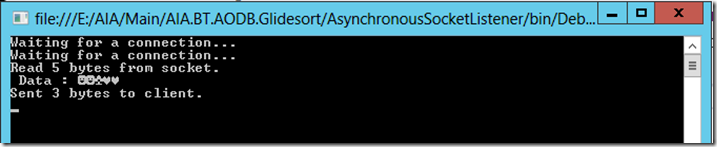

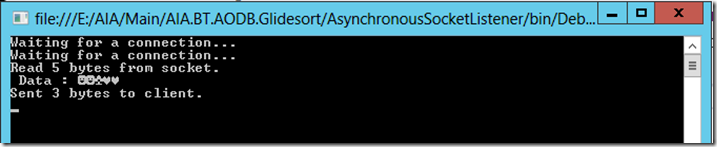

Next, I set up a file receive port and an MLLP send port configured to send to 192.168.1.3, as above, in order to send a heartbeat message. The heartbeat message was <STX><ENQ><ETX>. I created a socket server similar to the Microsoft asynchronous server socket example and modified it by adding the following lines:

```csharp
// Start, End and ACK control characters, as hex strings
protected const string STX = "02";
protected const string ETX = "03";
protected const string ACK = "06";

//…

// Echo the data back to the client.
//Send(handler, content);

// Send an ACK message back to the client, framed as <STX>data<ACK><ETX>
data = (char)Convert.ToInt32(STX, 16) + data + (char)Convert.ToInt32(ACK, 16) + (char)Convert.ToInt32(ETX, 16);
```

When I dropped the file into the file receive location, it was passed to the MLLP send adapter and then sent to the socket server, as the socket server’s printout showed. The printout showed five bytes because the configured adapter also adds an extra STX and ETX character around the message.
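The five-byte count is easy to check: the three-byte heartbeat gains one start character and one end character. Below is a minimal C# sketch of that framing. It assumes the port is configured with STX (0x02) as the start character and ETX (0x03) as the end character, as in this post. `Framing`, `Frame` and `Unframe` are hypothetical helper names, not part of the MLLP adapter.

```csharp
using System;
using System.Linq;

public static class Framing
{
    public const byte STX = 0x02;
    public const byte ETX = 0x03;
    public const byte ENQ = 0x05;
    public const byte ACK = 0x06;

    // Wraps a payload in the configured start and end characters, as the send adapter does.
    public static byte[] Frame(byte[] payload, byte start = STX, byte end = ETX)
    {
        var framed = new byte[payload.Length + 2];
        framed[0] = start;
        Array.Copy(payload, 0, framed, 1, payload.Length);
        framed[framed.Length - 1] = end;
        return framed;
    }

    // Strips the start and end characters, or returns null if the bytes are not a valid frame.
    public static byte[] Unframe(byte[] framed, byte start = STX, byte end = ETX)
    {
        if (framed.Length < 2 || framed[0] != start || framed[framed.Length - 1] != end)
        {
            return null;
        }
        return framed.Skip(1).Take(framed.Length - 2).ToArray();
    }
}
```

Framing the heartbeat `{ STX, ENQ, ETX }` yields `02 02 05 03 03`, which is the five bytes seen by the socket server.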

This shows that an MLLP receive adapter can consume messages from a socket client and that an MLLP send adapter can send to a socket server.

We have shown that the MLLP adapter can be used instead of the Codeplex TCP/IP adapter or the Acme socket adapter.

…but this is not the end for me. My application wants to be the socket client. Once it has connected, BizTalk should send it a message or heartbeat, and the application should reply with an ACK, i.e.:

- Application –> connects to BizTalk Server
- BizTalk Server –> message or heartbeat to the application
- Application –> ACK message to BizTalk Server

I think I will have to create a custom adapter to do this.
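The application side of that exchange is simple to state, whatever ends up hosting the BizTalk side. The following C# sketch shows only the decision logic. It assumes the same STX/ETX framing used earlier in this post, with ENQ as the heartbeat payload. The connection and read loop, which could follow the Microsoft asynchronous client socket example, are omitted, and all names are hypothetical.

```csharp
using System;

// Hypothetical application-side handler for the desired flow: BizTalk pushes a framed
// message or heartbeat over the connection the application opened, and the application
// answers every well-formed frame with <STX><ACK><ETX>.
public static class ApplicationProtocol
{
    private const byte STX = 0x02, ETX = 0x03, ENQ = 0x05, ACK = 0x06;

    public enum FrameKind { Invalid, Heartbeat, Message }

    // A valid frame starts with STX, ends with ETX and carries at least one payload byte.
    public static FrameKind Classify(byte[] frame)
    {
        if (frame.Length < 3 || frame[0] != STX || frame[frame.Length - 1] != ETX)
        {
            return FrameKind.Invalid;
        }
        return frame.Length == 3 && frame[1] == ENQ ? FrameKind.Heartbeat : FrameKind.Message;
    }

    // Both heartbeats and messages are acknowledged; invalid frames get no reply.
    public static byte[] ResponseFor(byte[] frame) =>
        Classify(frame) == FrameKind.Invalid ? Array.Empty<byte>() : new byte[] { STX, ACK, ETX };
}
```

Keeping this logic separate from the socket code makes it easy to test, whether the BizTalk side ends up being a custom adapter or something else.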

In summary, for the basic case the MLLP adapter can be used instead of the Codeplex TCP/IP adapter or the Acme socket adapter.

by Steef-Jan Wiggers | Jan 6, 2017 | BizTalk Community Blogs via Syndication

I have said it in the past, I say it now and I will keep saying it tomorrow: integration is relevant, even in this age of digitalization. Integration professionals will play an essential role in providing connectivity between systems, devices and services. Integration drives digitalization forward by connecting everything with anything.

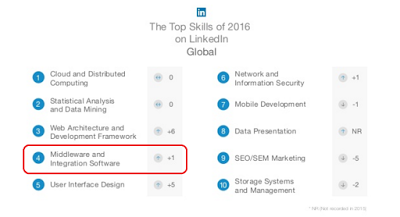

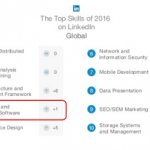

Integration skills are still in demand. A LinkedIn study of the top 10 skills placed integration and middleware in the top 5, alongside Cloud, Data Science, UX and Web. The expectation is that 2017 will be similar.

Our toolbox is expanding from on-premises tooling to cloud services: from WCF, BizTalk Server and MSMQ to Logic Apps, API Management and Service Bus. Formats range from flat file, EDI and XML to JSON. Protocols include several open and proprietary ones, and tooling ranges from mappers to BizTalk360. It can be challenging, yet much more exciting. My conclusion: more options, more fun, at least in my view.

In February I will be travelling to Australia to meet up with my buddies Mick and Rene, and Rene is organizing a Meetup in Sydney on the 20th of February. Since I am kind of in the neighborhood, I have decided to pay my friends in New Zealand (Mark and others) a visit too. So I will speak in Auckland on the 14th of February, and also in Melbourne, where Bill Chesnut lives. That makes three meetups at which I will speak during my stay down under.

I will be travelling around with Eldert, and our plans look like this:

• Saturday 11-2 – Monday 13-2: Sydney

• Tuesday 14-2 – Wednesday 15-2: Auckland (Meetup)

• Thursday 16-2 – Friday 17-2: Gold Coast (Eldert attends Ignite, Gold Coast)

• Saturday 18-2: Brisbane

• Sunday 19-2 – Monday 20-2: Sydney (Meetup)

• Tuesday 21-2 – Thursday 23-2: Melbourne (Meetup)

• Friday 24-2 – Monday 27-2: Sydney

Hope to see some of you there!

And that’s not all. The following month, on March 25th, there will be the Global Integration Bootcamp. Meanwhile, Integration Monday sessions run from the 9th of January to the end of April. And finally, there will be Integrate 2017 in the UK and TUGAIT with an Integration Track in May.

So there you go: a tremendous community effort in the coming months!

by Nino Crudele | Jan 3, 2017 | BizTalk Community Blogs via Syndication

Why do people start comparing BizTalk Server to a T-Rex?

A long time ago, the Microsoft marketing team created this mousepad on the occasion of BizTalk’s 12th birthday.

And my dear friend Sandro produced a fantastic sticker.

Some time later, people started comparing BizTalk Server to a T-Rex. Honestly, I don’t remember exactly why, but I’d like to give my opinion on it.

Why a T-Rex?

In the last year, I took on many engagements in the UK around BizTalk Server: assessments, mentoring, development, migration, optimization and more. I heard many theories about this, so let’s go through some of them:

Because BizTalk is old. Well, in that case I’d rather say mature, which is a very good quality for a product. The product has grown up from the 2004 version through to 2013 R2. In the last 10 years, the community has built more material, tools and documentation than many other products can claim.

Because BizTalk is big and monolithic. I think this is just a point of view; BizTalk can be very smart. Most of the time I saw architects driving their solutions in a monolithic way, and usually the problem was a lack of knowledge about BizTalk Server.

Because BizTalk is complicated. Well, Forrest Gump at this point would say “complicated is as complicated does”. During my assessments and mentoring I see so many over-complicated solutions that could be solved in a very easy way. There are many reasons for that. Sometimes we lack the knowledge; other times we don’t like to face the technology, and we choose what I like to call the “chicken way”.

Because now we have the cloud. Well, in part I can agree with that, but believe it or not, we also still have on-premises: companies still use hardware and still integrate on-premises applications. And, believe it or not, integrating systems productively to send data into the cloud in an efficient and reliable way is very complicated. At the moment, BizTalk is still number one at it.

Because BizTalk is expensive. The BizTalk license depends on the number of processors we need to run our solution and to achieve the number of messages per second we need to process. This is the main dilemma but, in my opinion, it is quite easy to solve, and this is my simple development theory:

The number of messages per second we are able to achieve is inversely proportional to the number of bad practices we introduce into our solution.

Many people ask me: Nino, what do you think the future of BizTalk Server is?

I don’t like to speak about the future; I have seen many frameworks come up and disappear after one or two years.

I like to consider the present, and I think BizTalk is a solid product with tons of features, able to cover and support any integration scenario very well.

In my opinion the main problem is how we approach this technology.

Companies often think of BizTalk as a single product that should cover every aspect of a solution, and this is deeply wrong.

I like to use many technologies together and combine them in the best way. Most importantly, I use the right technology for each specific task.

In my opinion, when we look at a technology we need to understand all its pros and cons, and we must use the pros properly to avoid the cons.

BizTalk is easily extensible, and we can compensate for any cons very easily.

Below some of my personal best hints derived by my experience in the field:

If you are not comfortable or sure about BizTalk Server then call an expert, in one or two days he will be able to give you the right way, this is the most important and the best hint, I saw many people blaming BizTalk Server instead of blaming their lack of knowledge.

Use the naming convention that best guides you through your solution. I don’t like to always follow the same one, because every solution needs its own structure to be well organized. Believe me, the naming convention is everything in a BizTalk solution.

Use orchestrations only when you need a strict pattern between processes. Orchestrations are the most expensive resource in BizTalk Server, so if I use one, I use it for this specific reason only.

If I need to use an orchestration, I like to simplify it by using code instead of many BizTalk shapes. I like to call external libraries from my orchestrations; it’s simpler than creating tons of shapes and persistence points in the orchestration.

Often we don’t need to use an adapter from an orchestration, which costs system resources: for example, we frequently need to retrieve data from a database or call another service where the call does not need to be reliable.

Control your persistence points: we can drive them using atomic scopes and .NET code. I like to have only the persistence points I need to recover my process.

Everything can be extensible and reusable. When I start a new project I normally use my own templates, and I like to provide them to the customer.

I avoid the MessageBox where I need real-time performance. I like to use two different techniques for that: one is a Redis cache, the second is RPC.

One of the big features of BizTalk Server is the possibility to reuse artefacts separately, outside the BizTalk engine; this way I can easily achieve real-time performance in a BizTalk Server process.

Often we can use the Standard edition instead of the Enterprise edition. The Standard edition has two main limitations: we can’t have multiple MessageBoxes, and we can’t have multiple BizTalk nodes in the same group.

If the DTS (Down Time Service) is acceptable, I like to use a couple of Standard editions; with a proper configuration and a virtual server environment I can achieve a very good high-availability plan while saving costs.

I always use BAM and implement a good notification and logging system. BizTalk Server is the middleware and, believe me, for any issue you have in production people will blame BizTalk. In this case, good metrics to manage and quickly troubleshoot any possible issue will make your life great.

Do performance testing with mock services first and with the real external services afterwards; this way we can measure the real performance of our BizTalk solution. I have seen many companies waste a lot of money trying to optimize a BizTalk process instead of the external service that was actually responsible.
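The mock-first approach can be sketched in a few lines. The example is written in Python rather than as BizTalk artefacts, so treat it as an illustration of the idea only: it times our own processing step separately from the downstream call, so a run against a mock shows the solution's own cost and a run against the real service shows how much the external dependency adds. `run_load_test`, `hypothetical_transform`, `mock_service` and `slow_service` are hypothetical names, and the 10 ms latency is an assumed figure.

```python
import time
from typing import Callable, Dict, List


def run_load_test(transform: Callable[[str], str],
                  external_call: Callable[[str], str],
                  messages: List[str]) -> Dict[str, float]:
    """Send each message through our own processing step and then the
    downstream service, timing the two parts separately."""
    own_seconds = 0.0
    external_seconds = 0.0
    for msg in messages:
        start = time.perf_counter()
        payload = transform(msg)       # work done by our own solution
        mid = time.perf_counter()
        external_call(payload)         # downstream service: mock or real
        end = time.perf_counter()
        own_seconds += mid - start
        external_seconds += end - mid
    total = own_seconds + external_seconds
    return {
        "messages": float(len(messages)),
        "own_seconds": own_seconds,
        "external_seconds": external_seconds,
        "msgs_per_second": len(messages) / total if total > 0 else float("inf"),
    }


def hypothetical_transform(msg: str) -> str:
    # Stand-in for a map or pipeline step inside the integration solution.
    return msg.upper()


def mock_service(payload: str) -> str:
    # Mock: answers immediately, so timings reflect our own solution only.
    return "ACK"


def slow_service(payload: str) -> str:
    # Assumed stand-in for a real external service with 10 ms of latency.
    time.sleep(0.01)
    return "ACK"


if __name__ == "__main__":
    batch = [f"order-{i}" for i in range(50)]
    print("mock:", run_load_test(hypothetical_transform, mock_service, batch))
    print("real:", run_load_test(hypothetical_transform, slow_service, batch))
```

If the mock run is fast and the real run is slow, the extra time is going to the external service, and that is where the optimization effort belongs.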

To conclude, when I look at BizTalk Server I don’t see a T-Rex.

BizTalk reminds me more of a beautiful woman like Jessica Rabbit:

full of qualities but, like any woman, sometimes she plays up; we only need to know how to live with her 😉

Author: Nino Crudele

Nino has deep knowledge of and experience in delivering world-class integration solutions using all Microsoft Azure stacks and Microsoft BizTalk Server, and he has delivered world-class integration solutions using and integrating many different technologies such as AS2, EDI, RosettaNet, HL7, RFID and SWIFT.

by stephen-w-thomas | Dec 30, 2016 | BizTalk Community Blogs via Syndication

It is that time of year when I like to reflect on what the previous year has brought and also set my bearings for the road ahead. If you are interested in reading my 2015 recap, you can find it here.

Personal

2016 was a milestone birthday year for me and my twin brother. To celebrate, and to try to deny getting old for another year, we decided to run a marathon in New York City. The NYC Marathon is one of the six major marathons in the world, so it was a fantastic backdrop for our celebration. Never one to turn down an adventure, my good friend Steef-Jan Wiggers joined us for this event. As you may recall, Steef-Jan and I ran the Berlin Marathon (another major) back in 2013.

The course was pretty tough. The long arching bridges created some challenges for me, but I fought through it and completed the race. We all finished within about 10 minutes of each other and had a great experience touring the city.

Kurt, Kent and Steef-Jan in the TCS tent before the race

At the finish line with the hardware.

Celebrating our victory at Tavern on the Green in Central Park.

Speaking

Traveling and speaking is something I really like to do and the MVP program has given me many opportunities to scratch this itch. I also need to thank my previous boss and mentor Nipa Chakravarti for all of the support that she has provided which made all of this possible.

In Q2, I once again had a chance to head to Europe to speak at BizTalk360’s Integrate Event with the Microsoft Product Group. My topic was Industrial IoT and some of the project work we had been doing. You can find a recording of this talk here.

On stage….

I really like this photo as it reminds me of the conversation I was having with Sandro. He was trying to sell me a copy of his book, and I was trying to convince him that if he gave me a free copy, I could help him sell more. Sandro has to be one of the hardest-working MVPs I know, and he is recognized as one of the top Microsoft Integration gurus. If you are ever having a problem in BizTalk, there is a good chance he has already solved it. You can find his book here in both digital and physical versions.

BizTalk360 continues to be an integral part of the Microsoft Integration community. Their 2016 event had record attendance from more than 20 countries. Thank you, BizTalk360, for another great event and for building a great product. We use BizTalk360 every day to monitor our BizTalk and Azure services.

On a bit of a different note, this past year we had a new set of auditors come in for SOX compliance. For the first time in my experience, the auditors were really interested in how we were monitoring our interfaces and what our governance model was. We passed the audit with flying colours, and that was really thanks to having BizTalk360. Without it, our results would not have been what they were.

Q3

Things really started to heat up in Q3. My first of many trips was to Toronto to speak at Microsoft Canada’s Annual General Meeting. I shared the stage with Microsoft Canada VP Chris Barry as we chatted about Digital Transformation and discussed our experiences with moving workloads to the cloud.

Next up was heading to the southeastern United States to participate in the BizTalk Bootcamp. This was my third time presenting at the event. I really enjoy speaking at this event as it is very well run and has a very intimate setting. I have had the chance to meet some really passionate integration folks at this meetup, so it was great to catch up once again. Thank you, Mandi Ohlinger and the Microsoft Pro Integration team, for having me out in Charlotte once again.

At the Bootcamp talking about Azure Stream Analytics Windowing.

The following week, I was off to Atlanta to speak at Microsoft Ignite. Speaking at a Microsoft premier conference like Ignite (formerly TechEd) has been a bucket list item so this was a really great opportunity for me. At Ignite, I was lucky enough to participate in two sessions. The first session that I was involved in was a customer segment as part of the PowerApps session with Frank Weigel and Kees Hertogh. During this session I had the opportunity to show off one of the apps my team has built using PowerApps. This app was also featured as part of a case study here.

On stage with PowerApps team.

Next up, was a presentation with John Taubensee of the Azure Messaging team. Once again my presentation focused on some Cloud Messaging work that we had completed earlier in the year. Working with the Service Bus team has been fantastic this year. The team has been very open to our feedback and has helped validate different use cases that we have. In addition to this presentation, I also had the opportunity to work on a customer case study with them. You can find that document here. Thanks Dan Rosanova, John Taubensee, Clemens Vasters and Joe Sherman for all the support over the past year.

Lastly, at the MVP Summit in November, I had the opportunity to record a segment in the Channel 9 studio. Having watched countless videos on Channel 9, this is always a neat experience. The segment is not public yet, but I will be sure to post when it is. Once again, I had the opportunity to hang out with Sandro Pereira before our recordings.

In the booth, recording.

Prepping in the Channel 9 studio

Writing

I continue to write for InfoQ on Richard Seroter’s Cloud Editorial team. It has been a great experience writing as part of this team. Not only do I get exposed to some really smart people, I get exposed to a lot of interesting topics that only fuel my career growth. In total, I wrote 46 articles, but here are the top 5 that I either really enjoyed writing or learned a tremendous amount from.

- Integration Platform as a Service (iPaaS) Virtual Panel – In this article, I had the opportunity to interview some thought leaders in the iPaaS space from some industry-leading organizations. Thank you, Jim Harrer (Microsoft), Dan Diephouse (MuleSoft) and Darren Cunningham (SnapLogic), for taking the time to contribute to this feature. I hope to run another panel in 2017 to gauge how far iPaaS has come.

- Building Conversational and Text Interfaces Using Amazon Lex – After researching this topic, I immediately became interested in Bots and Deep Learning. It was really this article that acted as a catalyst for spending more time in this space and writing about Google and Microsoft’s offerings.

- Azure Functions Reach General Availability – Something that I like to do, when possible, is to get a few sound bites from people involved in the piece of news that I am covering. I met Chris Anderson at the Integrate event earlier in the year, so it was great to get more of his perspective when writing this article.

- Microsoft PowerApps Reaches General Availability – Another opportunity to interview someone directly involved in the news itself. This time it was Kees Hertogh, a Senior Director of Product Marketing at Microsoft.

- Netflix Cloud Migration Complete – Everyone in the industry knows that Netflix is a very innovative company that has disrupted and captured markets from large incumbents. I found it interesting to get more insight into how they have accomplished this. Many people probably thought the journey was very short, but that wasn’t the case. It was a very methodical approach that actually took around 8 years to complete.

Another article that I enjoyed writing was for the Microsoft MVP blog called Technical Tuesday. My topic focused on Extending Azure Logic Apps using Azure Functions. The article was well received and I will have another Technical Tuesday article published early in the new year.

Back to School

Blockchain

I left this topic off of the top 5 deliberately, as I will talk about it here, but it absolutely belongs up there. Back in June, I covered a topic for InfoQ called Microsoft Introduces Project Bletchley: A Modular Blockchain Fabric. I really picked this topic up out of our Cloud queue because my boss at the time had asked me about Blockchain and I didn’t have a good answer. After researching and writing about the topic, I had the opportunity to attend a Microsoft presentation in Toronto for financial organizations looking to understand Blockchain. At the Microsoft event (you can find a similar talk here), Alex Tapscott gave a presentation about Blockchain and where he saw it heading. ConsenSys, a Microsoft partner and Blockchain thought leader, was also there talking about the Brooklyn Microgrid. I remember walking out of the venue that day thinking everything was about to change. And it did. I needed to better understand blockchain.

For those who are not familiar with blockchain, simply put, it is a paradigm that focuses on using a distributed ledger for recording transactions and providing the ability to execute smart contracts against these transactions. An underlying principle of blockchain is to address the transfer of trust amongst different parties. Historically, this has been achieved through intermediaries that act as a “middleman” between trading partners. In return, the intermediary takes a cut of the transaction, but doesn’t really add a lot of value beyond collecting and disbursing funds. Trading parties are then left to deal with the terms that the intermediary sets. This model typically does not provide incentives for innovation; in fact, it usually does the opposite and stifles it due to complacency and entitlement by large incumbent organizations.
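To make the ledger idea a little more concrete, here is a minimal Python sketch of the property that makes a blockchain ledger tamper-evident: each block stores the hash of the block before it, so changing any recorded transaction breaks the chain. It is a toy under loudly stated assumptions: it runs on a single node, with no distribution, consensus or smart contracts, and `ToyLedger` and `Block` are hypothetical names, not part of Project Bletchley or any real blockchain platform.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    index: int
    transactions: List[dict]
    prev_hash: str
    block_hash: str = ""

    def compute_hash(self) -> str:
        # Hash the block contents together with the previous block's hash,
        # so changing any earlier block breaks every later link.
        body = json.dumps(
            {"index": self.index, "transactions": self.transactions,
             "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(body.encode("utf-8")).hexdigest()


class ToyLedger:
    """Hypothetical single-node, append-only, hash-linked ledger (no network, no consensus)."""

    def __init__(self) -> None:
        genesis = Block(index=0, transactions=[], prev_hash="0" * 64)
        genesis.block_hash = genesis.compute_hash()
        self.chain: List[Block] = [genesis]

    def append(self, transactions: List[dict]) -> Block:
        prev = self.chain[-1]
        block = Block(prev.index + 1, list(transactions), prev.block_hash)
        block.block_hash = block.compute_hash()
        self.chain.append(block)
        return block

    def is_valid(self) -> bool:
        # Every block must still match its stored hash and point at its predecessor.
        for i, block in enumerate(self.chain):
            if block.block_hash != block.compute_hash():
                return False
            if i > 0 and block.prev_hash != self.chain[i - 1].block_hash:
                return False
        return True


if __name__ == "__main__":
    ledger = ToyLedger()
    ledger.append([{"from": "A", "to": "B", "amount": 10}])
    ledger.append([{"from": "B", "to": "C", "amount": 4}])
    print(ledger.is_valid())  # True
    ledger.chain[1].transactions[0]["amount"] = 1000  # tamper with history
    print(ledger.is_valid())  # False
```

In a real blockchain, many independent parties hold copies of this chain and agree on new blocks through a consensus protocol, which is what removes the need for a trusted middleman; the sketch only shows the hash-linking part.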

What you will quickly discover with blockchain is that it is more about business than technology. While technology plays a very significant role in blockchain, if your conversation starts off with technology, you are headed in the wrong direction. With this in mind, I read Blockchain Revolution by Alex and Don Tapscott which really focuses on the art of the possible and identifying some modern-day scenarios that can benefit from blockchain. While some of the content is very aspirational, it does set the tone for what blockchain could become.

Having completed the book, I decided to continue down the learning path, this time focusing on the technical side. I am a firm believer that in order for me to truly understand something, I need to touch it. By taking the Blockchain Developer course from B9Lab I was able to get some hands-on experience with the technology. As a person who spends a lot of time in the Microsoft ecosystem, this was a good opportunity to get back into Linux and the open source community, as blockchain tools and platforms are pretty much all open source. Another technical course that I took was the following course on Udemy. The price point for this course is much lower, so it may be a good place to start without making a more significant financial investment in a longer course.

Next, I wanted to be able to apply some of my learnings. I found the Future Commerce certificate course from MIT. It was a three-month course, all delivered online. There were about 1000 students, worldwide, in the course and it was very structured and based upon a lot of group work. I had a great group that I worked with on an energy-based blockchain startup. We had to come up with a business plan, pitch deck, solution architecture and go-to-market strategy. Having never been involved in a start-up at this level (I did work for MuleSoft, but they were at more than 300 people at the time), it was a great experience to work through this under the tutelage of MIT instructors.

If you are interested in the future of finance, aka FinTech, I highly recommend this course. There is a great mix of Finance, Technology, Entrepreneurs, Risk and Legal folks in this class, and you will learn a lot.

Gary Vaynerchuk

While some people feel that Twitter is losing its relevancy, I still get tremendous value out of the platform. The following is just one example. Someone I follow on Twitter is Dona Sarkar from Microsoft. I had the opportunity to see her speak at the Microsoft World Partner Conference and quickly became a fan. Back in October, she put out the following tweet, which required further investigation on my part.

Dona’s talks, from the ones that I have seen, are very engaging and also entertaining at the same time. If she is talking about “Gary Vee” in this manner, I figured there was something here. So I started to digest some of his content, and I was very quickly impressed. What I like about Gary is he has a bias for action. Unfortunately, I don’t see this too often in Enterprise IT shops; we try to boil the ocean and watch initiatives fail because people have added so much baggage that the solution is unachievable or people have become disenfranchised. I have also seen people being rewarded for building “strategies” without a clue how to actually implement them. I find this really prevalent in Enterprise Architecture, where some take pride in not getting into the details. While you may not need to stay in the details for long, without understanding the mechanics, a strategy is just a document. And a strategy that has not been or cannot be executed is useless.

If you have not spent time listening to Gary, here are some of his quotes that really resonated with me.

- Bet on your strengths and don’t give a f&%# about what you are not good at.

- Educate…then Execute

- You didn’t grow up driving, but somehow you figured it out.

- Results are results are results

- I am just not built, to have it dictate my one at-bat at life.

- Document, Don’t Create.

- We will have people who are romantic and hold onto the old world who die and we will have people that execute and story tell on the new platform who emerge as leaders in the new world.

- I am built to get punched in the mouth, I am going spit my front tooth out and look right back at you and be like now what bitch.

If this sounds interesting to you, check out a few of his content clips that I have really enjoyed:

Looking Forward

I find it is harder and harder to do this. The world is changing so fast, why would anyone want to tie themselves down to an arbitrary list? Looking back on my recap from last year, you won’t find blockchain or bots anywhere in that post, yet those are two of the transformative topics that really interested me in 2016. But, there are some constants that I don’t see changing. I will continue to be involved in the Microsoft Integration community, developing content, really focused on iPaaS and API Management. IoT continues to be really important for us at work so I am sure I will continue to watch that space closely. In fact, I will be speaking about IoT at the next Azure Meetup in Calgary on January 10th. More details here.

I will also be focusing on blockchain and bots/artificial intelligence as I see a lot of potential in these spaces. One thing you can bet on is that I will be watching the markets closely and looking for opportunities where I see a technology disrupting or transforming incumbent business models.

Also, it looks like I will be running a marathon again in 2017. My training has begun and I am just awaiting confirmation into the race.