by Lex Hegt | Jun 6, 2018 | BizTalk Community Blogs via Syndication

Why do we need this feature?

Many customers use their BizTalk platform to exchange EDI (Electronic Data Interchange) messages with their partners. BizTalk Server contains a number of features for EDI like Interchange processing, Batch processing and Trading Partner Management.

From an operational viewpoint, all kinds of features exist in BizTalk Server, and many have found their way into BizTalk360 as well. However, apart from querying for running processes, there is no feature that shows the EDI processes from a statistical viewpoint.

What are the current challenges?

Often, BizTalk Server is considered a black box, and organizations with little knowledge of the product sometimes prefer not to touch it. This behaviour can be quite dangerous, as the (EDI) processes in BizTalk Server might not run as expected. Insight into these processes is therefore required to be able to guarantee that everything is okay.

Information like the number of AS2 messages, or the transaction count by transaction type or by partner id over a certain period, is very useful to determine the well-being of the processes, but cannot be obtained from BizTalk Server itself.

How does BizTalk360 solve this problem?

BizTalk360 tries to open up this black box by providing a platform that makes BizTalk Server environments easier to understand and safer to operate.

For EDI, we provide the following features:

- EDI Dashboards

- EDI Status Reports

- Reporting Manager

- Parties and Agreements

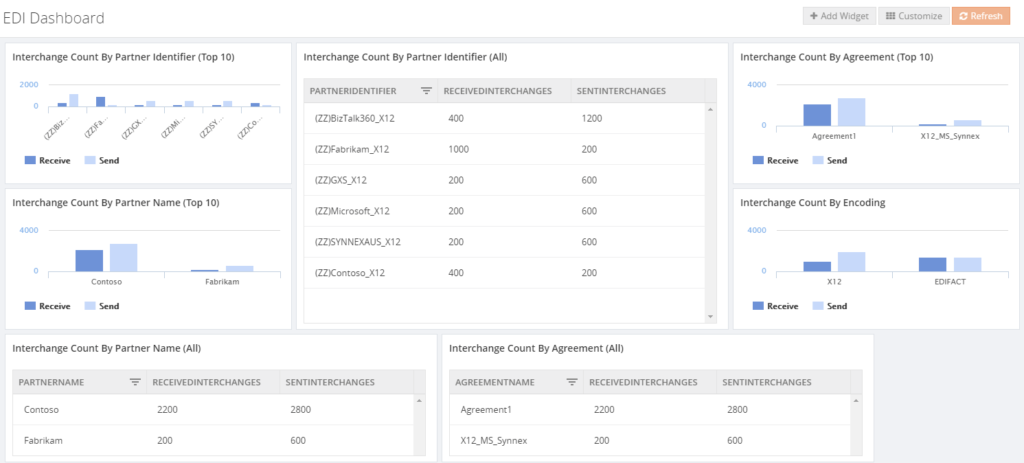

EDI Dashboards

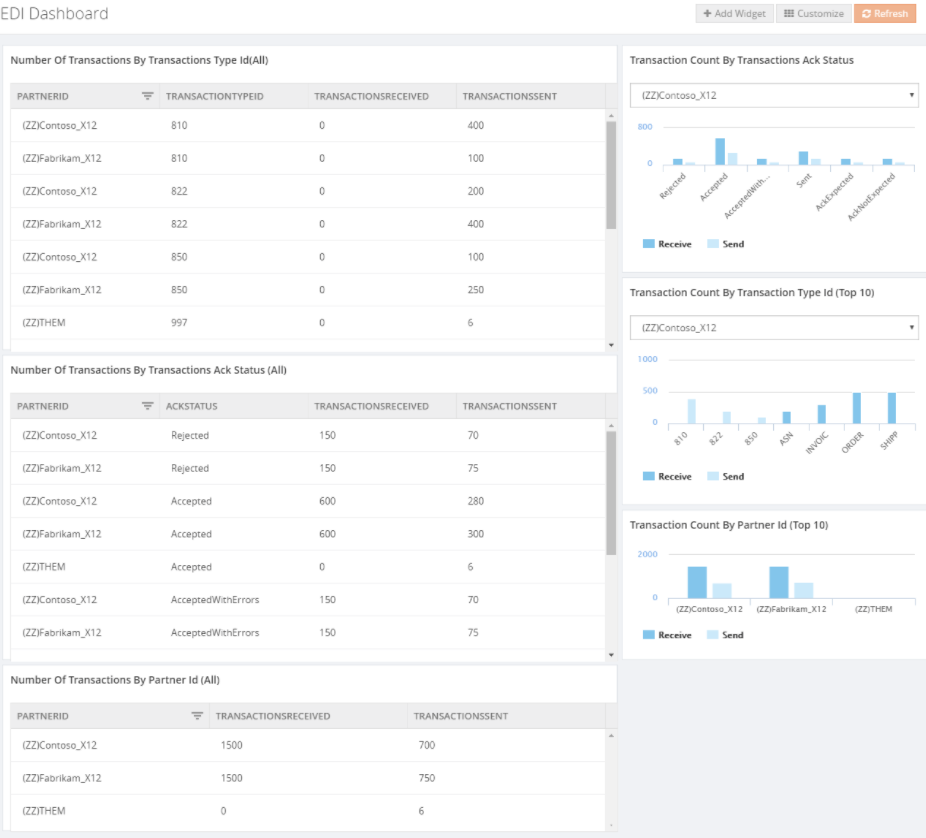

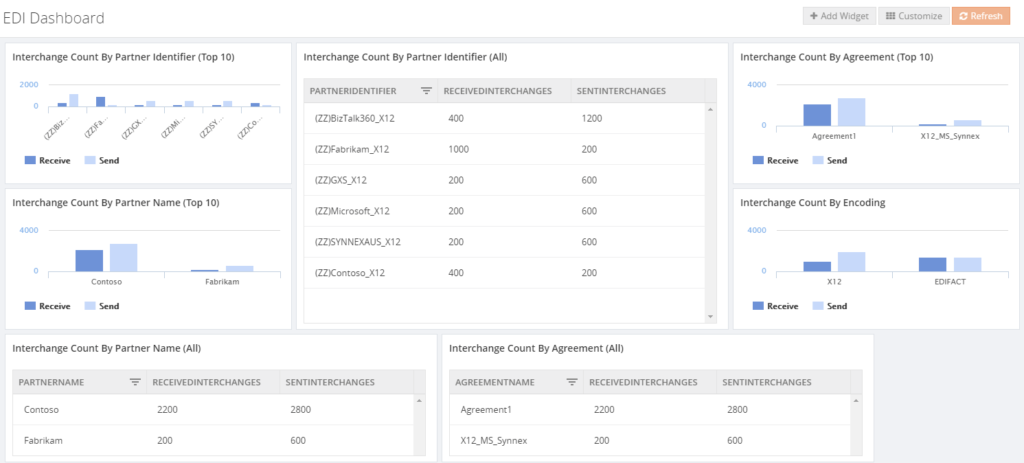

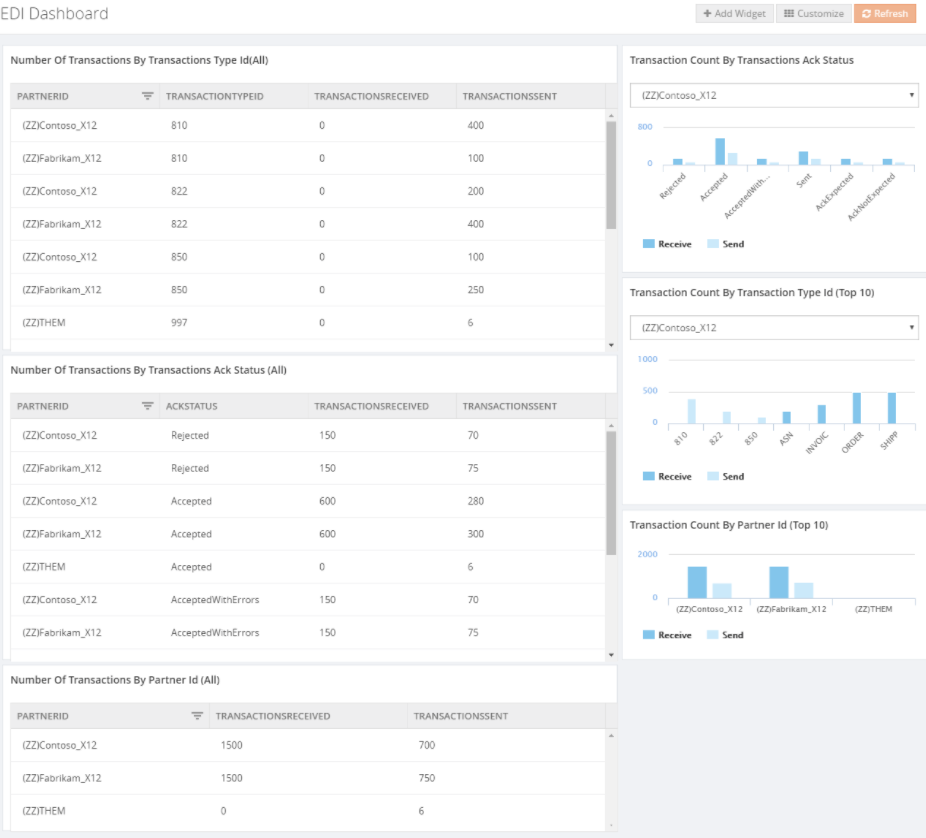

The EDI Dashboard is one of the features that enable BizTalk users to get insight into what's going on in BizTalk. Like the other dashboards in BizTalk360, the EDI Dashboard is customizable and consists of widgets from different categories. For the EDI Dashboard, the following categories exist:

- EDI Interchanges – widgets exist for:

  - Interchange count by Partner Id/Name

  - Agreement

  - Encoding (X12/EDIFACT)

- EDI Transaction Sets – widgets exist for:

  - Transaction count by Partner Id/Name

  - Transaction type (filtered by Partner Id)

  - ACK Status (filtered by Partner Id)

- EDI AS2 – widgets exist for:

  - Number of messages by Partner Id

  - Number of messages by Partner Id and MDN status

  - Number of messages by MDN status
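Most of these widgets come down to counting EDI traffic over a period, grouped by one dimension such as partner id or transaction type. The following is a minimal sketch of that kind of aggregation. The `EdiTransaction` record and its field names are hypothetical and are not BizTalk's tracking schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class EdiTransaction:
    # Hypothetical record shape; real figures would come from BizTalk's EDI tracking data.
    partner_id: str
    transaction_type: str  # e.g. "850" for an X12 purchase order
    received: datetime


def count_by(transactions, key, start, end):
    """Count transactions received in [start, end), grouped by the attribute `key`."""
    return Counter(
        getattr(t, key) for t in transactions if start <= t.received < end
    )


txs = [
    EdiTransaction("ACME", "850", datetime(2018, 6, 1, 9)),
    EdiTransaction("ACME", "810", datetime(2018, 6, 1, 10)),
    EdiTransaction("GLOBEX", "850", datetime(2018, 6, 2, 11)),
    EdiTransaction("GLOBEX", "850", datetime(2018, 6, 9, 12)),  # outside the window below
]
week = (datetime(2018, 6, 1), datetime(2018, 6, 8))
print(count_by(txs, "partner_id", *week))        # Counter({'ACME': 2, 'GLOBEX': 1})
print(count_by(txs, "transaction_type", *week))  # Counter({'850': 2, '810': 1})
```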

The screenshots in this section show some examples of EDI Dashboards you can create in BizTalk360.

EDI Reports

Comparable to the EDI query features in the BizTalk Server Administration Console, BizTalk360 offers a Reporting feature. In addition to the queries you might know from the Administration Console, BizTalk360 also provides a Functional ACK Status report.

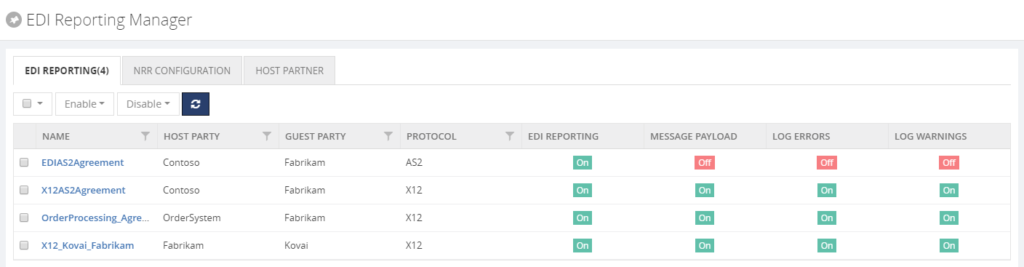

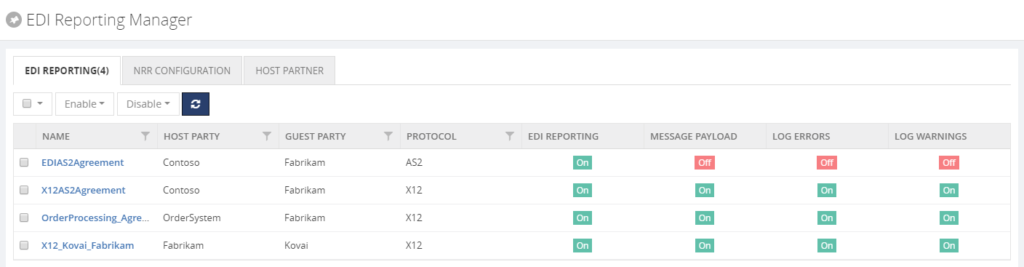

EDI Reporting Manager

For easy configuration of your EDI reports, BizTalk360 provides the EDI Reporting Manager. See below for a screen print of that feature.

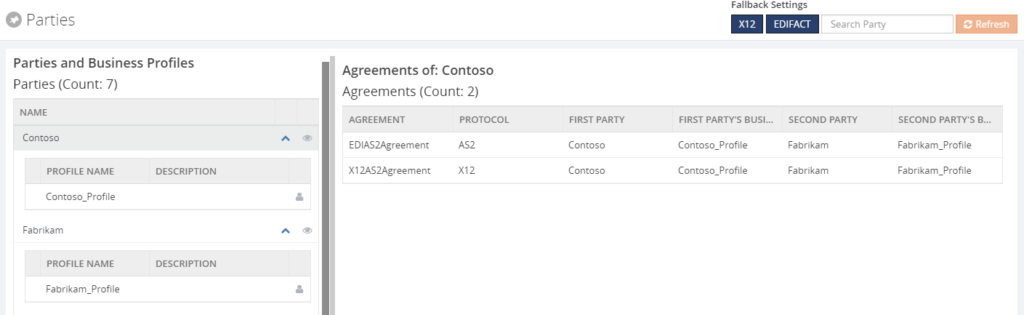

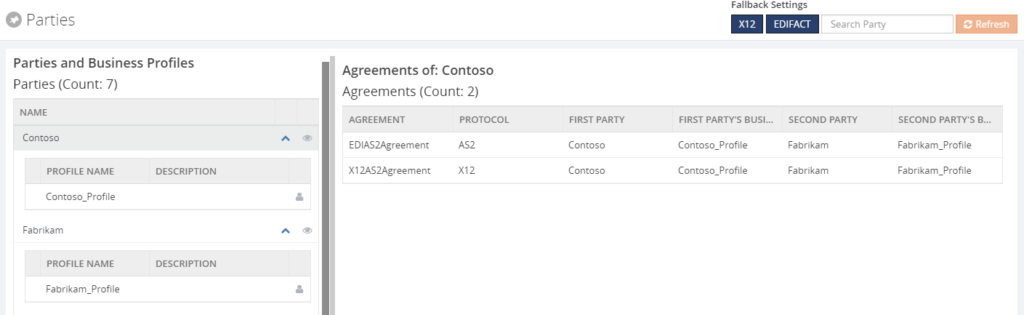

Parties and Agreements

Parties and agreements can also be viewed in BizTalk360. See below for a screen print of that feature.

With all these features, BizTalk360 provides good visibility into the EDI processes.

Author: Lex Hegt

Lex Hegt has worked in the IT sector for more than 25 years, mainly in roles as developer and administrator. He has worked with BizTalk since BizTalk Server 2004. Currently he is a Technical Lead at BizTalk360.

by Sriram Hariharan | Jun 5, 2018 | BizTalk Community Blogs via Syndication

Missed Day 1 at INTEGRATE 2018? Here's the recap of the Day 1 events.

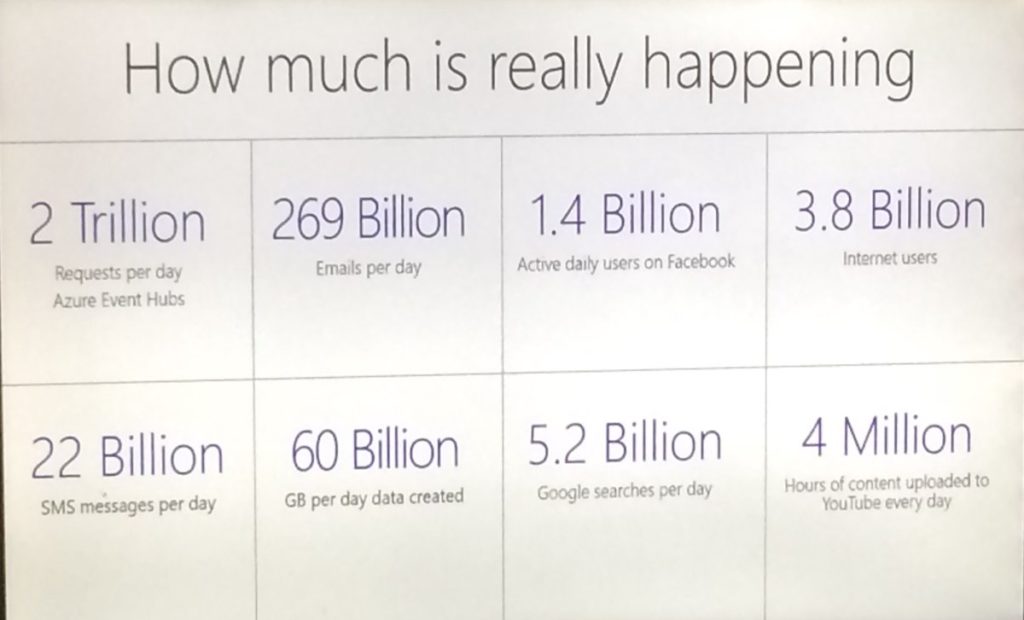

0830 – An early start on Day 2. The first session considered using Logic Apps for system-to-system and application-to-application integration.

Logic Apps finds its application in multi-billion-dollar transactions that happen through Enterprise Application Integration platforms.

Most of these business cases run on BizTalk Server. Logic Apps and other Azure services can help modernise these platforms.

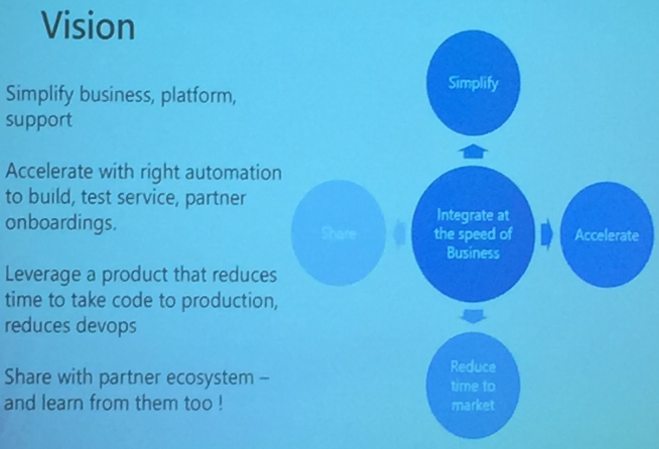

Microsoft Vision

We do integration at the speed of the business. We want to simplify the process.

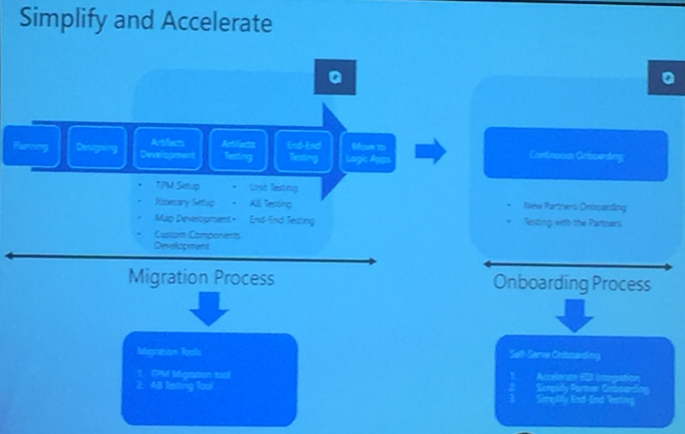

Microsoft is building tooling to automatically onboard partners and to enable migration from BizTalk Server to Logic Apps, embracing change by taking the lead.

They are looking at reducing the DevOps time from Code -> Development -> Production.

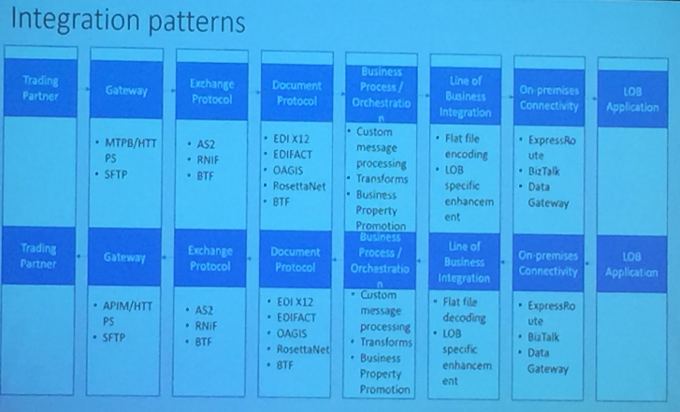

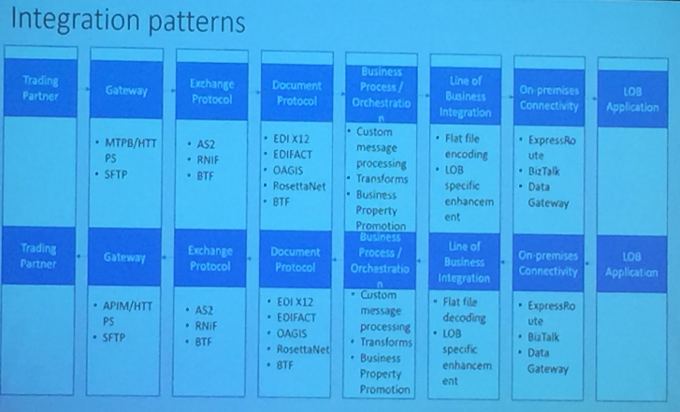

Microsoft is willing to share what they build with partners and the community. A lot of integration patterns are being built.

Microsoft is working on a strategy to migrate from BizTalk Server to Logic Apps. How do they plan to do this?

- Use On-Premise Gateway

- Publish / Subscribe Model (work-in-progress)

The idea is to use integration workflows that publish to queues, with subscribers reading from the queues and delivering to SAP or SFTP, depending on the location.
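The publish/subscribe idea can be sketched with an in-memory queue standing in for a real broker such as Service Bus. In this minimal sketch, the `destination` property and the `sap`/`sftp` handlers are hypothetical placeholders for real connectors. The publishing workflow only knows the queue, and a subscriber drains it and dispatches each message by destination.

```python
import queue


def publish(q, body, destination):
    # The publishing workflow only knows the queue, not the final system.
    q.put({"body": body, "destination": destination})


def run_subscriber(q, handlers):
    """Drain the queue and hand each message to the handler for its destination."""
    delivered = []
    while True:
        try:
            msg = q.get_nowait()
        except queue.Empty:
            break
        handler = handlers[msg["destination"]]
        delivered.append(handler(msg["body"]))
    return delivered


# Hypothetical stand-ins for SAP and SFTP connectors.
handlers = {
    "sap": lambda body: f"SAP <- {body}",
    "sftp": lambda body: f"SFTP <- {body}",
}

outbox = queue.Queue()
publish(outbox, "order-1", "sap")
publish(outbox, "invoice-7", "sftp")
print(run_subscriber(outbox, handlers))  # ['SAP <- order-1', 'SFTP <- invoice-7']
```

Adding a new destination then means registering another subscriber handler, without changing the publishing workflow.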

They also discussed the categorization of Logic Apps under the following verticals:

- Policy / Route

- Processing

- LoB (Line of Business) Adapters

The suggestion is to keep Logic Apps simple and self-contained.

As more protocols are added, the policies will be updated. Policies also help decouple the platform from partner onboarding.

- APIM policies make it simple to drive the itinerary

- Policies allow for dynamic routing of messages

- Which properties need to be promoted can be derived from metadata

This means the same Logic App can be used for different partners and purposes by using routing and metadata.
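The following sketch shows how metadata, rather than separate workflows, can drive both the route and the promoted properties. The `PARTNER_METADATA` table is a hypothetical simplification and not an APIM or Integration Account format.

```python
import json

# Hypothetical per-partner metadata; in the talk this role is played by APIM policies/metadata.
PARTNER_METADATA = {
    "contoso": {"route": "sap", "promote": ["orderId", "amount"]},
    "fabrikam": {"route": "sftp", "promote": ["orderId"]},
}


def route_message(partner_id, payload):
    """Return (route, promoted_properties) for a JSON payload, driven only by metadata."""
    meta = PARTNER_METADATA[partner_id]
    doc = json.loads(payload)
    promoted = {name: doc[name] for name in meta["promote"] if name in doc}
    return meta["route"], promoted


payload = '{"orderId": "PO-42", "amount": 120.5, "note": "rush"}'
print(route_message("contoso", payload))   # ('sap', {'orderId': 'PO-42', 'amount': 120.5})
print(route_message("fabrikam", payload))  # ('sftp', {'orderId': 'PO-42'})
```

With this design, onboarding another partner is a metadata change rather than a new Logic App.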

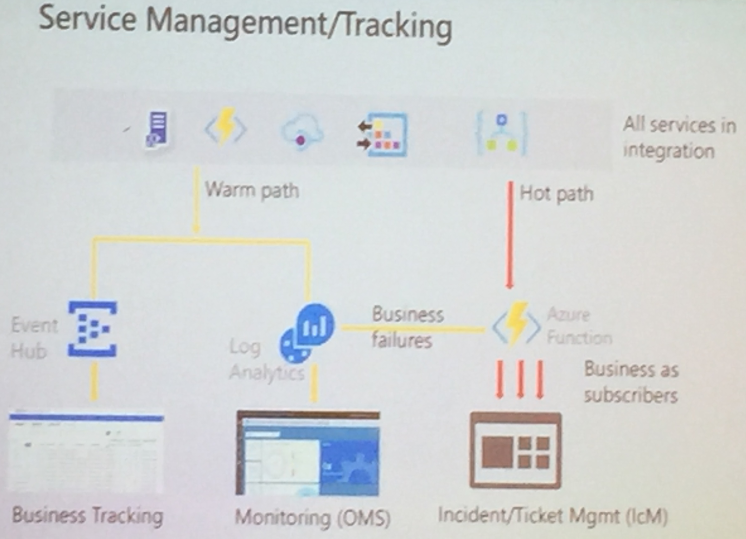

Exception Handling

- Enumerate over failures in run scope, extract properties relevant to business

- Forward to another logic app

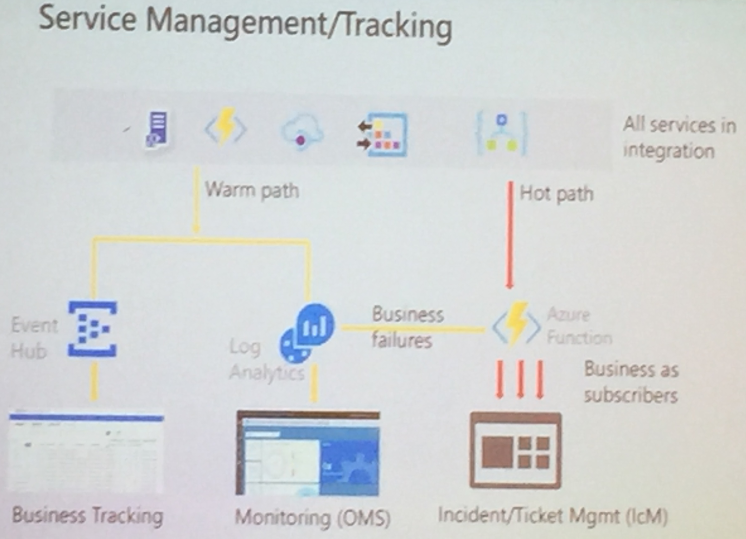

They are suitable in Warm Path and Hot Path Monitoring.
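This minimal Python sketch illustrates the "enumerate failures, extract business properties, forward" step. The input is loosely modeled on the array of action results that the Logic Apps `result()` expression returns for a scope. The exact fields used here, the action names and the `send` callback (standing in for a call to another Logic App) are simplified assumptions.

```python
def extract_failures(scope_results, business_keys):
    """Keep only failed actions and pull out the properties the business cares about."""
    failures = []
    for action in scope_results:
        if action.get("status") != "Failed":
            continue
        outputs = action.get("outputs", {})
        failures.append({
            "action": action["name"],
            **{k: outputs[k] for k in business_keys if k in outputs},
        })
    return failures


def forward(failures, send):
    # 'send' stands in for invoking another (error-handling) Logic App, e.g. over HTTP.
    for failure in failures:
        send(failure)


scope_results = [  # hypothetical, simplified action results of one scope
    {"name": "Transform_Order", "status": "Succeeded", "outputs": {"orderId": "PO-1"}},
    {"name": "Send_To_SAP", "status": "Failed", "outputs": {"orderId": "PO-1", "error": "timeout"}},
]
sent = []
forward(extract_failures(scope_results, ["orderId", "error"]), sent.append)
print(sent)  # [{'action': 'Send_To_SAP', 'orderId': 'PO-1', 'error': 'timeout'}]
```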

Future Considerations

The Integration Account is to be used for:

- BizTalk Server Partner configurations

- Logic Apps for Partners

Microsoft has a migration strategy ready for this.

Logic Apps are being made suitable for isolated businesses, with offerings that meet the strongest SLAs.

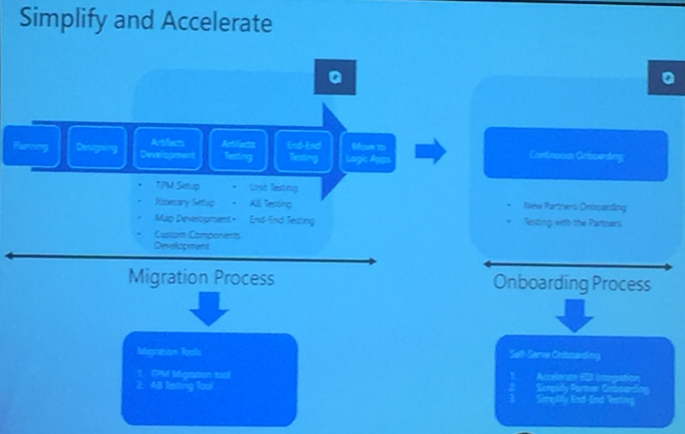

Amit demonstrated the following:

- TPM Management tool

  - helps self-service onboarding

  - accelerates EDI integration

- A/B testing tool

Basically, Microsoft is aiming to simplify and accelerate the migration and onboarding processes.

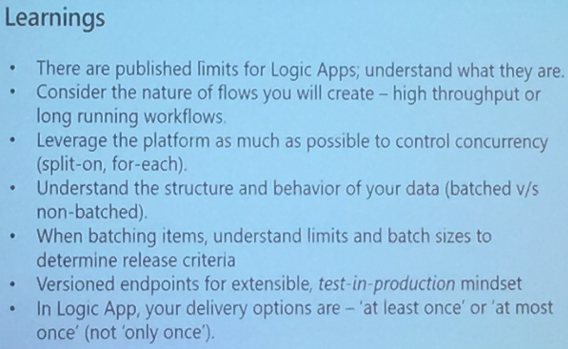

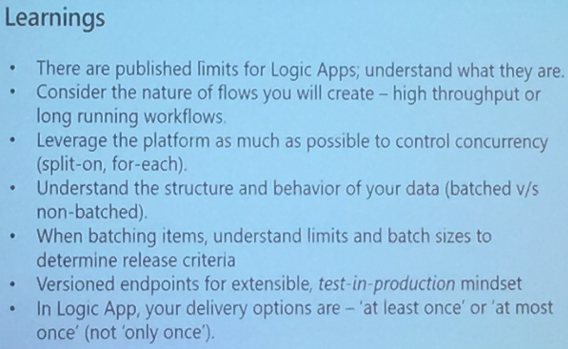

Finally, they concluded the session with lessons learned from migrating to Logic Apps.

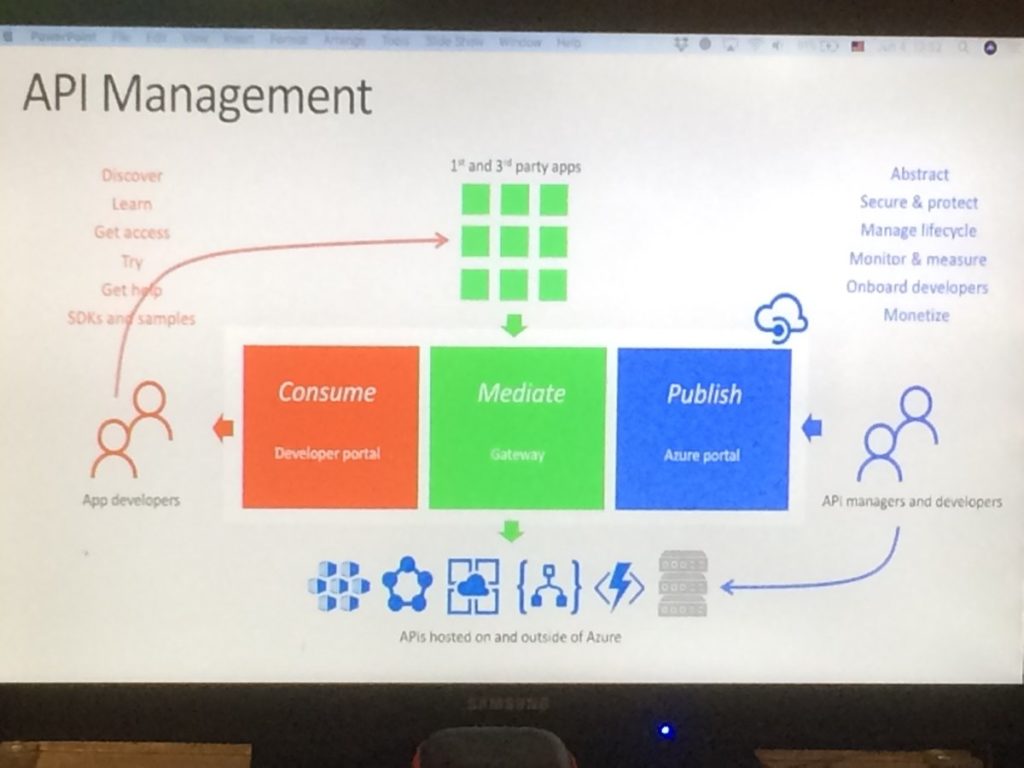

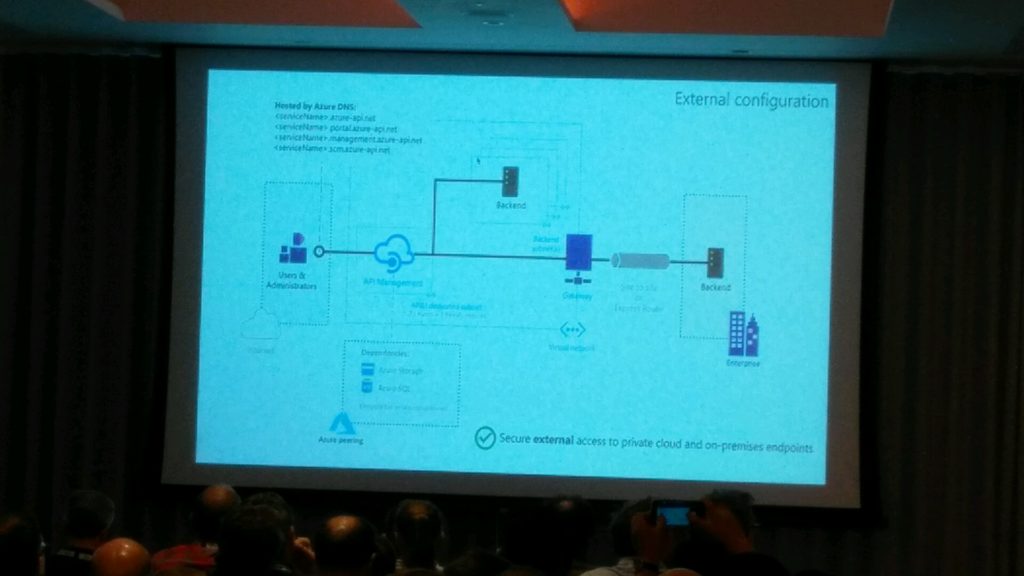

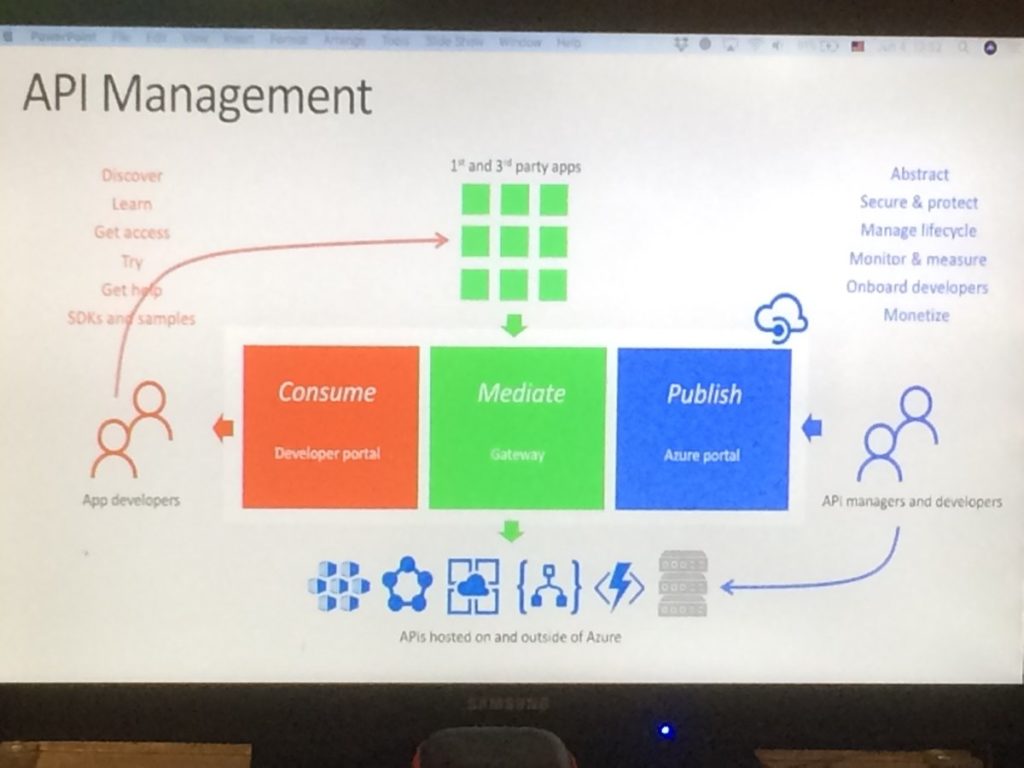

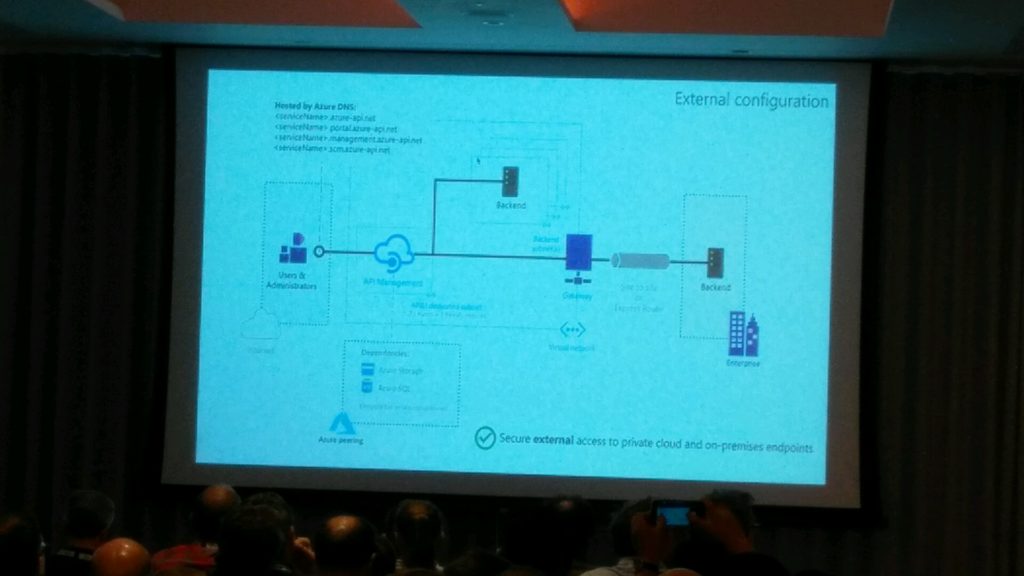

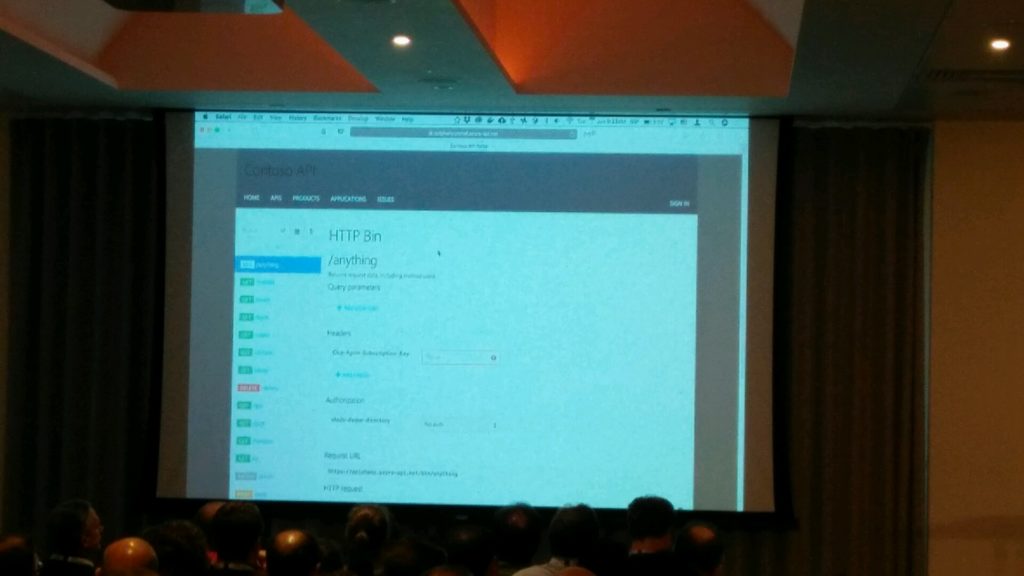

0915 — API Management deep dive

After the first session of Day 2, Vladimir Vinogradsky took the stage to talk about API Management, which is one of the first Azure services that developers get introduced to.

Vladimir started off with the list of features that Azure API Management offers and how it helps developers to consume, mediate and publish their web APIs. He explained how you can open the Azure portal and look at the documentation of your APIs, which is powered by Swagger, without writing a single line of code.

He also went into detail on authentication and authorization, and explained how Azure API Management helps you with various authentication mechanisms, from a simple username/password combination to Azure AD authentication. He also mentioned how easy it is to use third-party providers such as Google and Facebook with Azure API Management.

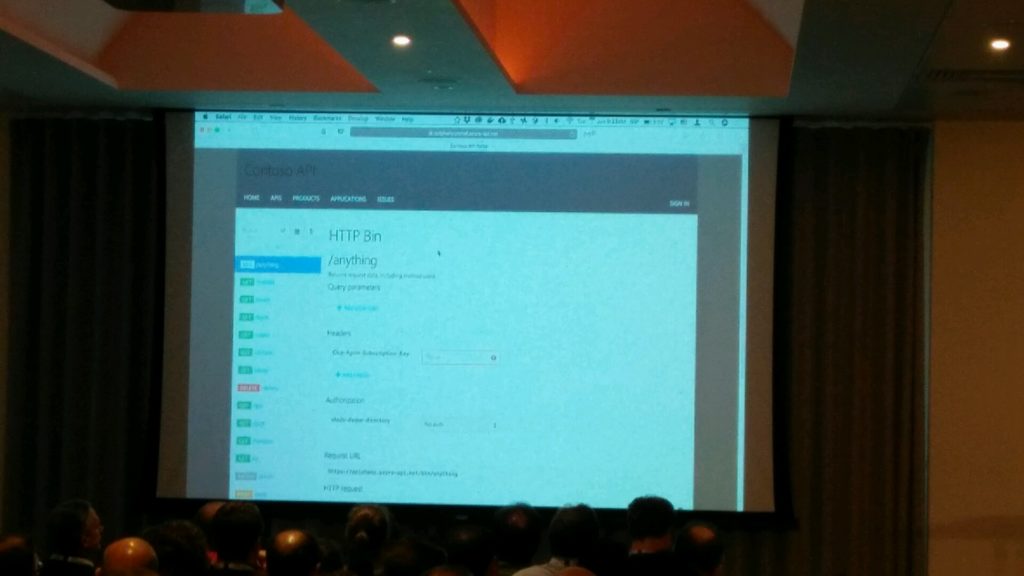

Vladimir then went into detail on the most frequent questions from API developers and how Azure API Management can solve them. He explained this with some live demos, which were well received by the audience.

1000 — Logic Apps Deep Dive

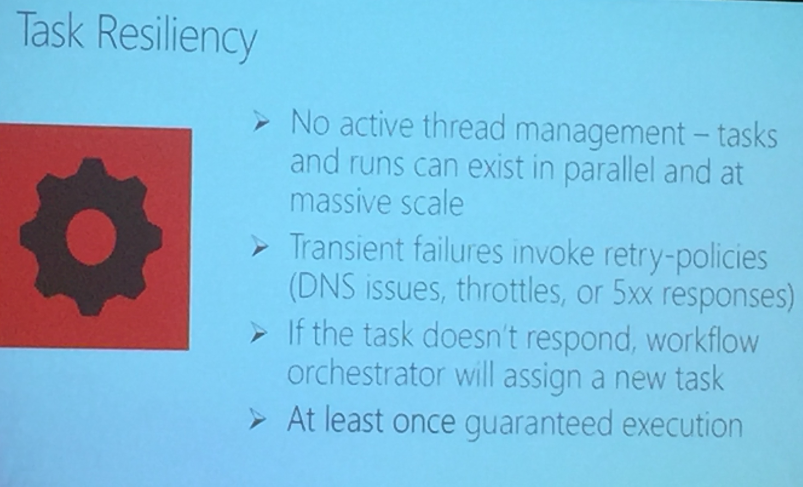

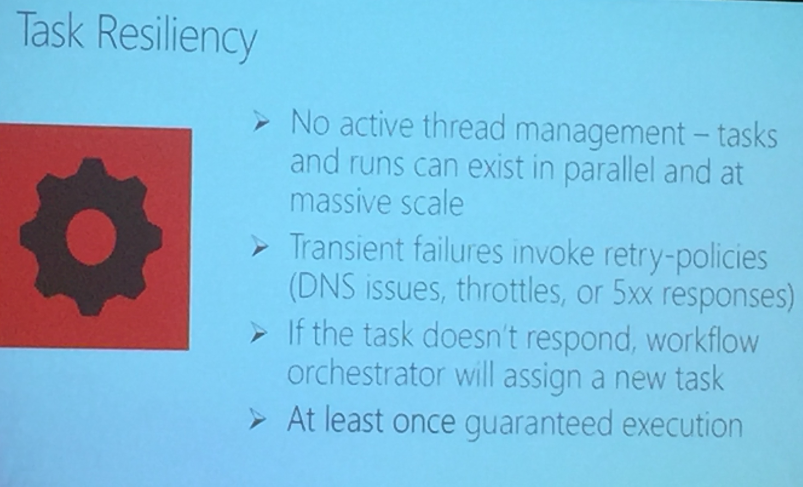

Kevin started by explaining task resiliency in Logic Apps.

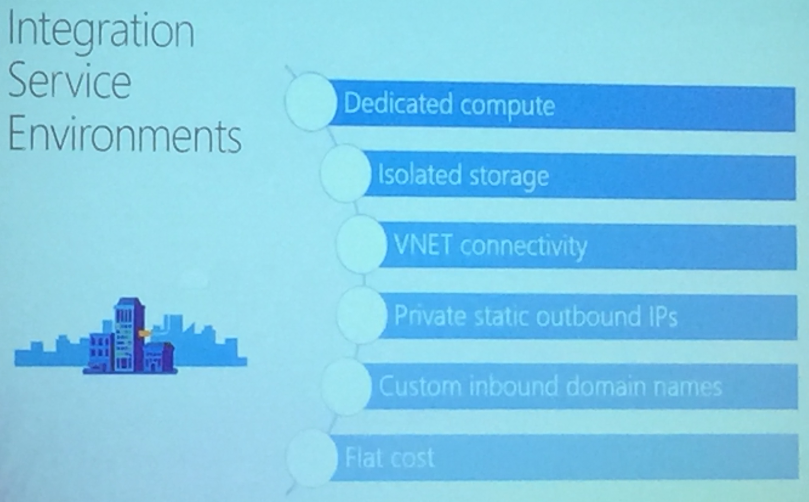

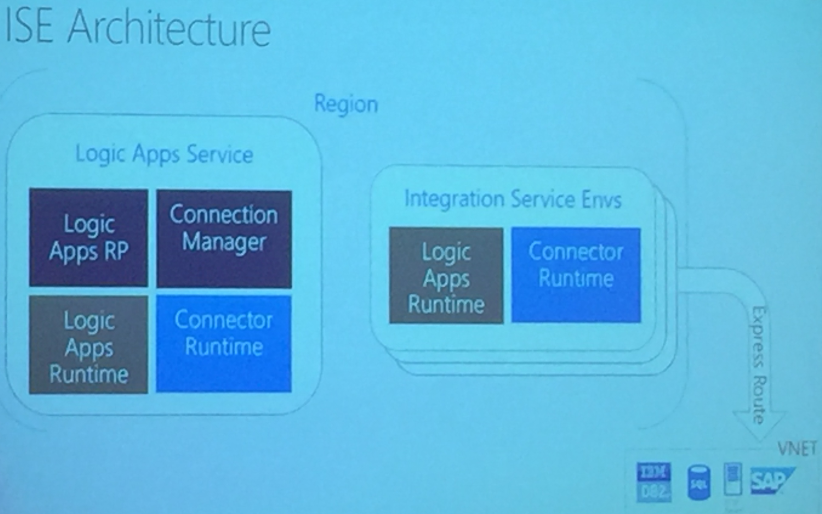

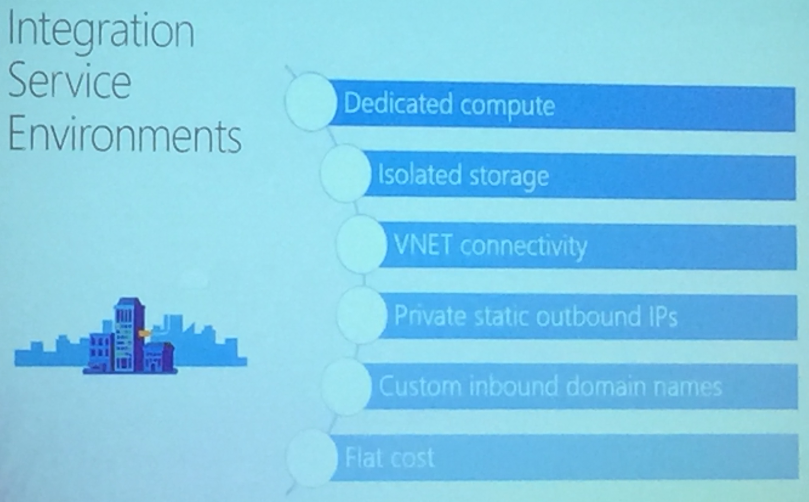

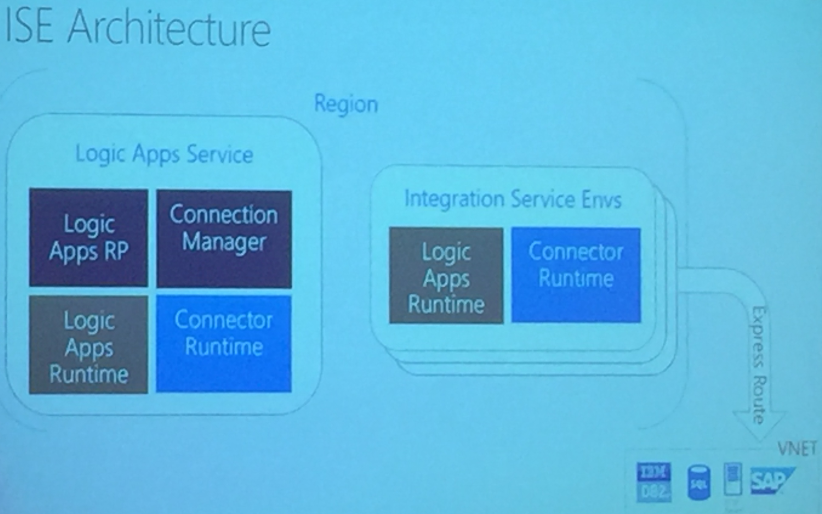

The highlight of the session was the demonstration of Integration Service Environments (ISE) and their architecture, but ISE is not yet even in private preview, which means we will need to wait for quite some time.

Private static IPs for Logic Apps come with ISE.

The deployment model of Logic Apps was also discussed:

Base unit:

- 50M action executions / month

- 1 standard integration account

- 1 enterprise connector (includes unlimited connections)

- VNET connectivity

Each additional processing unit:

- Additional 50M executions / month
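Using the figures quoted in the session (50M action executions per month in the base unit, plus 50M per additional processing unit), the number of extra units for a given monthly volume is simple arithmetic. This sketch assumes capacity scales linearly as described and ignores any other limits or pricing details.

```python
BASE_EXECUTIONS = 50_000_000      # action executions per month in the base unit
PER_ADDITIONAL_UNIT = 50_000_000  # extra executions per month per additional unit


def additional_units_needed(monthly_executions):
    """Extra processing units needed on top of the base unit (integer ceiling)."""
    extra = monthly_executions - BASE_EXECUTIONS
    if extra <= 0:
        return 0
    return -(-extra // PER_ADDITIONAL_UNIT)


print(additional_units_needed(30_000_000))   # 0
print(additional_units_needed(120_000_000))  # 2 (base 50M + 2 x 50M = 150M capacity)
```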

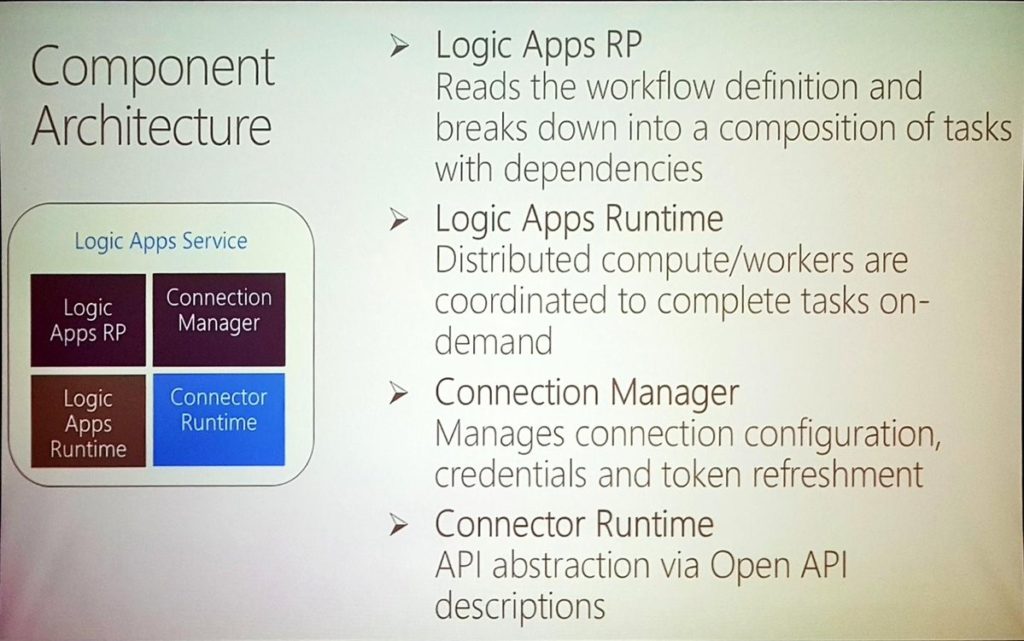

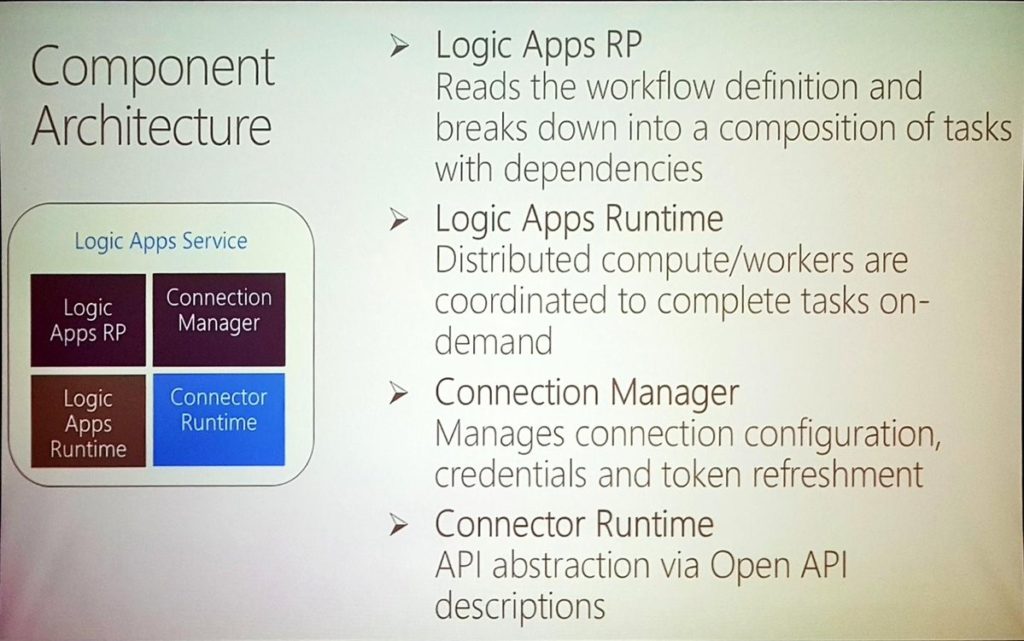

Logic Apps now has more than 200 connectors. The component architecture of Logic Apps was also discussed.

1115 — Logic Apps Patterns & Best Practices

Kevin started this session by introducing the following patterns:

Workflow patterns:

- Patterns to implement error handling in workflows

- Retry policy – turn retries on or off, or define custom retries at a custom or fixed interval, as the business requires

- Run After patterns help in running actions after a failure or time-out. Limits can also be set; for example, you can stop a Logic App execution after 30 seconds

- Patterns for terminating the execution of Logic Apps and the associated run actions

- Scopes hold the final status of all actions in that scope

- Implementation of Try-Catch-Finally in Logic Apps

- Concurrency control for:

  - Runs

    - Instances are created concurrently

    - Singleton trigger executions (a parallelism level of 1)

    - Degrees of parallel execution can be defined

  - Parallel actions

    - Explicit parallelization

    - Join with Run After patterns

  - For Each loops

  - Do Until loops

- Patterns for scheduling executions; example workloads are clean-up jobs

- Run Once jobs; example workloads are time-based jobs, i.e. choosing when you want to fire the action
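Try-Catch-Finally in Logic Apps is typically built from scopes chained with Run After conditions. The following Python sketch imitates that idea with a tiny sequential runner. It is a simplified model, not the Logic Apps engine: it never produces `TimedOut`, and the action names are hypothetical. The Catch step accepts a failed or timed-out Try, and the Finally step accepts any status of Catch, including `Skipped`.

```python
# Statuses an action can end in; a simplified subset of what Logic Apps reports.
ALL_STATUSES = {"Succeeded", "Failed", "Skipped", "TimedOut"}


def run_workflow(actions):
    """Run actions in order, honouring a simplified 'runAfter' condition.

    Each action is (name, fn, run_after). run_after is None for the first action,
    otherwise (predecessor_name, accepted_statuses). An action whose predecessor
    ended in a status that is not accepted is marked 'Skipped' instead of running.
    """
    status = {}
    for name, fn, run_after in actions:
        if run_after is not None:
            predecessor, accepted = run_after
            if status[predecessor] not in accepted:
                status[name] = "Skipped"
                continue
        try:
            fn()
            status[name] = "Succeeded"
        except Exception:
            status[name] = "Failed"
    return status


def call_sap():
    raise RuntimeError("SAP endpoint unavailable")  # simulate a failing Try scope


log = []
workflow = [
    ("Try", call_sap, None),
    ("Catch", lambda: log.append("notify ops"), ("Try", {"Failed", "TimedOut"})),
    ("Finally", lambda: log.append("clean up"), ("Catch", ALL_STATUSES)),
]
print(run_workflow(workflow))  # {'Try': 'Failed', 'Catch': 'Succeeded', 'Finally': 'Succeeded'}
print(log)                     # ['notify ops', 'clean up']
```

When Try succeeds, Catch is skipped, and Finally still runs because `Skipped` is among its accepted statuses.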

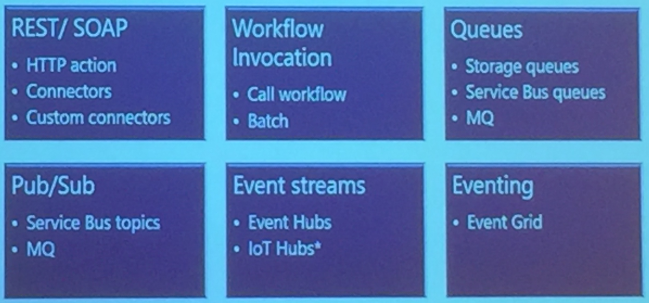

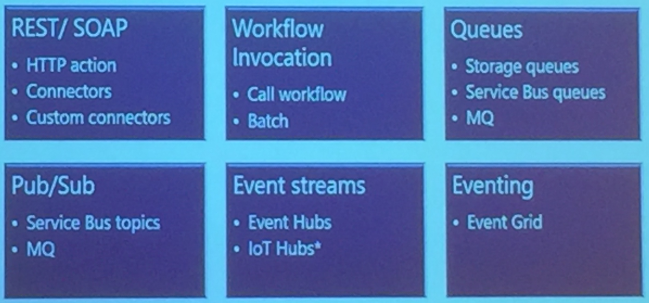

Logic Apps supports messaging protocols such as:

- REST/SOAP

- Workflow Invocation

- Queues

- Pub/Sub

- Event Streams

- Eventing

These provide workflow invocation and componentization of Logic Apps

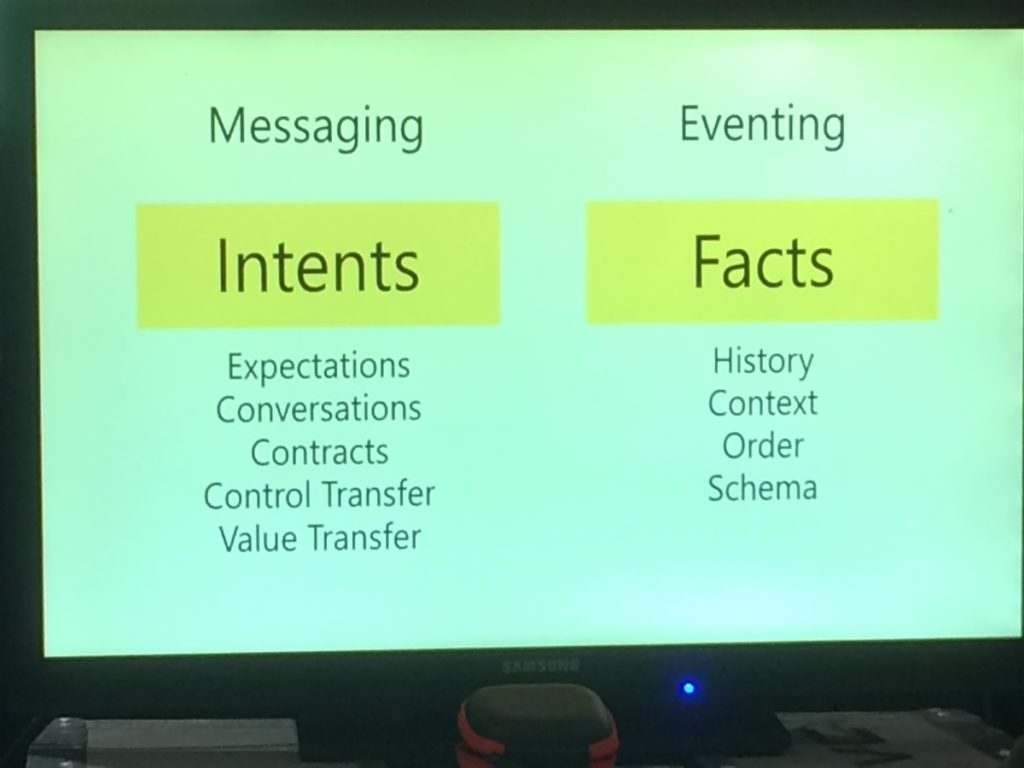

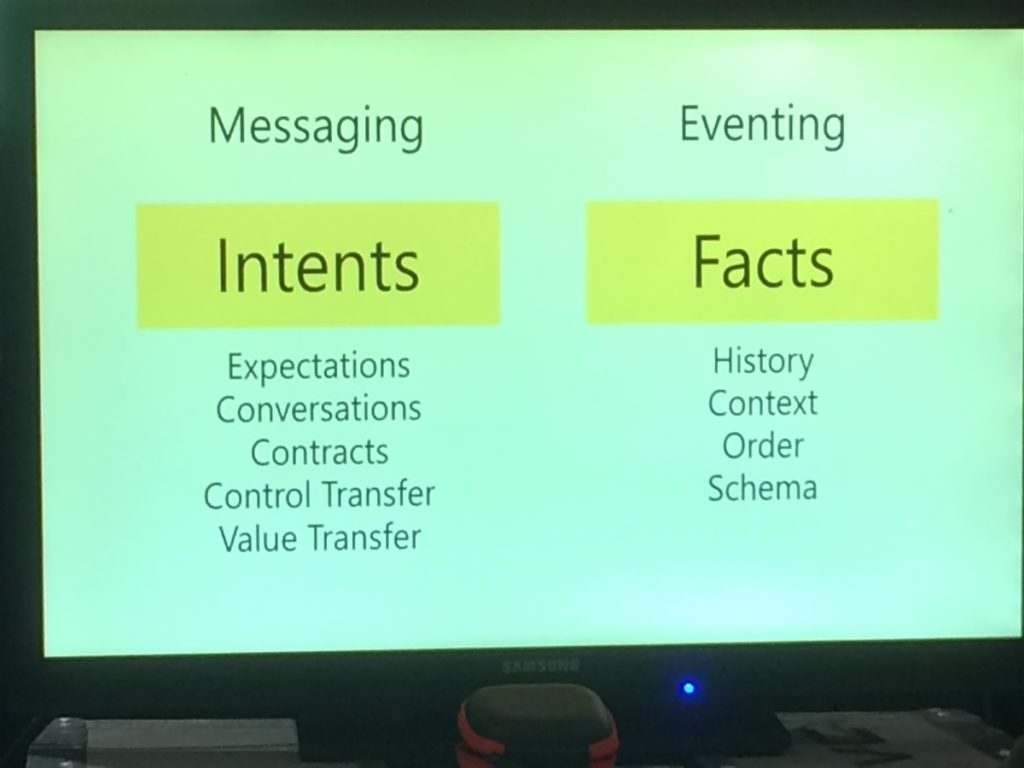

Messaging Patterns

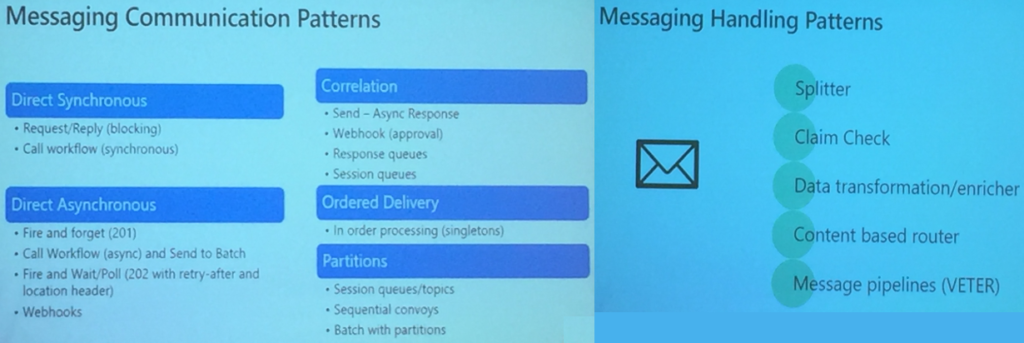

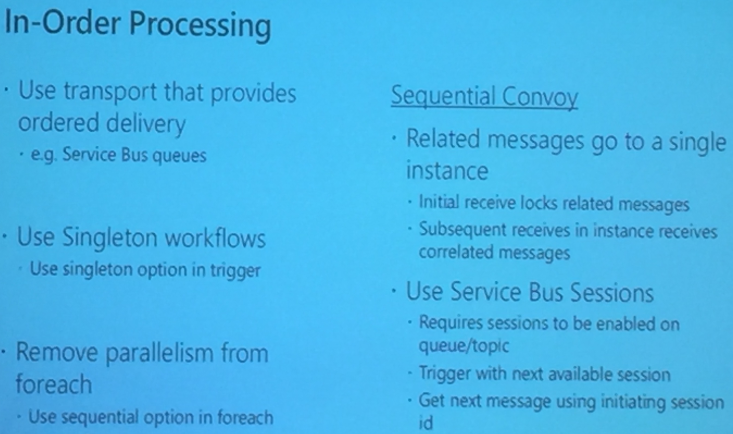

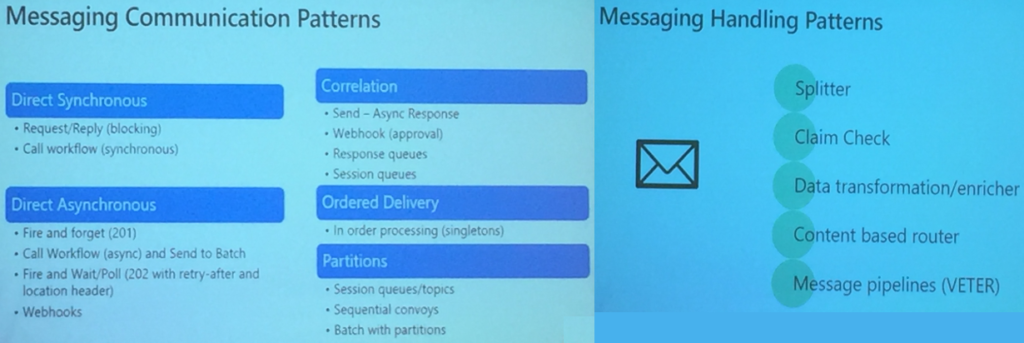

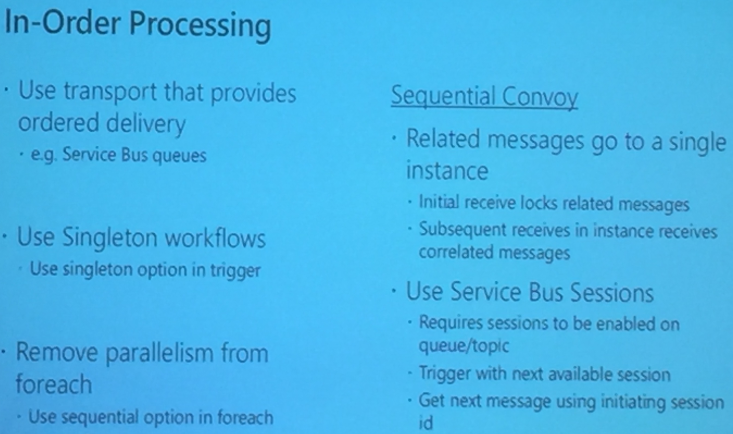

Kevin then discussed messaging patterns, categorized as:

- Messaging Communication Patterns

- Messaging Handling Patterns

Derek Li provided some best practices:

- Working with variables

  - Variables in Logic Apps are global in scope

  - Arrays are heterogeneous

  - Care needs to be taken when using variables in a parallel for-each loop

  - A sequential for-each comes in handy when order matters
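Here is a Python analogy for why shared variables need care in a parallel for-each. A thread pool stands in for the parallel loop, and a module-level list stands in for a Logic Apps array variable. This is an assumption-laden illustration of the ordering hazard only; the Logic Apps runtime works differently. Shared counters that are read and then written back are also at risk of lost updates.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def process(item):
    # Simulate actions of varying duration inside a for-each iteration.
    time.sleep(random.uniform(0, 0.01))
    return item * 10


items = list(range(8))

# Sequential for-each: appending to a shared "variable" keeps the input order.
sequential = []
for item in items:
    sequential.append(process(item))

# Parallel for-each that appends to a shared variable: the order depends on
# which iteration finishes first, so it can differ from run to run.
parallel_shared = []


def append_result(item):
    parallel_shared.append(process(item))


with ThreadPoolExecutor(max_workers=4) as pool:
    list(pool.map(append_result, items))

# Safer parallel variant: return results instead of mutating a shared variable;
# Executor.map yields results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    ordered = list(pool.map(process, items))

print(sequential)  # [0, 10, 20, 30, 40, 50, 60, 70]
print(sorted(parallel_shared) == sequential, ordered == sequential)  # True True
```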

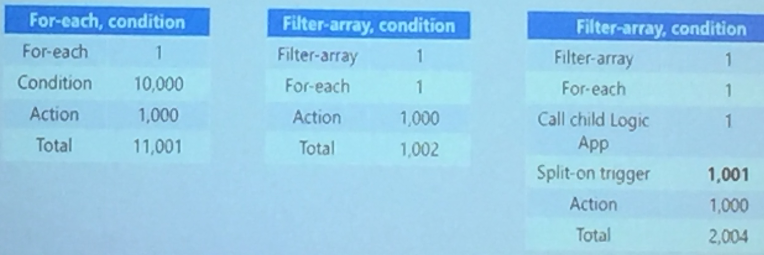

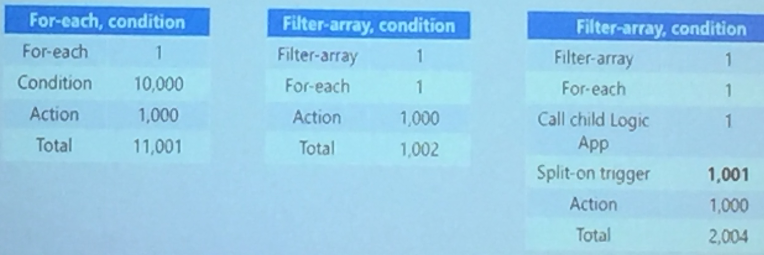

Derek Li gave an impressive demo of how to efficiently use collections and parallel execution to process messages with arrays.

He also compared different ways of processing an array in Logic Apps, which led to some interesting conclusions.

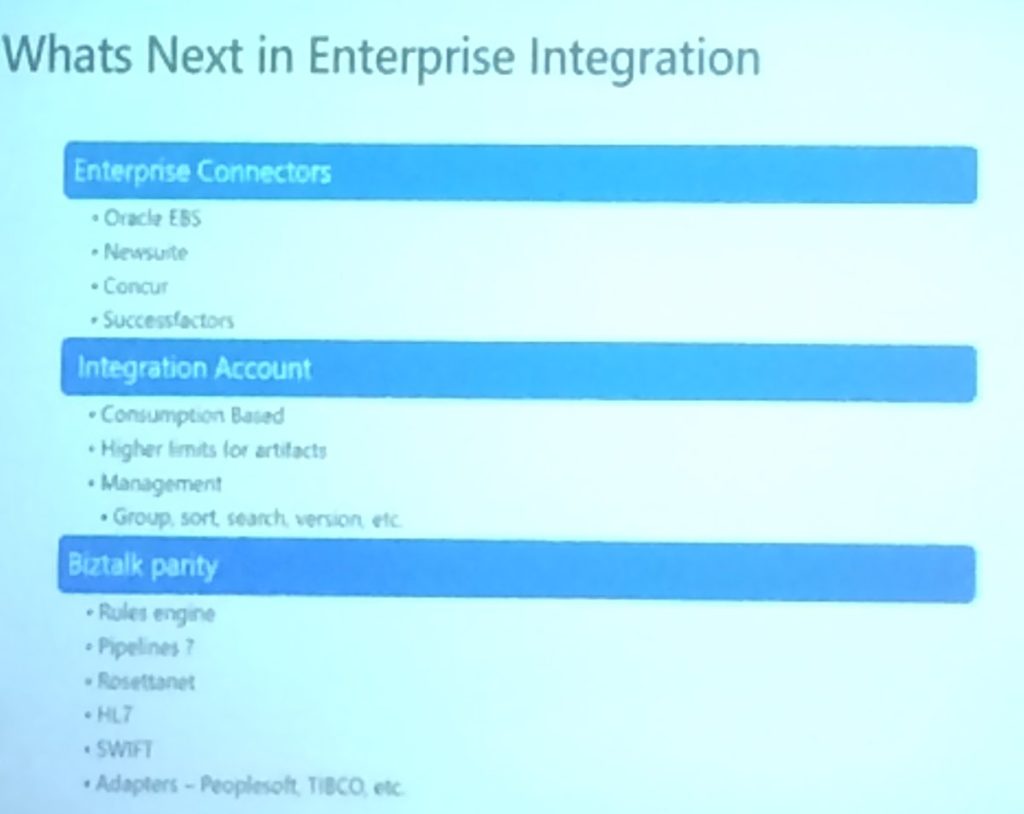

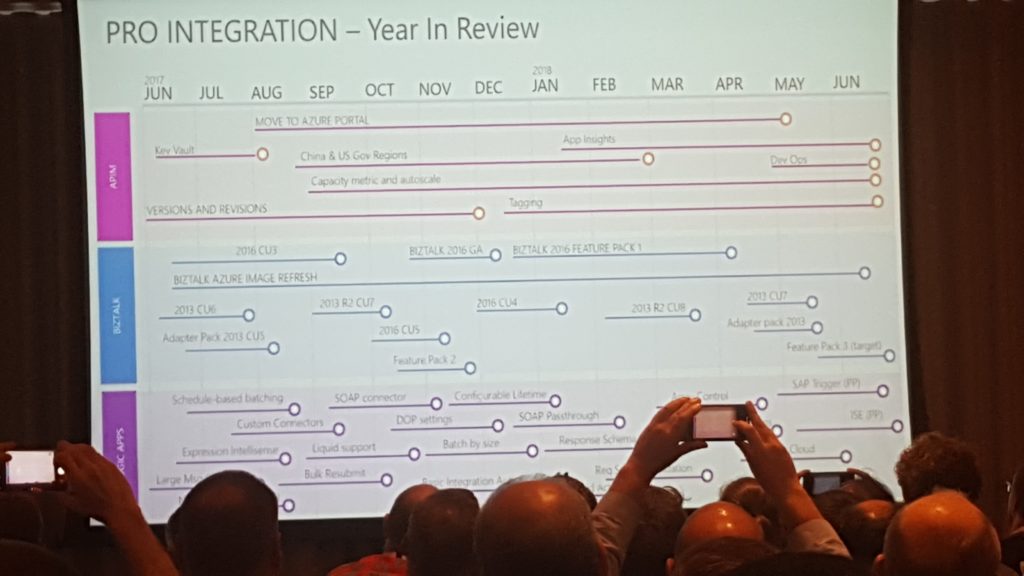

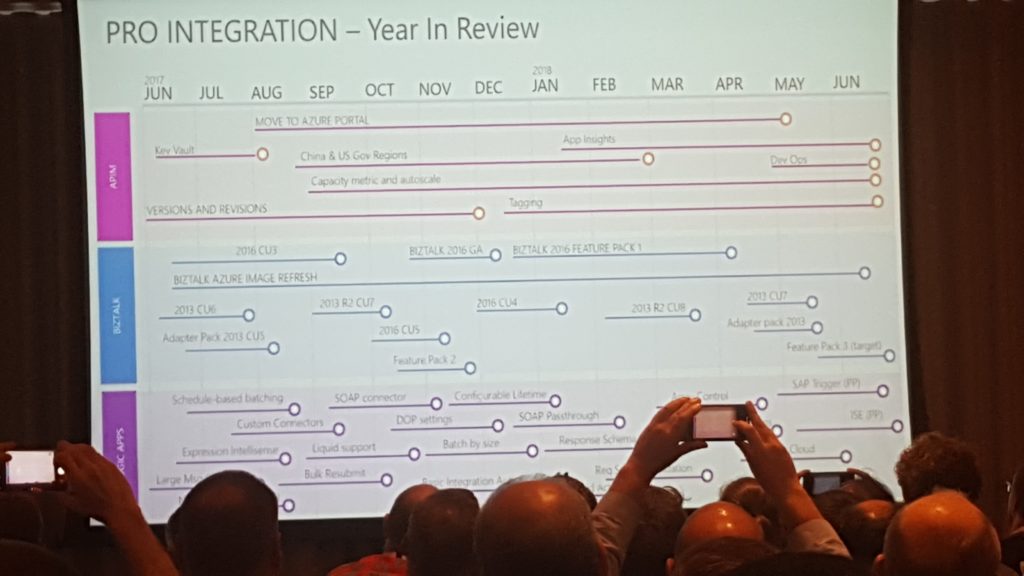

1200 — Microsoft Integration Roadmap

The last session before the lunch break was presented by Jon Fancey and Matt Farmer. The presentation was short and to the point. The audience got a view of the past year and of Microsoft's plans for the future of integration.

First, we got a quick glimpse of everything that had been released as part of Pro Integration in the past year. To emphasise that Microsoft is working hard in the integration space, Jon and Matt announced that Microsoft has been recognised by Gartner as a leader in enterprise integration in 2018.

Next came the interesting stuff: what does Microsoft have planned for Logic Apps?

- Smart Designer – as seen in the other demos from Jeff and Derek, they want to make the designer more user-friendly. They are looking into improved hints, suggestions and recommendations that actually apply to what you are using inside Azure.

- Dedicated and connected – for all the companies that care about the security of their integrations, Logic Apps will be available in a VNET.

- Obfuscation – another feature that will make Logic Apps more secure within your organisation. Obfuscation will allow you to specify which users can see the output of a Logic App run.

- On-Prem – Logic Apps are coming to Azure Stack.

- More: OAuth request trigger, China Cloud, Managed Service Identity, Testability, Key Vault and custom domain names for Logic Apps

Lastly, Jon and Matt revealed that they want to bring every key Azure integration service, such as Logic Apps, Event Grid and Azure Functions, under one umbrella called Azure Integration Services.

The aim is to create a one-stop platform that supplies all the tools needed to bring your integrations to production effortlessly, in minimal time and with maximum results: a platform that allows you to run your integrations wherever and however you need, serverless or on-premises. We were told that Microsoft will provide guidance and templates across all the regions.

They finished by acknowledging that there is still a lot to do and that many systems cannot be connected yet, but they strive to get everything into the platform, including BizTalk, which they still see as part of the integration picture.

1330 – After lunch, Duncan Barker, Business Development Manager at BizTalk360, thanked all the partners for their continued support. Then Saravana took the stage to demonstrate the capabilities of BizTalk360 and ServiceBus360.

The highlight of this session: ServiceBus360 will be rebranded as Serverless360. For more updates, please read this blog post.

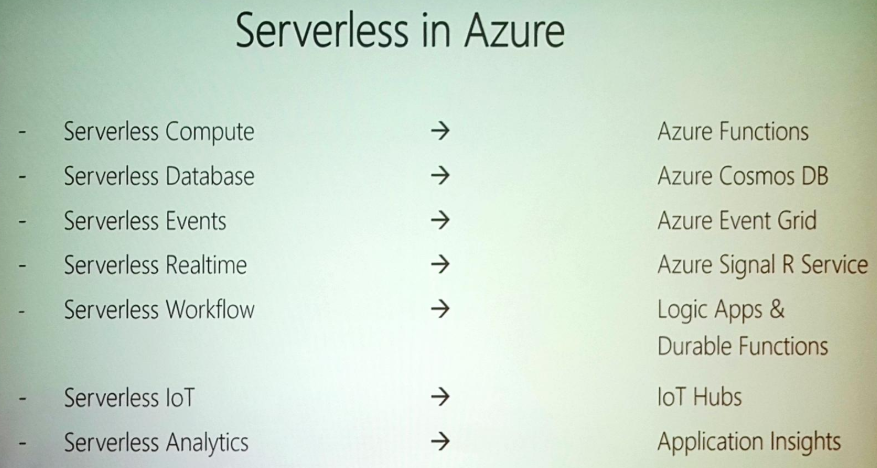

1415 – Serverless Messaging with Microsoft Azure

Steef started by introducing the concept of serverless through its evolution from VM -> Containers -> IaaS -> PaaS -> Serverless.

Serverless reduces time to market, is billed at a micro-level unit, and reduces DevOps effort.

Messaging in serverless is like "Down, Stay, Come", i.e. you retrieve messages when you want and have good control over message processing.

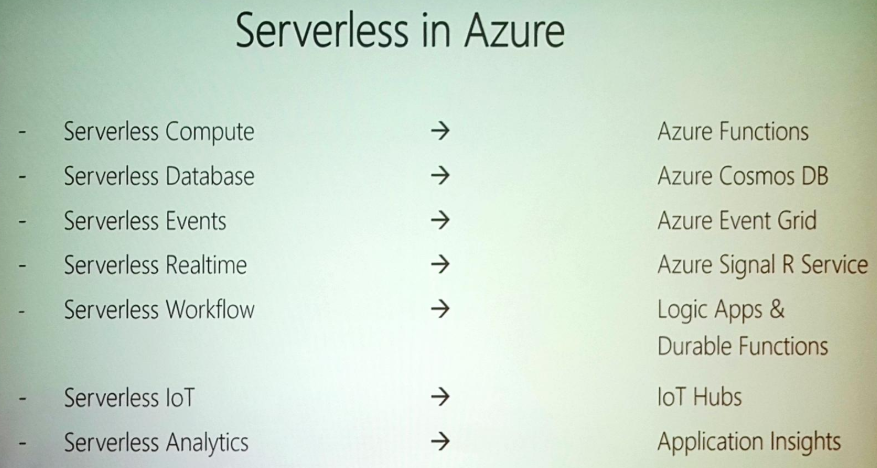

Steef also showed how serverless is categorized in Azure.

There are various applications for messaging in serverless:

- Financial Services

- Order Processing

- Logging / Telemetry

- Connected Devices

- Notifications / Event Driven Systems

The Azure serverless components that support messaging are Service Bus, Event Hubs, Event Grid and Storage Queues.

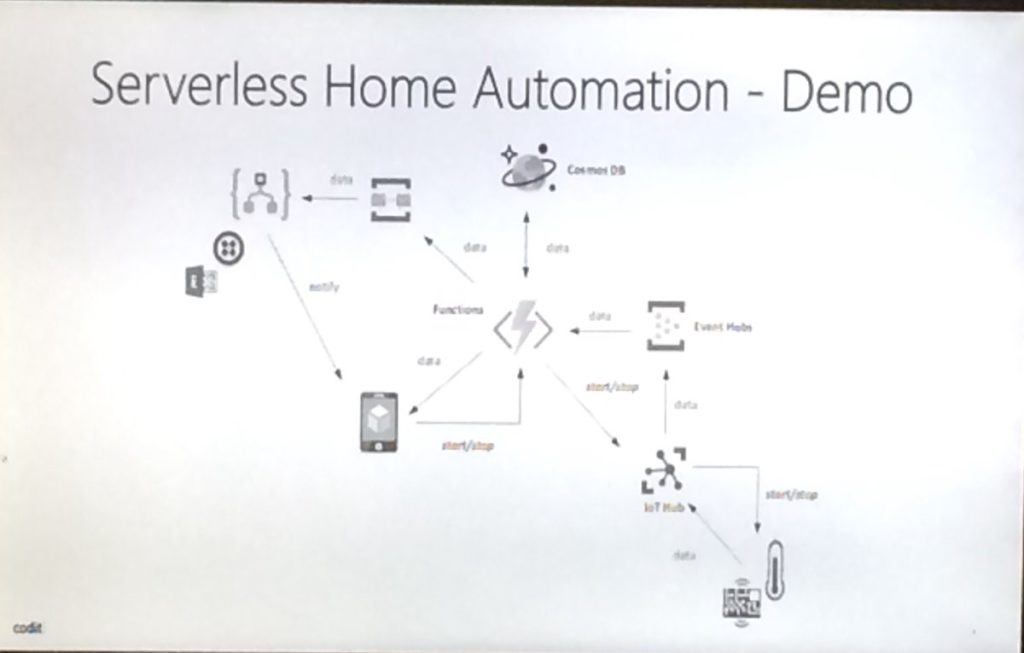

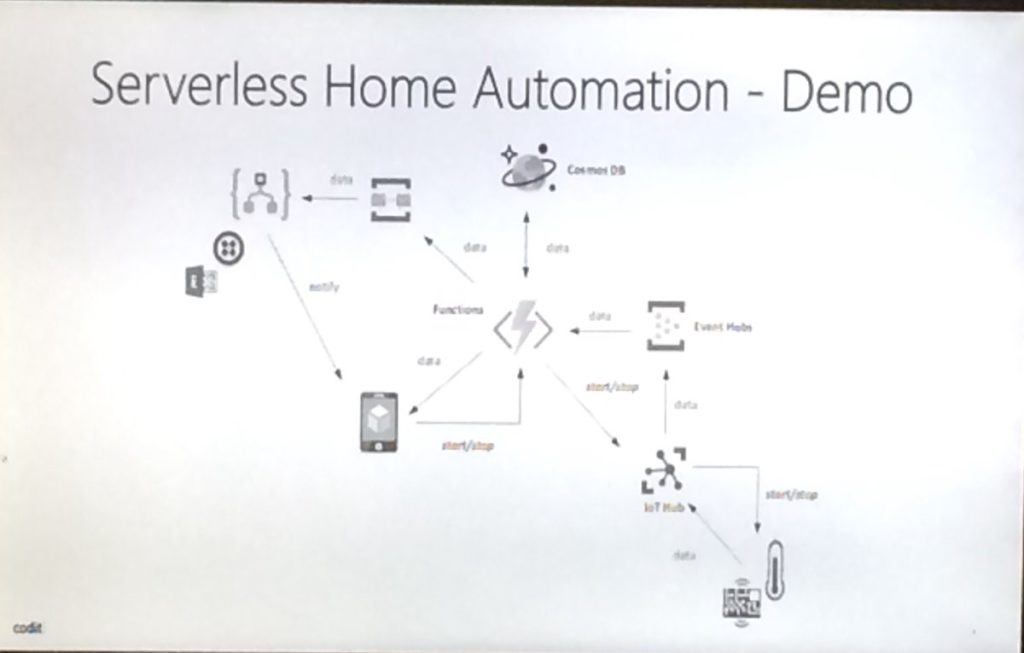

Steef showed a lot of demo scenarios for messaging applications, such as:

- Serverless Home Automation (using Queues & Logic Apps)

- Connecting to a Kafka endpoint

- Toll booth license plate recognition, which included IoT, OCR and serverless components. He also used Functions to process the images.

- Pipes and Filters cloud patterns

- Microservice Processing

- Data and Event Pipeline

He suggested some messaging considerations:

- Protocol

- Format

- Size

- Security

- Frequency

- Reliability

- Monitoring

- Networking

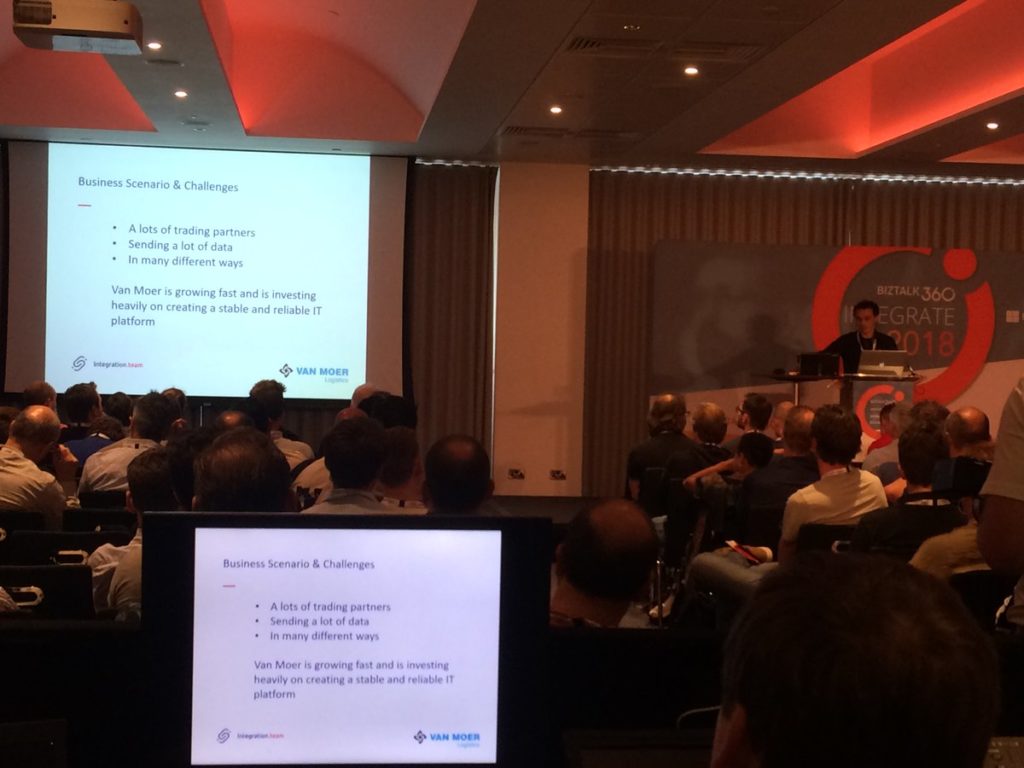

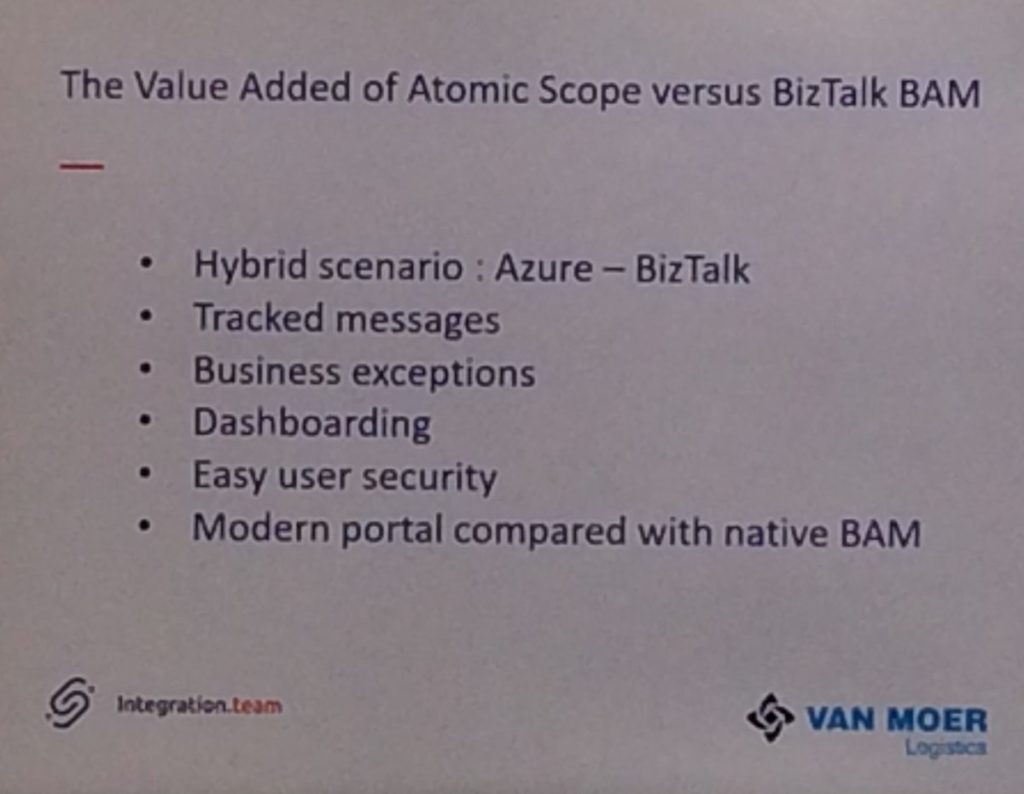

1450 — What’s there & what’s coming in Atomic Scope

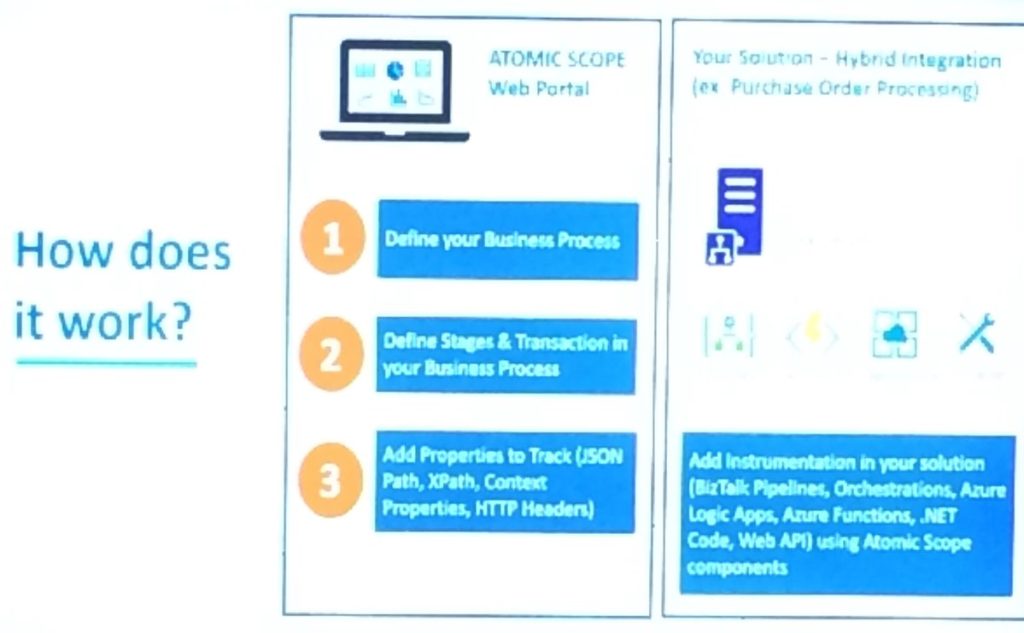

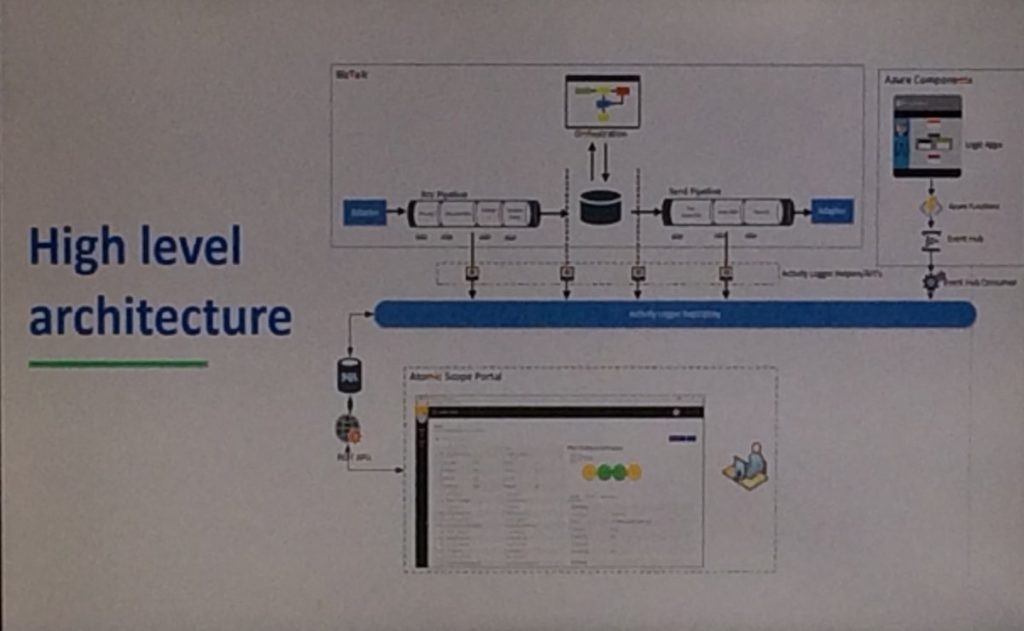

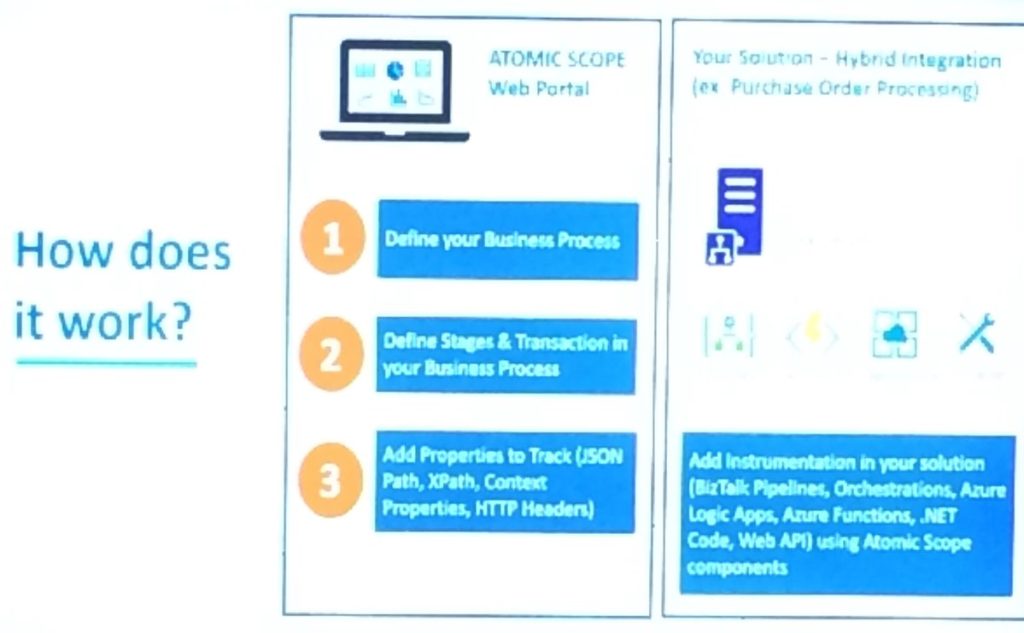

After the session on Serverless Messaging with Microsoft Azure, Saravana took the stage to present Atomic Scope, our brand new product from Kovai Limited. Even though there was considerable interest in Atomic Scope throughout Day 1 of the INTEGRATE event, most participants hadn't had the chance to fully experience the product, for reasons such as time constraints.

Saravana started by explaining the challenges of end-to-end monitoring in BizTalk and hybrid integration scenarios, and gave a couple of example business processes from different domains. He then explained the things Atomic Scope tries to address, such as security and end-to-end business visibility.

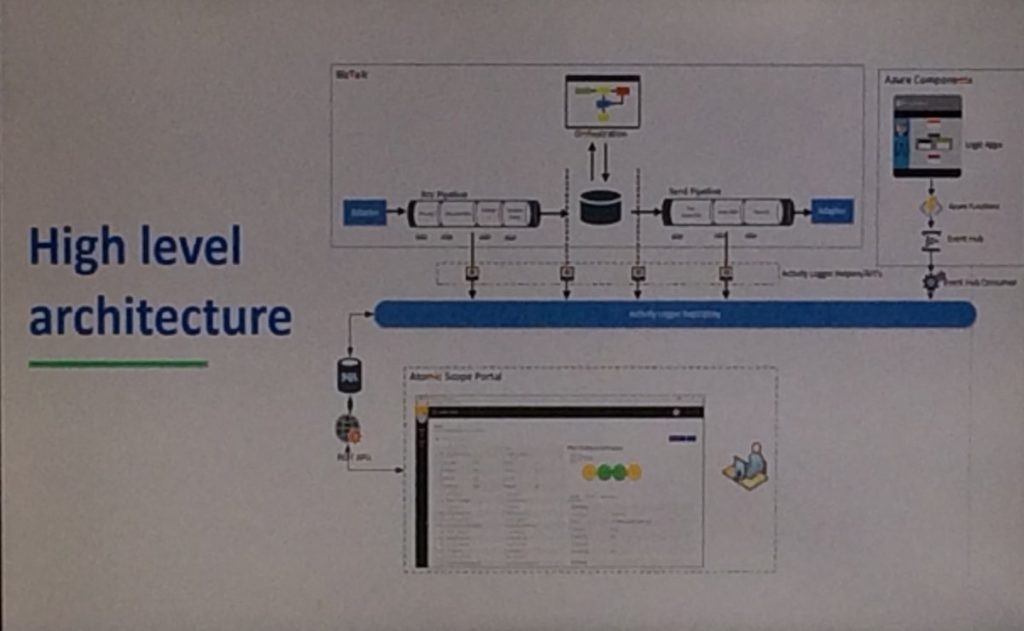

He then pointed out how much effort Atomic Scope can save compared to a typical custom-built end-to-end monitoring solution. Then Saravana went a little deeper and explained how Atomic Scope actually works.

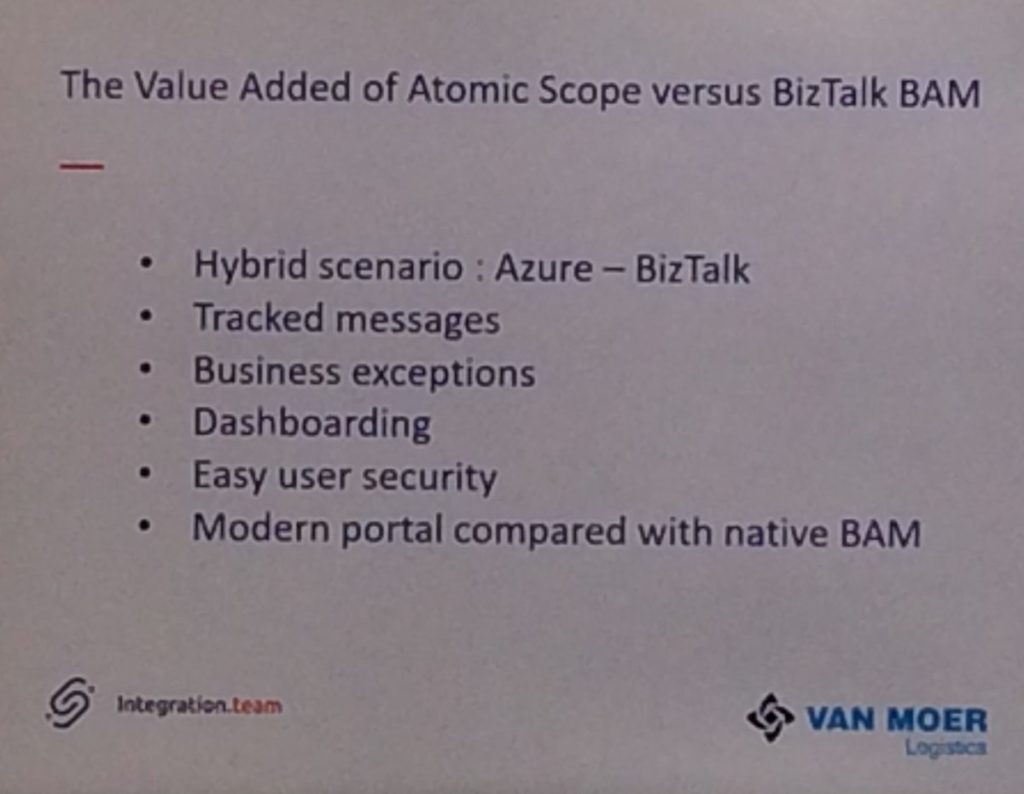

With that, Saravana handed the session over to Bart from Integration Team, who showed why a solution like BAM is not sufficient for end-to-end monitoring and then walked through a production-ready Atomic Scope implementation that adds more value than BAM. Bart showed, step by step, how you can configure the business process and how tracking happened once he dropped an EDI message into APIM and on premises.

Bart's presentation on Atomic Scope was so well received that the Atomic Scope booth was swarmed after the presentation, both by people interested in the product and by people who were just curious to know more about it.

1600 — BizTalk Server: Lessons from the Road

Sandro, being a great lover of BizTalk, spoke about best practices such as using patterns, naming conventions, and logging and tracing.

1640 — Using BizTalk Server as your Foundation to the Clouds

The last session of the day was by Stephen W. Thomas, who gave his view on how BizTalk Server can be used as a foundation for moving to the cloud. Before he began his actual session, he introduced himself and mentioned a few resources he has been working on for learning Logic Apps, among them a few Pluralsight courses and hand-outs, which can be found on his website (http://www.stephenwthomas.com/labs).

The session consisted of two parts: why you could use BizTalk Server combined with Logic Apps, and the friction factors that could prevent you from using them.

Why use BizTalk Server and Logic Apps

Stephen admits that Logic Apps don't fit all scenarios. For example, if your integrations are 100% on-premises or you have a low-latency scenario at hand, you could decide to stick with BizTalk Server. However, the following could be reasons to start using Logic Apps:

- use connectors which do not exist in BizTalk Server

- load reduction on the BizTalk servers

- plan for the future

- save on hardware/software costs

- want to become an integration hero

Stephen sketched scenarios that need connectors which are not available in BizTalk, for example:

- social media monitoring, in which you need Twitter, Facebook or LinkedIn connectors

- cross team communication, in which you need a Skype connector

- incident management, in which you need a ServiceNow connector

Before Stephen showed a few demos of batching and debatching with Logic Apps, he pointed out that Logic Apps is quite good at batching, while BizTalk is not that good at it.

Friction factors

In the second part of his session, Stephen mentioned a number of factors which might prevent organisations from using Logic Apps for their integration scenarios.

These factors included:

- We already have BizTalk (so we don’t need another integration platform)

- Our data is too sensitive to move it to the cloud

- The infrastructure manager says No

- Large learning curve for Logic Apps

- Azure changes too frequently

- CEO/CTO says NO to cloud

Stephen addressed all these factors, opening the door to using Logic Apps for your integrations despite these friction factors.

With that, we wrapped up day 2 at INTEGRATE 2018 and it was time for the attendees to enjoy the INTEGRATE party over some drinks and music.

Read the Day 3 highlights here.

Thanks to the following people for helping me to collate this blog post:

- Arunkumar Kumaresan

- Umamaheswaran Manivannan

- Lex Hegt

- Srinivasa Mahendrakar

- Daniel Szweda

- Rochelle Saldanha

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as a documentation specialist for different products and domains. Writing is his passion and he believes in the following quote: “As wings are for an aircraft, a technical document is for a product — be it a product document, user guide, or release notes”.

by Sriram Hariharan | Jun 4, 2018 | BizTalk Community Blogs via Syndication

June 4, 2018 — The day for INTEGRATE 2018.

0430 – It all started early for the BizTalk360 team. A train ride, a walk to the underground station, a tube ride, and finally the team reached the event venue, etc.venues 155 Bishopsgate.

0610 – Activities sprang up in a flash to get the venue set up for the big day: putting up banners, setting up the registration desks, and more. With 15 people working together, the job was done in less than an hour.

0715 – Attendees started to come into the venue, and the numbers increased as the clock passed 8 AM. Everything started to pick up pace, as we were strict about keeping the event on time.

0830 – Over 350 attendees had been given their badges and the welcome kit.

0845 – Time for Saravana Kumar, Founder/CTO of BizTalk360, to get the event going with his welcome speech. Saravana extended his thanks to all the attendees (420+), speakers, sponsors and his team for making #INTEGRATE2018 a grand success.

0855 — Saravana introduced Jon Fancey to deliver the keynote speech on “The Microsoft Integration Platform”.

0900 – Jon Fancey started his talk with the words “I wanna talk about change”. His talk focused on history, change, inevitable disruptions in technology, and Microsoft's approach to working with partners and ISVs to keep up and innovate.

0920 – Jon Fancey presented a case study about Confused.com and introduced Mathew Fortunka, Head of Development for car buying at Confused.com. Some very interesting insights from Mathew Fortunka's talk: Confused.com's pricing flow is powered by Azure Service Bus and uses a consumption-based model. He explained how Confused.com delivers the best price to customers.

0935 – Jon Fancey set up the context for #INTEGRATE2018 with the fictional “Contoso Retail” example (very similar to the Contoso Fitness example shown during INTEGRATE 2017). He showed how the traditional Contoso example can change with integration concepts, and how Microsoft is using iPaaS offerings in their supply chain solutions. The Contoso Retail example is divided into four pieces:

- Inventory check and back order,

- Order processing and send to supplier,

- Register delivery and alerting the customer,

- Pick up and charge the credit card

Key updates from this fictional example were:

- VNET integration for Azure Logic Apps

- Private preview of bi-directional SAP trigger for Azure Logic Apps

- Demo using a Logic Apps trigger based on a SAP webhook trigger with the webhook registered in the gateway

- Private hosting of Azure Logic Apps using Integration Service Environments (ISE)

1005 – Just one hour into #INTEGRATE2018 and there were already a few key announcements from the Microsoft Pro Integration team. This definitely got the audience interested and looking forward to more over the rest of the day and the next few days. Jon Fancey wrapped up his talk with a recap of the key announcements and a quote from Satya Nadella, Microsoft CEO.

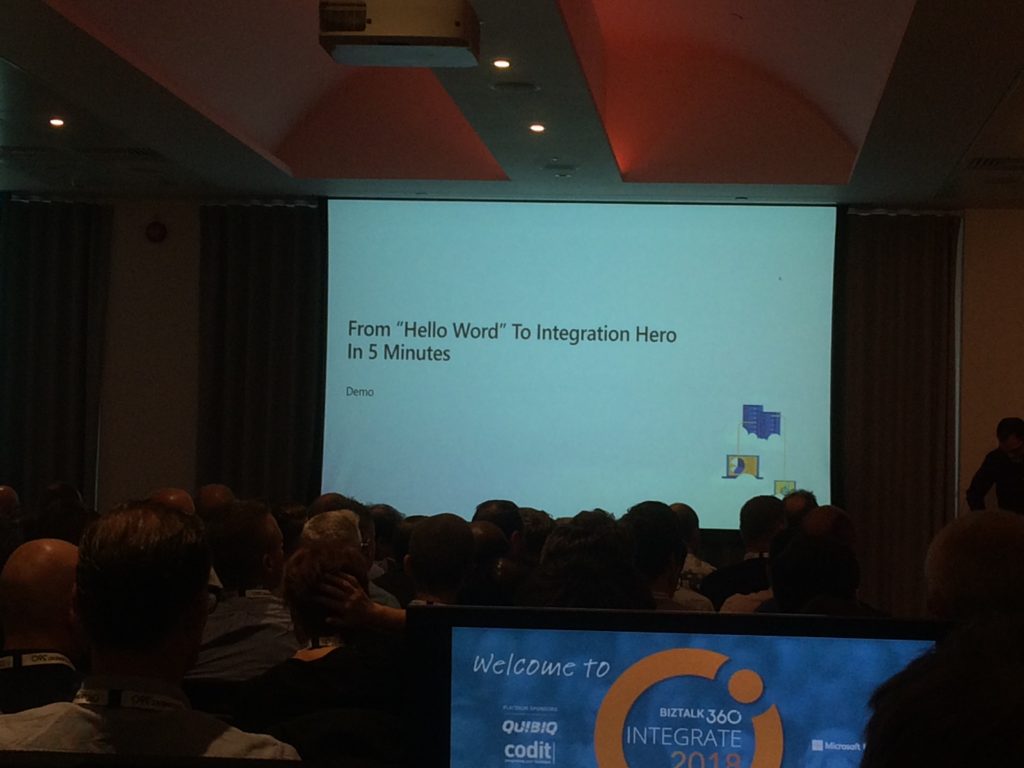

1015 – The second session of the day was by Kevin Lam and Derek Li on “Introduction to Logic Apps”. Kevin started off with the question “How many in this room have known/worked on Logic Apps?”. 50-60% of the audience raised their hands, which shows how popular Logic Apps is in the integration space. Then, Kevin Lam explained the basics of Azure Logic Apps for people who were new to the concept. Logic Apps has over 200 connectors. Kevin showed the triggers that are available with Logic Apps.

1027 – Derek Li showed, with an interesting demo, how easy it is to move from “Hello World” to Integration Hero in just 5 minutes. Bringing Azure, Machine Learning and Artificial Intelligence into the integration process really propels you to become an Integration Hero.

After the demo, Derek continued his talk and showed how you can get the same experience as in the Logic App designer in Visual Studio (for developers). Kevin also showcased a weather forecast demo in just a few clicks.

The second demo scenario was even more interesting, with a little complexity added: receiving an invoice, using Optical Character Recognition (OCR) to read the image and an Azure Function to process the text in the image, and sending an email if the amount on the invoice was more than $10.
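The decision step of that demo can be sketched as a small function. This assumes the OCR output is plain text containing a line such as `Total: $12.50`. The regular expression and the `notify` callback are hypothetical stand-ins for the Azure Function and the email action. `Decimal` is used so that money amounts are compared exactly.

```python
import re
from decimal import Decimal

THRESHOLD = Decimal("10")
# Hypothetical pattern: "total", optional text, optional "$", then an amount like 1,250.00
AMOUNT_PATTERN = re.compile(
    r"total[^0-9$]*\$?\s*([0-9][0-9,]*(?:\.[0-9]{2})?)", re.IGNORECASE
)


def invoice_amount(ocr_text):
    """Extract the invoice total from OCR text, or None if no total is found."""
    match = AMOUNT_PATTERN.search(ocr_text)
    if match is None:
        return None
    return Decimal(match.group(1).replace(",", ""))


def handle_invoice(ocr_text, notify):
    """Call notify (standing in for the email action) when the total exceeds the threshold."""
    amount = invoice_amount(ocr_text)
    if amount is not None and amount > THRESHOLD:
        notify(f"Invoice over ${THRESHOLD}: ${amount}")
        return True
    return False


sent = []
print(handle_invoice("Invoice #17\nTotal: $12.50", sent.append))  # True
print(handle_invoice("Invoice #18\nTotal: $8.99", sent.append))   # False
print(sent)  # ['Invoice over $10: $12.50']
```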

New Logic App features:

- Running Logic Apps in China Cloud

- Smart(er) designer – with AI, better prediction of connector operations makes it even faster

- Dedicated and connected – on the ISE

- Testability

- On-premise (Azure Stack)

- Managed Service Identity (MSI) – a Logic App can have its own identity to access your systems

- OAuth request trigger

- Output property obfuscation

- Key Vault Support

1045 – Networking break time over some coffee

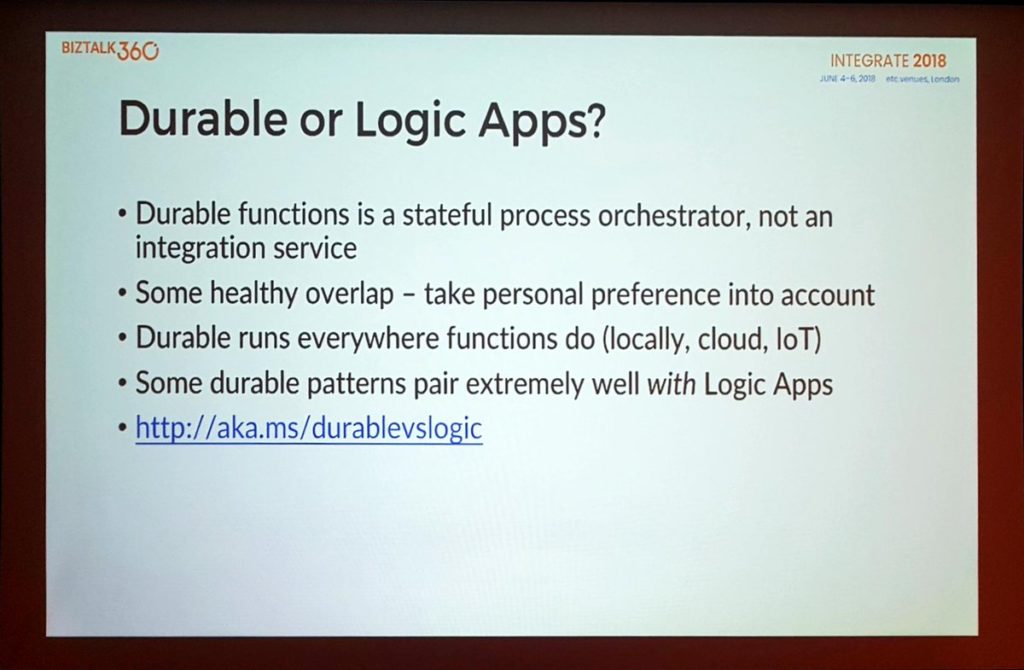

1115 — Jeff Hollan started his talk by saying “INTEGRATE is one of his favourite conference to attend and he loves coming here every year”. Jeff kicked off with a one liner explanation of “What is Azure Functions?”

Then Jeff went deeper into the Azure Functions concepts such as Trigger, Bindings. Jeff showed a nice demo of how you can create an Azure Function using Visual Studio.

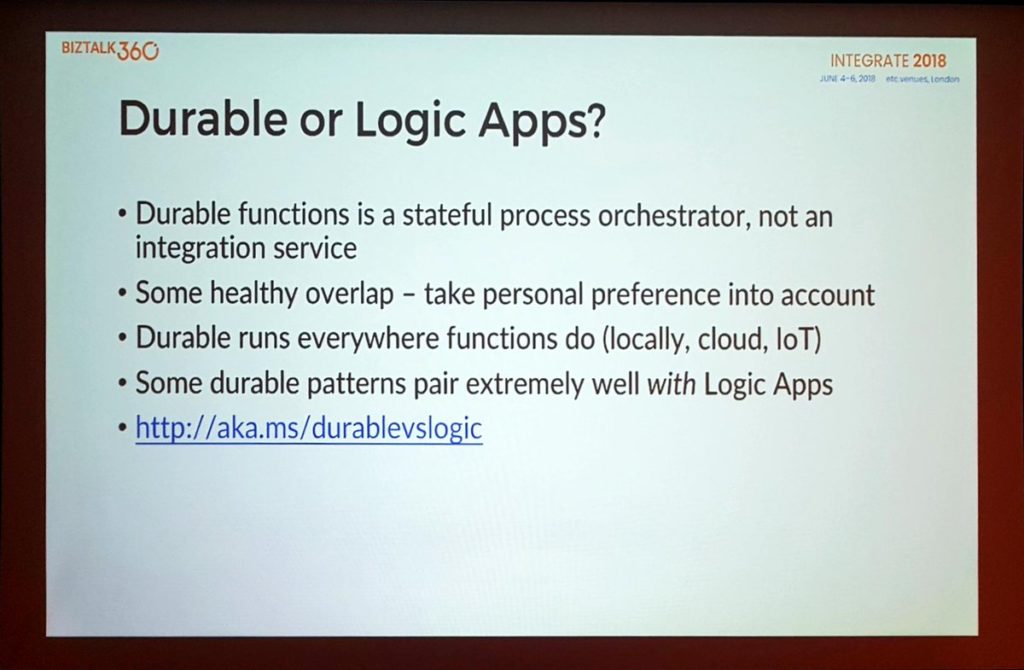

Jeff wrapped up his session by giving best practice tips for Azure Functions and the ways in which you can run Azure Functions. Jeff also spoke about Durable Functions and the limitations/tips to use Durable functions. Jeff gave a nice comparison of when you should use Durable and Logic Apps.

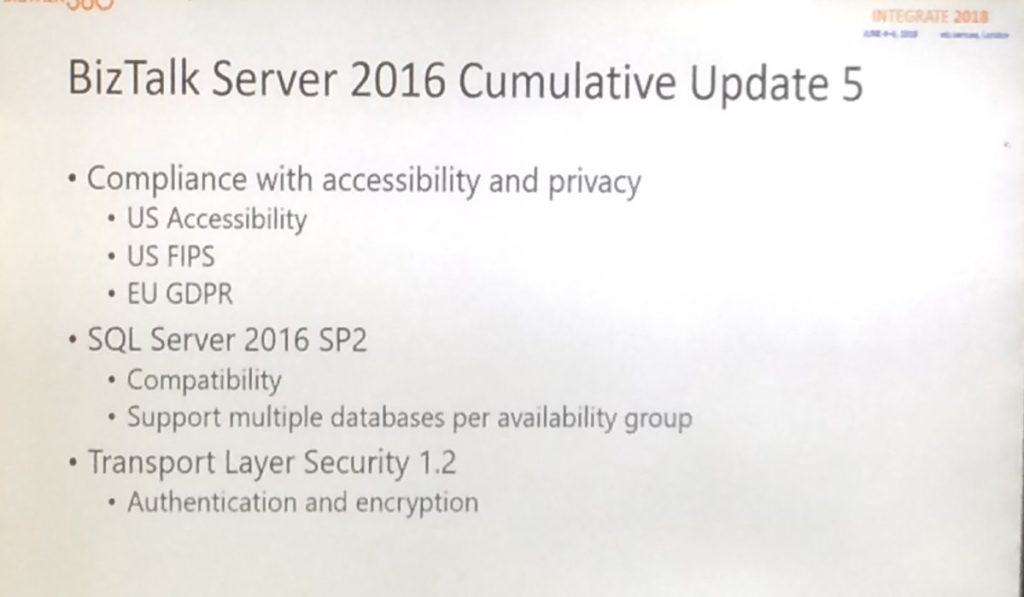

1200 – Paul Larsen and Valerie Robb took the stage to talk about “Hybrid integration with Legacy Systems”. They started off with what's coming in BizTalk Server 2016, and the most important update was the announcement of BizTalk Server 2016 Cumulative Update (CU) 5. They also showed the BizTalk Server lifecycle diagram, which showed that just a month is left before support ends for BizTalk Server 2013 and BizTalk Server 2013 R2.

Paul also pointed out the BizTalk Server Migration Tool, which will make migration from these versions to BizTalk Server 2016 easier.

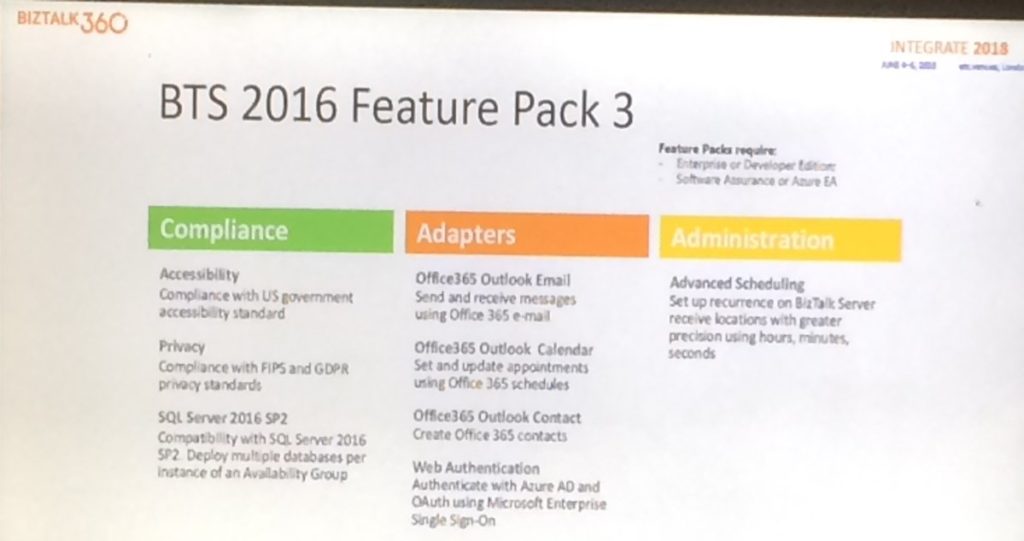

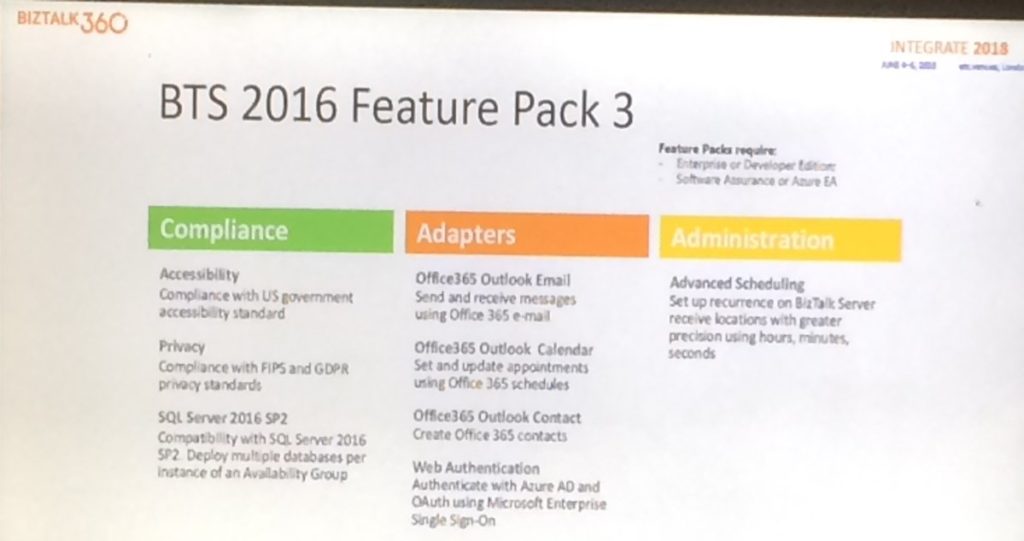

BizTalk Server 2016 Feature Pack 3

Paul announced the availability of BizTalk Server 2016 Feature Pack 3 by the end of June 2018. This Feature Pack will contain, amongst others, the following features:

Compliance:

- Accessibility – compliance to US government accessibility standard

- Privacy – compliance with GDPR and FIPS privacy standards

- Support for SQL Server 2016 SP2

Adapters:

- Office 365 Outlook Email

- Office 365 Outlook Calendar

- Office 365 Outlook Contacts

- Web Authentication

Administration:

The benefit of SQL Server 2016 SP2 support is that, when you have an Always On setup with Availability Groups, you can have multiple BizTalk databases in the same SQL instance, thereby cutting down license and operational costs.

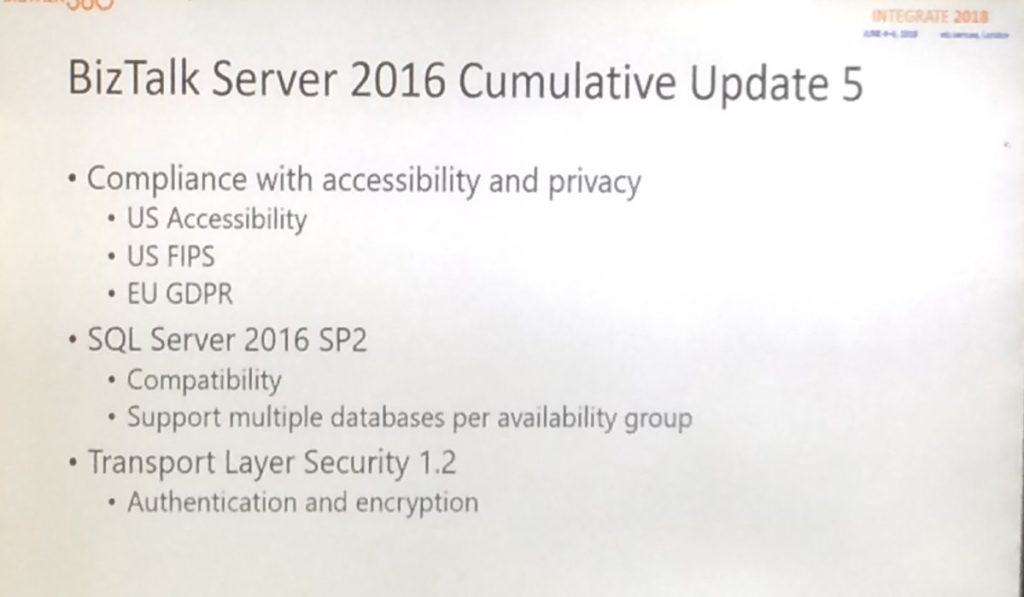

Besides FP3, the BizTalk team is also working on BizTalk Server CU 5, which is expected this coming July. A few months later, there will also be a CU 5 that contains Feature Pack 3.

CU 5 will contain the following:

- compliance with US Accessibility

- US FIPS

- EU GDPR

- SQL Server 2016 SP2 (multiple databases per Availability Group)

- TLS 1.2

1245 — Lunch time

1345 – After lunch, it was time for Miao Jiang from the Microsoft API Management team to talk about “Azure API Management Overview”.

During this session, Miao started by talking about the importance of APIs, which started at the periphery but are now at the core of enterprise IT. Nowadays, APIs can be considered the default approach.

Miao explained both the publishing side and the consumption side of APIs, and described the steps to take with API Management:

- Consume – use the Developer portal

- Mediate – that’s done in the Gateway

- Publish – use the Azure portal

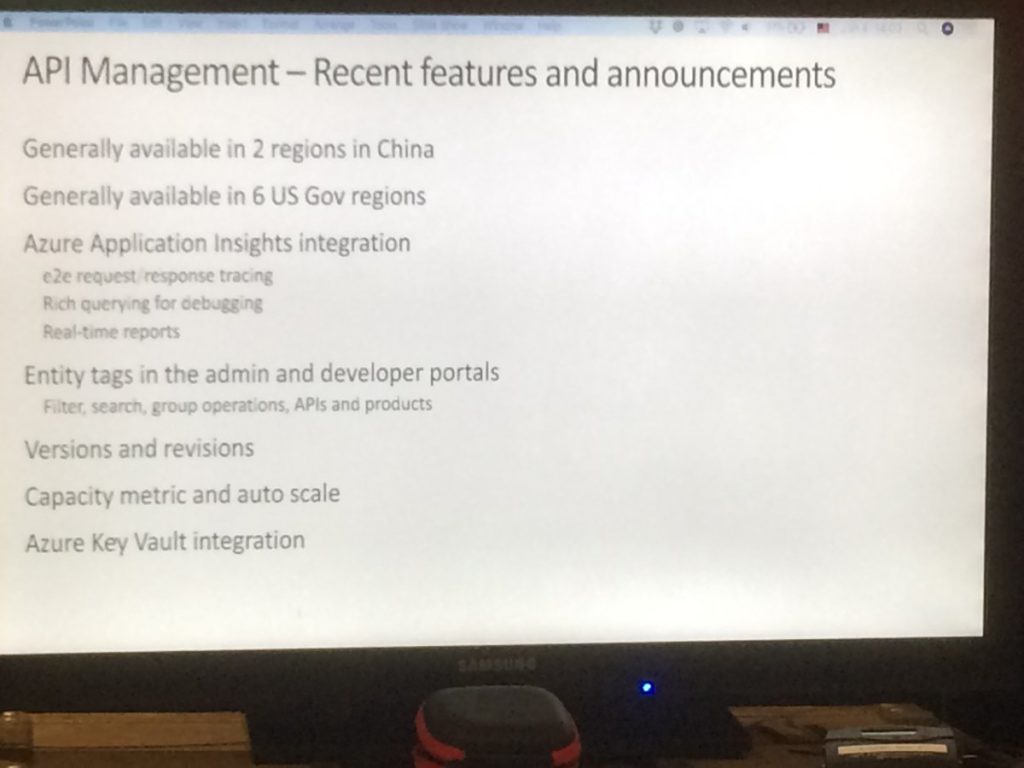

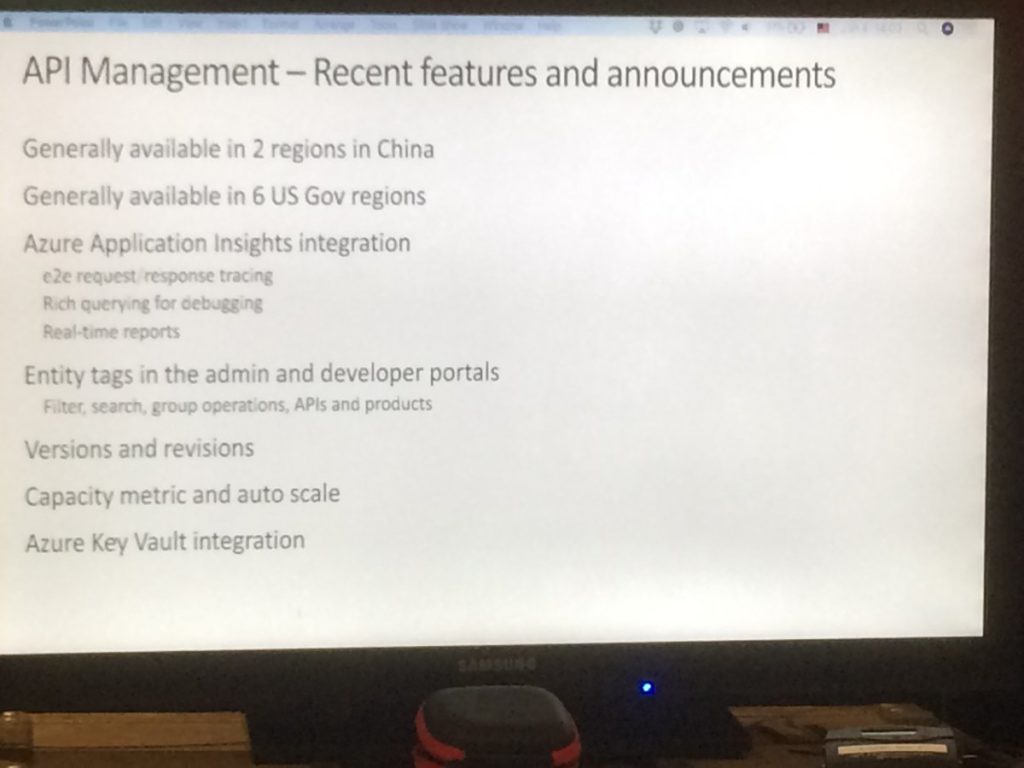

Next, he spoke about encapsulating common API functions like access control, protection, transformation and caching via API policies. After also covering policy expressions, he updated the audience on the recent features and announcements.

Recent features and announcements

Here’s the overview:

- General Availability of 2 Chinese regions

- General Availability of 6 US Governmental regions

- Integration with Azure App Insights

- Support for Entity Tags in the Admin and Dev portal

- Support for Versions and Revisions

- Integration with KeyVault

Miao ended his session with an extended demo in which he showed, amongst others, how to use policies to limit the number of requests, how Revisions (for non-breaking changes) and Versions (for breaking changes) work, and how to mock APIs so that developers and admins can work in parallel on the same API.
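In API Management, request limiting is configured declaratively as a policy. To show the idea behind a "calls per period" limit, here is a fixed-window limiter in Python. It is a conceptual sketch of the technique, not how API Management implements its policies, and the `limit`/`period` values and subscription keys are illustrative.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` calls per key in each window of `period` seconds."""

    def __init__(self, limit, period, clock=time.monotonic):
        self.limit = limit
        self.period = period
        self.clock = clock  # injectable so the example can use a fake clock
        self.windows = {}   # key -> (window_start, calls_in_window)

    def allow(self, key):
        now = self.clock()
        start, count = self.windows.get(key, (now, 0))
        if now - start >= self.period:
            start, count = now, 0  # the previous window has expired
        if count >= self.limit:
            return False  # an API gateway would typically answer HTTP 429 here
        self.windows[key] = (start, count + 1)
        return True


fake_now = [0.0]
limiter = FixedWindowRateLimiter(limit=3, period=60, clock=lambda: fake_now[0])
results = [limiter.allow("subscription-A") for _ in range(4)]
print(results)  # [True, True, True, False]
fake_now[0] = 61.0
print(limiter.allow("subscription-A"))  # True: a new window has started
```

A fixed window is the simplest scheme. Sliding windows or token buckets smooth out bursts at window boundaries.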

1430 – Next up was Clemens Vasters to talk on “Eventing, Serverless and the Extensible Enterprise”. Clemens set the scene by sketching a few scenarios. The first scenario was around a photographer uploading photos to Service Bus, which triggered an Azure Function to automatically resize the photos to a particular size. The photos could then be ingested, via an Azure Function, into for example Lightroom, Photoshop or a Newsroom app.

The second scenario was about sensor-driven management, which can be used for, for example, building management. Buildings could contain sensors for:

- Occupancy (motion sensors)

- Fire/Smoke

- Gas/Biohazard

- Climate (temperature/humidity)

With that information available, it becomes easy to ask all kinds of questions, which can be necessary in, for example, an emergency.

The scenarios were used to point out the incredible range of possibilities with the current feature set. Clemens pointed out a few characteristics of services, such as that they are autonomous and should not hold state.

Clemens concluded that the modern notion of a service is not about code artifact counts or sizes or technology choices; it is about ownership.

Continuing, Clemens talked about eventing and messaging, explained their characteristics and gave some examples of both.

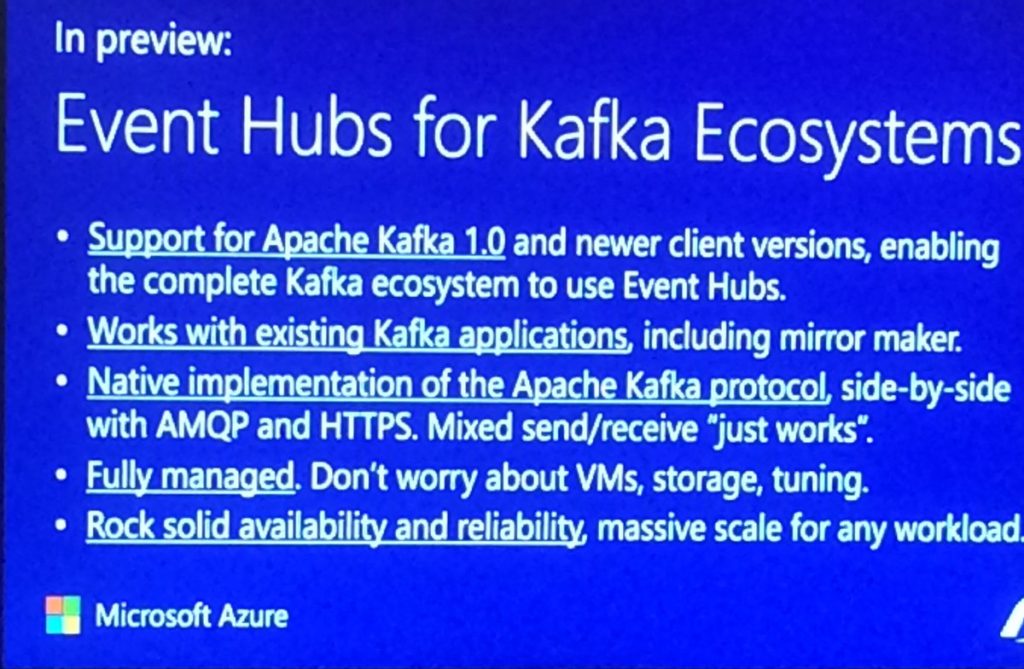

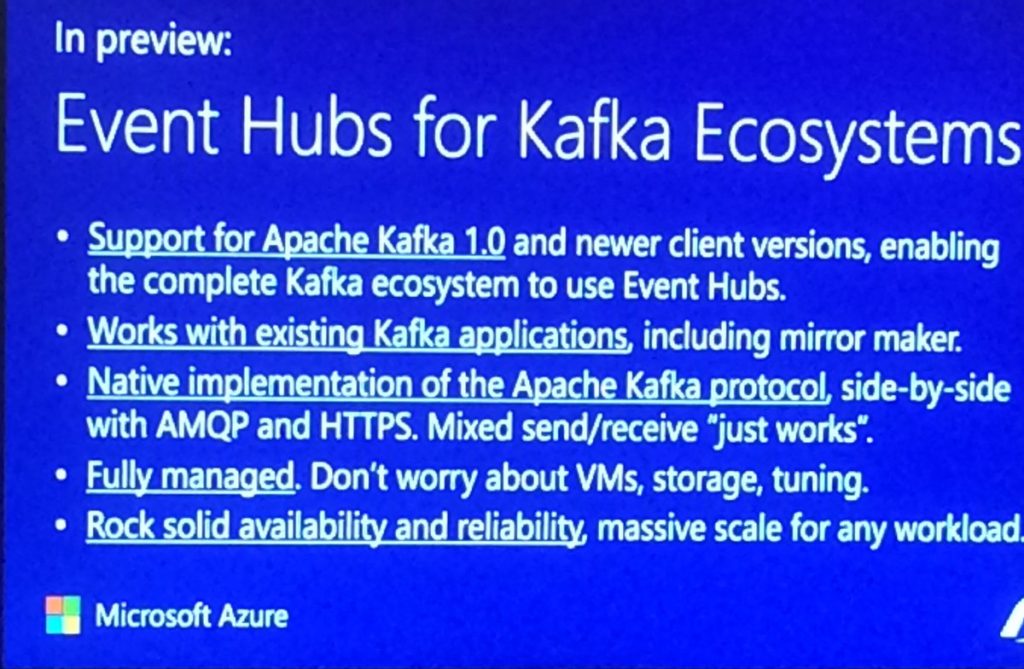

In preview: Event Hubs for the Kafka Eco system

Clemens announced that Event Hubs for the Kafka ecosystem is in preview. Features are:

- Support for Apache Kafka 1.0, enabling the complete Kafka ecosystem to be used with Event Hubs

- Works with existing Kafka applications

- Native implementation of the Apache Kafka protocol

- Fully managed – don't worry about VMs, storage and tuning

- Rock-solid availability and reliability
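Because Event Hubs speaks the Kafka protocol, an existing Kafka client mainly needs different connection settings. The sketch below builds those settings as keyword arguments for the kafka-python client. The namespace and connection string are placeholders. The values follow the Event Hubs Kafka endpoint as generally documented (port 9093, SASL PLAIN over TLS), but details may have differed during the 2018 preview, so check the current Event Hubs documentation.

```python
def event_hubs_kafka_config(namespace, connection_string):
    """Settings that point a kafka-python client at an Event Hubs namespace.

    Keys match kafka-python's KafkaProducer/KafkaConsumer keyword arguments.
    """
    return {
        # Event Hubs exposes its Kafka endpoint on port 9093 of the namespace host.
        "bootstrap_servers": f"{namespace}.servicebus.windows.net:9093",
        "security_protocol": "SASL_SSL",
        "sasl_mechanism": "PLAIN",
        # The user name is the literal string "$ConnectionString"; the password
        # is the namespace connection string itself.
        "sasl_plain_username": "$ConnectionString",
        "sasl_plain_password": connection_string,
    }


# Placeholder values; substitute your own namespace and connection string.
config = event_hubs_kafka_config(
    "my-namespace",
    "Endpoint=sb://my-namespace.servicebus.windows.net/;SharedAccessKeyName=...;SharedAccessKey=...",
)
print(config["bootstrap_servers"])  # my-namespace.servicebus.windows.net:9093

# With kafka-python installed and a real namespace, an existing producer would then be:
#   from kafka import KafkaProducer
#   KafkaProducer(**config).send("my-event-hub", b"hello")
```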

1530 — After tea break, Dan Rosanova did a session on The Reactive Cloud: Azure Event Grid. He firstly explained the conceptual architecture of Event Hubs, with the flow from Event producers to Event Hub to Event consumers. In a simple demo, Dan showed how events could be generated, pushed to Event Hub and being received in a console application.

Dan pointed out that messaging services, like webhooks or queues, can be both publishers and subscribers to Event Grid. Dan also showed Stream Analytics, Time Series Insights and the Java-based open-source Kafka ecosystem.

1615 – The second-to-last session of the day was by Jon Fancey and Divya Swarnkar on “Enterprise Integration using Logic Apps”. Jon explained how the VETER pipeline can be used for enterprise messaging. He continued by describing a number of characteristics of message handling in Logic Apps, including:

- flexibility in content types: JSON, XML

- mapping: JSON-based and XML-based

- data operations: compose, CSV/HTML tables

- flat-file processing

- message validation

- EDI support

- batching support

Jon continued with disaster recovery for B2B scenarios. Among other things, he described the ability to have primary and secondary Integration Accounts, which can be deployed in different regions. If necessary, you can have multiple secondary Integration Accounts. When changes occur in the primary Integration Account, they can be replicated to all secondary Integration Accounts by using Logic Apps.

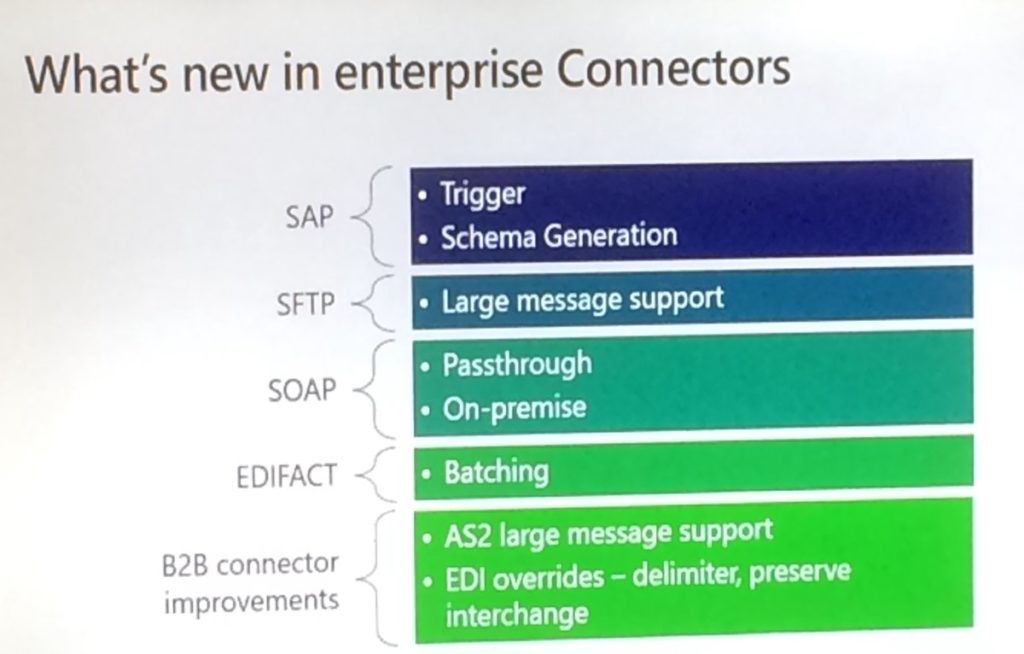

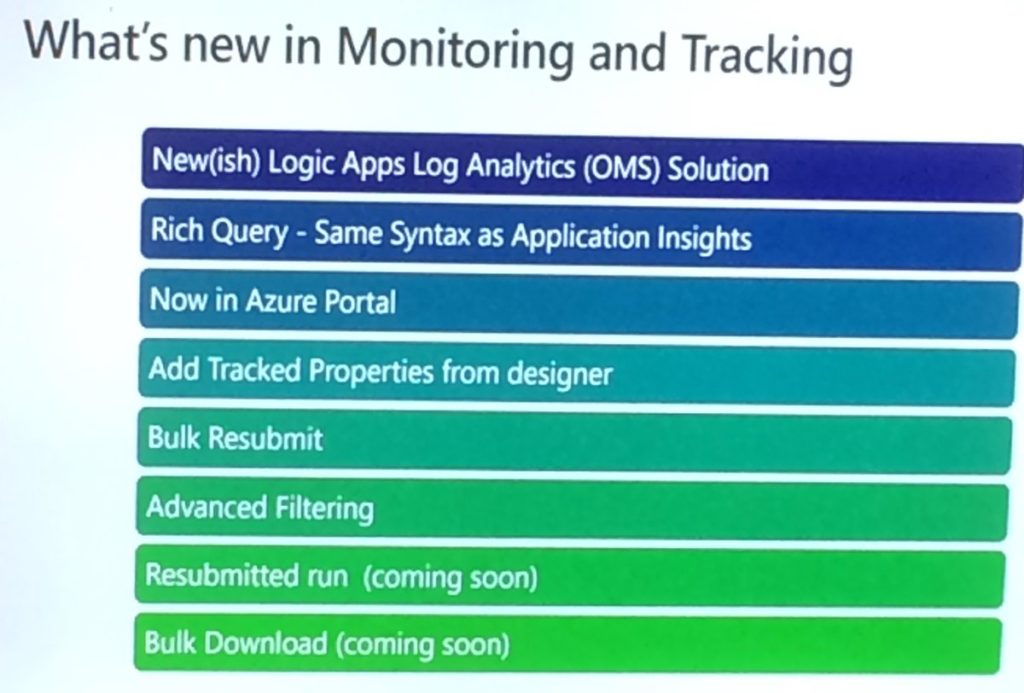

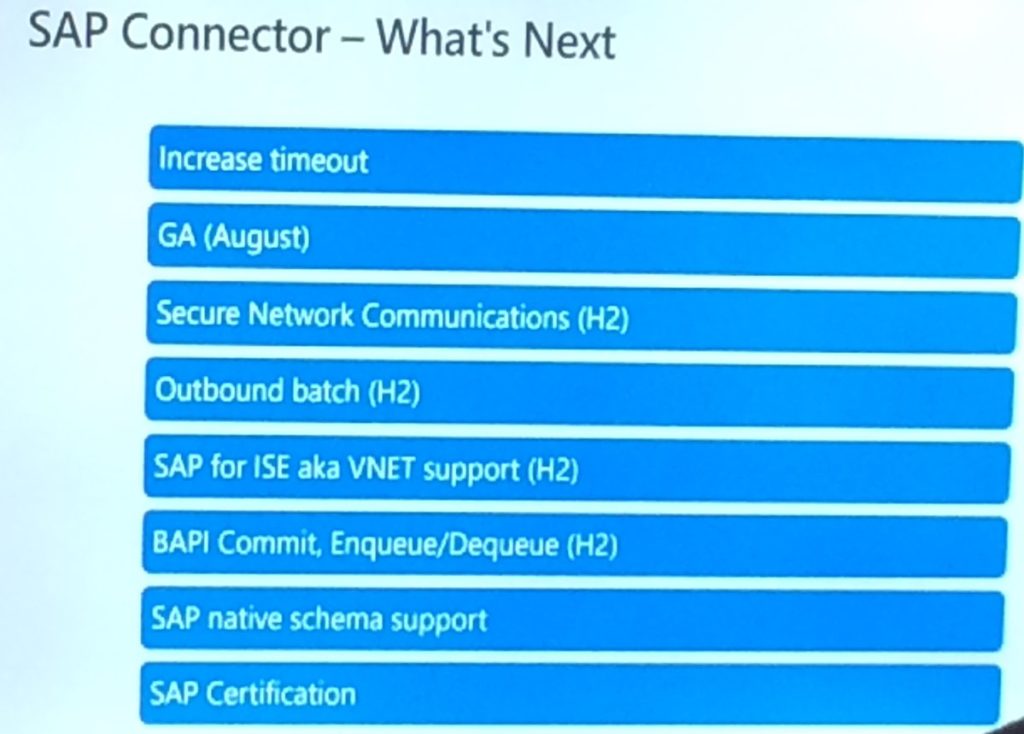

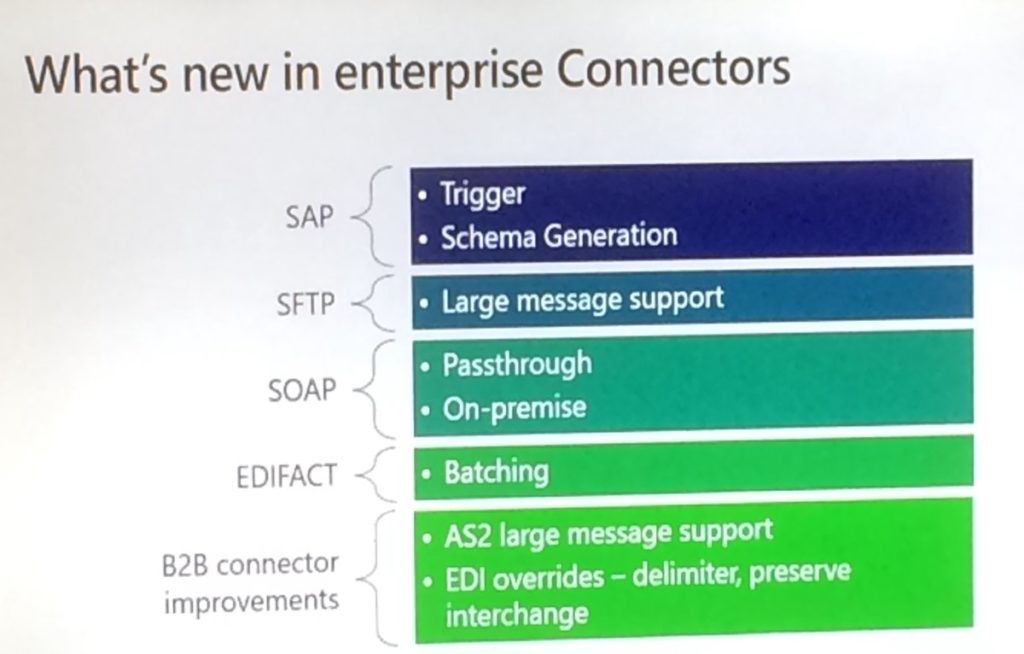

After discussing the tracking capabilities for Integration Accounts, Logic Apps, EDI, custom tracking and OMS, Jon explained what’s new in the enterprise adapters. He mentioned the adapters for SAP, SFTP, SOAP and EDIFACT, and also mentioned B2B connector improvements such as AS2 large message support and EDI overrides.

Divya showed the SAP ECC connector in a demo. She mentioned the prerequisites of the connector, and in the demo she showed how to receive a message from SAP in Logic Apps and write the contents of that message to a file location.

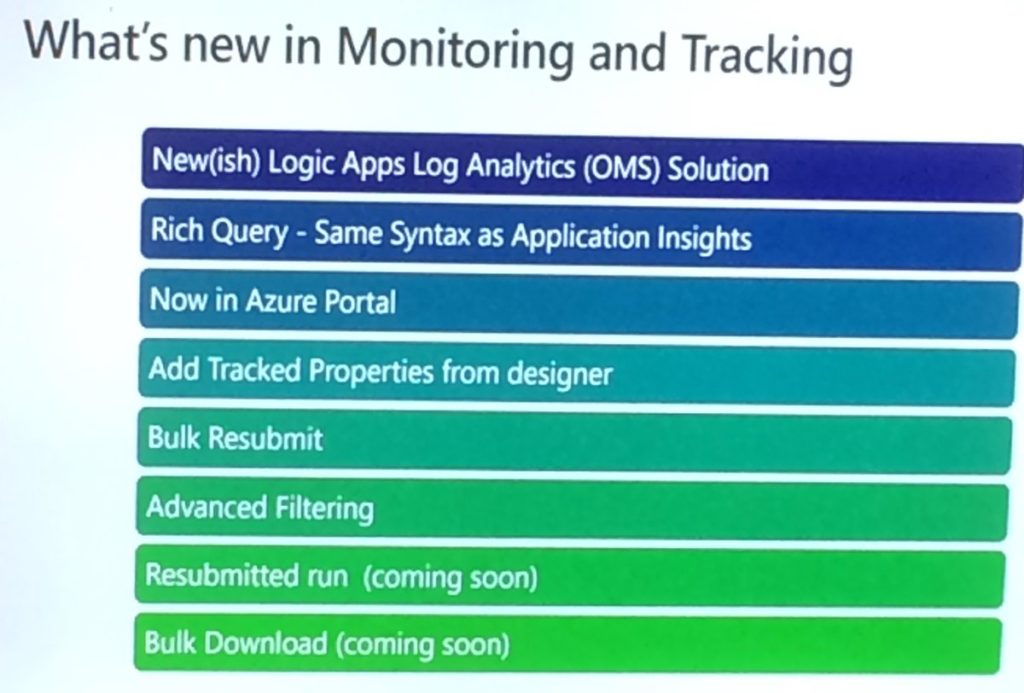

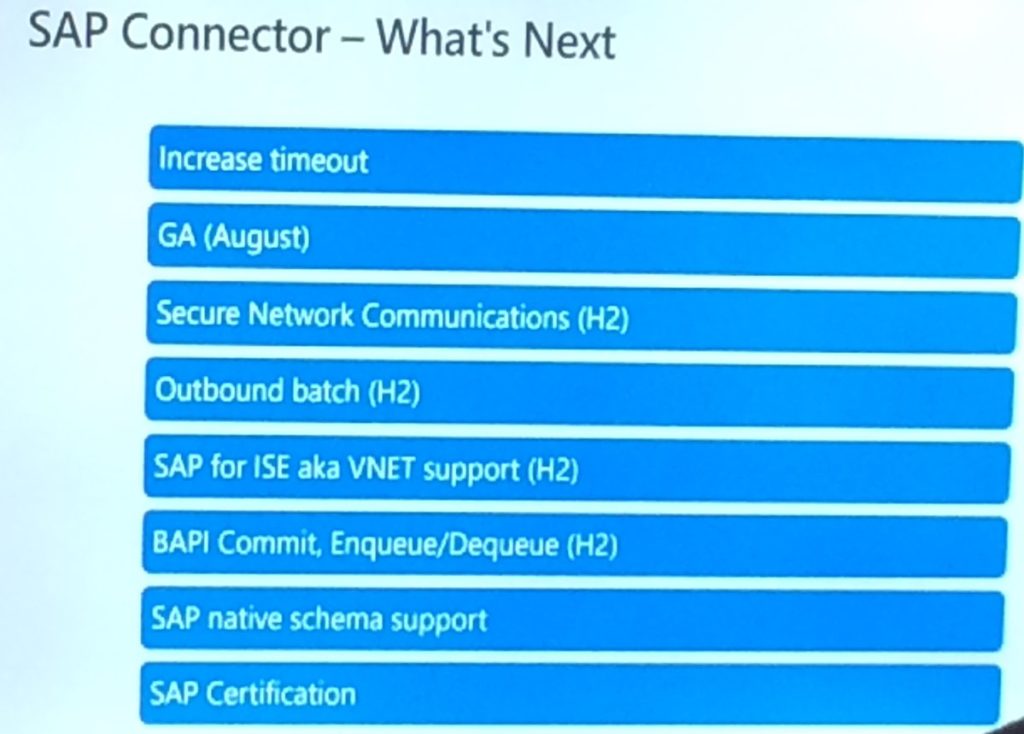

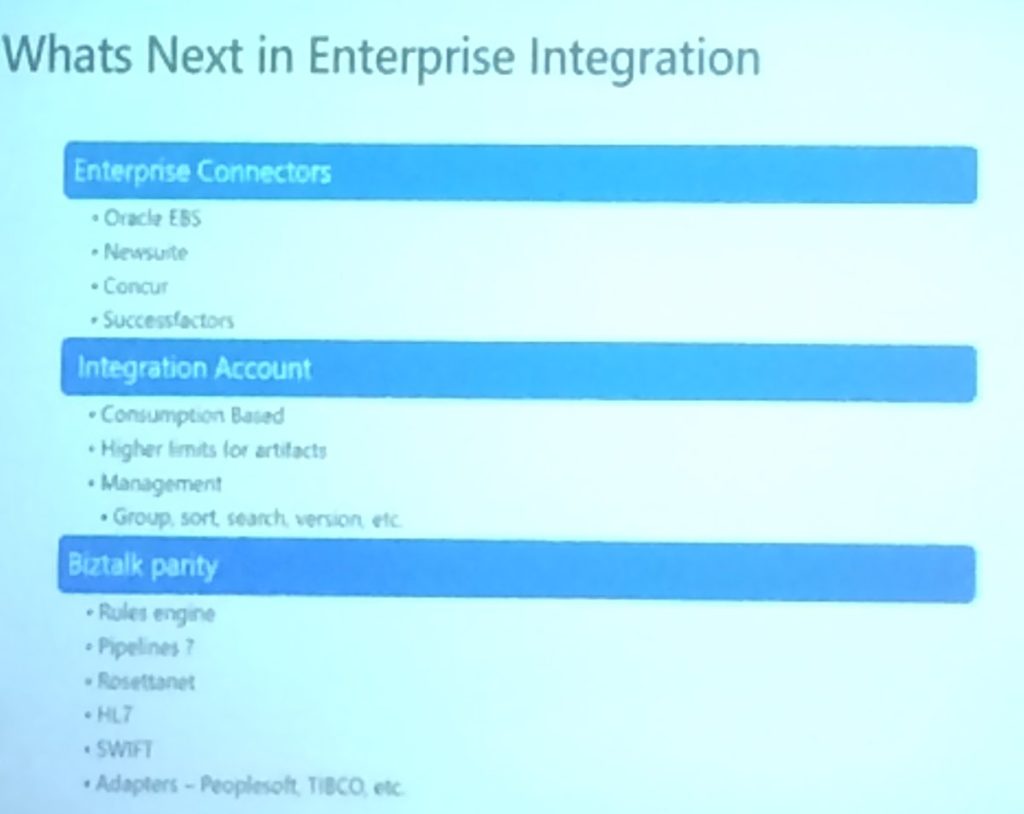

The session was wrapped up by mentioning what’s new in mapping, monitoring and tracking, and what we can expect for the SAP connector and other enterprise adapters.

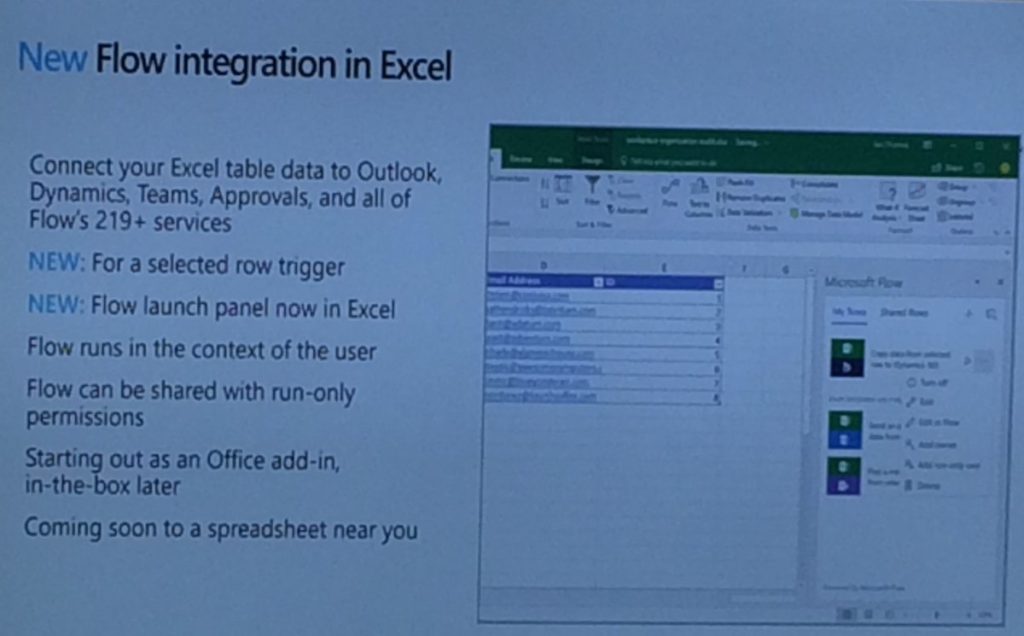

1700 – Kent’s session started with positioning Microsoft Flow, after which he explained how the product fits into the Business Application Platform. This platform consists of PowerApps (including Microsoft Flow) and Power BI.

Microsoft plans to unify these technologies into one powerful, highly productive application platform.

Currently, 1.2 million users from over 213,000 companies use the platform on a monthly basis.

Kent compared Microsoft Flow with MS Access: MS Access solutions have little to no visibility to the IT department, whereas (luckily) this is not the case with Microsoft Flow. Because of the better federation in Microsoft Flow, the risk of proliferation is much lower than with Microsoft Access.

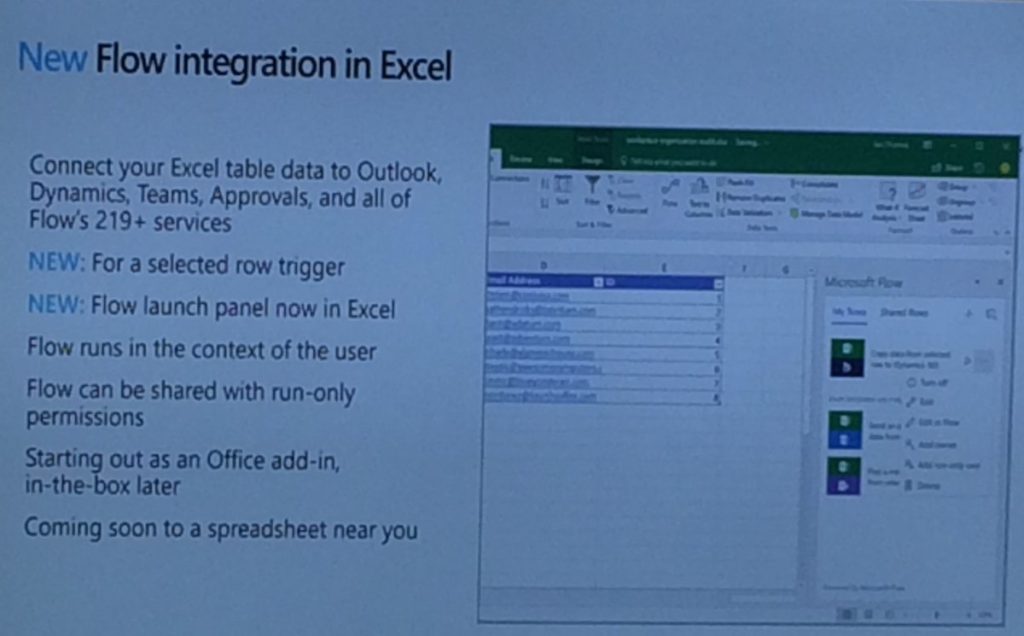

Next, Kent showed four demos to the audience, illustrating the versatility of Microsoft Flow.

Kent concluded the session with the roadmap for the second and third quarters of the year, which consists of:

- Improving the user experience

- Office 365 integration

- Compliance (GDPR, US Gov cloud deployments)

- Sandbox environments for IT Pros, admins and developers

With that, it was a wrap on the sessions of Day 1 and time for some networking and drinks.

Stay tuned for the Day 2 blog updates.

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as a documentation specialist for different products and domains. Writing is his passion and he believes in the following quote: “As wings are for an aircraft, a technical document is for a product, be it a product document, user guide, or release notes”.

by Eldert Grootenboer | Jun 4, 2018 | BizTalk Community Blogs via Syndication

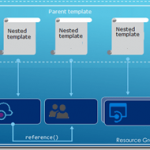

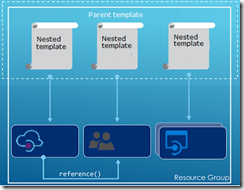

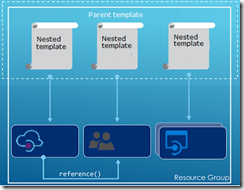

This is the fifth and final post in my series around setting up CI/CD for Azure API Management using Azure Resource Manager templates. We already created our API Management instance, added products, users and groups to the instance, and created unversioned and versioned APIs. In this final post, we will see how we can use linked ARM templates in combination with VSTS to deploy our solution all at once, and how this allows us to re-use existing templates to build up our API Management.


So far, we did each of these steps from its own repository, which is great when you want different developers to work only on their own parts of the total solution. However, if you don’t need this type of granular security on your repositories, it probably makes more sense to have your entire API Management solution in a single repository. ARM templates can quickly become large and cumbersome to maintain. To avoid this, we can split up the template into smaller templates, each of which does its own piece of work, and link these together from a master template. This allows us to re-use templates and to break them down into smaller pieces of functionality. For this post, we will create an API Management instance, create products, users and groups for Contoso, and deploy a versioned API. We will re-use the templates we created in the previous blog posts of this series for our content.

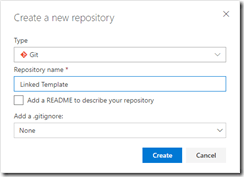

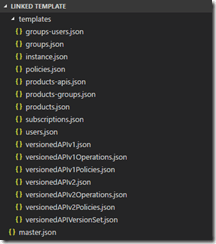

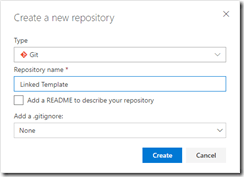

We will start by creating a new Git repository called Linked Template in our VSTS project. This repository will hold the master and nested templates which will be used to roll out our API Management solution.

Create Linked Template repository

Once the repository has been created, we will clone it to our local machine and add a folder called templates to the repository. In the templates folder, create a new file called instance.json, which will hold the following nested ARM template for the API Management instance. All the ARM templates in this post should be placed in the templates folder unless otherwise specified.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementSku": {
      "type": "string",
      "defaultValue": "Developer"
    },
    "APIManagementSkuCapacity": {
      "type": "string",
      "defaultValue": "1"
    },
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    },
    "PublisherName": {
      "type": "string",
      "defaultValue": "Eldert Grootenboer"
    },
    "PublisherEmail": {
      "type": "string",
      "defaultValue": "me@mydomaintwo.com"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service",
      "name": "[parameters('APIManagementInstanceName')]",
      "apiVersion": "2017-03-01",
      "properties": {
        "publisherEmail": "[parameters('PublisherEmail')]",
        "publisherName": "[parameters('PublisherName')]",
        "notificationSenderEmail": "apimgmt-noreply@mail.windowsazure.com",
        "hostnameConfigurations": [],
        "additionalLocations": null,
        "virtualNetworkConfiguration": null,
        "customProperties": {
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Tls10": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Tls11": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Ssl30": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Ciphers.TripleDes168": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Tls10": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Tls11": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Ssl30": "False"
        },
        "virtualNetworkType": "None"
      },
      "resources": [],
      "sku": {
        "name": "[parameters('APIManagementSku')]",
        "capacity": "[parameters('APIManagementSkuCapacity')]"
      },
      "location": "[resourceGroup().location]",
      "tags": {},
      "scale": null
    }
  ]
}
```
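Because these templates are edited by hand, a quick local check before committing can catch JSON syntax errors early. The following is a hypothetical helper, not part of the original walkthrough. It only verifies that each file in the templates folder parses and has the top-level keys used throughout this post, and it does not replace the validation Azure Resource Manager performs at deployment time.

```python
import json
from pathlib import Path

# Top-level keys that every deployment template in this post contains.
REQUIRED_KEYS = ("$schema", "contentVersion", "resources")


def check_template(text: str) -> list:
    """Return a list of problems found in one ARM template's JSON text."""
    try:
        template = json.loads(text)
    except json.JSONDecodeError as err:
        return [f"invalid JSON: {err.msg} (line {err.lineno})"]
    problems = [f"missing '{key}'" for key in REQUIRED_KEYS if key not in template]
    if not isinstance(template.get("resources", []), list):
        problems.append("'resources' must be an array")
    return problems


def check_folder(folder: str = "templates") -> dict:
    """Check every *.json file in `folder`; map each file name to its problems."""
    return {
        path.name: check_template(path.read_text(encoding="utf-8"))
        for path in sorted(Path(folder).glob("*.json"))
    }


if __name__ == "__main__":
    # Run from the repository root, next to the templates folder.
    for name, problems in check_folder().items():
        print(name, "OK" if not problems else "; ".join(problems))
```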

The next ARM template will hold the Contoso users. Add a users.json file to the same local repository with the following template.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/users",
      "name": "[concat(parameters('APIManagementInstanceName'), '/john-smith-contoso-com')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "firstName": "John",
        "lastName": "Smith",
        "email": "john.smith@eldert.org",
        "state": "active",
        "note": "Developer working for Contoso, one of the consumers of our APIs",
        "confirmation": "invite"
      }
    }
  ]
}
```

Now add a groups.json file to the repository containing the following ARM template, which adds the group for Contoso.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/groups",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "ContosoGroup",
        "description": "Group containing all developers and services from Contoso who will be consuming our APIs",
        "type": "custom",
        "externalId": null
      }
    }
  ]
}
```

The following ARM template adds the product for Contoso; add it to the repository as products.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "ContosoProduct",
        "description": "Product which will apply the high-over policies for developers and services of Contoso.",
        "terms": null,
        "subscriptionRequired": true,
        "approvalRequired": true,
        "subscriptionsLimit": null,
        "state": "published"
      }
    }
  ]
}
```

We have created our templates for the products and groups, so the next template, which will be called products-groups.json, will link the Contoso group to the Contoso product.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/groups",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/contosogroup')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```
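All of these templates declare child resources at the top level of `resources`, which is why the names are built with `concat`: the name needs one segment per level of the resource type, not counting the provider namespace. For example, `Microsoft.ApiManagement/service/products/groups` needs a three-segment name such as `MyAPIManagementInstance/contosoproduct/contosogroup`. Here is a minimal sketch of that rule in Python, assuming the names have already been evaluated (here with the default `MyAPIManagementInstance`); the helper is our own illustration.

```python
def name_matches_type(resource_type: str, resource_name: str) -> bool:
    """True when a top-level resource's name has one segment per type level.

    The provider namespace (e.g. 'Microsoft.ApiManagement') is not counted.
    """
    type_levels = resource_type.split("/")[1:]  # drop the provider namespace
    name_segments = resource_name.split("/")
    # Same number of segments, and no segment may be empty.
    return len(type_levels) == len(name_segments) and all(name_segments)


# Evaluated (type, name) pairs from the templates in this post.
EXAMPLES = [
    ("Microsoft.ApiManagement/service", "MyAPIManagementInstance"),
    ("Microsoft.ApiManagement/service/users",
     "MyAPIManagementInstance/john-smith-contoso-com"),
    ("Microsoft.ApiManagement/service/products/groups",
     "MyAPIManagementInstance/contosoproduct/contosogroup"),
]

if __name__ == "__main__":
    for resource_type, resource_name in EXAMPLES:
        print(resource_type, resource_name, name_matches_type(resource_type, resource_name))
```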

Likewise, we will link the Contoso users to the Contoso group using the following ARM template in a file called groups-users.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/groups/users",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup/john-smith-contoso-com')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```

Next up are the policies for the product. For these, add a file called policies.json to the repository.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "<policies>\r\n <inbound>\r\n <base />\r\n <rate-limit calls=\"20\" renewal-period=\"60\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>"
      }
    }
  ]
}
```
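The `policyContent` value is the policy XML stored as a single JSON string, so line breaks must be written as `\r\n` and the attribute quotes as `\"`. Rather than escaping this by hand, you can let Python's `json.dumps` produce the escaped value from readable XML. This is a minimal sketch; the helper and the layout of the XML are our own, not part of the original post.

```python
import json

# The product policy written as ordinary XML, one element per line,
# joined with Windows line endings like the exported policies use.
POLICY_XML = "\r\n".join([
    "<policies>",
    "  <inbound>",
    "    <base />",
    '    <rate-limit calls="20" renewal-period="60" />',
    "  </inbound>",
    "  <backend>",
    "    <base />",
    "  </backend>",
    "  <outbound>",
    "    <base />",
    "  </outbound>",
    "  <on-error>",
    "    <base />",
    "  </on-error>",
    "</policies>",
])


def to_policy_content(xml: str) -> str:
    """Return the XML as a quoted JSON string literal for "policyContent"."""
    # json.dumps escapes the double quotes and the \r\n line breaks.
    return json.dumps(xml)


if __name__ == "__main__":
    print(to_policy_content(POLICY_XML))
```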

Now let’s add the subscription for the Contoso user, which contains the Contoso product, by adding a file called subscriptions.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/subscriptions",
      "name": "[concat(parameters('APIManagementInstanceName'), '/5ae6ed2358c2795ab5aaba68')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "userId": "[resourceId('Microsoft.ApiManagement/service/users', parameters('APIManagementInstanceName'), 'john-smith-contoso-com')]",
        "productId": "[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]",
        "displayName": "ContosoProduct subscription",
        "state": "active"
      }
    }
  ]
}
```
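In subscriptions.json, `userId` and `productId` are filled in with `resourceId()`, which ARM evaluates against the subscription and resource group of the deployment. The simplified Python model below only illustrates the shape of the resulting ID. The subscription ID and resource group name are hypothetical placeholders; in the template, ARM supplies them for you.

```python
def resource_id(subscription_id: str, resource_group: str,
                resource_type: str, *names: str) -> str:
    """Build an ID shaped like the one ARM's resourceId() returns.

    Simplified model: one name is expected per level of the resource type.
    """
    namespace, *type_levels = resource_type.split("/")
    if len(type_levels) != len(names):
        raise ValueError("need exactly one name per type level")
    # Interleave type levels and names: service/<name>/users/<name>/...
    path = "/".join(f"{level}/{name}" for level, name in zip(type_levels, names))
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/{namespace}/{path}")


if __name__ == "__main__":
    print(resource_id(
        "00000000-0000-0000-0000-000000000000",  # placeholder subscription ID
        "my-resource-group",                     # placeholder resource group
        "Microsoft.ApiManagement/service/users",
        "MyAPIManagementInstance",
        "john-smith-contoso-com",
    ))
```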

Next we will create the version set which will hold the different versions of our versioned API. Add a file to the repository called versionedAPIVersionSet.json and add the following template to it.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "name": "[concat(parameters('APIManagementInstanceName'), '/versionsetversionedapi')]",
      "type": "Microsoft.ApiManagement/service/api-version-sets",
      "apiVersion": "2017-03-01",
      "properties": {
        "description": "Version set for versioned API blog post ",
        "versionQueryName": "api-version",
        "displayName": "Versioned API",
        "versioningScheme": "query"
      }
    }
  ]
}
```
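With `versioningScheme` set to `query` and `versionQueryName` set to `api-version`, both versions of the API share the `versioned-api` path, and callers pick a version with a query parameter. Below is a minimal sketch of the resulting request URL. It assumes the instance uses the default `azure-api.net` gateway host name (no custom domain). Because the Contoso product requires a subscription, a call also needs a subscription key, which API Management reads from the `Ocp-Apim-Subscription-Key` header by default.

```python
from urllib.parse import urlencode


def versioned_url(instance: str, api_path: str, operation: str, version: str) -> str:
    """URL for one version of an API that uses query-string versioning."""
    # The parameter name must match versionQueryName in the version set.
    query = urlencode({"api-version": version})
    # Assumes the default gateway host name <instance>.azure-api.net.
    return f"https://{instance.lower()}.azure-api.net/{api_path}/{operation}?{query}"


def subscription_headers(subscription_key: str) -> dict:
    """Default header API Management uses for the subscription key."""
    return {"Ocp-Apim-Subscription-Key": subscription_key}


if __name__ == "__main__":
    print(versioned_url("MyAPIManagementInstance", "versioned-api", "list", "v2"))
```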

We will now add the template for the first version of the versioned API, by creating a file in the repository called versionedAPIv1.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Versioned API",
        "apiRevision": "1",
        "description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",
        "serviceUrl": "https://api.apis.guru/v2/",
        "path": "versioned-api",
        "protocols": [
          "https"
        ],
        "authenticationSettings": null,
        "subscriptionKeyParameterNames": null,
        "apiVersion": "v1",
        "apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"
      }
    }
  ]
}
```

Operations

For the first version of the API we will now add a file called versionedAPIv1Operations.json which will hold the various operations of this API.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Get basic metrics",
        "method": "GET",
        "urlTemplate": "/metrics",
        "templateParameters": [],
        "description": "Some basic metrics for the entire directory.\nJust stunning numbers to put on a front page and are intended purely for WoW effect :)\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    },
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "List all APIs",
        "method": "GET",
        "urlTemplate": "/list",
        "templateParameters": [],
        "description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    }
  ]
}
```

Policies

The policies for the first version of our API are next; let’s add the versionedAPIv1Policies.json file for these.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2/\" />\r\n <rewrite-uri template=\"/metrics.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    },
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    }
  ]
}
```
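In these policies, `set-backend-service` picks the backend base URL and `rewrite-uri` replaces the operation path, so a call to `/metrics` is forwarded to `metrics.json` on the APIs.guru backend. Below is a simplified Python model of that mapping, using the values from versionedAPIv1Policies.json. It is our own illustration and ignores template parameters and query-string handling, which `rewrite-uri` also supports.

```python
# Per-operation backend settings from versionedAPIv1Policies.json:
# operation name -> (set-backend-service base-url, rewrite-uri template)
V1_BACKENDS = {
    "getMetrics": ("https://api.apis.guru/v2/", "/metrics.json"),
    "listAPIs": ("https://api.apis.guru/v2", "/list.json"),
}


def backend_url(operation: str) -> str:
    """Simplified model of where the gateway forwards a v1 operation.

    The rewritten path is appended to the backend base URL; trimming the
    slashes makes both base-url spellings above give the same result.
    """
    base_url, template = V1_BACKENDS[operation]
    return base_url.rstrip("/") + "/" + template.lstrip("/")


if __name__ == "__main__":
    for name in V1_BACKENDS:
        print(name, "->", backend_url(name))
```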

And now we will add the second version of our versioned API as well, in a file called versionedAPIv2.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Versioned API",
        "apiRevision": "1",
        "description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",
        "serviceUrl": "https://api.apis.guru/v2/",
        "path": "versioned-api",
        "protocols": [
          "https"
        ],
        "authenticationSettings": null,
        "subscriptionKeyParameterNames": null,
        "apiVersion": "v2",
        "apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"
      }
    }
  ]
}
```

Operations

Add the operations for the second version of our API in a file called versionedAPIv2Operations.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "List all APIs",
        "method": "GET",
        "urlTemplate": "/list",
        "templateParameters": [],
        "description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    }
  ]
}
```

Policies

And add a file called versionedAPIv2Policies.json which will hold the policies for the second version of our versioned API.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    }
  ]
}
```

And finally we will add the products-apis.json file, which will link the versions of our versioned API to the Contoso product.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    },
    {
      "type": "Microsoft.ApiManagement/service/products/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api-v2')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```
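Several of these templates reference resources created by others; products-groups.json, for example, needs both the product and the group to exist. Because each linked template runs as its own `Microsoft.Resources/deployments` resource, the master template has to state this order explicitly with `dependsOn`. The sketch below works out a valid order using Python's standard `graphlib` module (Python 3.9+). Its dependency map is written by hand from what each template references; it is our own reading of the templates, not something ARM generates.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hand-written map: linked template -> templates whose resources it needs.
DEPENDENCIES = {
    "instance.json": set(),
    "users.json": {"instance.json"},
    "groups.json": {"instance.json"},
    "products.json": {"instance.json"},
    "products-groups.json": {"products.json", "groups.json"},
    "groups-users.json": {"groups.json", "users.json"},
    "policies.json": {"products.json"},
    "subscriptions.json": {"products.json", "users.json"},
    "versionedAPIVersionSet.json": {"instance.json"},
    "versionedAPIv1.json": {"versionedAPIVersionSet.json"},
    "versionedAPIv1Operations.json": {"versionedAPIv1.json"},
    "versionedAPIv1Policies.json": {"versionedAPIv1Operations.json"},
    "versionedAPIv2.json": {"versionedAPIVersionSet.json"},
    "versionedAPIv2Operations.json": {"versionedAPIv2.json"},
    "versionedAPIv2Policies.json": {"versionedAPIv2Operations.json"},
    "products-apis.json": {"products.json", "versionedAPIv1.json", "versionedAPIv2.json"},
}


def deployment_order(dependencies: dict) -> list:
    """Return template names so each one comes after everything it needs.

    Raises graphlib.CycleError if the map contains a circular dependency.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for step, name in enumerate(deployment_order(DEPENDENCIES), start=1):
        print(step, name)
```

Templates with no dependency path between them, such as users.json and products.json, have no required order relative to each other, which is why ARM is free to deploy them in parallel.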

We have now created all of our linked templates, so let’s create the master template in a file called master.json. Don’t place this file in the templates folder; instead, place it in the root directory of the repository. This template will call all our other templates, passing in parameters as required. This is also where we handle our dependsOn dependencies.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"TemplatesStorageAccount": {

"type": "string",

"defaultValue": "https://mystorageaccount.blob.core.windows.net/templates"

},

"TemplatesStorageAccountSASToken": {

"type": "string",

"defaultValue": ""

},

"APIManagementSku": {

"type": "string",

"defaultValue": "Developer"

},

"APIManagementSkuCapacity": {

"type": "string",

"defaultValue": "1"

},

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

},

"PublisherName": {

"type": "string",

"defaultValue": "Eldert Grootenboer"

},

"PublisherEmail": {

"type": "string",

"defaultValue": "me@mydomaintwo.com"

}

},

"resources": [

{

"apiVersion": "2017-05-10",

"name": "instanceTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/instance.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementSku": {"value": "[parameters('APIManagementSku')]" },

"APIManagementSkuCapacity": {"value": "[parameters('APIManagementSkuCapacity')]" },

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" },

"PublisherName": {"value": "[parameters('PublisherName')]" },

"PublisherEmail": {"value": "[parameters('PublisherEmail')]" }

}

}

},

{

"apiVersion": "2017-05-10",

"name": "usersTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/users.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "groupsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/groups.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "groupsUsersTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/groups-users.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'groupsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'usersTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsGroupsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products-groups.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'groupsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "subscriptionsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/subscriptions.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'usersTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "policiesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIVersionSetTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIVersionSet.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1Template",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIVersionSetTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1OperationsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1Operations.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1PoliciesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1Policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1OperationsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2Template",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIVersionSetTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2OperationsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2Operations.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2PoliciesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2Policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2OperationsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsAPIsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products-apis.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]"

]

}

]

}

As you will notice, the first parameter is a path to a container in a storage account, so be sure to create this container. You could even do this from the build pipeline, but for this blog post we will do it manually.

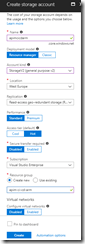

Create storage account

Create container
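Every linked deployment in the master template builds its templateLink URI with `concat(parameters('TemplatesStorageAccount'), '/<file>.json', parameters('TemplatesStorageAccountSASToken'))`. Because ARM's `concat()` only joins strings, the container path and the SAS token must already be in the right shape. The Python sketch below mirrors that expression. It assumes the container URL has no trailing slash and the SAS token starts with `?`. The storage account name and token values are hypothetical placeholders.

```python
def linked_template_uri(container_url: str, file_name: str, sas_token: str) -> str:
    """Mirror concat(parameters('TemplatesStorageAccount'), '/<file>.json',
    parameters('TemplatesStorageAccountSASToken')) from the master template.

    concat() only joins strings, so this assumes container_url has no trailing
    slash and sas_token already starts with '?'.
    """
    return container_url + "/" + file_name + sas_token


# Hypothetical placeholder values, not taken from the original post.
container = "https://mystorageaccount.blob.core.windows.net/templates"
sas = "?sv=2017-07-29&sig=PLACEHOLDER"

print(linked_template_uri(container, "groups-users.json", sas))
# https://mystorageaccount.blob.core.windows.net/templates/groups-users.json?sv=2017-07-29&sig=PLACEHOLDER
```

If the container path had a trailing slash, every linked template URI would contain a double slash.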

We should now have a repository filled with ARM templates, so commit and push these to the remote repository.

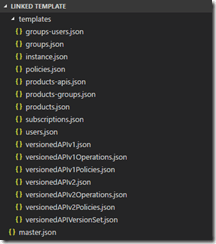

Overview of ARM template files

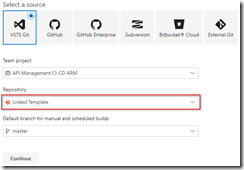

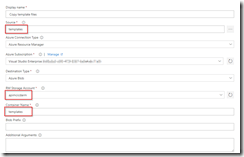

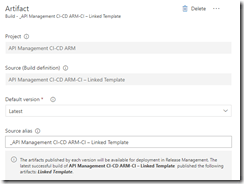

Switch to our project in VSTS and create a build pipeline called API Management CI-CD ARM-CI – Linked Template to validate the ARM templates. Remember to enable the continuous integration trigger, so our build runs every time we push to our repository.

Create build template for Linked Template repository

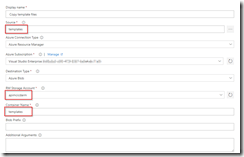

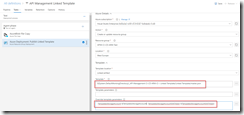
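The validation build relies on Azure to check the templates. The following Python sketch is an optional local pre-check and is not part of the pipeline described here. It flags `dependsOn` entries that point at a deployment name not defined in the master template. Without such a check, this kind of typo is typically only reported once the validation build runs. The sketch assumes dependencies use the `resourceId('Microsoft.Resources/deployments', '<name>')` form shown in the master template. The sample template is a trimmed, hypothetical excerpt.

```python
import json
import re

# Extracts <name> from "[resourceId('Microsoft.Resources/deployments', '<name>')]".
DEPLOYMENT_REF = re.compile(r"resourceId\('Microsoft\.Resources/deployments',\s*'([^']+)'\)")


def unresolved_dependencies(template: dict) -> list[tuple[str, str]]:
    """Return (deployment, missing dependency) pairs for dependsOn entries
    that reference a deployment not defined in the same template."""
    resources = template.get("resources", [])
    defined = {resource["name"] for resource in resources}
    problems = []
    for resource in resources:
        for entry in resource.get("dependsOn", []):
            match = DEPLOYMENT_REF.search(entry)
            if match and match.group(1) not in defined:
                problems.append((resource["name"], match.group(1)))
    return problems


# Trimmed, hypothetical excerpt: productsTemplate is deliberately missing.
master = json.loads("""
{"resources": [
  {"name": "instanceTemplate"},
  {"name": "groupsTemplate",
   "dependsOn": ["[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"]},
  {"name": "policiesTemplate",
   "dependsOn": ["[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]"]}
]}
""")
print(unresolved_dependencies(master))  # [('policiesTemplate', 'productsTemplate')]
```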

In the build definition, we will add an Azure File Copy step, which copies our linked templates to the blob container we just created, so update the settings of the step accordingly.

Set Azure File Copy step properties

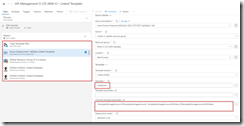
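The Azure File Copy step must upload every file the master template links to. The hypothetical helper below reads those file names out of the templateLink URIs, so they can be compared with the contents of the templates folder before copying. It assumes each URI contains the file name as a quoted literal such as `'/groups-users.json'`, as in the master template. The folder listing in the example is also hypothetical.

```python
import re

# Captures the quoted file literal in concat(..., '/<file>.json', ...).
LINKED_FILE = re.compile(r"'/([^'/]+\.json)'")


def linked_template_files(template: dict) -> set[str]:
    """Collect the linked template file names referenced by templateLink URIs."""
    files = set()
    for resource in template.get("resources", []):
        uri = resource.get("properties", {}).get("templateLink", {}).get("uri", "")
        files.update(LINKED_FILE.findall(uri))
    return files


def uri_for(file_name: str) -> str:
    # Builds the same expression shape that the master template uses.
    return ("[concat(parameters('TemplatesStorageAccount'), '/" + file_name
            + "', parameters('TemplatesStorageAccountSASToken'))]")


# Trimmed, hypothetical excerpt of the master template.
master = {"resources": [
    {"name": "groupsUsersTemplate",
     "properties": {"templateLink": {"uri": uri_for("groups-users.json")}}},
    {"name": "productsGroupsTemplate",
     "properties": {"templateLink": {"uri": uri_for("products-groups.json")}}},
]}

# Hypothetical local folder listing; a missing file would make its linked deployment fail.
available = {"groups-users.json"}
print(sorted(linked_template_files(master) - available))  # ['products-groups.json']
```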

In the output of this step, we get back the URI and SAS token of our container, which we need in our ARM template, so make sure to set the output variable names here.

Set output names so we can use these in our ARM template

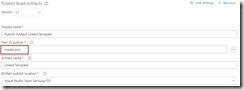

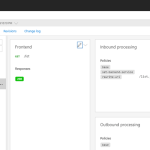

The other steps in the build pipeline are the same as described in the first post in this series, with two exceptions. First, we now use the variables from the Azure File Copy step in our Azure Resource Group Deployment step to set the location and SAS token of the storage account. Second, we include an extra Publish Build Artifacts step.

The complete build pipeline for validation
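The Azure Resource Group Deployment task presumably receives these values through its Override template parameters field. That field takes overrides in the form `-Name value`, and pipeline variables are referenced as `$(variableName)`. The small Python sketch below assembles such an override string for the two storage parameters. The variable names `storageUri` and `storageToken` are hypothetical and should match the output names set on the Azure File Copy step.

```python
def override_parameters(values: dict[str, str]) -> str:
    """Join template parameter overrides as '-Name value' pairs, separated by spaces.

    Assumes the values contain no spaces, so no quoting is applied.
    """
    return " ".join(f"-{name} {value}" for name, value in values.items())


# Hypothetical output variable names from the Azure File Copy step.
overrides = override_parameters({
    "TemplatesStorageAccount": "$(storageUri)",
    "TemplatesStorageAccountSASToken": "$(storageToken)",
})
print(overrides)
# -TemplatesStorageAccount $(storageUri) -TemplatesStorageAccountSASToken $(storageToken)
```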

Note that in the first Publish Build Artifacts step, we only publish the master.json file.

Only publish master template in first Publish Build Artifacts step

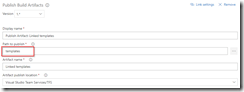

In the second Publish Build Artifacts step, we publish the linked templates.

Publish templates folder in second Publish Build Artifacts step

Once done, make sure to save and queue the build definition.

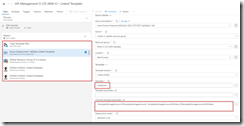

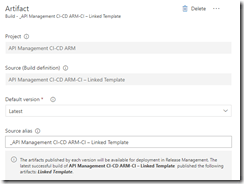

We will now create the deployment pipeline using a new release definition called API Management Linked Template. Again, this is almost the same as described in the first post of this series. The difference is that we now include the Azure File Copy step, just like in the build pipeline, to copy our linked templates to the templates container.

Use output from build pipeline as artifact

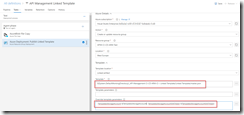

Do not forget to enable the continuous deployment trigger, so this deployment is triggered each time the build runs successfully. In the Test environment, add an Azure File Copy step to copy the linked template files to our templates container, and set the output variables for the URI and SAS token.

Set properties for Azure File Copy step

In the Azure Resource Group Deployment step, use the variables from the Azure File Copy step to set the location and SAS token of the container.

Use URI and SAS token from previous step

Clone the Test environment and set up the clone as the Production environment. Remember to include an approval step.

Approval should be given before deploying to production

We have now finished our complete CI/CD pipeline. If we push any changes to our repository, this triggers the build, which validates the ARM templates. The release then uses them to deploy or update our complete API Management instance, including all users, groups, products and APIs we defined in our templates.

API Management instance created including users, groups, products and APIs
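The order in which these linked deployments run is driven by their dependsOn entries. Resource Manager can deploy deployments that have no dependency between them in parallel. The Python sketch below uses the standard library graphlib module (Python 3.9+) to group the deployments from the master template into waves. Only the dependencies visible in the template excerpt are included. Deployments defined earlier in the template, such as instanceTemplate and productsTemplate, are treated as having no dependencies; this is an assumption of the sketch.

```python
from graphlib import TopologicalSorter

# dependsOn edges from the master template excerpt (deployment -> dependencies).
# Deployments that only appear as dependencies are treated as having none.
DEPENDS_ON = {
    "versionedAPIVersionSetTemplate": ["instanceTemplate"],
    "versionedAPIv1Template": ["versionedAPIVersionSetTemplate"],
    "versionedAPIv1OperationsTemplate": ["versionedAPIv1Template"],
    "versionedAPIv1PoliciesTemplate": ["versionedAPIv1Template", "versionedAPIv1OperationsTemplate"],
    "versionedAPIv2Template": ["versionedAPIVersionSetTemplate"],
    "versionedAPIv2OperationsTemplate": ["versionedAPIv2Template"],
    "versionedAPIv2PoliciesTemplate": ["versionedAPIv2Template", "versionedAPIv2OperationsTemplate"],
    "productsAPIsTemplate": ["productsTemplate", "versionedAPIv1Template", "versionedAPIv2Template"],
}


def deployment_waves(depends_on: dict[str, list[str]]) -> list[list[str]]:
    """Group deployments into waves: each deployment depends only on deployments
    from earlier waves, so members of one wave have no ordering constraint
    between them. Raises graphlib.CycleError on circular dependsOn chains."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(deployment_waves(DEPENDS_ON), start=1):
    print(number, wave)
```

With these entries, the sketch prints five waves. The first wave is instanceTemplate and productsTemplate; the last is the two versioned API policy deployments.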

by Gautam | Jun 3, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It's a weekly update on topics related to Integration: enterprise integration, robust and scalable messaging capabilities, and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to reach out to me with your feedback and questions.

He suggested some messaging considerations: