by community-syndication | Jul 24, 2014 | BizTalk Community Blogs via Syndication

The result

I regularly need to check whether my Azure virtual machines are turned off before leaving. Besides virtual machines, I also want to know which pricing level my web sites, Azure SQL Databases, etc. are running in, and whether I have an HDInsight cluster running.

So I created an Azure automation job that checks the subscriptions at 6pm every day.

Here is how it looks:

How to start

You can start using Azure automation by following the instructions available here:

http://azure.microsoft.com/en-us/documentation/articles/automation-create-runbook-from-samples/

Credentials

The script will need to get access to the subscriptions.

So I created a management certificate. One way to do so is explained in this blog post by Keith Mayer.

In my case, here is how my environment looks:

In Azure automation, the same certificate is declared in the assets:

The script

Here is the script itself:

    workflow Inventory
    {
        # Name of the certificate asset declared in Azure Automation
        $CertificateName = 'azure-admin.3-4.fr'

        # Get the Azure management certificate that is used to connect to this subscription
        $Certificate = Get-AutomationCertificate -Name $CertificateName
        if ($Certificate -eq $null)
        {
            throw "Could not retrieve '$CertificateName' certificate asset. Check that you created this first in the Automation service."
        }

        InlineScript
        {
            $Certificate = $using:Certificate

            # (subscription name, subscription id) pairs to inventory
            $subscriptions = (('Azdem169A44055X','0fa8xxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'),
                ('Azure bengui','b4edxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'),
                ('APVX','0ec1xxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'),
                ('demos-frazurete','4b57xxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx'))

            foreach ($subscription in $subscriptions)
            {
                $subscriptionName = $subscription[0]
                $subscriptionId = $subscription[1]
                echo "------- Subscription $subscriptionName ----------"

                # Set the Azure subscription configuration
                Set-AzureSubscription -SubscriptionName $subscriptionName -SubscriptionId $subscriptionId -Certificate $Certificate
                Select-AzureSubscription -Current $subscriptionName

                # Collect the virtual machines of every cloud service
                $vms = @()
                foreach ($s in Get-AzureService)
                {
                    $vms += Get-AzureVm -ServiceName $s.ServiceName
                }
                echo "--- Virtual Machines ---"
                $vms | select servicename, Name, PowerState | format-table

                $hclusters=Get-AzureHDInsightCluster
                echo "--- HDInsight clusters ---"
                $hclusters | format-table

                $webs=Get-AzureWebSite
                echo "--- Web Sites ---"
                $webs | select Name, SiteMode | sort Name | format-table

                # Collect the databases of every SQL Database server
                $dbs = @()
                foreach ($s in Get-AzureSqlDatabaseServer)
                {
                    $dbs += Get-AzureSqlDatabase -ServerName $s.ServerName
                }
                echo "--- SQL Databases ---"
                $dbs | select Name, Edition, MaxSizeinGb | format-table
            }
        }
    }

NB: I have obfuscated the subscription ids.

Make it your own

You can change the script for your own usage. You need to change the certificate name (mine is azure-admin.3-4.fr) and the names and IDs of your subscriptions.

Benjamin (@benjguin)

Blog Post by: Benjamin GUINEBERTIERE

by community-syndication | Jul 23, 2014 | BizTalk Community Blogs via Syndication

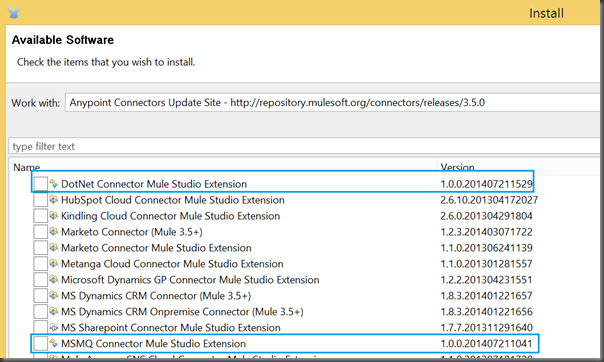

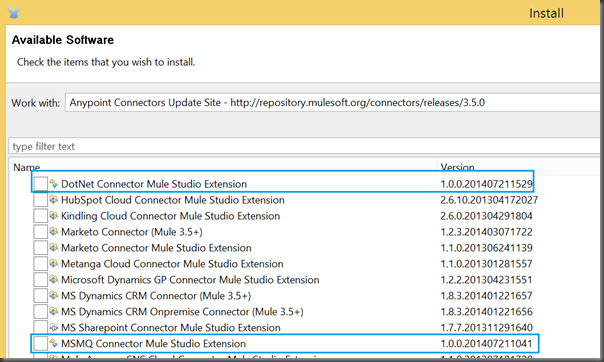

Those of you who have been keeping your AnyPoint Studio up to date may have been pleasantly surprised this week. The reason? MuleSoft released two important connectors for customers who leverage the Microsoft platform in their architectures. (You can read more about the official announcement here.)

More specifically, the two capabilities that were released this week include:

- .NET Connector

- MSMQ Connector

The MSMQ Connector is self-explanatory, but what is a .NET Connector? The .NET Connector allows .NET code to be called from a Mule Flow.

Why are these connectors important? For some, hating Microsoft is a sport, but the reality is that Microsoft continues to be very relevant in the Enterprise. In case you missed their recent earnings, they made $4.6 billion in net income for the past quarter. Yes, that is a ‘b’, and yes, that was only for a quarter of the year.

Many customers continue to use MSMQ. Sometimes these are custom .NET solutions that use MSMQ to add some durability to their messaging. Sometimes, but not always, they are legacy applications in maintenance mode. Other use cases include purchasing a COTS (Commercial Off The Shelf) product that has a dependency on MSMQ.

While the MSMQ Connector is a nice addition to the MuleSoft portfolio of Connectors, the .NET Connector is what really gets me excited. I have been using .NET since the 1.1 release and am very comfortable in Visual Studio.

Many organizations have standardized on building their custom applications in .NET. I have worked for such companies in the past, and for many of them, programming in another language is a showstopper. There may be concerns about re-training, interoperability and productivity as a result of introducing new programming languages. Some people may consider this fear mongering, but the reality is that if you have a strong Enterprise Architecture practice, you need to adhere to standards. While some people are willing to introduce many different languages into an environment, others are not.

Combining the AnyPoint Platform with the ability to write any required integration logic in .NET is very powerful for organizations that want to leverage their .NET skill sets.

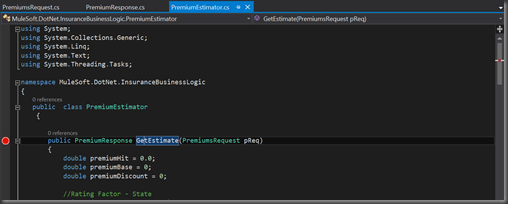

How to invoke .NET code from a Mule Flow?

There are many resources being made available as part of this release, so I don’t want to spoil that party (see the conclusion for more resources). But let me provide a sneak peek. For those of you who may not be familiar with MuleSoft, we have the ability to write Mule Flows. For my BizTalk friends, you can think of these much like a Workflow or an Orchestration. On the right-hand side we have our palette, from which we can drag Message Processors or Connectors onto our Mule Flow.

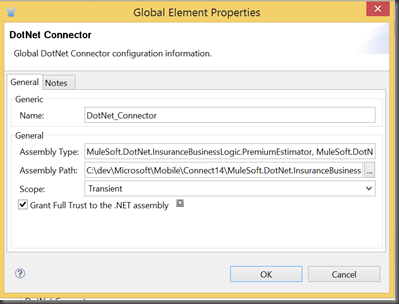

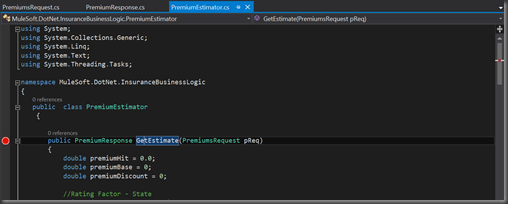

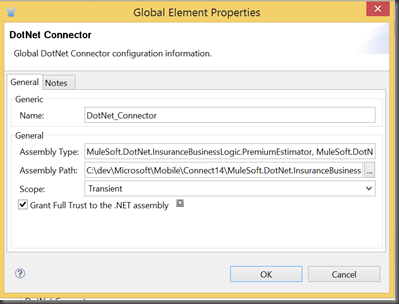

Once our Connector is on our Mule Flow, we can configure it. We need to provide an Assembly Type, Assembly Path (can be relative or absolute), a Scope and a Trust level. This configuration is considered to be a Global Element and we only have to configure this once per .NET assembly.

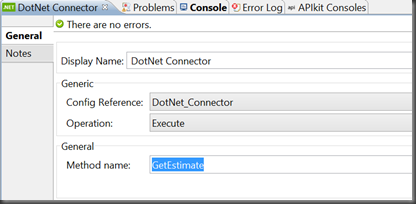

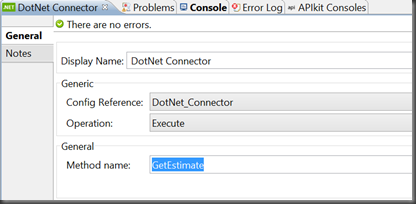

Next we provide the name of the .NET Method that we want to call.

From there it is business as usual from a .NET perspective. I can send and receive complex types, JSON, XML Documents etc.

Conclusion

Hopefully this gives you a little taste of what is to come. I have had the opportunity to work with many Beta customers on this functionality and am very excited about where we are and where we are headed. What we are releasing now is just the beginning.

Stay tuned for more details on both the MSMQ and .NET Connectors. Now that these bits are public I am really looking forward to sharing this information with both the Microsoft and MuleSoft communities.

Other resources:

- Press Release

- MuleSoft Blog Post including two short video demos and registration link for an upcoming Webinar.

BTW: If this sounds interesting to you, we are hiring!!!

by community-syndication | Jul 22, 2014 | BizTalk Community Blogs via Syndication

Integration with SQL Server is not always a walk in the park. Recently I had to integrate dynamic SQL scripts with Typed-Polling, and some of them resulted in empty result sets. In this post I use a sample scenario to explain the pitfalls of our solution and the lessons we learned.

by community-syndication | Jul 18, 2014 | BizTalk Community Blogs via Syndication

Axon Olympus announces the next training dates for its BizTalk Server courses for developers and administrators. In 2014, the next BizTalk Server for Developers training takes place on Monday 15, Tuesday 16, Monday 22 and Tuesday 23 September. This four-day training […]

Blog Post by: AxonOlympus

by community-syndication | Jul 18, 2014 | BizTalk Community Blogs via Syndication

It can be complicated to deploy changed .XSD Schemas, Maps and Orchestrations because BizTalk artifacts depend on each other. Changes in one BizTalk application may affect other BizTalk applications, so you usually deploy all your changes at a time when there is no “traffic”. This is because you first have to remove the Schemas, Maps and Orchestrations before you can deploy the changes. Other processes in BizTalk that use these artifacts also have to be stopped and cannot run. Deploying changes is especially difficult when you use a Canonical Data Model (CDM), because Maps and Orchestrations in other BizTalk applications can use the same Canonical Schemas.

One of the advantages of the ESB Toolkit is that you can easily deploy modified Maps and XSD schemas, because Orchestration-based and messaging-based services in an Itinerary don’t depend on Schemas and Maps.

- Change in a Map

  - Orchestrations are not bound to a specific map because transformations are performed by the MapHelper class. Therefore they don’t have to be removed when deploying a change.

- Change in a .XSD schema

  - Orchestrations are not bound to a specific .XSD schema because the Message Type used is an XmlDocument. Therefore they don’t have to be removed when deploying a change.

When Schemas and Maps are removed from BizTalk, the ESB can still process the messages and map to the other message types because the maps are still in the GAC. This can be very useful in a 24/7 environment where it’s crucial to be able to deploy changes without stopping other processes that do not depend on the changed Schema or Map.

Please note that deploying changes with the ESB Toolkit is very powerful so you have to be careful and know what you are doing!

See Also

For more information on deploying changed BizTalk artifacts in the ESB Toolkit see:

- Deploying changed Schemas and Maps with the ESB Toolkit with NO Downtime for other Processes

by community-syndication | Jul 15, 2014 | BizTalk Community Blogs via Syndication

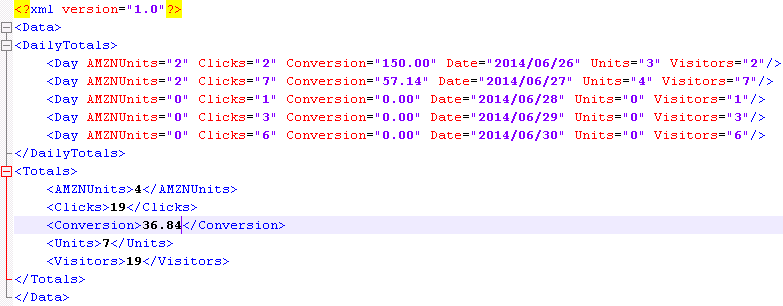

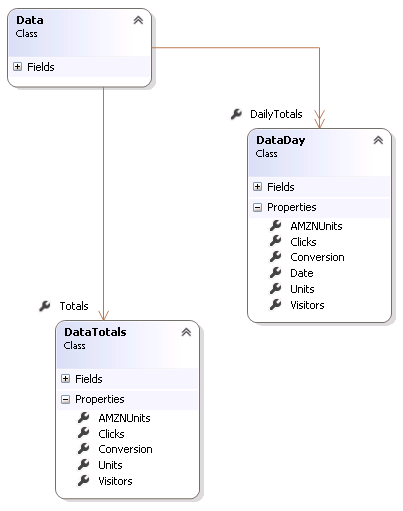

If you are an Amazon Associate, you can view a Daily Trend report for your account. It gives you the following information:

(Figure: Amazon Daily Trend report in XML format)

- AMZUnits (Items Ordered (Amazon)) – how many products have been ordered where Amazon is the seller

- Clicks (Clicks) – how many total visitors you have generated

- Conversion (Conversion) – how much you sold compared to how many visitors you had. Note that it uses the total units sold, so if one customer buys two products you have a Conversion rate of 200%

- Date (Date (yyyy/mm/dd)) – the day of the visit (only in the Day structure)

- Units (Total Items Ordered) – the total number of products that have been sold, both with Amazon as the seller and by third-party sellers that use Amazon’s platform

- Visitors (Unique Visitors) – the unique number of visitors. If you refer the same customer twice, it only counts as one unique visitor

In the above list, the first name is the name in the XML file and the name in parentheses is the name of the column in the web page report.
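To make the Conversion figure from the list above concrete, here is a minimal sketch that recomputes it from a unit count and a visitor count. The function name `conversion_rate` and the sample numbers are hypothetical, and the formula (total units divided by visitors) is only an assumption based on the description above; Amazon's exact calculation may differ.

```python
def conversion_rate(units: int, visitors: int) -> float:
    """Return units sold as a percentage of visitors (assumed formula)."""
    if visitors == 0:
        return 0.0  # no visitors: report 0% instead of dividing by zero
    return 100.0 * units / visitors


# One visitor who buys two products gives a rate above 100%.
print(conversion_rate(units=2, visitors=1))

# Hypothetical day with 50 visitors and 3 units sold.
print(conversion_rate(units=3, visitors=50))
```

Because the numerator counts units rather than buyers, the rate can exceed 100%, which is worth keeping in mind when comparing days.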

(Figure: Amazon daily trend report in diagram format)

So what can you use this data for? You can use it to see how many visitors you refer to Amazon on a daily basis. If you compare the Unique Visitors figure with the same measure in Google Analytics, you can see what your conversion rate to Amazon is, that is, how many of your users follow a link, ad or widget to Amazon to consider buying a product.

You should use this information to find out where on your pages links to Amazon are most successful at sending users there. Try placing your links, ads and widgets in different locations on your pages to find the optimal way to send customers to Amazon and get a nice referral rate.
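The comparison with Google Analytics described above can be sketched as follows. This is only an illustration: the function name `referral_rate`, the `daily` dictionary and all figures in it are made up, and the two visitor counts are assumed to have been copied by hand from the Amazon report and from Google Analytics.

```python
def referral_rate(amazon_visitors: int, site_visitors: int) -> float:
    """Percentage of site visitors who went on to Amazon."""
    if site_visitors == 0:
        return 0.0  # avoid dividing by zero on days without traffic
    return 100.0 * amazon_visitors / site_visitors


# Hypothetical figures: date -> (Amazon unique visitors, site unique visitors)
daily = {
    "2014/07/13": (40, 800),
    "2014/07/14": (66, 1100),
}

rates = {day: referral_rate(a, s) for day, (a, s) in daily.items()}
best_day = max(rates, key=rates.get)  # day with the highest referral rate

for day, rate in rates.items():
    print(f"{day}: {rate:.1f}% of visitors went on to Amazon")
print("Best day:", best_day)
```

Keying the dictionary by link placement instead of by date would give the same kind of comparison between different locations on a page.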

by community-syndication | Jul 14, 2014 | BizTalk Community Blogs via Syndication

BizTalk Services is far from the most mature cloud-based integration solution, but it’s a viable one for certain scenarios. I haven’t seen a whole lot of demos that show how to send data to SaaS endpoints, so I thought I’d spend some of my weekend trying to make that happen. In this blog post, I’m going […]

Blog Post by: Richard Seroter

by community-syndication | Jul 13, 2014 | BizTalk Community Blogs via Syndication

Today I’ve released version 2.0 of my Viasfora extension for Visual Studio, which supports VS2010, VS2012 and VS2013. Lots of work went into this release, not only to add some cool new features, but also to clean up the existing

Blog Post by: Tomas Restrepo

by community-syndication | Jul 12, 2014 | BizTalk Community Blogs via Syndication

A lesson I learnt (the hard way) while working on the BRE Pipeline Framework was that if you use one of the out of box disassembler pipeline components such as the XML/EDI/Flat File disassembler and you rely on them to promote context properties from the body of your message, you will find that those context […]

Blog Post by: Johann
