by community-syndication | Jan 14, 2010 | BizTalk Community Blogs via Syndication

Many times in BizTalk land we work with schemas that are nested and have several related schemas imported from URL locations.

When you include these schemas and deploy to Production, you find out that the BizTalk server doesn’t access the Internet directly, so all the schema imports fail.

You’ll then try to hand-edit the imports, downloading the referenced schemas and mashing up something that refers to local files instead of URL-based schemas. It may or may not work until the next update.

I recently came across a handy set of free tools that take all the pain out of working with schemas: Xml Help Line, which has Xml Schema Lightener and Xml Schema Flattener.

Another very handy tool not to leave home without.

Enjoy.

by community-syndication | Jan 14, 2010 | BizTalk Community Blogs via Syndication

If you are in Minneapolis on Thursday, January 21st, please join us for the Twin Cities Connected Systems User Group Meeting.

The meeting takes place at the Microsoft offices at 8300 Norman Center Drive, Bloomington, MN 55437. This month’s meeting time has changed, and we will be meeting from 5:00 to 6:30.

Ed Jones from RBA will be presenting on Implementing a Service Bus Architecture with BizTalk 2009 and the BizTalk ESB Toolkit 2.0: A Real World Example

Here is a write-up of what will be covered:

Although BizTalk Server offers much in terms of flexibility and extensibility through the implementation of Service Oriented Architectures, most BizTalk applications are developed in “hub-and-spoke” models that are tightly coupled to specific points of functionality. Entire business processes are often represented as orchestration. As such, when business processes change, orchestrations also need to change, often requiring the reconstruction and redeployment of entire BizTalk solutions.

One way to alleviate this pain is to avoid the use of a “hub-and-spoke” model altogether in favor of a Service Bus approach. The BizTalk ESB Toolkit helps accomplish this by making the creation of a true Service Bus easier. One feature of the toolkit, itineraries, allows us to create capabilities that are independent of each other and independent of specific processes. This “Composition of Capabilities” method is preferred over the point-to-point solutions used in many BizTalk applications, enabling more extensible and flexible business processes.

Our client required a system that would accept incoming shipment data in the form of flat-files in multiple formats, process that data through a series of resolutions, and then output the data in both its raw and processed form into an ERP system. Some data will be processed, while other data will be ignored. Over time it is expected that the various processes may change in size, scope, and sequential order. Our solution implements ESB Toolkit Itineraries to accomplish this composition of capabilities. We also use the Exception Management and other more traditional BizTalk functionalities such as Business Rules.

by community-syndication | Jan 14, 2010 | BizTalk Community Blogs via Syndication

This is part three of a twelve part series that introduces the features of WCF WebHttp Services in .NET 4. In this post we will cover:

- Creating operations for updating server state with the [WebInvoke] attribute

- Persisting changes with the ADO.NET Entity Framework in a web service scenario

Over the course of this blog post series, we are building a web service called TeamTask. TeamTask allows a team to track tasks assigned to members of the team. Because the code in a given blog post builds upon the code from the previous posts, the posts are intended to be read in-order.

Downloading the TeamTask Code

At the end of this blog post, you’ll find a link that will allow you to download the code for the current TeamTask Service as a compressed file. After extracting, you’ll find that it contains “Before” and “After” versions of the TeamTask solution. If you would like to follow along with the steps outlined in this post, download the code and open the “Before” solution in Visual Studio 2010. If you aren’t sure about a step, refer to the “After” version of the TeamTask solution.

Note: If you try running the sample code and see a Visual Studio Project Sample Loading Error that begins with “Assembly could not be loaded and will be ignored”, see here for troubleshooting.

Getting Visual Studio 2010

To follow along with this blog post series, you will need to have Microsoft Visual Studio 2010 and the full .NET 4 Framework installed on your machine. (The client profile of the .NET 4 Framework is not sufficient.) At the time of this posting, the Microsoft Visual Studio 2010 Ultimate Beta 2 is available for free download and there are numerous resources available regarding how to download and install, including this Channel 9 video.

Step 1: Adding an Operation to Update Tasks

In part one of this blog post series, we used the [WebGet] attribute to indicate that a given method was a service operation. By specifying a UriTemplate value on the [WebGet] attribute, we further indicated that the given service operation should handle all HTTP GET requests when the request URI matches the UriTemplate. However, we didn’t mention in part one that there is a second attribute used to specify service operations: the [WebInvoke] attribute.

The [WebInvoke] attribute is just like the [WebGet] attribute, except that with the [WebInvoke] attribute we are able to specify the HTTP method that the service operation should handle. With the [WebGet] attribute, the HTTP method is always implicitly an HTTP GET. Therefore, we’ll use the [WebInvoke] attribute to create a service operation for updating a given task.

- If you haven’t already done so, download and open the “Before” solution of the code attached to this blog post.

- Open the TeamTaskService.cs file in the code editor and copy the code below into the TeamTaskService class. You’ll need to add “using System.Data.Objects;” to the code file:

    [Description("Allows the details of a single task to be updated.")]
    [WebInvoke(UriTemplate = "Tasks/{id}", Method = "PUT")]
    public Task UpdateTask(string id, Task task)
    {
        // The id from the URI determines the id of the task
        int parsedId = int.Parse(id);
        task.Id = parsedId;

        using (TeamTaskObjectContext objectContext = new TeamTaskObjectContext())
        {
            objectContext.AttachTask(task); // We'll need to implement this!
            objectContext.SaveChanges();

            // Because we allow partial updates, we need to refresh from the DB
            objectContext.Refresh(RefreshMode.StoreWins, task);
        }

        return task;
    }

Notice that we used the [WebInvoke] attribute and specified the HTTP method to be “PUT”. Also notice that we included a [Description] attribute for the automatic help page as we did in part two of this blog post series.

The UpdateTask() method has two input parameters: id and task. The id parameter value will come from the URI of the request because of the matching {id} variable in the UriTemplate. The task parameter is not mapped to any variables in the UriTemplate, so its value will come from the body of the request.

In the implementation of the UpdateTask() method we need to parse the id parameter because it is of type string, but task ids are of type int. Obviously, this call to int.Parse() could throw an exception, but for now we’ll simply let the exception bubble up. In part five of this blog post series we’ll add proper exception handling to ensure that a more appropriate HTTP response is returned if the parsing fails.
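As a rough illustration of the parsing concern (and not the exception handling that part five of the series adds), the id could be checked up front with int.TryParse so that a bad value is detected without an exception. The helper name TryGetTaskId is hypothetical and exists only for this sketch:

```csharp
using System;

static class TaskIdParsing
{
    // Hypothetical helper: returns false instead of throwing
    // when the URI segment is not a valid integer.
    public static bool TryGetTaskId(string id, out int parsedId)
    {
        return int.TryParse(id, out parsedId);
    }

    static void Main()
    {
        int parsedId;
        Console.WriteLine(TryGetTaskId("42", out parsedId));   // True
        Console.WriteLine(TryGetTaskId("abc", out parsedId));  // False
    }
}
```

What the service should return when the check fails is left open here; the series covers that separately.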

One final subtlety to explain is the call to the Refresh() method, which will update the task instance with the current values from the database. It might seem odd that we have to make this call given that we just persisted the same task instance to the database. However, we are doing this because we will allow clients to make partial updates. The task supplied in the request may only have values for the properties that are to be updated. However, the task provided in the response should have all of the properties of the task, so we must get these property values from the database. A custom stored procedure could be used to optimize this, but we won’t explore that option here.

- If we build the TeamTask.Service project as it is now, it will fail because the TeamTaskObjectContext doesn’t contain a definition for the AttachTask() method. We’ll implement the AttachTask() method in the next step.

Step 2: Creating a mechanism for Attaching Tasks

As you may recall from part one of this blog post series, we are using the ADO.NET Entity Framework to move data between CLR instances and the database. The ADO.NET Entity Framework uses an ObjectContext to cache the CLR instances locally and track changes made to them. To persist these local changes to the database, a developer calls the SaveChanges() method on the ObjectContext.

However, with a web service like the TeamTask service, the instances that need to be persisted to the database usually aren’t being tracked by the ObjectContext because they’ve just been deserialized from the HTTP request. Therefore, we need to inform the ObjectContext of the instances that we want to persist to the database and indicate which properties on the instances have new values.

To do the work of informing the ObjectContext of our updated task, we’ll add an AttachTask() method to our custom ObjectContext, the TeamTaskObjectContext class. We’ll also add a GetModifiedProperties() method to the Task class that will be responsible for indicating which properties on the task have new values.

- Open the TeamTaskObjectContext.cs file in the code editor and copy the code below into the TeamTaskObjectContext class:

    public void AttachTask(Task task)
    {
        this.Tasks.Attach(task);

        ObjectStateEntry entry = this.ObjectStateManager.GetObjectStateEntry(task);

        // Flag only the properties the task reports as modified
        foreach (string propertyName in task.GetModifiedProperties())
        {
            entry.SetModifiedProperty(propertyName);
        }
    }

The AttachTask() method uses an ADO.NET Entity Framework class called the ObjectStateManager to get an ObjectStateEntry for the task, and then sets the modified properties on the entry. The list of modified properties is supplied by the task itself in the GetModifiedProperties() method, which we’ll need to implement next.

- Open the Task.cs file in the code editor and copy the code below into the Task class. You’ll need to add “using System.Collections.Generic;” to the code file:

    internal IEnumerable<string> GetModifiedProperties()
    {
        // Create the list with the required properties
        List<string> modifiedProperties = new List<string>() { "TaskStatusCode",
                                                               "OwnerUserName",
                                                               "Title" };

        // Add the optional properties
        if (!string.IsNullOrWhiteSpace(this.Description))
        {
            modifiedProperties.Add("Description");
        }

        if (this.CompletedOn.HasValue)
        {
            modifiedProperties.Add("CompletedOn");
        }

        return modifiedProperties;
    }

The GetModifiedProperties() method simply returns a list of property names. Notice that the Id and CreatedOn properties of the Task class will never be included in this list, indicating that these properties can never be updated. We obviously don’t want to update the Id value of a task since we are using it for identity purposes. A user might try to update the CreatedOn value of a given task by sending an HTTP PUT request with a CreatedOn value supplied, but the value will always be ignored.
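To make the effect of the modified-property list concrete, here is a minimal in-memory sketch. It does not use the Entity Framework at all: SketchTask and PartialUpdateSketch.Apply are hypothetical stand-ins that copy only the listed properties, which imitates an update that touches only the columns flagged as modified.

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-in for the Task entity; not the Entity Framework type from the post.
class SketchTask
{
    public int Id { get; set; }
    public string Title { get; set; }
    public string Description { get; set; }
    public DateTime CreatedOn { get; set; }

    // Same idea as GetModifiedProperties(): Id and CreatedOn are never listed.
    public IEnumerable<string> GetModifiedProperties()
    {
        List<string> modified = new List<string> { "Title" };
        if (!string.IsNullOrWhiteSpace(Description))
        {
            modified.Add("Description");
        }
        return modified;
    }
}

static class PartialUpdateSketch
{
    // Hypothetical helper: copies only the listed properties onto the stored instance.
    public static void Apply(SketchTask stored, SketchTask incoming)
    {
        foreach (string name in incoming.GetModifiedProperties())
        {
            var property = typeof(SketchTask).GetProperty(name);
            property.SetValue(stored, property.GetValue(incoming, null), null);
        }
    }

    static void Main()
    {
        var stored = new SketchTask { Id = 1, Title = "Old", Description = "Keep me", CreatedOn = new DateTime(2009, 11, 1) };
        var incoming = new SketchTask { Id = 1, Title = "New", CreatedOn = new DateTime(2009, 12, 1) };

        Apply(stored, incoming);

        Console.WriteLine(stored.Title);            // New
        Console.WriteLine(stored.Description);      // Keep me
        Console.WriteLine(stored.CreatedOn.Month);  // 11
    }
}
```

The incoming CreatedOn value is dropped because it is never listed, and the stored Description survives because the incoming one was empty.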

Helpful Tip: Web services like the TeamTask service are almost always a tier of an N-Tier application. If you’re interested in learning more about building N-Tier applications with WCF and the ADO.NET Entity Framework in .NET 4, Daniel Simmons from the Entity Framework Team has written some informative MSDN Magazine articles here and here.

Step 3: Updating Task Status with the HttpClient

Now that we’ve implemented the UpdateTask() operation, let’s write some client code to update the status of one of the tasks. We already covered the basics of using the HttpClient in part two of this blog post series, so we’ll go through the client code quickly.

- Open the Program.cs file of the TeamTask.Client project in the code editor and copy the following static method into the Program class:

    static Task GetTask(HttpClient client, int id)
    {
        Console.WriteLine("Getting task '{0}':", id);

        using (HttpResponseMessage response = client.Get("Tasks"))
        {
            response.EnsureStatusIsSuccessful();

            // Read the full list of tasks and pick the one with the requested id
            return response.Content.ReadAsDataContract<List<Task>>()
                                   .FirstOrDefault(task => task.Id == id);
        }
    }

The static GetTask() method is very similar to the client code we wrote in part two. The GetTask() method uses the ReadAsDataContract() extension method to get a typed list of tasks from the TeamTask service response and then returns the task from the list with the given id.

- Also copy the following static method into the Program class:

    static Task UpdateTask(HttpClient client, Task task)
    {
        Console.WriteLine("Updating task '{0}':", task.Id);
        Console.WriteLine();

        string updateUri = "Tasks/" + task.Id.ToString();

        // Serialize the task as the request body
        HttpContent content = HttpContentExtensions.CreateDataContract(task);

        using (HttpResponseMessage response = client.Put(updateUri, content))
        {
            response.EnsureStatusIsSuccessful();

            return response.Content.ReadAsDataContract<Task>();
        }
    }

The static UpdateTask() method is slightly more interesting than the GetTask() method since we have to provide a message body with the request. The Put() extension method for the HttpClient takes both a URI argument and a content argument. The URI includes the id of the task to be updated and the content is just a task instance that has been serialized using the static CreateDataContract() method.

- Copy this last static method into the Program class so that we can write out the state of a task to the console:

    static void WriteOutTask(Task task)
    {
        Console.WriteLine(" Id: {0}", task.Id);
        Console.WriteLine(" Title: {0}", task.Title);
        Console.WriteLine(" Status: {0}", task.Status);
        Console.WriteLine(" Created: {0}", task.CreatedOn.ToShortDateString());
        Console.WriteLine();
    }

- Now we’ll implement the Main() method to retrieve a task, update the status of the task, and then retrieve the task again to verify that the change was persisted to the database. Replace any code within the Main() method with the following code:

    using (HttpClient client = new HttpClient("http://localhost:8080/TeamTask/"))
    {
        // Getting task 1
        int taskId = 1;
        Task task1 = GetTask(client, taskId);
        WriteOutTask(task1);

        // Update task 1
        task1.Status = TaskStatus.InProgress;
        task1.CreatedOn = new DateTime(2009, 12, 1);
        Task task1Updated = UpdateTask(client, task1);
        WriteOutTask(task1Updated);

        // Get task 1 again to see the updated status
        Task task1Again = GetTask(client, taskId);
        WriteOutTask(task1Again);

        Console.ReadKey();

        // Return the DB to its original state
        task1.Status = TaskStatus.Completed;
        UpdateTask(client, task1);
    }

Notice that when we update the task1 status, we are also updating the task1 creation date. However, you’ll recall that in the GetModifiedProperties() method on the Task class, we never set the CreatedOn property as modified, so we shouldn’t see the new creation date in the response from the update or when we retrieve the task a second time.

- Start without debugging (Ctrl+F5) to get the TeamTask service running and then start the client by right-clicking on the TeamTask.Client project in the “Solution Explorer” window and selecting “Debug” -> “Start New Instance”. The console should contain the following output:

Notice that the task status is updated from “Completed” to “InProgress”, but that the creation date remains unchanged.

Next Steps: Automatic and Explicit Format Selection in WCF WebHttp Services

Our TeamTask service development is coming along nicely. The TeamTask service now supports querying for tasks, retrieving users, and partial updates of tasks. We also have a client that can programmatically access the service.

However, you may have noticed that all of the responses we’ve received from the TeamTask service have been in XML. In part four of this blog post series, we’ll see how easy it is to also generate JSON responses when we take a look at automatic and explicit format selection in WCF WebHttp Services.

Randall Tombaugh

Developer, WCF WebHttp Services

by community-syndication | Jan 14, 2010 | BizTalk Community Blogs via Syndication

I’ve added two new webcasts to CloudCasts looking at the two different parts of AppFabric (server and cloud).

Brian Loesgen takes a look at “Extending a BizTalk ESB On-Ramp into the Cloud” using the Azure platform AppFabric Service Bus.

I run through an “Introduction to Windows Server AppFabric Caching” using Windows Server AppFabric (formerly codenamed “Velocity”).

by community-syndication | Jan 13, 2010 | BizTalk Community Blogs via Syndication

Hi all

I just did a post on developing a custom cumulative functoid. You can find it here: http://blog.eliasen.dk/2010/01/13/DevelopingACustomCumulativeFunctoid.aspx

At the very end of the post I write that you should NEVER develop a custom referenced cumulative functoid but instead develop a custom inline cumulative functoid. Given the title of this blog post, probably by now you know why this is 🙂

When I developed my first cumulative functoid, I developed a referenced functoid,

since this is what I prefer. I tested it on an input and it worked fine. Then I deployed

it and threw 1023 copies of the same message through BizTalk at the same time. My

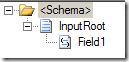

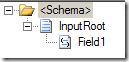

test solution had two very simple schemas:

Source

Source

schema.

Destination

Destination

schema.

The field “Field1” in the source schema has a maxOccurs = unbounded and the field

“Field1” in the destination schema has maxOccurs = 1.

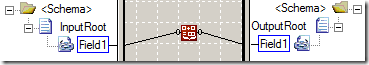

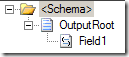

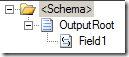

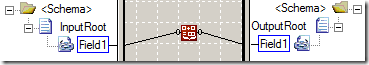

I then created a simple map between them:

The map merely utilizes my “Cumulative Comma” functoid (yes, I know the screenshot is of another functoid. Sorry about that 🙂 ) to get all occurrences of “Field1” in the source schema concatenated into one value separated by commas that is output to the “Field1” node in the output.

My 1023 test instances all had 10 instances of “Field1” in the input, so all output XML should have these ten values in a comma-separated list in the “Field1” element in the output schema.

Basically, what I found was that the outcome was quite unpredictable. Some of the output XML had a completely empty “Field1” element. Others had perhaps 42 values in their comma-separated list. About 42% of the output files had the right number of fields in the comma-separated list, but I don’t really trust that they are the right values.

Anyway, I looked at my code, and looked again, but couldn’t see anything wrong. So I thought I’d try with the cumulative functoids that ship with BizTalk. I replaced my functoid with the built-in “Cumulative Concatenate” functoid and did the same test. The output was just fine – nothing wrong. This baffled me a bit, but then I discovered that the cumulative functoids that ship with BizTalk are actually developed so they can be used as BOTH referenced functoids and inline functoids. Which one is used depends on the value of the “Script Type Precedence” property on the map. By default, inline C# has priority, so the built-in “Cumulative Concatenate” functoid wasn’t used as a referenced functoid as my own functoid was. I changed the property to have “External Assembly” as first priority and checked the generated XSLT to make sure that now it was using the functoid as a referenced functoid. It was. So I deployed and tested and guess what?

I got the same totally unpredictable output as I did with my own functoid!

So the conclusion is simple: the cumulative functoids that ship with BizTalk are NOT thread safe when used as referenced functoids.

As a matter of fact, I claim that it is impossible to write a thread safe referenced cumulative functoid, for reasons I will now explain.

When using a referenced cumulative functoid, the generated XSLT looks something like this:

    <xsl:template match="/s0:InputRoot">
      <ns0:OutputRoot>
        <xsl:variable name="var:v1" select="ScriptNS0:InitCumulativeConcat(0)" />
        <xsl:for-each select="/s0:InputRoot/Field1">
          <xsl:variable name="var:v2" select="ScriptNS0:AddToCumulativeConcat(0,string(./text()),&quot;1000&quot;)" />
        </xsl:for-each>
        <xsl:variable name="var:v3" select="ScriptNS0:GetCumulativeConcat(0)" />
        <Field1>
          <xsl:value-of select="$var:v3" />
        </Field1>
      </ns0:OutputRoot>
    </xsl:template>

As you can see, “InitCumulativeConcat” is called once, then “AddToCumulativeConcat” is called for each occurrence of “Field1” and finally “GetCumulativeConcat” is called and the value is inserted into the “Field1” node of the output.

In order to make sure the functoid can distinguish between instances of the functoid, there is an “index” parameter to all three methods, which the documentation states is unique for that instance. The issue here is that this is only true for instances within the same map and not across all instances of the map. As you can see in the XSLT, a value of “0” is used for the index parameter. If the functoid was used twice in the same map, a value of “1” would be hardcoded in the map for the second usage of the functoid and so on.

But if the map runs 1000 times simultaneously, they will all send a value of “0” to the functoid’s methods. And since the functoid is not instantiated for each map, but rather the same object is used across all the maps, there will be a whole lot of method calls with the value “0” for the index parameter without the functoid having a clue as to which instance of the map is calling it, basically mixing everything up good.
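The mix-up can be reproduced without BizTalk and without real threads. The sketch below is only an illustration under assumptions: SharedCumulativeSketch is a hypothetical stand-in for a referenced cumulative functoid (one shared object, state keyed only by the index), and the overlap of two map executions is written out by hand so the outcome is deterministic.

```csharp
using System;
using System.Collections;

// Stand-in for a referenced cumulative functoid: one instance, state keyed only by index.
class SharedCumulativeSketch
{
    private readonly Hashtable values = new Hashtable();

    public void Init(int index) { values[index] = ""; }
    public void Add(int index, string value) { values[index] = (string)values[index] + value + ","; }
    public string Get(int index) { return ((string)values[index]).TrimEnd(','); }
}

static class InterleavingDemo
{
    // Two map executions, A and B, both use index 0 and overlap in time.
    public static string RunInterleaved()
    {
        var shared = new SharedCumulativeSketch();
        shared.Init(0);        // map A starts
        shared.Add(0, "a1");   // map A adds its first value
        shared.Init(0);        // map B starts and wipes A's partial result
        shared.Add(0, "b1");   // map B adds its first value
        shared.Add(0, "a2");   // map A adds its second value
        return shared.Get(0);  // map A reads the shared slot
    }

    static void Main()
    {
        Console.WriteLine(RunInterleaved()); // b1,a2 (map A expected a1,a2)
    }
}
```

Adding a lock around each method would not help here, because the three calls of one map execution still cannot be told apart from those of another.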

The reason it works for inline functoids is, of course, that there is no object to be shared across map instances – it’s all inline for each map so here the index parameter is actually unique and things work.

And the reason I cannot find anyone on the internet having described this before me (this issue must have been there since BizTalk 2004) is probably that the default behavior of maps is to use the inline functionality if present, so probably no one has ever changed that property while also using a cumulative functoid under high load.

What is really funny is that the only example of developing a custom cumulative functoid I have found online is at MSDN: http://msdn.microsoft.com/en-us/library/aa561338(BTS.10).aspx and the example is actually a custom referenced cumulative functoid which doesn’t work, because it isn’t thread safe. Funny, eh?

So, to sum up:

Never ever develop a custom cumulative referenced functoid – use the inline versions instead. I will have to update the one at http://eebiztalkfunctoids.codeplex.com right away 🙂

Good night

—

eliasen

by community-syndication | Jan 13, 2010 | BizTalk Community Blogs via Syndication

Hi all

As many of you know, I am currently writing a book alongside some of the best of the community. Currently I am writing about developing functoids, and in doing this I have discovered that there are plenty of blog posts and helpful articles out there about developing functoids, but hardly any of them deal with developing cumulative functoids. So I thought the world might end soon if this wasn’t rectified. 🙂

Developing functoids really isn’t as hard as it might seem. As I have explained numerous times, I consider creating a good icon as the most difficult part of it 🙂

When developing custom functoids, you need to choose between developing a referenced functoid or an inline functoid. The difference is that a referenced functoid is a normal .NET assembly that is GAC’ed and called from the map at runtime, requiring it to be deployed on all BizTalk Servers that have a map that uses the functoid. Inline functoids, on the other hand, output a string containing the method, and this method is put inside the XSLT and called from there.

There are ups and downs to both – my preference usually goes towards the referenced functoid, not because of the reasons mentioned on MSDN, but simply because I can’t be bothered creating a method that outputs a string that is a method. It just looks ugly 🙂

So, in this blog post I will develop a custom cumulative functoid that generates a comma delimited string based on a reoccurring node as input.

First, the functionality that is needed for both referenced and inline functoids

All functoids must inherit from the BaseFunctoid class which is found in the Microsoft.BizTalk.BaseFunctoids namespace which is usually found in <InstallationFolder>\Developer Tools\Microsoft.BizTalk.BaseFunctoids.dll.

Usually a custom functoid consists of:

- A constructor that does almost all the work of setting up the functoid

- The method that should be called at runtime for a referenced functoid or a method that returns a string with a method for inline functoids.

- Resources for name, tooltip, description and icon

A custom cumulative functoid consists of the same but also has a data structure to keep aggregated values in and it has two more methods to specify. The reason a cumulative functoid has three methods instead of one is that the first is called to initialize the data structure, the second is called once for every occurrence of the input node and the third is called to retrieve the aggregated value.

To exemplify, I have created two very simple schemas:

Source schema.

Destination schema.

The field “Field1” in the source schema has maxOccurs = unbounded and the field “Field1” in the destination schema has maxOccurs = 1.

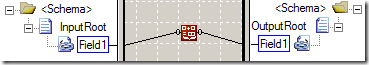

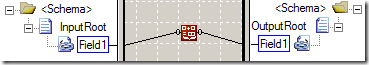

I have then created a simple map between them:

The map merely utilizes the built-in “Cumulative Concatenate” functoid to get all occurrences of “Field1” in the source schema concatenated into one value that is output to the “Field1” node in the output.

The generated XSLT looks something like this:

    <xsl:template match="/s0:InputRoot">
      <ns0:OutputRoot>
        <xsl:variable name="var:v1" select="ScriptNS0:InitCumulativeConcat(0)" />
        <xsl:for-each select="/s0:InputRoot/Field1">
          <xsl:variable name="var:v2" select="ScriptNS0:AddToCumulativeConcat(0,string(./text()),&quot;1000&quot;)" />
        </xsl:for-each>
        <xsl:variable name="var:v3" select="ScriptNS0:GetCumulativeConcat(0)" />
        <Field1>
          <xsl:value-of select="$var:v3" />
        </Field1>
      </ns0:OutputRoot>
    </xsl:template>

As you can see, “InitCumulativeConcat” is called once, then “AddToCumulativeConcat” is called for each occurrence of “Field1” and finally “GetCumulativeConcat” is called and the value is inserted into the “Field1” node of the output.

So, back to the code needed for all functoids. It is basically the same as for normal functoids:

    // Requires: using System.Reflection; using Microsoft.BizTalk.BaseFunctoids;
    public class CummulativeComma : BaseFunctoid
    {
        public CummulativeComma() : base()
        {
            this.ID = 7356;

            // NameOfResourceFile is assumed to be a string constant defined elsewhere in the class
            SetupResourceAssembly(GetType().Namespace + "." + NameOfResourceFile, Assembly.GetExecutingAssembly());

            SetName("Str_CummulativeComma_Name");
            SetTooltip("Str_CummulativeComma_Tooltip");
            SetDescription("Str_CummulativeComma_Description");
            SetBitmap("Bmp_CummulativeComma_Icon");

            this.SetMinParams(1);
            this.SetMaxParams(2); // the second parameter is the optional scoping input

            this.Category = FunctoidCategory.Cumulative;
            this.OutputConnectionType = ConnectionType.AllExceptRecord;

            AddInputConnectionType(ConnectionType.AllExceptRecord);
        }
    }

Basically, you need to:

- Set the ID of the functoid to a unique value that is greater than 6000. Values smaller than 6000 are reserved for BizTalk’s own functoids.

- Call SetupResourceAssembly to let the base class know what resource file to get resources from

- Call SetName, SetTooltip, SetDescription and SetBitmap to let the base class get the appropriate resources from the resource file. Remember to add the appropriate resources to the resource file.

- Call SetMinParams and SetMaxParams to determine how many parameters the functoid can have. They should be set to 1 and 2 respectively. The first is for the reoccurring node and the second is a scoping input.

- Set the category of the functoid to “Cumulative”

- Determine both the type of nodes/functoids the functoid can get input from and what it can output to.

I won’t describe these any more right now. They are explained in more detail in the book 🙂 And also, there are plenty of posts out there about these methods and properties.

Now for the functionality needed for a referenced functoid:

Besides what you have seen above, for a referenced functoid, the three methods must be written and referenced. This is done like this:

    SetExternalFunctionName(GetType().Assembly.FullName, GetType().FullName, "InitializeValue");
    SetExternalFunctionName2("AddValue");
    SetExternalFunctionName3("RetrieveFinalValue");

The above code must be in the constructor along with the rest. Now, all that is left is to write the code for those three methods, which can look something like this:

    // Requires: using System.Collections;
    private Hashtable myCumulativeArray = new Hashtable();

    // Called once per functoid usage to reset the aggregated value
    public string InitializeValue(int index)
    {
        myCumulativeArray[index] = "";
        return "";
    }

    // Called once for every occurrence of the input node
    public string AddValue(int index, string value, string scope)
    {
        string str = myCumulativeArray[index].ToString();
        str += value + ",";
        myCumulativeArray[index] = str;
        return "";
    }

    // Called once at the end; strips the trailing comma
    public string RetrieveFinalValue(int index)
    {
        string str = myCumulativeArray[index].ToString();
        if (str.Length > 0)
            return str.Substring(0, str.Length - 1);
        else
            return "";
    }

So, as you can see, a data structure (in this case a Hashtable) is declared to store the aggregated results and all three methods have an index parameter that is used to know how to index the data structure for each method call in case the functoid is used multiple times at the same time. The mapper will generate a unique index to be used.
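The order in which a map calls the three methods can be sketched outside BizTalk. The class below is a hypothetical plain copy of the three methods with no BaseFunctoid base class, so it runs anywhere; MapCallOrderDemo.Run is an assumed driver that plays the role of the generated XSLT for a single map execution.

```csharp
using System;
using System.Collections;

// Plain class with the same three methods; no BizTalk base class is involved.
class CumulativeCommaSketch
{
    private Hashtable myCumulativeArray = new Hashtable();

    public string InitializeValue(int index)
    {
        myCumulativeArray[index] = "";
        return "";
    }

    public string AddValue(int index, string value, string scope)
    {
        myCumulativeArray[index] = myCumulativeArray[index].ToString() + value + ",";
        return "";
    }

    public string RetrieveFinalValue(int index)
    {
        string str = myCumulativeArray[index].ToString();
        return str.Length > 0 ? str.Substring(0, str.Length - 1) : "";
    }
}

static class MapCallOrderDemo
{
    public static string Run(string[] field1Values)
    {
        var functoid = new CumulativeCommaSketch();
        functoid.InitializeValue(0);               // called once before the loop
        foreach (string value in field1Values)
        {
            functoid.AddValue(0, value, "1000");   // called once per Field1 occurrence
        }
        return functoid.RetrieveFinalValue(0);     // called once after the loop
    }

    static void Main()
    {
        Console.WriteLine(Run(new[] { "a", "b", "c" })); // a,b,c
    }
}
```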

Compile the project, copy the DLL to “<InstallationFolder>\Developer Tools\Mapper Extensions” and GAC the assembly and you are good to go. Just reset the toolbox to load the functoid.

Now for the functionality needed for an inline functoid:

The idea behind a cumulative inline functoid is the same as for a cumulative referenced functoid. You still need to specify three methods to use. For an inline functoid you need to generate the methods that will be included in the XSLT, though.

For the constructor, add the following lines of code:

    SetScriptGlobalBuffer(ScriptType.CSharp, GetGlobalScript());
    SetScriptBuffer(ScriptType.CSharp, GetInitScript(), 0);
    SetScriptBuffer(ScriptType.CSharp, GetAggScript(), 1);
    SetScriptBuffer(ScriptType.CSharp, GetFinalValueScript(), 2);

The first method call sets a script that will be global for the map. In this script you should initialize the needed data structure.

The second method call sets up the script that will initialize the data structure for a given instance of the functoid.

The third method call sets up the script that will add a value to the aggregated value in the data structure.

The fourth method call sets up the script that is used to retrieve the aggregated value.

As you can see, the second, third and fourth lines all call the same method. The last parameter is used to let the functoid know if it is the initialization, aggregating or retrieving method that is being set up.

So, what is left is to implement these four methods. The code for this can look quite ugly, since you need to build a string and output it, but it goes something like this:

    // Builds the script that returns the aggregated value without the trailing comma.
    private string GetFinalValueScript()
    {
        StringBuilder sb = new StringBuilder();
        sb.Append("\npublic string RetrieveFinalValue(int index)\n");
        sb.Append("{\n");
        sb.Append("\tstring str = myCumulativeArray[index].ToString();\n");
        sb.Append("\tif (str.Length > 0)\n");
        sb.Append("\t\treturn str.Substring(0, str.Length - 1);\n");
        sb.Append("\telse\n");
        sb.Append("\t\treturn \"\";\n");
        sb.Append("}\n");
        return sb.ToString();
    }

    // Builds the script that appends one value, followed by a comma, to the aggregate.
    private string GetAggScript()
    {
        StringBuilder sb = new StringBuilder();
        sb.Append("\npublic string AddValue(int index, string value, string scope)\n");
        sb.Append("{\n");
        sb.Append("\tstring str = myCumulativeArray[index].ToString();\n");
        sb.Append("\tstr += value + \",\";\n");
        sb.Append("\tmyCumulativeArray[index] = str;\n");
        sb.Append("\treturn \"\";\n");
        sb.Append("}\n");
        return sb.ToString();
    }

    // Builds the script that resets the aggregate for one instance of the functoid.
    private string GetInitScript()
    {
        StringBuilder sb = new StringBuilder();
        sb.Append("\npublic string InitializeValue(int index)\n");
        sb.Append("{\n");
        sb.Append("\tmyCumulativeArray[index] = \"\";\n");
        sb.Append("\treturn \"\";\n");
        sb.Append("}\n");
        return sb.ToString();
    }

    // Declares the data structure that is shared by all instances of the functoid in the map.
    private string GetGlobalScript()
    {
        return "private Hashtable myCumulativeArray = new Hashtable();";
    }

I suppose, by now you get why I prefer referenced functoids? 🙂 You need to write

the method anyway in order to check that it compiles. Wrapping it in methods that

output the strings is just plain ugly.

Conclusion

As you can hopefully see, developing a cumulative functoid really isn’t that much harder than developing a normal functoid; it just takes a couple more methods. I did mention that I usually prefer referenced functoids because of the ugliness of creating inline functoids. For cumulative functoids, however, you should NEVER use referenced functoids but instead only use inline functoids. The reason for this is quite good, actually, and you can see it in my next blog post, which will come in a day or two.

Thanks

—

eliasen

by community-syndication | Jan 12, 2010 | BizTalk Community Blogs via Syndication

A while ago I posted an article about an issue when the tracking information for BizTalk appeared not to be working and it was because the stream status was out of sync and information was not passing from the messagebox database to the tracking database.

http://geekswithblogs.net/michaelstephenson/archive/2008/10/30/126375.aspx

Since then I have written a small SQL script that checks whether they are in sync and, if not, can fix the issue. The script is below in case anyone needs it.

Note:

- I haven’t tested it on BizTalk 2009, just on 2006 and 2006 R2

- It isn’t a good idea to use this in a production environment; you might want to engage Microsoft support first

    /*
    This query will validate the stream status and check if it is in sync. If it isn't in sync then setting
    @IncludeUpdate to 1 will get the script to sync back up
    */
    Declare @IncludeUpdate bit
    Set @IncludeUpdate = 0 -- Set me to 1 to update the values

    Declare
        @TrackingValue0 bigint,
        @TrackingValue1 bigint,
        @TrackingValue2 bigint,
        @TrackingValue3 bigint,
        @StatusValue0 bigint,
        @StatusValue1 bigint,
        @StatusValue2 bigint,
        @StatusValue3 bigint

    Select @TrackingValue0 = Min(SeqNum) From BizTalkMsgBoxDb.dbo.TrackingData_1_0
    Select @TrackingValue1 = Min(SeqNum) From BizTalkMsgBoxDb.dbo.TrackingData_1_1
    Select @TrackingValue2 = Min(SeqNum) From BizTalkMsgBoxDb.dbo.TrackingData_1_2
    Select @TrackingValue3 = Min(SeqNum) From BizTalkMsgBoxDb.dbo.TrackingData_1_3

    Select @StatusValue0 = LastSeqNum From BizTalkDtaDb.dbo.TDDS_StreamStatus Where Destinationid = 1 And Partitionid = 0
    Select @StatusValue1 = LastSeqNum From BizTalkDtaDb.dbo.TDDS_StreamStatus Where Destinationid = 1 And Partitionid = 1
    Select @StatusValue2 = LastSeqNum From BizTalkDtaDb.dbo.TDDS_StreamStatus Where Destinationid = 1 And Partitionid = 2
    Select @StatusValue3 = LastSeqNum From BizTalkDtaDb.dbo.TDDS_StreamStatus Where Destinationid = 1 And Partitionid = 3

    IF(@TrackingValue0 != @StatusValue0)
    Begin
        Print 'The Stream Status for Partition 0 and Destination 1 is ' + Cast(@StatusValue0 as varchar) + ' when it should be ' + Cast(@TrackingValue0 as varchar)
    End

    IF(@TrackingValue1 != @StatusValue1)
    Begin
        Print 'The Stream Status for Partition 1 and Destination 1 is ' + Cast(@StatusValue1 as varchar) + ' when it should be ' + Cast(@TrackingValue1 as varchar)
    End

    IF(@TrackingValue2 != @StatusValue2)
    Begin
        Print 'The Stream Status for Partition 2 and Destination 1 is ' + Cast(@StatusValue2 as varchar) + ' when it should be ' + Cast(@TrackingValue2 as varchar)
    End

    IF(@TrackingValue3 != @StatusValue3)
    Begin
        Print 'The Stream Status for Partition 3 and Destination 1 is ' + Cast(@StatusValue3 as varchar) + ' when it should be ' + Cast(@TrackingValue3 as varchar)
    End

    If(@IncludeUpdate = 1)
    Begin
        Print 'The stream status is being synced'
        Update BizTalkDtaDb.dbo.TDDS_StreamStatus Set LastSeqNum = @TrackingValue0 - 1 Where Destinationid = 1 And Partitionid = 0
        Update BizTalkDtaDb.dbo.TDDS_StreamStatus Set LastSeqNum = @TrackingValue1 - 1 Where Destinationid = 1 And Partitionid = 1
        Update BizTalkDtaDb.dbo.TDDS_StreamStatus Set LastSeqNum = @TrackingValue2 - 1 Where Destinationid = 1 And Partitionid = 2
        Update BizTalkDtaDb.dbo.TDDS_StreamStatus Set LastSeqNum = @TrackingValue3 - 1 Where Destinationid = 1 And Partitionid = 3
        Print 'Stream status sync complete'
    End

by community-syndication | Jan 12, 2010 | BizTalk Community Blogs via Syndication

Previous Parts:

For more information:

Benchmark your BizTalk Server (Part 1)

How to install:

Benchmark your BizTalk Server (Part 2)

Drill Down on BizTalk Baseline Wizard

Now that you understand what the tool is, let’s look at how it can be used in a realistic situation. The tool provides a simple orchestration scenario and a messaging scenario which you can use to test your BizTalk Server deployment. In this post I am going to walk through:

- Running the tool against a default BizTalk environment, i.e. BizTalk and SQL Server installed on an operating system with the default configuration

- The techniques I use here in our Redmond performance labs to analyze and determine the bottleneck in a BizTalk Server solution. This includes looking at perfmon counters and SQL Dynamic Management Views

Scenarios

To refresh, here are the scenarios:

Scenario 1: Messaging

- Loadgen generates a new XML message and sends it over NetTCP

- A WCF-NetTCP Receive Location receives the XML document from Loadgen.

- The PassThruReceive pipeline performs no processing and the message is published by the EPM to the MessageBox.

- The WCF One-Way Send Port, which is the only subscriber to the message, retrieves the message from the MessageBox

- The PassThruTransmit pipeline provides no additional processing

- The message is delivered to the back end WCF service by the WCF NetTCP adapter

Scenario 2: Orchestration

- Loadgen generates a new XML message and sends it over NetTCP

- A WCF-NetTCP Receive Location receives the XML document from Loadgen.

- The XMLReceive pipeline performs no processing and the message is published by the EPM to the MessageBox.

- The message is delivered to a simple Orchestration which consists of a receive location and a send port

- The WCF One-Way Send Port, which is the only subscriber to the Orchestration message, retrieves the message from the MessageBox

- The PassThruTransmit pipeline provides no additional processing

- The message is delivered to the back end WCF service by the WCF NetTCP adapter

My Deployment

The tests for this blog post were done on the kit below. An EMC Clarion CX-960 storage array with 240 disk drives was used as our back end storage. We used a single SQL Server with 16 logical processors (cores) and 64 GB of memory. I was fortunate enough to have access to four Dell R805 machines, which are dual-processor quad-core with 32 GB of RAM. Two of these were used for BizTalk, one to generate load and one to host the simple back end WCF service. Please note that the specification of the hardware used here for the load generator and WCF service boxes is many times greater than is actually required; it was simply what was available to us in the lab at the time.

BizTalk Server 2009 and SQL Server were installed with their default configuration with the following exceptions:

1) TempDB log and data file were moved to a dedicated 4+4 RAID 10 LUN

2) The default data file location in the SQL setup was configured to be a dedicated 8+8 RAID 10 LUN

3) The default log file location for the SQL instance was configured to be a dedicated 8+8 RAID 10 LUN

4) For simplicity’s sake the recovery model for the BizTalk databases was set to simple. Because we do not use bulk-logged operations the level of logging is still the same, but we avoid having to clean up the log after every test run. This should not be done in a production environment

5) The BizTalk MessageBox data and log files were set to a size of 5GB and

Running The Test – Messaging Scenario

We installed the tool; see http://blogical.se/blogs/mikael/archive/2009/11/26/benchmark-your-biztalk-server-part-2.aspx for detailed instructions. The tool was installed on two servers so that I could run the load client on one server and the WCF service on another. It is of course possible to run both on the same server.

As shown in the table below the results that we got from the first run were 789 messages/sec across a ten minute test.

| Change | Configuration | Avg Processor Time % (BTS Receive) | Avg Processor Time % (BTS Send) | SQL Processor Time % | Received Msgs/sec | Sent Msgs/sec |
|---|---|---|---|---|---|---|
| Default BizTalk and SQL Configuration | 2 BizTalk (8 cores each), 1 SQL 16 CPU | 50 | 53 | 62 | 789 | 789 |

The first thing that I examine to determine whether a BizTalk environment is properly configured and balanced is whether the receive and send rates are approximately equal throughout the test, as they should be for this use case. This is measured using the BizTalk:Messaging\Documents Processed/Sec performance counter for the send side and the BizTalk:Messaging\Documents received/sec performance counter for the receive side. The perfmon screenshot below shows a subsection of the tests I ran; in my test the two rates were approximately equal throughout.

Typically the next step that I take is to examine the Message Delivery (outbound) and Message Publishing (inbound) throttling counters for all my BizTalk host instances. These can be found under the “BizTalk:Message Agent” perfmon object; there is an instance of each counter per host. Each BizTalk host can be in a number of different throttling states. The state shifts if throttling thresholds are breached, such as database size, an imbalance between send and receive rates, and numerous others. The link below provides full information on this. To summarize, a throttling state of 0 is good because it means that the engine is running at maximum speed. When looking at these counters it is important to check the throttling state duration as well: if the value for one host is small, it indicates that either the host was restarted or throttling occurred.

How BizTalk Server Implements Throttling

The screenshot below shows the rate counters (Documents Processed and Received per second) for my hosts as well as the throttling state and duration for each of the hosts. The BBW_RxHost throttling state duration is 550 compared to 9494 seconds for the BBW_TxHost. In this case it was because I restarted the receive host just before our test run started.

What was SQL Server Doing During This Time

It is now important to examine the SQL Server tier and understand what is affecting performance here. A good starting point is to look at the following counters

- Processor\% Processor Time

- Memory\Available Mbytes

- Logical Disk\Avg. Disk sec/Read

- Logical Disk\Avg. Disk sec/Write

If the processor utilization is high then examine the privileged and user mode time. High privileged mode time, typically defined as greater than 20%, can indicate that the box is I/O bound. On a 64-bit SQL Server running with more than 16 GB of RAM I would expect at least 500 MB of RAM to be available. When examining the logical disk counters it is important to look at each of the individual instances, not just the total instance. For SAN LUNs, if you are seeing response times of less than 5 milliseconds for reads or writes you are very likely hitting the SAN cache.
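The disk latency check can also be approximated from inside SQL Server. The query below is a minimal sketch and was not part of the original test run: it assumes SQL Server 2005 or later and reads `sys.dm_io_virtual_file_stats` to report the average read and write stall per database file. These figures are cumulative since the instance started, so they smooth over spikes that the perfmon counters would show.

```sql
-- Average I/O stall per file, in milliseconds, since the instance started.
-- A sketch to complement the Logical Disk perfmon counters.
SELECT DB_NAME(vfs.database_id) AS database_name,
       mf.physical_name,
       vfs.num_of_reads,
       vfs.num_of_writes,
       CASE WHEN vfs.num_of_reads = 0 THEN 0
            ELSE vfs.io_stall_read_ms * 1.0 / vfs.num_of_reads END AS avg_read_ms,
       CASE WHEN vfs.num_of_writes = 0 THEN 0
            ELSE vfs.io_stall_write_ms * 1.0 / vfs.num_of_writes END AS avg_write_ms
FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS vfs
JOIN sys.master_files AS mf
  ON mf.database_id = vfs.database_id
 AND mf.file_id = vfs.file_id
ORDER BY avg_write_ms DESC;
```

Files that sit well above the others here are reasonable candidates for a closer look with the per-instance Logical Disk counters.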

BizTalk Database and the Buffer Pool

The primary purpose of the buffer pool (also known as the buffer cache) in SQL Server is to reduce intensive disk I/O for reading and writing pages. When an update is made to a page, the page remains in the buffer pool until the buffer manager needs the buffer area to read in more data, which reduces future read and write operations. Modern 64-bit servers now regularly have 32 GB or more of RAM available. Because most BizTalk MessageBox databases are just several GB in size, the data pages for them can be kept in memory. The benefit is that under these conditions an insert or update to the MessageBox only requires a log write to physical disk; the data page is modified in buffer pool memory. The modified page, known as a dirty page, will be written to disk by the checkpoint process during its next execution. This flushing of dirty pages to disk is done to minimize the amount of traffic that needs to be replayed from the transaction log in the event of an unexpected or unplanned server restart. For more information on the Buffer Pool see http://msdn.microsoft.com/en-us/library/aa337525.aspx

SQL Server conveniently exposes information about the Buffer Pool through perfmon. From the SQL Server:Buffer Manager perfmon object look at the following counters:

- Buffer Cache Hit Ratio: A value of 100 means that all pages required for reads and writes could be retrieved from the Buffer Pool

- Lazy Writes/sec: The lazy writer is the mechanism that SQL Server uses to remove pages from the buffer pool to free up space. Activity on this counter indicates that the instance is under some memory pressure at the time

- Checkpoint Pages/sec: Use this counter to determine when a checkpoint is occurring. To ensure that your disk sub-system can keep up with the rate, check that Avg. Disk sec/Write does not go above 15 milliseconds when the checkpoint spike occurs. For further information see http://msdn.microsoft.com/en-us/library/ms189573.aspx
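If perfmon is not to hand, the same Buffer Manager counters can be read with a query. This is a sketch rather than part of the original analysis; it assumes the `sys.dm_os_performance_counters` DMV (SQL Server 2005 and later) and matches the object name with `LIKE` because named instances use a different prefix.

```sql
-- Buffer cache hit ratio: the DMV stores a numerator and a separate "base"
-- denominator, so the percentage has to be calculated.
SELECT 100.0 * r.cntr_value / NULLIF(b.cntr_value, 0) AS buffer_cache_hit_ratio_pct
FROM sys.dm_os_performance_counters AS r
JOIN sys.dm_os_performance_counters AS b
  ON b.object_name = r.object_name
WHERE r.object_name LIKE '%Buffer Manager%'
  AND r.counter_name = 'Buffer cache hit ratio'
  AND b.counter_name = 'Buffer cache hit ratio base';

-- The "/sec" counters are cumulative totals in this DMV. Sample twice and
-- divide the difference by the elapsed seconds to get a rate.
SELECT RTRIM(counter_name) AS counter_name, cntr_value
FROM sys.dm_os_performance_counters
WHERE object_name LIKE '%Buffer Manager%'
  AND counter_name IN ('Lazy writes/sec', 'Checkpoint pages/sec');
```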

SQL Server also provides the SQL Server:Memory Manager perfmon counter object. Target Server Memory (KB) indicates the amount of physical memory that SQL Server can allocate to this instance. In the screenshot below you can see that this is approximately 57 GB. Total Server Memory (KB) indicates the amount of memory that is currently allocated to the instance. In our case this is approximately 20 GB, which indicates that there is memory headroom on the box and pressure is not likely to occur on the buffer pool. This of course assumes that the value of the Memory\Available Mbytes counter is not low.

Note: It is expected behavior that the Total Server Memory counter will increase in value over time. SQL Server will not free up memory, as this is an expensive task and could degrade performance in a number of ways: it reduces the memory available to cache data and index pages in the buffer pool, which increases the I/O required and therefore increases response times, because I/O is slower than memory access. If the operating system indicates that there is global memory pressure on the server then SQL Server will release memory. The min and max server memory settings can be used to manually control the amount of memory that each SQL Server instance can address. I have found this most useful when running multiple instances on the same server. See http://msdn.microsoft.com/en-us/library/ms178067.aspx for more information.
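The memory figures discussed above can be pulled with two short queries. Treat this as a sketch: it assumes the `sys.dm_os_performance_counters` DMV and the `sys.configurations` catalog view, and it matches the counter names with `LIKE` because their exact spelling differs slightly between SQL Server versions.

```sql
-- Target vs. total server memory, converted from KB to GB.
SELECT RTRIM(counter_name) AS counter_name,
       cntr_value / 1024.0 / 1024.0 AS value_gb
FROM sys.dm_os_performance_counters
WHERE object_name LIKE '%Memory Manager%'
  AND (counter_name LIKE 'Target Server Memory%'
       OR counter_name LIKE 'Total Server Memory%');

-- Memory limits currently in effect for the instance.
SELECT name, value_in_use
FROM sys.configurations
WHERE name IN ('min server memory (MB)', 'max server memory (MB)');
```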

Since we now know that BizTalk Server is not resource bound or throttling and SQL Server does not appear to be resource bound by memory, disk or processor either we will delve deeper.

DMV’s – Delving Further Into SQL Server

SQL Server 2005 introduced Dynamic Management Views (DMVs), which provide instance-wide and database-specific state information. Explaining everything that DMVs offer is outside the scope of this article; indeed, there are several books written on this topic.

From our initial analysis using perfmon we do not appear to be resource bound. When executing concurrent high-throughput loads, SQL Server worker threads often have to wait, whether to gain access to a particular database object, for access to a physical resource, or for numerous other reasons. When this occurs SQL Server records the current wait type in the DMVs. This is useful for troubleshooting, as we will see.
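As a complement to looking at what is waiting right now, the cumulative wait statistics give a quick first impression of where time has gone. The query below is a sketch that was not part of the original investigation; it reads `sys.dm_os_wait_stats`, and the short list of excluded idle waits is only an illustrative assumption.

```sql
-- Cumulative waits since the instance started (or since the statistics
-- were last cleared), heaviest first.
SELECT TOP (10)
       wait_type,
       waiting_tasks_count,
       wait_time_ms,
       signal_wait_time_ms
FROM sys.dm_os_wait_stats
WHERE waiting_tasks_count > 0
  AND wait_type NOT IN ('SLEEP_TASK', 'WAITFOR', 'LAZYWRITER_SLEEP')
ORDER BY wait_time_ms DESC;
```

Because these totals span the life of the instance, they are best compared before and after a test run.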

The following query can be used to display the current waiting tasks.

    SELECT wt.session_id, wt.wait_type
        , er.last_wait_type AS last_wait_type
        , wt.wait_duration_ms
        , wt.blocking_session_id, wt.blocking_exec_context_id, wt.resource_description
    FROM sys.dm_os_waiting_tasks wt
    JOIN sys.dm_exec_sessions es ON wt.session_id = es.session_id
    JOIN sys.dm_exec_requests er ON wt.session_id = er.session_id
    WHERE es.is_user_process = 1
    AND wt.wait_type <> 'SLEEP_TASK'
    ORDER BY wt.wait_duration_ms desc

Script can be downloaded here: List Current Waiting Tasks.sql

The screenshot below is a snapshot from the first test run; the waits are ordered by wait duration. The WAITFOR can be ignored as it is defined explicitly in code and is hence intended. The second wait type is PAGELATCH_EX, a lightweight memory semaphore construct which is used to ensure that two schedulers do not update the same page within the buffer pool at the same time. The resource description column displays the value 8:1:176839. This refers to the page in the buffer pool that session 72 is attempting to take the exclusive latch on: the leading 8:1 indicates that it is database 8 (the MessageBox), file 1, and 176839 is the page number within that file.

    select DB_NAME(8) -- Use this to resolve the name of the database

The dbcc traceon command enables trace flag 3604, which sends DBCC output to the client session so that the results of DBCC Page are displayed. DBCC Page is then called with the following syntax:

    dbcc traceon (3604)
    dbcc page (8, 1, 176839, -1)

The page is m_type 3 which is a data page. The ObjectID that this page relates to is 1813581499. For more information on using DBCC Page see:

How To Use DBCC Page https://blogs.msdn.com/sqlserverstorageengine/archive/2006/06/10/625659.aspx

By using select OBJECT_NAME(1813581499) we can determine that the latch is waiting on the spool table. This makes sense because the spool table is shared across the BizTalk group: every time a message is inserted into the MessageBox a row needs to be added to the spool. Because the row size of the spool table is relatively small, multiple rows will be present on each page (up to 8 KB). On a large multi-CPU SQL Server this can therefore result in latch contention on these data pages.

I then proceeded to look at several of the pages in the list, going in descending order by wait time. The first few were all waiting for a shared or exclusive latch on a page from the spool table. The next page of interest was 8:1:258816; the output from DBCC Page is shown below in the screenshot. Pay attention to the m_type value, which in this case is 11. m_type 11 is significant because it refers to a PFS page. PFS stands for Page Free Space; this page holds allocation and free space information about the pages within the next PFS interval, which is approximately 64 MB. This structure is used to ensure that multiple schedulers (SQL Server’s term for a CPU) do not access the same page at the same time. On a system with many CPUs this can become a bottleneck. Because there is a PFS page per 64 MB chunk of each database file, a common best practice used to alleviate this is to create as many files per filegroup as there are schedulers (CPUs) in your system. This is what we will do next; while we are at it we will also fix one of the other common allocation bottlenecks within SQL Server by creating multiple TempDB data files.
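The page number itself already hints that 258816 is a PFS page: within a data file, PFS pages sit at page 1 and then at every 8088th page. The sketch below checks that arithmetic and then compares the number of online schedulers with the number of data files per database. It was not part of the original test and assumes `sys.dm_os_schedulers` and `sys.master_files` are available (SQL Server 2005 and later).

```sql
-- Remainder 0 confirms that page 258816 falls on a PFS boundary (8088 * 32).
-- 8088 pages of 8 KB is roughly 63.2 MB, the "approximately 64 MB" interval.
SELECT 258816 % 8088 AS remainder_pages,
       8088 * 8 / 1024.0 AS pfs_interval_mb;

-- Number of schedulers that can run user work.
SELECT COUNT(*) AS online_schedulers
FROM sys.dm_os_schedulers
WHERE status = 'VISIBLE ONLINE';

-- Number of data files per database, to compare against the scheduler count.
SELECT DB_NAME(database_id) AS database_name,
       COUNT(*) AS data_files
FROM sys.master_files
WHERE type_desc = 'ROWS'
GROUP BY database_id;
```

A database with a single data file on a server with many schedulers is where adding files is most likely to relieve allocation contention.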

For more information on allocation bottlenecks and PFS see:

What is an allocation bottleneck: http://blogs.msdn.com/sqlserverstorageengine/archive/2009/01/04/what-is-allocation-bottleneck.aspx

Under the covers: GAM, SGAM and PFS Pages:

http://blogs.msdn.com/sqlserverstorageengine/archive/2006/07/08/under-the-covers-gam-sgam-and-pfs-pages.aspx

Anatomy of a page:

http://www.sqlskills.com/blogs/paul/post/Inside-the-Storage-Engine-Anatomy-of-a-page.aspx

Easier Way To Troubleshoot Latch Contention

Before we move on to the next test run, where we will add more files to the MessageBox and TempDB databases to alleviate allocation issues, let’s explore another technique which some of my colleagues from our SQLCAT team shared with me. One of the drawbacks of drawing conclusions from point-in-time snapshots is that it can result in false positives or negatives. The attached script takes 600 samples over a period of approximately 60 seconds (the precise run time depends on the size of your buffer pool) from the sys.dm_os_waiting_tasks DMV and aggregates all this information together. In addition it uses a number of other DMVs, including sys.dm_os_buffer_descriptors, to display the actual object name that is the waiting resource for the latch. This information is aggregated in a temporary table; by adjusting the number of samples that the query uses you can gain an understanding of what your MessageBox is waiting for when under load. Now let’s look at the output that I got in the screenshot below.

Script can be downloaded here: Modified^_LatestFindWaitResource.sql

You can see that the majority of wait time was actually spent on our tracking data tables. This means that by disabling tracking, through the global tracking option in the adm_group table in the management database, I can reduce the latching in my system. To minimize the changes I make between test runs, let’s change the number of MessageBox and TempDB files first.

I’ll do that in Part 4 🙂

by community-syndication | Jan 12, 2010 | BizTalk Community Blogs via Syndication

During the fall of 2009 I teamed up with fellow MVP Mikael Håkansson and delivered internal training to Logica employees in Sweden. The training was based in part upon existing training material from Microsoft, but with presentation material that we made especially for this course. We have since developed this material even further.

Being an MCT, I was contacted by one of the major certified learning providers in Sweden, which had learned through mutual contacts of what we had done. I have the pleasure of starting this year by announcing that an agreement has been reached with AddSkills to deliver this course to the public.

So if you are in my part of the world (a.k.a. Sweden) you now have an additional choice to get quality BizTalk Server training that covers the latest version. See available sign-up details and dates here. Delivery of custom on or off site courses based on the same material is also possible.

by community-syndication | Jan 12, 2010 | BizTalk Community Blogs via Syndication

Tonight’s meeting of the San Diego .NET user group’s Connected Systems SIG will be all about Windows Workflow 4.0.

If you’re in San Diego and not on the mailing list for the Connected Systems SIG, you should head over to http://www.SanDiegoDotNet.com and sign up in order to ensure you get these notifications.

Meeting will be at Microsoft La Jolla office, pizza at 6:00, meeting starts at 6:30. Hope to see you there!

———————————————————————

Connected Systems SIG Meeting

Tuesday, January 12th

Bob Schmidt, Principal Program Manager

on

Windows Workflow Foundation 4.0,

Top to Bottom

Topic

Windows Workflow Foundation (WF) 4.0, part of the new version of the .NET Framework, lets you author and run programs called "workflows." Workflows offer some interesting capabilities to the application developer – they can be designed visually (though they can also be developed in code), they can make use of traditional imperative control flow (e.g. Sequence, If, While) as well as non-traditional (e.g. Flowchart, Parallel, Pick) and custom control flow, and they can run robustly for arbitrarily long periods of time (while residing in a database and not in memory when idle awaiting input).

Whether you used WF in .NET 3.x or not, whether you consider yourself a WF4 novice or expert, this discussion will have something interesting for you. And, if you are using WF today, I’d love to discuss any feedback you have.

Speaker

Bob Schmidt is a Principal Program Manager at Microsoft on the team that ships WF. He’s mostly worked on BizTalk and WF since joining Microsoft in 2002. Prior to Microsoft, Bob worked as a developer (mostly Java) after graduating from Stanford with BS & MS degrees in computer science.