by community-syndication | Jan 18, 2010 | BizTalk Community Blogs via Syndication

This is part four of a twelve-part series that introduces the features of WCF WebHttp Services in .NET 4. In this post we will cover:

- Configuring automatic format selection for a WCF WebHttp Service

- Setting the response format explicitly in code per request

Over the course of this blog post series, we are building a web service called TeamTask. TeamTask allows a team to track tasks assigned to members of the team. Because the code in a given blog post builds upon the code from the previous posts, the posts are intended to be read in-order.

Downloading the TeamTask Code

At the end of this blog post, you’ll find a link that will allow you to download the code for the current TeamTask Service as a compressed file. After extracting, you’ll find that it contains “Before” and “After” versions of the TeamTask solution. If you would like to follow along with the steps outlined in this post, download the code and open the “Before” solution in Visual Studio 2010. If you aren’t sure about a step, refer to the “After” version of the TeamTask solution.

Note: If you try running the sample code and see a Visual Studio Project Sample Loading Error that begins with “Assembly could not be loaded and will be ignored”, see here for troubleshooting.

Getting Visual Studio 2010

To follow along with this blog post series, you will need to have Microsoft Visual Studio 2010 and the full .NET 4 Framework installed on your machine. (The client profile of the .NET 4 Framework is not sufficient.) At the time of this posting, the Microsoft Visual Studio 2010 Ultimate Beta 2 is available for free download and there are numerous resources available regarding how to download and install, including this Channel 9 video.

Step 1: Handling Requests in JSON

So far in this blog post series we’ve been sending and receiving HTTP messages with XML content only. But WCF WebHttp Services also provides first-class support for JSON. In fact, by default a WCF WebHttp Service will handle requests in JSON automatically. Just to prove this, we’ll have our client send an HTTP PUT request to the TeamTask service in JSON.

If you haven’t already done so, download and open the “Before” solution of the code attached to this blog post.

Open the Program.cs file from the TeamTask.Client project in the code editor and add the following static method to the Program class:

    static void WriteOutContent(HttpContent content)
    {
        content.LoadIntoBuffer();
        Console.WriteLine(content.ReadAsString());
        Console.WriteLine();
    }

This static WriteOutContent() method will allow us to easily write out the body of a request or response message to the console. The content is streamed by default, so we call the LoadIntoBuffer() method to give us the ability to read the content multiple times.

Change the UpdateTask() method to serialize the request to JSON instead of XML by replacing:

HttpContent content = HttpContentExtensions.CreateDataContract(task);

With:

HttpContent content = HttpContentExtensions.CreateJsonDataContract(task);

Add a call to the WriteOutContent() method after the request content is created so that we can verify the request really is in JSON like so:

    HttpContent content = HttpContentExtensions.CreateJsonDataContract(task);
    Console.WriteLine("Request:");
    WriteOutContent(content);
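To get a feel for what a JSON request body like this looks like, here is a minimal sketch that serializes a data contract type with the DataContractJsonSerializer from System.Runtime.Serialization.Json. It assumes that CreateJsonDataContract() relies on this serializer, which this post doesn’t confirm. SampleTask and its members are hypothetical stand-ins for the real Task type from the download.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;
using System.Text;

// Hypothetical stand-in for the TeamTask "Task" type; the real class
// lives in the sample download and may have different members.
[DataContract]
public class SampleTask
{
    [DataMember] public int Id { get; set; }
    [DataMember] public string Title { get; set; }
    [DataMember] public string OwnerUserName { get; set; }
}

public static class JsonSketch
{
    // Serialize any data contract type to a JSON string.
    public static string ToJson<T>(T value)
    {
        var serializer = new DataContractJsonSerializer(typeof(T));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, value);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }

    // Read a JSON string back into an instance of the data contract type.
    public static T FromJson<T>(string json)
    {
        var serializer = new DataContractJsonSerializer(typeof(T));
        using (var stream = new MemoryStream(Encoding.UTF8.GetBytes(json)))
        {
            return (T)serializer.ReadObject(stream);
        }
    }
}
```

Calling JsonSketch.ToJson() on a SampleTask instance and writing the result to the console gives a compact JSON object with one name/value pair per data member.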

Open the Web.Config file from the TeamTask.Service project and change the value of the “automaticFormatSelectionEnabled” setting from “true” to “false” like so:

    <webHttpEndpoint>
      <standardEndpoint name="" helpEnabled="true"
                        automaticFormatSelectionEnabled="false"/>
    </webHttpEndpoint>

Note: The default value for automaticFormatSelectionEnabled is actually false, so we could simply delete the attribute altogether. However, we’ll be re-enabling it soon so it is easier to keep it around.

Start without debugging (Ctrl+F5) to get the TeamTask service running and then start the client by right clicking on the TeamTask.Client project in the “Solution Explorer” window and selecting “Debug”->”Start New Instance”. The console should contain the following output:

Notice that the HTTP PUT request is in JSON, and yet the TeamTask service handles it without any problem.

Note: Throughout this blog post, we’ll be running both the service and the client projects together a number of times. You might find it convenient to configure the TeamTask solution to automatically launch the server and then the client when you press (F5). To do this, right click on the TeamTask solution in the Solution Explorer (Ctrl+W,S) and select “Properties” from the context menu that appears. In the Solution Properties window, select the “Multiple startup projects” radio button. Make sure that the TeamTask.Service project is the top project in the list by selecting it and clicking on the up arrow button. Click on the “Action” cell for the TeamTask.Service project and select “Start without Debugging”. Then click on the “Action” cell for the TeamTask.Client project and select “Start”.

Helpful Tip: It might seem counterintuitive that we set “automaticFormatSelectionEnabled” to false when we were explicitly trying to demonstrate how our service could automatically handle a JSON request. You would probably assume that “automaticFormatSelectionEnabled” needs to be set to “true” to handle a JSON request. But this isn’t the case, as we saw when we executed the client. The “automaticFormatSelectionEnabled” setting applies to outgoing responses only. All incoming requests in either XML or JSON are automatically handled, and in fact, there is no way to disable this behavior to accept only one format or the other.

Step 2: Setting the Response Format Automatically

In step one, we wrote the request message body to the console to show that it was in fact JSON. But what about the response message? We didn’t write it out to the console, but it happens to be in XML. You can see for yourself by adding a call to the WriteOutContent() method and passing in the response content.

Of course this is odd: a client sends JSON and gets XML back. This occurs because the default response format of the UpdateTask() operation is XML. You specify the default response format for an operation using the ResponseFormat property on the [WebGet] or [WebInvoke] attributes, but the ResponseFormat property isn’t explicitly set on the UpdateTask() operation, so it defaults to XML.

We could set the ResponseFormat for the UpdateTask() operation to JSON, but that wouldn’t really solve our problem. If a client were to send a request in XML, it would get back a response in JSON. What we really want is for the WCF infrastructure to be smart enough to choose the correct response format given the request itself. And in fact, this is exactly the behavior the new automatic format selection feature in WCF WebHttp Services provides.

Enabling automatic format selection is as easy as setting “automaticFormatSelectionEnabled” to “true” in the Web.config for the service. With the online templates it is set to “true” by default, which is why we had to set it to “false” in step one.

When automatic format selection is enabled, the WCF infrastructure will try to determine the appropriate response format using:

1. The value of the HTTP Accept header of the request. If the request doesn’t provide an Accept header, or the Accept header doesn’t list an appropriate format, the WCF infrastructure will try to use the next option.

2. The content-type of the request. If the request doesn’t have a body, or if the content-type of the request isn’t an appropriate format, the WCF infrastructure will use the last option.

3. The default response format for the operation.
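The order of precedence above can be sketched as a small function. This is a simplified illustration of the three steps as described here, not the actual WCF implementation: the ResponseFormat enum, the FormatSelectionSketch class and the list of recognized media types are all assumptions, and Accept header quality values (q=) are ignored.

```csharp
using System;

// Hypothetical enum for this sketch; WCF itself uses WebMessageFormat.
public enum ResponseFormat { Xml, Json }

public static class FormatSelectionSketch
{
    // Map one media type to a format, or null when it is neither XML nor JSON.
    // The recognized media types are an assumption made for this sketch.
    static ResponseFormat? FromMediaType(string mediaType)
    {
        if (string.IsNullOrEmpty(mediaType)) return null;

        // Drop parameters such as ";q=0.9" or ";charset=utf-8".
        string type = mediaType.Split(';')[0].Trim().ToLowerInvariant();
        if (type == "application/json" || type == "text/json") return ResponseFormat.Json;
        if (type == "application/xml" || type == "text/xml") return ResponseFormat.Xml;
        return null;
    }

    public static ResponseFormat Choose(
        string acceptHeader, string requestContentType, ResponseFormat operationDefault)
    {
        // 1. Accept header: take the first listed media type that we recognize.
        if (!string.IsNullOrEmpty(acceptHeader))
        {
            foreach (string item in acceptHeader.Split(','))
            {
                ResponseFormat? fromAccept = FromMediaType(item);
                if (fromAccept.HasValue) return fromAccept.Value;
            }
        }

        // 2. Content-type of the request body, if there is one.
        ResponseFormat? fromBody = FromMediaType(requestContentType);
        if (fromBody.HasValue) return fromBody.Value;

        // 3. Fall back to the default response format of the operation.
        return operationDefault;
    }
}
```

With this sketch, a GET request with an Accept header of “application/json” selects JSON, a PUT request with a JSON body and no Accept header also selects JSON, and a request that provides neither ends up with the operation default.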

Now we’ll re-enable automatic format selection and demonstrate how it works by sending an HTTP GET request with an Accept header indicating a preference for JSON. We’ll also update our client to write out the response from the HTTP PUT request and show that the response format matches that of the request (even without an Accept header).

Open the Web.Config file from the TeamTask.Service project and change the value of the “automaticFormatSelectionEnabled” setting from “false” to “true” like so:

    <webHttpEndpoint>
      <standardEndpoint name="" helpEnabled="true"
                        automaticFormatSelectionEnabled="true"/>
    </webHttpEndpoint>

Open the Program.cs file from the TeamTask.Client project in the code editor and replace the static GetTask() method implementation with the implementation below. You’ll also need to add “using System.Runtime.Serialization.Json;” to the code file:

    static Task GetTask(HttpClient client, int id)
    {
        Console.WriteLine("Getting task '{0}':", id);
        using (HttpRequestMessage request =
            new HttpRequestMessage("GET", "Tasks"))
        {
            request.Headers.Accept.AddString("application/json");
            using (HttpResponseMessage response = client.Send(request))
            {
                response.EnsureStatusIsSuccessful();
                WriteOutContent(response.Content);
                return response.Content.ReadAsJsonDataContract<List<Task>>()
                    .FirstOrDefault(task => task.Id == id);
            }
        }
    }

This implementation of the static GetTask() method is very similar to the client code we wrote in part two of this blog post series. However, we are now instantiating an HttpRequestMessage so that we can set the Accept header to “application/json”. We are also using the ReadAsJsonDataContract() extension method instead of the ReadAsDataContract() extension method since the content is now JSON instead of XML.

If you try to build you’ll find that the ReadAsJsonDataContract() extension method has a dependency, so in the “Solution Explorer” window, right click on the TeamTask.Client project and select “Add Reference”. In the “Add Reference” window, select the “.NET” tab and choose the System.ServiceModel.Web assembly.

In the UpdateTask() method, also write the response out to the console and replace the ReadAsDataContract() call with a ReadAsJsonDataContract() call like so:

    using (HttpResponseMessage response = client.Put(updateUri, content))
    {
        response.EnsureStatusIsSuccessful();
        Console.WriteLine("Response:");
        WriteOutContent(response.Content);
        return response.Content.ReadAsJsonDataContract<Task>();
    }

Start without debugging (Ctrl+F5) to get the TeamTask service running and then start the client by right clicking on the TeamTask.Client project in the “Solution Explorer” window and selecting “Debug”->”Start New Instance”. The console should contain the following output:

Notice that JSON was used for the responses of both the GET and PUT requests. JSON was used with the GET request because of the HTTP Accept header value, and it was used for the PUT request because the request itself used JSON.

Step 3: Setting the Response Format Explicitly

Automatic format selection is a powerful new feature, but it may not be the solution for all scenarios. For example, it won’t work when a service wants to support clients that aren’t able to set the HTTP Accept header when they send GET requests. Therefore WCF WebHttp Services also allows the response format to be set explicitly in code.

We’ll demonstrate how to explicitly set the response format by adding a “format” query string parameter to the GetTasks() operation of the TeamTask service. The value of this query string parameter will determine the response format.

Open the TeamTaskService.cs file in the code editor.

Add a “format” query string parameter to the UriTemplate of the GetTasks() operation along with a matching method parameter like so:

    [WebGet(UriTemplate =
        "Tasks?skip={skip}&top={top}&owner={userName}&format={format}")]
    public List<Task> GetTasks(int skip, int top, string userName, string format)

Within the body of the GetTasks() operation, add the following code:

    // Set the format from the format query variable if it is available
    if (string.Equals("json", format, StringComparison.OrdinalIgnoreCase))
    {
        WebOperationContext.Current.OutgoingResponse.Format =
            WebMessageFormat.Json;
    }

Setting the response format explicitly is as simple as setting the Format property on the OutgoingResponse of the WebOperationContext. When the “format” query string is equal to “json”, the response format is set to JSON, otherwise the default value of XML is used.
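One possible variation is to also accept an explicit “xml” value, so that a client can ask for XML even when automatic format selection would otherwise pick JSON. The sketch below shows only the parsing step. The WireFormat enum and the FormatQuerySketch class are hypothetical names for this illustration; in the service, the result would be mapped to WebMessageFormat before being assigned to OutgoingResponse.Format.

```csharp
using System;

// Hypothetical enum for this sketch; the service itself would use WebMessageFormat.
public enum WireFormat { Xml, Json }

public static class FormatQuerySketch
{
    // Returns the format requested by the "format" query value, or null when
    // the value is missing or unrecognized. A null result means "leave the
    // response format alone", so automatic selection or the operation
    // default still applies.
    public static WireFormat? Parse(string format)
    {
        if (string.Equals("json", format, StringComparison.OrdinalIgnoreCase))
        {
            return WireFormat.Json;
        }
        if (string.Equals("xml", format, StringComparison.OrdinalIgnoreCase))
        {
            return WireFormat.Xml;
        }
        return null;
    }
}
```

Returning a nullable value rather than defaulting to XML keeps the explicit override separate from the fallback behavior, which stays with the WCF infrastructure.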

Start without debugging (Ctrl+F5) and use the browser of your choice to navigate to http://localhost:8080/TeamTask/Tasks. In Internet Explorer, the list of tasks will be displayed as shown below:

Now, send another request with the browser, but add the “format” query string parameter to the URI like so: http://localhost:8080/TeamTask/Tasks?format=json. In Internet Explorer, you’ll be presented with a dialog box asking if you want to download the response as a file. Save the file and open it in Notepad, and it will contain the tasks in the JSON format as shown below:

Helpful Tip: While WCF WebHttp Services offers first-class support for XML and JSON, it also provides a more advanced API for returning plain text, Atom feeds, or any other possible content-type. We’ll cover this feature in part eight of this blog post series.

Next Steps: Error Handling with WebFaultException

So far in our implementation of the TeamTask service, we haven’t provided any real error handling. In part five of this blog post series we’ll rectify this and use the new WebFaultException class to communicate why a request has failed by providing an appropriate HTTP status code.

Randall Tombaugh

Developer, WCF WebHttp Services

by community-syndication | Jan 16, 2010 | BizTalk Community Blogs via Syndication

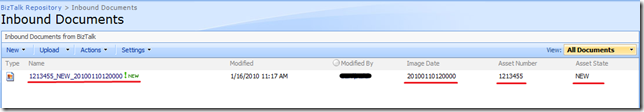

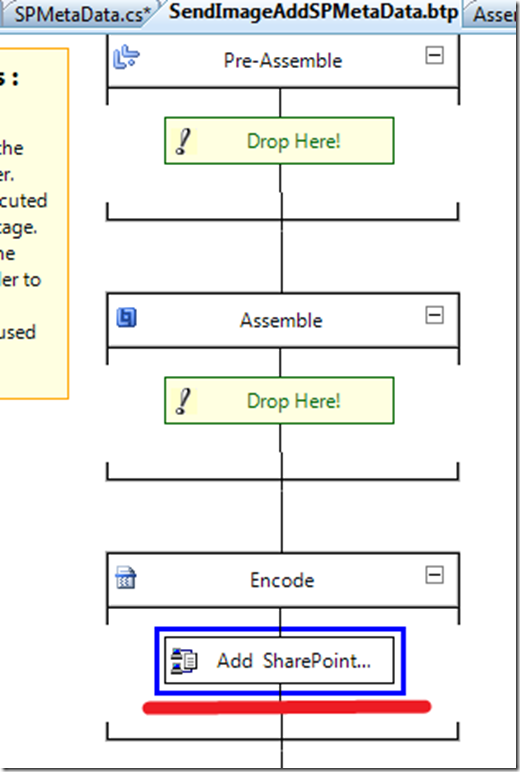

I had a requirement where I needed to upload images to a SharePoint library and use the information from the image filename to populate columns within the document library. Performing these tasks within an orchestration is pretty trivial as you can set context properties in a Message Assignment shape that will drive the behavior of the WSS Adapter. I couldn’t justify performing these tasks in an Orchestration since it would involve an extra hop to the MessageBox in order for Orchestration to be invoked.

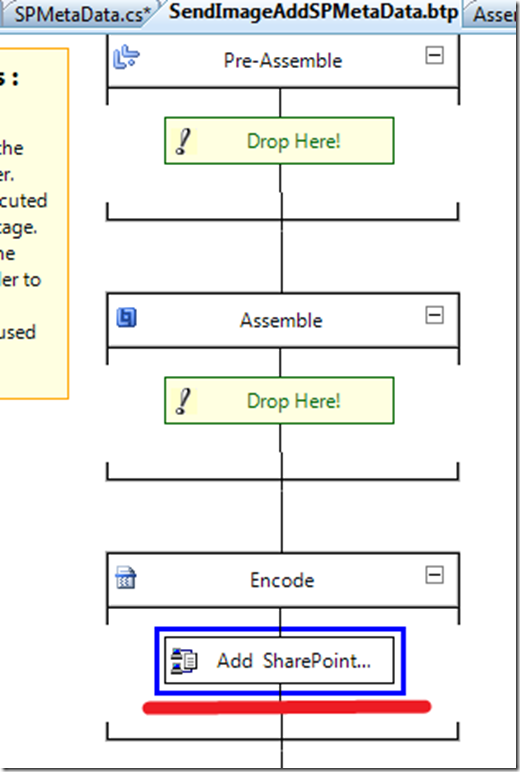

Another option is to do all of this work in a Pipeline component. I can create a Send Port subscription that would allow me to access the context properties from the message received and update them to include the WSS properties, all within a pipeline. This would allow the solution to become a pure messaging solution and I can save an extra MessageBox hop. When building this solution, I referenced Saravana Kumar’s white paper on Pipeline Components. It came in handy, especially in the area of creating the design-time pipeline properties.

Within the Pipeline Component, the first thing that I wanted to do was retrieve the source file name. I am able to do this by reading the “ReceivedFileName” property from the File Adapter’s context properties. I then wanted to clean this file name up since the value of this property includes the entire UNC path: \\Servery\RootFolder\SubFolder\filename.jpg. I have written some utility methods to parse the file name from this long string.
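As an illustration of this kind of utility method, here is a minimal sketch that takes everything after the last path separator. It is not the code from the download: the PathSketch class is a hypothetical name, and the body is only one plausible way to write a GetFileNameFromPath() helper. On Windows, System.IO.Path.GetFileName() would normally do the same job.

```csharp
using System;

public static class PathSketch
{
    // Return the part of the path after the last '\' or '/' separator.
    // An empty or null path yields an empty string rather than an exception.
    public static string GetFileNameFromPath(string fullPath)
    {
        if (string.IsNullOrEmpty(fullPath))
        {
            return string.Empty;
        }

        int lastSeparator = fullPath.LastIndexOfAny(new[] { '\\', '/' });
        return lastSeparator < 0
            ? fullPath                              // no separator: already a bare file name
            : fullPath.Substring(lastSeparator + 1);
    }
}
```

Handling both separators explicitly keeps the behavior the same regardless of the platform the code is compiled and tested on.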

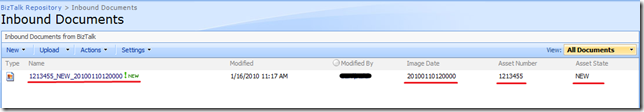

There are three parts of the image file name (1213455_NEW_20100110120000.jpg) that I am particularly interested in: an Asset Number, an Asset State and the Date/Time that the image was taken. The scenario itself is a field worker who needs to capture an image of an asset and indicate the Asset Number and its state, i.e. New/Old. This information is then captured in the name of the image. Since it is an image, there is no other reasonable way to store this meta data outside the file name. This is the reason why I need to use a pipeline component: standard WSS adapter functionality can use XPath statements to extract data from the message payload and populate a SharePoint document library column, but an image has no XML payload to query.
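Splitting a file name like this into its three parts could look like the sketch below. The AssetImageInfo and FileNameSketch types are hypothetical, and the timestamp layout (yyyyMMddHHmmss) is an assumption based on the sample file name; the utility methods in the download may work differently.

```csharp
using System;
using System.Globalization;

// Hypothetical container for the three values encoded in the file name.
public class AssetImageInfo
{
    public string AssetNumber { get; set; }
    public string AssetState { get; set; }
    public DateTime TakenAt { get; set; }
}

public static class FileNameSketch
{
    // Parse a name of the form AssetNumber_State_Timestamp.ext.
    public static AssetImageInfo Parse(string fileName)
    {
        // Remove the extension, if there is one.
        int dot = fileName.LastIndexOf('.');
        string stem = dot < 0 ? fileName : fileName.Substring(0, dot);

        string[] parts = stem.Split('_');
        if (parts.Length != 3)
        {
            throw new FormatException(
                "Expected AssetNumber_State_Timestamp but got: " + fileName);
        }

        return new AssetImageInfo
        {
            AssetNumber = parts[0],
            AssetState = parts[1],
            // Assumed layout: four-digit year, then month, day, hour, minute, second.
            TakenAt = DateTime.ParseExact(
                parts[2], "yyyyMMddHHmmss", CultureInfo.InvariantCulture)
        };
    }
}
```

Failing fast with a FormatException on an unexpected name makes it easier to route badly named files to an error location instead of writing incomplete column values to SharePoint.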

Once I have captured this meta data and massaged it to my liking, I want to then provide this context data to the WSS Adapter. The WSS adapter is a little different than most of the other adapters in that you can populate an XML document and push that into the ConfigPropertiesXml context property. The document structure itself is a flat XML structure that uses a “key-value” convention.

<ConfigPropertiesXml><PropertyName1>Column 1 Name</PropertyName1><PropertySource1>Column 1 Value</PropertySource1><PropertyName2>Column 2 Name</PropertyName2><PropertySource2>Column 2 Value</PropertySource2><PropertyName3>Column 3 Name</PropertyName3><PropertySource3>Column 3 Value</PropertySource3></ConfigPropertiesXml>
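One way to produce this flat structure without hand-building the string is LINQ to XML, which also escapes characters such as & and < in column names and values. This is a sketch under that assumption and not the approach used in the Execute method of this post; ConfigPropertiesSketch is a hypothetical class name.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

public static class ConfigPropertiesSketch
{
    // Build the flat PropertyNameN / PropertySourceN document from an
    // ordered list of (column name, column value) pairs.
    public static string Build(IList<KeyValuePair<string, string>> columns)
    {
        var root = new XElement("ConfigPropertiesXml");
        for (int i = 0; i < columns.Count; i++)
        {
            int n = i + 1; // element suffixes are 1-based
            root.Add(new XElement("PropertyName" + n, columns[i].Key));
            root.Add(new XElement("PropertySource" + n, columns[i].Value));
        }

        // DisableFormatting keeps the result on one line with no added whitespace.
        return root.ToString(SaveOptions.DisableFormatting);
    }
}
```

The resulting string can then be written to the ConfigPropertiesXml context property in the same way as a hand-formatted one.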

I also want to populate the WSS Adapter’s Filename context property. I can achieve this by the following statement:

    pInMsg.Context.Write("Filename",
        "http://schemas.microsoft.com/BizTalk/2006/WindowsSharePointServices-properties", ImageFileName);

Below is my Execute method, in my pipeline component, where all of this processing takes place. You can download the entire sample here. This code is at a proof of concept stage so you will want to evaluate your own error handling requirements. Use at your own risk.

    public IBaseMessage Execute(IPipelineContext pContext, IBaseMessage pInMsg)
    {
        // Get the received file name (including its path) from the File adapter's context
        string FilePath = pInMsg.Context.Read("ReceivedFileName",
            "http://schemas.microsoft.com/BizTalk/2003/file-properties") as string;

        // Strip the path from the file name
        string ImageFileName = GetFileNameFromPath(FilePath);

        // Utility methods to parse the meta data out of the file name
        string msgAssetNumber = GetAssetNumberFromFileName(ImageFileName);
        string msgAssetState = GetAssetStateFromFileName(ImageFileName);
        string msgImageDateTime = GetImageDateFromFileName(ImageFileName);

        // Write the desired file name to the context of the WSS Adapter
        pInMsg.Context.Write("Filename",
            "http://schemas.microsoft.com/BizTalk/2006/WindowsSharePointServices-properties", ImageFileName);

        // Populate the Document Library columns with values from the file name.
        // The column names come from the design-time pipeline properties.
        string strWSSConfigPropertiesXml = string.Format(
            "<ConfigPropertiesXml><PropertyName1>{0}</PropertyName1><PropertySource1>{1}</PropertySource1>" +
            "<PropertyName2>{2}</PropertyName2><PropertySource2>{3}</PropertySource2>" +
            "<PropertyName3>{4}</PropertyName3><PropertySource3>{5}</PropertySource3></ConfigPropertiesXml>",
            this.AssetNumber, msgAssetNumber, this.AssetState, msgAssetState, this.ImageDateTime, msgImageDateTime);

        pInMsg.Context.Write("ConfigPropertiesXml",
            "http://schemas.microsoft.com/BizTalk/2006/WindowsSharePointServices-properties", strWSSConfigPropertiesXml);

        return pInMsg;
    }

A feature that I wanted to provide is the ability to provide the SharePoint column names at configuration time. I didn’t want to have to compile code if the SharePoint team wanted to change a column name. So this is driven from the pipeline configuration editor. The values that you provide (on the right hand side) will set the column names in the ConfigPropertiesXml property that is established at run time.

If you provide a value in this configuration that does not correspond to a column in SharePoint, you will get a warning/error on the Send Port.

Event Type: Warning

Event Source: BizTalk Server 2009

Event Category: (1)

Event ID: 5743

Date: 1/10/2010

Time: 7:38:19 PM

User: N/A

Computer:

Description:

The adapter failed to transmit message going to send port "SendDocToSharePoint" with URL "wss://SERVER/sites/BizTalk%%20Repository/Inbound%%20Documents". It will be retransmitted after the retry interval specified for this Send Port. Details:"The Windows SharePoint Services adapter Web service encountered an error accessing column "Missing Column" in document library http://SERVER/sites/BizTalk%%20Repository/Inbound%%20Documents. The column does not exist. The following error was encountered: "Value does not fall within the expected range.".

This error was triggered by the Windows SharePoint Services receive location or send port with URI wss://SERVER/sites/BizTalk Repository/Inbound Documents.

Windows SharePoint Services adapter event ID: 12295".

For more information, see Help and Support Center at http://go.microsoft.com/fwlink/events.asp.

The end result is that I can use information contained in the file name to populate meta data columns in SharePoint without an Orchestration.

Also note, I have built this pipeline component so that it can be used in a Receive Pipeline or a Send Pipeline by including the CATID_Decoder and CATID_Encoder attributes.

    [ComponentCategory(CategoryTypes.CATID_PipelineComponent)]
    [ComponentCategory(CategoryTypes.CATID_Decoder)]
    [ComponentCategory(CategoryTypes.CATID_Encoder)]
    [System.Runtime.InteropServices.Guid("9d0e4103-4cce-4536-83fa-4a5040674ad6")]
    public class AddSharePointMetaData : IBaseComponent, IComponentUI, IComponent, IPersistPropertyBag

by community-syndication | Jan 15, 2010 | BizTalk Community Blogs via Syndication

[In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu]

This is the second in a series of blog posts I’m doing on the upcoming ASP.NET MVC 2 release. This blog post covers some of the validation improvements coming with ASP.NET MVC 2.

ASP.NET MVC 2 Validation

Validating user-input and enforcing business rules/logic is a core requirement of most web applications. ASP.NET MVC 2 includes a bunch of new features that make validating user input and enforcing validation logic on models/viewmodels significantly easier. These features are designed so that the validation logic is always enforced on the server, and can optionally also be enforced on the client via JavaScript. The validation infrastructure and features in ASP.NET MVC 2 are designed so that:

1) Developers can easily take advantage of the DataAnnotation validation support built-into the .NET Framework. DataAnnotations provide a really easy way to declaratively add validation rules to objects and properties with minimal code.

2) Developers can optionally integrate either their own validation engine, or take advantage of existing validation frameworks like Castle Validator or the EntLib Validation Library. ASP.NET MVC 2’s validation features are designed to make it easy to plug-in any type of validation architecture – while still taking advantage of the new ASP.NET MVC 2 validation infrastructure (including client-side validation, model binding validation, etc).

This means that enabling validation is really easy for common application scenarios, while at the same time still remaining very flexible for more advanced ones.

Enabling Validation with ASP.NET MVC 2 and DataAnnotations

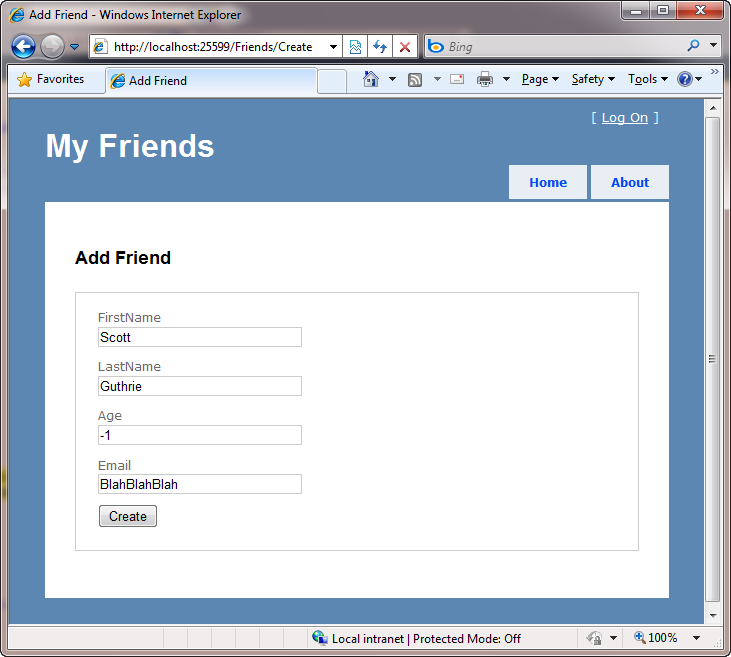

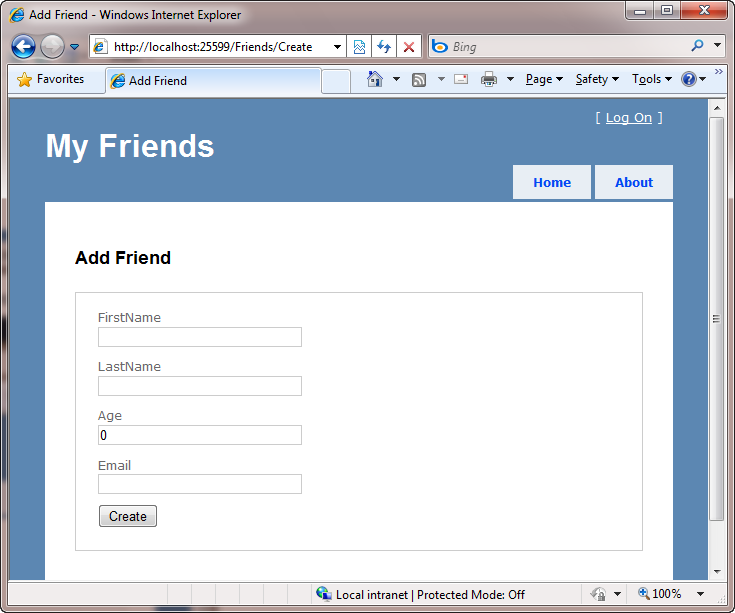

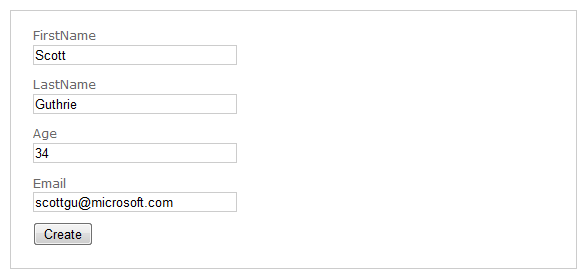

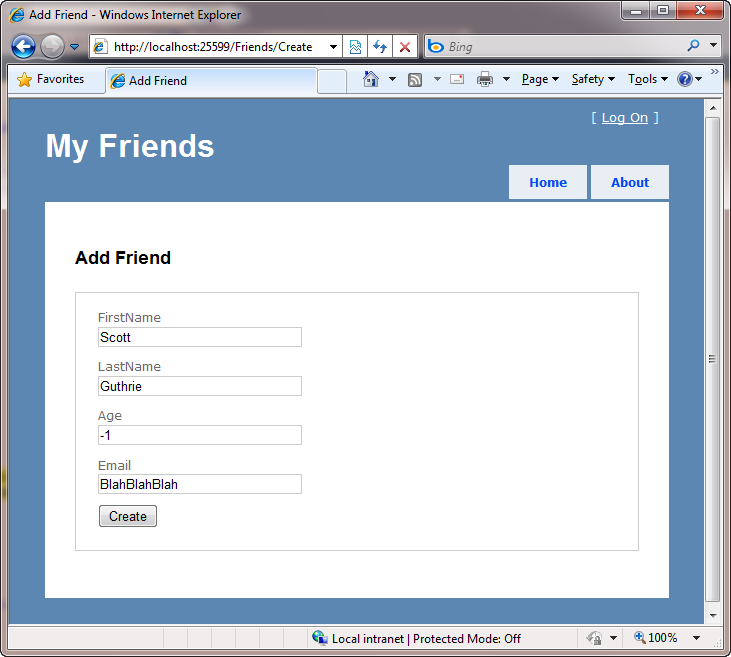

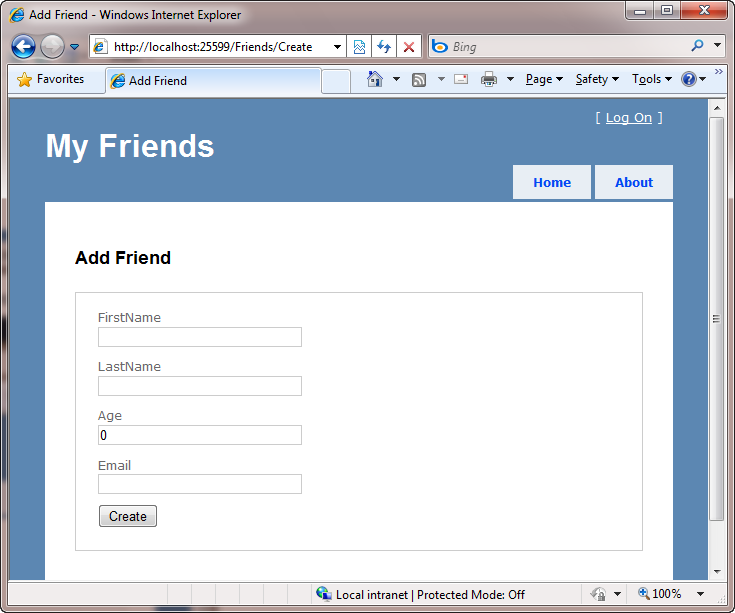

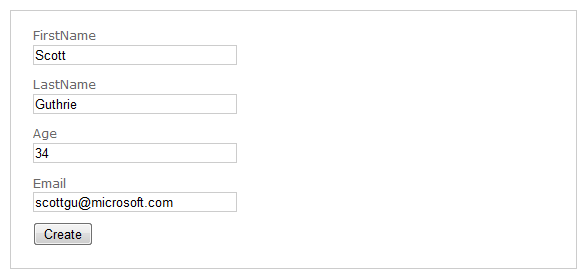

Let’s walk through a simple CRUD scenario with ASP.NET MVC 2 that takes advantage of the new built-in DataAnnotation validation support. Specifically, let’s implement a “Create” form that enables a user to enter friend data:

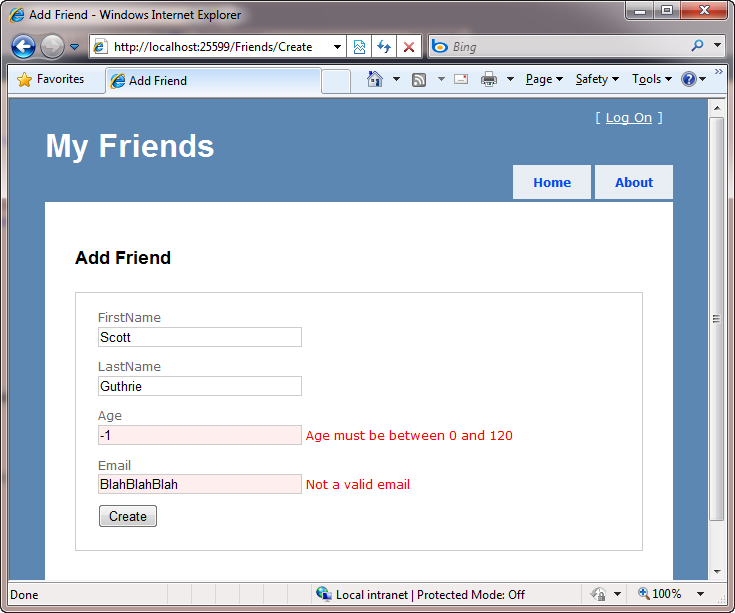

We want to ensure that the information entered is valid before saving it in a database – and display appropriate error messages if it isn’t:

We want to enable this validation to occur on both the server and on the client (via JavaScript). We also want to ensure that our code maintains the DRY principle (“don’t repeat yourself”) – meaning that we should only apply the validation rules in one place, and then have all our controllers, actions and views honor it.

Below I’m going to be using VS 2010 to implement the above scenario using ASP.NET MVC 2. You could also implement the exact same scenario using VS 2008 and ASP.NET MVC 2.

Step 1: Implementing a FriendsController (with no validation to begin with)

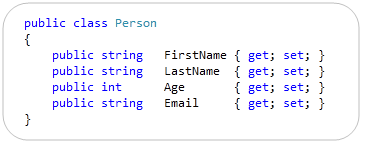

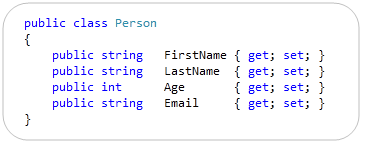

We’ll begin by adding a simple “Person” class to a new ASP.NET MVC 2 project that looks like below:

It has four properties (implemented using C#’s automatic property support, which VB in VS 2010 now supports too – woot!).

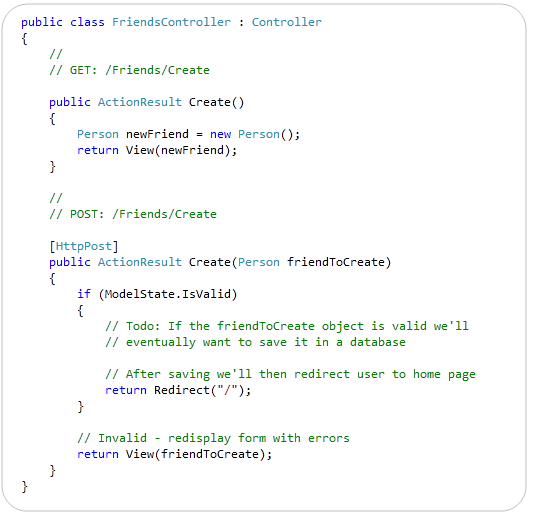

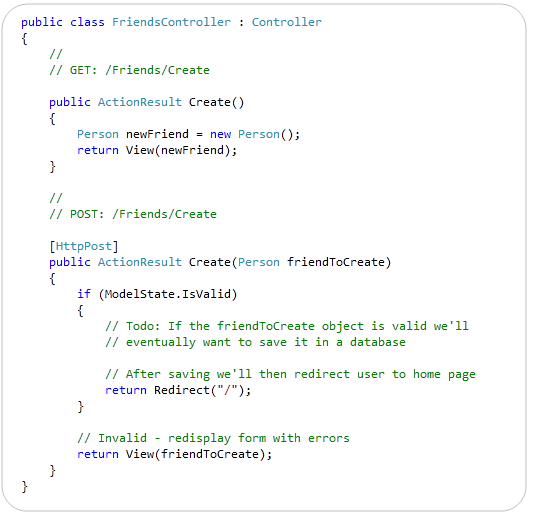

We’ll then add a “FriendsController” controller class to our project that exposes two “Create” action methods. The first action method is called when an HTTP-GET request comes for the /Friends/Create URL. It will display a blank form for entering person data. The second action method is called when an HTTP-POST request comes for the /Friends/Create URL. It maps the posted form input to a Person object, verifies that no binding errors occurred, and if it is valid will eventually save it to a database (we’ll implement the DB work later in this tutorial). If the posted form input is invalid, the action method redisplays the form with errors:

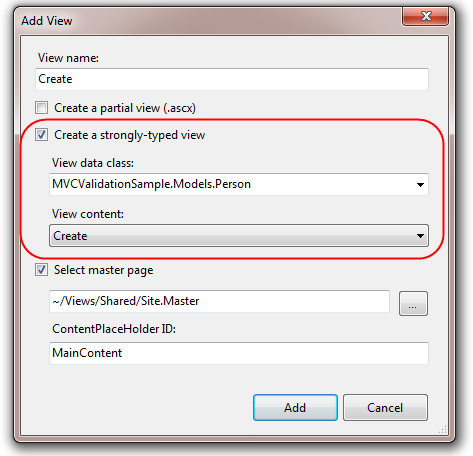

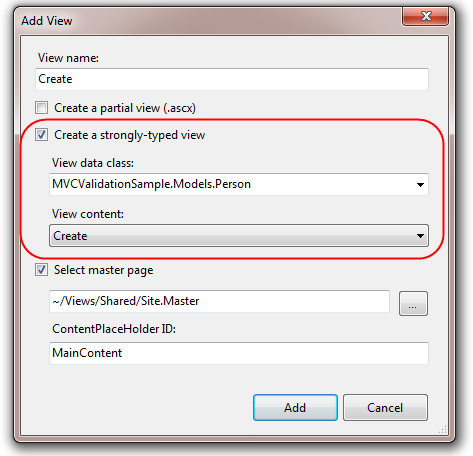

After we’ve implemented our controller, we’ll right-click within one of its action methods and choose the “Add View” command within Visual Studio – which will bring up the “Add View” dialog. We’ll choose to scaffold a “Create” view that is passed a Person object:

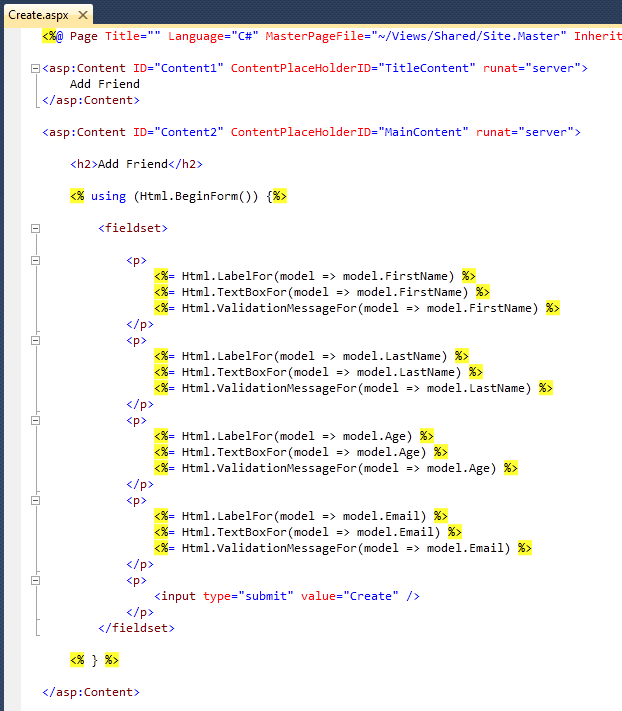

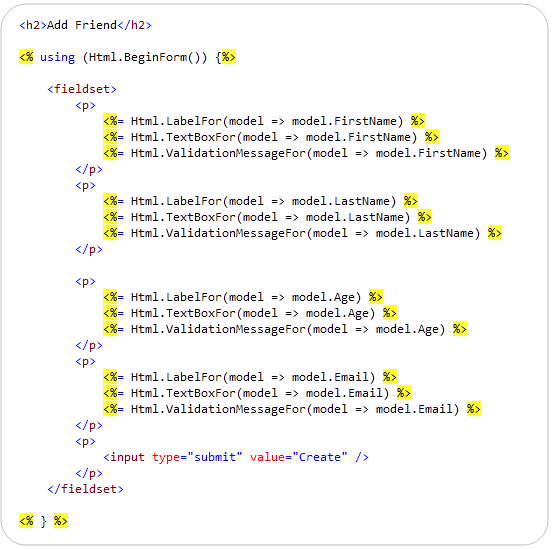

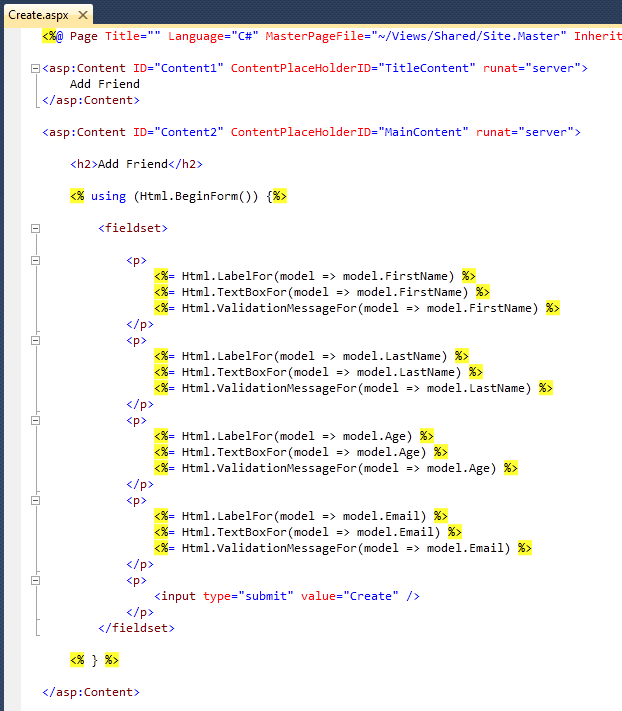

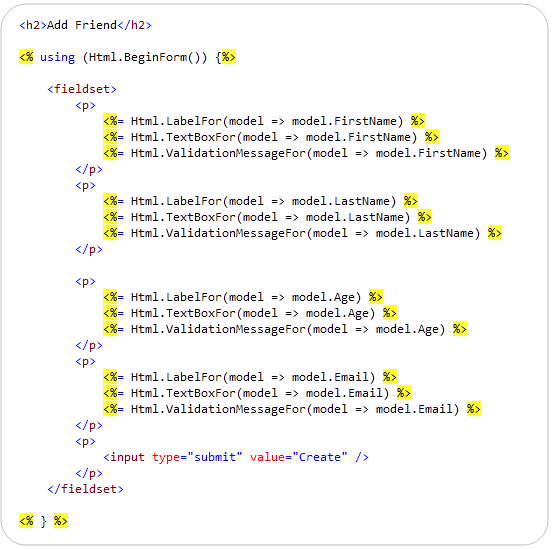

Visual Studio will then generate a scaffolded Create.aspx view file for us under the \Views\Friends\ directory of our project. Notice below how it takes advantage of the new strongly-typed HTML helpers in ASP.NET MVC 2 (enabling better intellisense and compile time checking support):

And now when we run the application and hit the /Friends/Create URL we’ll get a blank form that we can enter data into:

Because we have not implemented any validation within the application, though, nothing prevents us from entering bogus input within the form and posting it to the server.

Step 2: Enabling Validation using DataAnnotations

Let’s now update our application to enforce some basic input validation rules. We’ll implement these rules on our Person model object – and not within our Controller or our View. The benefit of implementing the rules within our Person object is that this will ensure that the validation will be enforced via any scenario within our application that uses the Person object (for example: if we later added an edit scenario). This will help ensure that we keep our code DRY and avoid repeating rules in multiple places.

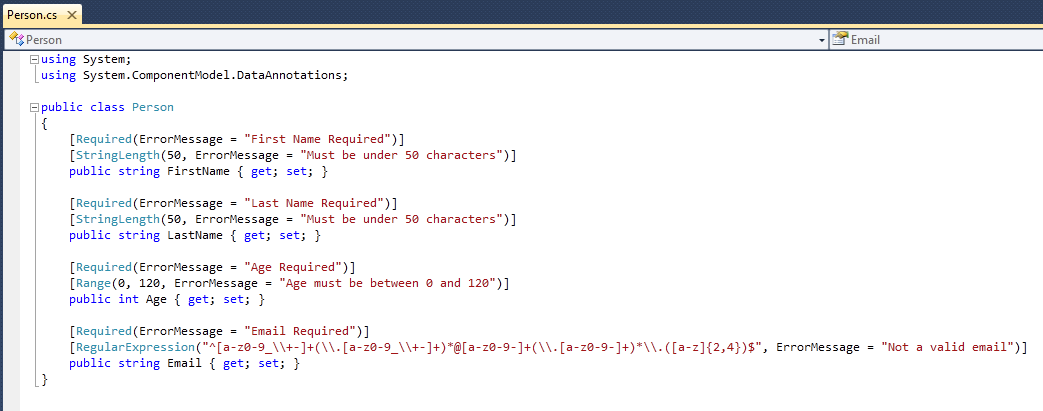

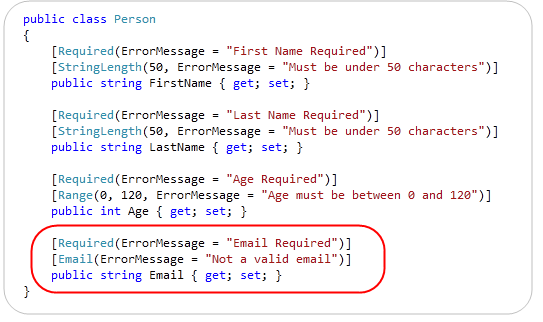

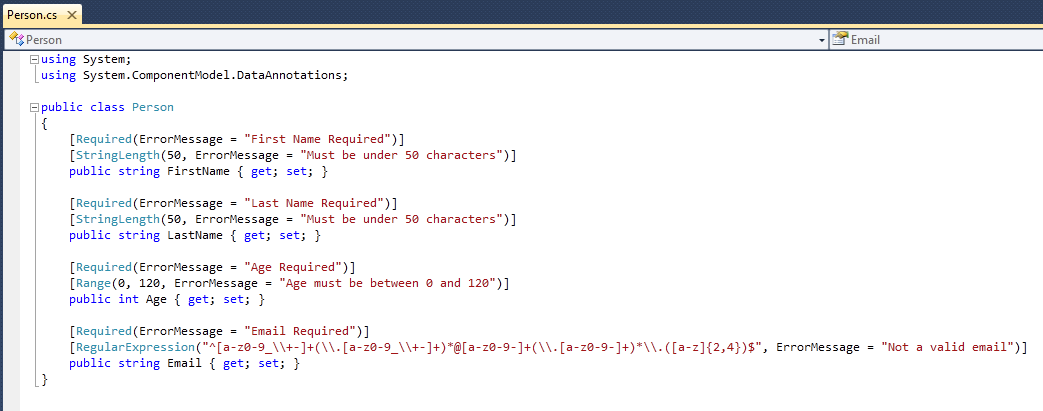

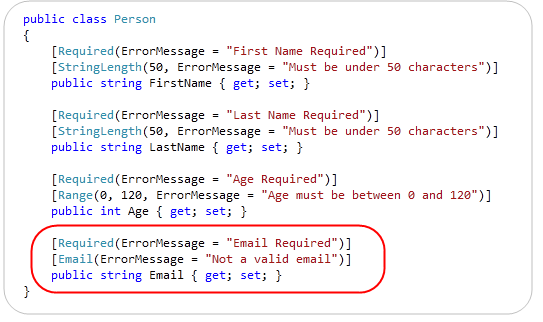

ASP.NET MVC 2 enables developers to easily add declarative validation attributes to model or viewmodel classes, and then have those validation rules automatically be enforced whenever ASP.NET MVC performs model binding operations within an application. To see this in action, let’s update our Person class to have a few validation attributes on it. To do this we’ll add a “using” statement for the “System.ComponentModel.DataAnnotations” namespace to the top of the file – and then decorate the Person properties with [Required], [StringLength], [Range], and [RegularExpression] validation attributes (which are all implemented within that namespace):

Note: Above we are explicitly specifying error messages as strings. Alternatively you can define them within resource files and optionally localize them depending on the language/culture of the incoming user. You can learn more about how to localize validation error messages here.
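For readers who want something to type in, here is a minimal sketch of what such an annotated Person class could look like. Only the class name, the four attribute types and the two email error messages come from this post; the property names, lengths, range limits and the email regular expression are assumptions. The small ValidationSketch helper uses the Validator class from the same namespace to run the rules outside of ASP.NET MVC, which is handy for trying them out in a console application or unit test.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Property names, limits and the regex below are assumptions for this sketch.
public class Person
{
    [Required(ErrorMessage = "First Name Required")]
    [StringLength(50, ErrorMessage = "Must be under 50 characters")]
    public string FirstName { get; set; }

    [Required(ErrorMessage = "Last Name Required")]
    [StringLength(50, ErrorMessage = "Must be under 50 characters")]
    public string LastName { get; set; }

    [Range(0, 120, ErrorMessage = "Age must be between 0 and 120")]
    public int Age { get; set; }

    [Required(ErrorMessage = "Email Required")]
    [RegularExpression(@"^[^@\s]+@[^@\s]+\.[^@\s]+$", ErrorMessage = "Not a valid email")]
    public string Email { get; set; }
}

public static class ValidationSketch
{
    // Run all DataAnnotation rules on the object and return the error messages.
    public static List<string> Validate(object model)
    {
        var results = new List<ValidationResult>();
        var context = new ValidationContext(model, null, null);

        // The final argument asks for every property attribute to be checked,
        // not just [Required].
        Validator.TryValidateObject(model, context, results, true);

        var messages = new List<string>();
        foreach (ValidationResult result in results)
        {
            messages.Add(result.ErrorMessage);
        }
        return messages;
    }
}
```

Passing a Person with an empty Email to ValidationSketch.Validate() returns the “Email Required” message, and a value without an @ sign returns “Not a valid email”.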

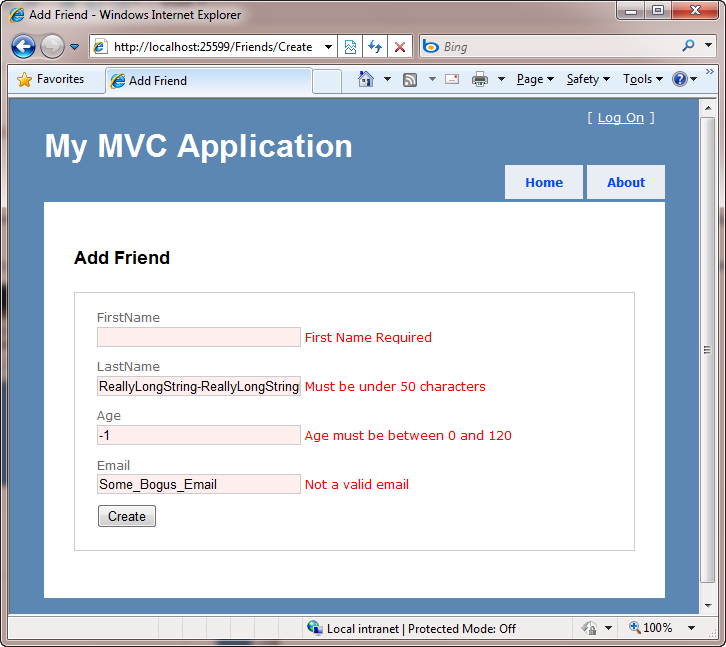

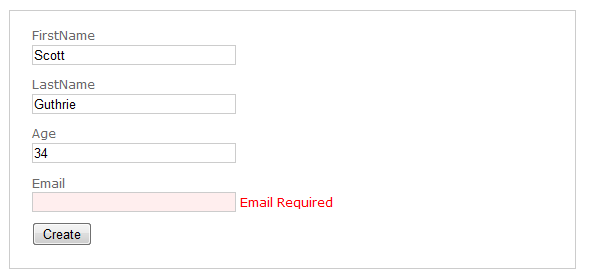

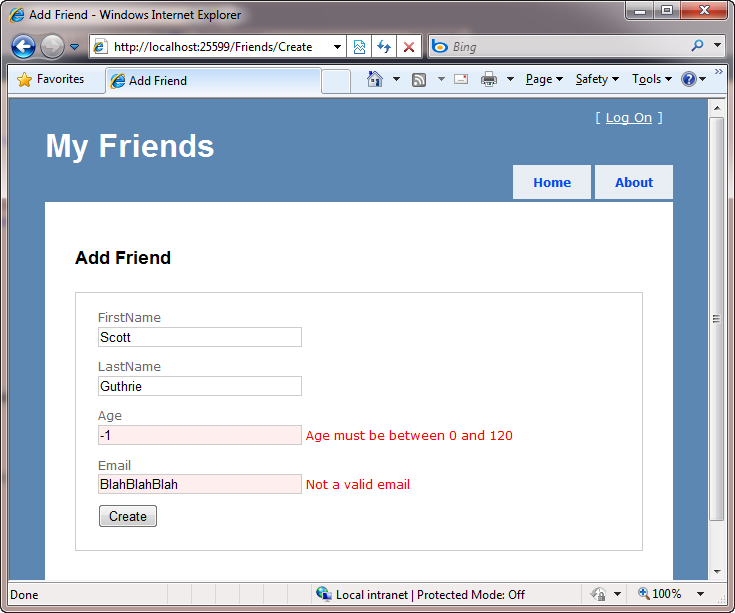

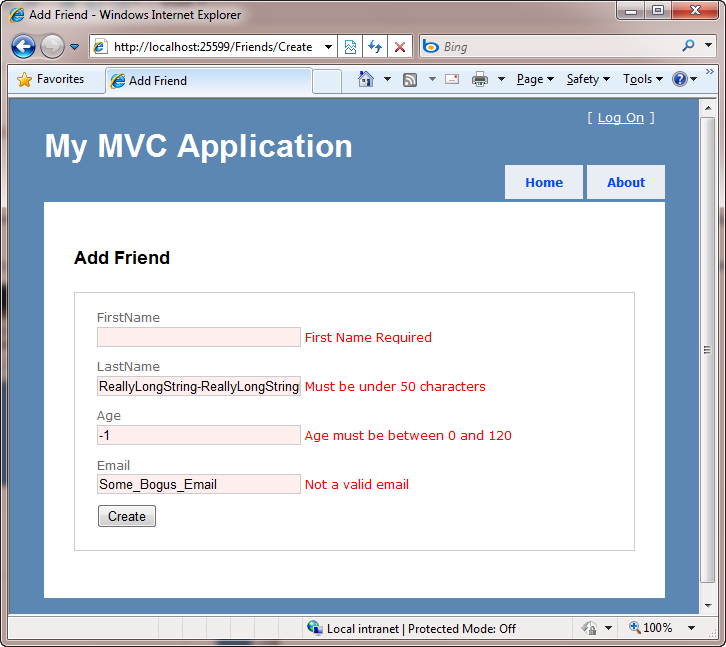

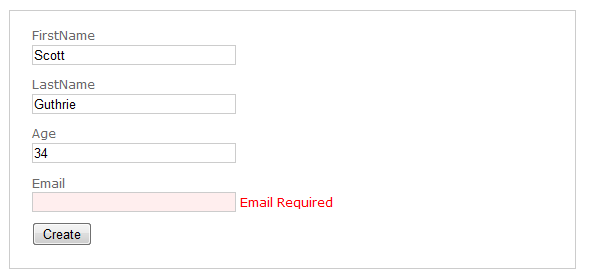

Now that we’ve added the validation attributes to our Person class, let’s re-run our application and see what happens when we enter bogus values and post them back to the server:

Notice above how our application now has a decent error experience. The text elements with the invalid input are highlighted in red, and the validation error messages we specified are displayed to the end user about them. The form is also preserving the input data the user originally entered – so that they don’t have to refill anything.

How though, you might ask, did this happen?

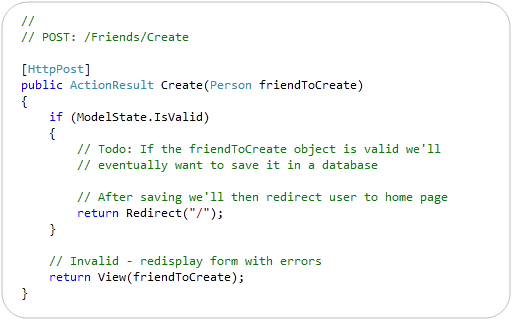

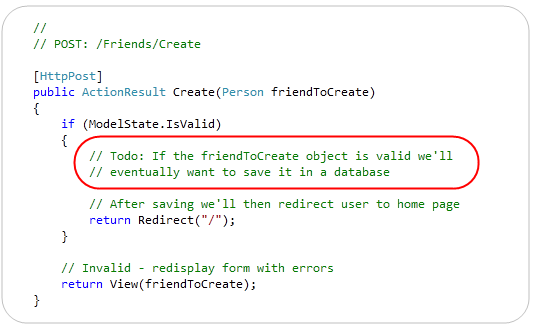

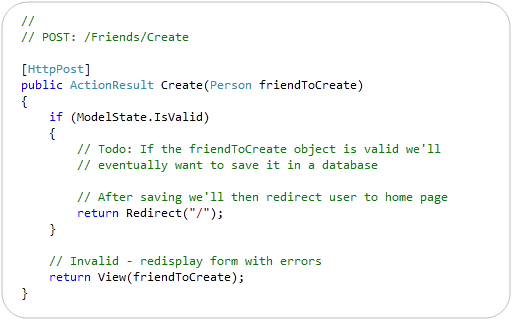

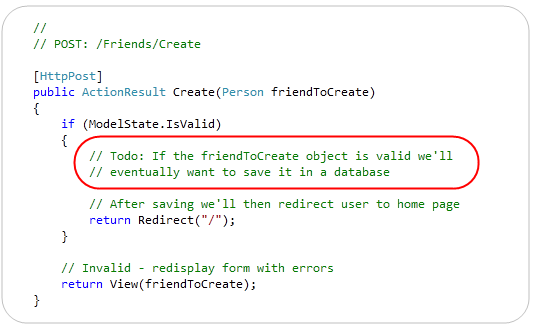

To understand this behavior, let’s look at the Create action method that handles the POST scenario for our form:

When our HTML form is posted back to the server, the above method will be called. Because the action method accepts a “Person” object as a parameter, ASP.NET MVC will create a Person object and automatically map the incoming form input values to it. As part of this process, it will also check to see whether the DataAnnotation validation attributes for the Person object are valid. If everything is valid, then the ModelState.IsValid check within our code will return true – in which case we will (eventually) save the Person to a database and then redirect back to the home-page.

If there are any validation errors on the Person object, though, our action method redisplays the form with the invalid Person. This is done via the last line of code in the code snippet above.

The error messages are then displayed within our view because our Create form has <%= Html.ValidationMessageFor() %> helper method calls next to each <%= Html.TextBoxFor() %> helper. The Html.ValidationMessageFor() helper will output the appropriate error message for any invalid model property passed to the view:

The nice thing about this pattern/approach is that it is pretty easy to set up – and it then allows us to easily add or change validation rules on our Person class without having to change any code within our controllers or views. This ability to specify the validation rules in one place and have them be honored and respected everywhere allows us to rapidly evolve our application and rules with a minimum amount of effort and keep our code very DRY.

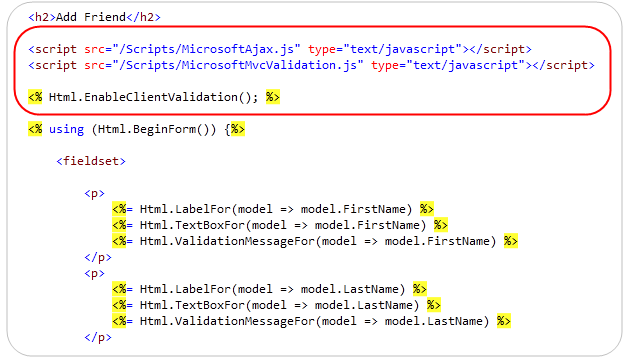

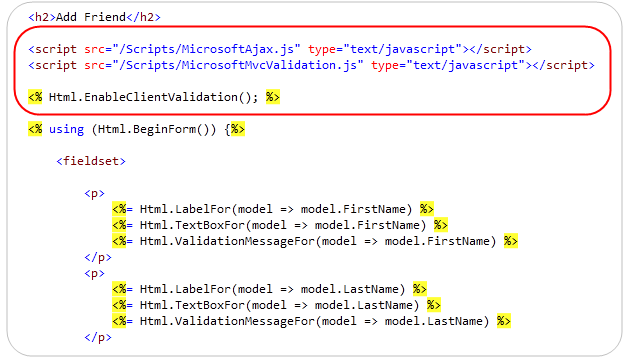

Step 3: Enabling Client-side Validation

Our application currently only performs server-side validation – which means that our end users will need to perform a form submit to the server before they’ll see any validation error messages.

One of the cool things about ASP.NET MVC 2’s validation architecture is that it supports both server-side and client-side validation. To enable this, all we need to do is to add two JavaScript references to our view, and write one line of code:

When we add these three lines, ASP.NET MVC 2 will use the validation meta-data we’ve added to our Person class and wire-up client-side JavaScript validation logic for us. This means that users will get immediate validation errors when they tab out of an input element that is invalid.

To see the client-side JavaScript support in action for our friends application, let’s rerun the application and fill in the first three textboxes with legal values – and then try and click “Create”. Notice how we’ll get an immediate error message for our missing value without having to hit the server:

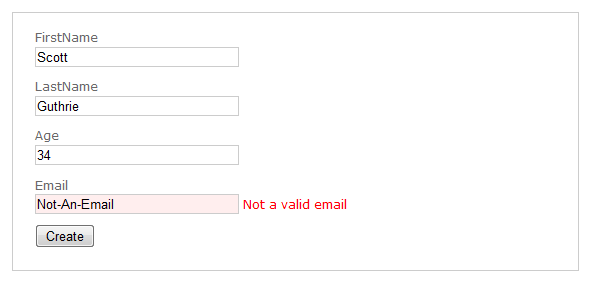

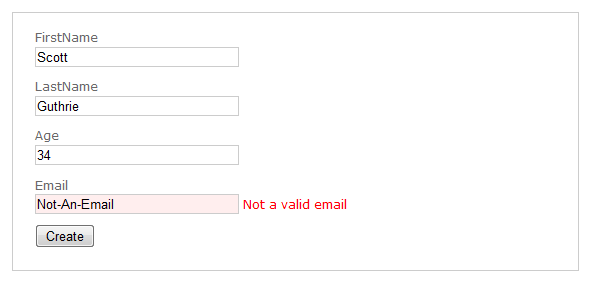

If we enter some text that is not a legal email the error message will immediately change from “Email Required” to “Not a valid email” (which are the error messages we specified when we added the rules to our Person class):

When we enter a legal email the error message will immediately disappear and the textbox background color will go back to its normal state:

The nice thing is that we did not have to write any custom JavaScript of our own to enable the above validation logic. Our validation code is also still very DRY: we can specify the rules in one place and have them be enforced all across the application – and on both the client and server.

Note that for security reasons the server-side validation rules always execute even if you have the client-side support enabled. This prevents hackers from trying to spoof your server and circumvent the client-side rules.

The client-side JavaScript validation support in ASP.NET MVC 2 can work with any validation framework/engine you use with ASP.NET MVC. It does not require that you use the DataAnnotation validation approach – all of the infrastructure works independent of DataAnnotations and can work with Castle Validator, the EntLib Validation Block, or any custom validation solution you choose to use.

If you don’t want to use our client-side JavaScript files, you can substitute the jQuery validation plugin and use that library instead. The ASP.NET MVC Futures download will include support for enabling jQuery validation against the ASP.NET MVC 2 server-side validation framework as well.

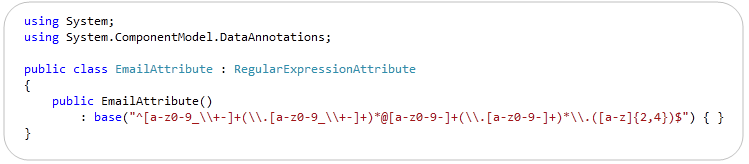

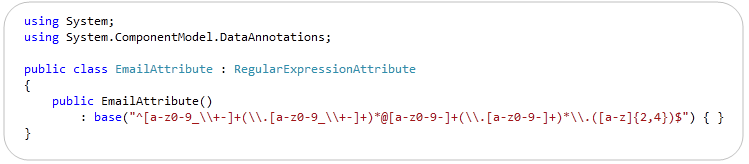

Step 4: Creating a Custom [Email] Validation Attribute

The System.ComponentModel.DataAnnotations namespace within the .NET Framework includes a number of built-in validation attributes that you can use. We’ve used 4 different ones in the sample above – [Required], [StringLength], [Range], and [RegularExpression].

You can also optionally define your own custom validation attributes and use them as well. You can define completely custom attributes by deriving from the ValidationAttribute base class within the System.ComponentModel.DataAnnotations namespace. Alternatively, you can choose to derive from any of the existing validation attributes if you want to simply extend their base functionality.
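As a rough sketch of the first option (deriving directly from ValidationAttribute), here is a small attribute that limits how many words a string may contain. The `MaxWordsAttribute` name and its rule are hypothetical and are not part of the friends sample; they are only there to show the shape of a custom attribute.

```csharp
using System;
using System.ComponentModel.DataAnnotations;

// Hypothetical attribute (not from the friends sample): the value may
// contain at most a given number of whitespace-separated words.
public class MaxWordsAttribute : ValidationAttribute
{
    private readonly int _maxWords;

    public MaxWordsAttribute(int maxWords)
    {
        _maxWords = maxWords;
    }

    public override bool IsValid(object value)
    {
        // Treat null as valid so that [Required] stays responsible for missing values.
        if (value == null)
        {
            return true;
        }

        string text = value as string;
        if (text == null)
        {
            return false; // this rule only makes sense for strings
        }

        // A null separator array splits on any whitespace.
        string[] words = text.Split((char[])null, StringSplitOptions.RemoveEmptyEntries);
        return words.Length <= _maxWords;
    }
}
```

It would be applied like any built-in attribute, for example `[MaxWords(3, ErrorMessage = "Too many words")]` on a string property.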

For example, to help clean up the code within our Person class we might want to create a new [Email] validation attribute that encapsulates the regular expression to check for valid emails. To do this we could simply derive it from the RegularExpression base class like so, and call the RegularExpression’s base constructor with the appropriate email regex:

We can then update our Person class to use our new [Email] validation attribute in place of the regular expression we used before – which makes the code cleaner and better encapsulated:
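A minimal sketch of what the attribute and the updated class might look like is below. The regular expression is a deliberately simple stand-in (an assumption, not the pattern used in the original sample), and the Person class is trimmed to two properties. The two email error messages are the ones quoted earlier in this post; the first-name message is illustrative.

```csharp
using System.ComponentModel.DataAnnotations;

// [Email] reuses RegularExpressionAttribute's matching logic and only
// supplies the pattern through the base constructor.
public class EmailAttribute : RegularExpressionAttribute
{
    // Illustrative pattern only (assumption): something@something.something
    public EmailAttribute()
        : base(@"^[^@\s]+@[^@\s]+\.[^@\s]+$")
    {
    }
}

// Trimmed-down Person: the regex no longer clutters the model class.
public class Person
{
    [Required(ErrorMessage = "First Name Required")]
    public string FirstName { get; set; }

    [Required(ErrorMessage = "Email Required")]
    [Email(ErrorMessage = "Not a valid email")]
    public string Email { get; set; }
}
```

Because [Email] is still a RegularExpressionAttribute underneath, it treats an empty value as valid and leaves the "missing value" case to [Required], just as the original attribute did.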

When creating custom validation attributes you can specify validation logic that runs both on the server and on the client via JavaScript.

Step 5: Persisting to a Database

Let’s now implement the logic necessary to save our friends to a database.

Right now we are simply working against a plain-old C# class (sometimes referred to as a “POCO” class – “plain old CLR (or C#) object”). One approach we could use would be to write some separate persistence code that maps the class we’ve already written to a database. Object relational mapping (ORM) solutions like NHibernate already support this POCO / PI (persistence ignorant) style of mapping very well. The ADO.NET Entity Framework (EF) that ships with .NET 4 will also support POCO / PI mapping, and like NHibernate will also optionally enable the ability to define persistence mappings in a “code only” way (no mapping file or designers required).

If our Person object were mapped to a database in this way then we wouldn’t need to make any changes to our Person class or to any of our validation rules – they would continue to work just fine.

But what if we are using a graphical tool for our ORM mappings?

Most developers using Visual Studio today don’t write their own ORM mapping/persistence logic – and instead use the built-in designers within Visual Studio to help manage this.

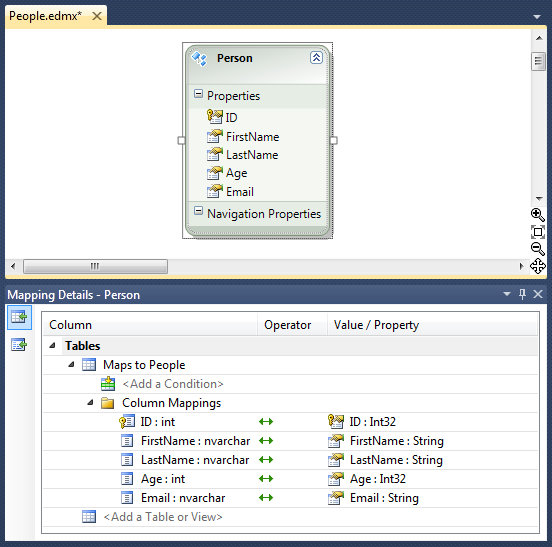

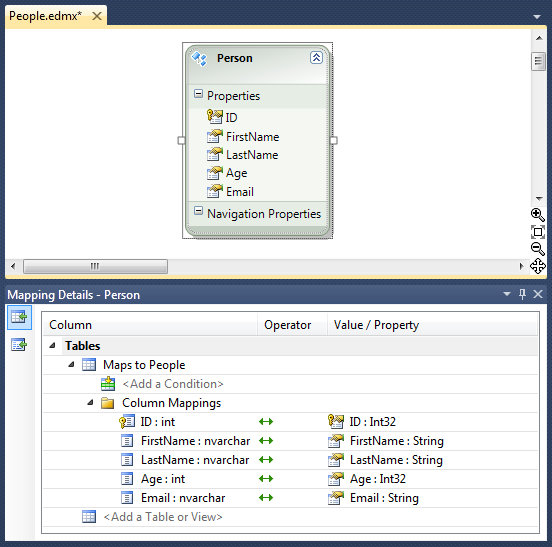

One question that often comes up when using DataAnnotations (or any other form of attribute based validation) is “how do you apply them when the model object you are working with is created/maintained by a GUI designer”. For example, what if instead of having a POCO style Person class like we’ve been using so far, we instead defined/maintained our Person class within Visual Studio via a GUI mapping tool like the LINQ to SQL or ADO.NET EF designer:

Above is a screen-shot that shows a Person class defined using the ADO.NET EF designer in VS 2010. The window at the top defines the Person class, the window at the bottom shows the mapping editor for how its properties map to/from a “People” table within a database. When you click save on the designer it automatically generates a Person class for you within your project. This is great, except that every time you make a change and hit save it will re-generate the Person class – which would cause any validation attribute declarations you make on it to be lost.

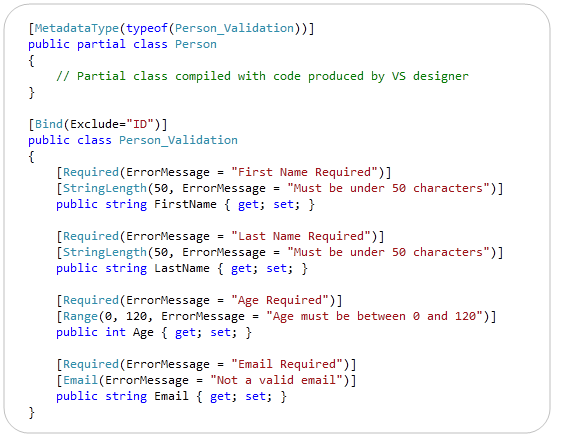

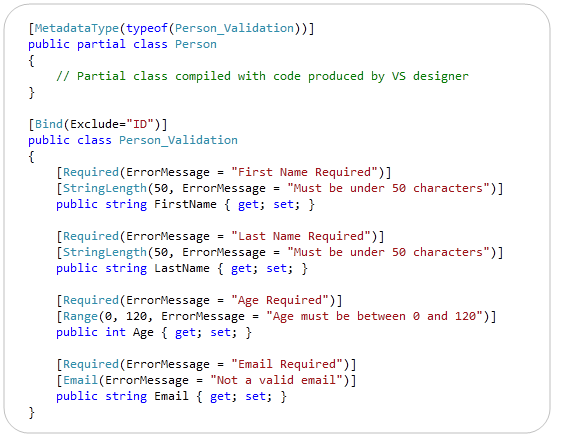

One way you can apply additional attribute-based meta-data (like validation attributes) to a class that is auto-generated/maintained by a VS designer is to employ a technique we call “buddy classes”. Basically you create a separate class that contains your validation attributes and meta-data, and then link it to the class generated by the designer by applying a “MetadataType” attribute to a partial class that is compiled with the tool-generated class. For example, if we wanted to apply the validation rules we used earlier to a Person class maintained by a LINQ to SQL or ADO.NET EF designer we could update our validation code to instead live in a separate “Person_Validation” class that is linked to the “Person” class created by VS using the code below:
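A minimal sketch of the buddy class arrangement is below. The first partial class is only a stand-in for what the designer would generate (the real generated code is larger and lives in a file you never edit by hand). The property list is trimmed, and the email pattern and first-name message are illustrative assumptions.

```csharp
using System.ComponentModel.DataAnnotations;

// Stand-in for the designer-generated half of Person. In a real project
// this comes from the LINQ to SQL or EF designer and is regenerated on save.
public partial class Person
{
    public string FirstName { get; set; }
    public string Email { get; set; }
}

// Hand-written half: it survives regeneration and points at the buddy class.
[MetadataType(typeof(Person_Validation))]
public partial class Person
{
}

// Buddy class: property names match Person, and the attributes live here.
public class Person_Validation
{
    [Required(ErrorMessage = "First Name Required")]
    public string FirstName { get; set; }

    [Required(ErrorMessage = "Email Required")]
    [RegularExpression(@"^[^@\s]+@[^@\s]+\.[^@\s]+$", ErrorMessage = "Not a valid email")]
    public string Email { get; set; }
}
```

The properties on Person_Validation are never instantiated or populated; they exist only so the attributes have something to hang on, and they are matched to the real Person properties by name.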

The above approach is not as elegant as a pure POCO approach – but has the benefit of working with pretty much any tool or designer-generated code within Visual Studio.

Last Step – Saving the Friend to the Database

Our last step – regardless of whether we use a POCO or tool-generated Person class – will be to save our valid friends into the database.

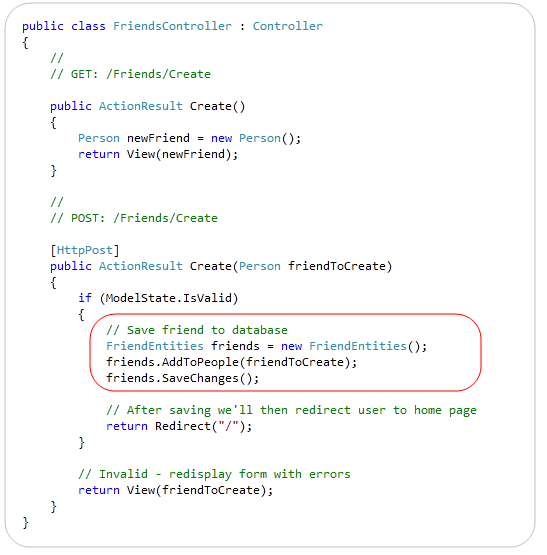

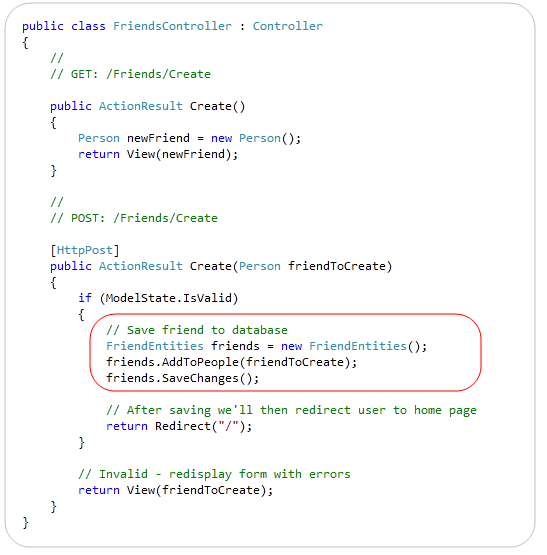

Doing that simply requires us to replace the “Todo” placeholder statement within our FriendsController class with 3 lines of code that save the new friend to a database. Below is the complete code for the entire FriendsController class – when using ADO.NET EF to do the database persistence for us:
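Here is a rough sketch of how that controller might look. It is meant to be compiled inside the ASP.NET MVC 2 project rather than on its own: `Person` and the `People` entity set come from the EF model, and the `FriendsEntities` context name and the redirect target are assumptions, since the designer picks the context name based on your model.

```csharp
using System.Web.Mvc;

public class FriendsController : Controller
{
    // GET: /Friends/Create
    public ActionResult Create()
    {
        return View(new Person());
    }

    // POST: /Friends/Create
    [HttpPost]
    public ActionResult Create(Person friend)
    {
        // ModelState reflects the server-side validation rules, which
        // always run regardless of the client-side checks.
        if (ModelState.IsValid)
        {
            // FriendsEntities is an assumed name for the designer-generated
            // EF context; People is its entity set for Person.
            using (FriendsEntities db = new FriendsEntities())
            {
                db.People.AddObject(friend);
                db.SaveChanges();
            }

            return Redirect("/");
        }

        // Validation failed: redisplay the form with the error messages.
        return View(friend);
    }
}
```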

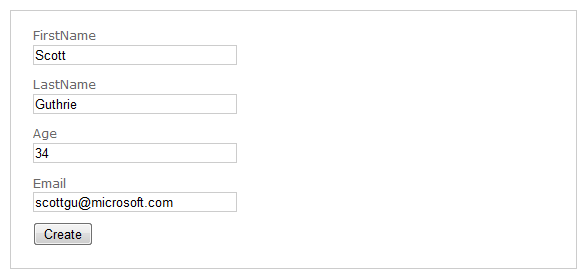

And now when we visit the /Friends/Create URL we can easily add new People to our friends database:

Validation for all the data is enforced on both the client and server. We can easily add/modify/delete validation rules in one place, and have those rules be enforced by all controllers and views across our application.

Summary

ASP.NET MVC 2 makes it much easier to integrate validation into web applications. It promotes a model-based validation approach that enables you to keep your applications very DRY, and helps ensure that validation rules are enforced consistently throughout an application. The built-in DataAnnotations support within ASP.NET MVC 2 makes supporting common validation scenarios really easy out of the box. The extensibility support within the ASP.NET MVC 2 validation infrastructure then enables you to support a wide variety of more advanced validation scenarios – and plug in any existing or custom validation framework/engine.

Hope this helps,

Scott