by Eldert Grootenboer | Jun 4, 2018 | BizTalk Community Blogs via Syndication

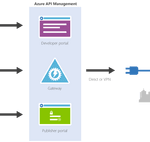

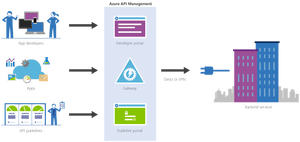

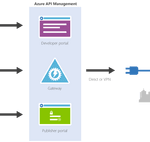

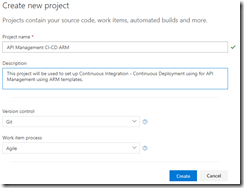

This is the fifth and final post in my series around setting up CI/CD for Azure API Management using Azure Resource Manager templates. We already created our API Management instance, added products, users and groups to the instance, and created unversioned and versioned APIs. In this final post, we will see how we can use linked ARM templates in combination with VSTS to deploy our solution all at once, and how this allows us to re-use existing templates to build up our API Management.

The posts in this series are the following; this list will be updated as the posts are published.

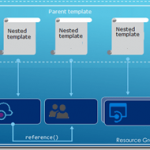

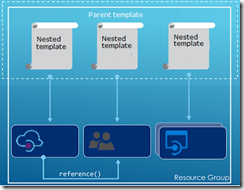

So far we did each of these steps from their own repositories, which is great when you want different developers to work only on their own parts of the total solution. However, if you don’t need this type of granular security on your repositories, it probably makes more sense to have your entire API Management solution in a single repository. ARM templates can quickly become quite large and cumbersome to maintain. To avoid this, we can split the template into smaller templates, each of which does its own piece of work, and link these together from a master template. This allows for re-use of templates and breaks them down into smaller pieces of functionality. For this post we will create an API Management instance, create products, users and groups for Contoso, and deploy a versioned API. We will be re-using the templates we created in the previous blog posts from this series for our content.

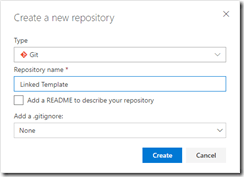

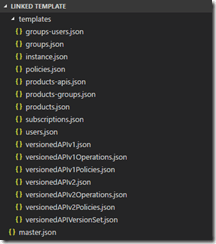

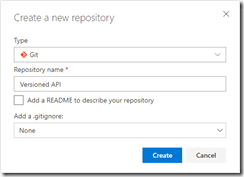

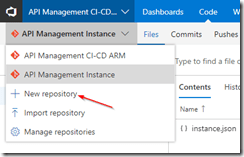

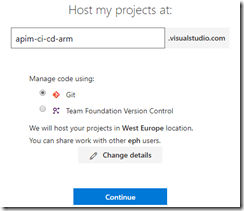

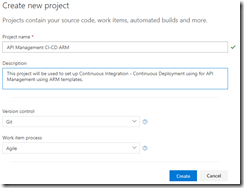

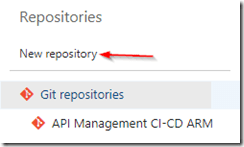

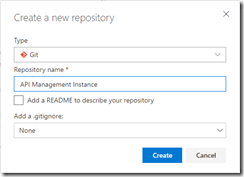

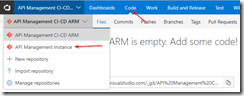

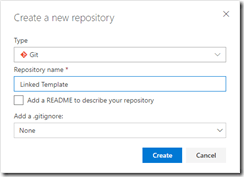

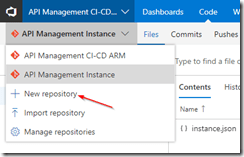

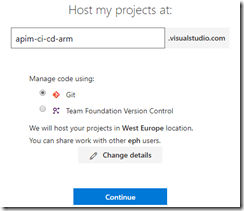

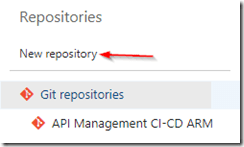

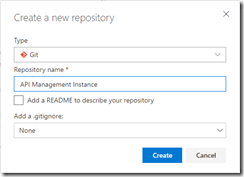

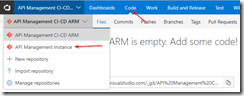

We will start by creating a new Git repository in our VSTS project called Linked Template. This repository will hold the master and nested templates which will be used to roll out our API Management solution.

Create Linked Template repository

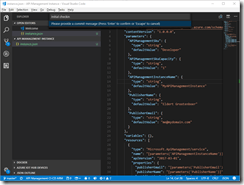

Once the repository has been created, we will clone it to our local machine, and add a folder called templates in the repository. In the templates folder, create a new file called instance.json, which will hold the following nested ARM template for the API Management instance. All the ARM templates in this post should be placed in the templates folder unless otherwise specified.

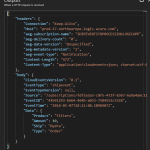

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementSku": {
      "type": "string",
      "defaultValue": "Developer"
    },
    "APIManagementSkuCapacity": {
      "type": "string",
      "defaultValue": "1"
    },
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    },
    "PublisherName": {
      "type": "string",
      "defaultValue": "Eldert Grootenboer"
    },
    "PublisherEmail": {
      "type": "string",
      "defaultValue": "me@mydomaintwo.com"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service",
      "name": "[parameters('APIManagementInstanceName')]",
      "apiVersion": "2017-03-01",
      "properties": {
        "publisherEmail": "[parameters('PublisherEmail')]",
        "publisherName": "[parameters('PublisherName')]",
        "notificationSenderEmail": "apimgmt-noreply@mail.windowsazure.com",
        "hostnameConfigurations": [],
        "additionalLocations": null,
        "virtualNetworkConfiguration": null,
        "customProperties": {
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Tls10": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Tls11": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Protocols.Ssl30": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Ciphers.TripleDes168": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Tls10": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Tls11": "False",
          "Microsoft.WindowsAzure.ApiManagement.Gateway.Security.Backend.Protocols.Ssl30": "False"
        },
        "virtualNetworkType": "None"
      },
      "resources": [],
      "sku": {
        "name": "[parameters('APIManagementSku')]",
        "capacity": "[parameters('APIManagementSkuCapacity')]"
      },
      "location": "[resourceGroup().location]",
      "tags": {},
      "scale": null
    }
  ]
}
```

The next ARM template will hold the users of Contoso. Add a users.json file to the same local repository with the following template.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/users",
      "name": "[concat(parameters('APIManagementInstanceName'), '/john-smith-contoso-com')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "firstName": "John",
        "lastName": "Smith",
        "email": "john.smith@eldert.org",
        "state": "active",
        "note": "Developer working for Contoso, one of the consumers of our APIs",
        "confirmation": "invite"
      }
    }
  ]
}
```

Now add a groups.json file to the repository containing the following ARM template, which will add the group for Contoso.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/groups",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "ContosoGroup",
        "description": "Group containing all developers and services from Contoso who will be consuming our APIs",
        "type": "custom",
        "externalId": null
      }
    }
  ]
}
```

The following ARM template will be used to add the product for Contoso. For this, we are going to add products.json to the repository.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "ContosoProduct",
        "description": "Product which will apply the high-over policies for developers and services of Contoso.",
        "terms": null,
        "subscriptionRequired": true,
        "approvalRequired": true,
        "subscriptionsLimit": null,
        "state": "published"
      }
    }
  ]
}
```

We have created our templates for the products and groups, so the next template, which will be called products-groups.json, will link the Contoso group to the Contoso product.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/groups",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/contosogroup')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```

Likewise we will also link the Contoso users to the Contoso group using the following ARM template in a file called groups-users.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/groups/users",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup/john-smith-contoso-com')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```
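The two linking templates above rely on an ARM naming rule: a child resource's name needs as many segments as its type has after the provider namespace. As a rough illustration (Python is used only for the sketch, and `name_matches_type` is a hypothetical helper, not part of any Azure tooling), the rule can be checked against a name after its `concat` expression has been resolved:

```python
def name_matches_type(resource_type: str, resolved_name: str) -> bool:
    """Check that a resolved resource name has one segment per type segment.

    The first part of the type is the provider namespace
    (for example Microsoft.ApiManagement) and is not counted.
    """
    type_segments = resource_type.split("/")[1:]
    name_segments = resolved_name.split("/")
    return len(type_segments) == len(name_segments)


if __name__ == "__main__":
    # products/groups: instance name, product name and group name are all needed.
    print(name_matches_type(
        "Microsoft.ApiManagement/service/products/groups",
        "MyAPIManagementInstance/contosoproduct/contosogroup",
    ))
```

A name such as `MyAPIManagementInstance/contosogroup` would fail this check for the `products/groups` type, because the product segment is missing.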

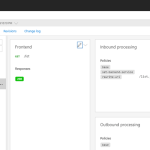

Next up are the policies for the product. For this, add a file called policies.json to the repository.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "<policies>\r\n <inbound>\r\n <base />\r\n <rate-limit calls=\"20\" renewal-period=\"60\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>"
      }
    }
  ]
}
```
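The `policyContent` value is a policy XML document stored inside a JSON string, so its line breaks and double quotes have to be escaped. As a minimal sketch (Python is used purely for illustration, and `to_policy_content` is a hypothetical helper name), the standard `json` module can produce that escaped form from the XML as it appears in the policy editor:

```python
import json

# Policy XML as it would be written in the API Management policy editor
# (shortened to the inbound section for this example).
POLICY_XML = (
    '<policies>\r\n'
    ' <inbound>\r\n'
    ' <base />\r\n'
    ' <rate-limit calls="20" renewal-period="60" />\r\n'
    ' </inbound>\r\n'
    '</policies>'
)


def to_policy_content(policy_xml: str) -> str:
    """Return the XML as a JSON string literal, including the outer quotes."""
    return json.dumps(policy_xml)


escaped = to_policy_content(POLICY_XML)

if __name__ == "__main__":
    # The printed value can be pasted as the value of "policyContent".
    print(escaped)
```

Parsing the escaped literal again with `json.loads` gives back the original XML, which is a quick way to confirm nothing was lost when copying a policy into a template.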

Now let’s add the subscription for the Contoso user, which contains the Contoso product, by adding a file called subscriptions.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/subscriptions",
      "name": "[concat(parameters('APIManagementInstanceName'), '/5ae6ed2358c2795ab5aaba68')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "userId": "[resourceId('Microsoft.ApiManagement/service/users', parameters('APIManagementInstanceName'), 'john-smith-contoso-com')]",
        "productId": "[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]",
        "displayName": "ContosoProduct subscription",
        "state": "active"
      }
    }
  ]
}
```
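The subscription points at its user and product through `resourceId`, which expands to a full resource path within the current subscription and resource group. The sketch below mimics that expansion in Python to show what the resulting strings look like. The function itself and the subscription ID and resource group values are placeholders for illustration only; in a real deployment Resource Manager fills these in.

```python
def resource_id(subscription_id: str, resource_group: str,
                resource_type: str, *names: str) -> str:
    """Build a resource ID the way the ARM resourceId() function lays it out."""
    namespace, *type_segments = resource_type.split("/")
    if len(type_segments) != len(names):
        raise ValueError("expected one name per type segment")
    # Interleave type segments and names: /service/<instance>/users/<user>
    path = "".join(f"/{segment}/{name}"
                   for segment, name in zip(type_segments, names))
    return (f"/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/{namespace}{path}")


if __name__ == "__main__":
    print(resource_id(
        "00000000-0000-0000-0000-000000000000",  # placeholder subscription
        "my-resource-group",                     # placeholder resource group
        "Microsoft.ApiManagement/service/users",
        "MyAPIManagementInstance",
        "john-smith-contoso-com",
    ))
```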

Next we will create the version set which will hold the different versions of our versioned API. Add a file to the repository called versionedAPIVersionSet.json and add the following template to it.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "name": "[concat(parameters('APIManagementInstanceName'), '/versionsetversionedapi')]",
      "type": "Microsoft.ApiManagement/service/api-version-sets",
      "apiVersion": "2017-03-01",
      "properties": {
        "description": "Version set for versioned API blog post ",
        "versionQueryName": "api-version",
        "displayName": "Versioned API",
        "versioningScheme": "query"
      }
    }
  ]
}
```

We will now add the template for the first version of the versioned API, by creating a file in the repository called versionedAPIv1.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Versioned API",
        "apiRevision": "1",
        "description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",
        "serviceUrl": "https://api.apis.guru/v2/",
        "path": "versioned-api",
        "protocols": [
          "https"
        ],
        "authenticationSettings": null,
        "subscriptionKeyParameterNames": null,
        "apiVersion": "v1",
        "apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"
      }
    }
  ]
}
```

Operations

For the first version of the API we will now add a file called versionedAPIv1Operations.json which will hold the various operations of this API.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Get basic metrics",
        "method": "GET",
        "urlTemplate": "/metrics",
        "templateParameters": [],
        "description": "Some basic metrics for the entire directory.\nJust stunning numbers to put on a front page and are intended purely for WoW effect :)\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    },
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "List all APIs",
        "method": "GET",
        "urlTemplate": "/list",
        "templateParameters": [],
        "description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    }
  ]
}
```

Policies

The policies for the first version of our API are next, so let’s add the versionedAPIv1Policies.json file for this.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2/\" />\r\n <rewrite-uri template=\"/metrics.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    },
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    }
  ]
}
```

And now we will add the second version of our versioned API as well, in a file called versionedAPIv2.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "Versioned API",
        "apiRevision": "1",
        "description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",
        "serviceUrl": "https://api.apis.guru/v2/",
        "path": "versioned-api",
        "protocols": [
          "https"
        ],
        "authenticationSettings": null,
        "subscriptionKeyParameterNames": null,
        "apiVersion": "v2",
        "apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"
      }
    }
  ]
}
```

Operations

Add the operations for the second version of our API in a file called versionedAPIv2Operations.json.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "displayName": "List all APIs",
        "method": "GET",
        "urlTemplate": "/list",
        "templateParameters": [],
        "description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",
        "responses": [
          {
            "statusCode": 200,
            "description": "OK",
            "headers": []
          }
        ],
        "policies": null
      }
    }
  ]
}
```

Policies

And add a file called versionedAPIv2Policies.json which will hold the policies for the second version of our versioned API.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/apis/operations/policies",
      "name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs/policy')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {
        "policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"
      }
    }
  ]
}
```

And finally we will add the products-apis.json file, which will link the versions of our versioned API to the Contoso product.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "type": "string",
      "defaultValue": "MyAPIManagementInstance"
    }
  },
  "resources": [
    {
      "type": "Microsoft.ApiManagement/service/products/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    },
    {
      "type": "Microsoft.ApiManagement/service/products/apis",
      "name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api-v2')]",
      "apiVersion": "2017-03-01",
      "scale": null,
      "properties": {}
    }
  ]
}
```
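With this many template files in the templates folder, a quick local sanity check before committing can catch a malformed file early. The following is only a sketch in Python, not part of the original pipeline: it assumes the files live in a folder called `templates`, and the helper names are made up for this example. It verifies that each file parses as JSON and contains the top-level elements every template in this post uses.

```python
import json
from pathlib import Path

# Top-level elements present in every ARM template shown in this post.
REQUIRED_KEYS = {"$schema", "contentVersion", "resources"}


def missing_keys(template_text: str) -> set:
    """Parse a template and return the required top-level keys it lacks."""
    template = json.loads(template_text)  # raises ValueError on invalid JSON
    return REQUIRED_KEYS - template.keys()


def check_folder(folder: str) -> dict:
    """Map each *.json file in the folder to its set of missing keys."""
    return {
        path.name: missing_keys(path.read_text(encoding="utf-8"))
        for path in sorted(Path(folder).glob("*.json"))
    }


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    for name, missing in check_folder("templates").items():
        print(name, "OK" if not missing else f"missing: {sorted(missing)}")
```

This does not replace validation by Resource Manager itself; it only catches copy-and-paste problems such as broken escaping in the policy strings.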

We have now created all of our linked templates, so let’s create the master template in a file called master.json. Don’t place this file in the templates folder, but instead place it in the root directory of the repository. This template will call all our other templates, passing in parameters as required. This is also where we handle our dependsOn dependencies.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"TemplatesStorageAccount": {

"type": "string",

"defaultValue": "https://mystorageaccount.blob.core.windows.net/templates/"

},

"TemplatesStorageAccountSASToken": {

"type": "string",

"defaultValue": ""

},

"APIManagementSku": {

"type": "string",

"defaultValue": "Developer"

},

"APIManagementSkuCapacity": {

"type": "string",

"defaultValue": "1"

},

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

},

"PublisherName": {

"type": "string",

"defaultValue": "Eldert Grootenboer"

},

"PublisherEmail": {

"type": "string",

"defaultValue": "me@mydomaintwo.com"

}

},

"resources": [

{

"apiVersion": "2017-05-10",

"name": "instanceTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/instance.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementSku": {"value": "[parameters('APIManagementSku')]" },

"APIManagementSkuCapacity": {"value": "[parameters('APIManagementSkuCapacity')]" },

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" },

"PublisherName": {"value": "[parameters('PublisherName')]" },

"PublisherEmail": {"value": "[parameters('PublisherEmail')]" }

}

}

},

{

"apiVersion": "2017-05-10",

"name": "usersTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/users.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "groupsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/groups.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "groupsUsersTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/groups-users.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'groupsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'usersTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsGroupsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products-groups.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'groupsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "subscriptionsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/subscriptions.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'usersTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "policiesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIVersionSetTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIVersionSet.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'instanceTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1Template",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIVersionSetTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1OperationsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1Operations.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv1PoliciesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv1Policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1OperationsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2Template",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIVersionSetTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2OperationsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2Operations.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "versionedAPIv2PoliciesTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/versionedAPIv2Policies.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2OperationsTemplate')]"

]

},

{

"apiVersion": "2017-05-10",

"name": "productsAPIsTemplate",

"type": "Microsoft.Resources/deployments",

"properties": {

"mode": "Incremental",

"templateLink": {

"uri":"[concat(parameters('TemplatesStorageAccount'), '/products-apis.json', parameters('TemplatesStorageAccountSASToken'))]",

"contentVersion":"1.0.0.0"

},

"parameters": {

"APIManagementInstanceName": {"value": "[parameters('APIManagementInstanceName')]" }

}

},

"dependsOn": [

"[resourceId('Microsoft.Resources/deployments', 'productsTemplate')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv1Template')]",

"[resourceId('Microsoft.Resources/deployments', 'versionedAPIv2Template')]"

]

}

]

}


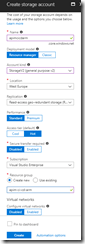

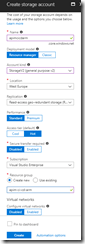

As you will notice, the first parameter is a path to a container in a storage account, so be sure to create this container. You could even do this from the build pipeline, but for this blog post we will do it manually.
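To make the expected shape of these two values more concrete, here is a minimal sketch of how the storage parameters used in the templateLink URIs could be declared. The parameter names come from the master template; the securestring type for the token and the description texts (including the example URI shape) are assumptions for illustration, so adjust them to your own setup.

```json
{
  "parameters": {
    "TemplatesStorageAccount": {
      "type": "string",
      "metadata": {
        "description": "Illustrative assumption: URI of the blob container holding the linked templates, for example https://<storage-account>.blob.core.windows.net/<container>"
      }
    },
    "TemplatesStorageAccountSASToken": {
      "type": "securestring",
      "metadata": {
        "description": "Illustrative assumption: SAS token for that container, including the leading '?', so it can be appended directly after the file name"
      }
    }
  }
}
```

Because the master template simply concatenates the container URI, the file name and the SAS token, the token has to start with a ? for the result to be a valid URL.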

Create storage account

Create container

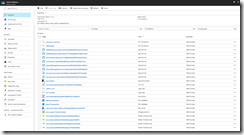

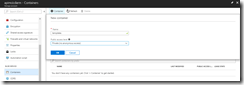

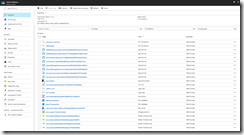

We should now have a repository filled with ARM templates, so commit and push these to the remote repository.

Overview of ARM template files

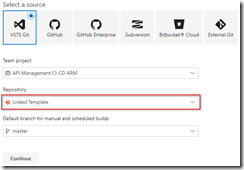

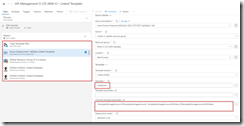

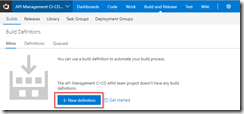

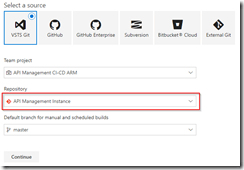

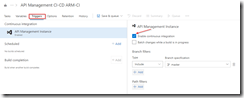

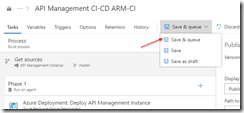

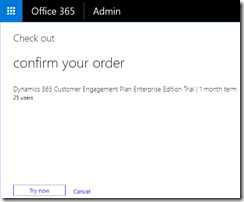

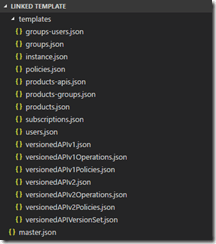

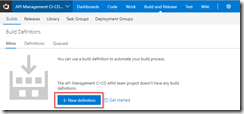

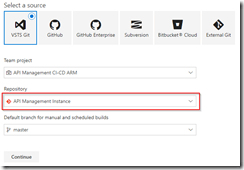

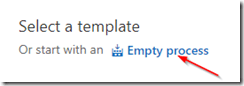

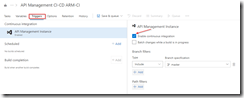

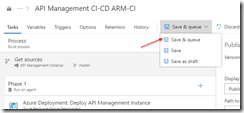

Switch to our project in VSTS, and create a build pipeline called API Management CI-CD ARM-CI – Linked Template to validate the ARM template. Remember to enable the continuous integration trigger, so our build runs every time we push to our repository.

Create build template for Linked Template repository

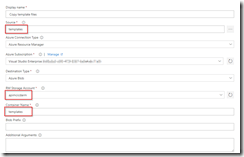

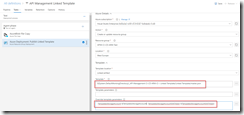

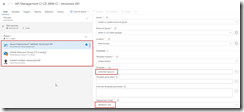

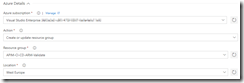

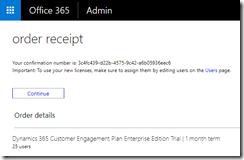

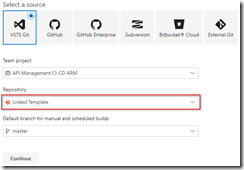

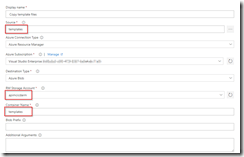

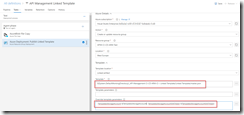

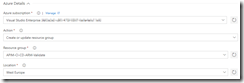

In the build definition, we will add an Azure File Copy step, which copies our linked templates to the blob container we just created, so update the settings of the step accordingly.

Set Azure File Copy step properties

This step outputs the URI and SAS token of our container, which we need in our ARM template, so make sure to set the output variable names here.

Set output names so we can use these in our ARM template

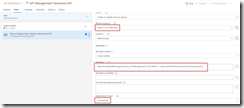

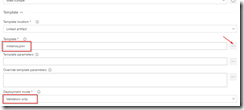

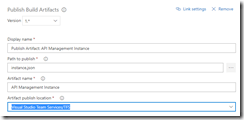

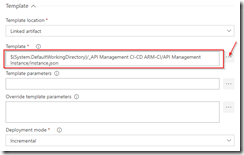

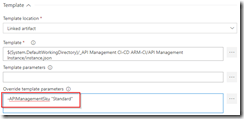

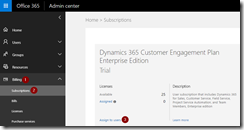

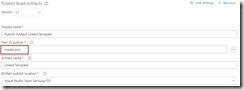

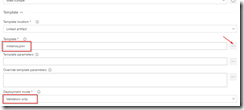

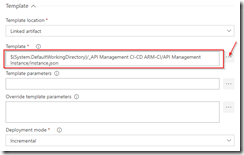

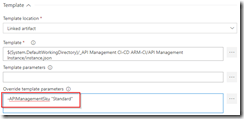

The other steps in the build pipeline are just as described in the first post in this series, except that we now use the variables from the Azure File Copy step in our Azure Resource Group Deployment step to set the location and SAS token of the storage account, and we include an extra Publish Build Artifacts step.

The complete build pipeline for validation

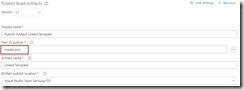

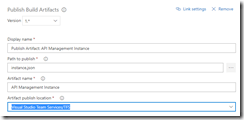

Note that in the first Publish Build Artifacts step we publish only the master.json file.

Only publish master template in first Publish Build Artifacts step

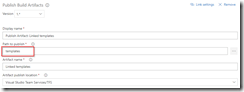

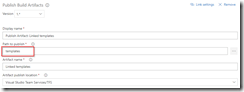

In the second Publish Build Artifacts step we publish the linked templates.

Publish templates folder in second Publish Build Artifacts step

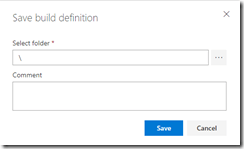

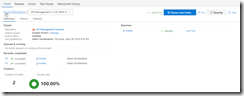

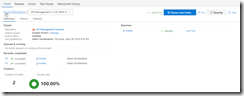

Once done, make sure to save and queue the build definition.

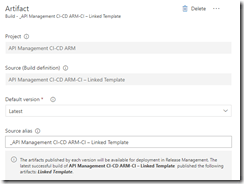

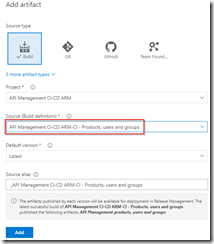

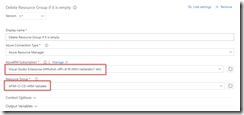

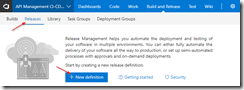

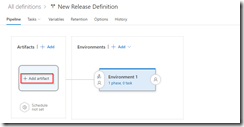

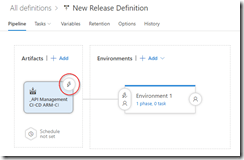

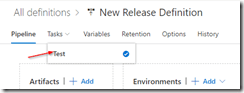

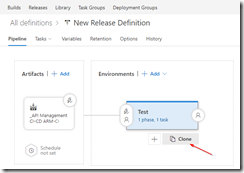

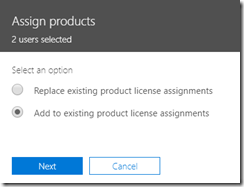

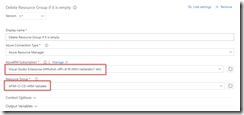

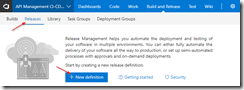

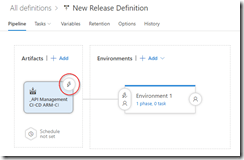

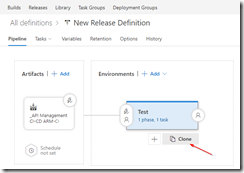

We will now create the deployment pipeline using a new release definition called API Management Linked Template. This is again almost the same as described in the first post of this series, except that we now include the Azure File Copy step, just like in the build pipeline, to copy our linked templates to the templates container.

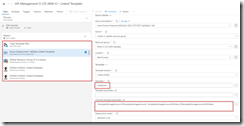

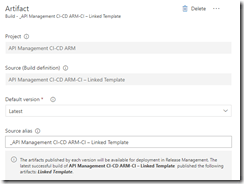

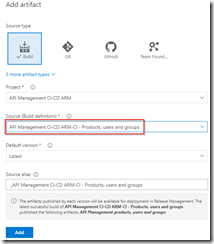

Use output from build pipeline as artifact

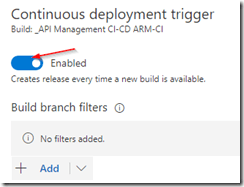

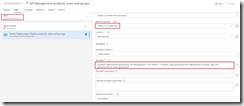

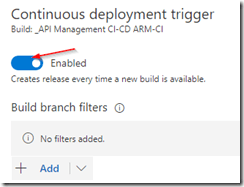

Do not forget to enable the continuous deployment trigger, so this deployment is triggered each time the build runs successfully. In the Test environment, add an Azure File Copy step to copy the linked template files to our templates container, and set variables containing the URI and SAS token.

Set properties for Azure File Copy step

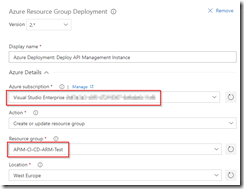

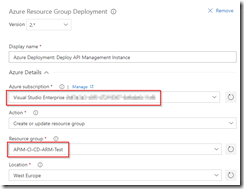

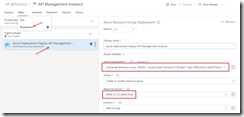

In the Azure Resource Group Deployment step use the variables from the Azure File Copy step to set the location and SAS token of the container.

Use URI and SAS token from previous step

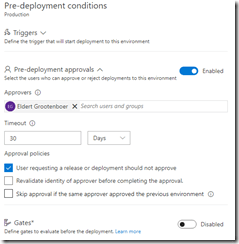

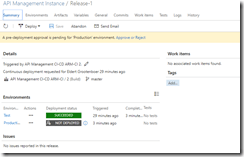

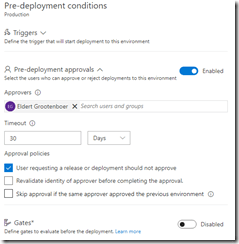

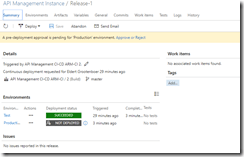

Clone the Test environment and set up the clone as the production environment. Remember to include an approval step.

Approval should be given before deploying to production

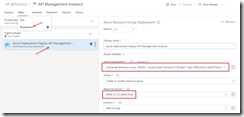

We have now finished our complete CI/CD pipeline. If we push any changes to our repository, the build is triggered, which validates the ARM templates and then uses them to deploy or update our complete API Management instance, including all users, groups, products and APIs which we defined in our templates.

API Management instance created including users, groups, products and APIs

by Eldert Grootenboer | May 28, 2018 | BizTalk Community Blogs via Syndication

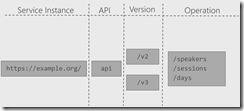

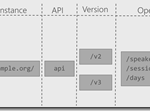

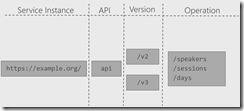

This is the fourth post in my series around setting up CI/CD for Azure API Management using Azure Resource Manager templates. So far we have created our API Management instance, added the products, users and groups for Contoso, and created an unversioned API. In this post we will create a versioned API, allowing us to run multiple versions of an API side by side.

The posts in this series are the following, this list will be updated as the posts are being published.

When working with APIs we will sometimes have to implement breaking changes to our solution. Whenever possible, we should give the consumers of our API the chance to migrate to the new implementation at their own pace, which can be done by exposing multiple versions of an API. In this post we will again be exposing the APIs.guru service through API Management, with two versions, where we remove an operation in the second version.

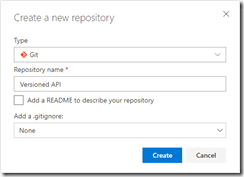

Use the guidance from the first post of this series to set up a repository and clone this to our local machine. The name of the repository we will be creating should be Versioned API, and will hold the ARM template for this post.

Create Versioned API repository

Once the GIT repository has been created and cloned to your local machine, add a file called versioned-api.json and add the following ARM template to it.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

}

},

"variables": {},

"resources": [

{

"name": "[concat(parameters('APIManagementInstanceName'), '/versionsetversionedapi')]",

"type": "Microsoft.ApiManagement/service/api-version-sets",

"apiVersion": "2017-03-01",

"properties": {

"description": "Version set for versioned API blog post",

"versionQueryName": "api-version",

"displayName": "Versioned API",

"versioningScheme": "query"

}

}

]

}


This will create the version set which is needed to create versioned APIs. In this case we will be using a query string as the versioning scheme.
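With the query scheme, a consumer selects a version through the api-version query parameter, for example a request ending in /versioned-api/list?api-version=v1. As a hedged sketch of an alternative, the same version set could use path-segment versioning instead, where the version becomes part of the URL path. The resource name, type and API version are taken from the template above; the "Segment" value follows the documented versioningScheme options as I understand them, and the comments text is illustrative only, so verify both against the API Management reference before relying on this.

```json
{
  "name": "[concat(parameters('APIManagementInstanceName'), '/versionsetversionedapi')]",
  "type": "Microsoft.ApiManagement/service/api-version-sets",
  "apiVersion": "2017-03-01",
  "comments": "Illustrative variant, not part of the original template: path-segment versioning instead of a query string",
  "properties": {
    "description": "Version set for versioned API blog post",
    "displayName": "Versioned API",
    "versioningScheme": "Segment"
  }
}
```

Note that the versionQueryName property is left out here, as it only applies to the query scheme.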

Next we will implement the two versions of the API.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

}

},

"variables": {},

"resources": [

{

"name": "[concat(parameters('APIManagementInstanceName'), '/versionsetversionedapi')]",

"type": "Microsoft.ApiManagement/service/api-version-sets",

"apiVersion": "2017-03-01",

"properties": {

"description": "Version set for versioned API blog post",

"versionQueryName": "api-version",

"displayName": "Versioned API",

"versioningScheme": "query"

}

},

{

"type": "Microsoft.ApiManagement/service/apis",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "Versioned API",

"apiRevision": "1",

"description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",

"serviceUrl": "https://api.apis.guru/v2/",

"path": "versioned-api",

"protocols": [

"https"

],

"authenticationSettings": null,

"subscriptionKeyParameterNames": null,

"apiVersion": "v1",

"apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"

},

"dependsOn": [

"[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "Versioned API",

"apiRevision": "1",

"description": "Wikipedia for Web APIs. Repository of API specs in OpenAPI(fka Swagger) 2.0 format.\n\n**Warning**: If you want to be notified about changes in advance please subscribe to our [Gitter channel](https://gitter.im/APIs-guru/api-models).\n\nClient sample: [[Demo]](https://apis.guru/simple-ui) [[Repo]](https://github.com/APIs-guru/simple-ui)\n",

"serviceUrl": "https://api.apis.guru/v2/",

"path": "versioned-api",

"protocols": [

"https"

],

"authenticationSettings": null,

"subscriptionKeyParameterNames": null,

"apiVersion": "v2",

"apiVersionSetId": "[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"

},

"dependsOn": [

"[concat(resourceId('Microsoft.ApiManagement/service', parameters('APIManagementInstanceName')), '/api-version-sets/versionsetversionedapi')]"

]

},

{

"type": "Microsoft.ApiManagement/service/products/apis",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api')]"

]

},

{

"type": "Microsoft.ApiManagement/service/products/apis",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/versioned-api-v2')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api-v2')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "Get basic metrics",

"method": "GET",

"urlTemplate": "/metrics",

"templateParameters": [],

"description": "Some basic metrics for the entire directory.\nJust stunning numbers to put on a front page and are intended purely for WoW effect :)\n",

"responses": [

{

"statusCode": 200,

"description": "OK",

"headers": []

}

],

"policies": null

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "List all APIs",

"method": "GET",

"urlTemplate": "/list",

"templateParameters": [],

"description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",

"responses": [

{

"statusCode": 200,

"description": "OK",

"headers": []

}

],

"policies": null

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "List all APIs",

"method": "GET",

"urlTemplate": "/list",

"templateParameters": [],

"description": "List all APIs in the directory.\nReturns links to OpenAPI specification for each API in the directory.\nIf API exist in multiple versions `preferred` one is explicitly marked.\n\nSome basic info from OpenAPI spec is cached inside each object.\nThis allows to generate some simple views without need to fetch OpenAPI spec for each API.\n",

"responses": [

{

"statusCode": 200,

"description": "OK",

"headers": []

}

],

"policies": null

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api-v2')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations/policies",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/getMetrics/policy')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2/\" />\r\n <rewrite-uri template=\"/metrics.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api')]",

"[resourceId('Microsoft.ApiManagement/service/apis/operations', parameters('APIManagementInstanceName'), 'versioned-api', 'getMetrics')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations/policies",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api/listAPIs/policy')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api')]",

"[resourceId('Microsoft.ApiManagement/service/apis/operations', parameters('APIManagementInstanceName'), 'versioned-api', 'listAPIs')]"

]

},

{

"type": "Microsoft.ApiManagement/service/apis/operations/policies",

"name": "[concat(parameters('APIManagementInstanceName'), '/versioned-api-v2/listAPIs/policy')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"policyContent": "[concat('<!--\r\n IMPORTANT:\r\n - Policy elements can appear only within the <inbound>, <outbound>, <backend> section elements.\r\n - Only the <forward-request> policy element can appear within the <backend> section element.\r\n - To apply a policy to the incoming request (before it is forwarded to the backend service), place a corresponding policy element within the <inbound> section element.\r\n - To apply a policy to the outgoing response (before it is sent back to the caller), place a corresponding policy element within the <outbound> section element.\r\n - To add a policy position the cursor at the desired insertion point and click on the round button associated with the policy.\r\n - To remove a policy, delete the corresponding policy statement from the policy document.\r\n - Position the <base> element within a section element to inherit all policies from the corresponding section element in the enclosing scope.\r\n - Remove the <base> element to prevent inheriting policies from the corresponding section element in the enclosing scope.\r\n - Policies are applied in the order of their appearance, from the top down.\r\n-->\r\n<policies>\r\n <inbound>\r\n <base />\r\n <set-backend-service base-url=\"https://api.apis.guru/v2\" />\r\n <rewrite-uri template=\"/list.json\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>')]"

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/apis', parameters('APIManagementInstanceName'), 'versioned-api-v2')]",

"[resourceId('Microsoft.ApiManagement/service/apis/operations', parameters('APIManagementInstanceName'), 'versioned-api-v2', 'listAPIs')]"

]

}

]

}


Here we added two versions of the API, set their operations and policies, added them to the product for Contoso, and linked them to the version set by setting the apiVersionSetId property on the APIs. Note that the second version no longer contains the getMetrics operation. We have now finished our ARM template, so commit it and push it to our repository.

Build pipeline

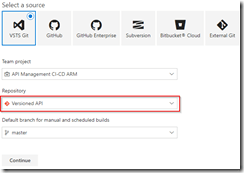

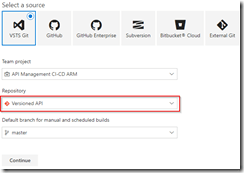

Now switch back to VSTS and create a build template called API Management CI-CD ARM-CI – Versioned API. Once again make sure to select the correct GIT repository.

Create build template for Versioned API

Once the build template has been created, make sure to enable the continuous integration trigger, and create a validation pipeline just like in the first post of this series.

Create validation build pipeline

Once finished, save and queue the build definition.

Now create a new release definition called API Management Versioned API with a continuous deployment trigger on the artifact published by the build pipeline we just created. Set up the Test environment to deploy as soon as a new artifact is available.

Set up test environment

And finally clone the Test environment, and set up the clone as the production environment. Remember to add an approval step before deploying to this environment.

Set up deployment pipeline including approvals

We have now completed our CI/CD process for the versioned API. To test it, we just make a change in the ARM template on our local machine and check it in. This starts the build pipeline, which in turn triggers the deployment pipeline that updates our API Management instance.

Versioned API has been deployed

by Eldert Grootenboer | May 21, 2018 | BizTalk Community Blogs via Syndication

This is the third post in my series around setting up CI/CD for Azure API Management using Azure Resource Manager templates. In the first post we created our API Management instance, and have set up our build and release pipelines, …

by Eldert Grootenboer | May 14, 2018 | BizTalk Community Blogs via Syndication

This is the second post in my series around setting up CI/CD for Azure API Management using Azure Resource Manager templates. In the previous post we created our API Management instance, and have set up our build and release pipelines. In this post we will add custom products, users and groups to our API Management instance, which will be used to set up our policies and access to our APIs.

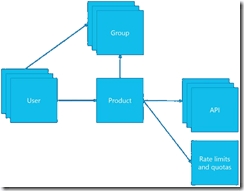

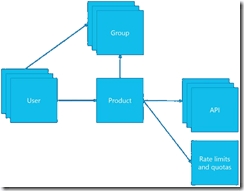

The posts in this series are the following, this list will be updated as the posts are being published.

For this post, we will be adding a new user to the API Management instance we created in the previous blog post in this series. This user will represent a client developer from the Contoso company, who will be using the APIs which we will define later on. In this scenario, Contoso consumes our APIs in their own processes. The user will be placed into a group, which represents the Contoso company. In a real-life scenario, this group would contain users for all the developers and services of this particular client. Finally, we will create a product for the Contoso company as well, and link the group to the product. The product is where we will be setting up policies and quotas, so we can limit the usage of our services.

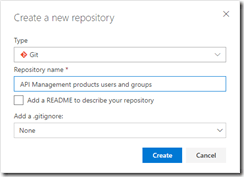

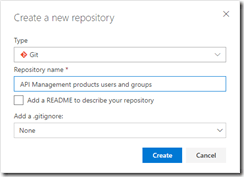

As explained in the first post in this series, we will be using different repositories for the various parts of our API Management setup. In that post, we already showed how we can set up a repository and clone this to our local machine. For this post, we will be creating a new repository, in which we will create the ARM template for our products, users and groups. Create the API Management products, users and groups repository and clone it to your machine.

Create new repository

API Management products, users and groups repository

Now we will start by creating the ARM template for adding a user for Contoso, who will be consuming our APIs. In your cloned repository, create a new file and name it products-users-groups.json, and add the following ARM template contents to the file.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

}

},

"variables": {},

"resources": [

{

"type": "Microsoft.ApiManagement/service/users",

"name": "[concat(parameters('APIManagementInstanceName'), '/john-smith-contoso-com')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"firstName": "John",

"lastName": "Smith",

"email": "john.smith@contoso.com",

"state": "active",

"note": "Developer working for Contoso, one of the consumers of our APIs",

"confirmation": "invite"

},

"dependsOn": []

}

]

}


Here we create a new user (John Smith) and add it to our API Management instance. We have the name of the instance as a parameter, so we could override this from our deployment pipeline. As you will notice, we don't set anything in our dependsOn, as the API Management instance has been created from another template. Also note the "confirmation": "invite" line, which makes sure the user receives an email at the specified address to finish the registration by setting a password.
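As a minimal sketch of overriding the instance name, a parameters file along the following lines could be passed to the deployment instead of relying on the default value. The file itself and the placeholder value are assumptions for illustration and are not part of the original repository; replace the placeholder with the name of your own instance.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "APIManagementInstanceName": {
      "value": "<name-of-your-api-management-instance>"
    }
  }
}
```

Alternatively, the same value can be supplied through the override parameters of the deployment step in the pipeline, which keeps environment-specific names out of the repository.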

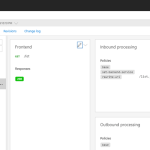

Next we will expand our ARM template to also create the group, so let’s update the ARM template to the following.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

}

},

"variables": {},

"resources": [

{

"type": "Microsoft.ApiManagement/service/users",

"name": "[concat(parameters('APIManagementInstanceName'), '/john-smith-contoso-com')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"firstName": "John",

"lastName": "Smith",

"email": "john.smith@contoso.com",

"state": "active",

"note": "Developer working for Contoso, one of the consumers of our APIs",

"confirmation": "invite"

},

"dependsOn": []

},

{

"type": "Microsoft.ApiManagement/service/groups",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "ContosoGroup",

"description": "Group containing all developers and services from Contoso who will be consuming our APIs",

"type": "custom",

"externalId": null

},

"dependsOn": []

},

{

"type": "Microsoft.ApiManagement/service/groups/users",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup/john-smith-contoso-com')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/groups', parameters('APIManagementInstanceName'), 'contosogroup')]"

]

}

]

}


Here we added two additional resources: one for the ContosoGroup group, and one to link the user to the group.

And finally, we will add a product for the Contoso consumers. On this product we will set a throttling policy, so these consumers are limited in the number of calls they can make to our APIs. Update the ARM template as follows; this will also be the final version of this ARM template.

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"APIManagementInstanceName": {

"type": "string",

"defaultValue": "MyAPIManagementInstance"

}

},

"variables": {},

"resources": [

{

"type": "Microsoft.ApiManagement/service/users",

"name": "[concat(parameters('APIManagementInstanceName'), '/john-smith-contoso-com')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"firstName": "John",

"lastName": "Smith",

"email": "john.smith@contoso.com",

"state": "active",

"note": "Developer working for Contoso, one of the consumers of our APIs",

"confirmation": "invite"

},

"dependsOn": []

},

{

"type": "Microsoft.ApiManagement/service/groups",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "ContosoGroup",

"description": "Group containing all developers and services from Contoso who will be consuming our APIs",

"type": "custom",

"externalId": null

},

"dependsOn": []

},

{

"type": "Microsoft.ApiManagement/service/groups/users",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosogroup/john-smith-contoso-com')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/groups', parameters('APIManagementInstanceName'), 'contosogroup')]"

]

},

{

"type": "Microsoft.ApiManagement/service/products",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"displayName": "ContosoProduct",

"description": "Product which will apply the high-over policies for developers and services of Contoso.",

"subscriptionRequired": true,

"approvalRequired": true,

"state": "published"

},

"dependsOn": []

},

{

"type": "Microsoft.ApiManagement/service/products/groups",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/contosogroup')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]",

"[resourceId('Microsoft.ApiManagement/service/groups', parameters('APIManagementInstanceName'), 'contosogroup')]"

]

},

{

"type": "Microsoft.ApiManagement/service/subscriptions",

"name": "[concat(parameters('APIManagementInstanceName'), '/5ae6ed2358c2795ab5aaba68')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"userId": "[resourceId('Microsoft.ApiManagement/service/users', parameters('APIManagementInstanceName'), 'john-smith-contoso-com')]",

"productId": "[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]",

"displayName": "ContosoProduct subscription",

"state": "active"

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/users', parameters('APIManagementInstanceName'), 'john-smith-contoso-com')]",

"[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]"

]

},

{

"type": "Microsoft.ApiManagement/service/products/policies",

"name": "[concat(parameters('APIManagementInstanceName'), '/contosoproduct/policy')]",

"apiVersion": "2017-03-01",

"scale": null,

"properties": {

"policyContent": "<policies>\r\n <inbound>\r\n <base />\r\n <rate-limit calls=\"20\" renewal-period=\"60\" />\r\n </inbound>\r\n <backend>\r\n <base />\r\n </backend>\r\n <outbound>\r\n <base />\r\n </outbound>\r\n <on-error>\r\n <base />\r\n </on-error>\r\n</policies>"

},

"dependsOn": [

"[resourceId('Microsoft.ApiManagement/service/products', parameters('APIManagementInstanceName'), 'contosoproduct')]"

]

}

]

}


The steps we added in this template were to create the ContosoProduct product, add ContosoGroup to the product, create a subscription for the Contoso user John Smith and link it to the product, and finally create a policy which implements throttling on the product level. Commit and push this final ARM template to your repository.
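When editing the policy by hand, keep in mind that the XML has to be embedded in the template as a single JSON string, with double quotes escaped and line breaks written as `\r\n`. The helper below is a small sketch of that conversion using only `sed` and `awk`. The function name is hypothetical, and it only handles backslashes, double quotes and line breaks (not tabs or other control characters).

```shell
#!/bin/sh
# Hypothetical helper: read policy XML on stdin and print it as a JSON string
# literal that can be pasted into the "policyContent" property.
policy_to_json_string() {
  # 1. Escape backslashes and double quotes.
  # 2. Join all lines with a literal \r\n and wrap the result in quotes.
  sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' |
    awk 'BEGIN { printf "\"" }
         NR > 1 { printf "\\r\\n" }
         { printf "%s", $0 }
         END { printf "\"\n" }'
}
```

Assuming the policy is stored in a file such as `policy.xml` (a made-up name), `policy_to_json_string < policy.xml` prints the value to use for `policyContent`.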

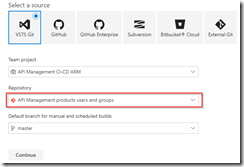

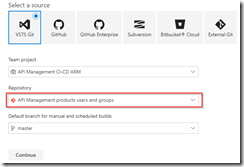

Now that we have finished our template, we will create a new build definition called API Management CI-CD ARM-CI – Products, users and groups. The exact steps for creating a build pipeline have already been described in the previous blog post. Make sure to select the correct Git repository.

Select correct Git repository

Set up the build pipeline to validate the ARM template using a continuous integration trigger and publish the template if it is correct, just like in the previous post. Once done, make sure to save and queue the build definition.

Set up build pipeline for validation
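Before pushing, the same template can also be validated from a local shell. The function below is a minimal sketch, assuming a current Azure CLI where the command is `az deployment group validate` (older CLI versions exposed it as `az group deployment validate`). The function name is hypothetical, `APIManagementInstanceName` is the template parameter used in this series, and any further parameters your template defines would need to be passed as well.

```shell
#!/bin/sh
# Hypothetical wrapper around the Azure CLI template validation command.
# $1 = resource group, $2 = path to the ARM template,
# $3 = name of the API Management instance
validate_apim_template() {
  az deployment group validate \
    --resource-group "$1" \
    --template-file "$2" \
    --parameters APIManagementInstanceName="$3"
}
```

This does not replace the validation step in the build pipeline. It only gives faster feedback while editing the template.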

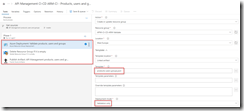

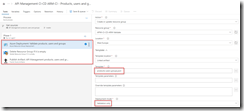

The next step will be to set up the deployment pipeline, which has also been thoroughly described in the previous post. Create a new release definition with a continuous deployment trigger, called API Management products, users and groups, and use the artifact we just published from our build pipeline.

Create release pipeline with artifact published from build pipeline

Set up the test environment to be triggered as soon as the artifact is available, and deploy to your test environment.

Deploy to test environment

Clone the Test environment and update it to deploy to your production environment. Make sure to include an approval before the deployment is done.

Deploy to production environment after approval

We have now completed our CI/CD process for the products, users and groups. To test it, we just need to make a change to the ARM template on our local machine and check it in, after which our build and deployment pipelines will kick off and update our API Management instance.

API Management instance has been updated

by Eldert Grootenboer | May 7, 2018 | BizTalk Community Blogs via Syndication

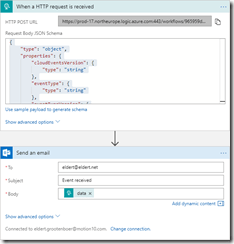

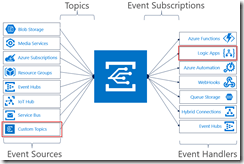

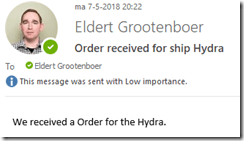

Recently Microsoft announced Azure Event Grid, a highly scalable, serverless, event-driven offering that allows us to implement publish and subscribe patterns. Event-driven scenarios are becoming more common by the day, which means that we see these types of integrations increasing a lot as well. Applications often define their own message formats for their events; however, with the recent announcement of native support for CloudEvents in Azure Event Grid, our lives should become a lot easier. CloudEvents is a standard for working with events across platforms, and gives us a specification for describing event data in a common way. This allows any platform or application which works with events to implement a common format, allowing easy integration and interoperability, for example between Azure, AWS and Oracle. The specification is still under active development, and Microsoft is one of the big contributors, especially Clemens Vasters, Lead Architect on Azure Messaging Services.

In this blog post we will look into Event Grid’s support for CloudEvents, and how to set it up. The specification for the CloudEvents message format can be found on GitHub, and how it maps to Event Grid’s own schema can be found on Microsoft Docs. For this post we will use the application created in this blog post, which generates events when an order has been placed, as well as when a repair has been requested. These events will be handled by a Logic App, which will send out an email. In a real-life scenario we could, for example, use this Logic App to place the order at the ship’s supplier. And because we are using the CloudEvents format, the application can easily integrate with any system which supports this new specification, so it is not bound to Azure alone.

Send event from custom application to Logic Apps

Currently, support for CloudEvents in Event Grid is still in preview and only available in a select group of regions (West Central US, Central US and North Europe). To use it, we need to enable an extension in the Azure CLI with the following command.

    az extension add --name eventgrid

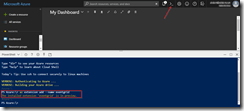

Enable Event Grid extension

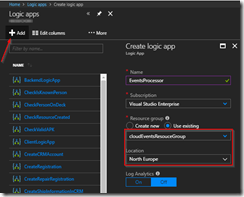

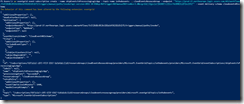

We can now create our Event Grid topic, where we will receive the events. Currently this is not yet supported in the portal, so we will stay in our Azure CLI, and give the following commands.

    az group create -l northeurope -n cloudEventsResourceGroup
    az eventgrid topic create --name cloudevents -l northeurope -g cloudEventsResourceGroup --input-schema cloudeventv01schema

The first command creates the resource group, while the second command creates the Event Grid topic. Note the input-schema switch, which allows us to set the CloudEvents format.

When the topic has been created, go to the Event Grid Topics blade in the portal, open the topic we just created, and grab the Topic Endpoint. We will need this later on.

Save the topic endpoint for later use

Switch to the Access keys for the topic, and grab one of the keys. We will need this later as well.

Also save one of the keys for later use
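Since we are already working in the Azure CLI, the endpoint and key can also be read from there instead of the portal. The two functions below are a sketch of this, using `az eventgrid topic show` and `az eventgrid topic key list`. The function names are hypothetical, and picking `key1` rather than `key2` is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helpers; $1 = topic name, $2 = resource group.

# Print the endpoint of the Event Grid topic.
topic_endpoint() {
  az eventgrid topic show --name "$1" --resource-group "$2" \
    --query endpoint --output tsv
}

# Print the first access key of the Event Grid topic.
topic_key() {
  az eventgrid topic key list --name "$1" --resource-group "$2" \
    --query key1 --output tsv
}
```

With the names used in this post, that would be `topic_endpoint cloudevents cloudEventsResourceGroup` and `topic_key cloudevents cloudEventsResourceGroup`.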

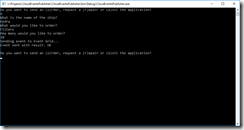

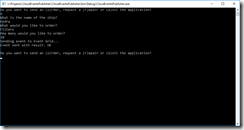

Next we will create the application which will send the events to our custom topic which we just created. For ease of this demo, this will just be a simple console application, but in a real life solution this could be any type of system. Start by creating a new solution in Visual Studio for our application.

Create console app solution

Data Classes

Add the following data classes, which describe the orders and repairs, as explained in this blog post.

    /// <summary>
    /// Event sent for a specific ship.
    /// </summary>
    public class ShipEvent
    {
        /// <summary>
        /// Name of the ship.
        /// </summary>
        public string Ship { get; set; }

        /// <summary>
        /// Type of event.
        /// </summary>
        public string Type { get; set; }
    }

    /// <summary>
    /// Used to place an order.
    /// </summary>
    public class Order : ShipEvent
    {
        /// <summary>
        /// Name of the product.
        /// </summary>
        public string Product { get; set; }

        /// <summary>
        /// Number of items to be ordered.
        /// </summary>
        public int Amount { get; set; }

        /// <summary>
        /// Constructor.
        /// </summary>
        public Order()
        {
            Type = "Order";
        }
    }

    /// <summary>
    /// Used to request a repair.
    /// </summary>
    public class Repair : ShipEvent
    {
        /// <summary>
        /// Device which needs to be repaired.
        /// </summary>
        public string Device { get; set; }

        /// <summary>
        /// Description of the defect.
        /// </summary>
        public string Description { get; set; }

        /// <summary>
        /// Constructor.
        /// </summary>
        public Repair()
        {
            Type = "Repair";
        }
    }

CloudEvents class

Add the CloudEvents class, which will be used to create a CloudEvents message which we will send to our Azure Event Grid. The schema for a CloudEvents message can be found here.

    using System;

    /// <summary>
    /// Representation of the CloudEvents specification, to be sent to Event Grid Topic.
    /// </summary>
    class CloudEvents
    {
        /// <summary>
        /// This will be used to update the Source and Data properties.
        /// </summary>
        public ShipEvent UpdateProperties
        {
            set
            {
                Source = $"{Program.TOPIC}#{value.Ship}/{value.Type}";
                Data = value;
            }
        }

        /// <summary>
        /// Gets the version number of the CloudEvents specification which has been used.
        /// </summary>
        public string CloudEventsVersion { get; }

        /// <summary>
        /// Gets the registered event type for this event source.
        /// </summary>
        public string EventType { get; }

        /// <summary>
        /// Gets the version of the eventType.
        /// </summary>
        public string EventTypeVersion { get; }

        /// <summary>
        /// Gets or sets the event producer properties.
        /// </summary>
        public string Source { get; set; }

        /// <summary>
        /// Gets the unique identifier for the event.
        /// </summary>
        public string EventID { get; }

        /// <summary>
        /// Gets the time the event is generated based on the provider's UTC time.
        /// </summary>
        public string EventTime { get; }

        /// <summary>
        /// Gets or sets the event data specific to the resource provider.
        /// </summary>
        public ShipEvent Data { get; set; }

        /// <summary>
        /// Constructor.
        /// </summary>
        public CloudEvents()
        {
            CloudEventsVersion = "0.1";
            EventID = Guid.NewGuid().ToString();
            EventType = "shipevent";
            EventTime = DateTime.UtcNow.ToString("o");
        }
    }
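To get a feel for the message this class represents, it can help to build and post one by hand. The sketch below assembles a CloudEvents 0.1 envelope with the attribute names from the specification (`cloudEventsVersion`, `eventType`, `source`, `eventID`, `eventTime`, `data`) and posts it with `curl`, authenticating with the `aeg-sas-key` header. The function names are hypothetical, the `Content-Type` value is an assumption, and no JSON escaping is done on the arguments, so they should not contain quotes or backslashes.

```shell
#!/bin/sh
# Sketch only: function names and the Content-Type header are assumptions.

# Build a CloudEvents 0.1 envelope with the same source format as the class above.
# $1 = topic resource path, $2 = ship, $3 = event type ("Order" or "Repair"),
# $4 = unique event id, $5 = event data as a JSON object
build_cloud_event() {
  printf '{"cloudEventsVersion":"0.1","eventType":"shipevent","source":"%s#%s/%s","eventID":"%s","eventTime":"%s","data":%s}\n' \
    "$1" "$2" "$3" "$4" "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$5"
}

# Post a single event to the topic.
# $1 = topic endpoint, $2 = access key, $3 = JSON body
send_cloud_event() {
  curl -sS -X POST "$1" \
    -H "aeg-sas-key: $2" \
    -H "Content-Type: application/json" \
    -d "$3"
}
```

The output of `build_cloud_event` is passed as the third argument of `send_cloud_event`, together with the topic endpoint and access key saved earlier.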

Program class

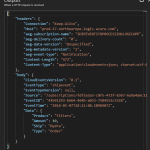

And finally we will update the Program class. Here we will get the input from the user, and create a CloudEvents message which will be sent to Event Grid. Make sure to update the topic endpoint and access key with the entries we retrieved from the portal in the previous step. Also update the topic property with your subscription id, and the resource group and topic name you used when creating the topic. One more thing to notice is that we only send a single message, instead of a List of messages as we did in this blog post. Currently CloudEvents does not support batching of events, which is why we can only send a single event at a time.

/// <summary>

/// Send CloudEvents messages to an Event Grid Topic.

/// </summary>

class Program

{

/// <summary>

/// Endpoint of the Event Grid Topic.

/// Update this with your own endpoint from the Azure Portal.

/// </summary>

private const string TOPIC_ENDPOINT = "<your-topic-endpoint>";

/// <summary>

/// Key of the Event Grid Topic.

/// Update this with your own key from the Azure Portal.

/// </summary>

private const string KEY = "<your-access-key>";

/// <summary>

/// Topic to which we will be publishing.

/// Update the subscription id, resource group and topic name here.

/// </summary>

public const string TOPIC = "/subscriptions/<your-subscription-id>/resourceGroups/<your-resource-group>/providers/Microsoft.EventGrid/topics/<your-topic-name>";

/// <summary>

/// Main method.

/// </summary>

public static void Main(string[] args)

{

// Set default values

var entry = string.Empty;

// Loop until user exits