by Dan Toomey | May 19, 2020 | BizTalk Community Blogs via Syndication

I feel very privileged to be a speaker at INTEGRATE for the 4th year in a row. Many thanks to Saravana Kumar and Kovai for the privilege & opportunity! Of course, thanks to COVID-19 this year will be a bit different… no jet lag, no expensive bar tabs, and (sadly) no catching up with my good friends & colleagues from around the world (at least not in person anyway). But on the plus side, an online event does have the potential to reach a limitless number of integration enthusiasts. And if you think that you might be one of them, here’s a discount code for you to use!

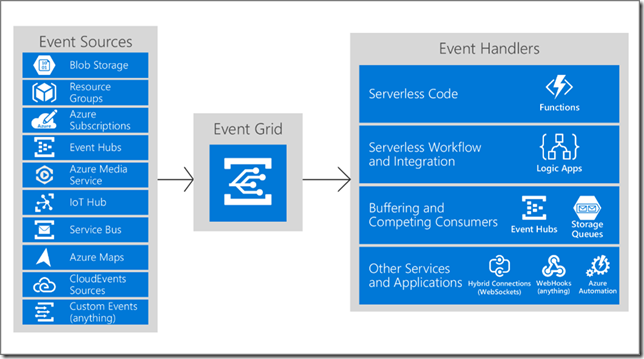

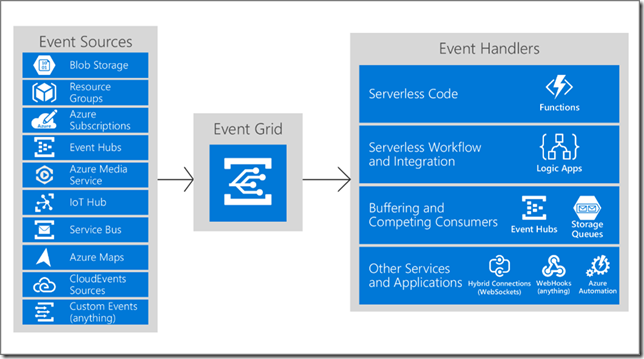

So what will my talk be about? Well, as the title suggests, you will learn about the benefits of event-based integration and how it can help modernise your applications to be reactive, scalable, and extensible. The star of the show here is Event Grid, a lynchpin capability offered as part of Azure Integration Services.

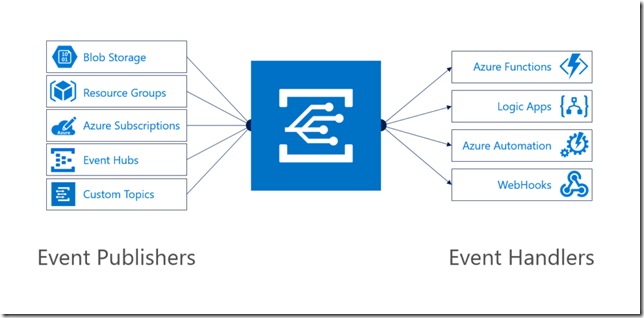

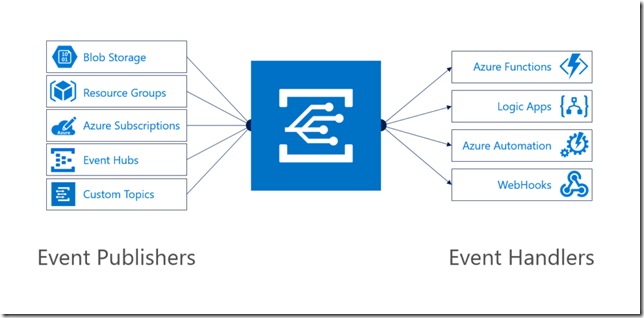

Event Grid offers a single point for managing events sourced from both inside and outside Azure, intelligently routing them to any number of interested subscribers. It not only supports first-class integration with a large number of built-in Azure services, but also supports custom event sources and routing to any accessible webhook. On top of that, it boasts low latency, massive scalability, and exceptional resiliency. It even supports the CloudEvents specification for describing events, as well as your own custom schemas.
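
To make the CloudEvents mention concrete, here is a minimal Python sketch of a CloudEvents 1.0 envelope in structured JSON form. The attribute names (specversion, id, source and type, which are required, plus the optional time, datacontenttype and data) come from the CloudEvents specification; the event type, source path and payload below are hypothetical placeholders. The sketch only shapes the payload and does not publish anything to an Event Grid topic.

```python
import json
import uuid
from datetime import datetime, timezone


def make_cloud_event(event_type: str, source: str, data: dict) -> dict:
    """Build a CloudEvents 1.0 envelope in structured (JSON) form."""
    return {
        # Required context attributes
        "specversion": "1.0",
        "id": str(uuid.uuid4()),  # unique per event from this source
        "source": source,
        "type": event_type,
        # Optional attributes
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


# Hypothetical event describing a change to an Azure resource
event = make_cloud_event(
    event_type="com.example.resource.updated",
    source="/subscriptions/demo/resourceGroups/demo-rg",
    data={"resourceName": "demo-vm", "status": "Succeeded"},
)
print(json.dumps(event, indent=2))
```

Subscribers can then route or filter on the type and source attributes without needing to understand the data payload.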

My talk will feature a demo showing how Event Grid easily enables real-time monitoring of Azure resources – but this is only one of many supported scenarios. Register for INTEGRATE 2020 Remote so you can attend not only this session but also 40 other topics presented by 30+ integration experts from around the world! Use the discount code INT2020-SPEAKER-DAN to get 15% off any ticket price.

by Dan Toomey | Sep 26, 2019 | BizTalk Community Blogs via Syndication

Microsoft MVPs are recognised for their voluntary contributions to the technical community. There are many types of eligible contributions, but one of my more notable ones was serving as a user group leader. This is a significant undertaking, and in this post I hope to outline some of the aspects of the commitment and also some lessons I’ve learned over my 14 years of fulfilling this duty.

My Experience

In 2005, I was asked by Microsoft to start the Brisbane BizTalk User Group. The motivation came through working for one of several organisations that adopted BizTalk Server to handle critical enterprise integration processes. As a newbie to the product, I was heavily reliant on the help I received from the very few experts around Australia and the world, including Bill Chesnut, Mick Badran, and several other MVPs who blogged about their experience. With so little available knowledge and experience in Brisbane, Microsoft’s Geoff Clarke decided it would be a great idea to start a user group. It was a daunting challenge and Geoff had to twist my arm a little… but I was encouraged when over 30 people turned up at the first meetup, proving that I wasn’t alone in my struggles. I also had lots of support from Microsoft and my colleagues, and the group met monthly for years to follow.

Then in 2014, I was asked to take the reins of the Brisbane Azure User Group, which had been established by Paul Bouwer a year or two earlier. When Paul earned his “blue card” and became a Microsoft employee that year, he felt it was inappropriate for him to continue leading the group and that a community member would be better suited to the role. Again, I reluctantly agreed, on the condition that I had at least two co-organisers to help. One of these gentlemen (Damien Berry) remains a co-organiser to this day.

I’ve also run the Global Azure Bootcamp in Brisbane for four years, and the Global Integration Bootcamp for a couple of years as well.

Time Commitment

Several years ago, Greg Low led a Tech-Ed breakout session on “How to be a Good User Group Leader”. He was asked by someone whether 5-10 hours per month was a reasonable expectation for a time commitment. Greg agreed. Experience has shown me that is a pretty good estimate, at least once you get the group up & running. Initially it may take more time getting things organised. And of course, if you happen to be speaking at an event, then you would need to add those hours of preparation as well.

It certainly helps to have a co-organiser assist with various tasks. But it is vital that there is constant communication between all organisers so that everyone knows what they are responsible for. We recently had an unusual gaffe where Damien & I each invited and confirmed a different speaker for the same date. Fortunately one of them was flexible and we were able to shift him to another date. Today, with so many collaborative communication tools such as Microsoft Teams and Slack, it shouldn’t be difficult to keep all organisers informed of activities. I know some folks who live by Trello, which is another extremely useful tool for tracking tasks. We also use Microsoft OneNote to record information and share files.

Some of the tasks involved in organising just a single meetup session include:

- Finding a speaker

- Booking a venue

- Organising catering

- Advertising on social media

- Sending and tracking invites (e.g. Meetup or EventBrite)

Not to mention all of the ongoing maintenance tasks for the group, which may include:

- Securing sponsorship

- Managing finances

- Paying subscriptions & dues

Challenges

There are numerous challenges with both getting a user group off the ground and keeping it running. Here are but a few:

Generating Interest

Your user group isn’t going to be much of a community if no one shows up, right?

First and foremost, make sure your group’s area of focus has a community to support it! If the topic is too narrow, you’ll have trouble attracting enough members. If the topic is too broad, you risk overlapping and competing with other user groups in the same area (always worth checking to see that there isn’t a competing group already before you embark on this journey!) Also beware of focusing on a specific product offering, as that can limit the lifetime of the community. For example, my BizTalk User Group survived for a good five years, but because it was product based and that product had a very narrow following, it was difficult to attract a sizable audience each month. It can also tend to limit the presentation topics a bit, unless it is a very formidable product.

By contrast, the Brisbane Azure User Group has an extremely healthy membership (1600+) and we generally get a solid 30-50 attendees at each session. There is a broad range of topics that come under that heading, so we’ll never run out of things to speak about. We also manage to attract good speakers with very little effort.

Next, you’ll need to plaster your meeting announcements all over social media: Twitter, LinkedIn, Facebook, etc. It’s a good idea to set up a group page on Facebook and LinkedIn to attract members. Make sure you set up a Twitter account and a memorable hashtag so that you can be followed easily. When first getting the group started, you might ask other Meetup organisers in your area who cover related topics to plug your meeting for you. Appeal to an organisation that is invested in your user group theme (e.g. Microsoft for the Azure UG) and get them to plug your group in their community publications. Send emails to co-workers and colleagues whom you think might be interested, and invite them to bring along a friend (use discretion here; unwanted spam doesn’t help to generate interest!).

For our Azure meetups, I usually send out tweets two weeks before, one week before, and then daily from two days out to remind folks. This is of course in addition to the Meetup announcement and posts on LinkedIn, etc.
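
The reminder cadence above is easy to turn into concrete dates. This Python sketch assumes that “daily from two days out” means two days before, one day before, and the day of the meetup; the offsets list is the only thing to change if your cadence differs.

```python
from datetime import date, timedelta

# Days before the meetup on which to tweet a reminder: two weeks, one week,
# then daily from two days out (assumed to include the day itself).
REMINDER_OFFSETS = [14, 7, 2, 1, 0]


def reminder_dates(meetup: date) -> list[date]:
    """Return the reminder dates for a meetup, earliest first."""
    return [meetup - timedelta(days=days) for days in REMINDER_OFFSETS]


for day in reminder_dates(date(2019, 10, 9)):
    print(day.isoformat())
```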

Finding Speakers

This is related to the previous challenge of choosing a supportable theme/topic for your group. If it’s a rare or highly specialised focus, you may find yourself having to speak at every event! Some organisers don’t mind that, as they like having a forum to promote themselves – but chances are your following will dwindle after a short while if there isn’t enough variety.

You generally want to have speakers lined up for at least 2 or 3 months in advance. This helps keep the community engaged as well; when they see you have a solid schedule of speakers they have more confidence in the group’s vitality.

Not everyone feels comfortable with public speaking, even those who have lots of knowledge to share. One technique I find works well is occasionally hosting an “Unconvention Night”: instead of featuring one or two main speakers, the event is dedicated to a series of short, sharp talks of about 10-15 minutes each. This is a lot less intimidating and can serve as an initiation for future speakers, as they speak about something really focussed, with or without slides or demos. It can be a stepping stone for inexperienced speakers to build more confidence.

You can also put out Calls For Papers (CFPs) to solicit speakers. There are many people (like some of us Microsoft MVPs) who actually seek out opportunities to engage with the community via public speaking. Two sites that I know of are Sessionize and PaperCall. Be specific about the topic scope you want. You can also use social media to solicit potential speakers.

Lastly, be sure to treat your speakers well! They donate a lot of their time preparing their talks and deserve to be recognised for this. Make sure you prepare a nice introduction and… introduce them! Also be on hand beforehand to help them get set up with A/V equipment, etc. Make sure they know their time constraint well in advance. If you intend to record them, be sure they are comfortable with that first. And I always like to give my speakers a gift as a token of appreciation – usually a bottle of wine or perhaps a gift card of some sort.

When other people see the benefits your speakers are afforded, they will have more incentive to step forward and offer themselves to speak at a future event.

Finding Sponsorship

User groups take money to run, if not to pay for a venue then almost certainly to provide catering. Most user group attendees expect to have pizza or something similar on offer, especially for evening or lunchtime events. Moreover, they are used to the events being free of charge. Unless you are independently wealthy or very generous, you’ll need sponsorship of some sort.

There are a lot of companies out there who want the publicity and advertising opportunities that come with sponsoring communities. But you may have to do some searching. Start with your own company! Chances are that the user group you started centres on a technology or subject related to your work. If not, reach out to companies that have an interest in your subject matter, as they know that attendees are possible customers.

Sponsors of course will want something in return. You can offer them the opportunity to display a banner or poster at the meetup site. You can acknowledge them with their logo on your group’s website or Meetup site. Perhaps even offer them a brief presentation slot occasionally to promote their product or services. But be careful to set clear boundaries. Never offer your group mailing list to a sponsor! This is a terrible violation of privacy and trust, and it is the fastest way to lose members at best, and invite legal action at worst.

Remember that your caterer of choice can be a sponsor as well. For example, our Azure group orders from Crust Pizza, who offer special services for us: they come in earlier than usual to cook the pizzas and usually throw in free soft drinks. Be sure to promote their logo on your site as well, as either a sponsor or a preferred caterer.

There are different ways that sponsors can help, for example paying the caterers directly, providing a venue for free, etc. In our case, Microsoft Brisbane provides the venue for free, including a host who kindly stays back late (and often presents for us too). I find the most convenient arrangement is a sponsor who provides a fixed monthly stipend, as this can be used to serve multiple expense types (catering, subscription fees, travel costs for speakers, swag/prizes, etc). Of course you will need to set up a bank account for this, and that can be tricky in itself.

Finding a Venue

This is often a big stumbling block for some cities. Venues for hire are typically very expensive. The best solution is if your employer can accommodate a large meeting space, or perhaps another business that chooses to donate a space as sponsorship. Other options are university or community spaces. Some of these may come with a price tag, but will be much cheaper than commercial hosting venues. In Brisbane, we have used The Precinct for an event by just paying a nominal cleaning fee, as well as QUT Gardens Point for another event at a reasonable price. I’m sure there would be similar spaces in other cities. Fortunately, Microsoft Brisbane is extremely generous in providing a large theatre for our regular meetings, all for free.

If you’re lucky enough to find a free venue, make sure you are respectful to the owners by leaving the place clean and tidy afterwards. And of course don’t forget to acknowledge the venue provider as a sponsor!

Other Tips and Pitfalls

Here’s a random collection of other tips and traps:

- Event publication and tracking – You may not like paying the subscription fee for Meetup, but by golly it is worth it. No other tool I’m aware of is designed to support user groups as well. You can schedule events, send out announcements, track attendance, and post related artefacts (e.g. links to PowerPoint slides, sample code, pictures, etc) all in one tool. Some folks use EventBrite to track “tickets”, which also has some good features. One thing I would warn against… use one tool or the other to track RSVPs for a given event, but not both. That will just create confusion as some people will respond on one, some on the other, and some on both. Keeping the RSVPs to one tool will make life a lot simpler for you and more reassuring for your members.

- Getting the catering right – The trouble with free events is that a lot of people will RSVP and then just not show up. This is a big bugbear for me, but there’s not much you can do about it. I’ve learned to expect about 30% attrition and cater accordingly. Only on rare occasions have we run out of food or drink because I underestimated.

- Wasted tickets – The second biggest bugbear for me. If your venue is limited in size and you have to issue a capped number of tickets for your event (EventBrite is really good for managing a waitlist, by the way), the no-shows are even more bothersome because potentially there were other more interested (and responsible) parties who missed out because your event sold out. Some organisers keep track of these ill-mannered folks and put them on a “black list” for future events (I don’t – but I can certainly understand the motivation).

- Alcohol – If you’re going to serve alcohol at your event(s), be sure to check with the venue first and make certain that there are no rules or restrictions. You may also want to consider if liability insurance might be required.

- Keeping it going – If your meetups are intended to be regular (i.e. monthly), do your best to keep that rhythm and not miss a month. It’s also best to keep it to the same night (e.g. the 2nd Wednesday of each month) as your members will get used to that pattern and attendance will be more regular. If you have to move an event off the usual schedule (perhaps because of a public holiday or to accommodate an out-of-town speaker), then be sure to give plenty of notice and broadcast at least twice as much as usual on social media. A member who turns up at the normal day/time expecting a meeting only to be disappointed is likely to leave your group with a bad taste in his/her mouth.

- Member buy-in – Ask your attendees what topics they are interested in hearing about. This is best done live in a meeting, as those that actually turn up should be rewarded by having influence. Then do your best to find speakers on those topics. Remind your members that this is their community – and that they can and should take some ownership in terms of where it goes.

- Extend the Reach – Nothing beats a live event. However, if you can convince your speaker to allow a recording, publishing the video presents an opportunity to reach more people, even from around the globe. Just be aware that not every speaker will agree to this; don’t push them if they are uncomfortable. You could always invite them to make their own offline recording if they wish. If you can afford the equipment, I’ve found the RØDELink Filmmaker wireless mic to be excellent for crystal clear sound quality. For recording software, I use Camtasia, but there are free programs out there as well, for example OBS. Just be aware that editing these recordings can take time. For an example of how this can work, please visit the Brisbane Azure User Group YouTube channel where we have posted a number of session recordings.

- Be welcoming! – Make your members feel appreciated. Ensure they get a nice welcome email when they register for your group. Make an effort to meet and greet newcomers. Try to learn their names so that you can greet them the next time they turn up (“Hey Bob! Great to see you again!”). A large benefit of live meetups is the networking and social aspect; make the most of it! Members are likely to come back more often if they get a warm & fuzzy feeling. If they are ignored and/or feel unappreciated… well then you know what to expect.
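
The catering rule of thumb from the tips above (expect roughly 30% of RSVPs not to show) reduces to a one-line estimate. A minimal Python sketch; the 30% default is the author’s observation from his own meetups rather than a universal figure, and catering_headcount is a hypothetical helper name.

```python
def catering_headcount(rsvps: int, attrition_pct: int = 30) -> int:
    """Estimate how many people to cater for, given an expected no-show rate."""
    if rsvps < 0 or not 0 <= attrition_pct < 100:
        raise ValueError("need rsvps >= 0 and 0 <= attrition_pct < 100")
    # Integer ceiling division: round up so nobody misses out on pizza
    return -(-rsvps * (100 - attrition_pct) // 100)


print(catering_headcount(80))  # 56
print(catering_headcount(45))  # 32
```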
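
For the “same night each month” tip, the date of a recurring slot such as the 2nd Wednesday can be computed rather than looked up. A small Python sketch using only the standard library; weekdays follow Python’s convention (Monday is 0), and nth_weekday is a hypothetical helper name.

```python
import calendar
from datetime import date


def nth_weekday(year: int, month: int, weekday: int, n: int) -> date:
    """Return the n-th (1-based) occurrence of a weekday in a month.

    weekday follows Python's convention: Monday == 0 ... Sunday == 6.
    """
    first = date(year, month, 1)
    # Days from the 1st to the first occurrence of the requested weekday
    offset = (weekday - first.weekday()) % 7
    day = 1 + offset + 7 * (n - 1)
    days_in_month = calendar.monthrange(year, month)[1]
    if n < 1 or day > days_in_month:
        raise ValueError(f"no occurrence #{n} of that weekday in {year}-{month:02d}")
    return date(year, month, day)


print(nth_weekday(2019, 10, calendar.WEDNESDAY, 2))  # 2019-10-09
```

Generating the next few months of dates this way also makes it easy to spot clashes with public holidays early, so any rescheduling can be announced well in advance.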

Summary

Running a user group takes some time, effort and planning – but it is a very rewarding experience, especially if you can build up a healthy attendance. Forums and blogs are useful, but nothing beats the impact of live presentations, not to mention the networking opportunities of meeting people who share the same passion as you.

by Dan Toomey | Aug 6, 2019 | BizTalk Community Blogs via Syndication

Last week, Microsoft responded to numerous requests from the community by announcing a new developer tier offering for Integration Service Environments (ISE). The ISE has been generally available for several weeks, but the single available SKU prior to this announcement carried a hefty price tag.

I had the great honour and privilege of speaking about ISE at the INTEGRATE 2019 conference at Microsoft headquarters in Redmond, WA (USA) last month. My topic was Four Scenarios for Using an Integration Service Environment, which attempted to shed some light on what type of situations would justify using this flat-cost product as opposed to the consumption-based serverless offering of Logic Apps.

While this presentation hopefully piqued interest in the offering, one of the burning questions from the attendees was “When will a lower cost developer SKU be available so we can try it out?” All Microsoft was able to say at that point was, “Soon.” Well, at least they were right, as it is now available only a few weeks later!

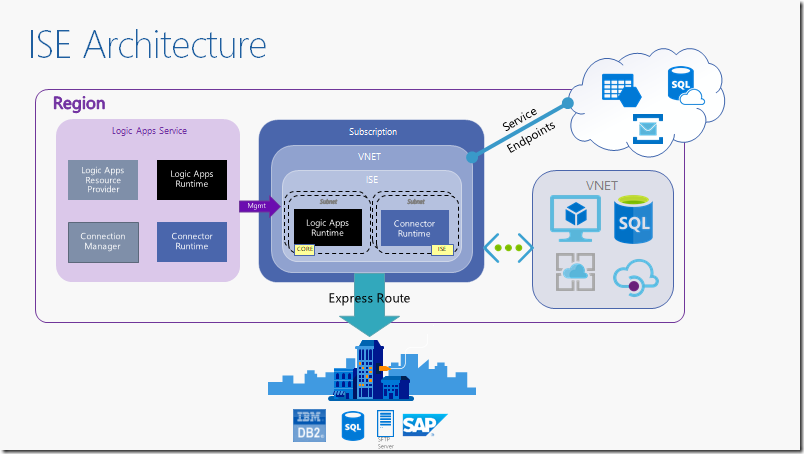

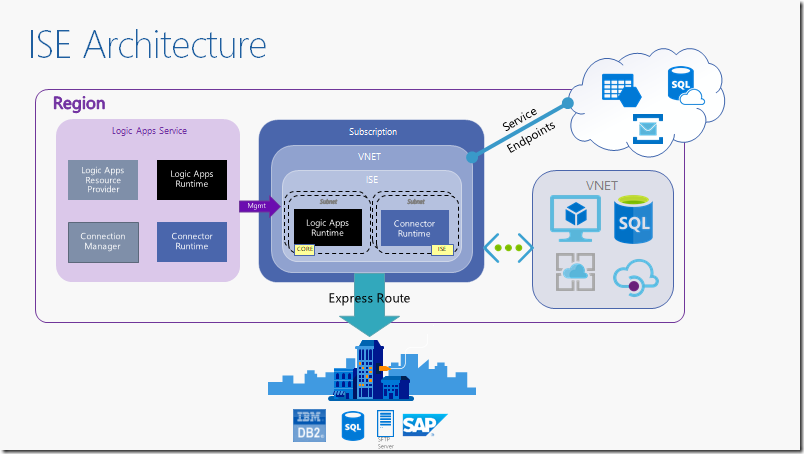

What is an Integration Service Environment?

The ISE is a relatively recent offering within Microsoft’s Azure Integration Services that enables you to provision Logic Apps and Integration Accounts within a dedicated environment – much like App Service Environments enable you to do the same for App Services. The advantages of this include:

- Predictable performance

- VNET connectivity

- Isolated storage

- Higher data transfer rates (especially for hybrid scenarios)

- Flat cost

The VNET connectivity is a large driving factor for using an ISE. Consumption-based Logic Apps do not offer any direct integration with VNETs – they operate within a multi-tenant context inside of each Azure location. This means that all connectors share the same pool of static outbound IP addresses for all instances deployed to that region. It also means that performance can potentially be affected by “noisy neighbours”.

Because an ISE is injected into a VNET that you create, all the runtime components now operate within that VNET and as such are isolated from other resources in that region. It also gives you the ability to put network-level security controls around your Logic Apps (for example using Network Security Groups), and also explicitly control the scaling of your application as opposed to relying on the reactive scaling offered by the serverless option (mind you, auto-scaling rules are still available within an ISE).

Flat-Cost Model

Unlike the consumption-based serverless offering of Logic Apps which charges per action execution, ISE runs at a flat hourly cost. The cost is calculated at a scale unit level. The base offering includes a single scale unit which can accommodate roughly 160 million action executions per month. Additional scale units will add capacity for about 80 million action executions each, and each costs 1/2 the price of the base unit.
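
The scale-unit arithmetic can be sketched as follows. This Python snippet uses only the approximate figures quoted above (about 160 million action executions per month for the base unit, and about 80 million per additional unit at half the base price) and expresses cost as a multiple of the base unit rather than in dollars, since actual prices vary by region and over time; consult the Logic Apps pricing page for real numbers.

```python
# Approximate figures quoted in the post; check current pricing docs.
BASE_CAPACITY = 160_000_000   # action executions/month for the base unit
EXTRA_CAPACITY = 80_000_000   # per additional scale unit
EXTRA_COST_RATIO = 0.5        # each extra unit costs half the base unit


def ise_sizing(monthly_actions: int) -> tuple[int, float]:
    """Return (additional scale units needed, cost as a multiple of the base unit)."""
    overflow = max(0, monthly_actions - BASE_CAPACITY)
    extra_units = -(-overflow // EXTRA_CAPACITY)  # integer ceiling division
    return extra_units, 1 + EXTRA_COST_RATIO * extra_units


print(ise_sizing(100_000_000))  # (0, 1.0)
print(ise_sizing(300_000_000))  # (2, 2.0)
```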

While it may seem that the cost benefit is only realised in very high-volume scenarios, you need to consider that the base unit also includes a Standard Integration Account (worth ~US$1000/month) as well as an Enterprise Connector with unlimited connectivity (these connectors are charged at a higher rate in the consumption-based model). And that’s not considering the other benefits of an ISE (VNET connectivity, isolation, etc) that may justify the investment. It is worth noting that VNET integration for most other Azure services generally requires a premium tier or plan (e.g. Service Bus, API Management, Functions, Event Hubs).

Developer SKU

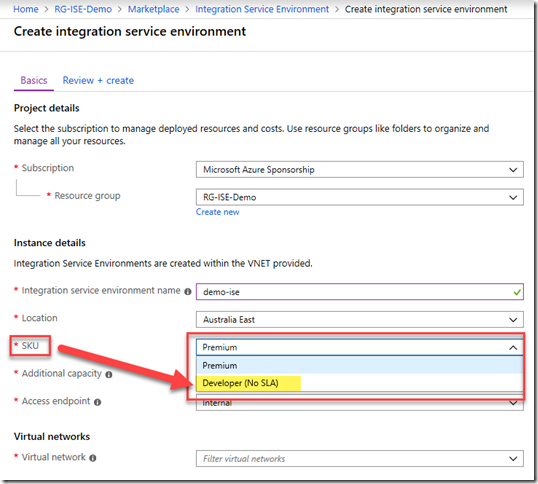

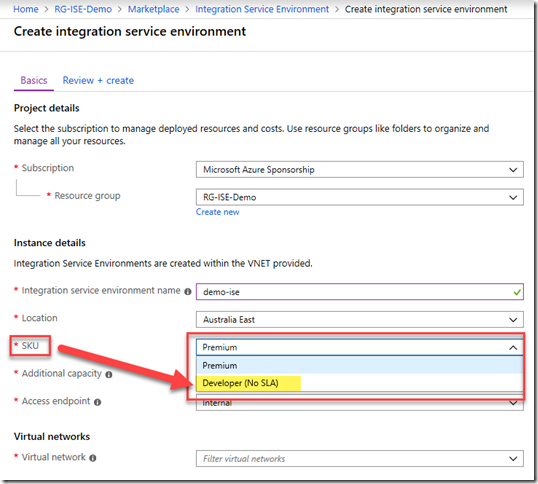

Still, the cost of an ISE in production is a significant investment, just as it is for an ASE. That can make it an expensive proposition, particularly for deployment into non-production environments (DEV, TEST). Initially, the ISE was only available in one SKU.

That is why the announcement last week was so greatly anticipated. The new developer pricing tier allows organisations to deploy and test an ISE in non-production environments at approximately one-sixth of the base cost of the production SKU (please refer to the Logic Apps pricing page). This makes it a much more affordable proposition and opens up the ISE to many organisations that would otherwise be unable to afford it.

Of course, this developer tier offers no SLA, does not support additional scale units, and includes only a free integration account – so it is definitely not suited for production use. It is a good idea to review the artefact limits imposed on integration accounts.

To choose the developer tier, simply select the non-premium option from the SKU drop-down list when creating your ISE.

For detailed instructions on how to provision an ISE including all of the pre-requisites, please refer to the Microsoft documentation.

Summary

Azure Integration Service Environments offer a solid solution for integrating your Logic Apps with a VNET, enabling your entire integration solution to be managed within the controls of a private network. It enables additional security controls and better performance in hybrid scenarios over the On-Premises Data Gateway, whilst reducing the impact of “noisy neighbours”. The introduction of the developer pricing tier now makes it much more affordable for enterprises to build and test solutions using an ISE before committing to production.

This article has been cross-posted. The original post may be found here.

by Dan Toomey | Mar 5, 2019 | BizTalk Community Blogs via Syndication

A few weeks ago I had the great privilege of presenting a 60-minute breakout session at Microsoft Ignite | The Tour in Sydney. It was thrilling to have over 200 people registered to see my topic “Seamless Deployments with Azure Service Fabric”, especially in the massive Convention Centre.

In the session I demonstrated the self-healing capabilities of Service Fabric by introducing a bug in the code and then attempting a rolling upgrade. It was impressive to see how Service Fabric detected the bug after the first node was upgraded and then immediately started rolling it back.
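
The behaviour in that demo can be illustrated with a toy simulation: upgrade one node at a time, run a health check after each, and roll every node back to its previous version on the first failure. This Python sketch is not Service Fabric’s implementation (real monitored upgrades proceed by upgrade domain, with configurable health policies and timeouts); the health check is a hypothetical stand-in.

```python
from typing import Callable


def rolling_upgrade(nodes: dict[str, str], new_version: str,
                    is_healthy: Callable[[str, str], bool]) -> bool:
    """Upgrade nodes one at a time; roll all of them back on a failed health check.

    nodes maps node name -> deployed version and is updated in place.
    """
    previous = dict(nodes)  # snapshot used for rollback
    for node in nodes:
        nodes[node] = new_version
        if not is_healthy(node, new_version):
            nodes.update(previous)  # restore every node's prior version
            return False
    return True


def healthy_unless_buggy(node: str, version: str) -> bool:
    """Hypothetical health check: the 'buggy' build fails on any node."""
    return "buggy" not in version


cluster = {"node0": "1.0.0", "node1": "1.0.0", "node2": "1.0.0"}
result = rolling_upgrade(cluster, "2.0.0-buggy", healthy_unless_buggy)
print(result)   # False
print(cluster)  # all nodes back on 1.0.0
```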

As you can imagine, it took a fair amount of practice to get the demo smooth and functioning within the tight time limits of the average audience attention span. (In fact, I had to learn how to tweak both the cluster and the application health check settings to shorten the interval – perhaps the subject of another blog post!) Naturally this also entailed frequently “resetting” the environment so that I could start over when things didn’t go quite as planned, or if I wanted to reset the version number. If you’ve ever worked with Service Fabric before you would know that deployments from Visual Studio (or Azure DevOps) can take a while; and undeploying an application from Service Fabric manually in the portal is painful!

For example, if I want to undeploy an application from a Service Fabric cluster in the web-based Service Fabric Explorer, I have to do the following in this order:

- Remove the service

- Remove the application

- Unregister the application type

- Remove the application package

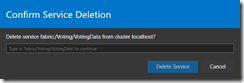

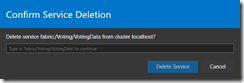

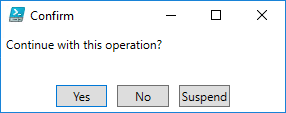

What becomes really annoying is that each step elicits a confirmation prompt where you need to type the name of the artefact you want to remove! That gets old pretty fast.

Thankfully, there is an alternative. Service Fabric offers a number of different ways to deploy applications, including Visual Studio, Azure CLI, and PowerShell. Underneath the covers I expect these all make use of the REST API. But in my case I found the simplest and most efficient choice was PowerShell. Using the documented commands, it is easy to create a script that will deploy or undeploy your application package in seconds. And I mean seconds! It was astounding to see how quickly the undeploy script could tear down the application!

The script I created is available in my demo code on GitHub. I’ll walk through some of it here.

Pre-requisites

First, you need the Service Fabric PowerShell module installed. This is included when you install the Service Fabric SDK, but you must enable PowerShell script execution (via the execution policy) first.

Second, in order for the Deploy_SFApplication.ps1 script to work, you must have already packaged the application. You do this by right-clicking the Service Fabric project in Visual Studio (not the solution file!) and selecting “Package”. The path to this package is a mandatory parameter for this script. The Undeploy_SFApplication.ps1 script does not require this.

Parameters

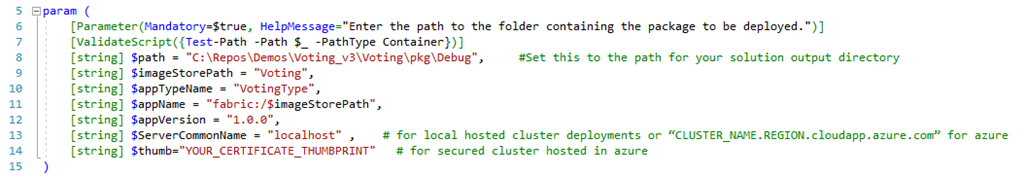

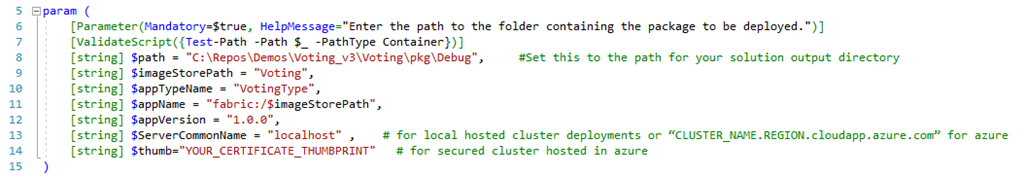

To make the scripts reusable with the minimum amount of change, I’ve parameterized all of the potentially variable settings:

| Parameter Name | Description | Example/Default Value |
| --- | --- | --- |
| path | This is the path to your packaged application. (This parameter is not required for the Undeploy_SFApplication.ps1 script.) | C:\Repos\Demos\Voting_v3\Voting\pkg\Debug |
| imageStorePath | Where you want the package stored when uploaded to Service Fabric. Typically this can be the application name, perhaps with a version. | Voting |
| appTypeName | Usually the app name with “Type” appended | VotingType |
| appName | Must be prefixed with “fabric:/” | fabric:/Voting |
| appVersion | IMPORTANT! You cannot deploy a version that already exists; the deployment will fail. | 1.0.0 |
| ServerCommonName | If using your local development cluster, just “localhost”. Otherwise, if in Azure, “CLUSTER_NAME.REGION.cloudapp.azure.com” | myCluster.australiaeast.cloudapp.azure.com |
| clusterAddress | Append the port number (usually 19000) to the $ServerCommonName variable | "$($ServerCommonName):19000", which resolves to myCluster.australiaeast.cloudapp.azure.com:19000 |
| thumb | The thumbprint of the certificate used for a secured cluster (not generally required for a local cluster). NOTE: The script currently looks for the certificate in the current user’s personal store, but this could easily be parameterized. | See https://docs.microsoft.com/en-us/dotnet/framework/wcf/feature-details/how-to-retrieve-the-thumbprint-of-a-certificate |

Script Execution Steps

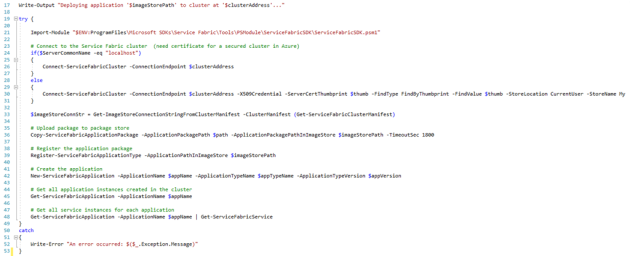

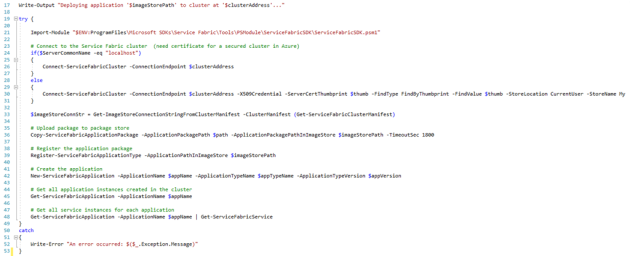

The first thing we do is import the appropriate module:

```powershell
Import-Module "$ENV:ProgramFiles\Microsoft SDKs\Service Fabric\Tools\PSModule\ServiceFabricSDK\ServiceFabricSDK.psm1"
```

Then it’s simply a matter of following the documented commands, substituting the variables as appropriate in order to:

- Connect to the cluster

- Upload the package to the package store

- Register the application type

- Create the application instance
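
The deploy sequence can be sketched as a small Python helper that assembles the PowerShell commands from the parameters in the table, which makes the ordering and the parameter mapping explicit. This is an illustration, not the author’s script: the cmdlets are from the Service Fabric PowerShell module, but the exact switches in Deploy_SFApplication.ps1 may differ, and the certificate switches needed for a secured Azure cluster are omitted.

```python
def deploy_commands(path: str, image_store_path: str, app_type_name: str,
                    app_name: str, app_version: str,
                    server_common_name: str = "localhost",
                    port: int = 19000) -> list[str]:
    """Return the PowerShell deploy steps, in order, for the given parameters."""
    if not app_name.startswith("fabric:/"):
        raise ValueError("app_name must be prefixed with 'fabric:/'")
    cluster_address = f"{server_common_name}:{port}"
    return [
        # 1. Connect to the cluster (certificate switches omitted)
        f"Connect-ServiceFabricCluster -ConnectionEndpoint {cluster_address}",
        # 2. Upload the package to the image store
        f'Copy-ServiceFabricApplicationPackage -ApplicationPackagePath "{path}" '
        f"-ApplicationPackagePathInImageStore {image_store_path}",
        # 3. Register the application type
        "Register-ServiceFabricApplicationType "
        f"-ApplicationPathInImageStore {image_store_path}",
        # 4. Create the application instance
        f"New-ServiceFabricApplication -ApplicationName {app_name} "
        f"-ApplicationTypeName {app_type_name} -ApplicationTypeVersion {app_version}",
    ]


commands = deploy_commands(r"C:\Repos\Demos\Voting_v3\Voting\pkg\Debug",
                           "Voting", "VotingType", "fabric:/Voting", "1.0.0")
print("\n".join(commands))
```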

My Deploy_SFApplication.ps1 script also prints out the application instance details as well as the associated service instance details:

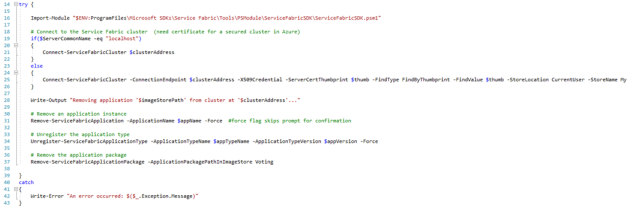

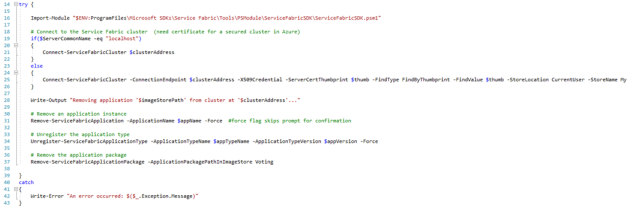

The Undeploy_SFApplication.ps1 script does much the same, except in reverse of course:

- Connect to the cluster

- Remove the application instance

- Unregister the application type

- Remove the application package

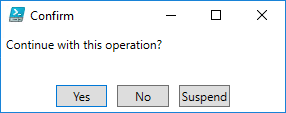

The use of the -Force flag means that when you run this script you will NOT be prompted for confirmation at each removal step.

Whilst the deployment script takes about 20 seconds for this Voting application, the undeploy script takes less than five seconds!
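The "same steps in reverse" relationship between the two scripts can be sketched as data. This Python sketch uses only the standard Service Fabric PowerShell cmdlet names for each step; parameters are deliberately omitted, and `DEPLOY_STEPS`, `UNDO` and `undeploy_plan` are hypothetical names rather than anything from the downloadable scripts.

```python
from typing import List, Tuple

# Deployment steps in order: (description, Service Fabric cmdlet)
DEPLOY_STEPS: List[Tuple[str, str]] = [
    ("Connect to the cluster", "Connect-ServiceFabricCluster"),
    ("Upload the package to the image store", "Copy-ServiceFabricApplicationPackage"),
    ("Register the application type", "Register-ServiceFabricApplicationType"),
    ("Create the application instance", "New-ServiceFabricApplication"),
]

# Each state-changing deployment cmdlet paired with the cmdlet that undoes it
UNDO = {
    "Copy-ServiceFabricApplicationPackage": "Remove-ServiceFabricApplicationPackage",
    "Register-ServiceFabricApplicationType": "Unregister-ServiceFabricApplicationType",
    "New-ServiceFabricApplication": "Remove-ServiceFabricApplication",
}


def undeploy_plan(deploy_steps: List[Tuple[str, str]]) -> List[str]:
    """Connect first, then undo the remaining deployment steps in reverse order."""
    connect = deploy_steps[0][1]
    undo = [UNDO[cmdlet] for _, cmdlet in reversed(deploy_steps[1:])]
    return [connect] + undo
```

Reversing the order matters: an application type cannot be unregistered while an application instance of that type still exists, so the instance is removed first.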

As mentioned previously, the scripts are freely downloadable along with the rest of the demo code on GitHub. I’m no PowerShell guru, so I’m sure there’s plenty of room for improvement. Send me a pull request if you have any suggestions! And feel free to get in touch if you have any questions.

This post was originally published on Deloitte’s Platform Engineering blog.

by Dan Toomey | Dec 10, 2018 | BizTalk Community Blogs via Syndication

First of all, I’d like to apologise to all grandmothers out there… I mean you no disrespect. It’s just meant to be a catchy title, really. I know grandmothers who are smarter than most of us.

A couple of months ago I had the privilege of speaking at the API Days event in Melbourne. My topic was Building Event-Driven Integration Architectures, and within that talk I felt a need to compare events to messages, as Clemens Vasters did so eloquently in his presentation at INTEGRATE 2018. In a slight divergence within that talk, I highlighted three common messaging patterns using a pizza-based analogy. Given the time constraint, that segment was compressed into less than a minute, but I thought it might be valuable enough to put in a blog post.

Photo courtesy of mypizzachoice.com

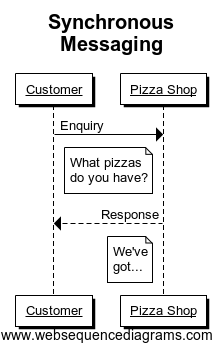

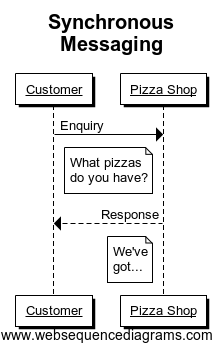

1) Synchronous Messaging

So before you can order a pizza, you need to know a couple of things. First of all, whether the pizza shop is open, and then of course what pizzas they have on offer. You really can’t do anything else without this knowledge, and those facts should be readily available – either by browsing a website, or by picking up the phone and dialling the shop. Essentially you make a request for information and that information is delivered to you straight away.

That’s what we expect with synchronous messaging – a request and response within the same channel, session and connection. And we shouldn’t have time to go get a coffee before the answer comes back. From an application perspective, this is very simple to implement, as the service provider doesn’t have to initiate or establish a connection to the client; the client does all of that and the service simply responds. However, care must be taken to ensure the response is swift, lest you risk incurring a timeout exception. A timeout creates ambiguity for the client, who doesn’t really know whether the request was processed or not (especially troublesome if it was a transactional command that requires idempotency).
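To make the timeout ambiguity concrete, here is a minimal simulated sketch in Python (no real network; `latency` is just a number). `PizzaShop` and `call_sync` are hypothetical names. The service records the order before replying, so a slow reply does not mean the work was skipped; keying orders by a client-supplied `request_id` is one common way to make a retry safe (idempotent).

```python
from typing import Dict, Tuple


class PizzaShop:
    """Simulated synchronous service; latency is simulated, nothing actually waits."""

    def __init__(self, latency: float) -> None:
        self.latency = latency
        self.orders: Dict[str, str] = {}  # request_id -> pizza

    def handle(self, request_id: str, pizza: str) -> Tuple[float, str]:
        # The order is recorded before the reply goes out.
        # setdefault makes a repeated request_id a no-op (idempotent).
        self.orders.setdefault(request_id, pizza)
        return self.latency, f"order {request_id} accepted"


def call_sync(shop: PizzaShop, request_id: str, pizza: str, timeout: float) -> str:
    """Request and response on the same call; give up if the reply is too slow."""
    latency, response = shop.handle(request_id, pizza)
    if latency > timeout:
        # The work may well have been done, but the client cannot tell.
        raise TimeoutError("no response within timeout; outcome unknown")
    return response
```

If the first call times out, the client can retry with the same `request_id`; the shop still ends up with exactly one order rather than two pizzas.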

2) Asynchronous Messaging

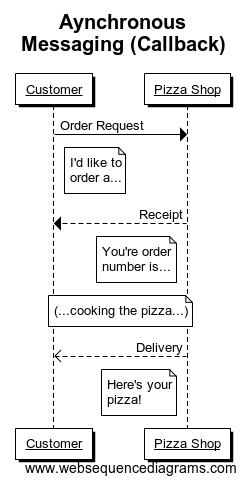

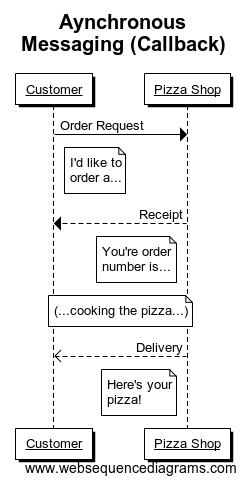

So now you know the store is open and what pizzas to choose from. Great. So you settle on that wonderful ham & pineapple pizza on a traditional crust with a garnish of basil, and you place your order. Now if the shopkeeper hands you a pizza straight away, you’re probably not terribly inclined to accept it. Clearly you expect your pizza to be cooked fresh to order, not just pulled ready-made off a shelf. More likely you’ll be given an order number or a ticket and told your pizza will be ready in 20 minutes or so.

Now comes the interesting part – the delivery. Typically you will have two choices. You can either ask them to deliver the pizza to you in your home. This frees you up to do other things while you wait, and you don’t have to worry about chasing after your purchase. They bring it to you. But there’s a slight catch: you have to give them a valid address.

In the asynchronous messaging world, we would call this address a “callback” endpoint. In this scenario, the service provider has the burden of delivering the response to the client when it’s ready. This also means catering for a scenario where the callback endpoint is invalid or unavailable. Handling this could be as blunt as dropping the response and forgetting about it, or as robust as storing it and sending an out-of-band message through some alternate route to the client to come pick it up. Either way, in most cases this is an easier solution for the client than for the service provider.
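A minimal Python sketch of the service side of the callback pattern, assuming the callback is modelled as a plain function and an unreachable endpoint surfaces as `ConnectionError`. `DeliveringKitchen` is a hypothetical name. It takes the "robust" option described above: undeliverable responses are stored for later pickup rather than dropped.

```python
from typing import Callable, Dict, Optional

Callback = Callable[[str, str], None]  # (order_id, pizza) -> None


class DeliveringKitchen:
    """Service side of the callback pattern: it must deliver the response itself."""

    def __init__(self) -> None:
        # Responses that could not be delivered, kept for out-of-band pickup
        self.undeliverable: Dict[str, str] = {}

    def complete(self, order_id: str, pizza: str, callback: Optional[Callback]) -> bool:
        """Push the finished order to the client's callback; return True if delivered."""
        if callback is not None:
            try:
                callback(order_id, pizza)
                return True
            except ConnectionError:
                pass  # callback endpoint invalid or unavailable
        # Robust option: store the response instead of forgetting about it
        self.undeliverable[order_id] = pizza
        return False
```

The client's only job is to supply a working callback; all of the failure handling sits with the service, which is why this pattern is usually easier for the client than for the provider.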

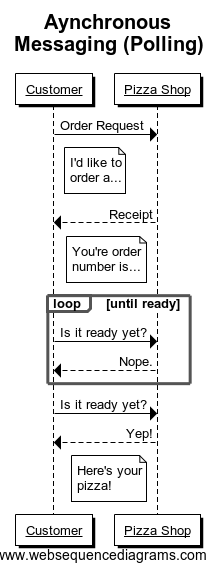

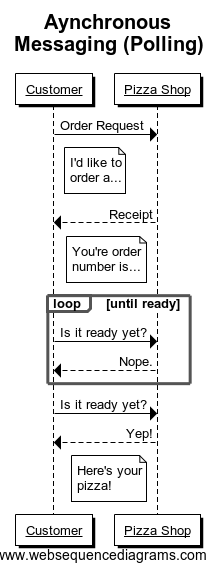

But what if you don’t want to give out your address? In this case, you might say to the pizza maker, “I’ll come in and pick it up.” So they say fine, it’ll be ready in 20 minutes. Only you get there in 10 minutes and ask if it’s ready; they say not yet. You wait a few more minutes and ask again, and get the same response. Eventually it is ready and they hand you your nice fresh piping hot pizza.

This is an example of a polling pattern. The only burden on the service provider is to produce the response and then store it somewhere temporarily. The client has the job of continually asking if it is ready, and needs to cater for a series of negative responses before finally retrieving the result it is after. You might see this as a less favourable approach for the client – but sometimes this is driven by constraints on the client side, such as difficulties opening up a firewall rule to allow incoming traffic.
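The polling pattern can be sketched in Python as follows. Cooking time is simulated by counting polls, and `PickupKitchen` and `wait_for_pizza` are hypothetical names. The service only holds the result; the client loops, tolerating "not yet" answers, and gives up after a bounded number of attempts.

```python
import itertools
import time
from typing import Dict, Optional, Tuple


class PickupKitchen:
    """Service side of polling: produce the result and hold it until collected."""

    def __init__(self, polls_until_ready: int) -> None:
        self.polls_until_ready = polls_until_ready  # simulated cooking time
        self._tickets = itertools.count(1)
        self._orders: Dict[int, str] = {}  # ticket -> pizza
        self._polls: Dict[int, int] = {}   # ticket -> times asked so far

    def place_order(self, pizza: str) -> int:
        ticket = next(self._tickets)
        self._orders[ticket] = pizza
        self._polls[ticket] = 0
        return ticket

    def poll(self, ticket: int) -> Optional[str]:
        """Return the pizza once ready, otherwise None ("not yet").

        Raises KeyError for an unknown or already-collected ticket.
        """
        self._polls[ticket] += 1
        if self._polls[ticket] < self.polls_until_ready:
            return None
        del self._polls[ticket]
        return self._orders.pop(ticket)


def wait_for_pizza(kitchen: PickupKitchen, ticket: int,
                   interval: float = 1.0, max_attempts: int = 10) -> Tuple[str, int]:
    """Client side: keep asking until ready; returns (pizza, attempts used)."""
    for attempt in range(1, max_attempts + 1):
        pizza = kitchen.poll(ticket)
        if pizza is not None:
            return pizza, attempt
        if attempt < max_attempts:
            time.sleep(interval)
    raise TimeoutError(f"ticket {ticket} not ready after {max_attempts} polls")
```

The `interval` and `max_attempts` values are the client's trade-off: polling too often wastes requests, polling too rarely delays pickup.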

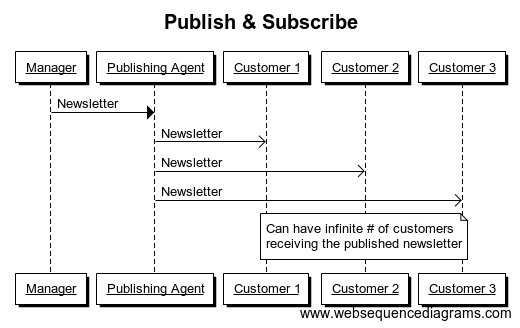

3) Publish & Subscribe

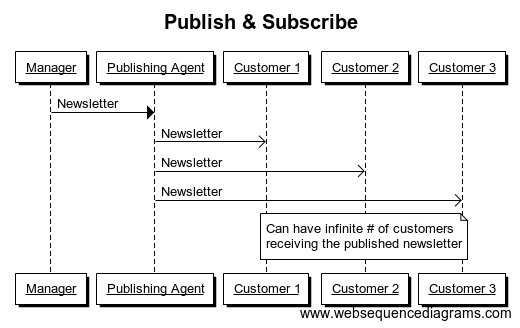

Now let’s say that the pizza was so good (even if a little on the pricey side) that you decide to compliment the manager. He asks if you’d like to be notified when there are special discounts or when new pizzas are introduced. You say “Sure!” and sign up on his mailing list.

Now the beauty of this arrangement is that it costs the manager no extra effort to have you join his mailing list. He still produces the same newsletter and publishes it through his mailing agent. The number of subscribers on the list can grow or shrink, it makes no difference. And the manager doesn’t even have to be aware of who is on that list or how many (although he/she may care if the list becomes very very short!) You as the subscriber have the flexibility to opt in or opt out.

It is precisely this flexibility and scalability that makes this pattern so attractive in the messaging world. An application can easily be extended by creating new subscribers to a message, and this is unlikely to have an impact on the existing processes that consume the same message. It is also the most decoupled solution, as the publisher and subscriber need not know anything about each other in terms of protocols, language, endpoints, etc. (except of course for the publishing endpoint, which is typically distinct and isolated from both the publisher and consumer systems). This is the whole concept behind a message bus, and is the fundamental principle behind many integration and eventing platforms such as BizTalk Server and Azure Event Grid.
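A minimal in-memory publish/subscribe sketch in Python, standing in for a real message bus. `Topic` is a hypothetical name. Note that `publish` never references any particular subscriber: adding or removing subscribers changes nothing on the publisher's side.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class Topic:
    """Minimal in-memory publish/subscribe broker (a stand-in for a message bus)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)  # opt in

    def unsubscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].remove(handler)  # opt out

    def publish(self, topic: str, message: dict) -> None:
        # The publisher neither knows nor cares who (or how many) are listening.
        for handler in list(self._subscribers.get(topic, [])):
            handler(message)
```

Publishing the newsletter costs the same whether the list has one subscriber or a thousand; a real broker adds durability, retries and filtering on top of this basic shape.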

The challenge comes when the publisher needs to make a change, as it can be difficult sometimes to determine the impact on the subscribers, particularly when the details of those subscribers are sketchy or unknown. Most platforms come with tooling that helps with this, but if you’re designing complex applications where many different services are glued together using publish / subscribe, you will need some very good documentation and some maintenance skills to look after it.

So I hope this analogy is useful – not just for explaining to your grandmothers, but to anyone who needs to grasp the concept of messaging patterns. And now… I think I’m going to go order a pizza.

by Dan Toomey | Jun 21, 2018 | BizTalk Community Blogs via Syndication

Photo by Tariq Sheikh

Two weeks ago I had not only the privilege to attend the sixth INTEGRATE event in London, but also the great honour of speaking for the second time. These events always provide a wealth of information and insight as well as opportunities to meet face-to-face with the greatest minds in the enterprise integration space. This year was no exception, with at least 24 sessions featuring as many speakers from both the Microsoft and the MVP community.

As usual, the first half of the 3-day conference was devoted to the Microsoft product team, with presentations from Jon Fancey (who also gave the keynote), Kevin Lam, Derek Li, Jeff Hollan, Paul Larsen, Valerie Robb, Vladimir Vinogradsky, Miao Jiang, Clemens Vasters, Dan Rosanova, Divya Swarnkar, Kent Weare, Amit Kumar Dua, and Matt Farmer. For me, the highlights of these sessions were:

- Jon Fancey’s keynote address. Jon talked about the inevitability of change, underpinning this with a collection of images showing how much technology has progressed in the last 30-40 years alone. Innovation often causes disruption, but this isn’t always a bad thing; the phases of denial and questioning eventually lead to enlightenment. Jon included several notable quotes including one by the historian Lewis Mumford (“Continuities inevitably represent inertia, the dead past; and only mutations are likely to prove durable.”) and a final one by Microsoft CEO Satya Nadella (“What are you going to change to create the future?”).

- Kevin Lam and Derek Li taking us through the basics of Logic Apps. It’s easy to become an “Integration Hero” using Logic Apps to solve integration problems quickly. Derek built two impressive demos from scratch during this session, including one that performed OCR on an image of an order and then conditionally sent an approval email based on the amount of the order.

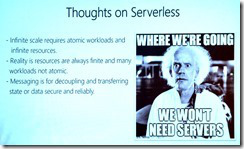

- Jeff Hollan and his explanation of Azure Durable Functions. An extension of Azure Functions, Durable Functions enable long-running, stateful, serverless operations which can execute complex orchestrations. Built on the Durable Task framework, they manage state, checkpoints and restarts. Jeff also gave some practical advice about scalable patterns for sharing code resources across multiple instances.

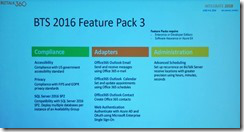

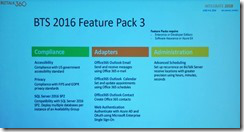

- Paul Larsen and Valerie Robb with a preview of Feature Pack 3 for BizTalk Server 2016. This upcoming feature pack will include GDPR support, SQL Server 2016 SP2 support (including the long-awaited ability to deploy multiple databases per instance of an Availability Group), new Office365 adapters, and advanced scheduling support for receive locations.

- Miao Jiang and his preview of end-to-end tracing via Application Insights in API Management. This includes the ability to map an application, view metrics, detect issues, diagnose errors, and much more.

- Clemens Vasters. (Need I say more?) The genius architect behind Azure Service Bus gave a clear, in-depth discussion about the difference between messaging (which is about intents) and eventing (which is about facts). He also introduced Event Grid’s support for CNCF CloudEvents, an open standard for events.

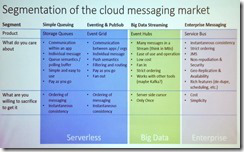

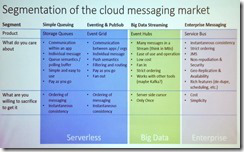

- Dan Rosanova and his clear explanation of the different cloud messaging options available in Azure. His slide showing the segmentation of services into Serverless, Big Data and Enterprise provided a lot of clarity as to when to use what. He also covered Event Grid, Event Hubs, and Event Hubs’ support for Kafka.

- Jon Fancey & Divya Swarnkar talking about Logic Apps for enterprise integration. It was especially nice to see support for XSLT 3.0 and Liquid for mapping, as well as the new SAP connector. There was also an enthusiastic response from the audience when it was revealed that there were plans to introduce a consumption-based model for Integration Accounts!

- Kent Weare talking about Microsoft Flow and how it is the automation tool for Office365. Together with PowerApps, Power BI, and Common Data Service, you can build a Business Application Platform (BAP) that supports powerful enterprise-grade applications. Best of all, Flow makes it possible for citizen integrators to automate non-critical processes without the need for a professional development team. A notable quote in Kent’s presentation was “Transformation does not occur while waiting in line!”

- Divya Swarnkar and Amit Kumar Dua talking about how Microsoft IT use Logic Apps for Enterprise Integration. It is always interesting to see how Microsoft “dog foods” its own technology to support in-house processes. In this case they built a Trading Partner Migration tool to lift more than 1000 profiles from BizTalk into Logic Apps. Highlights from the lessons learnt were understanding the published limits of Logic Apps and also that there is no “exactly once” delivery option.

- Vladimir Vinogradsky with a deep-dive into API Management. Covering the topics of developer authentication, data plane security, and deployment automation, Vladimir gave some great tips including how to use Azure AD and Azure AD B2C for different scenarios, identifying the difference between key vs. JWT vs. client certificate for authorisation (as well as when to use which), and the use of granular service and API templates to improve the devops story. It was also fun watching him launch his cool demos using Visual Studio Code and httpbin.org.

- Kevin Lam and Matt Farmer’s deep-dive into Logic Apps. This session drew enthusiastic applause from the audience with Kevin’s announcement of the upcoming preview of Integration Service Environments. This is a true game-changer, bringing dedicated compute, isolated VNET connectivity, custom inbound domain names, static outbound IPs, and flat cost to Logic Apps. There was also a demonstration by Matt of building a custom connector, and how an ISV can turn this into a “real” connector provided it is for a SaaS service they own.

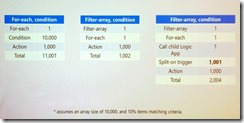

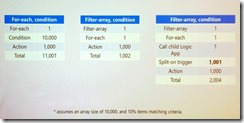

- Derek Li and Kevin Lam with Logic App patterns and best practices. This session offered some great performance-improving and cost-saving advice on limiting the number of actions with clever loop processing logic (hint: check out the Filter Array action as per this blog post). There was also a tip on how to easily distinguish built-ins, connectors and enterprise connectors: you can filter for these when choosing a connector in the dialog.

- Jon Fancey and Matt Farmer talking about the Microsoft integration roadmap. Key messages here were Jon confirming that Logic Apps will be coming to Azure Stack, and that Integration Service Environments (ISE) will also eventually come to on-premises. Matt also talked about Azure Integration Services, which on the surface appears to be a new marketing name for the collection of existing services (API Management, Logic Apps, Service Bus and Event Grid). However, it promises to include reference architectures, templates and other assets to provide better guidance on using these services.

This concluded the Microsoft presentations on the agenda. After a brief introduction to the BizTalk360 Partnership Program by Business Development Manager Duncan Barker, the MVP sessions began, featuring speakers Saravana Kumar, Steef-Jan Wiggers, Sandro Pereira, Stephen W. Thomas, Richard Seroter, Michael Stephenson, Johan Hedberg, Wagner Silveira, Dan Toomey, Toon Vanhoutte and Mattias Lögdberg. Highlights for me included:

- Saravana Kumar giving a BizTalk360 product update. Saravana revealed that ServiceBus360 is being renamed to Serverless360, which supports not only Service Bus queues, topics and relays, but also Event Hubs, Logic Apps and API Management. And there is an option to either let BizTalk360 host it or host on-prem in your own datacentre.

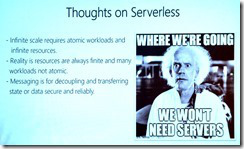

- Steef-Jan Wiggers talking about Serverless Messaging in Azure. This was a great roundup of all the messaging capabilities in Azure including Service Bus, Storage Queues, Event Hubs and Event Grid. Steef-Jan explained the difference between each very clearly before launching into some very compelling demos.

- Saravana Kumar giving an Atomic Scope update. A product that provides end-to-end tracking for hybrid solutions featuring BizTalk Server, Logic Apps, Azure Functions, and App Services, Atomic Scope can reduce the time spent building this capability from 20-30% right down to 5%. Saravana affirmed that it is designed for business users, not technical users. Included in this session was a real-life testimonial by Bart Scheurweghs from Integration.Team where Atomic Scope solved their tracking issues across a hybrid application built for Van Moer Logistics.

- Sandro Pereira with his BizTalk Server lessons from the road. Sandro’s colourful presentations are always full of good advice, and this was no exception. Whilst talking about a variety of best practices from a security perspective, Sandro reminded us that the BizTalk Server platform is inherently GDPR compliant – but not necessarily the applications we build on it! He also talked about the benefits of feature packs (which may contain breaking changes) and cumulative updates (which shouldn’t break code), being wary of JSON schema element names with spaces in them, REST support in BizTalk (and how it doesn’t handle optional parameters OOTB), and cautioned against some popular patterns like singletons and sequential convoys, which are notorious for introducing performance issues and zombies.

- Stephen W. Thomas and using BizTalk Server as your foundation to the cloud. Stephen talked about a bunch of scenarios that justify migration to or use of Logic Apps as opposed to BizTalk Server, including use of connectors not in BizTalk, reducing the load on on-prem infrastructure, saving costs, improved batching capability, and planning for the future. He also identified a number of typical “blockers” to cloud migration and discussed various strategies to address them.

- Richard Seroter on architecting highly available cloud solutions. One of the highlights of this event for me was Richard’s extremely useful advice on ensuring your apps are architected correctly for high-availability. Taking us through a number of Azure services from storage to databases to Service Bus to Logic Apps and more, Richard pointed out what is provided OOTB by Azure and what you as the architect need to cater for. His summary pointers included 1) only integrate with highly available endpoints, 2) clearly understand what services failover together, and 3) regularly perform chaos testing.

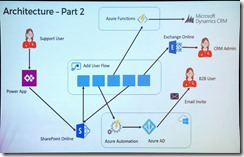

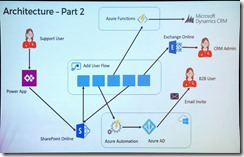

- Michael Stephenson and using Microsoft Flow to empower DevOps. It was great to see Michael return to the stage this year, and his Minecraft-hosted demo of an automated process for onboarding users was a real crowd-pleaser. Ad hoc tasks such as these can waste time and money, but automating the process with Flow not only mitigates this but is also relatively easy to achieve if the process can be broken down into well-understood tasks.

- Unfortunately I had to miss Johan Hedberg’s session on VSTS and BizTalk Server, but by all accounts it was a demo-heavy presentation that provided lots of useful tips on establishing a CI/CD process with BizTalk Server. I look forward to watching the video when it’s out.

- Wagner Silveira on exposing BizTalk Server to the world. This session continued the hybrid application theme by discussing the various ways of exposing HTTP endpoints from BizTalk Server for consumption by external clients. Options included Azure Relay, Logic Apps, Function Proxies and API Management – each with their own strengths and limitations. Wagner performed multiple demos before summarising the need to identify your choices and needs, and “find the balance”.

- Dan Toomey and the anatomy of an enterprise integration architecture. Well… I didn’t take any notes on this one, seeing as I was onstage speaking! But you can download the slides to get an overview of how Microsoft integration technologies can be leveraged to reduce friction across layers of applications that move at different speeds.

- Toon Vanhoutte’s presentation on using webhooks with BizTalk Server. In his first time appearance at INTEGRATE, Toon gave a very compelling talk about the efficiency of webhooks over a polling architecture. He then walked through the three responsibilities of the publisher (reliability, security, and endpoint validation) as well as the five responsibilities of the consumer (high availability, scalability, reliability, security, and sequencing) and performed multiple demos to illustrate these.

Wrapping up the presentations was Mattias Lögdberg on refining BizTalk Server implementations. Also a first-time speaker at INTEGRATE, Mattias wowed us by doing his entire presentation as a live drawing on his tablet! He used this medium to walk us through a real-life project where a webshop was migrated to Azure whilst retaining connectivity to the on-prem ERP system. A transition to a microservices-based approach and addition of Azure services enhanced the capabilities of BizTalk which still handles the on-prem integration needs.

The conference finished with a Q&A session with the principal PMs from the product group, including Jon Fancey, Paul Larsen, Kevin Lam, Vladimir Vinogradsky, Kent Weare, and Dan Rosanova as panellists. The inevitable question of BizTalk vNext came up, and there was some obvious frustration from the audience as the panellists were unable to provide a clear and definitive statement about whether BizTalk Server 2016 would be the final release or not. Considering that we are halfway through the main support period for this product, the concern is very understandable as we need to know what message to convey to our clients. Reading between the lines, many have concluded for themselves that there will be no vNext and that migration to the cloud is now becoming a critical urgency. Posts such as this one from Michael Stephenson help to put things in context and show that compared to competing products we’re not as bad off as some alarmists may suggest, but it was still disappointing to end the conference with such a vague view of the horizon. I hope that Microsoft sends out a clear message soon about what level of support can be expected for the significant investment that many of our clients have made in BizTalk recently.

One especially cool thing that the organisers did was to hire Visual Scribing to come and draw a mural of all the presentations, capturing the key messages throughout the conference:

Day 1

Day 2 & 3

For more in-depth coverage of the sessions and announcements from INTEGRATE 2018, I encourage you to check out the following:

Also, keep an eye on the INTEGRATE 2018 website, as eventually the video and the slide decks will be uploaded there.

I really want to thank Saravana and his whole team at BizTalk360 for organising such a mammoth event where everything ran so smoothly. I also want to thank Microsoft and my MVP colleagues for their contributions to the event, as well as my generous employer Mexia for sending me to London to enjoy this experience!

by Dan Toomey | Mar 30, 2018 | BizTalk Community Blogs via Syndication

Last Saturday I had the great privilege of organising and hosting the 2nd annual Global Integration Bootcamp in Brisbane. This was a free event hosted by 15 communities around the globe, including four in Australia and one in New Zealand!

It’s a lot of work to put on these events, but it’s worth it when you see a whole bunch of dedicated professionals give up part of their weekend because they are enthusiastic to learn about Microsoft’s awesome integration capabilities.

The day’s agenda concentrated on Integration Platform as a Service (iPaaS) offerings in Microsoft Azure. It was a packed schedule with both presentations and hands-on labs:

It wasn’t all work… we had some delicious morning tea, lunch and afternoon tea catered by Artisan’s Café & Catering, and there was a bit of swag to give away as well thanks to Microsoft and also Mexia (who generously sponsored the event).

Overall, feedback was good and most attendees were appreciative of what they learned. The slide decks for most of the presentations are available online and linked above, and the labs are available here if you would like to have a go.

I’d like to thank my colleagues Susie, Lee and Adam for stepping up into the speaker slots and giving me a couple of much needed breaks! I’d also like to thank Joern Staby for helping out with the lab proctoring and also writing an excellent post-event article.

Finally, I would be remiss in not mentioning the global sponsors who were responsible for getting this worldwide event off the ground and providing the lab materials:

- Martin Abbott

- Glenn Colpaert

- Steef-Jan Wiggers

- Tomasso Groenendijk

- Eldert Grootenboer

- Sven Van den brande

- Gijs in ‘t Veld

- Rob Fox

Really looking forward to next year’s event!

by Dan Toomey | Jan 29, 2018 | BizTalk Community Blogs via Syndication

For years now Integration Monday has been faithfully giving us webinars almost every week. There have been some outstanding sessions from international leaders in the integration space including MVPs, members of the Microsoft product team, and other community members. For the Asia Pacific community, however, it has always been a challenge to participate in the live sessions due to the unfriendly time zone. (I certainly know what a struggle it was to present my own session last October at 4:30am!) Even from the listener’s perspective, it is usually nicer to be able to join a live webinar and ask questions rather than to consume the recordings afterwards.

Thanks to the initiative of veteran MVP Bill Chesnut (aka “BizTalk Bill”) and the sponsorship of his employer SixPivot, we now have a brand new webinar series starting up in a friendlier time slot for our APAC community! Integration Down Under is launching its inaugural webinar session on Thursday, 8th February at 7:00pm AEST. You can register for this free event here.

This initial session will introduce the leaders and allow each of us to present a very short talk on a chosen topic:

There are already more than twenty registrations even though the link has been live for only a few days. I hope that this is a good sign of the interest within the community!

Feeling really fortunate to be part of this initiative, and looking forward to delivering my intro to Event Grid talk! It will be a slightly scaled down version of what I presented at the Sydney Tech Summit back in November. Hope to see you there!

by Dan Toomey | Sep 3, 2017 | BizTalk Community Blogs via Syndication

(This post was originally published on Mexia’s blog on 1st September 2017)

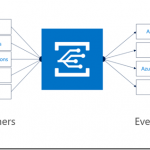

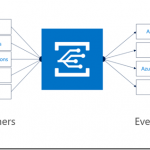

Microsoft recently released the public preview of Azure Event Grid – a hyper-scalable serverless platform for routing events with intelligent filtering. No more polling for events – Event Grid is a reactive programming platform for pushing events out to interested subscribers. This is an extremely significant innovation, for as veteran MVP Steef-Jan Wiggers points out in his blog post, it completes the existing serverless messaging capability in Azure:

- Azure Functions – Serverless compute

- Logic Apps – Serverless connectivity and workflows

- Service Bus – Serverless messaging

- Event Grid – Serverless Events

And as Tord Glad Nordahl says in his post From chaos to control in Azure, “With dynamic scale and consistent performance Azure Event grid lets you focus on your app logic rather than the infrastructure around it.”

The preview version not only comes with several supported publishers and subscribers out of the box, but also supports custom publishers and (via WebHooks) custom subscribers:

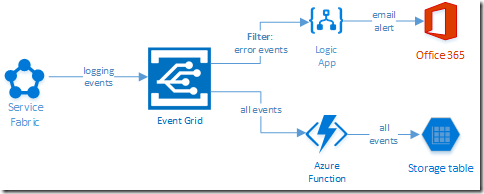

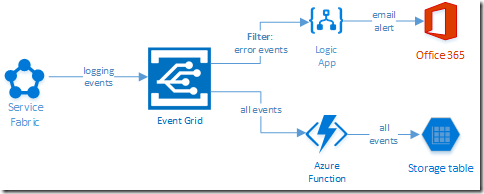

In this blog post, I’ll describe the experience in building a sample logging mechanism for a service hosted in Azure Service Fabric. The solution not only logs all events to table storage, but also sends alert emails for any error events:

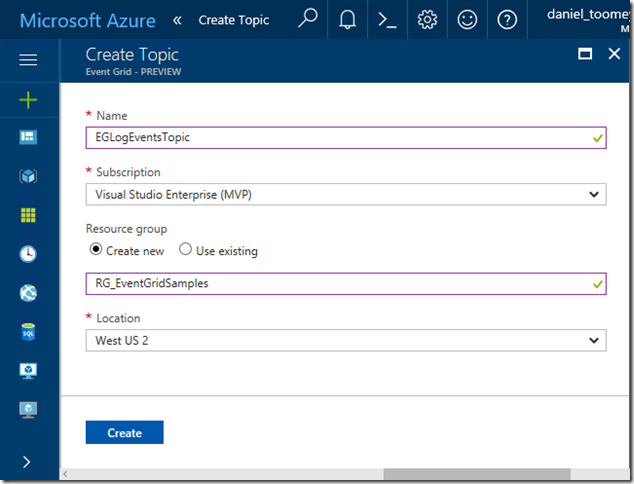

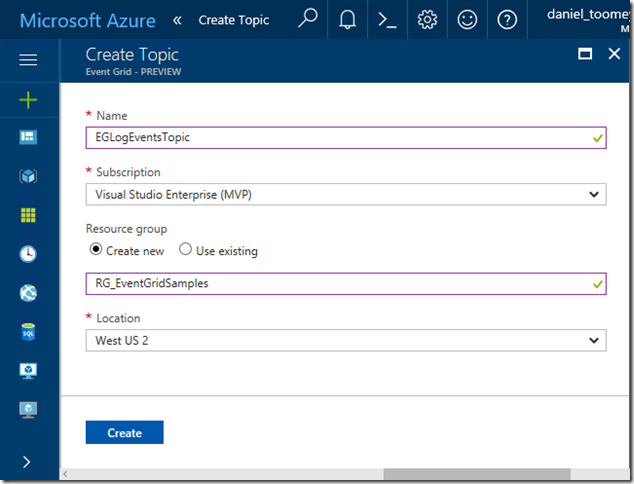

Creating the Event Grid Topic

This was an extremely simple process executed in the Azure Portal. Create a new item by searching for “Event Grid Topic”, and then supply the requested basic information:

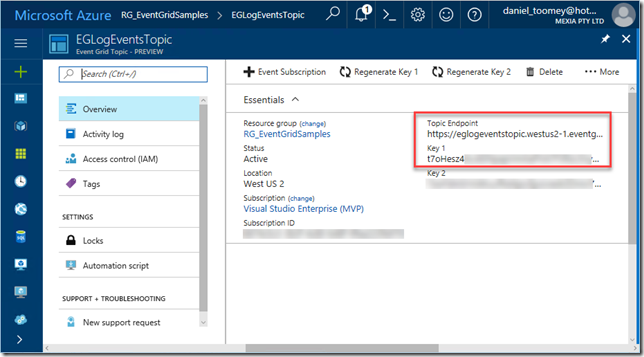

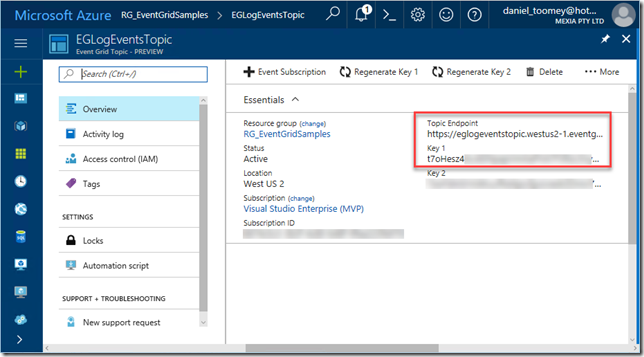

Once the topic is created, the key items you will need are the Topic Endpoint and its associated access key.
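For orientation, a custom publisher sends events by POSTing a JSON array to the Topic Endpoint with the access key in the `aeg-sas-key` request header. The Python sketch below only builds that request and does not send it. The endpoint and key are placeholders, and the event fields follow the Event Grid event schema as currently documented, which may differ slightly from what the 2017 preview accepted.

```python
import json
import urllib.request


def build_publish_request(topic_endpoint: str, topic_key: str,
                          events: list) -> urllib.request.Request:
    """Build (but do not send) a POST that publishes a batch of events.

    Event Grid custom topics accept a JSON array of events; the topic
    access key is passed in the "aeg-sas-key" request header.
    """
    body = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        url=topic_endpoint,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "aeg-sas-key": topic_key},
    )


# Placeholder values: substitute the Topic Endpoint and key from the portal.
request = build_publish_request(
    "https://my-topic.westus2-1.eventgrid.azure.net/api/events",
    "<topic-key>",
    [{
        "id": "1",
        "eventType": "Custom.LogEvent",   # hypothetical event type
        "subject": "VotingApp-INFO",      # hypothetical subject
        "eventTime": "2017-09-01T00:00:00Z",
        "data": {"message": "Vote cast"},
        "dataVersion": "1.0",
    }],
)
# urllib.request.urlopen(request) would actually send it.
```

Because the key travels in a header, anything that can make an HTTPS call can act as a publisher, which is what allows a Service Fabric service to publish directly.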

Creating the Event Publisher

As mentioned previously, there are a number of existing Azure services that can publish events to Event Grid including Event Hubs, resource groups, subscriptions, etc. – and there will be more coming as the service moves toward general availability. However, in this case we create a custom publisher which is a service hosted in Azure Service Fabric. For this sample, I used an existing Voting App demo which I’ve written about in a previous blog post, modifying it slightly by adding code to publish logging events to Event Grid.

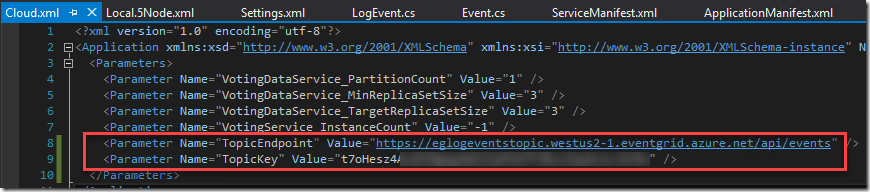

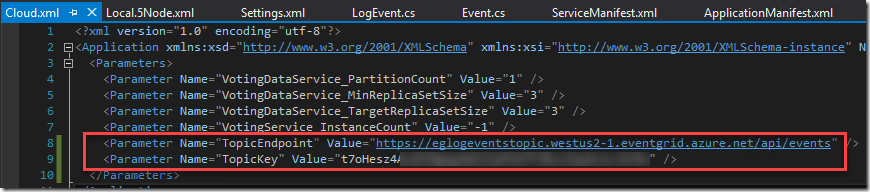

The first requirement was storing the topic endpoint and key in the parameter files and, of course, creating the associated configuration items in the ServiceManifest.xml and ApplicationManifest.xml files (this article provides information about application configuration in Service Fabric).

Note that in a production situation the TopicKey should be encrypted within the parameter file – but for the purposes of this example we will keep it simple.

The next step was to create a small class library in the solution to house the following items:

- The Event class which represents the Event Grid events schema

- A LogEvent class which represents the “Data” element in the Event schema

- A utility class which includes the static SendLogEvent method

- A LogEventType enum to define logging severity levels (ERROR|WARNING|INFO|VERBOSE)

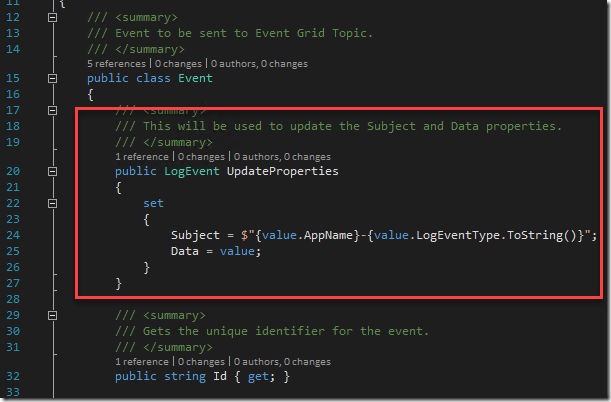

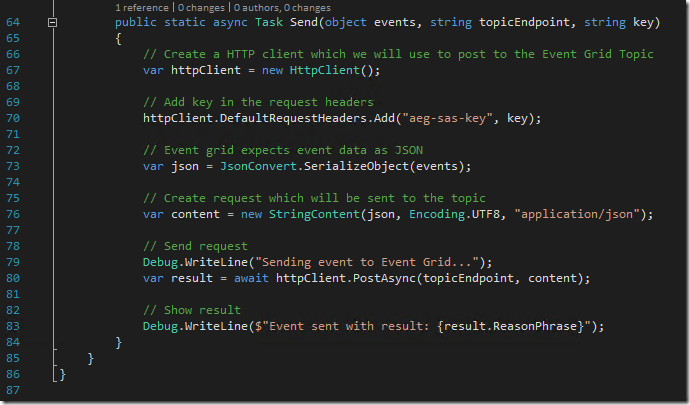

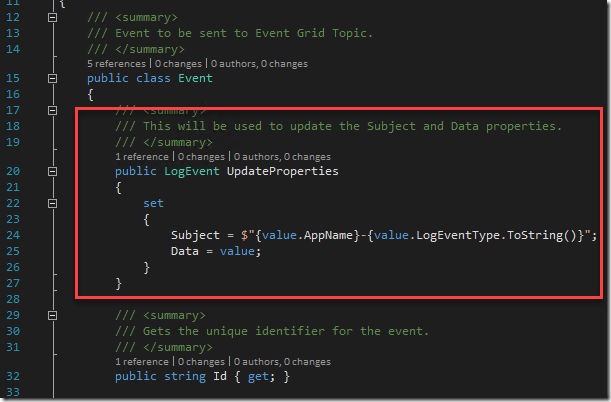

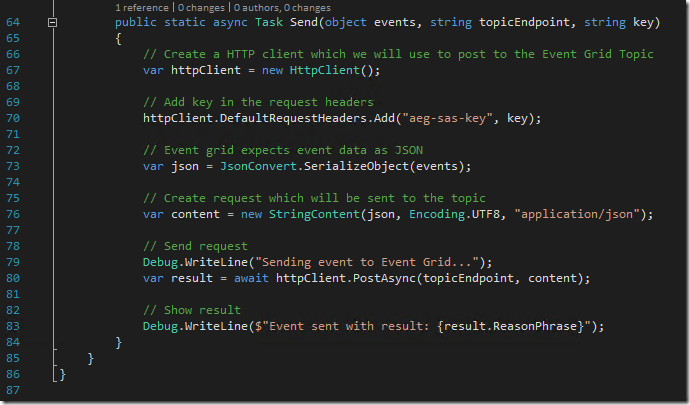

To see an example of how to create the Event class, refer to fellow Azure MVP Eldert Grootenboer’s excellent post. The only changes I made were to assign the properties for my custom LogEvent, and to add a static method for sending a collection of Event objects to Event Grid (notice how the Event.Subject field is a concatenation of the Application Name and the LogEventType – this will be important later on):

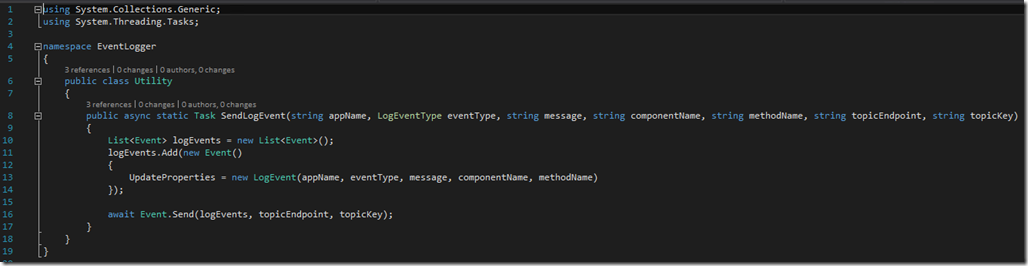

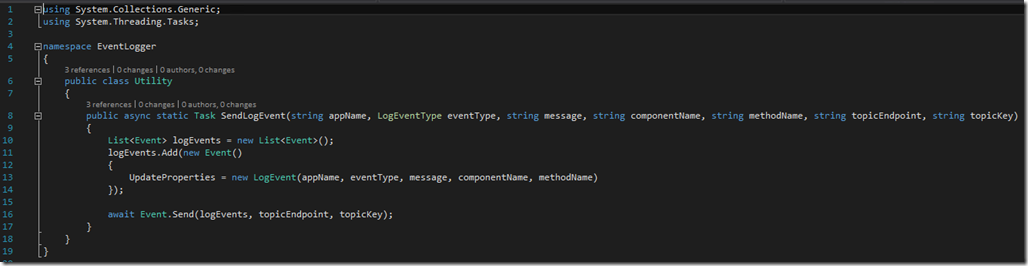

The utility method that creates the collection and invokes this static method is pretty straightforward:

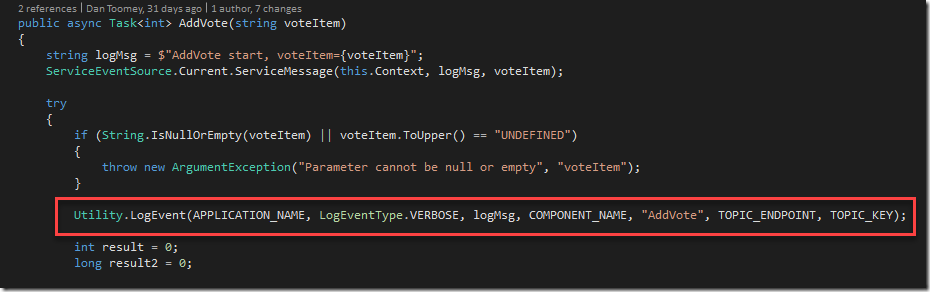

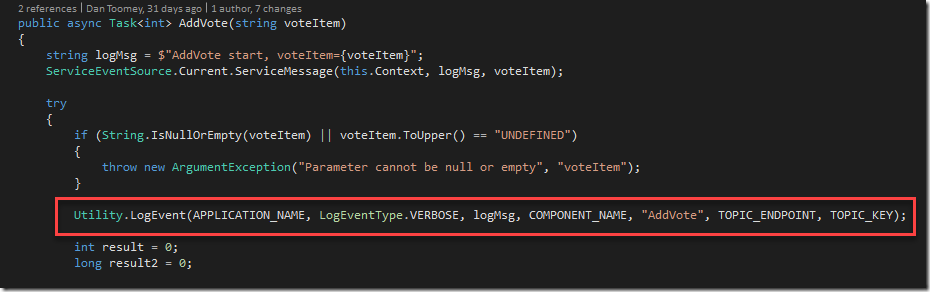
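Since the classes above appear only as screenshots, here is a rough Python sketch of the same shape. What it mirrors from the post: the four-level severity enum, a LogEvent carried in the event's data element, a subject built by concatenating the application name and the severity, and a utility that wraps one log entry in a collection. The `-` separator, the `Custom.LogEvent` event type, the payload field names and the `build_log_batch` helper are all hypothetical.

```python
import json
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class LogEventType(Enum):
    """Severity levels, mirroring the post's ERROR|WARNING|INFO|VERBOSE enum."""
    ERROR = "ERROR"
    WARNING = "WARNING"
    INFO = "INFO"
    VERBOSE = "VERBOSE"


@dataclass
class LogEvent:
    """Payload carried in the event's "data" element (field names hypothetical)."""
    application_name: str
    level: LogEventType
    message: str


def to_grid_event(log: LogEvent) -> dict:
    """Wrap a LogEvent in an Event Grid envelope.

    The subject concatenates application name and severity (the "-" separator
    is an assumption), so subscribers can filter on a suffix such as "ERROR".
    """
    return {
        "id": str(uuid.uuid4()),
        "eventType": "Custom.LogEvent",  # hypothetical event type
        "subject": f"{log.application_name}-{log.level.value}",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": {
            "applicationName": log.application_name,
            "level": log.level.value,
            "message": log.message,
        },
        "dataVersion": "1.0",
    }


def build_log_batch(app_name: str, level: LogEventType, message: str) -> str:
    """Hypothetical stand-in for the SendLogEvent utility: build a one-element
    collection and serialize it as the JSON array Event Grid expects.
    Posting the string to the topic endpoint is left out of this sketch."""
    events = [to_grid_event(LogEvent(app_name, level, message))]
    return json.dumps(events)
```

A call such as `build_log_batch("VotingApp", LogEventType.ERROR, "Empty candidate")` yields a batch whose subject ends in `ERROR`; the real utility additionally posts the batch to the topic.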

This all makes it simple to embed logging calls in the application code.

Creating the Event Subscribers

Capturing All Events

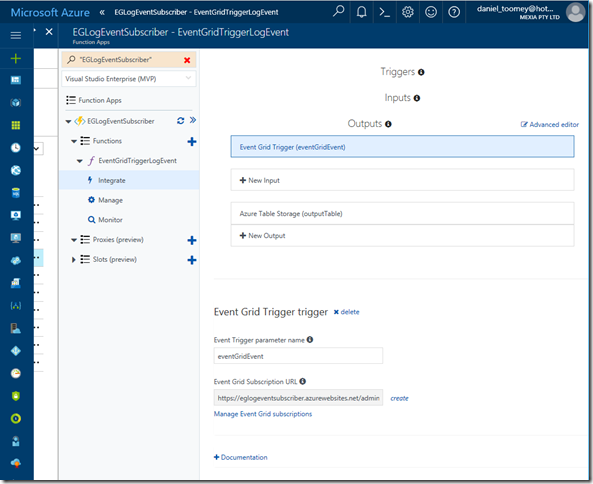

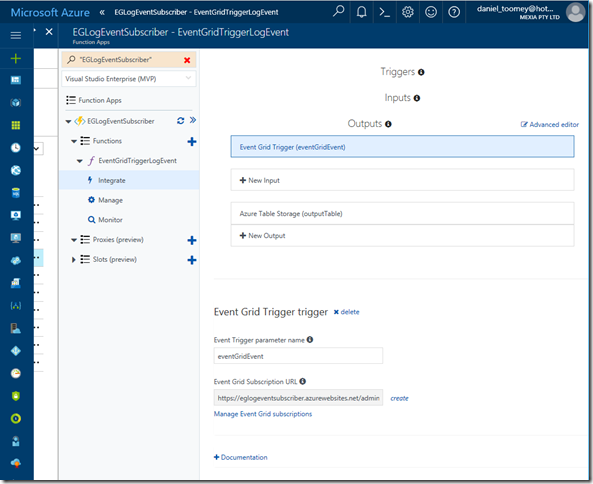

The first topic subscription will be an Azure Function that will write all events to Azure table storage. Provided you’ve created your Function App in a region that supports the Event Grid preview (I’ve just created everything aside from the Service Fabric solution within the same resource group and location), you will see that there is already an Event Grid Trigger available to choose. Here is my configured trigger:

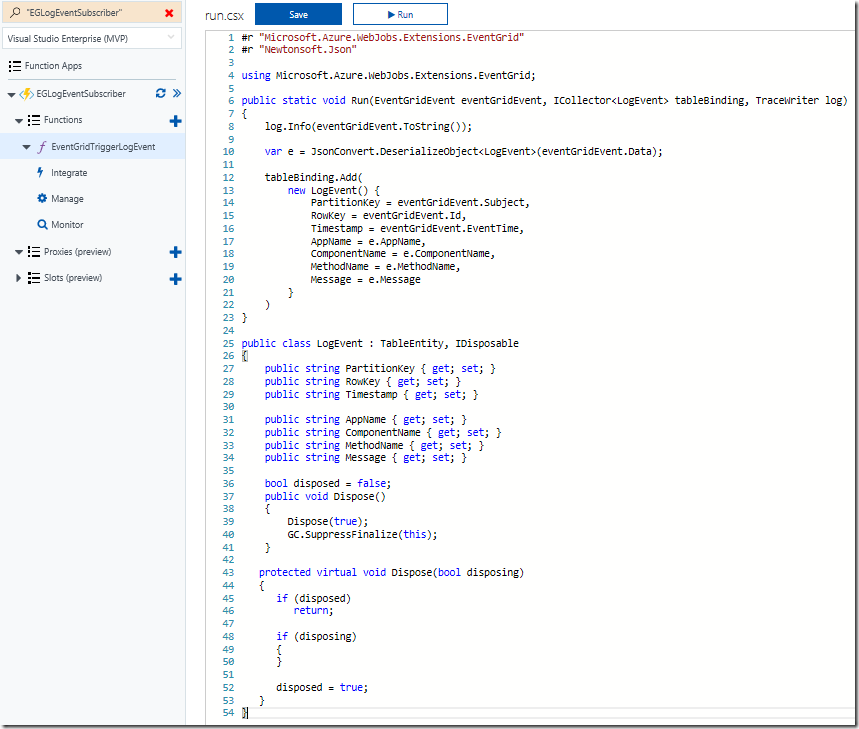

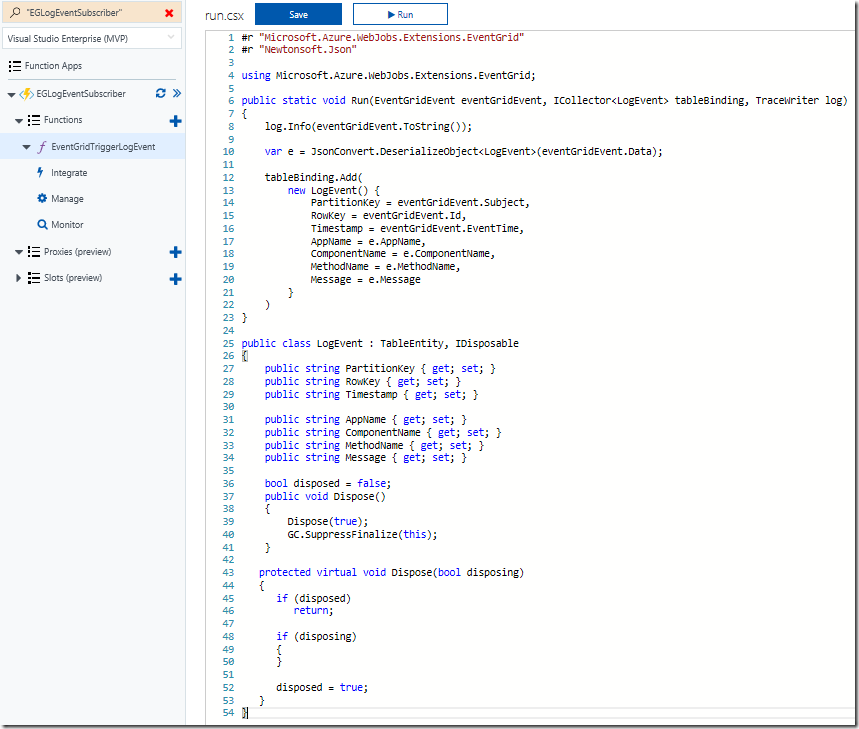

As you can see, I’ve also configured a Table Storage output. The code within this function creates a record in the table using the Event.Subject as the partition key and the Event.Id as the row key:

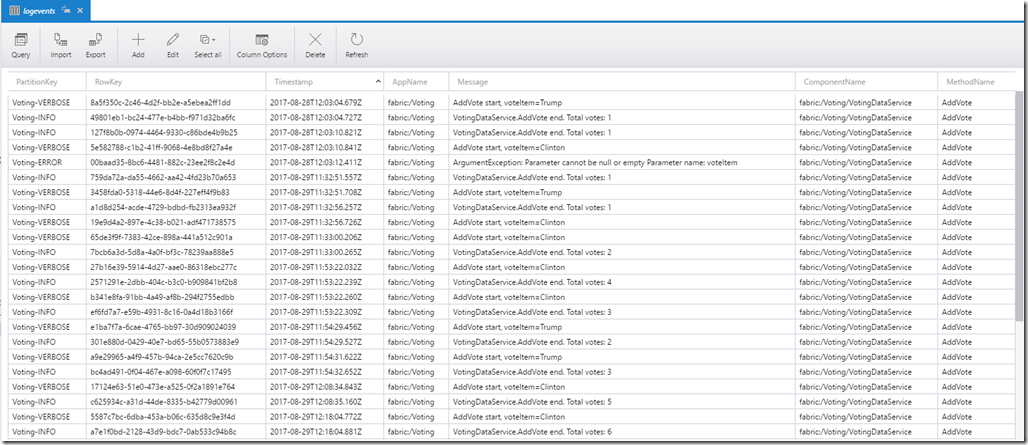

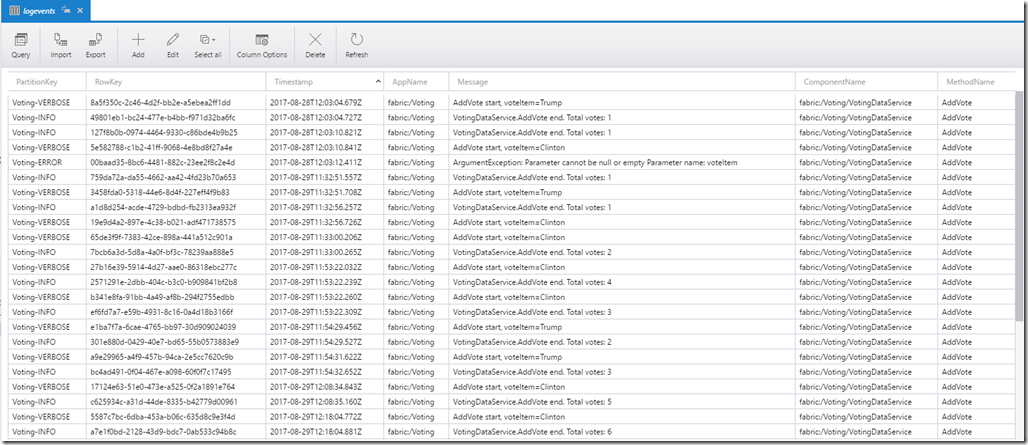
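The mapping the function performs can be sketched in Python for illustration; the Table Storage output binding itself is not shown, and the extra columns are hypothetical. One practical point: Azure Table Storage rejects `/`, `\`, `#`, `?` and control characters in PartitionKey and RowKey values, so if the subject doubles as the partition key, the separator used when concatenating it in the publisher matters.

```python
def _invalid_key_chars(value: str) -> set:
    """Return characters that Azure Table Storage disallows in PartitionKey/RowKey:
    '/', '\\', '#', '?' and control characters U+0000-U+001F, U+007F-U+009F."""
    return {
        c for c in value
        if c in "/\\#?" or ord(c) < 0x20 or 0x7F <= ord(c) <= 0x9F
    }


def to_table_entity(event: dict) -> dict:
    """Map an Event Grid event to a table row: subject -> PartitionKey, id -> RowKey."""
    partition_key, row_key = event["subject"], event["id"]
    for name, value in (("PartitionKey", partition_key), ("RowKey", row_key)):
        bad = _invalid_key_chars(value)
        if bad:
            raise ValueError(f"{name} {value!r} contains disallowed characters {sorted(bad)}")
    return {
        "PartitionKey": partition_key,
        "RowKey": row_key,
        # Illustrative extra columns; store whatever the log payload carries.
        "EventType": event["eventType"],
        "EventTime": event["eventTime"],
        "Message": event.get("data", {}).get("message", ""),
    }
```

Partitioning by subject groups each application's entries by severity, which is what makes the ERROR rows easy to spot in a table browser.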

Using the free Azure Storage Explorer tool, we can see the output of our testing.

Alerting on ERROR Events

Now that we’ve completed one of the two subscriptions for our solution, we can create the other subscription, which will filter on ERROR events and raise an alert by sending an email notification.

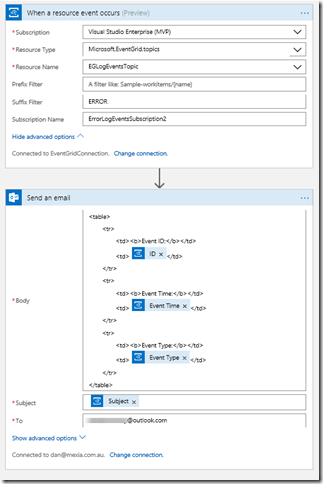

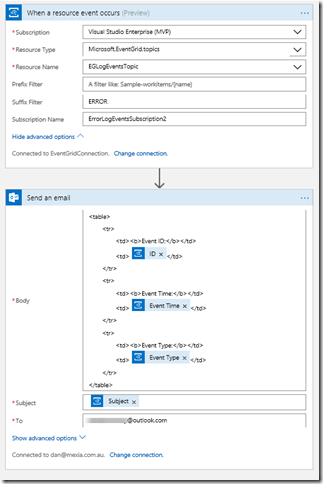

The first step is to create the Logic App (in the same region as the Event Grid) and add the Event Grid Trigger. There are a few things to watch out for here:

- When you are prompted to sign in, the account that your subscription belongs to may or may not work. If it doesn’t, try creating a Service Principal with contributor rights for the Event Grid topic (here is an excellent article on how to create a service principal)

- The Resource Type should be Microsoft.EventGrid.topics

- The Suffix field contains “ERROR” which will serve as the filter for our events

- If the Resource Name drop-down list does not display your Event Grid topic at first, type something in, save it and then click the “x”; the list should hopefully appear. It is important to select from the list as just typing the display name will not create the necessary resource ID in the topic field and the subscription will not be created.
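The Suffix field corresponds to the subscription's subject filter (`subjectEndsWith`, with a matching `subjectBeginsWith` for prefixes). The Python sketch below emulates that matching locally so you can reason about which events will reach the Logic App; it is not the service's implementation. Subject matching is case-insensitive unless `isSubjectCaseSensitive` is enabled on the subscription, and the event-type check here is a simplified exact match.

```python
from typing import Optional, Sequence


def matches_subscription(event: dict,
                         subject_begins_with: str = "",
                         subject_ends_with: str = "",
                         included_event_types: Optional[Sequence[str]] = None,
                         case_sensitive: bool = False) -> bool:
    """Local emulation of an Event Grid subject prefix/suffix filter."""
    subject = event.get("subject", "")
    prefix, suffix = subject_begins_with, subject_ends_with
    if not case_sensitive:
        # Default behaviour: compare subjects without regard to case.
        subject, prefix, suffix = subject.lower(), prefix.lower(), suffix.lower()
    # Empty prefix/suffix strings match every subject.
    if not subject.startswith(prefix) or not subject.endswith(suffix):
        return False
    # Simplified: exact match against the allowed event types, if any are given.
    if included_event_types and event.get("eventType") not in included_event_types:
        return False
    return True
```

Because this is a plain suffix match, any subject ending in `ERROR` qualifies, so the subject layout chosen in the publisher effectively defines what the filter can express.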

You can then follow this with an Office365 Email action (or any other type of notification action you prefer). There are four dynamic properties that are available from the Event Grid Trigger action (Subject, ID, Event Type and Event Time):

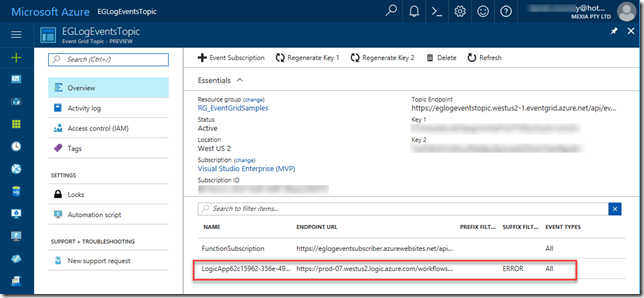

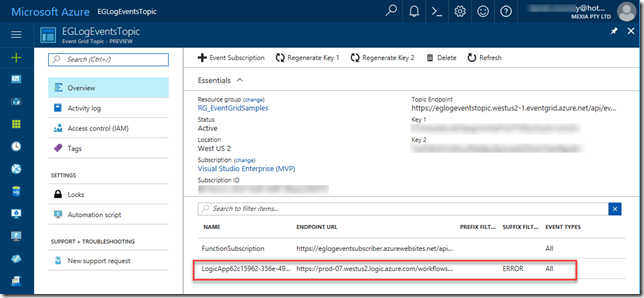

After saving the Logic App, check its Overview blade for any errors, and then check the Overview blade for the Event Grid Topic – you should see the new subscription created there:

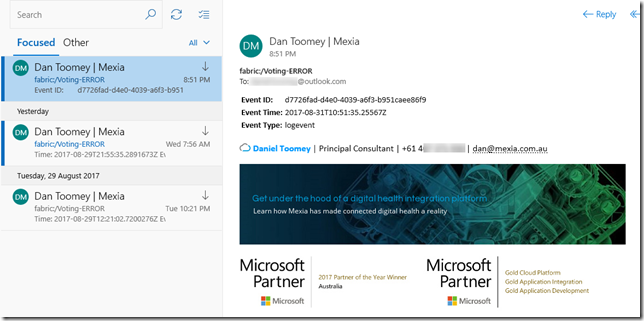

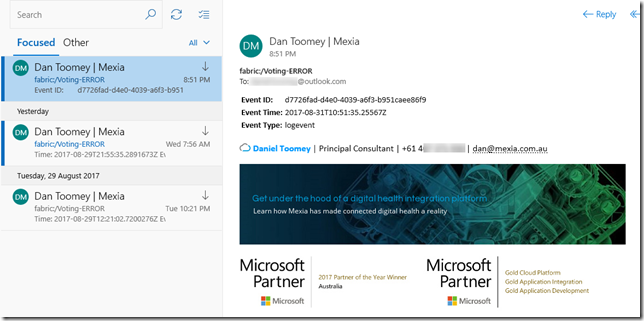

Finally, we can test the application. My Voting demo service generates an exception (and an ERROR logging event) when a vote is cast for a null/empty candidate (see the ERROR entry in the table screenshot above). This event now triggers an email notification.

Summary

So this example may not be the niftiest logging application on the market (especially considering all of the excellent logging tools that are available today), but it does demonstrate how easy it is to get up and running with Event Grid. You’ve seen an example of using a custom publisher and two built-in subscribers, including one with intelligent filtering. To see how to write a custom subscriber, have a look at Eldert’s post “Custom Subscribers in Event Grid” where he uses an API App subscriber to write shipping orders to table storage.

Event Grid is enormously scalable and its consumption pricing model is extremely competitive. I doubt there is anything else quite like this on offer today. Moreover, there will be additional connectors coming in the near future, including Azure AD, Service Bus, Azure Data Factory, API Management, Cosmos DB, and more.

For a broader overview of Event Grid’s features and the capabilities it brings to Azure, have a read of Tom Kerkhove’s post “Exploring Event Grid”. And to understand the differences between Event Hub, Service Bus and Event Grid, Saravana Kumar’s recent post sums it up quite nicely. Finally, if you want to get your hands dirty and have a play, Microsoft has provided a quickstart page to get you up and running.

Happy Eventing!

by Dan Toomey | Jul 6, 2017 | BizTalk Community Blogs via Syndication

Last week I had the privilege not only of attending the INTEGRATE 2017 conference in London, but presenting as well. A huge thanks to Saravana Kumar and BizTalk360 for inviting me as a speaker – what a tremendous honour and thrill to stand in front of nearly 400 integration enthusiasts from around the world and talk about Hybrid Connectivity! Also, a big thanks to Mexia for generously funding my trip.

With 380+ attendees from 52 countries around the globe, this is by far the biggest Microsoft integration event of the year. Of those 380, only four of us that I know of came from APAC: fellow MVP speakers Martin Abbott from Perth and Wagner Silveira from Auckland NZ, as well as Cameron Shackell from Brisbane who manned his ActiveADAPTER sponsor stand. Wagner would have to take the prize for the furthest travelled with his 30+ hour journey!

I’ve already published one blog post summarising my take on the messages delivered by Microsoft (which accounted for half of the sessions at the event). This will soon be followed by a similar post with highlights of the MVP community presentations, which in addition to BizTalk Server, Logic Apps, and other traditional integration topics also spanned into the new areas of Bots, IoT and PowerApps.

Aside from the main event, Saravana and his team arranged a few social events, including networking drinks after the first day, a dinner at Nando’s for the speakers, and another social evening for the BizTalk360 partners. They also presented each of the BizTalk360 product specialists with a beautiful award – an unexpected treat!

You have to hand it to Saravana and his team – everything went like clockwork, even keeping the speakers on schedule. And I thought it was a really nice touch that each speaker was introduced by a BizTalk360 team member. Not only did it make the speakers feel special, but it provided an opportunity to highlight the people behind the scenes who not only work to make BizTalk360 a great product but also ensure events like these come off. I hope all of them had a good rest this week!

As with all of these events, one of the things I treasure the most is the opportunity to catch up with my friends from around the globe who share my passion for integration, as well as meeting new friends. In my talk, I commented about how strong our community is, and that we not only integrate as professionals but integrate well as people too.

Arriving a day and a half before the three-day event, I had hoped to conquer most of the jet lag early on. But alas, the proximity to the solstice in a country so far north meant the sun didn’t set until past 10:30pm while rising just before 4:30am – which is the time I would involuntarily wake up each day no matter how late I stayed up the night before! Still, adrenalin kept me going and the engaging content kept me awake for every session.

And no matter what, there was always time for a beer or two!

I look forward to the next time I get to meet up with my integration friends! If you missed the event in London, you’ll have a second chance at INTEGRATE 2017 USA which will be held in Redmond on October 25-27. And of course, if you keep your eyes on the website, the videos and slides should be published soon.

(Photos by Nick Hauenstein, Dan Toomey, Mikael Sand, and Tom Canter)

Several years ago, Greg Low led a Tech-Ed breakout session on “How to be a Good User Group Leader”. Someone asked him whether 5-10 hours per month was a reasonable expectation for a time commitment, and Greg agreed. Experience has shown me that this is a pretty good estimate, at least once you get the group up & running. Initially it may take more time to get things organised. And of course, if you happen to be speaking at an event, you will need to add those hours of preparation as well.

Your user group isn’t going to be much of a community if no one shows up, right? This is related to the previous challenge of choosing a supportable theme/topic for your group. If it’s a rare or highly specialised focus, you may find yourself having to speak at every event! Some organisers don’t mind that, as they like having a forum to promote themselves, but chances are your following will dwindle after a short while if there isn’t enough variety.

This is often a big stumbling block for some cities, because venues for hire are typically very expensive. The best solution is an employer that can provide a large meeting space, or perhaps another business that chooses to donate a space as sponsorship. Other options are university or community spaces; some of these may come with a price tag, but they will be much cheaper than commercial venues. In Brisbane, we have used