by michaelstephensonuk | May 1, 2017 | BizTalk Community Blogs via Syndication

In today's organisations there is a strong theme that APIs and iPaaS are the key to building modern applications, because these technologies simplify the integration experience. While this is true, I think one thing that is regularly forgotten is the power of a well thought out messaging element within your architecture. When building solutions with APIs and iPaaS we often implement patterns where an action in one application results in an RPC call to another application to get some data or do something. This does not cover the scenarios where applications need a relatively up-to-date, synchronised copy of certain data so that various actions and transactions can be performed.

While data can be moved around behind the scenes with these technologies, I believe there is a big opportunity in evolving your architecture to make your key data entities available on Service Bus in a messaging solution, so you can plug applications into it rather than having to rebuild entire application-to-application interfaces each time.

On one project last year this was one of the solutions we implemented, and it has been really interesting to see the customer reap the benefits and repeat the pattern over and over.

The initial project was about implementing Dynamics CRM as a system of engagement in a higher education establishment, to engage with students and provide a great support experience for them. For pretty much any IT project in an education setting there are certain data entities your application probably needs to be able to access:

- Students

- Staff

- Courses

- Course Modules

- Course Timetable

- Which student is on which course

- Marks and Grades

Initial discussions on the project talked about using SSIS and some CRM add-ons for SSIS to do ETL-style integration with Dynamics CRM, sending it the latest staff, student and course information on a daily basis. While this would work, there is little strategic value in it: the next project is likely to come along and implement the same interfaces again, and before you know it all systems have point-to-point integrations passing this data around.

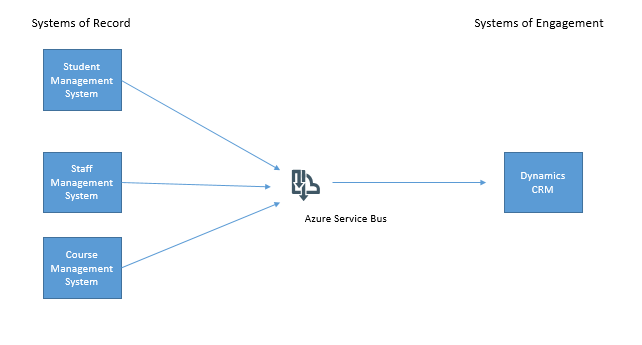

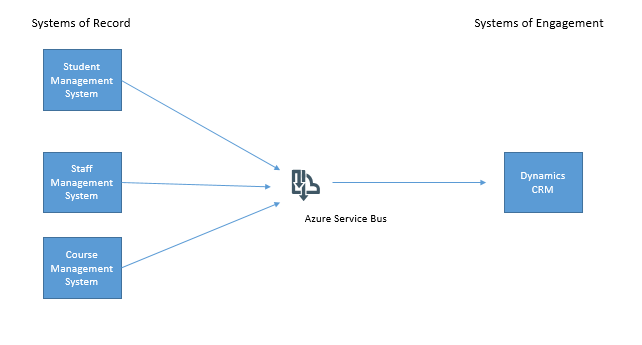

Our preferred approach, however, was to use Azure Service Bus as an intermediary: publish data from the system of record for each key data entity to Service Bus, then subscribe to this data and send it to CRM. In the next project another subscriber could subscribe to data on Service Bus and take what it needs.

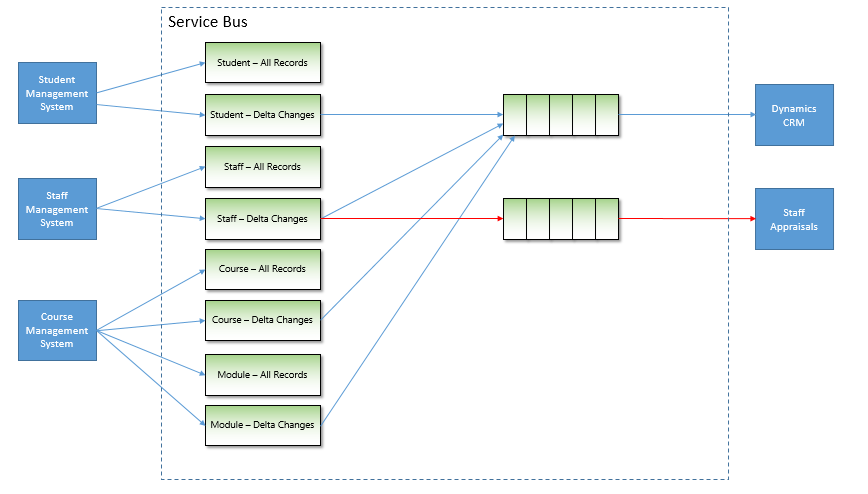

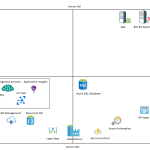

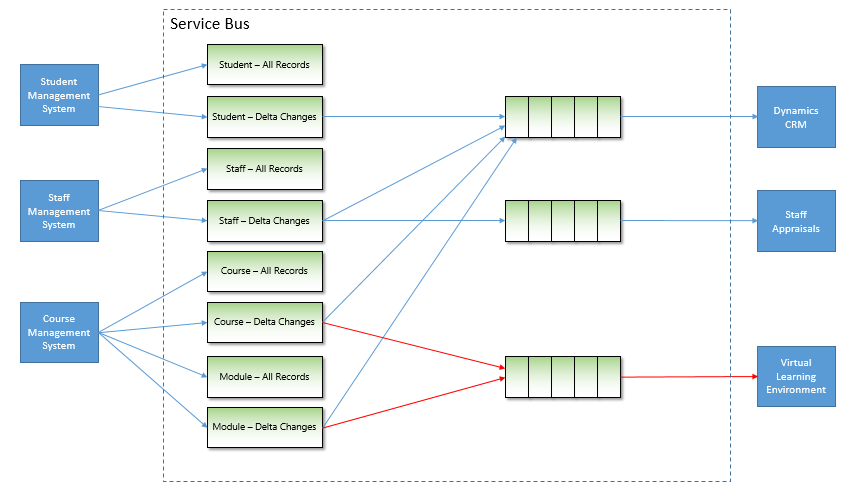

The below diagram shows the initial concept for our project.

Rather than try to solve all possible problems on day one, we chose to use this approach as a pattern but implement it in a just-in-time fashion alongside our main business project, so that eventually we would make all key data entities available on Service Bus.

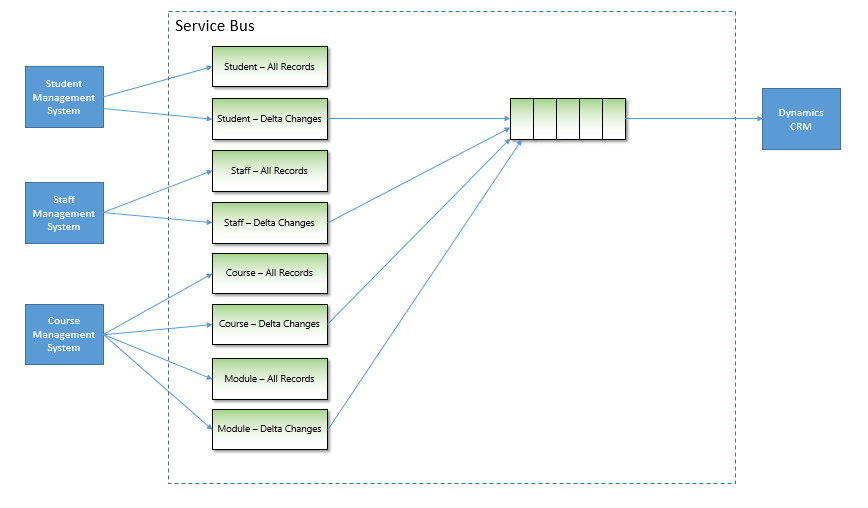

One of the key things we thought about was the idea of sending to topics and receiving from queues. In my opinion this is the best-practice way to use Service Bus. A topic works like a queue that delivers a copy of each message to every subscription, with each subscription behaving like a virtual queue. We had one topic per type of entity.

We also extended this so that for each entity we would have one topic for all instances of the entity and one topic just for changes. We had two types of system of record for our main data entities. Some applications could publish events in near real time (for example, CRM could do this for any data entities it was determined to be the master system for). Other applications could only have information published if an integration technology pulled the data out and published it. Any system publishing events would use a delta topic, and any system publishing in “batches” would use both a topic for delta changes and a topic for all elements. To illustrate, imagine the student records system has a SQL table of students. We might have a BizTalk process that takes all students from the table and publishes them to the “all” topic. Another BizTalk process would use a last-modified field on the table to publish just the records that had changed to the “delta” topic. This means receiving applications could take messages from either topic depending on the scenario:

- They always want all records

- They want all records the first time and then after initial sync they only want changes

- The application only ever wants changes
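A minimal Python sketch of the two batch processes and the three consumer choices, assuming the table is available as an in-memory list. The `StudentRow` type, the watermark handling and the `topic_for` helper are hypothetical illustrations, not the BizTalk processes used on the project.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Tuple


@dataclass
class StudentRow:
    # Hypothetical shape of a row in the student records SQL table
    student_id: str
    name: str
    last_modified: datetime


def select_all(rows: Iterable[StudentRow]) -> List[StudentRow]:
    """Every row: what the batch process would publish to the "all" topic."""
    return list(rows)


def select_delta(rows: Iterable[StudentRow], watermark: datetime) -> Tuple[List[StudentRow], datetime]:
    """Rows modified after the watermark (the "delta" topic), plus the next watermark to store."""
    changed = [r for r in rows if r.last_modified > watermark]
    next_watermark = max((r.last_modified for r in changed), default=watermark)
    return changed, next_watermark


def topic_for(mode: str, initial_sync_done: bool = False) -> str:
    """Which topic a receiving application reads, for the three scenarios above."""
    if mode == "always_all":
        return "all"
    if mode == "all_then_changes":
        return "delta" if initial_sync_done else "all"
    if mode == "changes_only":
        return "delta"
    raise ValueError(f"unknown mode: {mode}")
```

Note the strict `>` comparison: a row whose `last_modified` equals the stored watermark is treated as already published.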

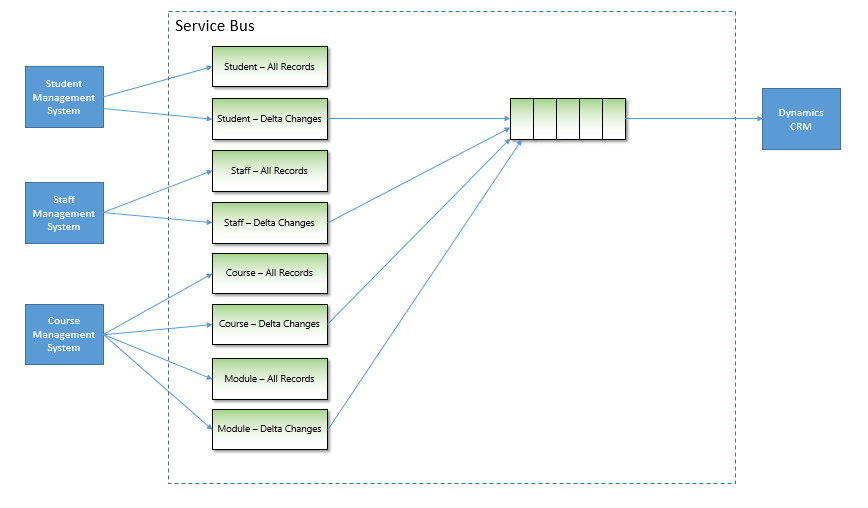

This approach gives us a really rich hub of data and changes from which applications can take what they need. In the diagram below you can see how we created a worker queue to feed messages to CRM, alongside many more topics that applications were publishing data to. Using Azure Service Bus we can define subscriptions with rules about which applications want which messages. We then use the Forward To property to forward messages from each subscription to the worker queue for CRM.

Just a quick point to note: we never receive messages directly from a subscription; we always use Forward To and send the messages to a queue. The reasons for this are:

- You can't define fine-grained security for individual subscriptions on a topic

- Forward To allows the worker queue to process many different kinds of messages

- It's easier to see which applications are receiving which messages
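To make the routing concrete, here is a small in-memory Python model of topics, filtered subscriptions and Forward To. It does not use the Azure SDK: in Azure Service Bus the filter would be a subscription rule (for example a SQL filter) and the forwarding would be the subscription's ForwardTo property. The message property names `EntityType` and `ChangeType` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class Message:
    body: dict
    properties: Dict[str, str] = field(default_factory=dict)


@dataclass
class Subscription:
    name: str
    rule: Callable[[Message], bool]  # stands in for a subscription filter rule
    forward_to: Deque[Message]       # stands in for the ForwardTo worker queue


class Topic:
    def __init__(self, name: str) -> None:
        self.name = name
        self.subscriptions: List[Subscription] = []

    def add_subscription(self, name: str, rule: Callable[[Message], bool], forward_to: Deque[Message]) -> None:
        self.subscriptions.append(Subscription(name, rule, forward_to))

    def publish(self, message: Message) -> int:
        """Copy the message to every subscription whose rule matches; return the number of copies."""
        delivered = 0
        for sub in self.subscriptions:
            if sub.rule(message):
                sub.forward_to.append(message)
                delivered += 1
        return delivered


def property_equals(name: str, value: str) -> Callable[[Message], bool]:
    # Rough equivalent of a SQL filter such as  ChangeType = 'delta'
    return lambda m: m.properties.get(name) == value
```

If a student topic and a staff topic both have a subscription forwarding into the same `crm_queue`, one CRM worker sees both kinds of message in one place, which is the second reason in the list above. Restricting an application to changes only is a matter of giving its subscription a rule such as `property_equals("ChangeType", "delta")`.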

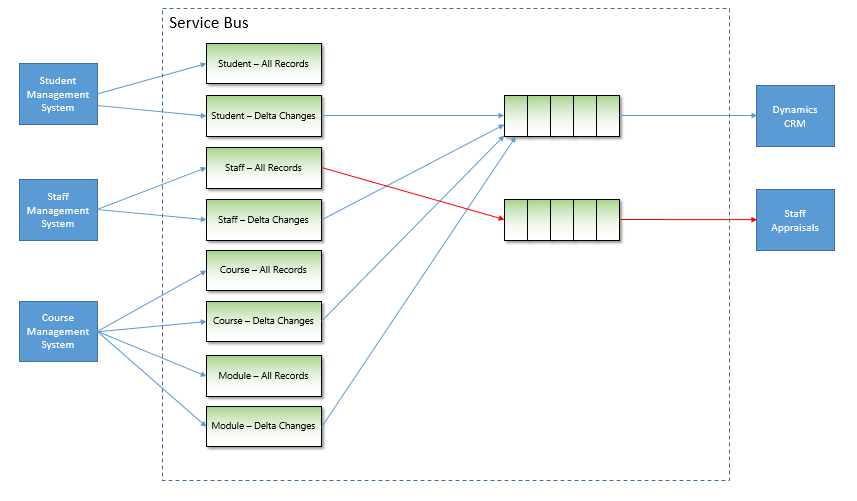

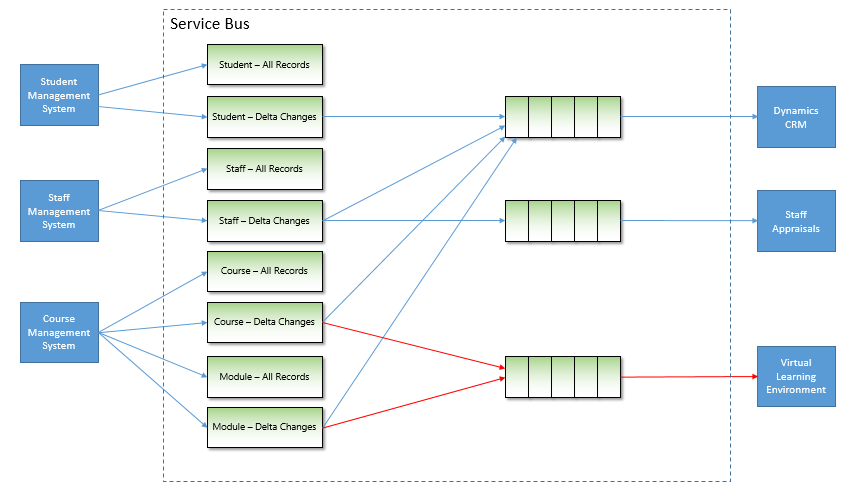

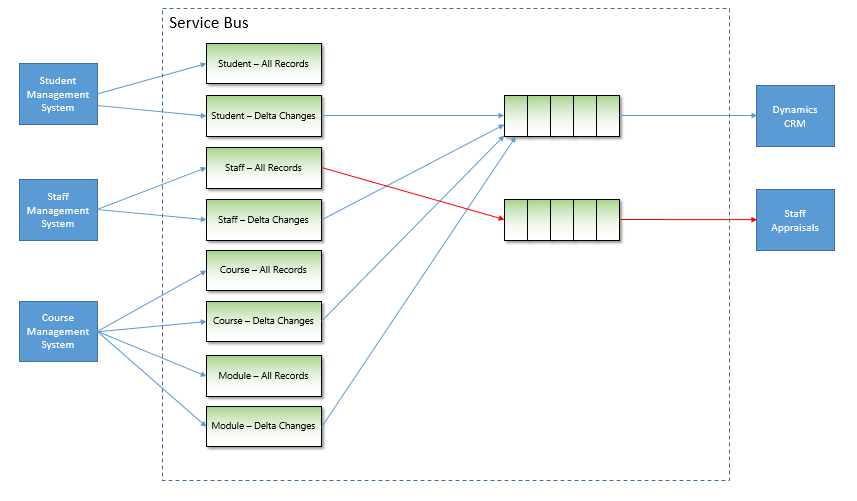

Once our initial project had made the core data entities available on Service Bus, when the next project came along wanting staff information we could simply add a subscription and routing to forward staff messages to a queue for the new application. The diagram below shows how a staff appraisal application was easily fed staff information without having to care about the staff system of record. That problem had already been solved.

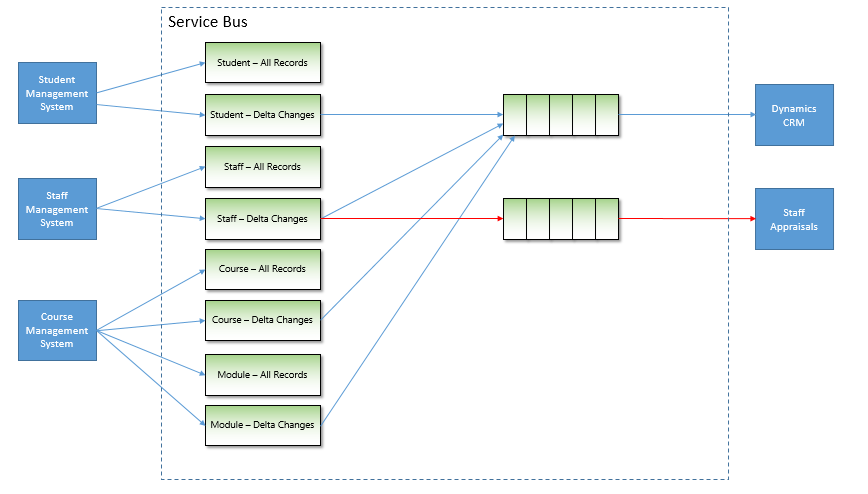

You may notice that the staff appraisal application was getting all staff information every time it was published. When the project reached a more stable point we modified the routing rules so that the staff appraisal application was only sent staff records that change. To do this we just modified the subscription rules, as in the diagram below:

In the next project we had a similar scenario, this time with the virtual learning environment needing course data. This data was already available on Service Bus, so we could create subscriptions and start sending this information with significantly less effort. The diagram below illustrates the extension to the architecture:

After a number of iterations of our platform, with various projects adding more key data entities, we now have most of the key organisational data available in a plug-and-play architecture. While this may sound a bit like the ESB pattern to some, the key thing to remember is that the challenge with ESBs was that they combined messaging with logical processing and business process, which made things very complex. With Service Bus the focus is purely on messaging; this keeps things very simple and allows you to build up the architecture with the right technology for each application scenario. For example, in one scenario I might have a Logic App that processes a queue and sends messages to Salesforce, in another a BizTalk Server that converts a message to HL7 and sends it to a healthcare app, and in a third I might do things in .NET code with a WebJob. I have lots of choices on how to process the messages. The only real coupling in this architecture is that of an application to a queue and to some message formats.

There are still some challenges you may come across in this architecture, mainly in cases where an application needs more information than the core message provides. Lots of applications have slightly different views of data entities and different attributes. One possibility is to use something like a Logic App so that when you process a message you make an API call to look up additional data to help with message processing. This would be a typical enrichment pattern.
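A minimal Python sketch of the enrichment step, assuming the core message carries a `course_id` key and that a hypothetical `lookup` callable stands in for the API call a Logic App would make:

```python
from typing import Callable, Dict

Record = Dict[str, str]


def enrich(message: Record, lookup: Callable[[str], Record], key_field: str = "course_id") -> Record:
    """Return a new message combining looked-up attributes with the core message.

    Core message fields take precedence, so the lookup can add attributes
    but never overwrite data that came from the system of record.
    """
    key = message.get(key_field)
    extra = lookup(key) if key else {}
    return {**extra, **message}
```

Letting the core message win on conflicts is a design choice in this sketch; a different application might prefer the looked-up values.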

The key thing I wanted to get across in this article is that, at a time when we are being told APIs and iPaaS are simplifying integration, messaging as an architecture pattern still has a very valuable and effective place. Done well, it can really empower your business, rather than leaving you building the same interface over and over again in slightly different forms.

by michaelstephensonuk | Apr 28, 2017 | BizTalk Community Blogs via Syndication

Recently I had the opportunity to look at the options for combining BizTalk and Azure Functions in some real-world scenarios. The most likely scenarios today are:

- Call a function using the HTTP trigger

- Add a message to a Service Bus queue and let the function execute via the Service Bus trigger

This is great because it gives us support for both synchronous and asynchronous execution of a function from BizTalk.

The queue option doesn't really need any elaboration: there is an adapter to call Service Bus from BizTalk, and there are lots of resources out there showing how to use a queue trigger in a function.

What I did want to show, however, was an example of how to call a function using the HTTP trigger. The first question, though, is why would you want to do this?

I think the best example is the façade pattern. This is a pattern we use a lot: we create a web service component to encapsulate some logic for calling an external system, and then call that web service from BizTalk. The idea is that the interaction from BizTalk to the web service is simple and the web service hides the complexity. In the real world we usually do this for the following reasons:

- It makes the solution simpler

- The performance of the solution will be improved

- It is cheaper and easier to maintain in some cases

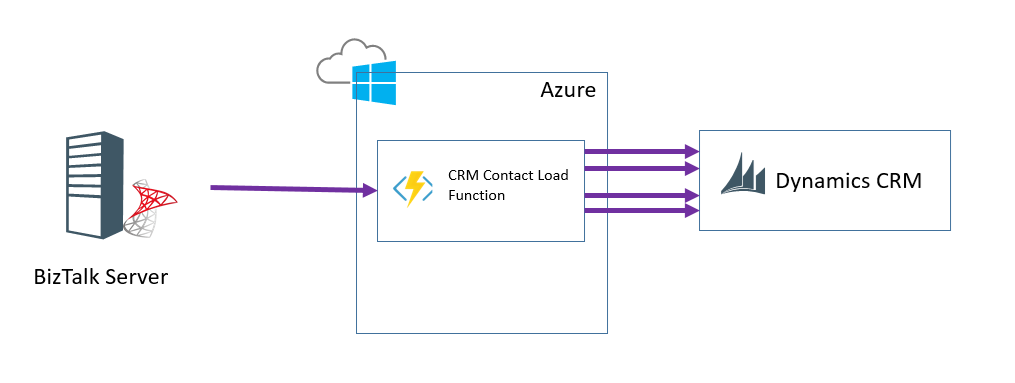

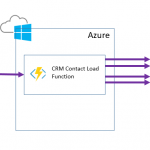

Azure Functions offers an alternative way to implement the façade component, compared with a normal web service hosted in IIS or on Azure App Service. Let's take a look at an imaginary architecture showing this in action.

In this example, imagine that BizTalk calls the function. The function makes multiple calls to CRM to look up various entity relationships and then creates a final entity to insert. This would be a typical use of the façade pattern for this type of integration.
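The body of such a façade function might look like the Python sketch below. The `CrmClient` interface, the entity names (`contact`, `course`, `enrolment`) and the request fields are hypothetical placeholders, not the Dynamics CRM API. The point is that BizTalk sends one simple request and the function hides the lookups.

```python
from typing import Dict, Optional, Protocol


class CrmClient(Protocol):
    """Hypothetical minimal CRM client the function depends on."""

    def find_id(self, entity: str, key_field: str, key_value: str) -> Optional[str]: ...

    def create(self, entity: str, fields: Dict[str, str]) -> str: ...


def create_enrolment(request: Dict[str, str], crm: CrmClient) -> Dict[str, str]:
    """Façade: resolve relationships in CRM, then create the final entity.

    BizTalk only sends natural keys (student number, course code); the
    lookups and the insert stay hidden behind this one call.
    """
    student_id = crm.find_id("contact", "studentnumber", request["student_number"])
    course_id = crm.find_id("course", "code", request["course_code"])

    missing = [name for name, found in (("student", student_id), ("course", course_id)) if found is None]
    if missing:
        # Report lookup failures to the caller instead of creating a half-linked record
        return {"status": "error", "missing": ",".join(missing)}

    new_id = crm.create("enrolment", {"student": student_id, "course": course_id})
    return {"status": "created", "id": new_id}
```

Depending on a small interface rather than a concrete client also makes the façade easy to test with a fake CRM.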

Demo

In the video below I show an example of consuming a function from BizTalk.

[embedded content]
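The shape of a synchronous HTTP-trigger call can be sketched in Python rather than as a BizTalk send port. This assumes a function URL of the usual `https://<app>.azurewebsites.net/api/<function>` form (a placeholder here) and a function key, which Azure Functions accepts in the `x-functions-key` header (or as a `code` query-string parameter).

```python
import json
import urllib.request


def build_function_request(function_url: str, function_key: str, payload: dict) -> urllib.request.Request:
    """Build a POST to an HTTP-triggered function with a JSON body and the function key header."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        function_url,
        data=body,
        headers={"Content-Type": "application/json", "x-functions-key": function_key},
        method="POST",
    )


def call_function(request: urllib.request.Request, timeout: float = 30) -> dict:
    """Send the request and parse the JSON response; urlopen raises HTTPError on non-2xx status codes."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to check the URL and headers without calling a live function.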

What would I like to see Microsoft do with BizTalk + Functions?

There are lots of ways you can use Functions from within BizTalk today, and Microsoft are now investing quite heavily in enhancing BizTalk and combining it with cloud features. I would love to see some out-of-the-box “Call Function” capabilities.

Imagine if BizTalk included platform settings where you could register a function: a list of functions, each with a friendly name, the URL to call the function and a key for calling it. There could be a couple of variations on this register-function capability so you could use it from different places. First off:

- Call Azure Function shape in an Orchestration

This would be a shape into which we pass one message, a bit like the Transform shape we have now, and get one message out. Under the hood the shape would convert the messages to XML or JSON and then call the function. In the orchestration you would choose the function to call from a drop-down of the registered functions. This would abstract the orchestration from the function's changeable information (URL/key).

In some ways this would be like the Expression shape, but by externalising the logic into a function it would become much easier to change the logic if needed.

- Call Azure Function mapping functoid

We drag the Azure Function functoid onto the map designer, wire up the input parameters and destination, and supply the friendly name of one of the functions registered with BizTalk. When the map executes, it makes an HTTP call to the function. The function would probably have to make some assumptions about the data it receives, perhaps a collection of strings coming in and a single string coming out, which the map could then convert to other data types.

Using a function in a map would be great for reference data mapping, where you want an easy way to change the code at runtime.
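The proposed register-function capability does not exist in BizTalk. Purely as a thought experiment, the Python sketch below models the registry and the functoid contract described above (strings in, one string out). `FunctionRegistry`, `RegisteredFunction`, `functoid` and the `invoke` transport callable are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class RegisteredFunction:
    friendly_name: str
    url: str
    key: str


class FunctionRegistry:
    """Maps friendly names to the changeable details (URL and key)."""

    def __init__(self) -> None:
        self._functions: Dict[str, RegisteredFunction] = {}

    def register(self, friendly_name: str, url: str, key: str) -> None:
        # Re-registering a name replaces the URL/key without touching callers
        self._functions[friendly_name] = RegisteredFunction(friendly_name, url, key)

    def resolve(self, friendly_name: str) -> RegisteredFunction:
        try:
            return self._functions[friendly_name]
        except KeyError:
            raise LookupError(f"no function registered as {friendly_name!r}") from None


# Transport used to reach the function (e.g. an HTTP call); injected so the sketch stays testable
Invoke = Callable[[RegisteredFunction, List[str]], object]


def functoid(registry: FunctionRegistry, friendly_name: str, inputs: List[str], invoke: Invoke) -> str:
    """Resolve the function by friendly name, call it, and coerce the result to a single string."""
    target = registry.resolve(friendly_name)
    return str(invoke(target, inputs))
```

Because maps and orchestrations would refer only to the friendly name, moving a function or rotating its key becomes a registry change rather than a redeployment.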

by michaelstephensonuk | Mar 12, 2017 | BizTalk Community Blogs via Syndication

A friend recently shared a couple of links with me about the Mulesoft IPO, which is happening soon, and it got me thinking about how this might affect us in the Microsoft integration world. First off, those links:

It is really interesting to see a major move like this by one of the big iPaaS players. Mulesoft is a company I have followed for some time, in particular during the years up to 2015 when Microsoft were making such a marketing disaster of their integration offering. Microsoft had lost their way in the integration space at that point, and I seriously considered switching to focus on Mulesoft for my customers. The main driver for this wasn't technical. It was driven entirely by the fact that Mulesoft told such a great marketing story and made so much noise in the industry that it was difficult not to want to follow it. At the time I held fire because I wanted to make a major bet on Microsoft Azure, and I felt that the changes Microsoft were looking to make to their integration technologies would pay off in the long run, and that marketing them as part of the Azure brand would fix the problems Microsoft were experiencing pre-2015.

If we look at some of the interesting points from the Mulesoft articles, however, we notice that:

- Mulesoft is growing at 70%

- Subscription revenue is at $150m per year

- Professional services revenue is $35m per year

- Their growth looks to have dropped off slightly in 2016 compared to 2015

- They now have 1071 customers (I assume this is subscription paying customers)

- New customer value more than doubled from $77k to $169k (I assume per year)

- Mulesoft is expected to go public at something north of $1.5 billion

The interesting bit, and something of a conflict between those articles, is that the first suggests Mulesoft is “rapidly approaching cashflow from operations breakeven and net income profitability”, whereas the article from CNBC suggests that Mulesoft “lost $50million of $188 million in revenue in 2016 and $65 million in 2015”. I'm not sure which is right, but let's assume it's somewhere in the middle.

On paper Mulesoft would seem to be an interesting investment for customers and investors. However, I feel there are a few threats to Mulesoft, arising from current industry positioning and the various things Microsoft are doing, which make for an interesting discussion.

Threat 1 – Pricing Model

I think one thing has been missed in the analysis, however, and that is how the iPaaS landscape is starting to change. A similar thing happened with API Management (APIM) a couple of years ago. Think back to 2013-2015: people were going crazy about API Management, and it was viewed as a premium service, with companies paying 100K+ per year for a proxy in front of their APIs that offered value-added features. The problem was that those APIM vendors were typically niche vendors who only did APIM. Eventually along came Microsoft and Amazon, who offered APIM as a commodity service. They didn't need to charge a high premium, because if you use their APIM you are likely to be using many of their other services too. This cross-sell ability of the big cloud players meant you could now get APIM for around a fifth of the price in some cases. You might sacrifice some nice-to-have features, but the core capability was there. If you compare this with the iPaaS world today and look at some of the main players in the Gartner or Forrester reports, you will see a similar pattern in their pricing models. The pricing is often quite vague, with terms like “per connection” or “for N connections” and no real definition of what a connection is. Some examples are below:

In all of these cases the pricing model boils down to having to contact the vendor and get their sales team involved before you can start. Not very “cloudy”, in my opinion.

If we now consider how iPaaS is changing from a premium offering to a commodity, driven in particular by Microsoft with their Logic Apps offering, the pricing model has these key differences:

- The price is publicly displayed

- The price is charged per action, which is genuinely pay-as-you-go, rather than per “compute unit”, where the customer usually pays for a percentage more capacity than they need, just like in the old on-premises server capacity models

If we consider the typical new Mulesoft customer, spending approximately $169,000 per year on Mulesoft compute units, the equivalent spend on Logic Apps would get you in the region of 4,000,000,000 actions per year. I would consider the Logic Apps costing model to be a proper per-usage model, versus the per-compute-unit model used by most of the other vendors, and perhaps this is going to be the evolution to Generation 2 iPaaS as other vendors follow the trend towards a more serverless model.
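As a rough sanity check, the two figures in that paragraph (and nothing else; this is not a published price list) imply a price per action and a daily volume of:

$$
\frac{\$169{,}000\ \text{per year}}{4{,}000{,}000{,}000\ \text{actions per year}} \approx \$0.0000423\ \text{per action},
\qquad
\frac{4{,}000{,}000{,}000\ \text{actions}}{365\ \text{days}} \approx 1.1 \times 10^{7}\ \text{actions per day}.
$$

In other words, the same annual budget would cover roughly eleven million actions every day of the year.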

While Mulesoft has been in a great position for the last couple of years and made great progress, I wonder if the public offering is timed in response to this new threat of Generation 2 iPaaS, which is genuinely serverless, whereas Generation 1 may look like Platform as a Service but is clearly tied to underlying server infrastructure that is merely abstracted from the end customer. From my playing around with the product, I would guess it would take a reasonably big re-architecture to support a cost model similar to Logic Apps, in particular when Mulesoft seems to run on AWS rather than its own cloud fabric.

Threat 2 – Cross Sell

Mulesoft has a limited set of products focused on the integration space. They cover:

- iPaaS

- API Management

- Connectors

- Message Queue

These are the core parts of most integration platforms, and Mulesoft state that when you need something they don't do, you should use “best of breed”. This is a valid approach, and one vendors have used for a long time, but when competing against the big cloud vendors who have plenty of other services on their cloud, the question is, do I want:

- Option A – Go to another vendor, start a whole procurement process, evaluate options and N months later I can start using another product

- Option B – Click 3 buttons and have the capability on the cloud I'm already using

I would argue that in today's world of agility and speed, option B is much more popular than an IT procurement exercise.

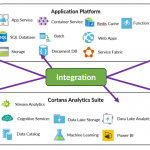

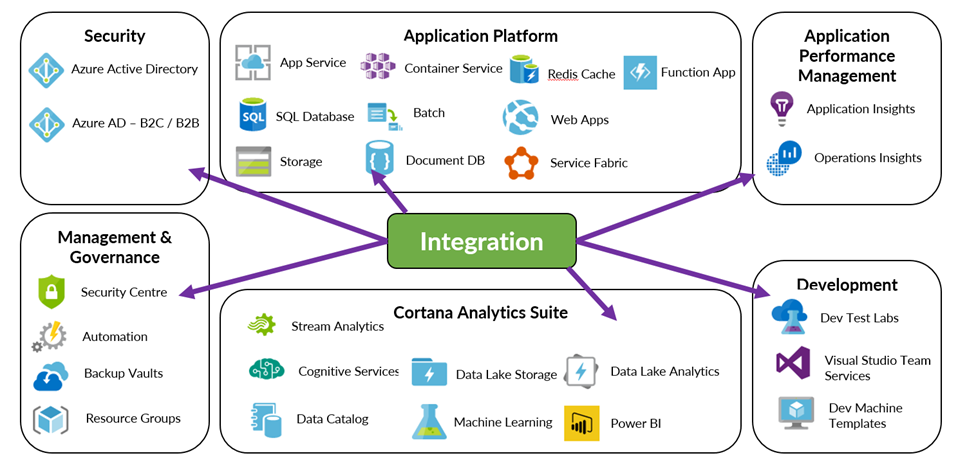

If we think about the Azure offering, the secret sauce of the Microsoft integration platform is that you have the rest of the Azure cloud to use, as illustrated below.

The key difference with this cross-sell capability is that companies like Amazon and Microsoft can make a platform play, providing the platform for holistic solutions across the entire enterprise. This ranges from classic infrastructure, through PaaS, to innovative services like machine learning, bot frameworks, big data and blockchain. Mulesoft is not in this platform-level cloud game and can only offer a specialised niche around integration.

Threat 3 – Democratisation of Integration

One of the big themes in integration today is the democratisation of integration. Two of the key elements within this are:

- Allowing the citizen integrator to be involved in the integration solutions the organisation uses

- Opening insights into the integration solutions your organisation has

In the first case, Mulesoft currently has no offering aimed at the citizen integrator, and none I've seen on the horizon. This is slightly strange, as their marketing and blogging teams are usually all over the big industry themes in integration, but they seem to be giving this one a wide berth. The only material I've seen is forum posts suggesting that the citizen integrator be taught to be a developer. Compare this with the Microsoft offering, where you have a very solid offering in Power Apps and Flow, which are part of the integration suite but specifically aimed at empowering the citizen integrator.

In the second case, the analytics and insights you can get from your integration solutions are among the best things about modern integration, allowing the business to get real added value from it. Mulesoft has the analytics you would expect for their APIM offering, and a business events capability within the management console. While this ticks the basic boxes of reporting and insights, it lacks what the other major cloud vendors can offer. For example, with Microsoft you can use Operations Management Suite, Power BI, Cortana Analytics Suite and Application Insights alongside your integration solution to give you deep insights that can be targeted at different audiences, such as an IT pro, a business user or even a customer. The power to build a much richer solution is there.

Threat 4 – Target Customers

The fourth big threat is also associated with the pricing model. Mulesoft is only really relevant for big enterprise customers. They have 1,000 of those, but they are in a space where many established names such as Oracle, Tibco, Microsoft and others already have major products with much higher customer numbers; for example, Microsoft BizTalk Server has 10,000+ customers. While Mulesoft may have made some inroads in winning customers by replacing their traditional integration platform, or more likely complementing it with an iPaaS capability, this is a competitive area. I said a few years ago at the Integrate conference that I felt the next big opportunity for system integrators would be iPaaS products that could offer solutions for SME companies. This is exactly where the Microsoft Logic Apps offering hits the nail on the head. With Azure an SME can set up a cloud-scale, enterprise-ready integration product and spend next to $0. They could build one simple interface to begin with and pay as they go. Over time they could feasibly grow significantly and just pay more as they use more. This opens up a world where Microsoft could conceivably have hundreds of thousands of SME customers using their iPaaS offering, in a way none of the above vendors could compete with.

It's difficult to see how Mulesoft could compete in this space with their current cost model, and difficult to see how the cost model could change with the current product architecture.

Threat 5 – Questionable Innovation

If you look at how the Mulesoft product offering has evolved and changed over the last few years, and how they have innovated, you could argue the answer is “not that much”. In the last couple of years the main new features are:

- A new mapper

- Anypoint MQ – JMS

- Monitoring

The reality is that those three key areas are basic product capabilities required of ESB/iPaaS offerings, so I'd hardly call that innovation.

Instead, in the last couple of years Mulesoft have focused on getting as much return as possible from the product they had, through fantastic marketing and PR that created awareness in the industry. While they have had lots of success, you could argue that their ability to execute and completeness of vision have been overtaken by other vendors, and that the integration world has kept evolving.

In a post-IPO world, is it likely that R&D investment will produce many new innovations when there will be pressure to reduce losses?

Threat 6 – Security Story

Following on from the innovation question, I also wonder about the positioning of Mulesoft's product stack in terms of collaboration with the security products that are out there. If you consider the Microsoft world for a moment, we see security in fundamental places like Azure Active Directory, Azure Active Directory B2C, Role-Based Access Control, API Management security features and multi-factor authentication, all giving Azure customers a fantastic hybrid security model covering both the enterprise and its customers. Add to the mix Azure offerings such as Security Center, Azure Advisor and Operations Management Suite, which all look at your solutions and tell you how they are doing against good practices, whether there are any vulnerabilities, and other useful things like that.

The Integration Platform from Microsoft inherits all of this good stuff.

In the Mulesoft space, outside of the security its connectors use to talk to applications, there is a very limited security or governance story. I believe that in the coming years this is one of the key areas customers will focus on much more as their cloud maturity increases.

Threat 7 – Post IPO Changes

I would suggest this is the biggest threat to Mulesoft: after an IPO many companies change in various ways. Some examples might include:

- Some of your good staff who were there for the IPO opportunity may move on to the next opportunity

- You now have to change from an attractive looking proposition to a business that makes a profit

- It's not so easy to go back to the industry for additional rounds of funding, as Mulesoft have done a few times in recent years

I feel the biggest challenge comes when the company has to start being profitable. The customers you already have are each paying a lot for the services (based on what the articles above suggest), so it's probably difficult to sell more compute to your existing customers. This leaves two avenues:

- Sell to more customers

- Reduce costs

Selling to more customers is going to be difficult. Three years ago few people had heard of Mulesoft, but today you see their ads everywhere and it's hard to come across an organisation that hasn't already heard of them. Based on the numbers mentioned in those articles, I'm not sure even doubling their customer numbers from 1,000 to 2,000 would get them into regular profit. That's before considering that customers are becoming wiser to the fact that iPaaS is not all about marketing blagware and buzzwords, and realising that integration today doesn't always need to be expensive.

This leaves reducing costs as a likely course of action, and that means less noise and activity from Mulesoft.

Prediction

While I think it's a great time to go public, I do wonder if the future for Mulesoft could be similar to what happened to Apigee after they went public (http://uk.businessinsider.com/why-google-spent-625-million-on-apigee-2016-11?r=US&IR=T). The problem is that they are a niche company and cannot easily cross-sell other services, as they don't have the platform that the big cloud players have.

My prediction is that, as vendors need to move towards being Generation 2 iPaaS vendors, this is where Mulesoft will struggle. They have had major investment so far, but will they need to re-architect their core product to compete in the future? Looking at their products, they have spent a lot of time over the last couple of years bolting bits on to meet their sales commitments, and invested heavily in sales, marketing and promotion, but there has been limited real product innovation in that time.

If Mulesoft have a similar journey to Apigee, then one thing is for sure: Amazon and Google have not got much of an iPaaS offering, so you could see Mulesoft as an obvious acquisition target that would boost their cloud offerings. The only question there, however, is whether that would be feasible with major investors like Salesforce, Cisco and ServiceNow.

From a Microsoft integration person's perspective, all of this is fantastic news. Times have been tough for us for a few years, competing with the marketing power of Mulesoft when Microsoft had, until recently, been investing so little in marketing their integration stack and equally little effort in selling it. Since then, though, they have built a far superior integration suite and a genuine cloud platform offering that suits most customers. If Mulesoft turn out to have a bunch of internal challenges as a result of the transition from private to public company, this will make life a lot simpler, and perhaps my LinkedIn ads will eventually stop spamming me with Mulesoft 10 times per day :)

One thing is for sure: it will be interesting to watch Mulesoft's journey in the public world as they take it to the next level, and I wish them all the best.

The post Mulesoft IPO and what it means for Microsoft System Integrators appeared first on Microsoft Integration & Cloud Architect.

by michaelstephensonuk | Mar 9, 2017 | BizTalk Community Blogs via Syndication

When we build integration solutions, one of the biggest challenges we face is “sh!t in, sh!t out”. Put more eloquently, we often have line-of-business systems containing some poor data, and we have to massage it and work around it in the integration solution so that the receivers of the data don't break when they get it. Sometimes the receiver doesn't break, but its functionality is impaired by the poor data.

I faced this challenge recently, and the problem in many organisations is as follows:

- No one knows there is a data quality issue

- If it is known, then it's difficult to work out how bad it is or estimate its impact

- Often no one owns the problem

- If no one owns the problem, it's unlikely anyone is fixing it

Imagine a scenario where we have loaded all of the students from one of our line-of-business systems into our new CRM system, and we are then trying to load course data from another system into CRM and make it all match up. When we ask questions of the data in CRM we are not getting the answers we expect, and people lack confidence in the new solution. The thing is, the root cause of the problem is poor data quality in the underlying systems, but the end users don't have visibility of that, so they see the problem as being with the new system, since the old systems have been around and kind of worked for years.

Dealing with the Issue

There are a number of ways you can tackle this problem, and we saw business steering groups discussing data quality and other such things, but nothing was as effective and cheap as a simple solution we put in place.

Imagine that we use BizTalk to extract the data from the source system and load it to Service Bus, from where we have various approaches to pub/sub the data into other systems. The main recipient of most of the data was Microsoft Dynamics CRM Online. Our idea was to implement some tests of the data as we attempted to load it into CRM. We implemented these in .NET, and the result of the tests was a decimal value representing a % score based on the number of tests passed, plus a string listing the names of the tests that failed.

We would then save this data alongside the record as part of the CRM entity so it was very visible. You can see an example of this below:

We implemented tests like the following:

- Is a field populated

- Does the text match a regular expression

- If we had a relationship to another entity can we find a match

For most records we would implement 10 to 20 tests of the data coming from other systems. In CRM we can then easily sort and manage records based on their data quality score.
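The project implemented its tests in .NET; purely as an illustration, here is a Python sketch of the same idea, with the three kinds of test listed above and a result made of the percentage of tests passed plus a comma-separated list of failed test names. The field names, test names and helper functions are hypothetical.

```python
import re
from typing import Callable, Collection, Dict, List, Optional, Tuple

Record = Dict[str, Optional[str]]
Check = Tuple[str, Callable[[Record], bool]]


def populated(field: str) -> Check:
    """Is a field populated?"""
    return (f"{field} populated", lambda r: bool((r.get(field) or "").strip()))


def matches(field: str, pattern: str) -> Check:
    """Does the text match a regular expression?"""
    compiled = re.compile(pattern)
    return (f"{field} format", lambda r: compiled.fullmatch(r.get(field) or "") is not None)


def has_match(field: str, known_keys: Collection[str]) -> Check:
    """Can a related record be found for this key?"""
    return (f"{field} relationship", lambda r: r.get(field) in known_keys)


def quality_score(record: Record, checks: List[Check]) -> Tuple[float, str]:
    """Return (% of checks passed, comma-separated names of failed checks)."""
    if not checks:
        return 100.0, ""
    failed = [name for name, check in checks if not check(record)]
    score = 100.0 * (len(checks) - len(failed)) / len(checks)
    return round(score, 2), ", ".join(failed)
```

Both values would then be written to extra fields on the CRM entity, so each record carries its own score and the reasons for it.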

Making the results visible

At this point, from an operational perspective, we could see how good or bad the data coming into CRM was on a per-record basis. The next thing we needed to do was to get some focus on fixing the data. The best way to do this is to provide visualisations to the key stakeholders showing how good or bad the data is.

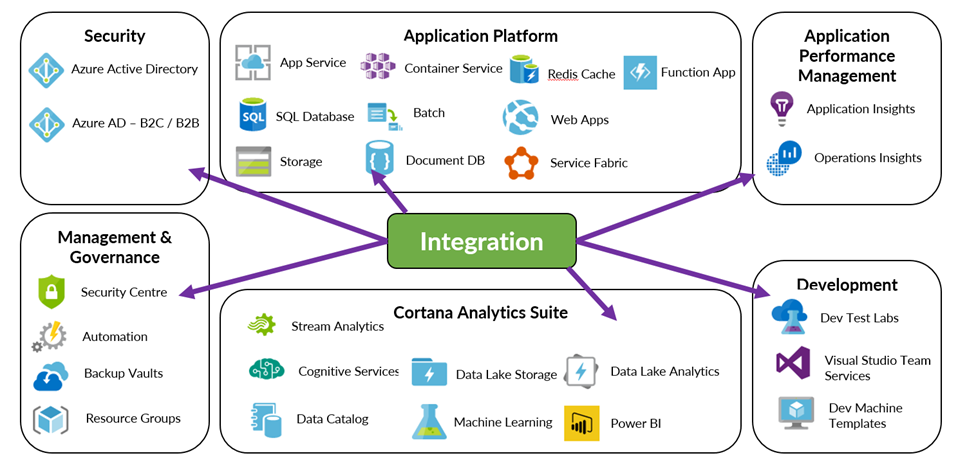

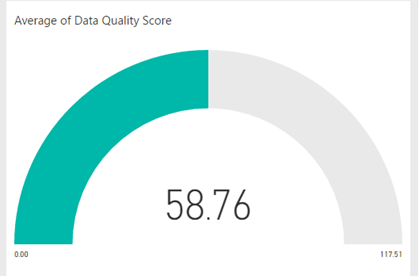

To do this we used a simple Power BI dashboard pointing at CRM which averaged the data quality score for each entity. This is shown in the picture below.
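Power BI did this aggregation for us, but the calculation itself is simple. As an illustration only, here is a Python sketch of the same per-entity average, assuming the records have been exported as (entity name, data quality score) pairs; the sample scores are made up.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def average_quality_by_entity(rows: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average the per-record data quality score for each entity type."""
    scores: Dict[str, List[float]] = defaultdict(list)
    for entity, score in rows:
        scores[entity].append(score)
    return {entity: round(mean(values), 2) for entity, values in scores.items()}


# Made-up sample scores, one row per CRM record.
rows = [("Student", 100.0), ("Student", 50.0), ("Course", 80.0), ("Course", 20.0), ("Course", 50.0)]
print(average_quality_by_entity(rows))
# → {'Student': 75.0, 'Course': 50.0}
```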

If I am able to say to the business stakeholders that we cannot reliably answer certain questions in CRM because the data coming into CRM has a quality score of 50%, then that is a powerful statement, especially when it is backed up by specific tests which show what's good and what isn't. This is highly likely to create interest among the stakeholders in improving the data quality so that it serves the purpose they require. The great thing is that each time they fix missing or partly complete data which has accrued in the LOB application over the years, and that data is reloaded, we should see the data quality score improve, which means you will get more out of your investment in the new applications.

Summary

The key thing here isn't really how we implemented this solution. We were lucky that adding a few fields to CRM is dead easy, and you could implement this in a number of different ways. What is important about this approach is the idea of testing the data during the loading process, recording that quality score and, most importantly, making it very visible so that everyone has the same view.

The post Dealing with Bad Data in Integration Solutions appeared first on Microsoft Integration & Cloud Architect.

by michaelstephensonuk | Feb 13, 2017 | BizTalk Community Blogs via Syndication

One of my favourite things in Azure is the ability to group stuff into resource groups. Maybe it’s a reflection on myself but I hate looking at messy environments that look like a kid has just emptied their toy box over the floor.

With resource groups you have the ability to group related stuff together in a kind of sandbox. There are much more powerful features to resource groups, such as the ability to automate their setup and deployment, but even without considering those, the grouping on its own is very handy. Recently I was working with some of the support team on some knowledge transfer sessions to help them understand our integration platform. As you would expect when a group has limited experience with the cloud, there was an element of fear of the unknown, but when I started explaining how the complex platform was grouped into solutions to make it easier to understand, you could see this was a good first step. It was far better than showing them a million different components whose relationships they wouldn't be able to understand.

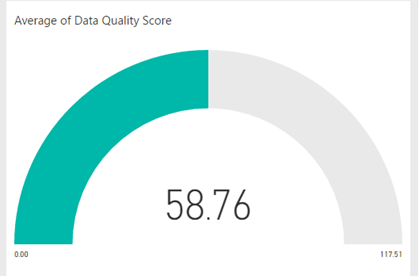

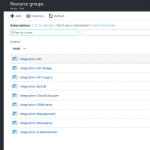

Below is a picture showing some of our resource groups.

In this particular environment, here is what some of them do:

- Integration-API = This contains app service components which support our API & Microservices architecture

- Integration-API-Bridge = This contains a web job which acts as a bridge between service bus queues and our API

- Integration-API-Legacy = This contains some older API style components which are expected to be deprecated soon

- Integration-BizTalk = This contains some Azure services which support some of our BizTalk applications, mainly App Insights and some storage. Note that our BizTalk instance is on premises in this case

- Integration-CloudDataLayer = We have some shared data which is stored in this resource group; it includes DocDB, Azure Storage and Azure Search

- Integration-CRMEvents = This contains some Web Jobs and Functions which handle events published from Dynamics CRM Online to Azure Service Bus

- Integration-Management = This contains some tools and widgets to help us monitor and manage the platform

- Integration-Messaging = This contains service bus namespaces for relay and brokered messaging

- Integration-ScheduledJobs = This contains Azure Scheduler to help us have a single place for triggering time based integration scenarios

As we use new Azure features, it helps to group these into new or existing resource groups so we have a clear way of logically partitioning the integration platform. I think this helps from a maintenance perspective and keeps your total cost of ownership down.

I wonder how other people are grouping their stuff in Azure for integration?

by michaelstephensonuk | Feb 13, 2017 | BizTalk Community Blogs via Syndication

While chatting to Jeff Holland on Integration Monday this evening, I mentioned some thinking I had been doing around how to articulate the various products within the Microsoft Integration Platform in terms of their codeless vs Serverless nature.

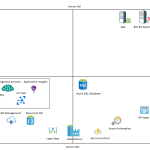

Below is a rough diagram where I brain-dump a rough idea of where each product would sit in terms of these two axes.

(Note: This is just my rough approximation of where things could be positioned)

If you look at the definition of Serverless, there are some obvious things like Functions which are very Serverless, but the characteristics that make something Serverless or not are a little grey in some areas, so I would argue that it's possible for one service to be more Serverless than another. Let's take Azure App Service Web Jobs and Azure Functions. These two services are very similar: they can both be event driven and neither has a physical server that you touch, but they have some differences. The Web Job is paid for in increments based on the hosting plan size and number of instances, whereas the Function is paid for per execution. Based on this I think it's fair to say that a lot of the PaaS services on Azure could fall into a catchment of having characteristics of Serverless.

When considering the codeless elements of the services, I have tried to consider both code and configuration elements. What differentiates one service from another is really how much code I need to write and how complex it is. If we take web apps, either on Azure App Service or IIS, you get a whole codebase and a lot of code to write to create your app. Compare this with Functions, where you tend to write a specific piece of code for a specific job, and there are unlikely to be loads of NuGet packages and code not aimed at the function's specific purpose.

Interesting Comparisons

If you look at the positioning of some products, there are some interesting comparisons. Let's take BizTalk and Logic Apps. I would argue that a typical BizTalk app would take a lot more developer code to create than a Logic App. I don't think you could really disagree with that. BizTalk Server also clearly has one of the more complex server dependencies, whereas Logic Apps is very Serverless.

If you compare Flow against Logic Apps you can see they are similar in terms of Serverless(ness). I would argue that for Logic Apps the code and configuration knowledge required is higher and this is reinforced by the target user for each product.

What does this mean?

In reality the position of a product in terms of Serverless or codeless doesn't really mean that much. It doesn't necessarily mean one is better than the other, but it can mean that in certain conditions a product with certain characteristics will have benefits. For example, if you are working with a customer who wants to lock down their SFTP site so that only 1 or 2 IP addresses can access it, you might find a server-based approach such as BizTalk makes this quite easy, whereas Logic Apps might not support that requirement.

The spectrum also doesn’t consider other factors such as complexity, cost, time to value, scale, etc. When making decisions on product selection don’t forget to include these things too.

How can I use this information?

OK, with the above said, this doesn't mean that you can't draw some useful things from considering the Microsoft Integration Stack in terms of codeless vs Serverless. The main one I would look at is thinking about the people in my organisation and the aims of my organisation, and how to make technology choices which are aligned to them. As ever, good architecture decision making is a key factor in a successful integration platform.

Here are some examples:

- If I am working with a company who wants to remove their on-premises data centre footprint, then I can still use server technologies in IaaS, but that company will potentially favour Serverless and PaaS type solutions because they reduce the operational overhead of their solutions.

- If I am working with a company who has a lot of developers and wants to share the workload among a wider team and minimize niche skill sets, then I would tend to look to solutions such as Functions, Web Jobs, Web Apps and Service Bus. This democratization of integration approach lets your wider team be involved in large portions of your integration developments.

- If my customer wants to take that further and empower the Citizen Integrator, then Flow and Power Apps are good choices. There is no Server-full equivalent to these in the Microsoft stack.

A few things you can certainly draw from the Microsoft stack are:

- There are lots of choices which should suit organisations regardless of their attitudes and preferences, and this reinforces the strength and breadth of the Microsoft Integration Platform through its core services and relationships to other Azure services.

- Azure has a very strong PaaS platform covering both codeless and coding solutions, which means there are lots of choices with Serverless characteristics that will reduce your operational overheads.

It would be interesting to hear what others think.

by michaelstephensonuk | Feb 7, 2017 | BizTalk Community Blogs via Syndication

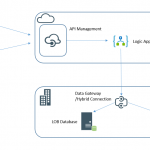

Recently I have been thinking about a sample use case where you may want to expose legacy applications via a hybrid API, but you have the challenge that the underlying LOB applications are not really fit for purpose as the sub-systems for a hybrid API. In this case we have some information held across 2 databases which are years old and clogged full of crappy technical debt which has festered over the years. Creating an API with a strong dependency on these would be like building on quicksand.

At the same time the organisation has a desire at some point (unknown timescale) to transition from the legacy applications to something new but we do not know what that will be yet.

I love these kinds of architecture challenges and it emphasises the point that there isn’t really such a thing as a target architecture because things always change so you need to think of architecture as always in transition and as a journey. If we are going to do something now we should add some thinking about what we might do later to make sure the journey is smooth.

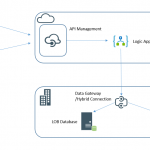

Expected Architecture

Below is a diagram of one of a number of ways you might expect to implement the typical hybrid API architecture using the Microsoft technology stack today. This would be one of the typical candidate architectures for consideration for this pattern in normal circumstances.

The problem with this architecture is mainly the dependency on the LOB systems, which means we inherit some very risky stuff for our API. For example, if we suddenly had a large number of users on the API (or even a small number) you could easily see these legacy systems creaking.

Proposed Architecture

What I was considering instead was the idea that if we can define a canonical data definition for the data which we know doesn’t change very often then we can sync the data to the cloud and the API would use that cloud data to drive the API. This means our API would be very robust and scalable. At the same time it also means we take all of the load away from the LOB applications so we do not risk breaking them.

Once the data is in a nice JSON-friendly format in the cloud, we have a distinct layer of separation between the API and the underlying LOB applications. For our future transition to replace the LOB applications, this means we just need to keep the JSON files up to date to support the API, which should be relatively simple to do.
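In the post this transformation is done by BizTalk; the Python sketch below is only meant to show the shape of the idea: rows from two legacy sources are merged into one canonical JSON document per course, keyed by the blob name the API will later serve. All column names (`CRS_CODE`, `MOD_CD` and so on), the canonical field names and the `courses/{code}.json` naming convention are hypothetical.

```python
import json
from typing import Dict, List


def to_canonical_courses(course_rows: List[Dict[str, str]],
                         module_rows: List[Dict[str, str]]) -> Dict[str, str]:
    """Merge rows from two legacy sources into one canonical JSON document per course.

    Returns {blob name: JSON text}, ready to be uploaded to cloud storage.
    """
    # Group module rows (from the second legacy database) by their course code.
    modules_by_course: Dict[str, List[Dict[str, str]]] = {}
    for row in module_rows:
        modules_by_course.setdefault(row["COURSE_CD"].strip(), []).append(
            {"code": row["MOD_CD"].strip(), "title": row["MOD_TITLE"].strip()}
        )

    documents: Dict[str, str] = {}
    for row in course_rows:
        code = row["CRS_CODE"].strip()  # legacy columns are often space-padded
        doc = {
            "courseCode": code,
            "title": row["CRS_NAME"].strip(),
            "modules": sorted(modules_by_course.get(code, []), key=lambda m: m["code"]),
        }
        documents[f"courses/{code}.json"] = json.dumps(doc, sort_keys=True)
    return documents


courses = [{"CRS_CODE": "CS101 ", "CRS_NAME": "Computing "}]
modules = [
    {"COURSE_CD": "CS101", "MOD_CD": "M2", "MOD_TITLE": "Databases"},
    {"COURSE_CD": "CS101", "MOD_CD": "M1", "MOD_TITLE": "Programming"},
]
print(to_canonical_courses(courses, modules)["courses/CS101.json"])
```

Because the API only ever reads these documents, replacing a LOB system later means rewriting this mapping step, not the API.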

At present I think BizTalk is our best tool for bending the LOB data to the JSON formats as it does dirty LOB integration very well and can be scheduled to run when required. In the future we may or may not use BizTalk to do the sync process, that’s a decision we will make at the time.

API Management to Blob Storage

Following on from my previous post, by using APIM and a well structured data model for the files in blob storage, it became very easy to expose those files as the API with zero code. We could simply use the URL template rewrite features to convert the client-friendly API proxy URL to the back-end Azure Blob Storage URL.

The net result is that our API would scale massively without too much trouble, have zero code, be able to use all of the APIM features, and give client applications a great way to search the course library without us worrying about the underlying LOB applications.
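In APIM itself the rewrite is configuration (for example the `rewrite-uri` policy with a URL template), not code, which is what keeps the API at zero code. Purely to illustrate the mapping such a rule performs, here is a Python sketch. The storage account, container, `index.json` file and route shapes are hypothetical, and how APIM is authorised to read the container is not covered here.

```python
import re
from typing import List, Tuple

# Hypothetical storage account and container holding the synced JSON files.
BLOB_BASE = "https://examplestorage.blob.core.windows.net/coursedata"

# (client-friendly API path pattern, blob path template), mirroring URL rewrite rules.
ROUTES: List[Tuple[re.Pattern, str]] = [
    (re.compile(r"/courses"), "courses/index.json"),
    (re.compile(r"/courses/(?P<courseId>[A-Za-z0-9-]+)"), "courses/{courseId}.json"),
]


def to_blob_url(api_path: str) -> str:
    """Translate an API proxy path into the back-end Azure Blob Storage URL."""
    for pattern, template in ROUTES:
        match = pattern.fullmatch(api_path)
        if match:
            return f"{BLOB_BASE}/{template.format(**match.groupdict())}"
    raise LookupError(f"No route for {api_path}")


print(to_blob_url("/courses/CS101"))
# → https://examplestorage.blob.core.windows.net/coursedata/courses/CS101.json
```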

The below video will demonstrate a quick walk through of this.

[embedded content]

by michaelstephensonuk | Dec 18, 2016 | BizTalk Community Blogs via Syndication

Cutting a long story short, I was exploring migrating a BizTalk 2013 solution to BizTalk 2016. The solution uses SFTP, and I wanted to migrate from the open source Blogical SFTP adapter to the out of the box SFTP adapter, which has had some enhancements. The documentation around this enhancement, and in particular the setup, is lacking. It will not work out of the box from a BizTalk install unless you additionally set up WinSCP. The problems are:

- The BizTalk documentation lists the wrong version of the dependency: you need 5.7.7, not 5.7.5

- The WinSCP pages cover lots of other versions, which can be confusing. While their documentation is good and detailed, it talks about using WinSCP with the GAC, which you will assume you need for BizTalk. This is a red herring, so don't read this bit unless you need to

- There is WinSCP and the WinSCP .NET library. I got confused between the two and didn't initially realise I needed both

To get this up and running the steps you need to follow are:

- Download WinSCP and the .net Library making sure you get the right versions

- Copy the .exe and .dll to the BizTalk installation folder

- DO NOT GAC anything. If you GAC the .NET library it will not work, because it expects WinSCP.exe to be in the same path; that's why they both go in the BizTalk installation folder

This is simple enough in the end, but I felt the easiest way to ensure you get the right version is to use NuGet to get version 5.7.7. To make your life even simpler, just use the PowerShell script below once you have set up your BizTalk environment and it will do it for you. You just need to set the path for your BizTalk install folder and where you want to download the NuGet packages to.

```powershell
# Parameters
$downloadNuGetTo = "F:\Software\WinSCP"
$bizTalkInstallFolder = "F:\Program Files (x86)\Microsoft BizTalk Server 2016"

# Make sure the download folder exists
New-Item -ItemType Directory -Path $downloadNuGetTo -Force | Out-Null

# Download NuGet
Write-Host "Downloading NuGet"
$sourceNugetExe = "https://dist.nuget.org/win-x86-commandline/latest/nuget.exe"
$targetNugetExe = Join-Path $downloadNuGetTo "nuget.exe"
Invoke-WebRequest $sourceNugetExe -OutFile $targetNugetExe

# Download the right version of WinSCP (5.7.7, not the 5.7.5 the BizTalk docs list)
Write-Host "Downloading WinSCP from NuGet"
& $targetNugetExe install WinSCP -Version 5.7.7 -OutputDirectory $downloadNuGetTo

# Copy WinSCP.exe and WinSCPnet.dll side by side into the BizTalk folder (do not GAC them)
Write-Host "Copying WinSCP files to the BizTalk folder"
Copy-Item (Join-Path $downloadNuGetTo "WinSCP.5.7.7\content\WinSCP.exe") $bizTalkInstallFolder
Copy-Item (Join-Path $downloadNuGetTo "WinSCP.5.7.7\lib\WinSCPnet.dll") $bizTalkInstallFolder
```
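Once the files are copied, a quick sanity check is that both of them really sit side by side in the BizTalk installation folder. The Python sketch below assumes you just want a presence check; it does not verify that the files are version 5.7.7, and the function names are hypothetical.

```python
import os
from typing import Iterable, List

# The two files that need to sit side by side in the BizTalk folder (not in the GAC).
REQUIRED_FILES = ("WinSCP.exe", "WinSCPnet.dll")


def missing_winscp_files(file_names: Iterable[str]) -> List[str]:
    """Return the required WinSCP files that are absent from a folder listing."""
    present = {name.lower() for name in file_names}  # Windows file names are case-insensitive
    return [name for name in REQUIRED_FILES if name.lower() not in present]


def check_biztalk_folder(biztalk_install_folder: str) -> List[str]:
    """List the BizTalk install folder and report any missing WinSCP files."""
    return missing_winscp_files(os.listdir(biztalk_install_folder))
```

For example, `check_biztalk_folder(r"F:\Program Files (x86)\Microsoft BizTalk Server 2016")` should return an empty list after the script above has run.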

by michaelstephensonuk | Nov 17, 2016 | BizTalk Community Blogs via Syndication

Today we have so many technologies available when it comes to developing integration solutions. In some ways things are a lot easier and in other ways things are harder. One thing is for sure: in technology there has been a lot of change. For many organisations, though, one thing that definitely has not changed is the challenges they face with the non-technical side of integration projects. For most companies, the technology you use to implement the project isn't the decisive factor that separates success from failure. Whether you choose vendor A or vendor B, as long as your team know how to use the technology they will usually be able to build stuff successfully. With that said, the thing organisations still struggle with is “how do we get the technology people to build something that does what we want it to do?”, and the IT organisation then has the challenge of how to live with that solution through its lifespan.

These are not technology problems, they are problems about communication, collaboration, documentation and allowing people the time to do stuff properly.

In my opinion two of the most common organisational challenges facing integration teams within a business today are:

- Shitty Requirements

Whenever I meet people around the world who are doing integration the one thing that seems to be a common challenge is that generally integration projects start off with a 1 line requirement. “I want to get this data from here to there”.

- Lack of Knowledge Sharing

The world of an IT department is generally a chaotic place, so the idea of giving people time to do stuff properly is never really a thing for many organisations. Think of the poor developers who barely finish writing code for one solution before they are shipped to the next project, while the department is generally surviving on all of the information in people’s heads.

For many organisations the thing that is really needed is the ability to collaborate around projects in a way that brings people together and artefacts and information into one place. In some ways this is a big culture shift for some organisations and for others the problem is the lack of tooling. For quite a while now I have been a fan of combining TFS for source code, work items, and other stuff with Confluence and a few other tools but the challenge around the tooling is often licensing, procurement processes and the fragmented nature of using a number of different tools. Recently however I have been playing with Microsoft Teams and I think this is a really good package which I can see helping a lot of organisations. First off there are many ways your company could use it, but in this post I would like to talk about how it could help an integration team. Before going any further here are a couple of links which are useful:

How can my Integration Team Use Teams

First off, in Microsoft Teams you could create a team and include the people in your integration team. I would recommend not storing sensitive data in the team area, because what you want to do is open up the transparency of your team so that your business users can work with you. Include your team members, but your stakeholders and key business contacts should be included too. These are the people you will need to capture information from, and you want them contributing to the team.

In terms of structuring your team in MS Teams I went for something like shown in the below picture.

Under the team you have Channels. I am thinking of using Channels as follows:

- One for architecture related to the integration platform

- One for infrastructure related to the integration platform

- 1 Channel per interface or integration solution you develop

You may also choose to put in channels for guidance and training and other stuff like that.

What’s in a Channel

The cool thing about a channel is you have a few customization options about what you can have in the channel. Out of the box you get the following:

- Conversations – This is a bit like a Slack/Yammer style conversation thread

- Files – This is a place to upload documents related to the channel

- Notes – This is a One Note workbook for the channel

Those are some really handy things, and you can also add other tabs to your channel, as in the graphic below:

My first thoughts are that you could use a SharePoint site as a tab to link to a site where you might store any sensitive stuff. You could also use Planner as a lightweight task board for to-do items related to the channel, or link to Team Services for more complex planning.

In general the basic channel provides a way to have conversations, documents and other stuff in a single place for a related context. Hallelujah! If we could have no more projects managed via email, the world would be a far less stressful place.

Channel Per Interface?

I mentioned above a few general channels for the bigger areas such as architecture and infrastructure, but one of the biggest wins could be a channel per interface. Imagine we had an interface which did a B2B style integration with a partner, sending a list of customer marketing preferences so they could do outsourced marketing for us. Think of the companies you have worked with that may have delivered such an interface. They will often have an interface catalogue, but it is usually just a spreadsheet listing the interfaces they have (or, more often, it doesn’t exist). Now ask the question “tell me everything about this interface”. I would guess that in Average Company Inc. the answer would be to make you sit with 1 person who is the subject matter expert on it, then 2 more people who are stakeholders and know a bit about it. If you’re lucky there might also be some documents, but I bet they get emailed to you, and there are probably a few other documents which kind of say the same stuff in a different way.

With MS Teams, having 1 channel per interface means this list can be our interface catalogue, but each channel can also be the holder for everything about that interface. Let’s have a look at what we could do:

Conversations

First off, with conversations, imagine all discussion about the interface happening in 1 place. No more email threads. The conversation would still be available 2 years in the future, when the original people on the project have left, so the new people can see the history of discussion around the interface. Below are some example conversations. Granted, this is just me, but the example comments are from a real project. In many projects the history of how a project or interface got from start to implementation is a goldmine of knowledge which often leaves the organisation when the project is over. Conversations help avoid this.

Files

Files provides a simple place for any documentation which relates to the interface. Ideally any internal documentation produced by the team would be in the One Note notes, which we will talk about in a minute. Often documentation is produced before your team is involved or is supplied by vendors, and it’s often a challenge to find somewhere to keep it. This file store alongside the interface is a great option.

Below is an example:

You might ask why MS Teams and not SharePoint. First off, I’m not a big fan of documents. They are often old, obsolete and incorrect. I much prefer the wiki, One Note and Confluence style of approach. That said, documents do still exist on projects, and keeping them close to the context where they are used means they don’t get lost or forgotten about. I think using SharePoint is fair enough if you need the added security etc. that it brings, but for many cases it’s probably a bit over the top and just adds more steps to maintaining effective documentation.

Notes

Having a One Note workbook in the team is really cool. I’m a big fan of using it to elaborate on the interface, flesh out requirements and then maintain this for the support team and dev team for the long term. One Note encourages lightweight, effective documentation. This is a view of how we can use it. The page structure could look like below:

I think this is a minimum set of pages which will help you structure your information effectively.

The high level requirements page can just be a table of requirements which are teased out of stakeholders and taken from conversations in the team space. It might look like below:

The features and scenarios page would help us to write Gherkin style stories of what we want the interface to do. These stories should be simple enough for everyone in the team to understand.

Next we might have message specifications. They could be JSON, XML, flat file, EDI, etc. The key thing is to include sample messages and definitions of the messages so we know the data is in the correct formats.
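Keeping a sample message next to its definition also makes it easy to check one against the other. As a lightweight illustration only (in practice you might use JSON Schema or an XSD), here is a Python sketch that checks a sample against a simple field/type definition. The message fields are hypothetical, loosely based on the customer marketing preferences interface mentioned earlier.

```python
import json
from typing import Dict, List

# Hypothetical definition of the marketing preferences message: field -> expected JSON type.
MARKETING_PREFERENCES_SPEC: Dict[str, type] = {
    "customerId": str,
    "emailOptIn": bool,
    "smsOptIn": bool,
}


def validate_sample(raw_json: str, spec: Dict[str, type]) -> List[str]:
    """Check a sample message against a simple definition; [] means it conforms."""
    message = json.loads(raw_json)
    if not isinstance(message, dict):
        return ["message should be a JSON object"]
    problems = []
    for field, expected_type in spec.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


sample = '{"customerId": "C001", "emailOptIn": true, "smsOptIn": "yes"}'
print(validate_sample(sample, MARKETING_PREFERENCES_SPEC))
# → ['smsOptIn should be bool']
```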

When it comes to the architecture element of your interface, I am a fan of the context, containers and components approach as a lightweight way of expressing the architecture of an interface. Although the diagrams below could probably be fleshed out a bit more, they will do for this example. In the One Note page I can start with some simple pen-drawn diagrams to illustrate the key points. This is shown below.

Later, when the project starts to stabilise, I might choose to draw the diagrams in a more formal way using Visio or Lucidchart, but early in the project you spend lots of time redrawing the diagram as things evolve, so let’s keep it simple and use pen. You can open the One Note page in the full One Note client to get the richer drawing experience.

In the interface design section we can again elaborate on the interface further and include some specifics on the implementation. Again in the early stages I can just use pen drawn diagrams if I want and later replace them.

In the code and deployment pages I’d simply document what the code does and how to deploy it. I’m also a fan of using videos, so we can record a video walk-through of the code, upload it to the Files section and provide a link to watch it.

In the support page this will be a 2 way set of documentation between your ops/DevOps team and everyone else who is a stakeholder around support aspects of the interface. Everyone should be able to contribute from things developers learn in development and ops people also learn post go live. An example is below:

The notes should really be the living documentation to support the interface through its lifecycle.

Plan

I like the idea of being able to have planning options associated with the interface, and there are some options here. First off, for a higher level plan I can link to a Team Services project and see this at team level, but another option I really like is Planner. If you’re not familiar with it, this is a feature in Office 365 which is a bit like Trello. It gives me a basic task board, and if I consider this to be at interface level it’s a great way to keep an eye on tasks at that level. You could include delivery tasks, bugs, technical debt clean-up and loads of other things specific to this interface. I think this is especially important after the initial release of the interface goes live, as it gives you a place to keep tasks that may not be done until some future time as an optimisation activity.

In the below picture it shows the simple task board for Planner created directly from our Team channel

Power BI

One of the challenges of changing the culture is how to get people contributing to the team, and one of the best ways to do this is to connect any reporting or MI related to the interface to the Team channel. MS Teams lets you have multiple Power BI tabs in the channel, so you can bring in team dashboards. In the picture below I have chosen to bring in a UAT and a Production dashboard for the interface, as this lets the team see how things are performing in test and live.

I mean how cool is that!!

What about Cross Functional Teams

If you watched my talk at Integrate in 2016, you may remember how I talked about organisations changing from having centralised integration teams to cross functional teams, which means those doing integration are all over the organisation. Well, the reality is that this approach takes all of the non-technical challenges organisations face and makes them worse, possibly with multiple teams doing their own thing in their own way.

With MS Teams you can treat the Integration Team as a virtual team which is comprised of people who are in different delivery teams. What you want is them working in a manner with respect to integration that is aligned and allows for teams to create, change and disappear without knowledge leaving the organisation or your interfaces becoming orphaned. Using MS Teams to collaborate as a virtual team is a great way to support your organisation doing cross functional team based development while allowing your integration specialists to have visibility and to apply governance across those teams.

Conclusion

I’m really excited about how I think MS Teams could be applied by integration teams to help solve some of the problems we face with many customers and organisations which fall into the culture, communication and collaboration space. Let’s face it, that space has a much bigger impact on the overall success of your project than anything technical will.

I’d love to hear how others are looking to use it.

by michaelstephensonuk | Nov 7, 2016 | BizTalk Community Blogs via Syndication

Tonight we had loads of people on the Integration Monday webcast, and a few people wanted the slides ASAP to share with their teams. As we do each week, we will post the video from tonight’s session and the slides on the link below tomorrow, once the video is processed, alongside all past events.

For those who wanted slides asap just sharing them here temporarily but do check back on the below link for the video: http://cscblogsamples.blob.core.windows.net/publicblogsamples/Integration%20monday%20-%20Product%20Group%20presentation.pdf

Past Events