by community-syndication | Oct 17, 2012 | BizTalk Community Blogs via Syndication

Have you heard that the Microsoft Surface is now available for pre-order?

Be one of the first to score the hottest Windows tablet! Register for any 5-day class before November 1st and when you attend you will receive a free Microsoft Surface from QuickLearn.

Visit QuickLearn’s Course Calendar and select your next course!

Take advantage of this offer while registering by using the promotion code: SURFACE.

This offer is only valid for 5-day courses from Kirkland, WA with a retail value of $2,995 or higher booked between October 17th and November 1st, 2012. Not valid when combined with any other offer or discount. Offer is for 32 GB Surface with Windows RT ($499 value). Does not include cover or accessories.

by community-syndication | Oct 17, 2012 | BizTalk Community Blogs via Syndication

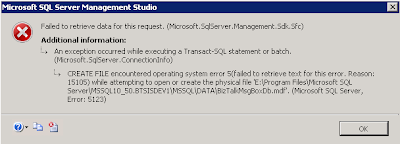

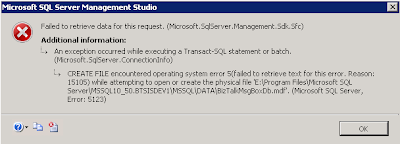

When trying to reattach a database I kept getting the following error:

The SQL log contained the error below. I got the same error when trying to attach the database with a T-SQL statement.

Msg 5120, Level 16, State 101, Line 3 Unable to open the physical file “E:\Program Files\Microsoft SQL Server\MSSQL10_50.BTSISDEV1\MSSQL\DATA\BizTalkMsgBoxDb.mdf”. Operating system error 5: “5(failed to retrieve text for this error. Reason: 15105)”.

After several attempts to solve this, I finally found the solution.

It turned out that all I needed to do was run SQL Server Management Studio as an Administrator.

How can it be that simple, yet have me looking for a solution for over an hour? :)

by community-syndication | Oct 17, 2012 | BizTalk Community Blogs via Syndication

I’ve been asked quite often of late what the best way to keep track of BizTalk WCF Services is, and I have found myself changing my mind quite a few times on this one (the previous incumbent involved creating WCF Service projects in my BizTalk solutions, but I’ve since gone sour on this idea) before I settled […]

Blog Post by: Johann

by community-syndication | Oct 17, 2012 | BizTalk Community Blogs via Syndication

I recently ran into an intermittent problem where we were receiving the following exception: System.InvalidOperationException: There were not enough free threads in the ThreadPool to complete the operation. in conjunction with a stack trace indicating we were well and truly down in the WCF ServiceModel code base. This resulted in numerous suspended messages, and a […]

Blog Post by: Brett

by community-syndication | Oct 16, 2012 | BizTalk Community Blogs via Syndication

This is a continuation of my previous article, Sending Soap with Attachments (SwA) using BizTalk. Please have a look if you haven’t seen it. Below are the steps that we have to follow for this exercise. 1. Create a custom message encoder which can send XML as an attachment in the SOAP envelope message. 2. […]

Blog Post by: shankarsbiztalk

by community-syndication | Oct 16, 2012 | BizTalk Community Blogs via Syndication

SOAP with Attachments is a very old technique that is still used in a lot of legacy systems. Newer technologies have emerged, like MTOM, which is widely used within the WCF arena. That said, there is no out-of-the-box support within WCF to send a SOAP message with attachments. An Austrian Microsoft Interoperability […]

Blog Post by: shankarsbiztalk

by community-syndication | Oct 16, 2012 | BizTalk Community Blogs via Syndication

I am happy to see that my contribution, a BizTalk custom pipeline component, has made it to the list. http://social.technet.microsoft.com/wiki/contents/articles/11679.biztalk-list-of-custom-pipeline-components-en-us.aspx My component is listed in the Transformation section: Mapper Pipeline Component. This gave me an idea of developing the transform concept in a pipeline component, wherein you don’t have to actually create a map for transforming […]

Blog Post by: shankarsbiztalk

by community-syndication | Oct 16, 2012 | BizTalk Community Blogs via Syndication

Last week, as part of Tellago’s Technology Update, I delivered a presentation about modern enterprise mobility powered by cloud-based, mobile backend as a service models. During the presentation we covered some of the most common enterprise mBaaS…

Blog Post by: gsusx

by community-syndication | Oct 15, 2012 | BizTalk Community Blogs via Syndication

We recently hit a problem on a project we were working on whereby we were forced to switch from using a native BizTalk database adapter to using the third-party Community Adapter for ODBC which has been refactored for BizTalk 2010 by TwoConnect. Having access to the source code allowed us the opportunity to work around […]

Blog Post by: Johann

by community-syndication | Oct 15, 2012 | BizTalk Community Blogs via Syndication

I am working on a project where we are uploading client files to Azure Blob Storage. Blob storage is perfect for this type of application and uploading them with public access is the default behavior. Therefore any anonymous client can read blob data out of your container. This doesn’t work so well for this situation. Luckily there is the Shared Access Signature feature.

The Shared Access Signature feature lets you grant permissions and policies, along with a time window during which they are valid. You can also modify or revoke the permissions as necessary. This is important because Azure storage works by providing a password (the shared key) for your storage account. Anyone who has that key has access to, and effectively ownership of, your storage account. That is why you can’t give out your shared key. The only exception to this is, of course, if you mark your container public. The Shared Access Signature feature allows you to give permissions but still keep control over security.

So, Shared Access Signatures let us separate the code that signs a request from the code that executes it. The signature is implemented as a set of query string parameters that prove that the creator of the URL is authorized to perform the operations. The following MSDN article shows how to manually create the signature string. It is here just so that you can see what is provided and how the string is made up. In addition, there is another MSDN article that shows how to create a shared access signature and provides the following graphic to show the parts that make up the query string.
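To make the parts of the query string a little more concrete, here is a minimal sketch that signs a container-level policy by hand using only the .NET base class library. The class and method names (`SasSketch`, `Sign`, `BuildContainerSasQuery`) are hypothetical and not part of the storage client library. The field order of the string-to-sign is an assumption based on the older service versions that did not sign a version field, so check it against the MSDN article before relying on it. In practice the `GetSharedAccessSignature` call used later in this post does this work for you.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper, for illustration only.
public static class SasSketch
{
    // Computes Base64(HMAC-SHA256(accountKey, UTF8(stringToSign))).
    public static string Sign(string stringToSign, string base64AccountKey)
    {
        byte[] key = Convert.FromBase64String(base64AccountKey);
        using (var hmac = new HMACSHA256(key))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return Convert.ToBase64String(hash);
        }
    }

    // Builds the query string for a container-level signature (sr=c).
    // expiryUtc must already be a UTC value.
    public static string BuildContainerSasQuery(
        string account, string container, string permissions,
        DateTime expiryUtc, string base64AccountKey)
    {
        string expiry = expiryUtc.ToString(
            "yyyy-MM-ddTHH:mm:ssZ", CultureInfo.InvariantCulture);

        // ASSUMPTION: fields are permissions, start, expiry,
        // canonicalized resource, identifier, separated by newlines.
        // Start time and identifier are left empty in this sketch.
        string stringToSign = string.Join("\n",
            permissions, "", expiry, "/" + account + "/" + container, "");

        string signature = Sign(stringToSign, base64AccountKey);

        return "?se=" + Uri.EscapeDataString(expiry)
             + "&sr=c"
             + "&sp=" + permissions
             + "&sig=" + Uri.EscapeDataString(signature);
    }
}
```

Calling `SasSketch.BuildContainerSasQuery("myaccount", "mycontainer", "rw", DateTime.UtcNow.AddMinutes(30), key)` returns a string containing the `se` (expiry), `sr` (resource type), `sp` (permissions) and `sig` (signature) parameters. The account and container names here are placeholders.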

How can we code the blob access (upload and download) to include the Shared Access Signature?

The following code shows how to upload a file to blob storage using a Shared Access Signature:

public string UploadBlobToAzure(string fileName)

{

if (fileName == null)

{

throw new ArgumentNullException("fileName");

}

try

{

// create storage account

string storageConnnectString = string.Format(

"DefaultEndpointsProtocol={0};AccountName={1};AccountKey={2}",

"http", ConfigurationManager.AppSettings["storageAccountName"],

ConfigurationManager.AppSettings["storageAccountKey"]);

var account = CloudStorageAccount.Parse(storageConnnectString);

// create blob client

CloudBlobClient blobStorage = account.CreateCloudBlobClient();

//Get the container. This is what we will attach the signature to.

CloudBlobContainer container = blobStorage.GetContainerReference("<storagecontainername>");

container.CreateIfNotExist();

//Create the shared access permissions and policy

BlobContainerPermissions containerPermissions = new BlobContainerPermissions();

string sas = container.GetSharedAccessSignature(new SharedAccessPolicy()

{

SharedAccessExpiryTime = DateTime.UtcNow.AddMinutes(30), Permissions =

SharedAccessPermissions.Write | SharedAccessPermissions.Read

});

//Turn off public access

containerPermissions.PublicAccess = BlobContainerPublicAccessType.Off;

container.SetPermissions(containerPermissions);

string uniqueBlobName = string.Format(@"<storagecontainername>/<filename>_{0}.config",

Guid.NewGuid().ToString());

//assign the shared access policy

CloudBlobClient blobClient = new CloudBlobClient(account.BlobEndpoint, new

StorageCredentialsSharedAccessSignature(sas));

CloudBlob blb = blobClient.GetBlobReference(uniqueBlobName);

//upload the file

blb.UploadFile(fileName);

System.Console.WriteLine("File successfully uploaded to " + blb.Uri);

return "File successfully uploaded to " + blb.Uri;

}

catch (StorageClientException e)

{

return "Blob Storage error encountered: " + e.Message;

}

catch (Exception e)

{

return "Error encountered: " + e.Message;

}

}

Once you upload the file then you can use the following code to access that file and download it locally:

public string DownloadBlobfromAzure(string fileName)

{

if (fileName == null)

{

throw new ArgumentNullException("fileName");

}

try

{

// create storage account

string storageConnnectString = string.Format(

"DefaultEndpointsProtocol={0};AccountName={1};AccountKey={2}",

"http", ConfigurationManager.AppSettings["storageAccountName"],

ConfigurationManager.AppSettings["storageAccountKey"]);

var account = CloudStorageAccount.Parse(storageConnnectString);

// create blob client

CloudBlobClient blobStorage = account.CreateCloudBlobClient();

CloudBlobContainer container = blobStorage.GetContainerReference("<storagecontainername>");

container.CreateIfNotExist();

//Create the shared access permission and policy

BlobContainerPermissions containerPermissions = new BlobContainerPermissions();

string sas = container.GetSharedAccessSignature(new SharedAccessPolicy()

{

SharedAccessExpiryTime = DateTime.UtcNow.AddMinutes(30),

Permissions = SharedAccessPermissions.Write | SharedAccessPermissions.Read

});

//Turn off public access

containerPermissions.PublicAccess = BlobContainerPublicAccessType.Off;

container.SetPermissions(containerPermissions);

string uniqueBlobName = fileName;

//Assign the shared access policy

CloudBlobClient blobClient = new CloudBlobClient(account.BlobEndpoint, new

StorageCredentialsSharedAccessSignature(sas));

//Get a reference to the blob object

CloudBlob blb = blobClient.GetBlobReference(uniqueBlobName);

string fileLocation = @"C:\Documents\";

int pos = fileName.IndexOf('/');

string fname = fileName.Substring(pos + 1, fileName.Length - pos - 1 );

//Download the blob object

blb.DownloadToFile(fileLocation + fname);

System.Console.WriteLine("File successfully downloaded to " + fileLocation + fname);

return "File successfully downloaded to " + fileLocation + fname;

}

catch (StorageClientException e)

{

return "Blob Storage error encountered: " + e.Message;

}

catch (Exception e)

{

return "Error encountered: " + e.Message;

}

}

You may have noticed that when we created the policy we didn’t specify a SharedAccessStartTime property. We can specify the time at which the policy becomes valid, or we can leave it off, in which case the policy goes into effect immediately.
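The start and expiry behavior can be modeled with a few lines of plain C#. This is only a sketch of the semantics described above, not the service's actual validation code. `SasWindowSketch`, `IsActive` and `StartWithSkew` are hypothetical names, and treating the expiry time as exclusive is an assumption.

```csharp
using System;

// Hypothetical model of the policy time window, for illustration only.
public static class SasWindowSketch
{
    // A null start time means the policy is valid immediately.
    // ASSUMPTION: the expiry instant itself is treated as expired.
    public static bool IsActive(DateTime? startUtc, DateTime expiryUtc, DateTime nowUtc)
    {
        bool started = !startUtc.HasValue || nowUtc >= startUtc.Value;
        return started && nowUtc < expiryUtc;
    }

    // If you do set a start time, backdating it slightly guards against
    // small clock differences between your machine and the service.
    public static DateTime StartWithSkew(DateTime nowUtc, TimeSpan skew)
    {
        return nowUtc - skew;
    }
}
```

With this model, a policy with no start time and an expiry 30 minutes away is active right now, while one whose start time is five minutes in the future is not. That is why a start time set to exactly "now" can be rejected if your clock runs slightly ahead of the server's.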

NOTE: The file names are case sensitive. If you attempt to find a blob and are not using lower case for the file name, you will receive the following error:

Outer Exception

Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.

Inner Exception Message

{"The remote server returned an error: (403) Forbidden."}

Blog Post by: Stephen Kaufman