by Rob Callaway | Apr 28, 2017 | BizTalk Community Blogs via Syndication

On April 26th 2017, Microsoft released Feature Pack 1 (FP1) for BizTalk Server 2016 and it’s been a while since I was this excited for a BizTalk Server release. Yeah, I just said that. I’m more excited for this feature pack than I was for BizTalk Server 2016 or even 2013 and 2013 R2, and here’s why… this is the first ever Feature Pack for any release of BizTalk Server, and is setting a precedent that we have never seen before in the 16+ years of the product.

A feature pack is a release of new non-breaking features for the product. These are not bug fixes or anything like that (those are distributed quarterly through Cumulative Updates). These are brand-new features that extend the product in new ways and help customers get the most out of their BizTalk Server investment.

The product team has confirmed that other feature packs are in the works, but they have not publicly confirmed when we can expect them. In discussions I’ve had with Tord Glad Nordahl (a program manager at Microsoft and longtime lover of BizTalk Server), he said:

“If it takes 6 months to build new features there will be another feature pack in 6 months, and if it takes 2 months there will be a new one in 2 months.”

My takeaway is that the team’s goal is to offer real answers to problems that customers face in the timeliest manner possible.

I’m excited that the BizTalk Engineering team at Microsoft will be releasing new features on a more regular cadence. It’s awesome that they are building new features to address long-overlooked issues and not making us wait another 2 years to get our hands on them.

FP1 is available to customers with Software Assurance who are using the Developer or Enterprise editions of BizTalk Server 2016.

FP1 introduces some innovative new features to BizTalk Server and addresses some longstanding concerns that many customers have had. The new features break down into three categories.

Deployment

Anyone who’s worked with BizTalk Server knows that the deployment/ALM story has left something to be desired. For years, the “official” deployment story has been to deploy applications using BizTalk MSI Packages. Although the BizTalk MSIs are pretty easy to use and they work well for simple applications, they tend to be inflexible and break with the complexities of a real application in the real world.

For example, to create a BizTalk MSI Package I have to deploy all my assets to the BizTalk Server Management database and then I can generate an MSI with those assets. It sounds easy and it is. The issue is that if I introduce a new assembly, or port, or anything at all, I have to add that new resource to the BizTalkMgmtDb and then generate a new MSI. In the modern world of DevOps and continuous integration/deployment, the standard MSI-based deployment is pretty cumbersome and most teams wanting to adopt those types of strategies need a different answer.

In the past, those teams have used community-designed tools such as the BizTalk Deployment Framework to automate building an MSI from source code repositories (like most modern ALM solutions), thereby eliminating the need to deploy to a BizTalk Server system to create the deployment package. Feature Pack 1 for BizTalk Server 2016 introduces two new features that will serve as a foundation for more sophisticated deployment strategies in the future (which my inside sources at Microsoft have confirmed are coming in later feature packs).

- Deploy with VSTS – Enable Continuous Integration to automatically deploy and update applications using Visual Studio Team Services (VSTS).

For anyone who has used the build/release features of TFS or VSTS, this will be immediately familiar. This is a deployment task that you add to your release pipelines to deploy new or redeploy/update existing BizTalk Server applications.

If you haven’t used the build/release features of TFS or VSTS, check out this post where I explain how to use those features to enable continuous release for a Logic App.

- New management APIs – Manage your environment remotely using the new REST APIs with full Swagger support.

Imagine having RESTful web APIs for updating, adding, or querying the status of your BizTalk Server applications and their resources… now stop imagining it because it’s a real thing!
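To give a feel for what scripting against such an API could look like, here is a minimal Python sketch that only builds a request and does not send it. The base URL, the `Applications` resource name, and the helper `build_request` are assumptions made for illustration; the Swagger definition that ships with the feature pack is the authoritative list of operations. Authentication is deliberately left out.

```python
import urllib.request

# Assumed base URL for the management service; adjust it to your installation.
BASE_URL = "http://localhost/BizTalkManagementService"


def build_request(resource: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request for a management resource."""
    url = f"{base_url.rstrip('/')}/{resource.lstrip('/')}"
    return urllib.request.Request(
        url,
        headers={"Accept": "application/json"},
        method="GET",
    )


# "Applications" is an assumed resource name, used here only as an example.
request = build_request("Applications")
print(request.get_method(), request.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) would additionally need whatever credentials your environment requires.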

Analytics

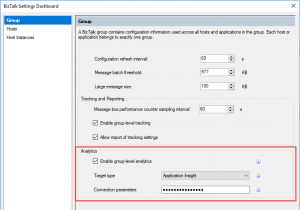

The tracking capabilities in BizTalk Server are extensive, but the configuration is often unintuitive, and no one likes digging through the BizTalkDTADb for the instance data they need.

FP1 enables you to send your tracking data to Azure Application Insights and feed operational data (subscriptions, batching status, message instance counts, etc.) to Power BI.

Runtime

If I’m being completely honest, the two features in this runtime category weren’t really on my radar at all until Tord Glad Nordahl stopped by one of my classes last month and discussed them with the students. But now that I’ve seen them, I’m excited for the potential and happy that customers with these requirements are getting some much needed love.

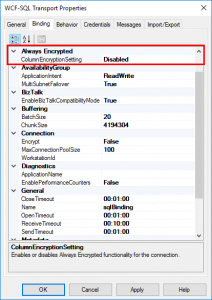

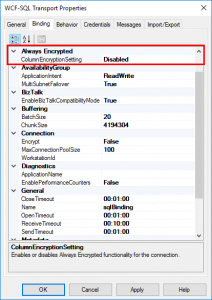

- Support for Always Encrypted – Use the WCF-SQL adapter to connect to SQL Server secure Always Encrypted columns.

Basically, SQL Server 2016 introduced a feature that enables client applications to read/write encrypted data within a SQL table without actually providing the encryption keys to SQL Server. This gives a new level of data security since the owners of the secure data (i.e., the client applications) can see it, but the manager of the data (i.e., SQL Server) cannot.

This ensures that on-premises or cloud database administrators or other high-privileged (but unauthorized) users cannot access the sensitive data.

With Feature Pack 1 of BizTalk Server 2016, the WCF-SQL adapter now offers an Always Encrypted property where you can simply enable or disable the feature as your needs dictate.

WCF-SQL Adapter Always Encrypted property as introduced in BizTalk Server 2016 Feature Pack 1

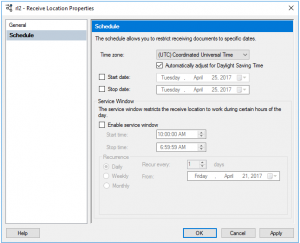

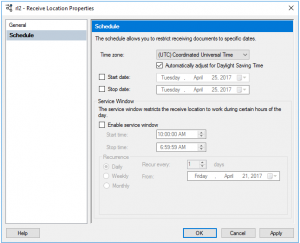

- Advanced Scheduling – Set up advanced schedules for BizTalk receive locations.

The Schedule page of receive locations has additional options for shifting time zones and setting up recurrence schedules.

BizTalk Server receive location advanced scheduling options as introduced in BizTalk Server 2016 Feature Pack 1

As always, the QuickLearn Training team is already looking for the best ways to incorporate these new features into our courses, but until we do you should grab the Feature Pack and give these new features a spin for yourself. While you’re at it, go to the BizTalk Server User Voice page and vote for the features that you’d like to see in the next feature pack, or if you have an original idea for a feature, add it there and see how much love it gets.

by Rob Callaway | Mar 2, 2017 | BizTalk Community Blogs via Syndication

A few weeks ago, QuickLearn Training hosted a webinar with an overview of a few of the new features in BizTalk Server 2016. This post serves as a proper write-up of the feature that I shared. In this write-up, I’d like to drill a little deeper into the things that were discussed and even explore some other aspects that we simply didn’t have time for. In my portion of the webinar, I spoke about using the XslCompiledTransform class in maps. If you missed the full webinar, check it out over on the QuickLearn Training YouTube channel. If you prefer you can just watch the section on the XslCompiledTransform class.

There are so many resources comparing these .NET classes against one another that I’m really not sure that there’s anything I can add to the discussion. The best of these resources is the one I cited in the webinar by Anton Lapounov. Simply put, if you put these two classes in a straight-up foot race, the XslCompiledTransform is going to take longer to load, but will blow away the XslTransform class when it comes to the actual time to transform.

Now, if you’ve closely followed the features of other releases you may think this isn’t actually a new feature, and you would be mostly correct. You see, in BizTalk Server 2013 the mapping engine was changed to utilize the XslCompiledTransform class instead of the XslTransform class that the mapping engine had been using since BizTalk Server 2004. While this change was made to reap the performance benefits of the XslCompiledTransform class over the XslTransform class, it was a change that Microsoft made unilaterally to all BizTalk maps compiled for BizTalk Server 2013. While the intention was pure, this change wasn’t universally welcomed by BizTalk developers. There are several great write-ups exploring issues that arose in existing maps when updated (for example this great blog post from Dan Rosanova, or just the Known Issues for BizTalk Server 2013). In case you’re pressed for time and can’t read those, the issues arise from the differences in behavior between the XslCompiledTransform class and the XslTransform class. The specific differences are:

- If an input XML field is empty or contains a false value, the Scripting functoid will treat the input as true

- The XslCompiledTransform class only supports calling public methods

- The XslCompiledTransform class does not support returning null

- In the XslCompiledTransform class, function overloads are differentiated by number of parameters rather than types

- The XslCompiledTransform class utilizes the XPathArrayIterator type rather than the XPathSelectionIterator type for looping through repeating records within the Scripting functoid. Your script must call the MoveNext() method to advance properly.

While these five changes may seem relatively trivial, for some people they presented issues that completely broke their maps. There is a registry setting which allows you to tell the mapping engine to use the XslTransform class, but the change is applied globally to all maps and negates the potential performance gains offered by the XslCompiledTransform.

BizTalk Server 2016 now surfaces the option for the transformation class as a UI element. Furthermore, the transformation class isn’t specified globally, but instead we can set it for each map separately. So, any maps I’m upgrading that are negatively affected by the XslCompiledTransform class can target the XslTransform class. This ability to opt-in or opt-out gives us true backward compatibility that was sorely missing in the previous releases.

By default, any new or existing maps that you are upgrading will target the XslTransform class (for backward compatibility), but setting the transform class for the map couldn’t be easier. In your map, if you go to the Properties for the map grid, you will see a new property named Use XSL Transform (the default is True and indicates that the XslTransform class will be used). If you change it to False, the XslCompiledTransform is targeted instead. There is a third option for the property, Undefined. If you choose this option, that map will check the same registry settings used in earlier releases to control the transformation class.

Configuring the Use XSL Transform property
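The three values of the property boil down to a small decision rule, sketched below in Python purely as an illustration. The enum, the function name, and the `registry_uses_xsl_transform` flag are hypothetical stand-ins; in particular, the flag represents the outcome of the registry lookup from earlier releases and does not name a real key.

```python
from enum import Enum


class UseXslTransform(Enum):
    """The three values described for the map's Use XSL Transform property."""
    TRUE = "True"
    FALSE = "False"
    UNDEFINED = "Undefined"


def resolve_transform_class(setting: UseXslTransform,
                            registry_uses_xsl_transform: bool) -> str:
    """Return the name of the transformation class a map would target.

    registry_uses_xsl_transform is a hypothetical stand-in for the registry
    setting from earlier releases; it is consulted only for UNDEFINED.
    """
    if setting is UseXslTransform.TRUE:
        return "XslTransform"
    if setting is UseXslTransform.FALSE:
        return "XslCompiledTransform"
    # UNDEFINED: defer to the machine-wide registry setting.
    return "XslTransform" if registry_uses_xsl_transform else "XslCompiledTransform"


for setting in UseXslTransform:
    print(setting.value, "->", resolve_transform_class(setting, registry_uses_xsl_transform=False))
```

The point of the sketch is that only the Undefined value makes the map depend on machine-wide configuration; the other two values pin the choice to the map itself.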

I don’t really want to rehash my test process or results, as you can watch the webinar or download the slides to get all of that. Instead, I wanted to answer a question that was posed in the webinar that I was unprepared to answer. Niyati asked if the increase in performance would have been the same if I were calling the map in an orchestration. This configuration was not part of my initial round of tests, but I cracked open my solution, and created an orchestration so I could definitively show whether it was true or not.

My orchestration has the simplest design possible. It receives the message, runs the map, and sends the transformed message out.

Orchestration used to test the XslCompiledTransform map

I made one of these babies that runs the XslCompiledTransform map, and another that runs the XslTransform map.

I went crazy in my testing and processed 10,000 instances of each orchestration using my batch file submission method. I’m not going to write a full-blown analysis of the results because I think the numbers speak for themselves.

The XslCompiledTransform class once again executed much faster

I had a lot of fun exploring transformation classes in preparation for this webinar and I really look forward to the next one. Please be on the lookout for details on that webinar in the coming weeks.

If you need to learn more about measuring and analyzing BizTalk Server performance, tuning performance, or controlling the throttling behaviors in BizTalk Server you really should check out our BizTalk Server Administrator Deep Dive course.

by Rob Callaway | Jan 20, 2017 | BizTalk Community Blogs via Syndication

Yesterday the QuickLearn Training team got together to host a webinar for other members of the integration community to reflect on a few of the new features in BizTalk Server 2016. Rather than trying to cram all of the features into a 1-hour webinar, we focused on three of the new features and explored the fun implications of each.

- SAS Authentication for Azure Relays

- Using the XslCompiledTransform Class in BizTalk Maps

- Enhancements to the management experience

If you missed it, you can find it on the QuickLearn Training YouTube channel. We will be releasing detailed write-ups of each of these topics in the coming weeks.

This was just part one of a series of Webinars that we are planning. Keep an eye out for more details on the next webinar via our Twitter account.

by Rob Callaway | Dec 1, 2016 | BizTalk Community Blogs via Syndication

Introduction

This blog is usually reserved for technical posts and QuickLearn Training announcements, but something happened across my Facebook feed a while back and I’ve found myself revisiting it in my mind over and over so I have some thoughts / predictions / musings that I want to express.

Some Background

I’ve been training people how to be BizTalk Server developers and administrators since 2005. That’s a pretty long time; and in that time I’ve hit the job market looking for a new position on only a couple of occasions because I really love my job.

But I know that I’m one of the lucky ones. There are plenty of people out there looking to advance their careers. Others who hate the company they work for. Plenty of people feel stuck in dead-end positions. And there’s definitely a few looking to completely start over.

What’s the Point?

This brings me to my point. If any of that sounds like you, or someone you know, check out LinkedIn’s “Top Skills That Can Get You Hired in 2017” blog post (this is the thing that I saw on my Facebook feed). In it they list the top 10 skills based on the jobs listed on LinkedIn in 2016.

Of course, as someone who specializes in integration, I was pleased as punch to see Middleware and Integration Software in the #4 spot globally. Furthermore, Cloud and Distributed Computing is in the #1 spot (not surprising).

Naturally I couldn’t help but think of Logic Apps since it’s the convergence of those two categories. Logic Apps are in a position to change the game for a lot of organizations and people. I think we’re going to see a dramatic increase in the number of organizations / development teams looking for “cloud” developers with an integration background.

Don’t Tell Me BizTalk Is Dead, Because It’s Not

Just because that flashy new cloud-based integration platform comes rolling down the street doesn’t mean I’ve forgotten about BizTalk Server (my first love). Microsoft has increased their investment in BizTalk Server over the past 2 years, and just released BizTalk Server 2016 (I’m still waiting for Nick Hauenstein to start writing about all the new features). In the past year, Microsoft has changed its tune regarding Azure.

The new buzzword is Hybrid. I don’t want to dismiss that as a buzzword though. Hybrid (or more specifically, Hybrid Integration) is blending new Azure or cloud-based systems with existing on-premises systems. No one is going to abandon all of their on-premises investments overnight to adopt a cloud platform. The companies that are moving to the cloud are doing so slowly and deliberately one system / project at a time. No one is saying “Pack everything up Ted, we’re moving to the cloud.” Instead cloud services are used for new development.

As more workloads start running in the cloud, organizations need skilled people to connect those cloud services to data and services that live on-premises. BizTalk Server is a prime candidate to be your hybrid integration platform. Gartner estimates that by 2020, 75% of large organizations will have a hybrid integration platform. Those companies are going to need savvy integration professionals to build those platforms.

We Live in a Connected World

Our world seems to get more connected day-by-day. Mobile apps and IoT (Internet of Things) have changed the way people live their lives and neither is fading away any time soon. Oh yeah, I almost forgot to mention that in the LinkedIn article, Mobile Development holds the #7 spot.

That Gartner report I referenced a second ago states that 70% of mobile app development costs are related to integration and that integration represents 50% of the cost in IoT solutions. You know that all these systems don’t magically connect to each other. Someone has to build those connections, and that someone could be you.

Becoming a Unicorn

That sea-change the cloud was supposed to bring… it’s here. Companies have started adopting cloud technologies and they aren’t going to stop. As integration professionals, we are in a unique position to capitalize on this change. But with the demand as high as it is, you’re going to have to stand out. If your skills included integration (on-premises and cloud) + cloud development + mobile development, you’d be poised to land some of the most coveted jobs.

I didn’t intend for this to be a sales pitch, but if you need help getting there, QuickLearn Training can help you out. Our courses on BizTalk Server (updated for BizTalk Server 2016 starting in January 2017) and our Cloud-Based Integration Using Azure Logic Apps course will equip you with the deep skills you need to become the elusive unicorn that companies are looking for.

On the other side of the coin, if you’re looking to get some unicorns on your team, they are hard to find and will come at a cost. Honestly, you’re probably better off making your own unicorn. Time and again I hear from customers about horror stories where they hired someone who wasn’t a good fit. Or the consultant they contracted with disappeared and now they are stuck without support. I genuinely think the best option for most teams or organizations is to find the person you want and then help them gain the skills you need.

I’m not boasting when I say that I’ve had more than a handful of students tell me that my course(s) helped them find a direction for their career; if anything it is a rather humbling experience to realize that you have played a role in changing their lives. As a trainer, I love that my job is to make other people’s lives better, and I’d like to help make yours better too.

I know that I speak for everyone here at QuickLearn Training when I say, make 2017 awesome by becoming a unicorn!

by Rob Callaway | Jun 9, 2016 | BizTalk Community Blogs via Syndication

One of the concerns that I have repeatedly heard from customers when we talk about Azure is application lifecycle management. If you do most of your resource deployment and management using the Azure Portal, then you probably picture a very manual migration process if you wanted to move your app from dev to test, or if you wanted to share your app with another developer.

A clear example of this occurred during a run of QuickLearn’s Cloud-Based Integration Using Azure App Service course when my students were quick to see that the Logic Apps they created were pretty much stuck where they created them. Moving from one resource group to another was impossible at the time, and exporting the Logic App (and all the API Apps it depended on) was only a dream, so the only option was to redo all your work in order to create the Logic App in another resource group or subscription.

Logic Apps and Azure App Service have come a long way since then and the QuickLearn staff has been working its collective noodle to come up with application lifecycle management guidance for Logic Apps using the tools that are available today, which will hopefully improve the way you go about deploying and managing your Logic Apps.

Some readers may already be aware of the Azure Resource Manager or ARM for short. For those who haven’t previously met my little friend I’ll give a short introduction of ARM and the tools that exist around it. ARM is the underlying technology that the Azure Portal uses for all its deployment and management tasks. For example, if you create any resource within a new Resource Group using the Portal it’s really ARM behind the scenes orchestrating the provisioning process.

“Great Rob, but why do I care?”

I’ll tell you why. There are tools designed around ARM that make it not only possible, but down-right easy to run ARM commands. For example, you can get the Azure PowerShell module or the Azure Command Line Interface (CLI) and script your management tasks.

There’s a little more to it though. You see, those Azure resources (Logic Apps, Resource Groups, Azure App Service plans, etc.) are complex objects. Resource Groups, for example, have dozens of configurable properties and serve as containers for other objects (e.g., Web Sites, API Apps, Logic Apps, etc.). Let’s not oversimplify reality; your cloud applications aren’t made up of a single resource, but instead are many resources that work in tandem. Therefore, any deployment or management strategy needs to bear that in mind. If you want to pull back the covers on your own resources, head over to the Azure Resource Explorer and you’ll see what I’m talking about.

“It’s nice to have a command that I can run in a console window to create a Resource Group, but I need more than that!”

You’re right. You do need more than that. The way you get more is using ARM Templates. ARM Templates provide a declarative way to define deployment of resources. The ARM Template itself is a JSON file that defines the structure and configuration of one or more Azure resources.

“So how do I get one of these templates?”

There are several ways that you can get your hands on the ARM Template that you want.

- Build it by hand – The template is a JSON file so I guess if you understand the schema of the JSON well enough you could write an ARM Template using Notepad, Kate, or Visual Studio Code. This doesn’t seem very practical to me.

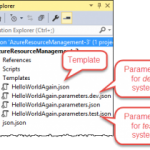

- Use starter templates – The Azure SDK for Visual Studio includes an Azure Resource Group project type which includes empty templates for an array of Azure resources. These templates are actually retrieved from an online source and can be updated at any time to include the latest resources. This looks a lot more viable than using Notepad, but in the end you are still modifying a JSON file to define the resource that you want.

- Export the template – You can export existing resources into a new ARM Template file. The process varies slightly from one type of resource to the next but you essentially go to the resource in the Azure Portal and export the resource to an ARM Template file. Sadly, at the time this article is being written this is not supported for Logic Apps, but Jeff Hollan has a custom PowerShell cmdlet that he built to export a Logic App to an ARM Template file.

One more thing — these templates are designed to utilize parameter files, so any aspect of the resource you’re deploying could be set at deploy-time via a parameter in a parameter file. For example, the pricing tier utilized by your App Service plan might be Free in your development environment and Standard in your test environment. The obvious approach is to create a different parameter file for each environment or configuration you want to use.
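To make the template-plus-parameter-files idea concrete, here is a small Python sketch that builds one minimal template and a parameter file per environment, then prints the parameter files as JSON. The resource names, file names, and the `parameter_file` helper are hypothetical, and the schema URLs, API version, and SKU codes (`F1` for Free, `S1` for Standard) are my assumptions about a typical App Service plan template rather than something taken from a specific project.

```python
import json

# A minimal ARM template: one App Service plan whose pricing tier is a parameter.
template = {
    "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "planName": {"type": "string"},
        "skuName": {"type": "string", "defaultValue": "F1"},
    },
    "resources": [
        {
            "type": "Microsoft.Web/serverfarms",
            "apiVersion": "2015-08-01",
            "name": "[parameters('planName')]",
            "location": "[resourceGroup().location]",
            "sku": {"name": "[parameters('skuName')]"},
        }
    ],
}


def parameter_file(plan_name: str, sku_name: str) -> dict:
    """Build the content of a parameter file for one environment (hypothetical helper)."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "planName": {"value": plan_name},
            "skuName": {"value": sku_name},
        },
    }


# One parameter file per environment; the template itself never changes.
environments = {
    "dev": parameter_file("integration-dev-plan", "F1"),    # Free tier
    "test": parameter_file("integration-test-plan", "S1"),  # Standard tier
}

for name, params in environments.items():
    print(f"--- azuredeploy.parameters.{name}.json ---")
    print(json.dumps(params, indent=2))
```

Keeping the environment differences in the parameter files means the same template can be promoted unchanged from dev to test.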

“I see what you did there… So now what?”

Well, now you’ve got your template and a way to represent the differences in environments as your application flows through the release pipeline, and you have an easy and repeatable way to deploy your resources wherever and whenever you want. The only thing that’s missing is the tooling to perform the deployment.

As mentioned above, you could use the Azure PowerShell tools or Azure CLI to create scripts that you manually execute. Those Visual Studio ARM Template projects even include a pre-built PowerShell script that you could execute.
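As a rough idea of what such a script amounts to, the Python sketch below assembles one deployment command per environment and prints it instead of running it. It assumes the current `az deployment group create` command-line syntax, which may differ from the tooling version this post was written against, and the resource group and file names are hypothetical.

```python
import shlex


def deployment_command(resource_group: str, template_file: str,
                       parameters_file: str) -> list:
    """Assemble an Azure CLI command that deploys a template to a resource group."""
    return [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", template_file,
        "--parameters", f"@{parameters_file}",
    ]


# Hypothetical environment-to-resource-group mapping.
targets = {"dev": "rg-integration-dev", "test": "rg-integration-test"}

commands = [
    deployment_command(rg, "azuredeploy.json", f"azuredeploy.parameters.{env}.json")
    for env, rg in targets.items()
]

for command in commands:
    # Printed rather than executed; subprocess.run(command, check=True) would run it.
    print(shlex.join(command))
```

Even in this small form the weakness is visible: someone still has to run the script by hand and pick the right files.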

Personally, I love automation but I’ve never been a big fan of asking a person to manually run a random script and feed it some random files. I want something that’s more streamlined. I want something that is simultaneously:

- Automated – The process once triggered should not require manual help or intervention

- Controlled – The process should accommodate appropriate approvals along the way if needed

- Consistent and Repeatable – The process should not vary with each execution; it should have predictable outcomes based on the same inputs

- Transparent – The whole team should have visibility into the deployments that have taken place, and be able to identify which versions of the code live where, and why (i.e., I should have work item-level traceability)

- Versioned – Changes within the process and/or the process inputs (i.e., Logic App code) should be documented and discoverable

- Scalable – It should be just as easy to deploy 20 things as it is to deploy 1 thing.

For the past few years my team has been using TFS / VSTS as our primary source control and project management tool. In that time we’ve become more reliant on the excellent build system (Team Foundation Build) that TFS offers.

Team Build is much more than a traditional local build using Visual Studio. Team Builds run on a build server (i.e., not on your local computer) and are defined using a Build Definition. The Build Definition is a declarative definition of both the process that the build server will execute and the settings that control how the build is triggered and how it will execute. It’s essentially a workflow for preparing your application for deployment.

The Build Definition is made up of tasks. Each task performs a specific step required in the build process. For example, the Visual Studio Build task is used to compile .NET projects within Visual Studio Solutions, and within the step you can control the Platform (Win32, x86, x64, etc.) and the Configuration (debug or release), while the Xamarin.Android task is used for compiling Android applications with settings appropriate for them.

Build Definitions can have Tasks that do more than compile your code. You might include tasks to run scripts, copy files to the build server, execute tests (Load Tests, Web Performance Tests, Unit Tests, Coded UI tests etc.), or create installation packages (though this would generally just be done through another project in your solution [e.g., with Flexera InstallShield and/or the WiX Toolset]). This gives you the power to quickly and automatically execute the tasks that are appropriate for your application.

Furthermore, a single Team Project in TFS could have multiple build definitions associated with it, because sometimes you want the build to simply compile, but other times you want to burn down the village, compile, run tests, and then deploy your web site to Azure for manual testing. Or perhaps you’re managing builds for multiple feature branches or even multiple applications within the Team Project.

“So what does this have to do with Logic Apps?”

If I add one of those ARM Template Visual Studio projects to my TFS / VSTS source control repository (whether it’s a Git repository or TFVC), I can create a Build Definition that compiles the ARM Deployment Project and other Visual Studio projects that include resources used by my cloud application (e.g., custom API Apps, Web Sites, etc.), and then publishes the ARM Template files (templates and parameter files) to a shared location where they can be accessed by automated deployment processes.

This was surprisingly easy to set up; I think it only took about 5 minutes. The best part is I can have this build trigger on check-in, so my deployment files are always up-to-date.

Here’s what my Build Definition looks like:

First I compile the project.

Then I copy the ARM Template files and parameter files from the build output directory to a temporary file location.

Finally, I publish the files from the temporary location. I’m using a Server location that other steps in the build (or a Release Manager release task) could use. It could have also been a file share to give access to processes not hosted in TFS.

“So what does all this add up to?”

Whenever someone changes the ARM Deployment project (whether modifying the template or parameters file or adding a new template/parameter file to it) Team Build runs my Build Definition to: (1) compile my project, (2) extract the ARM deployment files from the build directory, and (3) publish the files as an Artifact named templates. That Artifact lives on the build server and can be accessed by VSTS Release Management release tasks that will actually deploy my Azure resources to the cloud.
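To make the copy-and-publish steps a little more concrete, here is a minimal sketch of what steps (2) and (3) amount to on disk. It does not use any TFS / VSTS API; the function `stage_templates`, the file names, and the folder layout are hypothetical stand-ins, and the only detail taken from the build above is the Artifact name `templates`.

```python
import shutil
import tempfile
from pathlib import Path


def stage_templates(build_output: Path, staging: Path) -> list:
    """Copy every *.json file under build_output into staging/templates.

    Returns the sorted file names that were staged. The folder name
    "templates" mirrors the Artifact name used in the article.
    """
    artifact_dir = staging / "templates"
    artifact_dir.mkdir(parents=True, exist_ok=True)
    staged = []
    for source in build_output.rglob("*.json"):
        shutil.copy2(source, artifact_dir / source.name)
        staged.append(source.name)
    return sorted(staged)


# Simulate a build output directory with a template, a parameter file,
# and an unrelated binary that should be left behind.
with tempfile.TemporaryDirectory() as root:
    build_output = Path(root) / "bin" / "Release"
    build_output.mkdir(parents=True)
    (build_output / "LogicApp.json").write_text("{}")
    (build_output / "LogicApp.dev.parameters.json").write_text("{}")
    (build_output / "Deploy.dll").write_text("not a template")

    staged_files = stage_templates(build_output, Path(root) / "staging")
    print(staged_files)
```

Only the JSON files make it into the staged folder, which is the point of the copy step: the release process later sees the templates and parameter files and nothing else from the build output.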

Release Management (a component of TFS / VSTS) helps you automate the deployment and testing of your software in multiple environments. You can either fully automate the delivery of your software all the way to production, or set up semi-automated processes with approvals and on-demand deployments.

In Release Management, you create Release Definitions that are conceptually similar to build definitions. A Release Definition is a declarative definition of the deployment process. Just like a Build Definition, a Release Definition is composed of tasks and each task provides a deployment step. The primary input for a Release Definition is one or more Artifacts created by your Build(s).

Release Definitions add a couple extra layers of complexity. One of those layers is the Environment. We all know that release pipelines are made up of multiple environments, and often each environment will come with its own unique requirements and/or configuration details. Within a single release definition you can create as many environments as you want, and then configure the Tasks within a given environment as appropriate for that system. The various Environments in your Release Definition can have similar or different Tasks.

Each environment can also utilize variables if you’d prefer to avoid hard-coding things that are subject to change.

In this simple example, I created a Release Definition with two environments: Development and Test. Within each environment I used the Azure Resource Group Deployment task to deploy my Logic App, Service Plan, and Resource Group as defined in my ARM Deployment Template JSON file.

I configured the deployment to Development to happen automatically upon successful build (remember the build runs when I check in the source code). But I wanted Test deployments to be manual.

I also created variables that enabled me to parameterize the name of the Resource Group, and the name of the Parameter File to use in each environment.

You can see here how I’m using those variables within the Azure Resource Group Deployment task.
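For readers without the screenshot, the idea can be sketched as follows: the task settings are written once with `$(Name)` placeholders, and each environment supplies its own values. This is only an illustration of the substitution, not how Release Management implements it; the variable names `ResourceGroupName` and `ParameterFile`, their values, the setting keys, and the helper `expand` are hypothetical.

```python
import re

# Hypothetical per-environment variables, mirroring the two environments
# in the Release Definition.
ENVIRONMENTS = {
    "Development": {
        "ResourceGroupName": "LogicAppDemo-Dev",
        "ParameterFile": "LogicApp.dev.parameters.json",
    },
    "Test": {
        "ResourceGroupName": "LogicAppDemo-Test",
        "ParameterFile": "LogicApp.test.parameters.json",
    },
}

# Task settings written once, with $(Name) placeholders for anything
# that differs between environments.
TASK_SETTINGS = {
    "resourceGroup": "$(ResourceGroupName)",
    "template": "templates/LogicApp.json",
    "templateParameters": "templates/$(ParameterFile)",
}

PLACEHOLDER = re.compile(r"\$\((\w+)\)")


def expand(settings, variables):
    """Replace each $(Name) placeholder with the environment's value."""
    def lookup(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"Undefined variable: {name}")
        return variables[name]

    return {key: PLACEHOLDER.sub(lookup, value) for key, value in settings.items()}


for environment, variables in ENVIRONMENTS.items():
    print(environment, expand(TASK_SETTINGS, variables))
```

The template path stays the same in both environments; only the resource group and the parameter file change.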

Of course it works.

Say I go to my Visual Studio project and modify something about my Logic App template. Maybe I finally get around to fixing that grammatical error in my response message.

Then I check in my changes.

In VSTS, I can see that my build automatically started.

After the build completes, in the Release Hub I can see that a new release (Release-4) using the latest build (13) has started deploying to the Development environment.

I’ve got logs to show me what happened during the deployment.

I can see the commits or changesets included in this release compared to earlier releases. So a month from now Nick can see what modifications were deployed in Release-4.

What’s going on in Azure though? It looks like the Logic App in the Development Resource Group was updated to match my changes.

But my Test environment wasn’t touched.

Over on the Release Hub, I can manually start the Deployment to Test.

I almost forgot, deploying to Test requires an approval as well.

Just like that, it’s done.

In about 30 minutes I was able to create a deployment pipeline for my Logic App. The deployment pipeline is flexible enough that changes can be made easily, but structured in a way that I (and everyone else on my team) can see exactly what it does.

QuickLearn Training offers courses to enhance your understanding of TFS / VSTS and Logic Apps. Our Build and Release Management Using TFS 2015 course has all the finer details that you’ll never get out of a blog article, and our Cloud-Based Integration Using Azure App Service course teaches you how to build enterprise-ready integration solutions using features of the Azure cloud.

by Rob Callaway | Nov 2, 2015 | BizTalk Community Blogs via Syndication

In conversations with students and other integration specialists, I’m discovering more and more how confused some people are about the evolution of cloud-based integration technologies. I suspect that cloud-based integration is going to be big business in the coming years, but this confusion will be an impediment to us all.

To address this I want to write a less technical, very casual, blog post explaining where we are today (November of 2015), and generally how we got here. I’ll try to refrain from passing judgement on the technologies that came before and I’ll avoid theorizing on what may come in the future. I simply want to give a timeline that anyone can use to understand this evolution, along with a high-level description of each technology.

I’ll only speak to Microsoft technologies because that’s where my expertise lies, but it’s worth acknowledging that there are alternatives in the marketplace.

If you’d like a more technical write-up of these technologies and how to use them, Richard Seroter has a good article on his blog that can be found here.

Way, way back in October of 2008 Microsoft unveiled Windows Azure (although it wouldn’t be until February of 2010 that Azure went “live”). On that first day, Azure wasn’t nearly the monster it has become.

It provided a service platform for .NET services, SQL Services, and Live Services. Many people were still very skeptical about “the cloud” (if they even knew what that meant). As an industry we were entering a brave new world with many possibilities.

From an integration perspective, Windows Azure .NET Services offered Service Bus as a secure, standards-based messaging infrastructure.

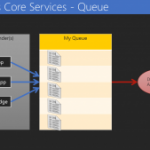

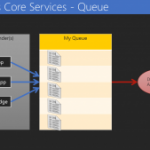

Over the years, Service Bus has been rebranded several times but the core concepts have stayed the same: reduce the barriers for building composite applications, even when their components have to communicate across organizational boundaries. Initially, Service Bus offered Topics/Subscriptions and Queues as a means for systems and services to exchange data reliably through the cloud.

Service Bus Queues are just like any other queueing technology. We have a queue to which any number of clients can post messages. These messages can be received from the queue later by some process. Transactional delivery, message expiry, and ordered delivery are all built-in features.
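A toy in-memory model can make two of those features, ordered delivery and message expiry, easier to picture. This is not the Service Bus API; the class `ToyQueue`, its methods, and the explicit `now` argument (used instead of a real clock so the behavior is deterministic) are assumptions made purely for illustration.

```python
from collections import deque


class ToyQueue:
    """In-memory stand-in for a queue with ordered delivery and expiry."""

    def __init__(self):
        self._messages = deque()

    def send(self, body, now, time_to_live):
        # Store the moment after which the message must not be delivered.
        self._messages.append((body, now + time_to_live))

    def receive(self, now):
        """Return the oldest unexpired message, or None if there is none."""
        while self._messages:
            body, expires_at = self._messages.popleft()
            if now < expires_at:
                return body
            # Expired messages are simply dropped in this sketch.
        return None


queue = ToyQueue()
queue.send("order-1", now=0, time_to_live=5)
queue.send("order-2", now=1, time_to_live=60)
queue.send("order-3", now=2, time_to_live=60)

first = queue.receive(now=10)   # order-1 has expired by this point
second = queue.receive(now=10)
third = queue.receive(now=10)
print(first, second, third)
```

The sender and receiver never run at the same time here, which is the essence of a queue: the message waits until some process comes to collect it, or until it expires.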

I like to call Topics/Subscriptions “smart queues.” We have concepts similar to queues with the addition of message routing logic. That is, within a Topic I can define one or more Subscription(s). Each Subscription is used to identify messages that meet certain conditions and “grab” them. Clients don’t pick up messages from the Topic, but rather from a Subscription within the Topic. A single message can be routed to multiple Subscriptions once published to the Topic.

Sample Service Bus Topic and Subscriptions

If you have a BizTalk Server background, you can essentially think of each Service Bus Topic as a MessageBox database.
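The routing behavior of Topics and Subscriptions described above can be sketched with a small in-memory model. Again, this is not the Service Bus API: the class `ToyTopic`, the subscription names, and the use of Python predicates in place of real subscription filters are hypothetical.

```python
class ToyTopic:
    """In-memory stand-in for a topic with filtered subscriptions."""

    def __init__(self):
        self._filters = {}    # subscription name -> predicate
        self._inboxes = {}    # subscription name -> delivered messages

    def add_subscription(self, name, predicate):
        self._filters[name] = predicate
        self._inboxes[name] = []

    def publish(self, message):
        # Every subscription whose filter matches gets its own copy.
        for name, predicate in self._filters.items():
            if predicate(message):
                self._inboxes[name].append(dict(message))

    def receive_all(self, name):
        """Drain and return the messages waiting on one subscription."""
        delivered, self._inboxes[name] = self._inboxes[name], []
        return delivered


topic = ToyTopic()
topic.add_subscription("all-orders", lambda m: m["type"] == "order")
topic.add_subscription(
    "big-orders", lambda m: m["type"] == "order" and m["total"] > 1000
)

topic.publish({"type": "order", "total": 250})
topic.publish({"type": "order", "total": 5000})
topic.publish({"type": "invoice", "total": 9000})

all_orders = topic.receive_all("all-orders")
big_orders = topic.receive_all("big-orders")
print(len(all_orders), len(big_orders))
```

The 5000 order lands in both subscriptions, the 250 order in only one, and the invoice in neither, because no subscription asked for it.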

Interacting with Service Bus is easy to do across a variety of clients using the .NET or REST APIs. With the ability to connect on-premises applications to cloud-based systems and services, or even connect cloud services to each other, Service Bus offered the first real “integration” features to Azure.

Since its release, Service Bus has grown to include other messaging features such as Relays, Event Hubs, and Notification Hubs, but at its heart it has remained the same and continues to provide a rock-solid foundation for exchanging messages between systems in a reliable and programmable way. In June of 2015, Service Bus processed over 1 trillion (1,000,000,000,000) messages! (Starts at 1:20)

As integration specialists we know that integration problems are more complex than simply grabbing some data from System A and dumping it in System B.

Message transport is important but it’s not the full story. For us, and the integration applications we build, VETRO (Validate, Enrich, Transform, Route, and Operate) is a way of life. I want to validate my input data. I may need to enrich the data with alternate values or contextual information. I’ll most likely need to transform the data from one format or schema to another. Identifying and routing the message to the correct destination is certainly a requirement. Any integration solution that fails to deliver all of these capabilities probably won’t interest me much.
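To show how those capabilities fit together, here is a minimal sketch of a VETRO-style pipeline built from plain functions. It is not tied to any product; the message fields (`orderId`, `amount`), the destination names, and every function here are hypothetical, and "Operate" is reduced to handing the payload to the chosen destination.

```python
import json


def validate(message):
    if "orderId" not in message or "amount" not in message:
        raise ValueError("orderId and amount are required")
    return message


def enrich(message):
    # Add contextual information that downstream steps can rely on.
    priority = "high" if message["amount"] > 1000 else "normal"
    return {**message, "priority": priority}


def transform(message):
    # Map the source field names onto the destination schema.
    return {
        "id": message["orderId"],
        "total": message["amount"],
        "priority": message["priority"],
    }


def route(message):
    # Choose a destination from the message content.
    return "expedite-queue" if message["priority"] == "high" else "standard-queue"


def operate(destination, message, outboxes):
    # "Operate" here just hands the payload to the chosen destination.
    outboxes.setdefault(destination, []).append(json.dumps(message, sort_keys=True))


def process(message, outboxes):
    prepared = transform(enrich(validate(message)))
    operate(route(prepared), prepared, outboxes)


outboxes = {}
process({"orderId": "A1", "amount": 50}, outboxes)
process({"orderId": "B2", "amount": 5000}, outboxes)
print(outboxes)
```

Each stage is small and independent, so any one of them can be swapped out without touching the others.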

So, in a world where Service Bus is the only integration tool available to me, do I have VETRO? Not really.

I have a powerful, scalable, reliable, messaging infrastructure that I can use to transport messages, but I cannot transform that data, nor can I manipulate that data in a meaningful way, so I need something more.

I need something that works in conjunction with this messaging engine.

Microsoft’s first attempt at providing a more traditional integration platform that provided VETRO-esque capabilities was Microsoft Azure BizTalk Services (MABS) (to confuse things further, this was originally branded as Windows Azure BizTalk Services, or WABS). You’ll notice that Azure itself has changed its name from Windows Azure to Microsoft Azure, but I digress.

MABS was announced publicly at TechEd 2013.

Despite the name, Microsoft Azure BizTalk Services DOES NOT have a common code-base with Microsoft BizTalk Server (on second thought, perhaps the EDI pieces share some code with BizTalk Server, but that’s about all). In the MABS world we could create itineraries. These itineraries contained connections to source and destination systems (on-premises & cloud) and bridges. Bridges were processing pipelines made up of stages. Each stage could be configured to provide a particular type of VETRO function. For example, the Enrich stage could be used to add properties to the context of the message travelling through the bridge/itinerary.

Complex integration solutions could be built by chaining multiple bridges together using a single itinerary.

MABS was our first real shot at building full integration solutions in the cloud, and it was pretty good, but Microsoft wasn’t fully satisfied, and the industry was changing the approach for service-based architectures. Now we want Microservices (more on that in the next section).

The MABS architecture had some shortcomings of its own. For example, there was little or no ability to incorporate custom components into the bridges, and a lack of connectors to source and destination systems.

Over the past couple of years the trending design architecture has been Microservices. For those of you who aren’t already familiar with it, or don’t want to read pages of theory, it boils down to this:

“Architect the application by applying the Scale Cube (specifically y-axis scaling) and functionally decompose the application into a set of collaborating services. Each service implements a set of narrowly related functions. For example, an application might consist of services such as the order management service, the customer management service etc.

Services communicate using either synchronous protocols such as HTTP/REST or asynchronous protocols such as AMQP.

Services are developed and deployed independently of one another.

Each service has its own database in order to be decoupled from other services. When necessary, consistency between databases is maintained using either database replication mechanisms or application-level events.”

So the shot-callers at Microsoft see this growing trend and want to ensure that the Azure platform is suited to enable this type of application design. At the same time, MABS has been in the wild for just over a year and the team needs to address the issues that exist there. MABS Itineraries are deployed as one big chunk of code, and that does not align well to the Microservices way of doing things. Therefore, we need something new but familiar!

Azure App Service is a cloud platform for building powerful web and mobile apps that connect to data anywhere, in the cloud or on-premises. Under the App Service umbrella we have Web Apps, Mobile Apps, API Apps, and Logic Apps.

I don’t want to get into Web and Mobile Apps. I want to get into API Apps and Logic Apps.

API Apps and Logic Apps were publicly unveiled in March of 2015, and are currently still in preview.

API Apps provide capabilities for developing, deploying, publishing, consuming, and managing RESTful web APIs. The simple, less sales-pitch sounding version of that is that I can put RESTful services in the Azure cloud so I can easily use them in other Azure App Service-hosted things, or call the API (you know, since it’s an HTTP service) from anywhere else. Not only is the service hosted in Azure and infinitely scalable, but Azure App Service also provides security and client consumption features.

So, API Apps are HTTP / RESTful services running in the cloud. These API Apps are intended to enable a Microservices architecture. Microsoft offers a bunch of API Apps in Azure App Service already and I have the ability to create my own if I want. Furthermore, to address the integration needs that exist in our application designs, there is a special set of BizTalk API Apps that provide MABS/BizTalk Server style functionality (i.e., VETRO).

This is all pretty cool, but I want more. That’s where Logic Apps come in.

Logic Apps are cloud-hosted workflows made up of API Apps. I can use Logic Apps to design workflows that start from a trigger and then execute a series of steps, each invoking an API App whilst the Logic App run-time deals with pesky things like authentication, checkpoints, and durable execution. Plus it has a cool rocket ship logo.

What does all this mean? How can I use these Azure technologies together to build awesome things today?

Service Bus provides an awesome way to get messages from one place to another using either Queues or Topics/Subscriptions.

API Apps are cloud-hosted services that do work for me. For example, hit a SaaS provider or talk to an on-premises system (we call these connectors), transform data, change an XML payload to JSON, etc.

Logic Apps are workflows composed of multiple API Apps. So I can create a composite process from a series of Microservices.

But if I were building an entire integration solution, breaking the process across multiple Logic Apps might make great sense. So I use Service Bus to connect the two workflows to each other in a loosely-coupled way.

Logic Apps and Service Bus working together

And as my integration solution becomes more sophisticated, perhaps I have need for more Logic Apps to manage each “step” in the process. I further use the power of Topics to control the workflow to which a message is delivered.

More Logic Apps and Service Bus Topics provide a sophisticated integration solution

In the purest of integration terms, each Logic App serves as its own VETRO (or subset of VETRO features) component. Decomposing a process into several different Logic Apps and then connecting them to each other using Service Bus gives us the ability to create durable, long-running composite processes that remain loosely-coupled.

Doing VETRO using Service Bus and Logic Apps
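The loose coupling described above can be sketched with two functions that only ever talk to a queue, never to each other. This is a simplification under stated assumptions: Python's standard `queue.Queue` stands in for a Service Bus queue, and the two workflow functions are hypothetical placeholders for Logic Apps.

```python
from queue import Queue

# Stand-in for the Service Bus queue that sits between the two workflows.
handoff = Queue()


def intake_workflow(order):
    """First workflow: accept an order, tag it, and hand it off."""
    handoff.put({**order, "status": "accepted"})


def fulfilment_workflow():
    """Second workflow: process whatever is waiting on the queue."""
    shipped = []
    while not handoff.empty():
        order = handoff.get()
        shipped.append({**order, "status": "shipped"})
    return shipped


# The producer finishes before the consumer starts; neither calls the
# other directly, which is the loose coupling the article describes.
intake_workflow({"id": 1})
intake_workflow({"id": 2})
results = fulfilment_workflow()
print(results)
```

Because the only shared contract is the message on the queue, either workflow could be replaced or scaled without the other one noticing.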

Today Microsoft Azure offers the most complete story to date for cloud-based integration, and it’s a story that is only getting better and better. The Azure App Service team and the BizTalk Server team are working together to deliver amazing integration technologies. As an integration specialist, you may have been able to ignore the cloud for the past few years, but in the coming years you won’t be able to get away with it.

We’ve all endeavored to eliminate those nasty data islands. We’ve worked to tear down the walls dividing our systems. Today, a new generation of technologies is emerging to solve the problems of the future. We need people like you, the seasoned integration professional, to help direct the technology, and lead the developers using it.

If any of this has gotten you at all excited to dig in and start building great things, you might want to check out QuickLearn Training’s 5-day instructor-led course detailing how to create complete integration solutions using the technologies discussed in this article. Please come join us in class so we can work together to build magical things.

by Rob Callaway | Jun 2, 2015 | BizTalk Community Blogs via Syndication

Lessons Learned

Over the last few months, everyone here at QuickLearn Training has learned a thing or two about the Azure App Service and Logic Apps team at Microsoft. The most obvious is that the team is full of Work-a-saurus-Rexes. The number of changes and added features since Azure App Service Logic Apps went into Public Preview (on March 24th) is astounding.

Here’s another thing we’ve learned: keeping up with those changes (and more importantly keeping our Cloud-Based Integration Using Azure App Service course up-to-date with those changes) is going to be a fascinating process. It seems like every day we discover something new or different and we have to decide the best way to incorporate it into our course. Honestly, with the cutting-edge technology, the always interesting integration stories, and awesome team that I work with, I’ve never had more fun designing a course.

Updates to Azure App Service Logic Apps

Enough with the praise! The real purpose of this entry is to provide a log of the updates that we’ve made to the course since our first run last month (May 6th – 8th).

- Coverage of the updates and changes to Visual Studio templates introduced in the Azure SDK 2.6

- Added coverage for the JSON encoder API App

- Added lecture and labs on building custom API Apps that implement Push and Poll triggers

- Added coverage of using the T-Rex Metadata Library to mark up API App objects and create custom Swagger metadata for use by the Logic App Designer

- Restructured the course to provide a more seamless flow through the various technologies

These changes represent a month’s worth of work for the QuickLearn team, and are additive to all the amazing content that we had previously.

Trust Us, We’re Professionals

Azure App Service Logic Apps are the future of the Microsoft integration story. If you haven’t looked at it yet, the time to start is now. If you have looked and you’re finding it hard to keep up with the rapid evolution, don’t fret because we have your back. It’s probably not your full-time job to stay up-to-date on these rapid changes, but it is ours. We love doing it and our team is committed to staying up-to-date on everything in the realm of Logic Apps, and we’re happy to help keep you up-to-date too. Your next chance to catch this exciting and fun class is July 13th, 2015.

As always, your purchase of our class comes with the ability to retake the course for free anytime within 6 months.