by Daniel Probert | Jan 21, 2016 | BizTalk Community Blogs via Syndication

So a few months ago, I was trying to work out how to create an instance of a marketplace API App via code. There was no API or samples for this at the time. API Apps (like Logic/Mobile/Web apps) can be deployed using ARM templates, so it was just a matter of working out what to put in the template.

Since then, the AAS team have released a guide on what needs to go in the template, what the contract looks like, and supplied a sample on GitHub:

https://azure.microsoft.com/en-gb/documentation/articles/app-service-logic-arm-with-api-app-provision/

https://github.com/Azure/azure-quickstart-templates/tree/master/201-logic-app-api-app-create

But their example requires you to supply *all* the information, and for an API App that’s quite a lot (gateway name, secret, etc.), especially as your gateway host name needs to be unique.

Note: Just as a primer, when you deploy an API App, you’re actually deploying two web sites: a gateway app (which is how you interact with the API App, and which handles security, load balancing, etc.) and then the actual API App itself.

I found an easier way to do all this: you can ask the Azure API for an instance of an API App deployment template, with everything all filled out for you.

The TL;DR version is that there is an API you can call to get a completed deployment template for any marketplace API App, and then you just need to wrap this in a Resource Group template and deploy it – see further down this article for the details.

The technique for working this out can be used to figure out other undocumented APIs in Azure. Note that when I say “undocumented” here, I don’t mean it in the sense of the Win32 API, where undocumented APIs are subject to change and use of them is frowned upon: here I just mean APIs that have yet to be documented, or which are documented poorly.

First things first: the Azure Portal is just a rich RESTful-client running in your browser. Whenever you do anything in the portal, it makes REST calls against the Azure APIs. By tracing those calls, we can recreate what the portal does.

Most modern browsers have some sort of developer option you can turn on which captures those network calls and lets you examine them (e.g. Dev Tools for Internet Explorer).

Alternatively, you can download a tool such as Fiddler (https://www.telerik.com/download/fiddler), which acts as a local proxy to capture all the requests your browser makes.

In my case, I used Internet Explorer, and pressed F12 to open the dev tools dialog.

What I wanted to know was: how do I create an instance of the FlatFileEncoder API App?

Here are the steps I took to work it out.

Step 1: Open the Portal to the point just before you create your resource

When you start the dev tools, all requests (called Sessions) are logged. The portal makes a *lot* of requests, as it’s always updating the data displayed. In order to cut down the number of requests we have to sort through, it’s best to navigate to the correct screen before starting dev tools – in my case, I navigated to the New option, then to Marketplace, then chose the Everything option, and searched for “BizTalk” – this showed me a list of integration API Apps, including the BizTalk Flat File Encoder:

At this point, I would normally select the API App and click Create, but I stopped and moved to Step 2.

Step 2: Open Dev Tools (or Fiddler) and clear the sessions

In IE, you open Dev Tools by pressing F12. You get a new window at the bottom. Select the Network tab.

In Chrome, it’s also F12 (or Ctrl-Shift-I) to open Dev Tools, and again you select the Network tab.

In Fiddler, just start Fiddler and ensure File/Capture Traffic is enabled.

Clear the list of current sessions: In IE, click the Clear Session button:

In Chrome, it’s the clear button:

And in Fiddler, click the Edit menu, then Remove, then All Sessions (or press Ctrl-X).

Step 3: Take the actions in the portal to create your resource

Leaving Dev Tools open (or Fiddler running), in the portal create your resource – in my case, I selected the BizTalk Flat File Encoder item, selected Create, typed in values (I chose a new App Service Plan and Resource Group), then clicked OK:

Step 4: Stop the Network trace and save it

Once the portal has finished (which takes about 2 minutes for a new API App) we can stop the network trace so we don’t end up with a lot more information than necessary.

We stop the trace by pressing the Stop Button in Dev Tools (IE and Chrome) or deselecting the Capture Traffic option in Fiddler.

Now save the results file – in IE or Chrome, click the Save button, or in Fiddler click File/Export Sessions/All Sessions.

Step 5: Filter the results file

Both IE and Chrome save the results file as a HAR file – an HTTP Archive file. Although you can just look at the file in your browser (in the Dev Tools window), I prefer to open it up in Fiddler, as there is more choice for decoding requests and responses.

Open the file in Fiddler by selecting File, then Import Sessions, then choosing the HTTPArchive (HAR) file type, and clicking Next to select the file.

You should end up with a window that looks a bit like this:

You’ll probably have over 500 items in your list, depending on when you started your trace and when you stopped it, and what you created.

And now the fun part: analysing the results.

Luckily Fiddler makes this easier for us by displaying a little icon on the left, which tells us the type of HTTP Verb and content type. Even better, we can filter the list of sessions so that we only see JSON responses (which is what the Azure Management APIs use): on the right side of the window, select the Filters tab, then under Response Type and Size, select “Show only JSON”. Then click the Actions button (top right of the Filters tab) and select “Run filterset now”:

The list of sessions should now be drastically reduced – in my case, I only had 86:

And now we can start analysing those API calls!
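If you would rather script the filtering than use Fiddler, the saved HAR file is plain JSON, so a rough equivalent of “Show only JSON” can be sketched in Python. This is only a minimal sketch: the function names and the sample URLs are made up for illustration, and it relies solely on the standard HAR layout (`log.entries[].response.content.mimeType`).

```python
import json


def load_har(path):
    """Read a HAR file saved from the browser dev tools."""
    # utf-8-sig also accepts files that start with a byte-order mark.
    with open(path, encoding="utf-8-sig") as handle:
        return json.load(handle)


def json_entries(har):
    """Return the entries whose response declares a JSON MIME type."""
    kept = []
    for entry in har["log"]["entries"]:
        mime = entry["response"].get("content", {}).get("mimeType") or ""
        if "json" in mime.lower():
            kept.append(entry)
    return kept


# Tiny hand-written stand-in for a real trace (hypothetical URLs).
sample_har = {
    "log": {
        "entries": [
            {
                "request": {"method": "GET", "url": "https://example.invalid/app.js"},
                "response": {"content": {"mimeType": "application/javascript"}},
            },
            {
                "request": {"method": "GET", "url": "https://example.invalid/api/items"},
                "response": {"content": {"mimeType": "application/json; charset=utf-8"}},
            },
        ]
    }
}

for entry in json_entries(sample_har):
    print(entry["request"]["method"], entry["request"]["url"])
```

With a real trace you would call `json_entries(load_har("portal-trace.har"))`, where the file name is whatever you saved in Step 4.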

Step 6: Analysing the API calls

This is probably the hardest step – looking at all the calls, seeing what they do. An easy search is to just look for the word FlatFileEncoder in the URL or request data, but I like to go through the calls seeing what each one does.
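The “easy search” can also be run directly against the HAR data. The sketch below looks for a term in each request URL and request body; `find_sessions` and the sample trace are hypothetical names and data, and the only thing assumed about the file is the standard HAR layout (`request.url`, `request.method`, `request.postData.text`).

```python
def find_sessions(har, term):
    """Yield (index, method, url) for requests mentioning term in URL or body."""
    needle = term.lower()
    for index, entry in enumerate(har["log"]["entries"]):
        request = entry["request"]
        # postData is only present on requests that carry a body.
        body = request.get("postData", {}).get("text") or ""
        if needle in request["url"].lower() or needle in body.lower():
            yield index, request["method"], request["url"]


# Hand-written stand-in for a real trace (hypothetical URLs and bodies).
trace = {
    "log": {
        "entries": [
            {"request": {"method": "GET", "url": "https://example.invalid/plans"}},
            {
                "request": {
                    "method": "POST",
                    "url": "https://example.invalid/metadata",
                    "postData": {"text": '{"microserviceId": "FlatFileEncoder"}'},
                }
            },
            {"request": {"method": "GET", "url": "https://example.invalid/FlatFileEncoder/status"}},
        ]
    }
}

for index, method, url in find_sessions(trace, "FlatFileEncoder"):
    print(index, method, url)
```

The index that comes back is the position in the HAR file, which makes it easy to jump to the same session in Fiddler or the dev tools.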

The majority of the early calls are to do with populating the dialog displayed when you create a new API App i.e. a list of App Service Plans (ASPs), Resource Groups, Regions, etc.

For example, there is a bunch of calls one after another that look like this:

They all issue a GET against a resource provider API (Microsoft.Web, or Microsoft.AppService for gateways), supplying an api-version, and optionally the type of data we want:

- The first call asks for serverfarms – this is the old name for App Service Plans (and helps you understand what an App Service Plan is, as it’s implemented as a server farm: a collection of web servers – the number of servers and their capabilities are determined by the pricing tier).

A GET request is issued on this URL:

https://management.azure.com/subscriptions/(subId)/providers/Microsoft.Web/serverfarms?api-version=2015-04-01&_=1452592242795

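Note: the suffix at the end of that URL (the _=1452592242795 part) looks like a Unix timestamp in milliseconds (this one falls on 12 January 2016). It appears to act as a cache-buster that also differentiates otherwise identical requests from each other: the value goes up slightly with each call in the list.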

If you have any ASPs you’ll see a response similar to this:

- The second call doesn’t ask for a particular type of data, which means all generic data is returned e.g. details on mobile sites, site pools, available hosting locations, etc.

A GET request is issued on this URL:

https://management.azure.com/subscriptions/(subid)/providers/Microsoft.Web?api-version=2015-01-01&_=1452592242797

The response will look something like this:

- The third call asks for deploymentLocations – and this is what is returned, along with a sort order. (Interestingly, the Indian regions are always given a sort order of Int32.MaxValue (sortOrder=2147483647), so they always appear at the end of the list, if you’re able to see them.)

A GET request is issued on this URL:

https://management.azure.com/subscriptions/(subid)/providers/Microsoft.Web/deploymentLocations?api-version=2015-04-01&_=1452592242798

The response looks like this:

- The fourth call is for gateways – this returns a list of any API App gateways that exist already.

A GET request is issued on this URL:

https://management.azure.com/subscriptions/(subid)/providers/Microsoft.AppService/gateways?api-version=2015-03-01-preview&_=1452592242801

The response will look something like this:

- The fifth call is for sites – this returns any mobile, web, API or Logic Apps you have deployed, as they’re all deployed as web sites.

A GET request is issued on this URL:

https://management.azure.com/subscriptions/(subid)/providers/Microsoft.Web/sites?api-version=2015-04-01&_=1452592242804

The response will look something like this:

The above is all well and good – it’s useful to know how to get this data. But it doesn’t help us in our aim of creating a new API App.
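For reference, the five lookups above differ only in the provider path and the api-version, so they can be sketched as one small helper. This is a sketch under assumptions rather than a tested client: the function names are made up, the cache-busting `_` parameter is left out, and it assumes you already hold a bearer token for the management API (obtaining one is not covered here).

```python
from urllib.parse import urlencode
from urllib.request import Request

MANAGEMENT_HOST = "https://management.azure.com"

# (provider path, api-version) pairs copied from the five URLs above.
LIST_CALLS = {
    "serverfarms": ("Microsoft.Web/serverfarms", "2015-04-01"),
    "provider": ("Microsoft.Web", "2015-01-01"),
    "deploymentLocations": ("Microsoft.Web/deploymentLocations", "2015-04-01"),
    "gateways": ("Microsoft.AppService/gateways", "2015-03-01-preview"),
    "sites": ("Microsoft.Web/sites", "2015-04-01"),
}


def list_url(subscription_id, name):
    """Build the GET URL for one of the lookups listed in LIST_CALLS."""
    path, api_version = LIST_CALLS[name]
    query = urlencode({"api-version": api_version})
    return f"{MANAGEMENT_HOST}/subscriptions/{subscription_id}/providers/{path}?{query}"


def list_request(subscription_id, name, bearer_token):
    """Prepare (but do not send) the GET request; the token is an assumption."""
    return Request(
        list_url(subscription_id, name),
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Accept": "application/json",
        },
        method="GET",
    )


# Placeholder subscription id, just to show the URLs that get built.
for name in LIST_CALLS:
    print(list_url("00000000-0000-0000-0000-000000000000", name))
```

Passing the prepared request to `urllib.request.urlopen` would send it; that step is left out here so the sketch runs without credentials.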

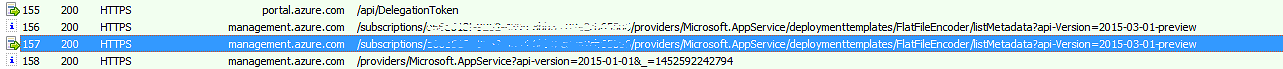

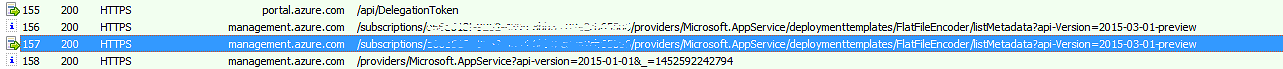

But by looking through the rest of the calls, we can spot 3 calls that are key:

- Getting the version number or internal name for the Flat File Encoder.

This is the first call we can see that has the word FlatFileEncoder in the request:

(Ignore the seemingly duplicate call above it (session 156): that’s an HTTP OPTIONS call used to check what actions this endpoint supports, most likely the browser’s CORS preflight. The portal uses this pattern a lot, issuing an OPTIONS call before doing a POST.)

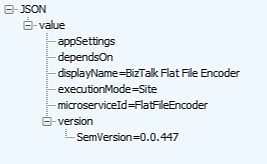

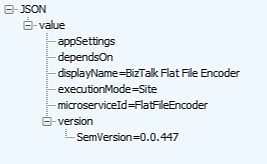

As per the request, we can see that we’ve requested metadata for the FlatFileEncoder. Let’s look at the response:

We can see that the response gives us the current version of the API App, the type of app, the display name, and the microServiceId i.e. the name of the API App we have to use to create it (although we actually needed to know that part in order to request the metadata!).

We can also see that there are no specific app settings, and no dependencies needed for the API App.

- Getting a deployment template for the FlatFileEncoder

This is the request I found most interesting.

We create a request that looks like this:

Here’s that in text format:

    {
      "microserviceId": "FlatFileEncoder",
      "settings": {},
      "hostingPlan": {
        "subscriptionId": "subid",
        "resourceGroup": "Api-App-0",
        "hostingPlanName": "FFASPNew",
        "isNewHostingPlan": "true",
        "computeMode": "Dedicated",
        "siteMode": "Limited",
        "sku": "Standard",
        "workerSize": "0",
        "location": "West Europe"
      },
      "dependsOn": []
    }

The request is saying that we’d like to create a new FlatFileEncoder microservice (i.e. API App), and we’d like to use a new ASP called FFASPNew, and we’re using a Resource Group called Api-App-0 (which is obviously a temp name).

We POST this request to this URL:

https://management.azure.com/subscriptions/(subid)/resourcegroups/(resourcegroupname)/providers/Microsoft.AppService/deploymenttemplates/FlatFileEncoder/generate?api-version=2015-03-01-preview
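As a rough sketch, the same request could be assembled in Python like this. The field values mirror the captured request; the function names are made up, and the bearer token header is an assumption about how you would authenticate outside the portal.

```python
import json
from urllib.request import Request

API_VERSION = "2015-03-01-preview"


def generate_body(microservice_id, subscription_id, resource_group, plan_name, location):
    """Build the request body, using the values seen in the captured call."""
    return {
        "microserviceId": microservice_id,
        "settings": {},
        "hostingPlan": {
            "subscriptionId": subscription_id,
            "resourceGroup": resource_group,
            "hostingPlanName": plan_name,
            "isNewHostingPlan": "true",
            "computeMode": "Dedicated",
            "siteMode": "Limited",
            "sku": "Standard",
            "workerSize": "0",
            "location": location,
        },
        "dependsOn": [],
    }


def generate_request(subscription_id, resource_group, microservice_id, body, bearer_token):
    """Prepare (but do not send) the POST to the template 'generate' endpoint."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourcegroups/{resource_group}"
        f"/providers/Microsoft.AppService/deploymenttemplates/{microservice_id}"
        f"/generate?api-version={API_VERSION}"
    )
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {bearer_token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


body = generate_body("FlatFileEncoder", "subid", "Api-App-0", "FFASPNew", "West Europe")
request = generate_request("subid", "Api-App-0", "FlatFileEncoder", body, "token-goes-here")
print(request.get_method(), request.full_url)
```

Nothing is sent until the request is handed to `urllib.request.urlopen`, so the sketch can be run safely to inspect the URL and body.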

I wasn’t sure what to expect from the response, but look at this:

It’s a really long response… but what’s in there is a complete ARM template to create an API App – all you need to do is wrap it in the template to create a Resource Group.

What’s interesting is that not only does it use variables (which are passed to the template) to define things such as the gateway name, or ASP name, it also supplies us with a unique gateway name, plus the secrets to use for the API App:

- Deploying the FlatFileEncoder

Deploying the API App now becomes a simple matter of wrapping the response from the last call in a new ARM template for the resource group.

The request looks like this:

In text, that looks like:

    {
      "resourceGroupLocation": "West Europe",
      "resourceGroupName": "(resource group name)",
      "resourceProviders": [
        "Microsoft.Web",
        "Microsoft.AppService"
      ],
      "subscriptionId": "(subid)",
      "deploymentName": "FlatFileEncoder",
      "templateLinkUri": null,
      "templateJson": "(api app template)",
      "parameters": {
        "FlatFileEncoder": {
          "value": {
            "$apiAppName": "FlatFileEncoder"
          }
        },
        "location": {
          "value": "West Europe"
        }
      }
    }

The response you got from the previous step (i.e. the contents of the value field) is supplied in the templateJson field, but it is escaped – that is, any double-quotes (") are escaped (\") and any single backslashes are escaped (i.e. \ becomes \\).

This request is POSTed against this URL:

https://portal.azure.com/AzureHubs/api/deployments
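In code you rarely need to do that escaping by hand: if the template is serialised to a string first and the wrapper is serialised afterwards, the JSON encoder escapes the quotes and backslashes for you. Here is a minimal sketch of building the wrapper. The function name and the stand-in template are made up, and it assumes the parameter key and deployment name simply follow the API App name, as they do in the captured request.

```python
import json


def deployment_payload(template, subscription_id, resource_group, location, api_app_name):
    """Wrap a generated API App template in the portal-style deployment request."""
    return {
        "resourceGroupLocation": location,
        "resourceGroupName": resource_group,
        "resourceProviders": ["Microsoft.Web", "Microsoft.AppService"],
        "subscriptionId": subscription_id,
        "deploymentName": api_app_name,
        "templateLinkUri": None,
        # The template travels as a string; serialising it here means the
        # outer json.dumps call escapes its quotes and backslashes.
        "templateJson": json.dumps(template),
        "parameters": {
            api_app_name: {"value": {"$apiAppName": api_app_name}},
            "location": {"value": location},
        },
    }


# Stand-in for the real generated template, chosen to contain a quote and a backslash.
template = {"variables": {"note": 'say "hi"', "path": "C:\\temp"}}

payload = deployment_payload(template, "(subid)", "(resource group name)", "West Europe", "FlatFileEncoder")
wire = json.dumps(payload)
print(wire)
```

Decoding works the same way in reverse: parse the outer document, then parse the `templateJson` string to get the template back.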

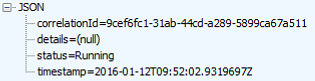

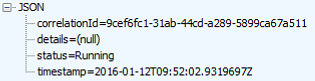

The response you get back will give you details about the provisioning of the API App, including a CorrelationId you can use to check progress.

This is a snippet of the response:

    "mode": "Incremental",
    "provisioningState": "Accepted",
    "timestamp": "2016-01-12T22:55:41.6680867Z",
    "duration": "PT0.4716789S",
    "correlationId": "30f8ddc6-a44d-4afc-9b03-9c2645c569b4",

- Checking the status of the deployment

The portal then issues multiple calls to check the status of the deployment.

A GET request is made to this URL:

https://portal.azure.com/AzureHubs/api/deployments?subscriptionId=(subid)&resourceGroupName=(resource group name)&deploymentName=(api app name)&_=1452592189872

This will return a response like this:

Once the deployment has finished, it will change to this:

And if it fails, you’ll get a failure response.

This is how the portal knows to show you a tile with a “deploying” animation on it, and how it knows to stop showing that when the deployment has finished.
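If you are scripting this, the same check-until-done behaviour is a simple polling loop. The sketch below is deliberately generic: `fetch_state` is a hypothetical callable that you would implement to issue the GET above and return the provisioning state, and the terminal state names are an assumption (only “Accepted” appears in the captured snippet).

```python
import time


def wait_for_deployment(
    fetch_state,
    interval_seconds=5.0,
    max_polls=60,
    terminal_states=("Succeeded", "Failed", "Canceled"),  # assumed state names
    sleep=time.sleep,
):
    """Call fetch_state() until it returns a terminal state, then return that state."""
    state = None
    for attempt in range(max_polls):
        state = fetch_state()
        if state in terminal_states:
            return state
        # Wait between polls, but not after the final attempt.
        if attempt < max_polls - 1:
            sleep(interval_seconds)
    raise TimeoutError(f"deployment still {state!r} after {max_polls} polls")


# Demo with canned states instead of real HTTP calls.
canned = iter(["Accepted", "Running", "Succeeded"])
result = wait_for_deployment(lambda: next(canned), sleep=lambda seconds: None)
print(result)
```

Passing `sleep` in as a parameter keeps the loop easy to test, and the `max_polls` limit stops a stuck deployment from blocking a script forever.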

- Bonus: Finding recommended items in Azure

I noticed this little request whilst looking through the calls that the portal makes.

A GET request is made to this URL:

https://recommendationsvc.azure.com/Recommendations/ListFrequentlyBoughtTogetherProducts?api-version=2015-11-01

This will return a response like this:

This appears to be a way for the portal to recommend other items that you might be interested in. I haven’t noticed this functionality before!

And that’s it – there are really only two steps involved: get the deployment template, and then deploy it.

All the rest is useful if you’re creating a UI where a user can select from a list of existing Resource Groups and ASPs and Locations etc., but not necessary if you already have all that information or are creating new ASPs and Resource Groups.

The techniques I showed here can be used for lots of different activities in the portal – you don’t have to wait until Microsoft creates .NET management libraries or publishes details of management APIs; you can go find out how to do it yourself. Go get to it!

I’m hoping this has all been useful.