This post was originally published here

Missed Day 1 at INTEGRATE 2018? Here’s the recap of the Day 1 events.

Missed Day 2 at INTEGRATE 2018? Here’s the recap of the Day 2 events.

0815 — Once again, it was an early start but a good number of attendees were ready to listen to Richard Seroter’s session.

Architecting highly available cloud integrations

Richard Seroter started his talk by discussing how to build highly available cloud integrations. He clearly conveyed the message that if a solution built on multiple cloud services has a problem, the responsibility lies not with the individual services but with the solution architecture. He suggested following practices such as chaos testing, in which you randomly turn off a few services and observe how the solution behaves.

Core patterns

Richard started off with some of the core patterns for a highly available solution.

Handling transient failures: services commonly have temporary hiccups such as network or database issues. Our solution needs to be designed to handle such scenarios.
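As a rough illustration of handling transient failures, here is a minimal Python sketch of retrying with capped exponential backoff and random jitter. It assumes the caller can tell transient errors apart from permanent ones, modelled by a hypothetical `TransientError`; the attempt count and delays are illustrative values, not figures from the session. Many client SDKs ship configurable retry policies, so check those before rolling your own.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling, dropped connections)."""


def call_with_retry(operation, max_attempts=5, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call `operation`, retrying only TransientError with capped exponential backoff and full jitter.

    Any other exception type is treated as permanent and propagates immediately.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller deal with it
            # Backoff window doubles per attempt (0.5s, 1s, 2s, ...) and is capped at max_delay;
            # sleeping a random fraction of it keeps many clients from retrying in lockstep.
            window = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, window))


attempts = {"count": 0}


def flaky_service():
    # Hypothetical service that fails twice before succeeding.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("temporary network hiccup")
    return "ok"


# The no-op sleep just keeps the demo fast.
print(call_with_retry(flaky_service, sleep=lambda seconds: None))  # prints: ok
```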

Load balancing: scale out via more instances. Build redundancy into compute, messaging, event streaming, data and networking, and use autoscaling.

Replicate your data: both transactional data and metadata. Consider both reads and writes, cross-region replication and disaster recovery.

Throttle some of your users: one user’s load can impact all other integration tenants. Reject requests or return lower-quality results, and do it in a transparent way.
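One common way to throttle per tenant is a token bucket. The Python sketch below is an illustration under assumptions, not something shown in the session: `TenantThrottle`, its `rate` and `capacity` parameters and the tenant IDs are hypothetical, and the clock is injectable so the behaviour can be checked deterministically.

```python
import time


class TenantThrottle:
    """Per-tenant token bucket: each tenant may make `rate` calls per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}  # tenant_id -> (tokens_left, time_of_last_update)

    def allow(self, tenant_id):
        now = self.clock()
        # A tenant seen for the first time starts with a full bucket.
        tokens, last = self.buckets.get(tenant_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self.buckets[tenant_id] = (tokens, now)
        return allowed


# Usage with a fake clock so the behaviour is deterministic.
fake_now = [0.0]
throttle = TenantThrottle(rate=1.0, capacity=2, clock=lambda: fake_now[0])
print([throttle.allow("noisy-tenant") for _ in range(3)])  # [True, True, False]
print(throttle.allow("quiet-tenant"))  # True: other tenants have their own bucket
fake_now[0] = 1.0
print(throttle.allow("noisy-tenant"))  # True: one token refilled after one second
```

To keep throttling transparent, a rejected call would typically get an explicit signal such as HTTP 429 Too Many Requests (optionally with a `Retry-After` header) rather than a silent failure or a timeout.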

Introduce load leveling
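Load leveling usually means putting a queue (for example a Service Bus queue) between bursty producers and a backend that can only process a steady rate. The sketch below simulates that idea in plain Python, with an in-memory `deque` standing in for the broker and discrete ticks standing in for time; `level_load` and its parameters are hypothetical.

```python
from collections import deque


def level_load(arrivals_per_tick, drain_per_tick):
    """Simulate queue-based load leveling; return how many messages the backend handled per tick.

    `drain_per_tick` must be positive, otherwise the queue never empties.
    """
    queue = deque()
    processed_per_tick = []
    tick = 0
    # Keep running until all arrivals have been seen and the queue is drained.
    while tick < len(arrivals_per_tick) or queue:
        arrivals = arrivals_per_tick[tick] if tick < len(arrivals_per_tick) else 0
        # Producers enqueue as fast as they like...
        queue.extend(f"msg-{tick}-{i}" for i in range(arrivals))
        # ...but the backend never takes more than drain_per_tick messages per tick.
        handled = 0
        while queue and handled < drain_per_tick:
            queue.popleft()
            handled += 1
        processed_per_tick.append(handled)
        tick += 1
    return processed_per_tick


print(level_load([10, 0, 0, 1], drain_per_tick=3))  # [3, 3, 3, 2]
```

The burst of 10 messages never reaches the backend all at once; it is spread over several ticks, at the cost of extra latency for the messages at the back of the queue.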

Secure with least privilege, encryption and anti-DDoS protection

- access should be as restrictive as possible

- turn on encryption wherever possible

Configure for availability

What Azure Storage provides

- File, disk and blob storage

- Four replication options

- Encryption at rest

What we have to do

- Set replication option

- Create strategy for secondary storage

- Consider server-side or client-side encryption

What Azure SQL Database provides

- Highly available storage

- Ability to scale up or out

- Easily create read replicas

- Built-in backup and restore

- Includes threat detection

What we have to do

- Create replicas

- Decide when to scale horizontally or vertically

- Restore the database from backup

- Turn on threat detection

What Azure Cosmos DB provides

- 99.999% availability for reads

- Automatically partitions data and replicates it

- Support for multiple consistency levels

- Automatic and manual failover

What we have to do

- Define the partition key, throughput and replication policies

- Configure regions for reads and writes

- Choose the consistency level for the database

- Decide when to trigger a manual failover

What Azure Service Bus provides

- Resilience within a region

- Initiates throttling to prevent overload

- Automatic partitioning

- Offers geo-disaster recovery

What we have to do

- Select message retention time

- Choose to use partitioning

- Configure geo-disaster recovery

What Azure Event Hubs provides

- Handles massive ingest load

- Auto-inflate adds throughput units to meet need

- Supports geo-disaster recovery

What Azure Logic Apps provides

- In-region HA is built in

- Imposes limits on timeouts and message size

- Supports synchronizing B2B resources to another region

What we have to do

- Configure B2B resource synchronization

- Configure integration to highly available endpoints

- Duplicate logic app in other regions

What Azure Functions provides

- Scale underlying resources with consumption plan

- Scales VMs automatically

- Basic uptime SLA at this time

What we have to do

- Choose plan type

- Set scaling policies when using an App Service plan

- Replicate functions to other regions

What Azure VPN Gateway provides

- Deploys active-standby instances by default

- Runs as a managed service, and you never access the underlying virtual machines

What we have to do

- Resize as needed

- Configure redundant on-premises VPN devices

- Create an active-active VPN gateway configuration

To sum it all up

Richard finished his talk with a few key points:

- Only integrate with highly available endpoints

- Clearly understand which services fail over together

- Regularly perform chaos testing
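A toy Python sketch of the chaos-testing idea: randomly take down some of a set of redundant endpoints and check whether a request still gets through. The `Endpoint` class, the region names and the simple in-order failover are all hypothetical stand-ins; real chaos experiments run against deployed infrastructure, not in-process objects.

```python
import random


class Endpoint:
    """Hypothetical stand-in for one redundant deployment of a service (e.g. one region)."""

    def __init__(self, name):
        self.name = name
        self.up = True

    def handle(self, request):
        if not self.up:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


def send_with_failover(endpoints, request):
    """Try each redundant endpoint in turn; fail only if every one of them is down."""
    for endpoint in endpoints:
        try:
            return endpoint.handle(request)
        except ConnectionError:
            continue
    raise ConnectionError("all endpoints are down")


def chaos_experiment(endpoints, kill_count, rng, request="order-42"):
    """Randomly turn off `kill_count` endpoints, send a request, then restore them."""
    victims = rng.sample(endpoints, kill_count)
    for victim in victims:
        victim.up = False
    try:
        return send_with_failover(endpoints, request)
    finally:
        for victim in victims:
            victim.up = True  # always undo the damage, even if the request failed


endpoints = [Endpoint("primary-region"), Endpoint("secondary-region")]
print(chaos_experiment(endpoints, kill_count=1, rng=random.Random()))
```

Losing one of the two endpoints should still succeed; turning off both surfaces a `ConnectionError`, which is the kind of finding chaos testing is meant to produce before a real outage does.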

0900 — DevOps empowered by Microsoft Flow

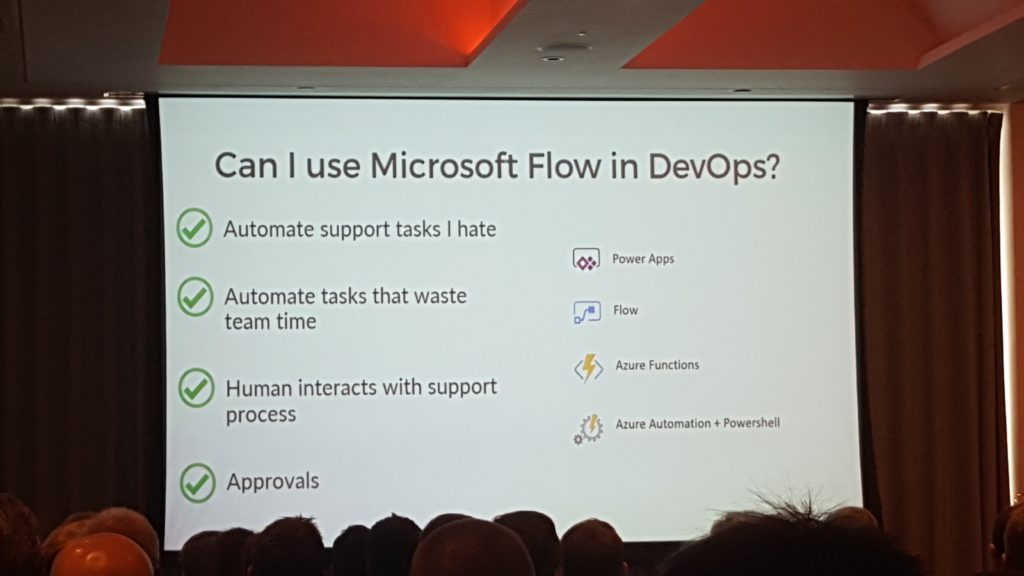

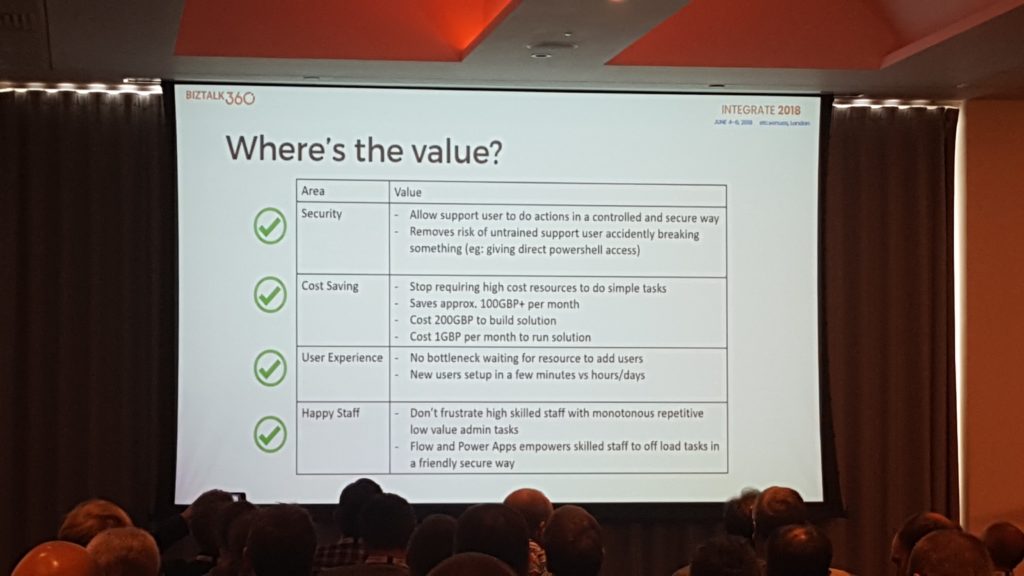

During the second session of the day, Michael Stephenson explained how Microsoft Flow can be used to simplify tasks that are tedious to execute and bring very little business value, e.g. user onboarding.

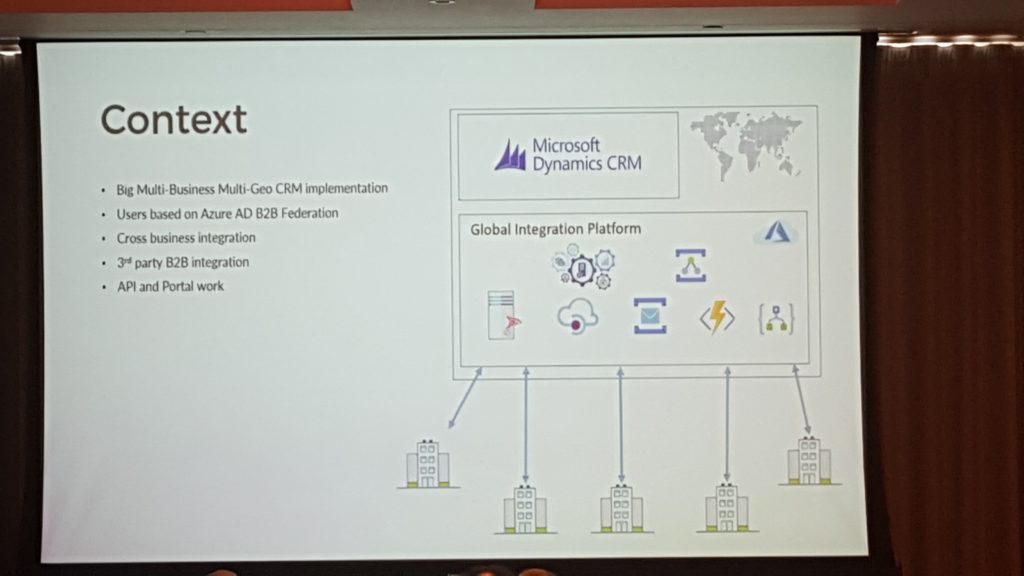

The presentation was based on a large multi-business, multi-geo CRM implementation in which multiple parties were involved in creating a new B2B user. The process consisted of running a PowerShell script over a CSV file to create the user in Azure AD and then in Dynamics CRM.

The problem was cross-team friction:

- Support user: does not have the skills to execute this process, and on top of that, these kinds of scripts require elevated permissions

- Admin: does not have time to perform this task because of their day-to-day admin tasks, and does not want the support user to accidentally delete the system.

Michael then explained how Microsoft Flow can be used to create a black box on top of the entire onboarding process, allowing the support user to execute it easily without any permissions on the underlying systems.

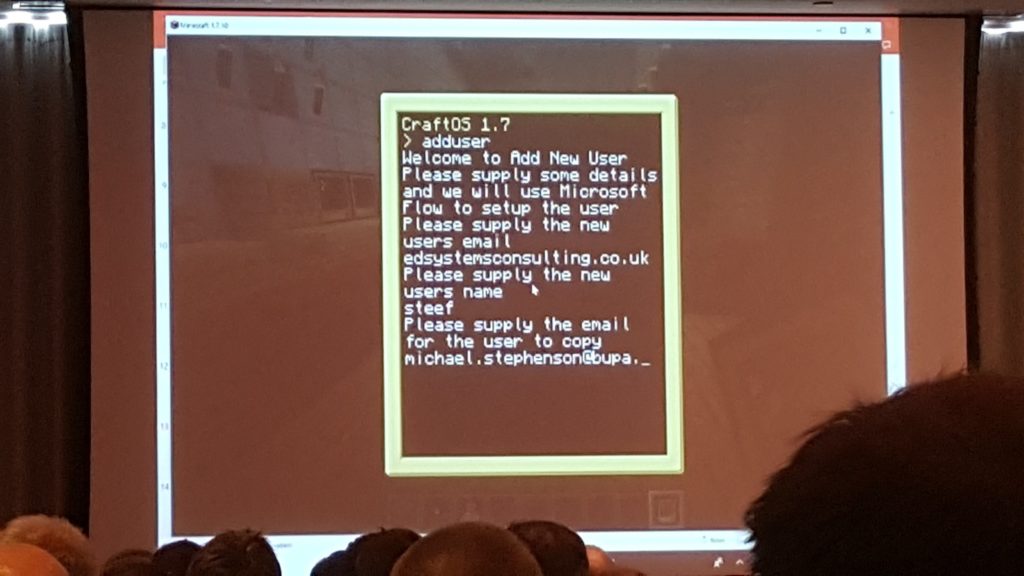

Then Michael, together with Steef-Jan, demonstrated Microsoft Flow in action within Minecraft. It seems the audience had really liked the demo he did a few years back, and everyone wanted more.

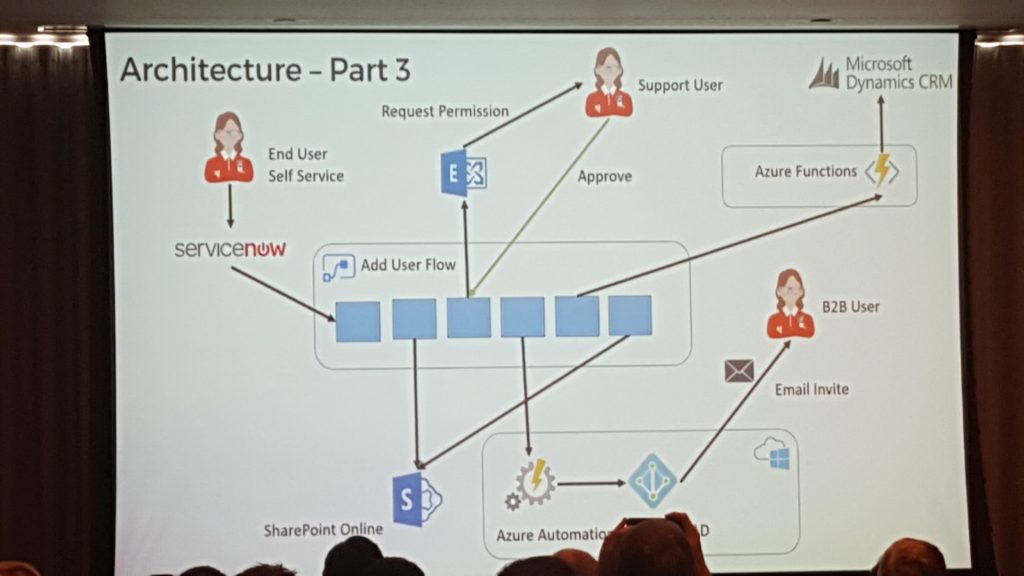

Next, he showed a diagram of how easily this process can be extended to remove the support user from the execution and fully automate it, by extracting the relevant information from the end user’s request email.

Lastly, Michael emphasised the importance of automating repetitive tasks within the organisation to improve efficiency and reduce the costs of performing tasks that do not bring business value.

0940 — Using VSTS to deploy to a BizTalk Server, what you need to know

In this session, Johan Hedberg showed us how easy it is to set up CI and CD for your BizTalk projects in VSTS using BizTalk Server 2016 Feature Pack 1. He showed us how to configure the BizTalk build project and how to create a build definition in VSTS that runs the build whenever a developer checks in code.

He stressed the importance of having automated unit tests and running them as part of your build pipeline. He also mentioned the advantages of using a custom agent instead of a hosted agent. Overall, the session was well received by the audience.

1050 — Exposing BizTalk Server to the World

During this session, Wagner explained and demonstrated how you can expose your BizTalk endpoints to the outside world using Azure services. Wagner emphasised that there are options such as email, queues and file exchange, but his session focused on HTTP endpoints.

The options which were demoed during the session included:

- Azure Relays

- Logic Apps

- Azure Function Proxies

- Azure API Management

Each of the available options was clearly explained, and Wagner provided detailed information on aspects such as security, message format and ease of implementation.

“Identify your needs” was the key message of the session. Wagner clearly explained that not every option will suit your requirements, as it all depends on what you want to achieve. For example, Logic Apps is a perfect option to extend your workflows, whereas relays are perfect for securely exposing on-premises services without the need to punch holes in your firewall.

1130 — Anatomy of an Enterprise Integration Architecture

In this session, Dan Toomey talked about the complexity of integration, especially when it comes to integrating a large number of applications. He talked about how we currently do integration, what problems we are trying to solve with it, and the areas in the integration space where we can do better.

He went on to explain how we can use Gartner’s pace layers, which include Systems of Record, Systems of Differentiation and Systems of Innovation, to define technology characteristics for integration scenarios.

For this to work, we need a solid system of record layer, which includes things like security, APIs, Service Fabric, etc. We should limit customization in this layer. On top of that, in the differentiation layer, we need systems like Logic Apps, which provide loosely coupled inter-layer communication, to take care of the integration needs.

On top of the differentiation layer, we need to allow room for innovation, for example with things like Cognitive Services, PowerApps, Microsoft Flow, etc.

1210 — Unlock the power of hybrid integration with BizTalk Server and webhooks!

Toon started his session by showing the difference between polling and using Webhooks. He pointed out that when polling, you are actually hammering an endpoint that might not (yet) have the data you requested. In many scenarios, Webhooks can be more efficient than polling. Toon gave an overview of both the advantages and the disadvantages of using Webhooks.

Advantages

- more efficient

- faster

- no client side state

- provides extensibility

Disadvantages

- not standardized

- extra responsibilities for both client and server

- considered a black box

After giving a few examples of solutions that use Webhooks (GitHub, TeamLeader, Azure Monitoring), Toon went on to a number of design considerations for Webhooks: give your Webhooks clear, descriptive names and use a consistent naming convention for ease of use. Also make sure that consumers can register and unregister for your Webhooks via a user interface or an API.

From a Publisher perspective, you should take care of the following:

- reliability (deliver asynchronously, not synchronously)

- security (use HTTPS)

- validity (check accessibility at registration)
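The publisher-side considerations above (asynchronous delivery, HTTPS only, validation at registration, plus register/unregister support) can be sketched in Python roughly as follows. Everything here is hypothetical: `WebhookPublisher`, the injected `post` and `ping` callables and the event names are illustrative, and a real publisher would persist its subscriptions and outbox rather than keep them in memory.

```python
from collections import deque
from urllib.parse import urlparse


class WebhookPublisher:
    """Hypothetical in-memory Webhook publisher: validated registration plus queued, retried delivery."""

    def __init__(self, post, max_attempts=3):
        self.post = post  # injected transport: post(url, payload) -> True if the consumer accepted it
        self.max_attempts = max_attempts
        self.subscriptions = {}  # event name -> set of callback URLs
        self.outbox = deque()  # pending deliveries as (url, payload, attempts_so_far)

    def register(self, event, url, ping):
        # Security: only accept HTTPS callbacks.
        if urlparse(url).scheme != "https":
            raise ValueError("callback URL must use HTTPS")
        # Validity: check the endpoint is reachable before accepting the subscription.
        if not ping(url):
            raise ValueError("callback URL is not reachable")
        self.subscriptions.setdefault(event, set()).add(url)

    def unregister(self, event, url):
        self.subscriptions.get(event, set()).discard(url)

    def publish(self, event, payload):
        # Reliability: enqueue instead of calling consumers inline, so a slow
        # or failing consumer cannot block the code that raised the event.
        for url in sorted(self.subscriptions.get(event, set())):
            self.outbox.append((url, payload, 0))

    def deliver_pending(self):
        """Meant to run on a background worker: try each queued delivery once, requeue failures."""
        delivered = []
        for _ in range(len(self.outbox)):
            url, payload, attempts = self.outbox.popleft()
            if self.post(url, payload):
                delivered.append(url)
            elif attempts + 1 < self.max_attempts:
                self.outbox.append((url, payload, attempts + 1))
            # Otherwise the delivery is dropped; a real system might dead-letter it.
        return delivered


def fake_post(url, payload):
    print(f"POST {url} {payload}")
    return True


publisher = WebhookPublisher(fake_post)
publisher.register("contact.created", "https://consumer.example/hooks/contacts", ping=lambda url: True)
publisher.publish("contact.created", {"id": 1})  # nothing is sent yet
publisher.deliver_pending()  # prints: POST https://consumer.example/hooks/contacts {'id': 1}
```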

Consumers of Webhooks should also take reliability and security into account, as well as high availability, scalability and the sequencing of Webhook events.
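On the consumer side, sequencing and reliability often come down to reordering events and ignoring duplicates caused by publisher retries. The Python sketch below assumes each event carries a publisher-assigned, gap-free sequence number in a hypothetical `seq` field; since Webhooks are not standardized, real payloads may carry nothing of the kind.

```python
class OrderedConsumer:
    """Hypothetical Webhook consumer that processes events in sequence order, exactly once each."""

    def __init__(self, handler, next_seq=1):
        self.handler = handler
        self.next_seq = next_seq  # the sequence number we expect to process next
        self.pending = {}  # early arrivals, keyed by sequence number

    def receive(self, event):
        seq = event["seq"]
        if seq < self.next_seq or seq in self.pending:
            return  # duplicate delivery (e.g. a publisher retry): already processed or buffered
        self.pending[seq] = event
        # Release every buffered event that is now next in line.
        while self.next_seq in self.pending:
            self.handler(self.pending.pop(self.next_seq))
            self.next_seq += 1


handled = []
consumer = OrderedConsumer(handled.append)
for seq in [2, 1, 1, 4, 3]:  # out of order, with one duplicate
    consumer.receive({"seq": seq, "contact": f"contact-{seq}"})
print([event["seq"] for event in handled])  # [1, 2, 3, 4]
```

The trade-off is that one lost event stalls everything after it, so a real consumer would also need a timeout or a way to fetch the missing event from the publisher.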

Toon also showed some demos of how to manage Webhooks with BizTalk and Event Grid, and how to synchronize contacts and documents.

1340 — Refining BizTalk implementations

The last session of the event was delivered by Mattias Logdberg. Using a use case, he explained how you could start with a basic BizTalk scenario, in which a web shop was integrated with an ERP system through BizTalk, and then, driven by business needs, introduce all kinds of Azure technologies to end up with an innovative solution that enabled many more possibilities.

He mentioned that business needs often conflict with IT needs; as the business keeps asking for more and more capabilities, it can be hard for IT departments to keep up with the pace.

Mattias started by drawing the basic scenario, which involved the web shop, BizTalk and the ERP system, and showed how it could be greatly improved by using Azure technologies like Service Bus topics, API Management, DocumentDB, etc. The monolithic application was turned into a loosely coupled solution with many more capabilities. The bottom line, as Mattias pointed out, was that this process removed unnecessary complexity and replaced the previously existing complex framework with a set of micro functions, giving the business more flexibility to develop further.

After the last session, it was time for an open Q&A session with the Microsoft Product Group. The discussion spanned different areas such as BizTalk Server, Logic Apps and Microsoft Flow, and was an engaging one.

With that, it was time to wrap up what had been a great 3 days at INTEGRATE 2018.

Check out the pictures captured by our event photographer Tariq Sheikh here —

Thanks to the following people for helping me collate this blog post:

- Umamaheswaran Manivannan

- Lex Hegt

- Srinivasa Mahendrakar

- Daniel Szweda