by Steef-Jan Wiggers | Sep 2, 2017 | BizTalk Community Blogs via Syndication

A few weeks ago the Azure Event Grid service became available in preview. This service enables centralized management of events in a uniform way. It scales with you when the number of events increases, which is made possible by the foundation Event Grid relies on: Service Fabric. Not only does it auto-scale, you also do not have to provision anything besides an Event Topic to support custom events (see my blog Routing an Event with a custom Event Topic). Event Grid is serverless: you only pay for each operation (ingress events, advanced matches, delivery attempts, management calls). Moreover, the price is 30 cents per million operations in preview, and will be 60 cents once the service is GA.
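As a rough illustration of the pay-per-operation model, the sketch below estimates a bill from the two rates quoted in this post. The function name and the monthly operation count are made up for the example, and the calculation ignores any free grant or later price change.

```python
# Rates quoted in this post, in US dollars per million operations.
PREVIEW_RATE = 0.30
GA_RATE = 0.60


def estimate_cost(operations: int, rate_per_million: float) -> float:
    """Estimated charge in dollars for a number of billable operations."""
    return operations / 1_000_000 * rate_per_million


# Hypothetical workload: 5 million operations in a month.
print(estimate_cost(5_000_000, PREVIEW_RATE))
print(estimate_cost(5_000_000, GA_RATE))
```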

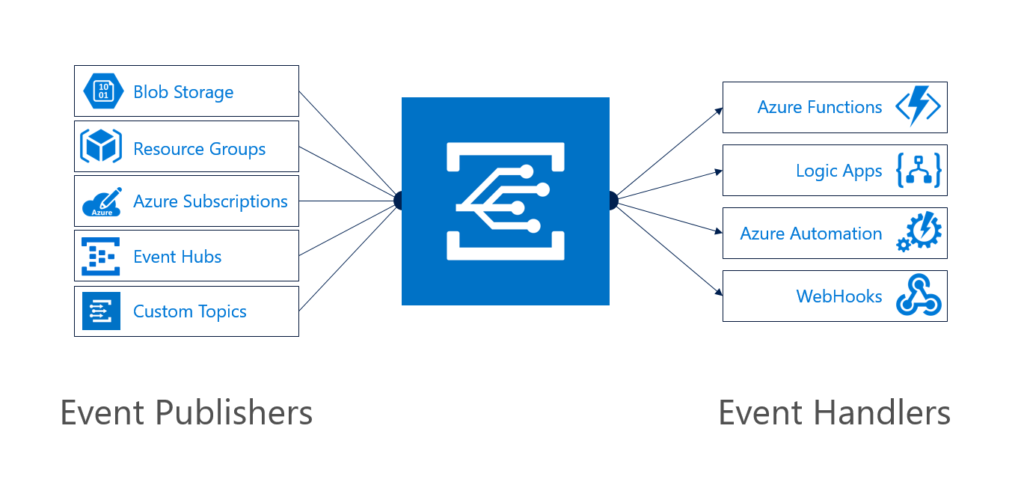

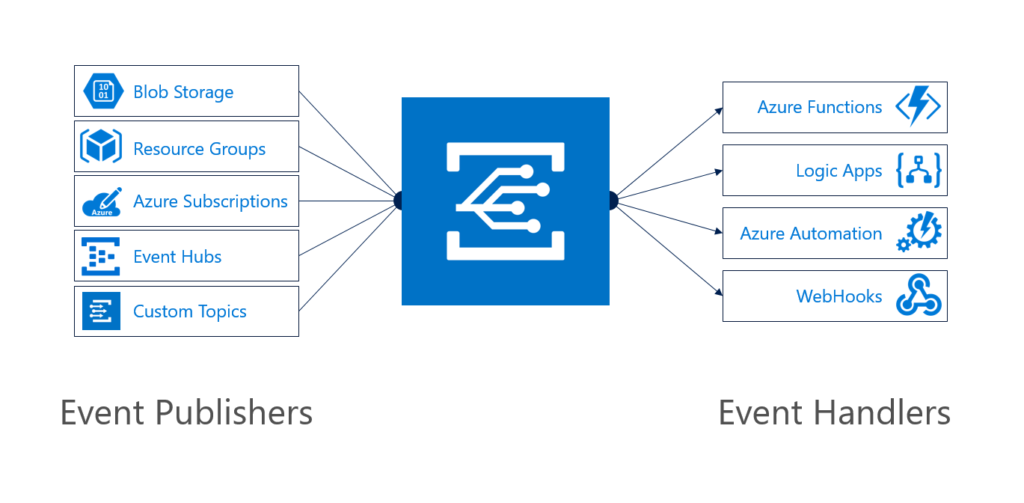

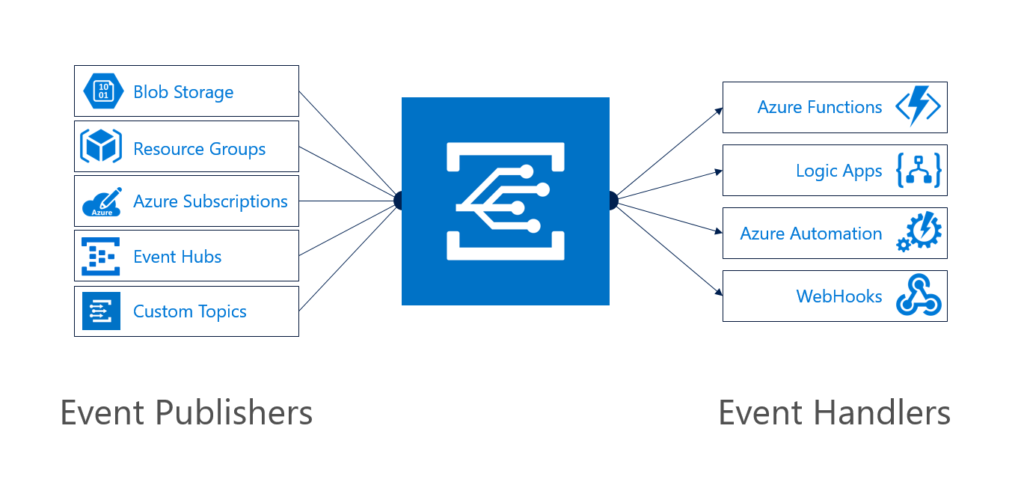

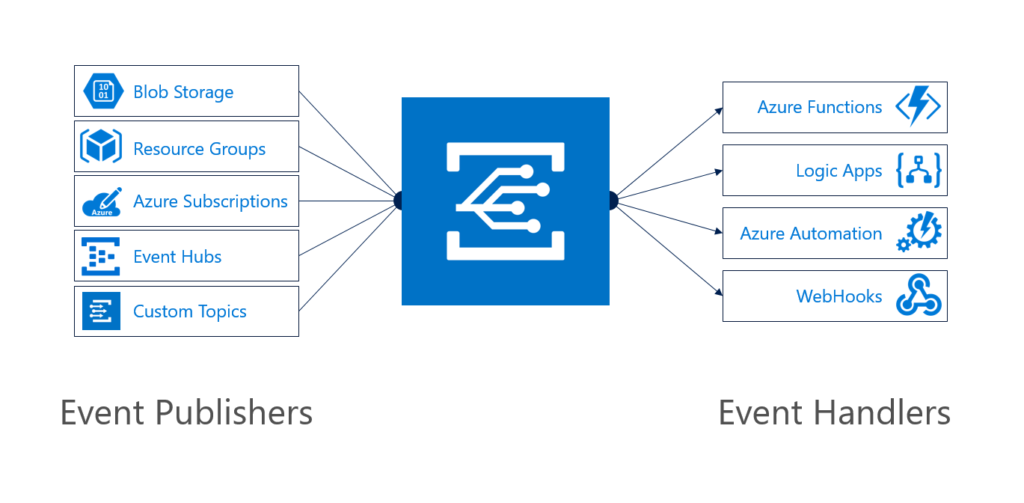

Azure Event Grid can be described as an event broker that has one or more event publishers and subscribers. Event publishers are currently Azure Blob Storage, resource groups, subscriptions, Event Hubs and custom events. More will be added in the coming months, like IoT Hub, Service Bus, and Azure Active Directory. On the other side there are consumers of events (subscribers) like Azure Functions, Logic Apps, and WebHooks. More will be added on the subscriber side too, with Azure Data Factory, Service Bus and Storage Queues for instance.

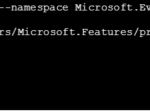

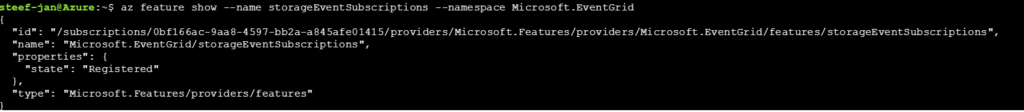

Azure Event Grid Storage registration

Currently, to capture Azure Blob Storage events you need to register your subscription through a preview program. Once your subscription is registered, which can take a day or two, you can leverage Event Grid in Azure Blob Storage, but only in West Central US!

The Microsoft documentation on Event Grid has a section “Reacting to Blob storage events”, which contains a walkthrough to try out the Azure Blob Storage as an event publisher.

Azure Event Grid Storage Account Events Scenario

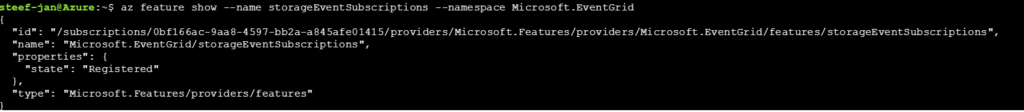

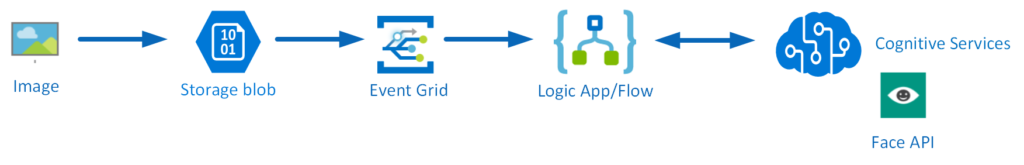

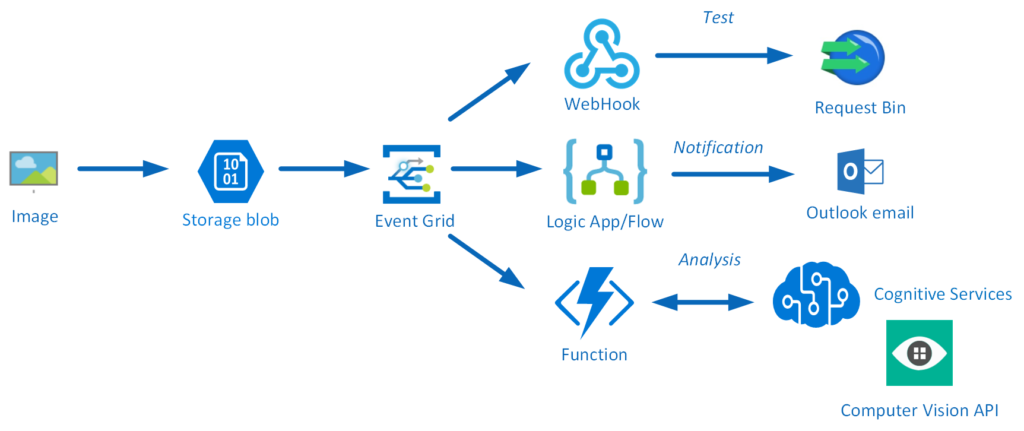

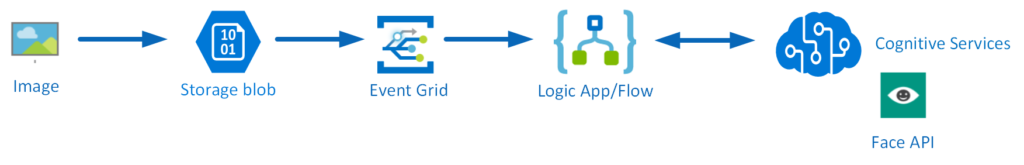

Having registered my subscription for the preview program, I started exploring its capabilities, since the Event Grid landing page explains a number of sample scenarios. I wanted to try out the serverless architecture sample, where Event Grid instantly triggers a serverless function to run image analysis each time a new photo is added to a blob storage container. Hence, I built a demo according to the diagram below.

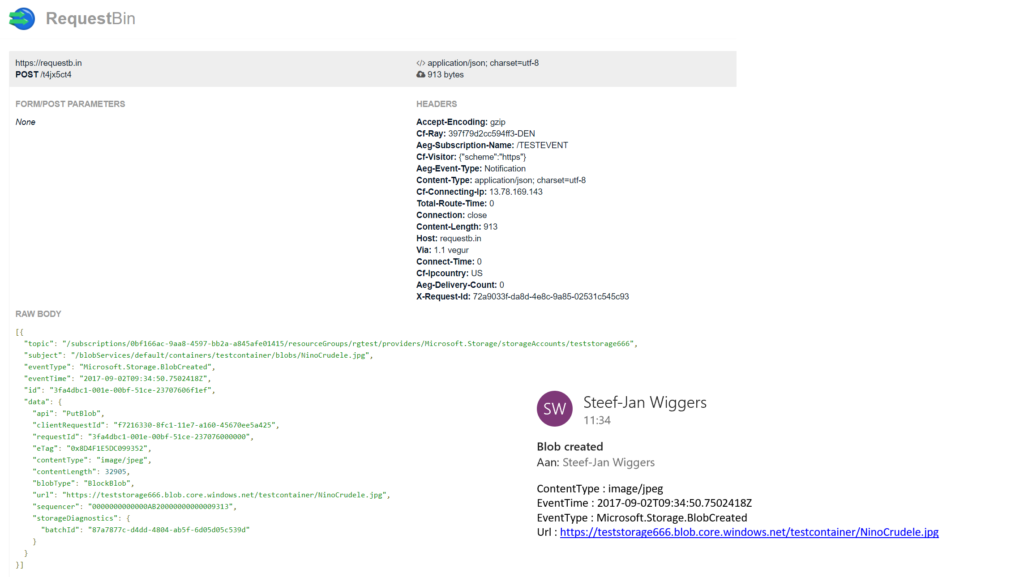

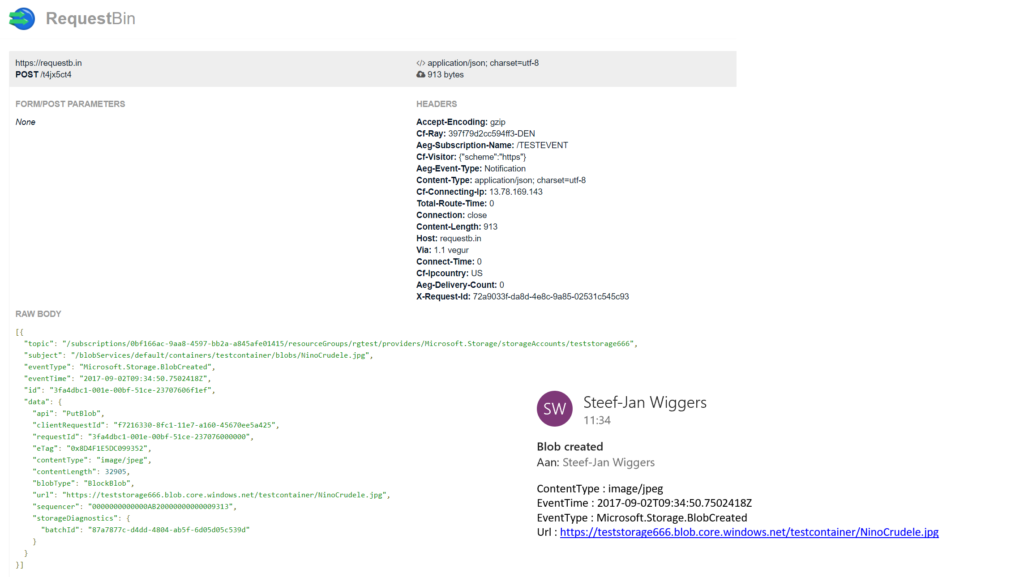

An image is uploaded to a Storage blob container, which is the event source (publisher). The Storage blob container belongs to a Storage Account with the Event Grid capability. The Event Grid has three subscribers: a WebHook (Request Bin) to capture the output of the event, a Logic App to notify me that a blob has been created, and an Azure Function that analyzes the image created in blob storage by extracting the URL from the event and using it to analyze the actual image.

Intelligent routing

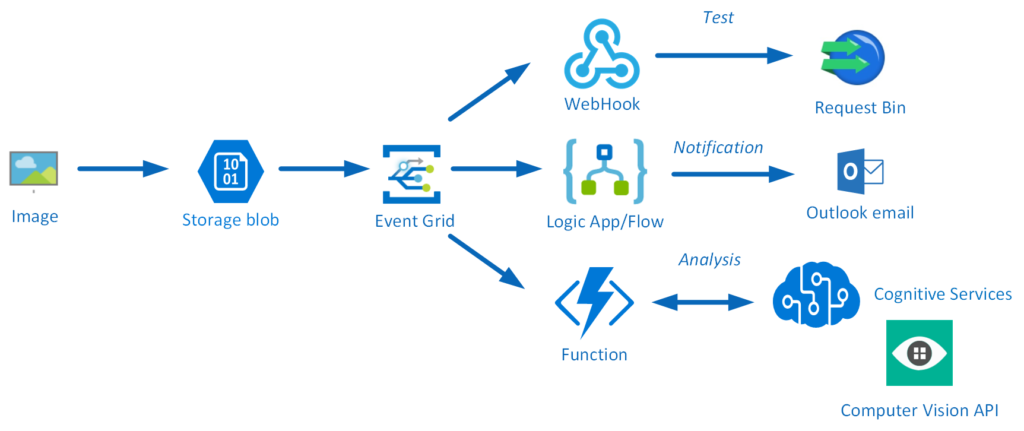

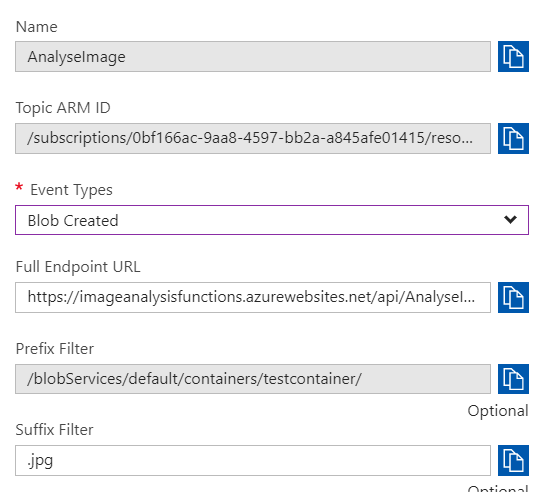

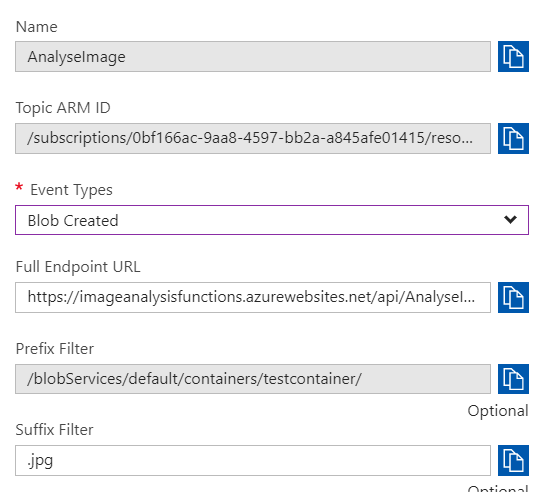

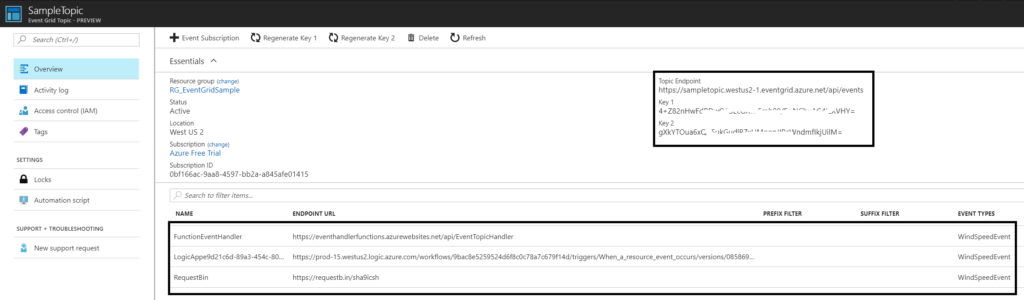

The screenshot below depicts the subscriptions to the events on the Blob Storage account. The WebHook subscribes to every event, while the Logic App and Azure Function are only interested in the BlobCreated event, in a particular container (prefix filter) and of a particular type (suffix filter).

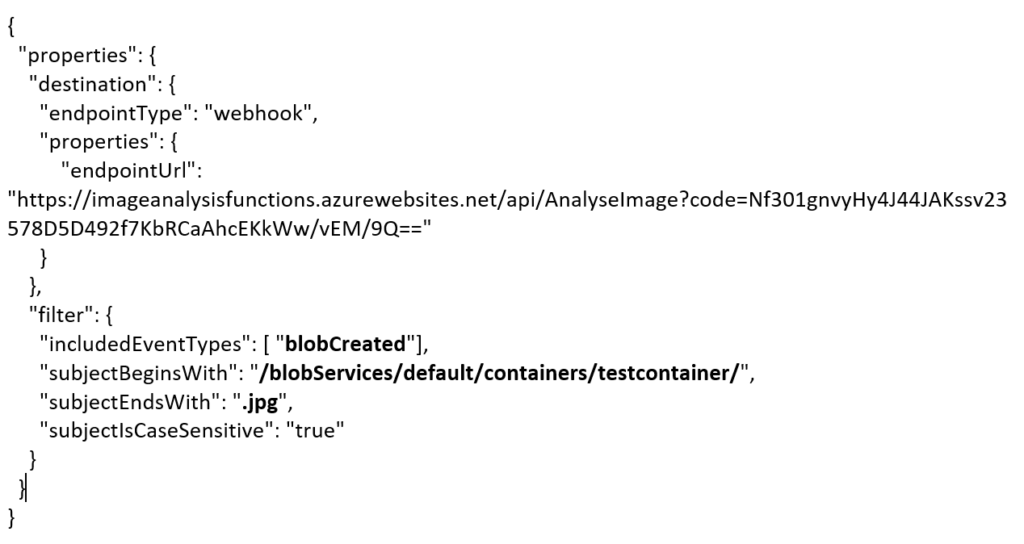

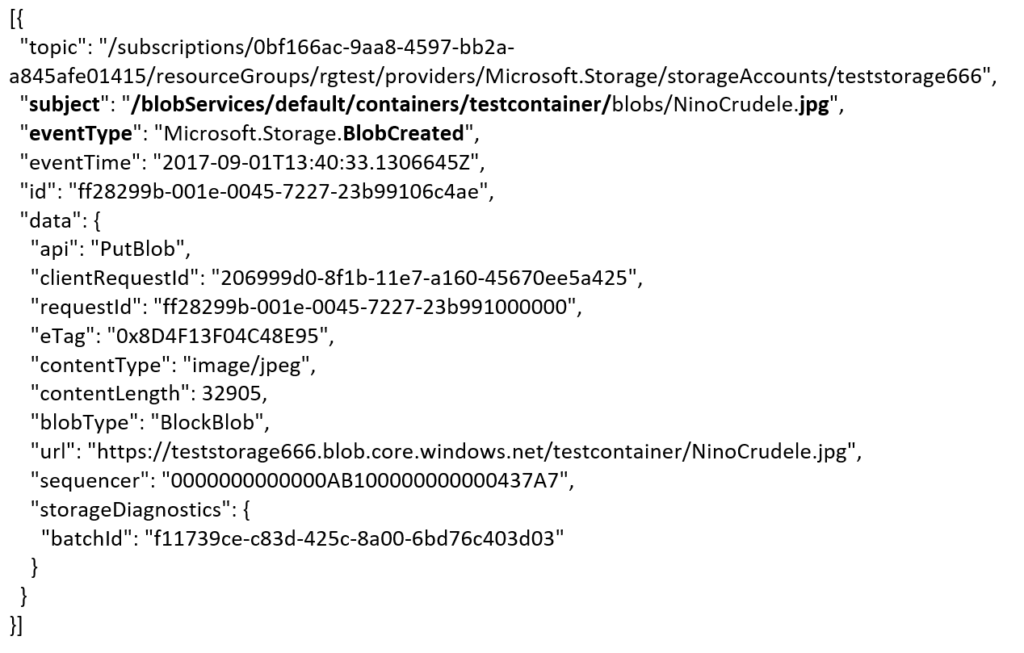

Besides being centrally managed, Event Grid offers intelligent routing, which is its core feature. You can use filters on event type or on subject pattern (prefix and suffix). The filters are intended for subscribers to indicate what type of event and/or subject they are interested in. In our scenario the event subscription for the Azure Function is as follows.

- Event Type : Blob Created

- Prefix : /blobServices/default/containers/testcontainer/

- Suffix : .jpg

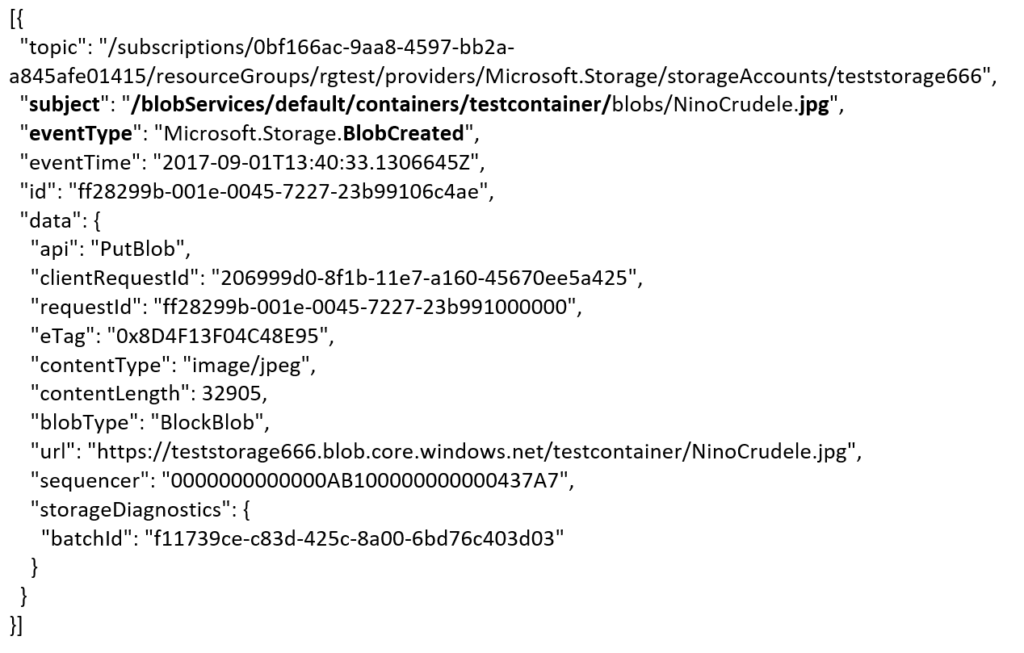

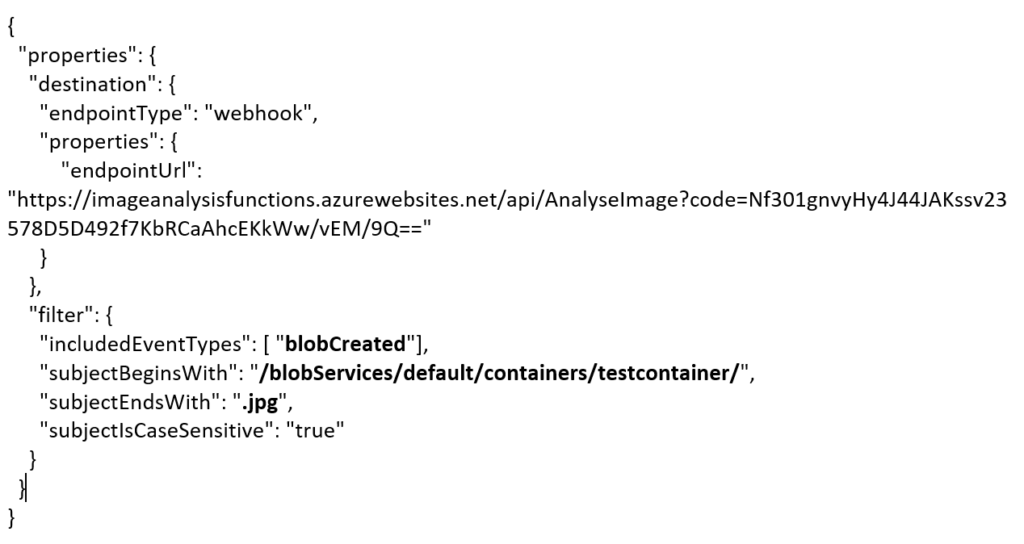

The prefix is set through the subjectBeginsWith property of the filter object and is matched against the beginning of the subject field in the event. The suffix is set through subjectEndsWith and is matched against the end of the same subject. In the event above you can see that the subject has the specified prefix and suffix. See also Event Grid subscription schema in the documentation, which explains the properties of the subscription schema. The subscription schema of the function is as follows:

The Azure Function is only interested in a Blob Created event with a particular subject and content type (image .jpg). And this will be apparent once you inspect the incoming event to the function.

The same intelligence applies to the Logic App, which is interested in the same event. The WebHook subscribes to all events and has no filters.
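To make the filter behaviour concrete, here is a minimal Python sketch of how an event type, prefix and suffix filter could be evaluated against an event. It is not Event Grid's implementation: the function name `matches`, its parameters and the sample event are assumptions for illustration, and the comparison is case-sensitive for simplicity.

```python
def matches(event: dict, event_types=None, prefix: str = "", suffix: str = "") -> bool:
    """Return True when the event passes the event type and subject filters."""
    # An empty or missing event type list means "all event types".
    if event_types and event.get("eventType") not in event_types:
        return False
    subject = event.get("subject", "")
    # Empty prefix/suffix strings match every subject.
    return subject.startswith(prefix) and subject.endswith(suffix)


# Hand-written sample event, shaped like the BlobCreated event in this scenario.
event = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/testcontainer/blobs/NinoCrudele.jpg",
}

# Filter settings of the Azure Function subscription described above.
function_filter = {
    "event_types": ["Microsoft.Storage.BlobCreated"],
    "prefix": "/blobServices/default/containers/testcontainer/",
    "suffix": ".jpg",
}

print(matches(event, **function_filter))  # Function and Logic App subscription
print(matches(event))                     # WebHook subscription without filters
```

A subscriber without filters, like the WebHook, accepts everything, while the Function only sees .jpg blobs created in testcontainer.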

The scenario solution

The solution consists of a storage account (blob), a subscription registered for Event Grid on Azure Storage, Request Bin (WebHook), a Logic App and a Function App containing a function. The Logic App and Azure Function subscribe to the BlobCreated event with the filter settings.

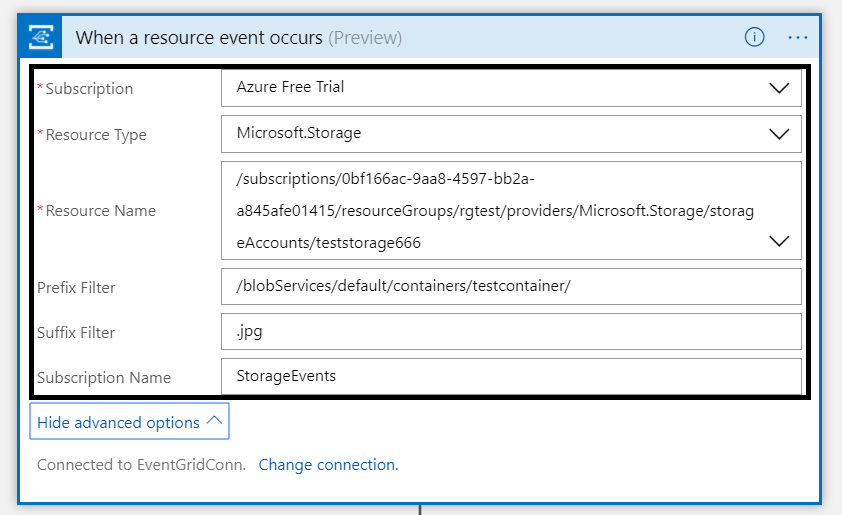

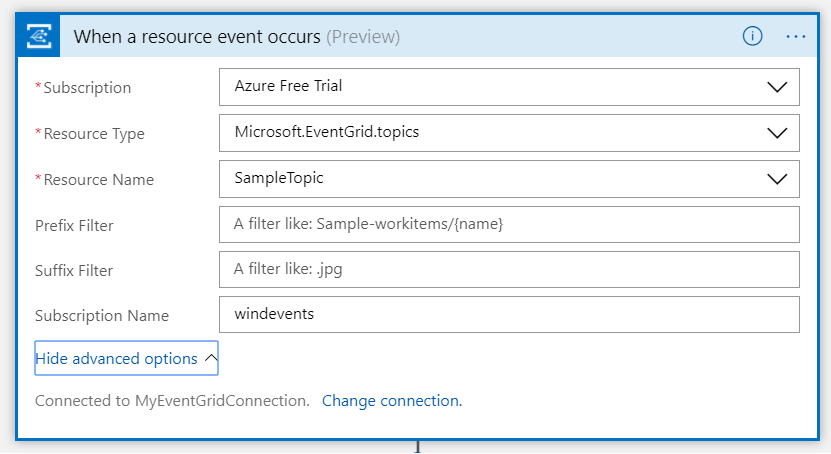

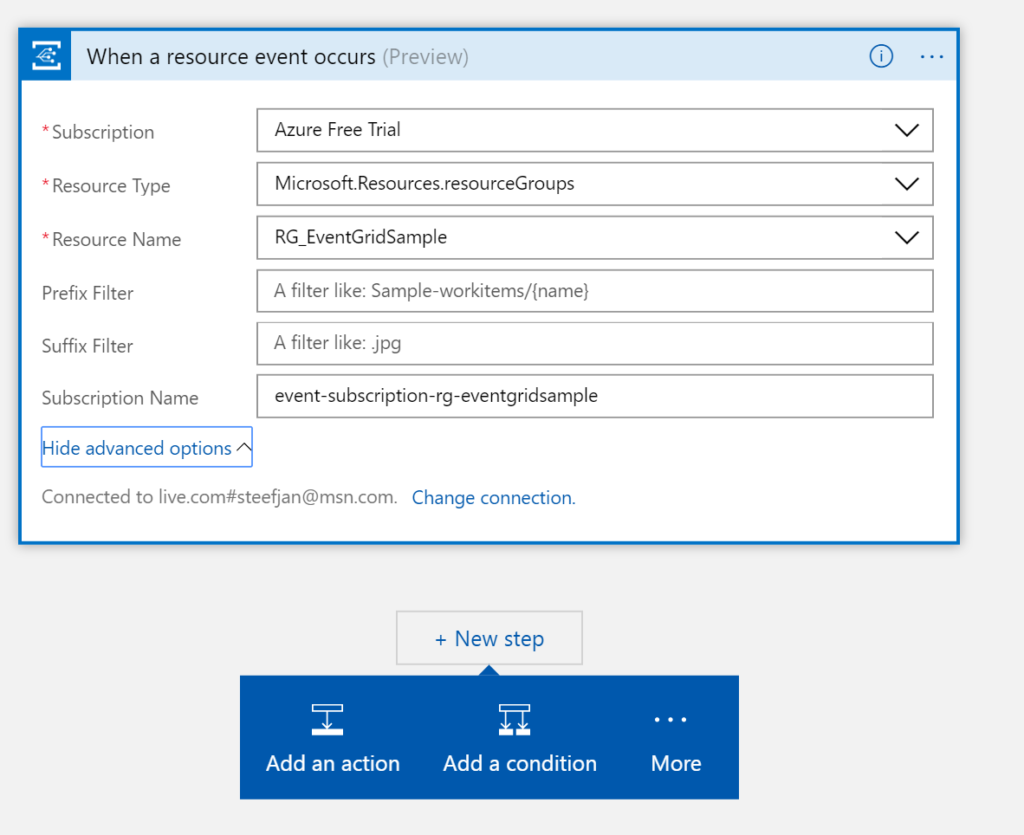

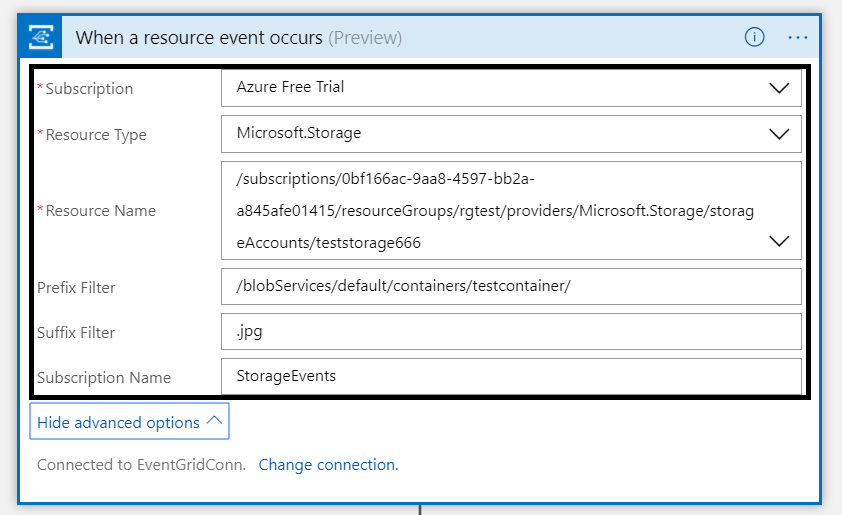

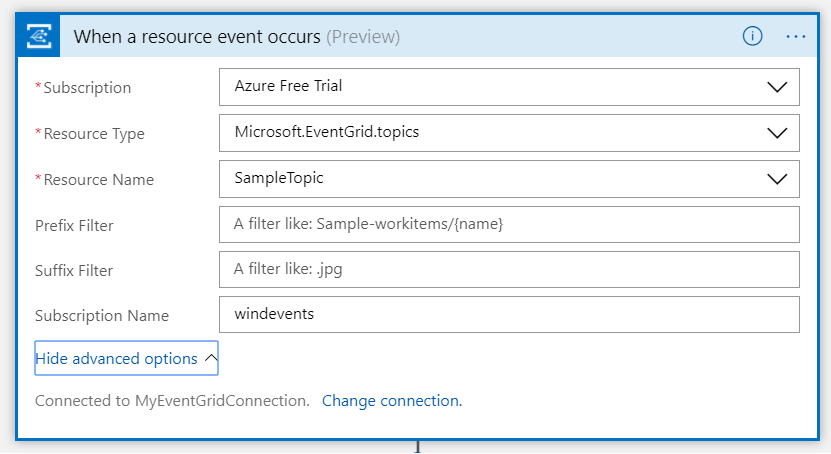

The Logic App subscribes to the event once the trigger action is defined. The definition is shown in the picture below.

Note that the resource name has to be specified explicitly (custom value), and the resource type Microsoft.Storage has to be set explicitly too. The resource types that are listed are Resource Groups, Subscriptions, Event Grid Topics and Event Hub Namespaces, as Storage is still in a preview program. With this configuration the desired events can be evaluated and processed. In the case of the Logic App, it parses the event and sends an email notification.

Azure Function Storage Event processing

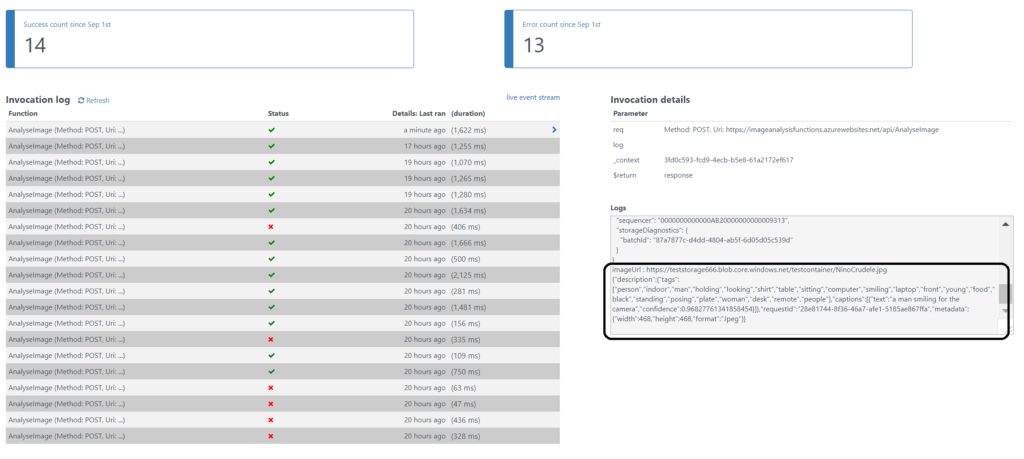

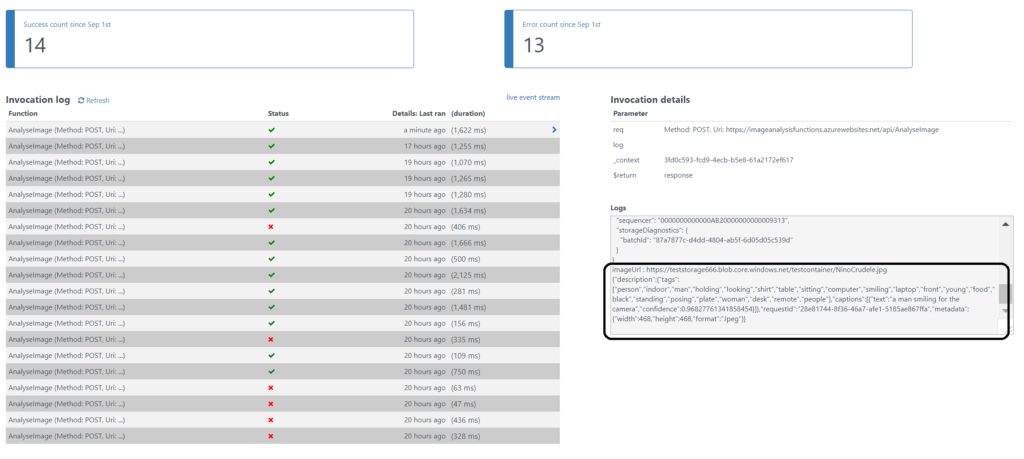

The Azure Function is interested in the same event. As soon as a blob has been created and the event is pushed to Event Grid, the function will process it. The URL in the event, https://teststorage666.blob.core.windows.net/testcontainer/NinoCrudele.jpg, will be used to analyze the image. The image is a picture of my good friend Nino Crudele.

This image will be streamed from the function to the Cognitive Services Computer Vision API. The result of the analysis can be viewed in the monitor tab of the Azure Function.

The analysis reports, with confidence, that Nino is smiling for the camera. The Logic App will parse the event and send an email. The Request Bin will show the raw event. And if I delete the blob, this will only be captured by the WebHook (Request Bin), as it is interested in any event on the Storage account.
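The step where the function extracts the URL from the event can be sketched in Python as follows. This is only an illustration, not the code of my function: it assumes the events arrive as a JSON array and that the blob URL sits in the url property of the data object, as in the published Blob storage event schema. The helper name `image_urls` and the trimmed sample event are hypothetical.

```python
import json


def image_urls(raw_body: str) -> list:
    """Collect the blob URLs of all BlobCreated events in one delivery."""
    events = json.loads(raw_body)
    return [
        event["data"]["url"]
        for event in events
        if event.get("eventType") == "Microsoft.Storage.BlobCreated"
    ]


# Trimmed, hand-written sample; a real event carries more fields.
body = json.dumps([{
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/testcontainer/blobs/NinoCrudele.jpg",
    "data": {"url": "https://teststorage666.blob.core.windows.net/testcontainer/NinoCrudele.jpg"},
}])

for url in image_urls(body):
    # This URL is what would be handed to the image analysis call.
    print(url)
```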

Summary

Azure Event Grid is unique in its kind, as no other cloud vendor has this type of service that can handle events in a uniform and serverless way. It is still early days, as the service has been in preview for only a few weeks. However, with the expansion of event publishers and subscribers, management capabilities and other features, it will mature in the next couple of months. The service is currently only available in West Central US and West US, yet over the course of time it will become available in every region. And once it becomes GA the price will increase.

Working with a Storage Account as the source (publisher) of events unlocked new insights into the Event Grid mechanisms. Moreover, it shows the benefits of having a service for events that is managed by Azure. The pub-sub model and the push of events are the key differentiators compared to the other two services, Service Bus and Event Hubs. No longer do you have to poll for events and/or develop a solution for it. To conclude, the Service Bus Team has completed the picture for messaging and event handling.

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field combining it with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 7 years.

by Steef-Jan Wiggers | Aug 27, 2017 | BizTalk Community Blogs via Syndication

Summer holidays are over and it is back to work. A few weeks back in the trenches, I have learned a lot more about Azure Cosmos DB, Azure Search and the latest addition to the platform, Event Grid.

Month August

Microsoft launched a new service, Event Grid, to support serverless events with intelligent routing and to provide uniform event consumption using a pub-sub model (similar to the pub-sub we know from BizTalk Server). Like some other integration-minded folks, I have written three blog posts about the service on my own blog:

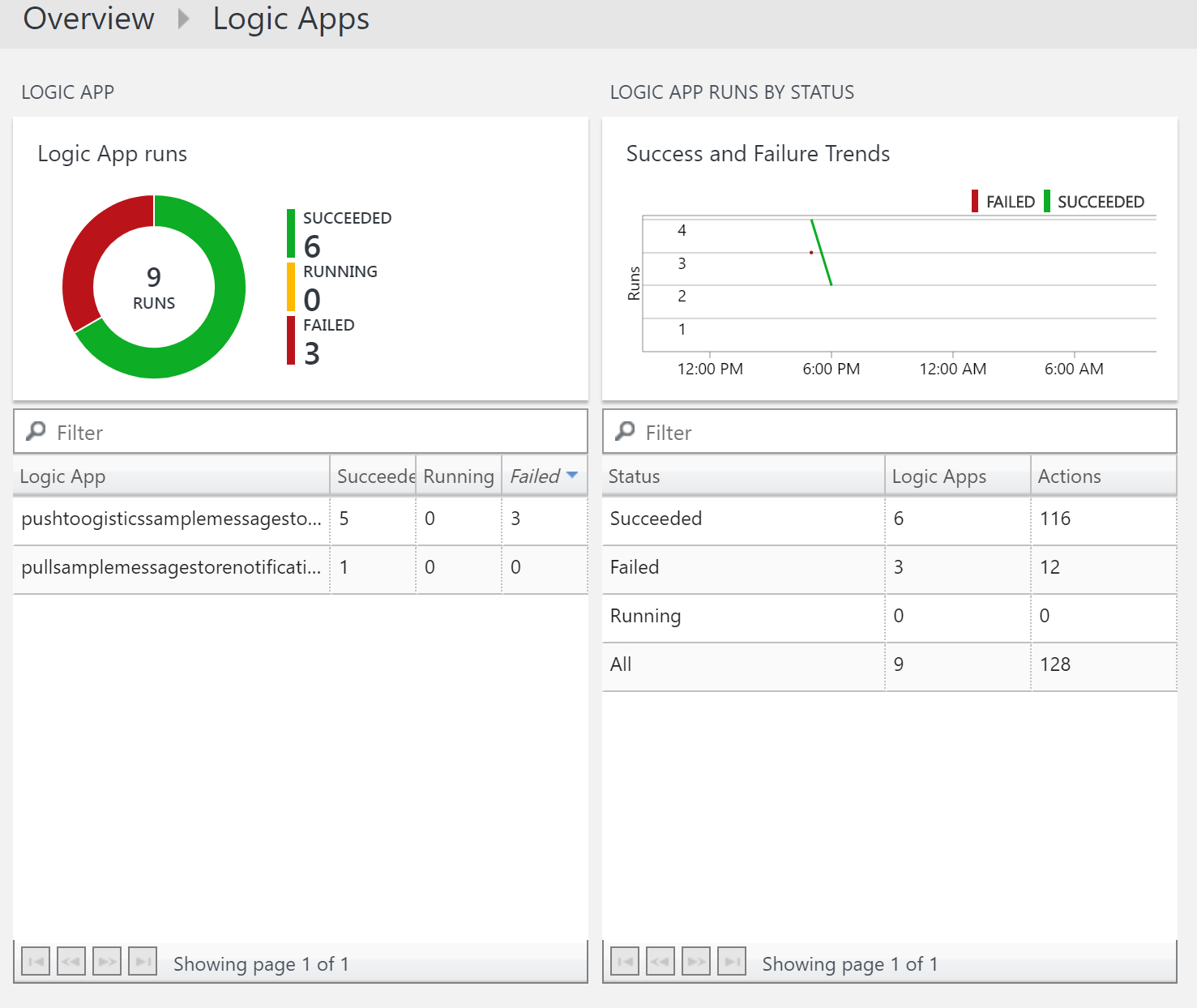

Besides Event Grid, the Microsoft Pro Integration PG announced a Logic Apps Management (Preview) solution in OMS. I have tried out this service too and wrote a blog post about it:

Based on the release of the OMS solution for Logic Apps, I delved into monitoring and operations a bit, and saw that there are many monitoring solutions when it comes to a serverless integration solution in Azure. You can read about this topic on the BizTalk360 blog:

The last blog post was inspired by Saravana’s article on LinkedIn: Challenges Managing Distributed Cloud Applications

To conclude, managing a distributed cloud-native solution with several Azure services is a challenge!

Codit

On the 1st of October I will join Codit. Why, you might ask? The year contract at Macaw ends on the 30th of September, and I realized that my skills, speaking engagements, passion and focus lie more with Azure, Integration and IoT. This fits with the Codit corporate strategy, plus I will have more than 100 integration-focussed sparring partners.

Books

This month I haven’t read that much other than a book about rise of robots. The message in this book was rather grim and I felt that almost no one will have a job in 10 years or so. A scary, fascinating read.

Music

My favorite albums in August were:

- Sons Of Crom – The Black Tower

- Thy Art Is Murder – Dear Desolation

- Steven Wilson – To The Bone

- Akercocke – Renaissance In Extremis

- Leprous – Malina

Running

I decided I wanted to run another marathon and enrolled in the Tokyo Marathon at the end of February 2018. Therefore, I started running 4 to 5 times a week this month and I am making good progress.

Next month will be my last with my current employer Macaw, before I start at Codit. Moreover, I will be speaking at the end of the month in Oslo for the Norwegian BizTalk User Group together with Eldert and Tomasso.

Cheers,

Steef-Jan


by Steef-Jan Wiggers | Aug 24, 2017 | BizTalk Community Blogs via Syndication

Event Grid Topic is a part of Event Grid, a new platform service, which provides intelligent event routing through filters and event types. Moreover, it offers a uniform publish-subscribe model similar to the model of the BizTalk runtime. However, we are talking events here and not messaging. Event Grid is a managed service in Azure with Service Fabric underneath. Some of the characteristics of Event Grid are discussed in one of Tom Kerkhove’s latest posts: Exploring Azure Event Grid.

Event Grid offers custom event routing capabilities with an Event Grid Topic. A Topic can be provisioned through the Azure Portal, and once the Topic becomes available you can hook it up with one or more subscribers.

Routing with Event Grid Topic

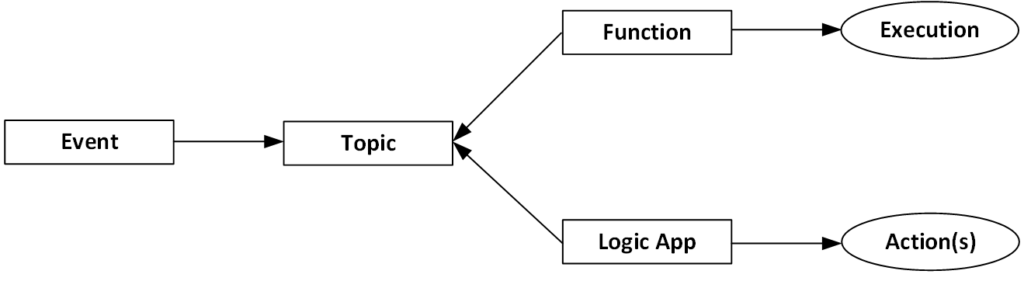

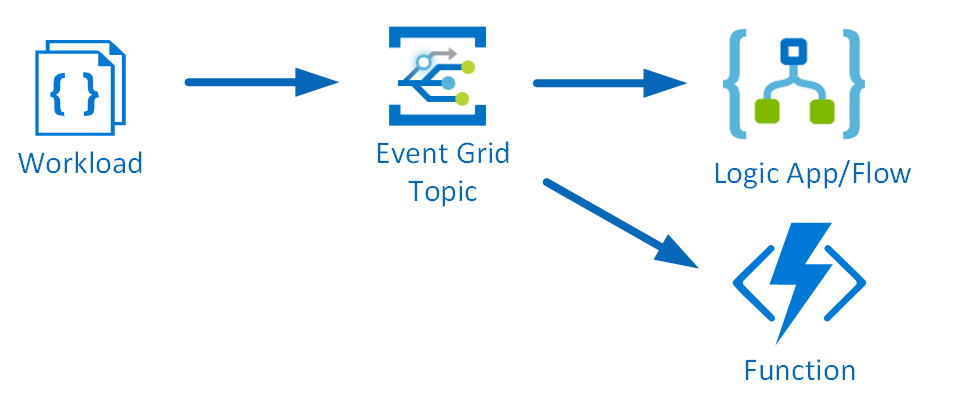

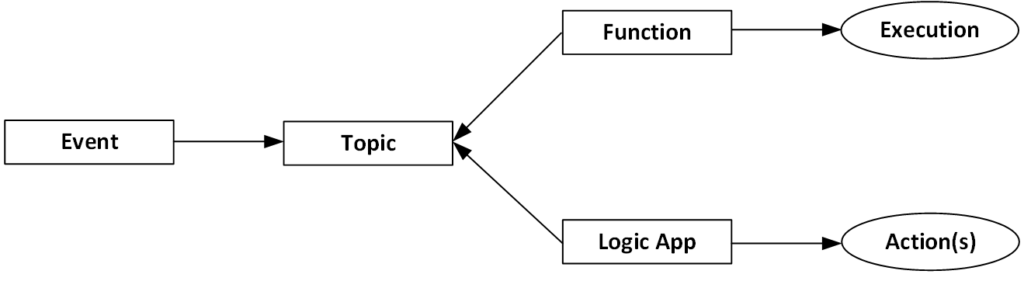

Custom events can be pushed to an Event Grid Topic, which can have multiple subscribers. Each subscription is set on an Event Type and/or filters (Prefix, Suffix). Hence, a single event can be broadcast to multiple handlers, and each handler can operate on the event.

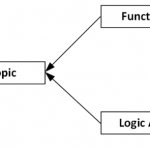

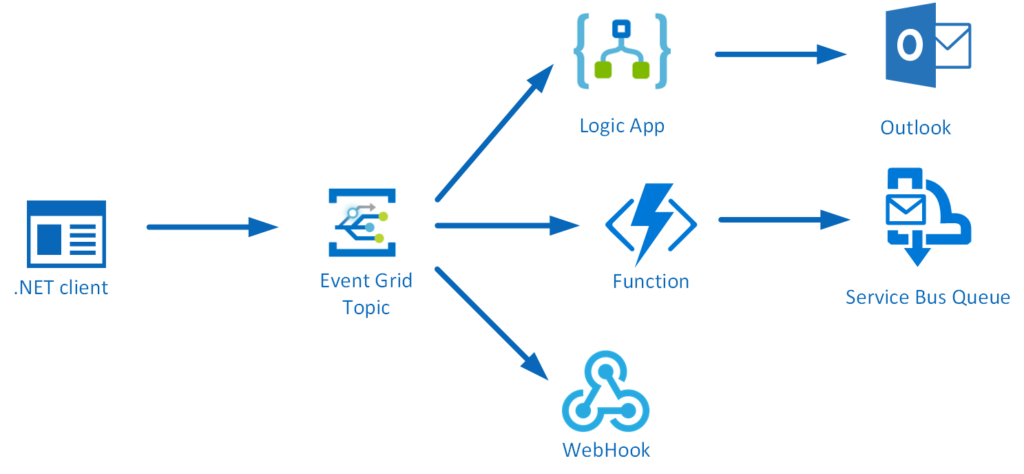

The consumption of the events will be through the custom Event Grid Topic as shown above. Furthermore, consumers can currently be a Function, Logic App, WebHook or Azure Automation. The mechanism of subscriptions in Logic Apps and Functions is through WebHooks, which I will elaborate on under Event Subscribers.

Custom Events to Event Grid Topic

Custom events need to adhere to a schema, which includes five mandatory string properties and a required data object. A .NET client, for instance, can publish such an event with an HttpClient from the System.Net.Http namespace. To be able to send a custom event with a .NET client to the Event Grid Topic, you will need to know the endpoint (URL) and the key.

Let me explain. First of all, an Event Grid Topic requires either SAS token or key authentication; the latter is easier to implement in a .NET client. Hence, you add a default request header named “aeg-sas-key” with the value of key1 found in the Azure Event Grid Topic Overview.

To actually send the event, you can use the PostAsync method. This method of the HttpClient requires the content (event data) and the endpoint URL, which can also be found in the Azure Event Grid Topic Overview.
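The same request can be sketched outside .NET. The Python snippet below, using only the standard library, builds the POST request with the aeg-sas-key header described above. It is a sketch under assumptions rather than the client I used: the helper name `build_publish_request`, the placeholder endpoint and the placeholder key are made up, and the actual send is left commented out because the endpoint is not real.

```python
import json
import urllib.request


def build_publish_request(endpoint: str, key: str, events: list) -> urllib.request.Request:
    """Build the HTTP POST that publishes a batch of events to a topic endpoint."""
    body = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        # Key authentication: the topic key travels in the aeg-sas-key header.
        headers={"aeg-sas-key": key, "Content-Type": "application/json"},
    )


# Placeholders: substitute the endpoint and key1 of your own Event Grid Topic.
ENDPOINT = "https://example.invalid/api/events"
KEY = "<key1>"

request = build_publish_request(ENDPOINT, KEY, [])
print(request.get_method(), request.full_url)

# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```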

Event content

The content has to adhere to the event schema. Therefore, the payload could look like:

    [
      {
        "Data": {
          "WindSpeed": 6.2,
          "Beaufort": 0,
          "Type": "WindDetails",
          "Location": "Amsterdam",
          "Lat": 52.373888888888892,
          "Lng": 4.889444444444444
        },
        "Id": "a72f1473-d763-43c0-ad49-13dacf9158d3",
        "Subject": "WindDetails",
        "EventType": "WindSpeedEvent",
        "EventTime": "2017-08-24T14:32:15.5814874Z"
      }
    ]

The payload shows the event details (Data) together with Id, Subject, EventType and EventTime, which are mandatory string properties. You might ask yourself: there are only four string properties and one data object, so where is the topic property? Probably the topic property is added to the event once it is published to the Event Grid Topic, as the following event shows:

    [
      {
        "Data": {
          "WindSpeed": 6.2,
          "Beaufort": 0,
          "Type": "WindDetails",
          "Location": "Amsterdam",
          "Lat": 52.373888888888892,
          "Lng": 4.889444444444444
        },
        "Id": "a72f1473-d763-43c0-ad49-13dacf9158d3",
        "Subject": "WindDetails",
        "EventType": "WindSpeedEvent",
        "EventTime": "2017-08-24T14:01:57.4354747Z",
        "topic": "/subscriptions/0bf166ac-9aa8-4597-bb2a-a845afe01415/resourceGroups/RG_EventGridSample/providers/Microsoft.EventGrid/topics/SampleTopic"
      }
    ]
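The publisher-side properties discussed above can be assembled with a small helper. The Python sketch below mirrors the property names and casing of the payload in this post; the function name `new_event` is hypothetical, and the topic property is deliberately left out because, as noted, it appears to be added on publication.

```python
import json
import uuid
from datetime import datetime, timezone


def new_event(subject: str, event_type: str, data: dict) -> dict:
    """Create one custom event with the publisher-supplied properties."""
    return {
        "Id": str(uuid.uuid4()),                              # unique per event
        "Subject": subject,
        "EventType": event_type,
        "EventTime": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        "Data": data,
    }


wind_event = new_event(
    subject="WindDetails",
    event_type="WindSpeedEvent",
    data={"WindSpeed": 6.2, "Beaufort": 0, "Type": "WindDetails", "Location": "Amsterdam"},
)

# Events are posted as a JSON array, even when there is only one.
print(json.dumps([wind_event], indent=2))
```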

The Event Grid uses HTTP response codes to acknowledge receipt of events. Hence the event above sent to a custom event topic will provide the following response :

    {StatusCode: 200, ReasonPhrase: 'OK', Version: 1.1, Content: System.Net.Http.StreamContent, Headers:
    {
      x-ms-request-id: e6c1fbf3-f295-49b3-ad13-b26c22b60313
      Date: Thu, 24 Aug 2017 14:41:19 GMT
      Server: Microsoft-HTTPAPI/2.0
      Content-Length: 0
    }}

The HTTP status code is 200, which is OK (event delivered). In addition, I suggest you read Event Grid message delivery and retry.

Event Subscribers

A custom Event Grid Topic can have one or more subscriptions (event handlers). A subscription is an instruction that tells the Topic “I want this event”. In addition, the instruction can contain filters (prefix, suffix) and/or an EventType. Event Grid itself supports multiple subscriber types, like WebHooks. Depending on the subscriber type, Event Grid has a mechanism to guarantee delivery of the event to the subscriber. For WebHooks it is a 200 OK response, similar to what a .NET client receives when delivering an event to the Event Grid Topic.

An Azure Function or Logic App can use the WebHook mechanism to subscribe to events on a custom Event Grid Topic. As a result, a subscription is created in the Azure Event Grid Topic containing a URL to which the Event Grid Topic can deliver the events (POST). In addition, the Event Type can be specified; by default this is all. And finally filters can be applied, which are optional. To conclude, a custom event of a certain type can be published to an Event Grid Topic, which has one or more subscribers interested in events of that type.

Note that the subscription of a Logic App to events in the Event Grid Topic is done through a Logic App trigger. However, to subscribe to a specific Event Type you will need to edit the subscription in the Event Grid Topic to change it from all to the required one.

A function can subscribe to events using a WebHook trigger. You create a subscription in the Event Grid Topic by providing the URL of the WebHook trigger function and Event Type (and optional filters if necessary).
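To illustrate the WebHook mechanism, here is a minimal Python sketch of an endpoint that accepts a POST with events and acknowledges it with 200. It is only a sketch of the receive-and-acknowledge idea, not how Functions or Logic Apps implement it. The names `acknowledge` and `EventGridWebHook` are made up, and it assumes the events arrive as a JSON array with the property casing used in this post.

```python
import json
from http.server import BaseHTTPRequestHandler


def acknowledge(body: bytes):
    """Parse one delivery and return (HTTP status, list of events)."""
    try:
        events = json.loads(body or b"[]")
    except ValueError:
        # Not valid JSON: refuse instead of acknowledging.
        return 400, []
    if isinstance(events, dict):
        events = [events]  # tolerate a single event object
    return 200, events


class EventGridWebHook(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, events = acknowledge(self.rfile.read(length))
        for event in events:
            print(event.get("EventType"), event.get("Subject"))
        # The status code is the acknowledgement back to the sender.
        self.send_response(status)
        self.end_headers()
```

To try it locally you could serve the handler with `HTTPServer(("", 8080), EventGridWebHook).serve_forever()` from `http.server`; the port is arbitrary.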

Sample Scenario

To get a better understanding of routing custom events with an Event Grid Topic, let us look at how custom events are sent and how they are consumed by multiple subscribers. Therefore, I will discuss the following scenario with you, using several Azure services:

- An Event Grid Topic

- An Azure Function

- A Logic App

- A .NET Client

- A WebHook (RequestBin)

- An Azure Service Bus Queue

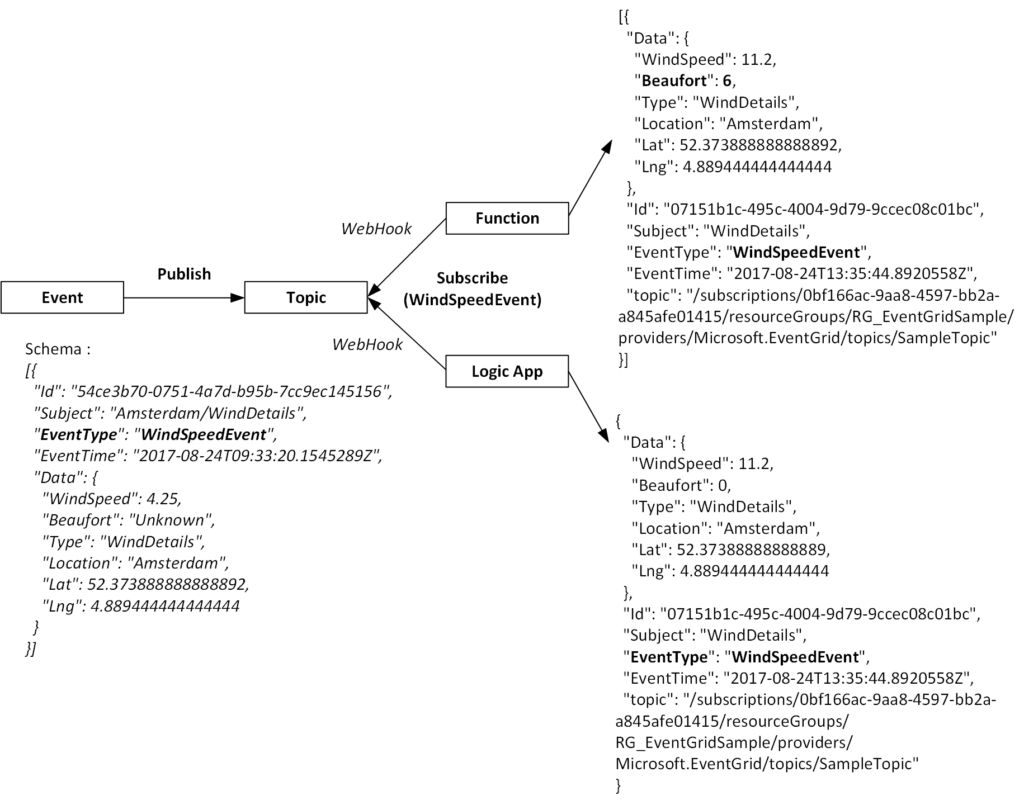

The .NET client sends a custom event to a custom Event Grid Topic provisioned in Azure. Subsequently, an Azure Function, Logic App and WebHook (RequestBin) subscribe to events of the type WindSpeedEvent. First of all, the Function will process the event by enriching it with a calculated Beaufort value, and send the enriched event to a Service Bus queue. Furthermore, the Logic App will evaluate the wind speed and send an email if it is higher than a specified value. And finally RequestBin will just consume the event.
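The enrichment step performed by the Function can be sketched as below. This is not the code of my function: it assumes the wind speed is in meters per second and uses the commonly published lower bounds of the Beaufort scale. The names `beaufort` and `enrich` are hypothetical.

```python
from bisect import bisect_right

# Lower bounds in m/s for Beaufort numbers 1 to 12 (commonly published scale).
BEAUFORT_LOWER_BOUNDS = [0.3, 1.6, 3.4, 5.5, 8.0, 10.8, 13.9, 17.2, 20.8, 24.5, 28.5, 32.7]


def beaufort(wind_speed: float) -> int:
    """Map a wind speed in m/s to a Beaufort number between 0 and 12."""
    # The count of lower bounds at or below the speed is the Beaufort number.
    return bisect_right(BEAUFORT_LOWER_BOUNDS, wind_speed)


def enrich(event: dict) -> dict:
    """Return a copy of the event with the Beaufort value filled in."""
    data = dict(event["Data"], Beaufort=beaufort(event["Data"]["WindSpeed"]))
    return dict(event, Data=data)


event = {"EventType": "WindSpeedEvent", "Data": {"WindSpeed": 6.2, "Beaufort": 0}}
print(enrich(event)["Data"]["Beaufort"])  # prints 4 for 6.2 m/s
```

The enriched copy is what would be sent on to the Service Bus queue; the original event is left untouched.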

The following diagram shows the event flow of the scenario.

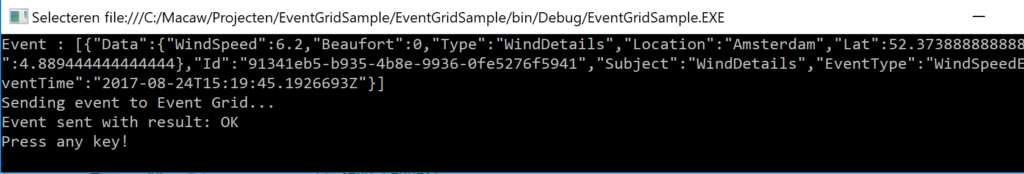

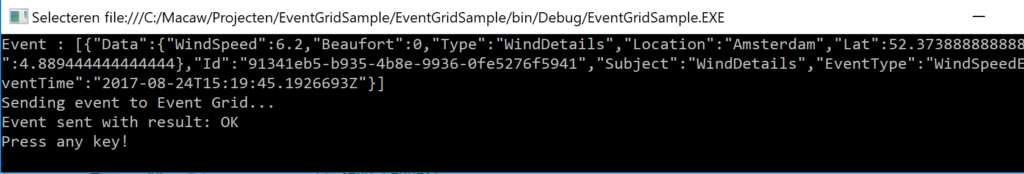

Send a custom event

A custom event can be sent to an Event Grid Topic using a .NET client. In our scenario the custom event is of the type WindSpeedEvent, containing a few fields, including WindSpeed in meters per second and a Beaufort value that is not yet known (0).

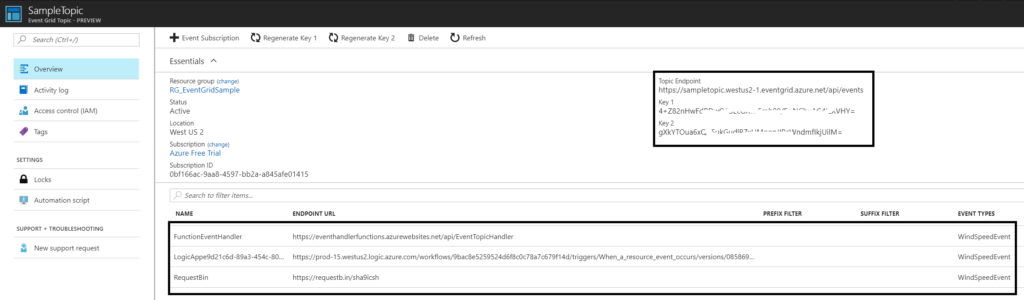

The Event Topic has three subscribers:

- Logic App

- Function

- WebHook (Request Bin)

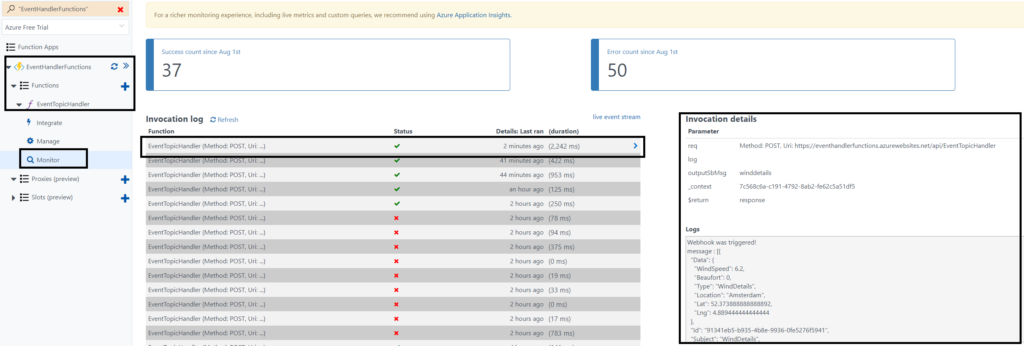

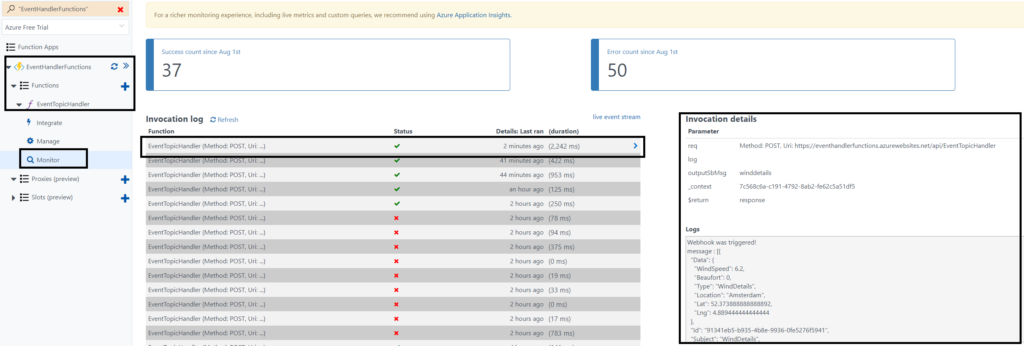

Each will receive the event, as each has subscribed to the Topic with events of Type WindSpeedEvent. Hence, in the Azure Function Monitor Pane I observed the consumption of the event.

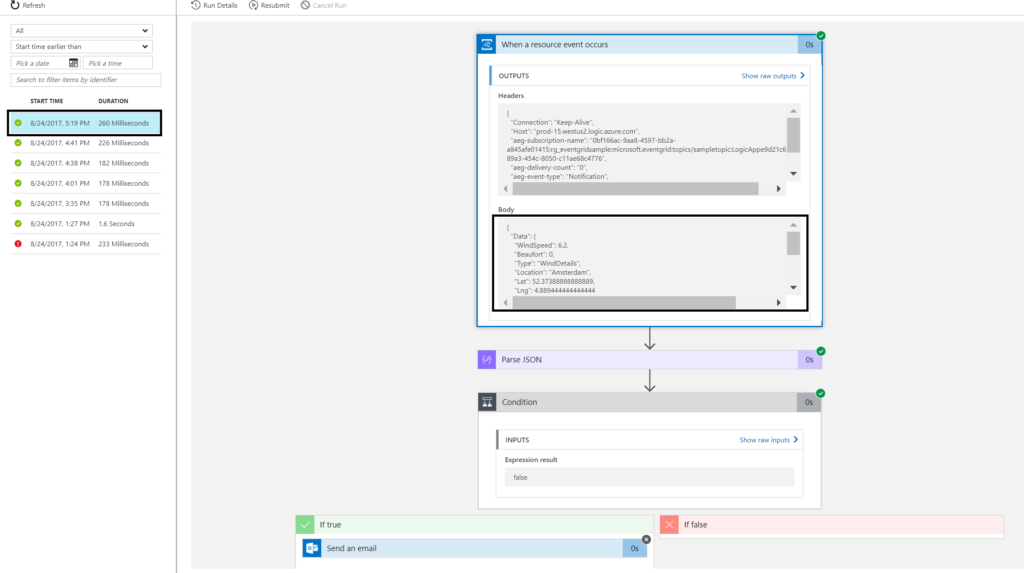

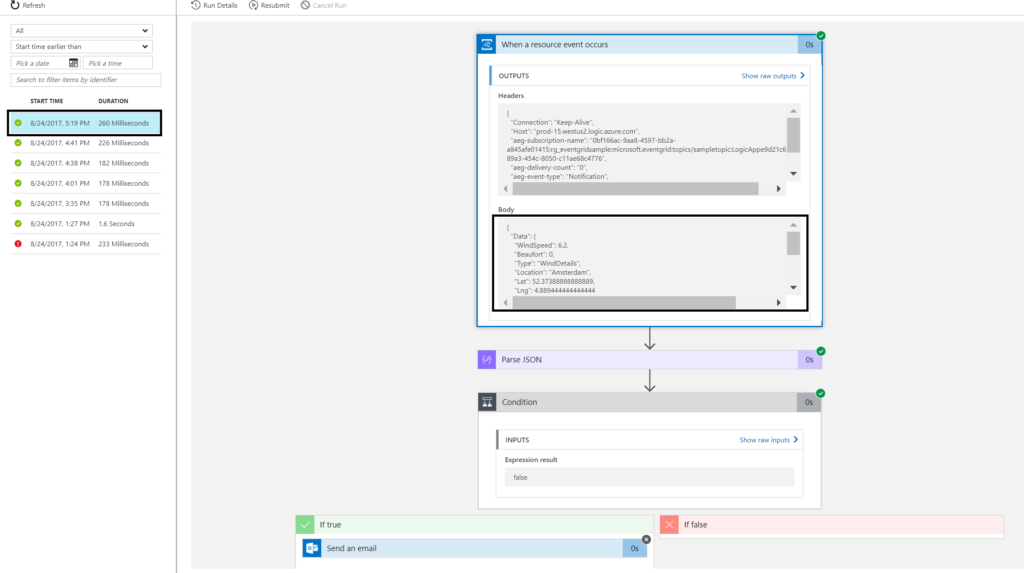

Subsequently, in the Logic App Run History I observed the consumption of the event.

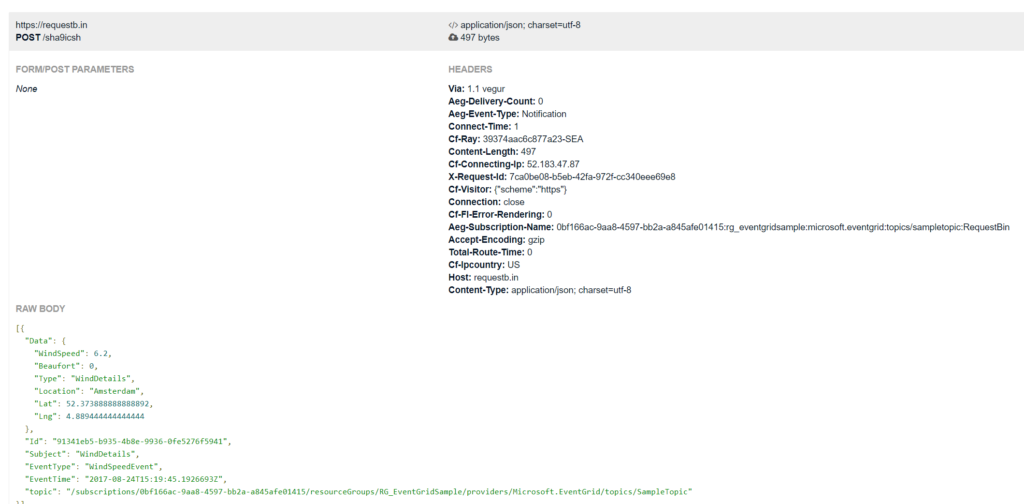

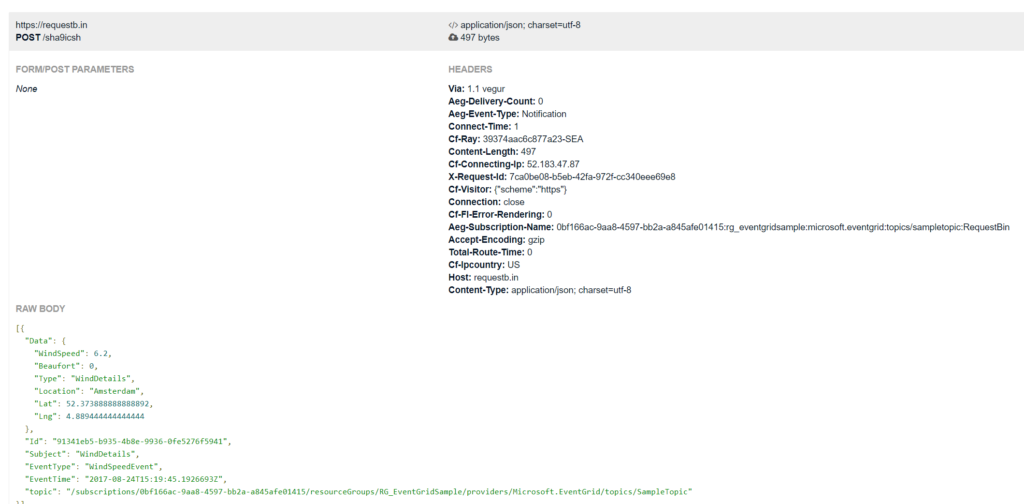

Finally, when refreshing the RequestBin page I see the event in its raw format. This is similar to the Event Grid Quickstart Create and route custom events with Azure Event Grid.

To conclude, each subscriber receives the event of the type WindSpeedEvent.

The Event Grid Topic in our scenario has three subscribers, see the screenshot of the Event Grid Topic Overview below.

Summary

Custom event handling with an Event Grid Topic is easy to comprehend. It also opens doors to many scenarios, ranging from IoT to website traffic monitoring. In this post I focused only on a custom event handled by several subscribers. However, Event Grid has more to offer in handling events from other sources like Azure subscriptions, resource groups, and others. Finally, more publishers and handlers will be available in the future. To conclude, Event Grid is in my opinion a great addition to the other serverless capabilities in Azure. I would like to emphasise that it is an event capability in Azure that is compatible with other serverless components like Logic Apps and Functions.


by Steef-Jan Wiggers | Aug 21, 2017 | BizTalk Community Blogs via Syndication

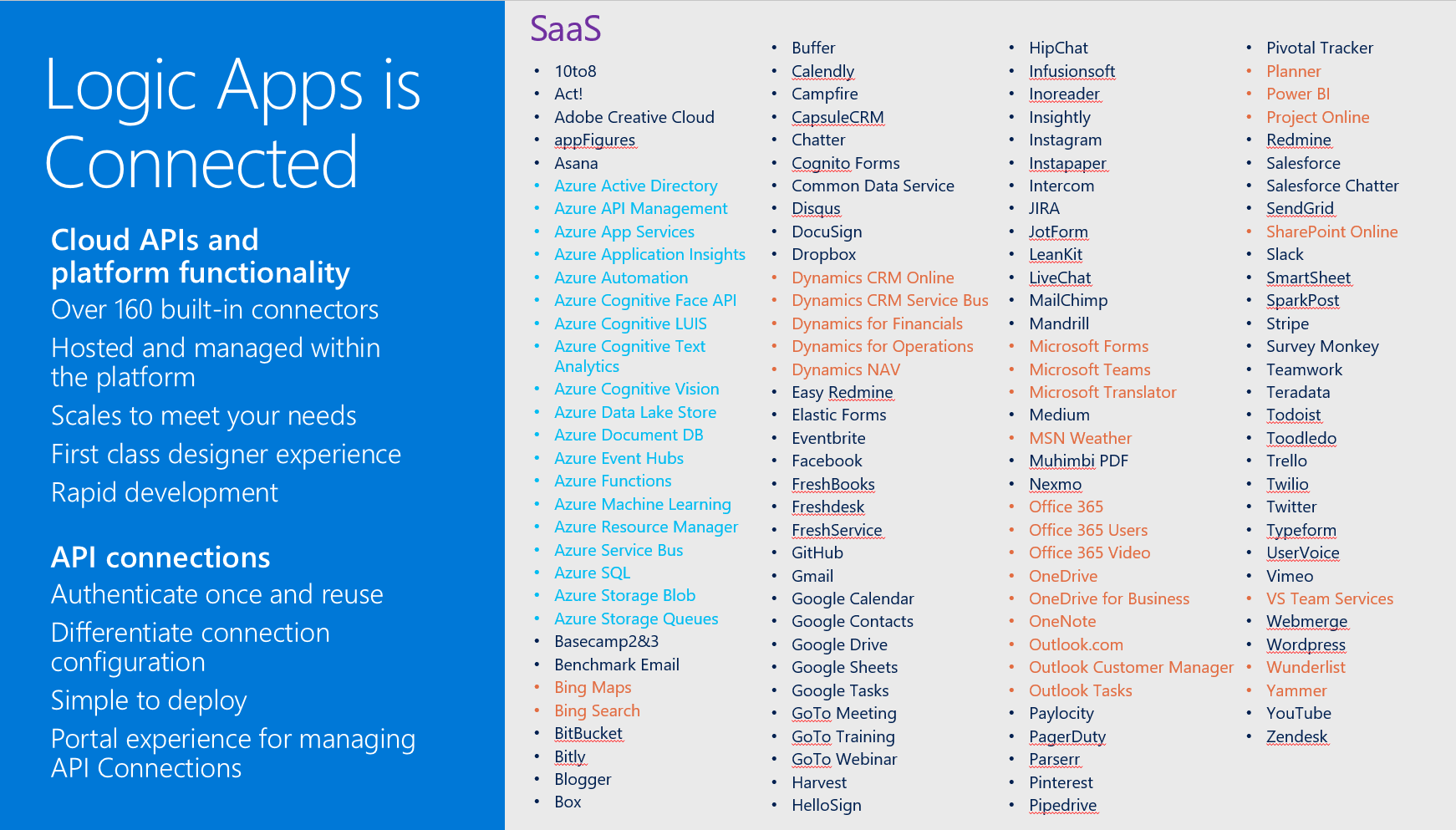

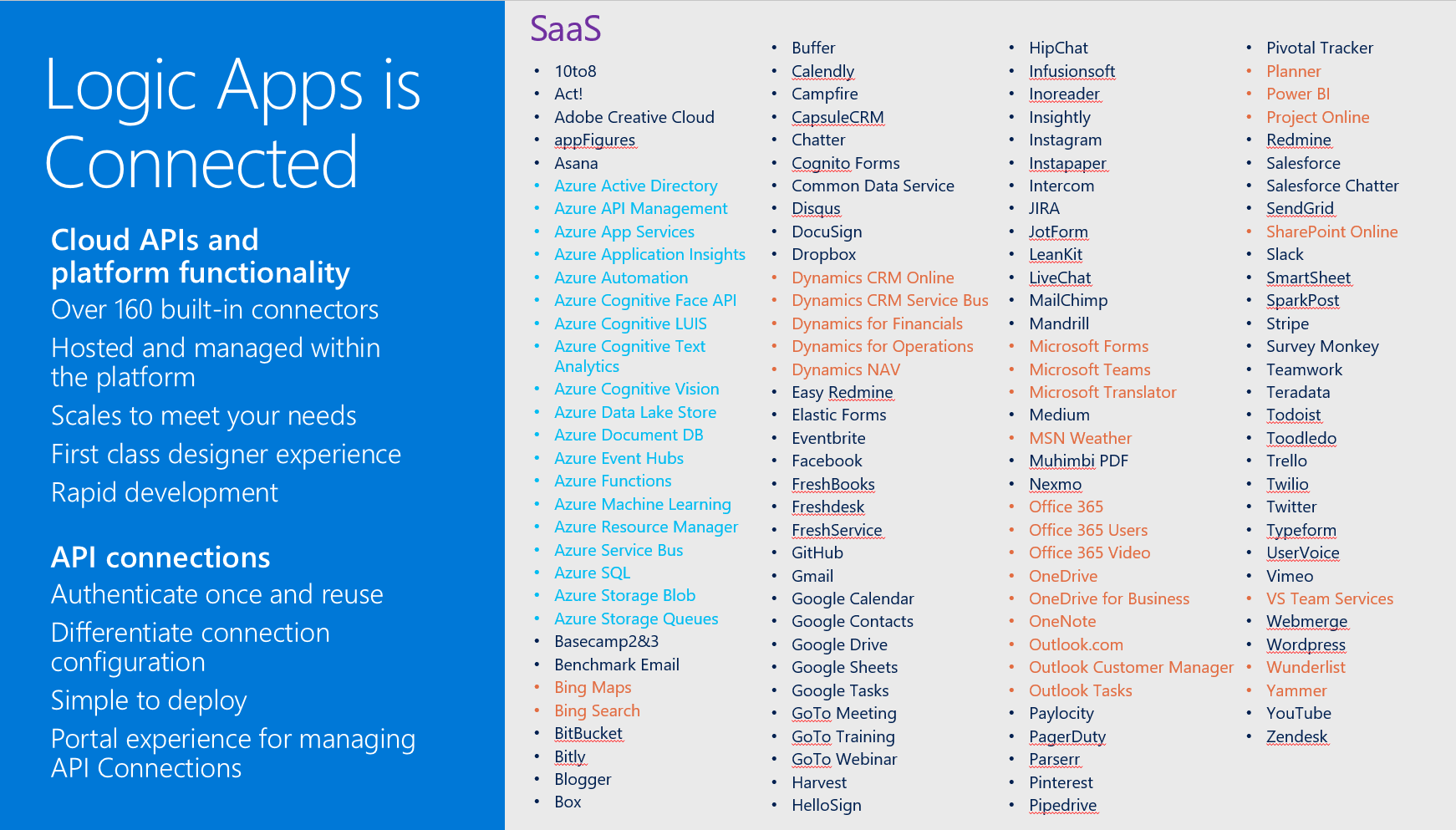

Microsoft’s iPaaS capability in Azure, Logic Apps, is a little over a year old, and the service has matured immensely over the course of that year. If you look at what Gartner believes an iPaaS should have as essential features, Logic Apps has each of them: multi-tenancy, micro-billing (pay as you go), no development (connectors, see diagram below), deployment and manageability (Azure Portal) and monitoring (OMS).

Moreover, Logic Apps can be a part of your overall cloud solution, since they can play a critical part in connecting to data sources, syncing information or sending out notifications.

Scenario with Logic App

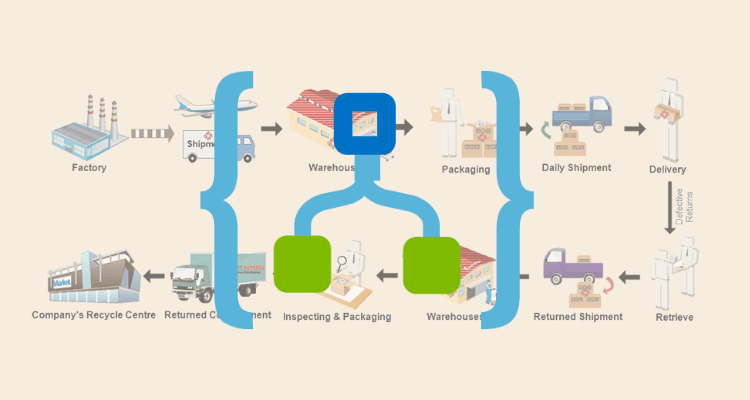

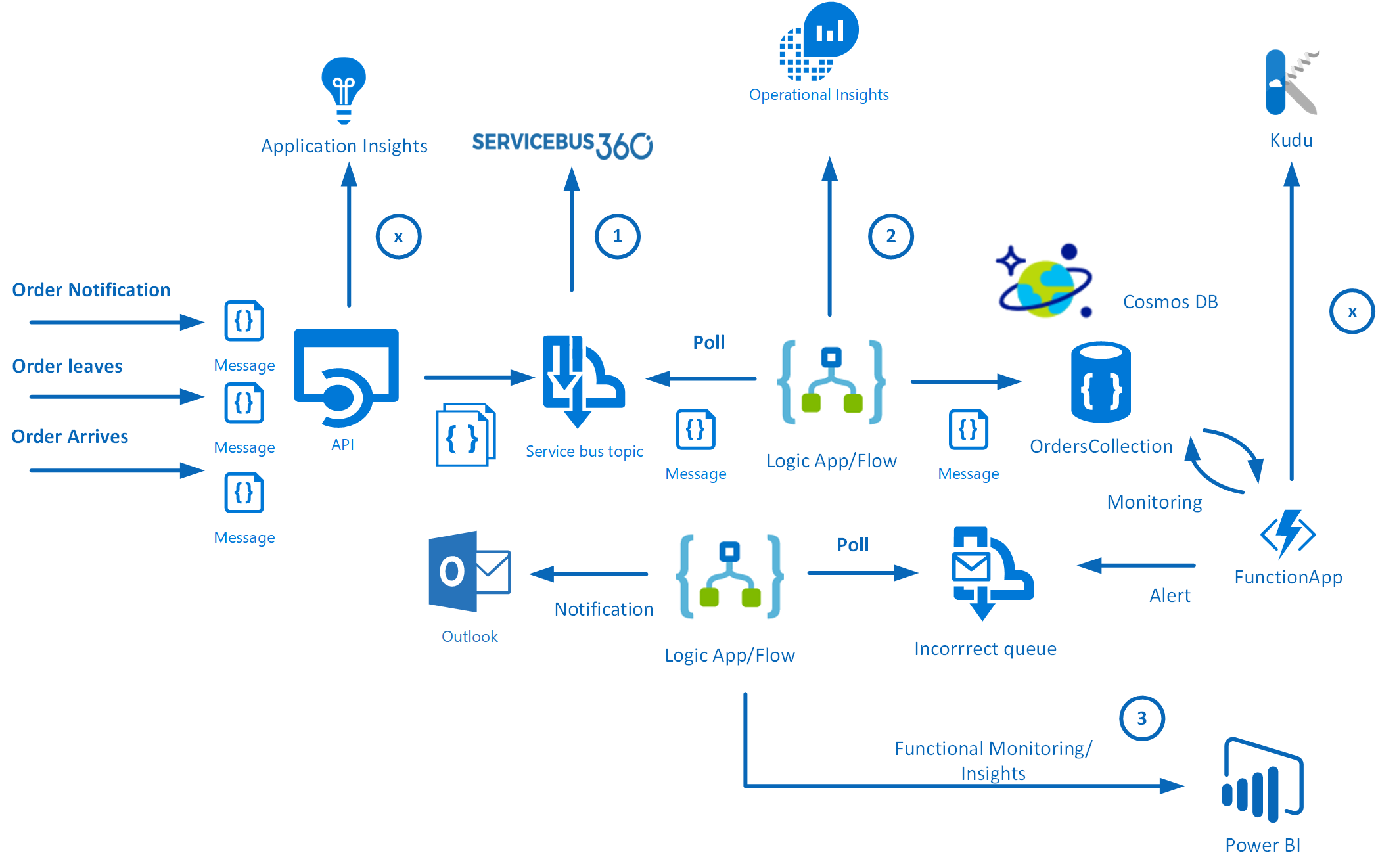

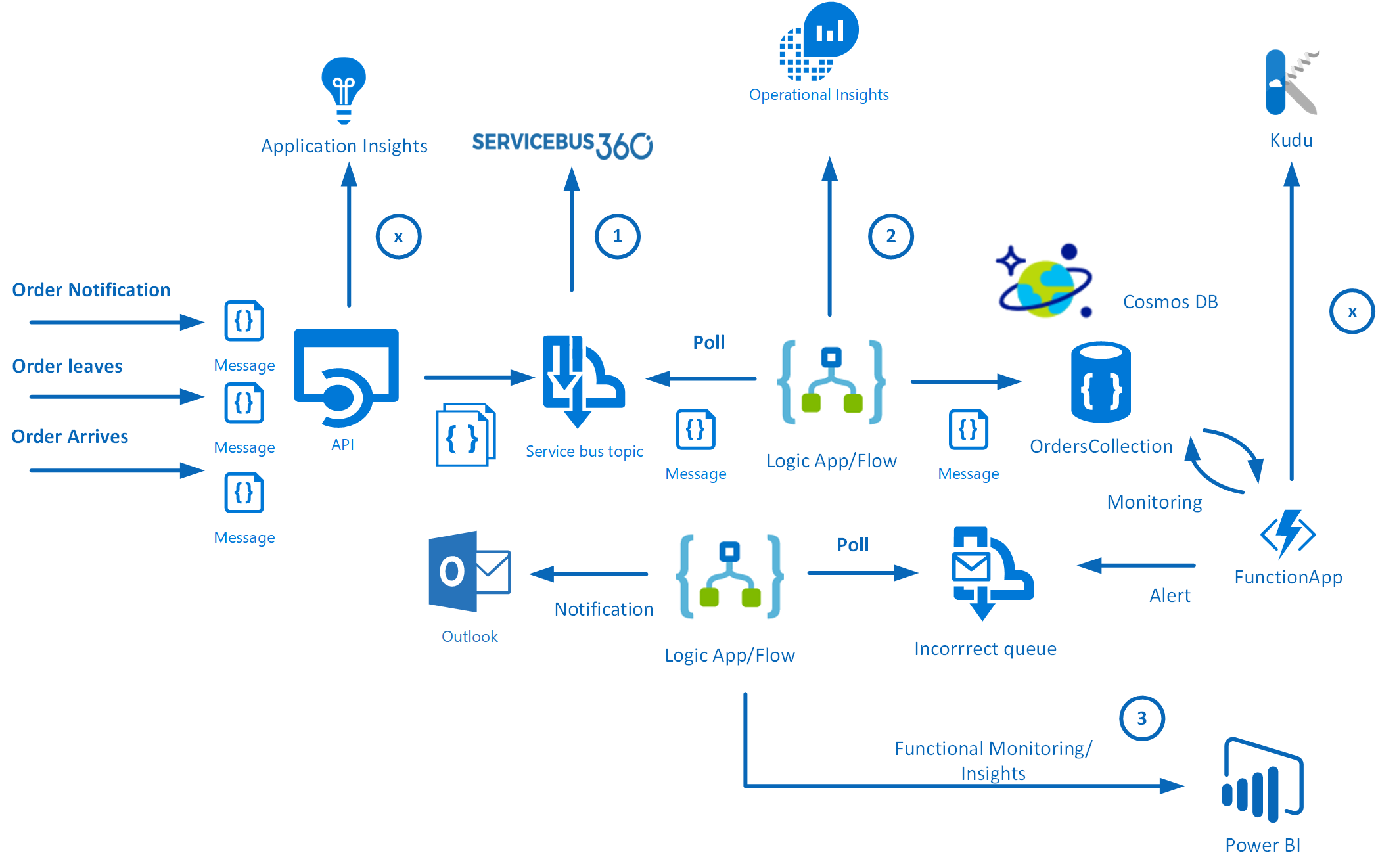

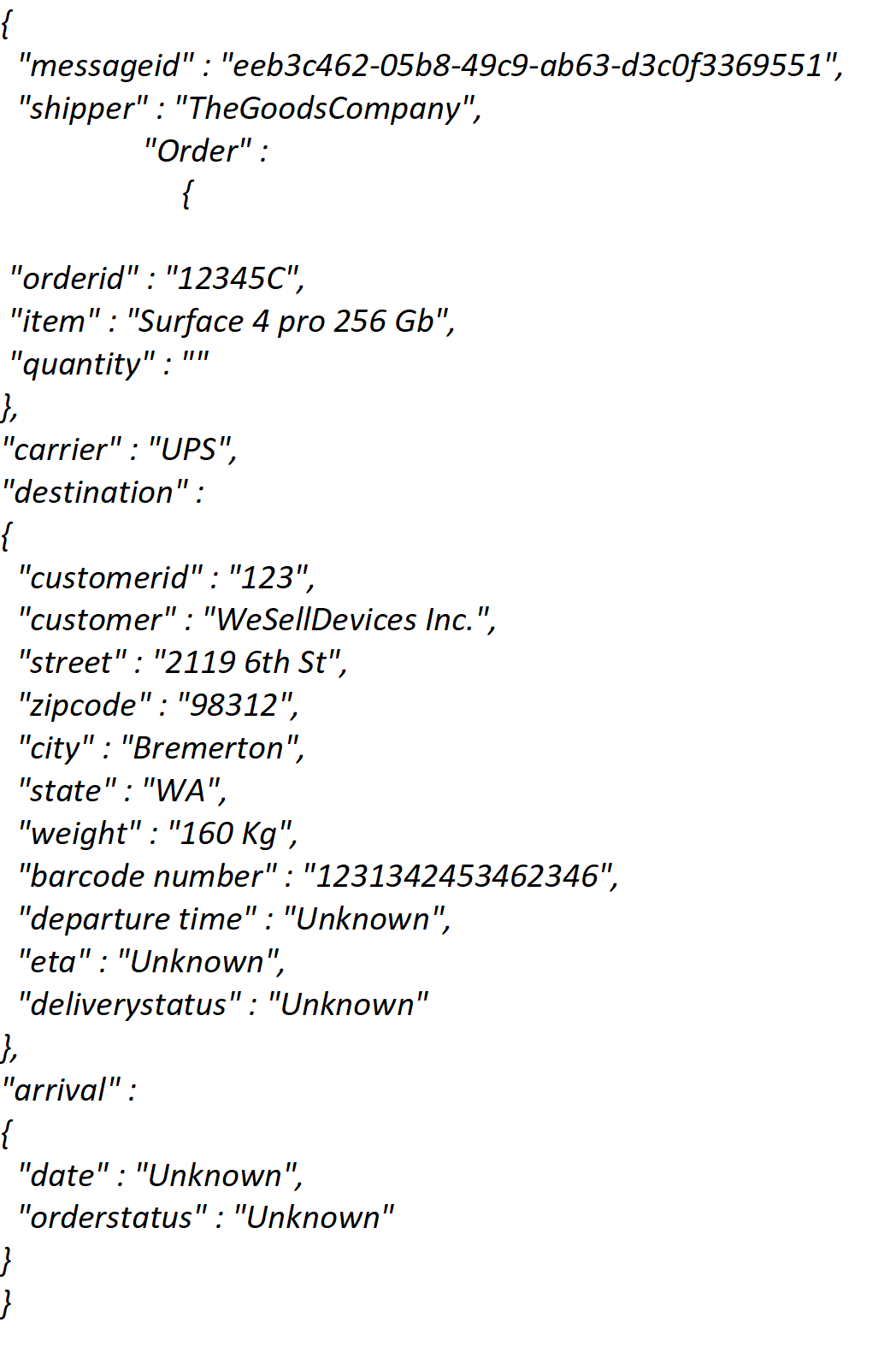

Suppose a business would like to know if the orders it sends through a carrier arrive at the customer in the expected state. The orders get picked in a warehouse and, once a certain number of orders has been reached, they are scanned and loaded into a truck. Subsequently, the truck leaves the warehouse and drives its route to various customers to deliver the orders.

Note: The calculation of the most efficient route and of the number of orders that create an optimal load are separate processes. For those, see for instance the Fleet Management IoT sample.

In this scenario we will focus on the functional process: an order is made ready for shipment, leaves the warehouse with a truck (carrier) and arrives at a certain time at a customer. Subsequently, the customer in turn will verify that the order is correct and not damaged.

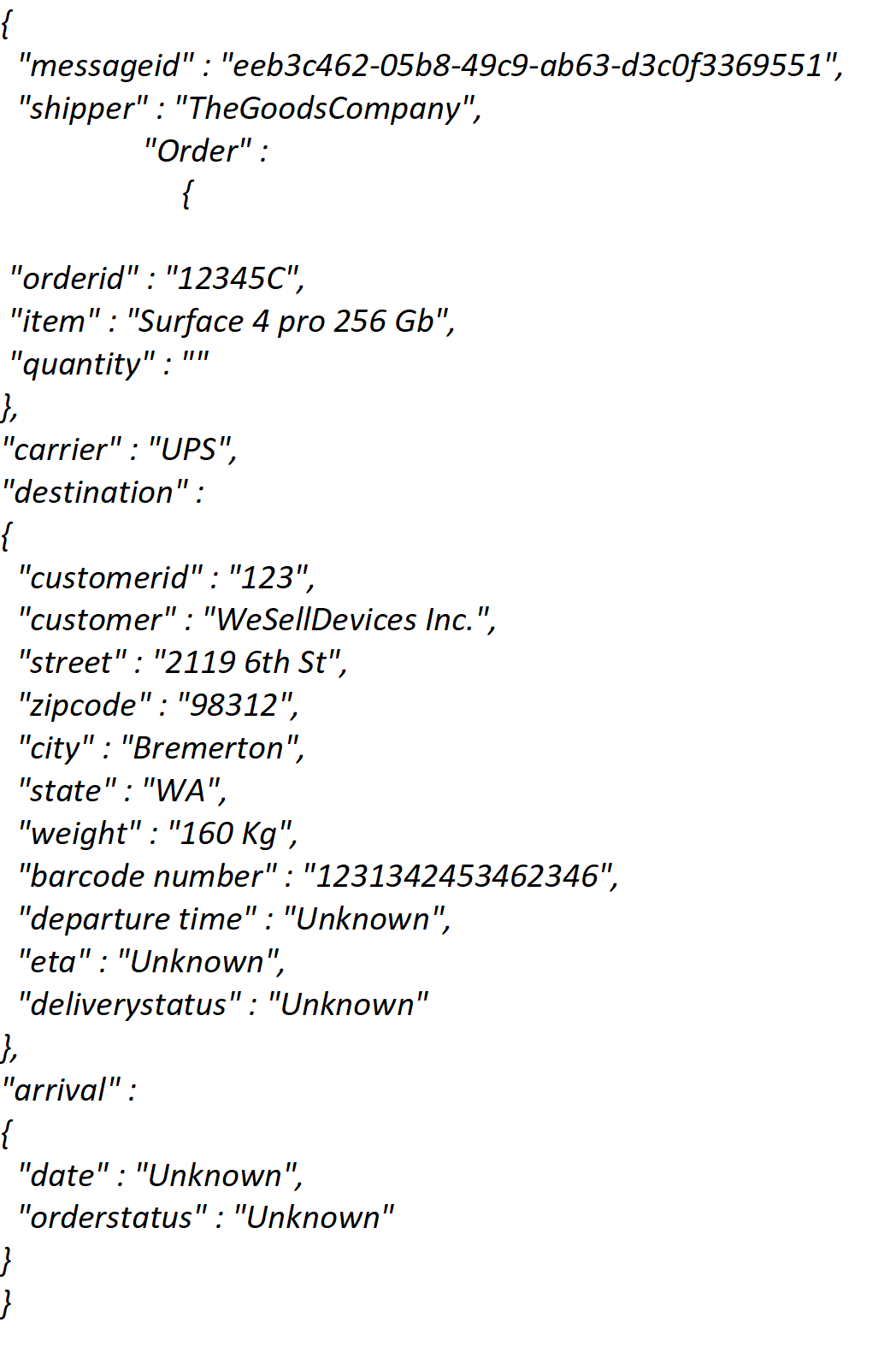

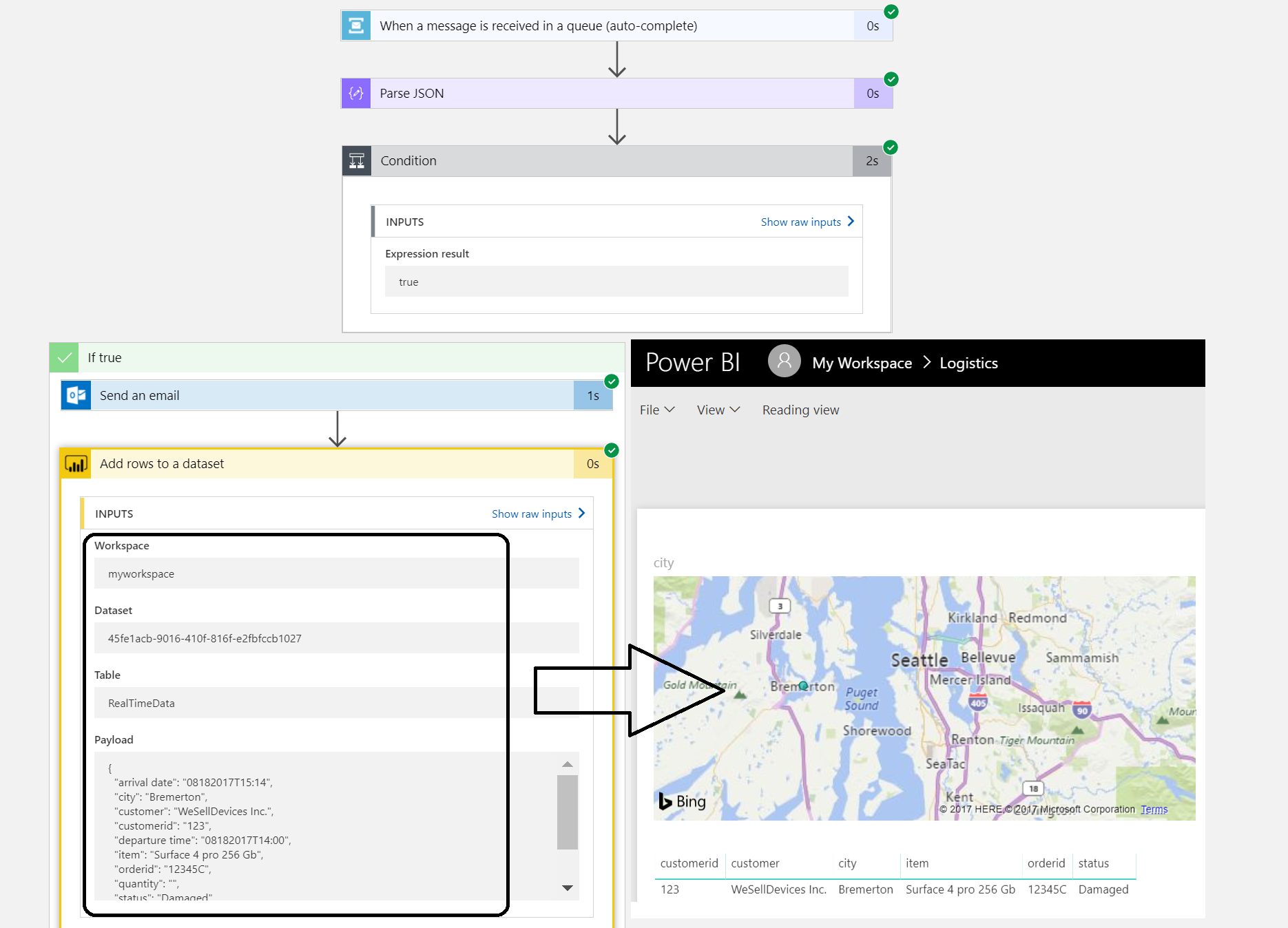

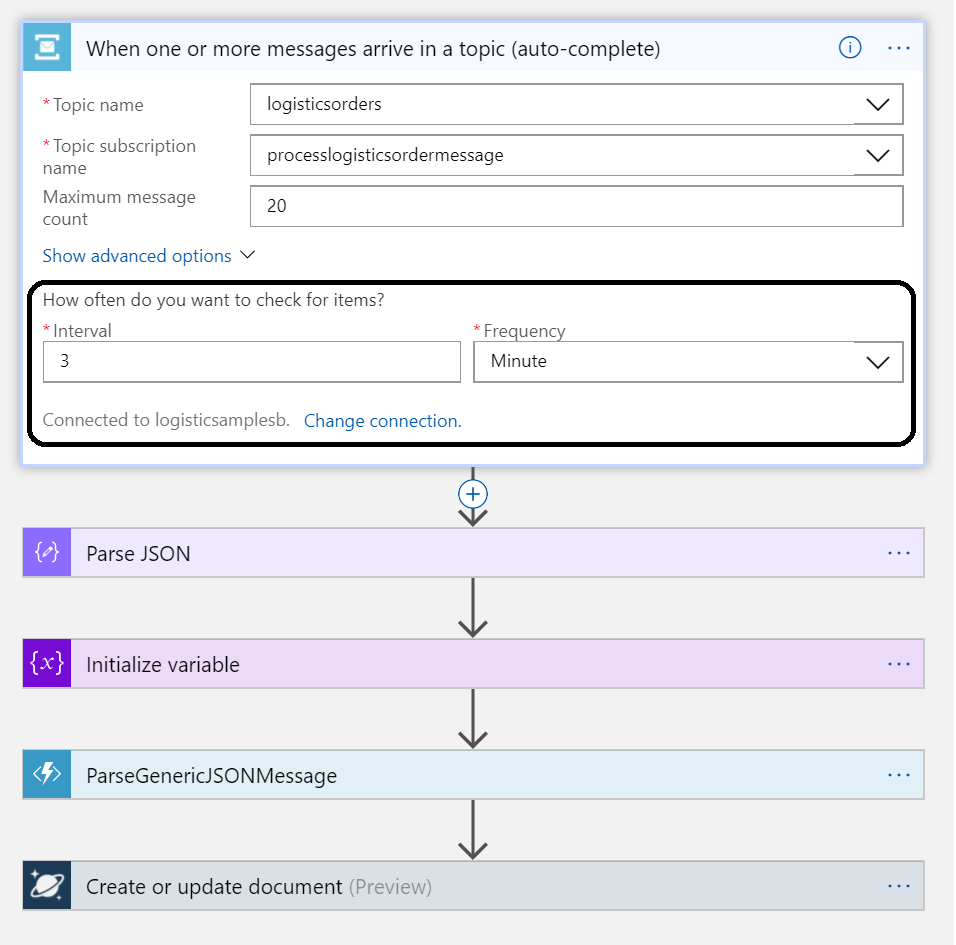

There are three messages going to a generic API that pushes the messages to a Service Bus Topic. Subsequently, the messages are picked up by a Logic App, which sends them to Cosmos DB (Document DB). The first message is a notification that the order is picked, the second that the order is en route, and the third message contains the arrival and verification of the order.

JSON Message example

The numbers in the diagram indicate the monitoring and diagnostic capabilities for the solution. ServiceBus360 is used to monitor the Service Bus queue and topic used in this scenario, Operations Management Suite (OMS) monitors Logic Apps, Functions and Cosmos DB, and finally Power BI is used for functional monitoring purposes.

Azure Services

In this scenario the solution consists of several Azure Services (PaaS and SaaS):

- PaaS

- Cosmos DB

- Service Bus

- Logic Apps

- Functions

- App Services

- SaaS

The PaaS services are all serverless, which means the infrastructure the services use is abstracted away. You only specify what you need (consume) and how much (scale), and pay for what you use.

Note: For more on serverless, see serverless computing.

Building the solution

The implementation of a solution based on the scenario requires several services to be provisioned in Azure:

- a Service Bus namespace with a topic

- a WebApp for hosting the API

- a Cosmos DB instance (Document DB)

- Logic Apps

- a Function App

- Outlook and Power BI (part of Office365)

- ServiceBus360

The latter is a SaaS solution to manage your Service Bus Namespace(s). See ServiceBus360 for more information.

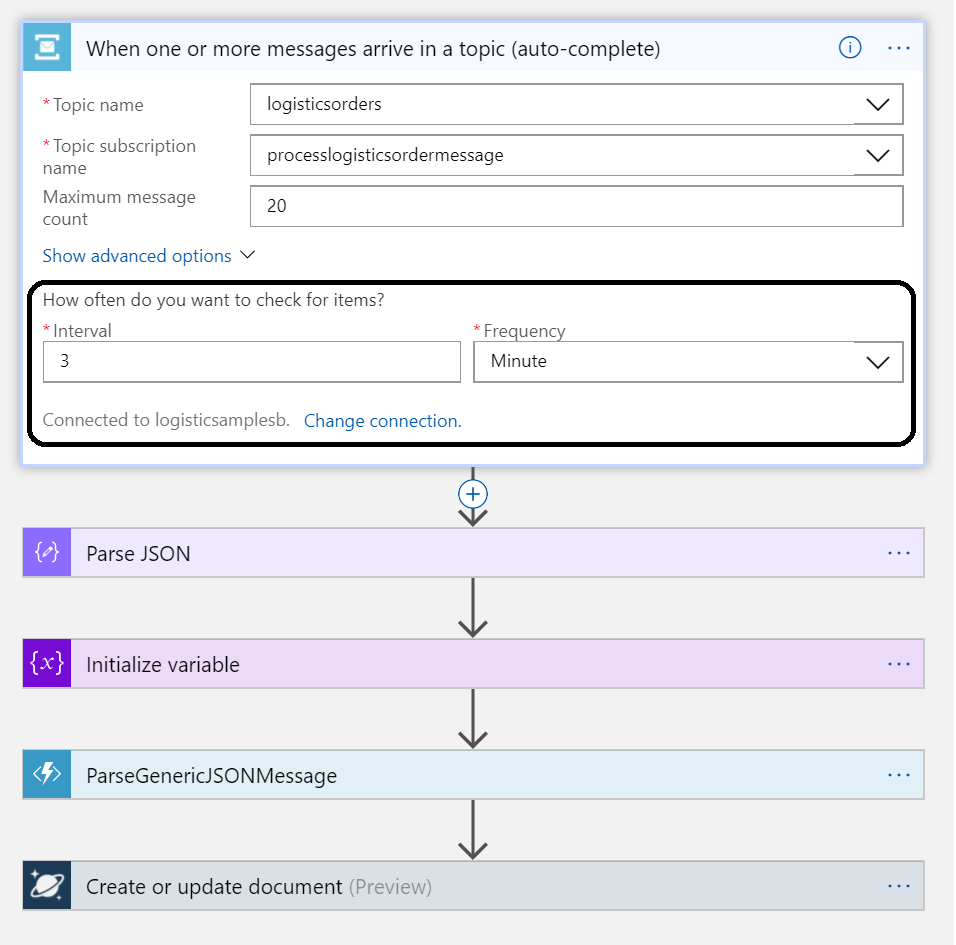

The WebApp will be hosting a simple API to which each party (shipper, carrier, customer) can send messages. The message contract for each message is the same (as depicted earlier). The Service Bus Topic will be created in a Service Bus Namespace and a Logic App will poll it at a certain interval.

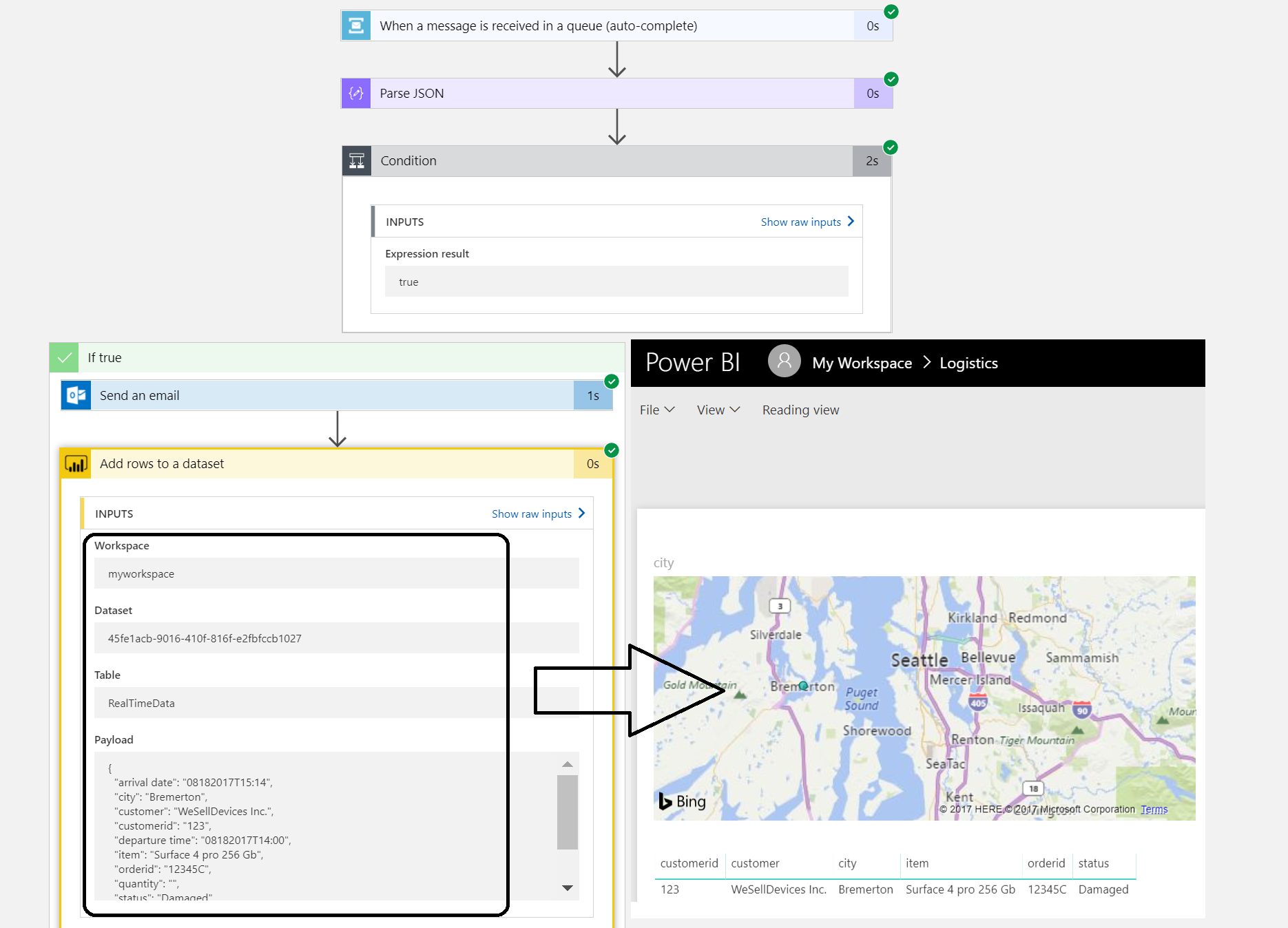

Once the Logic App receives the message it will parse it and create a document with the body. A Function App will have a function for parsing the message body and one for monitoring the document store. A second Logic App will poll a queue and send an email notification. It will also send data to Power BI, i.e. to a streaming dataset. These are all the nuts and bolts of this serverless solution.

Monitoring and management

The Logistics solution is in place and operational. So, how do I monitor and manage the solution as it consists of several services? The diagram shows three monitoring solutions:

Note: I am leaving monitoring/management of the WebApp hosting the API (Application Insights) and of Azure Functions (Kudu) out of the scope of this blog.

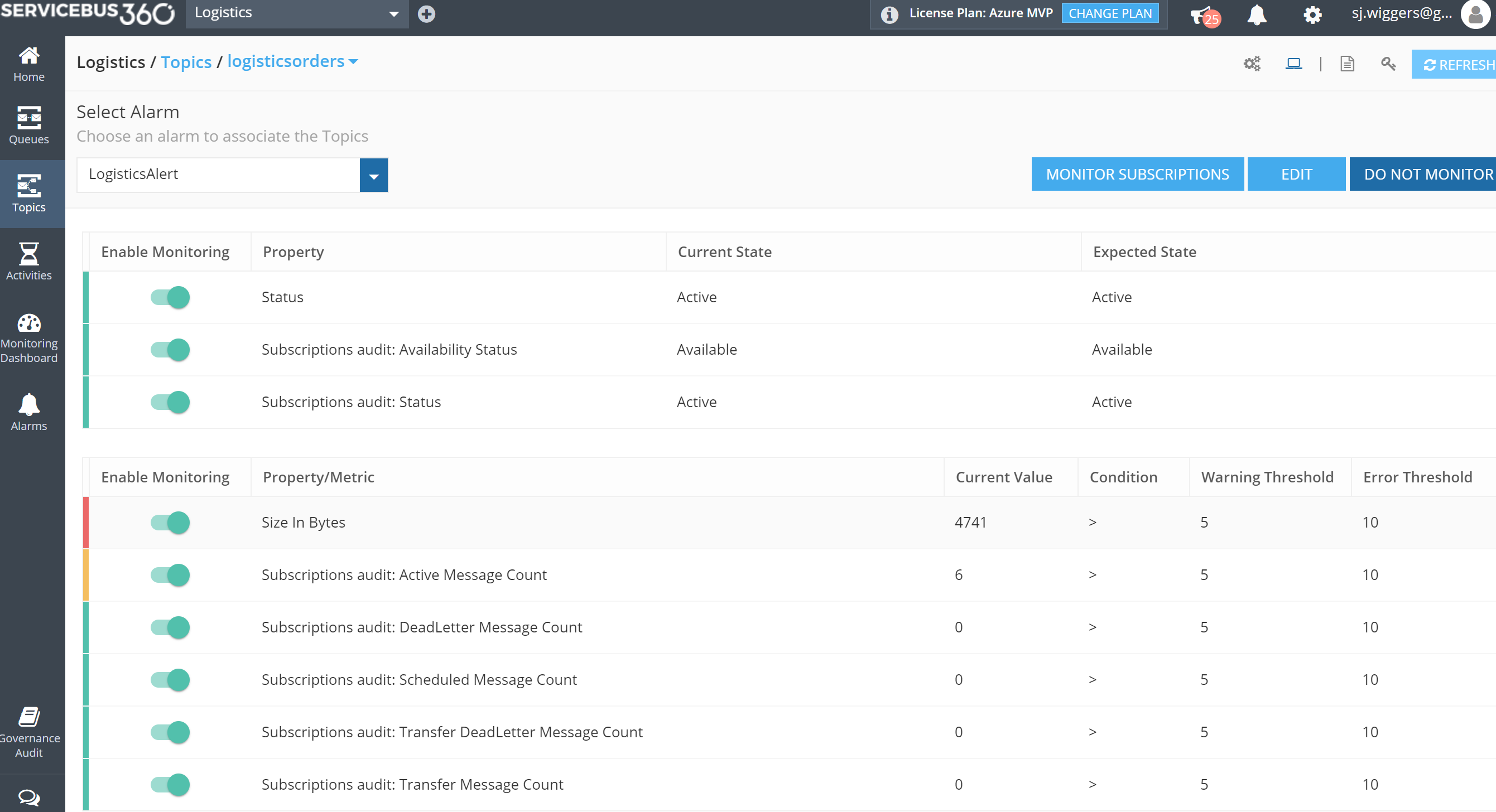

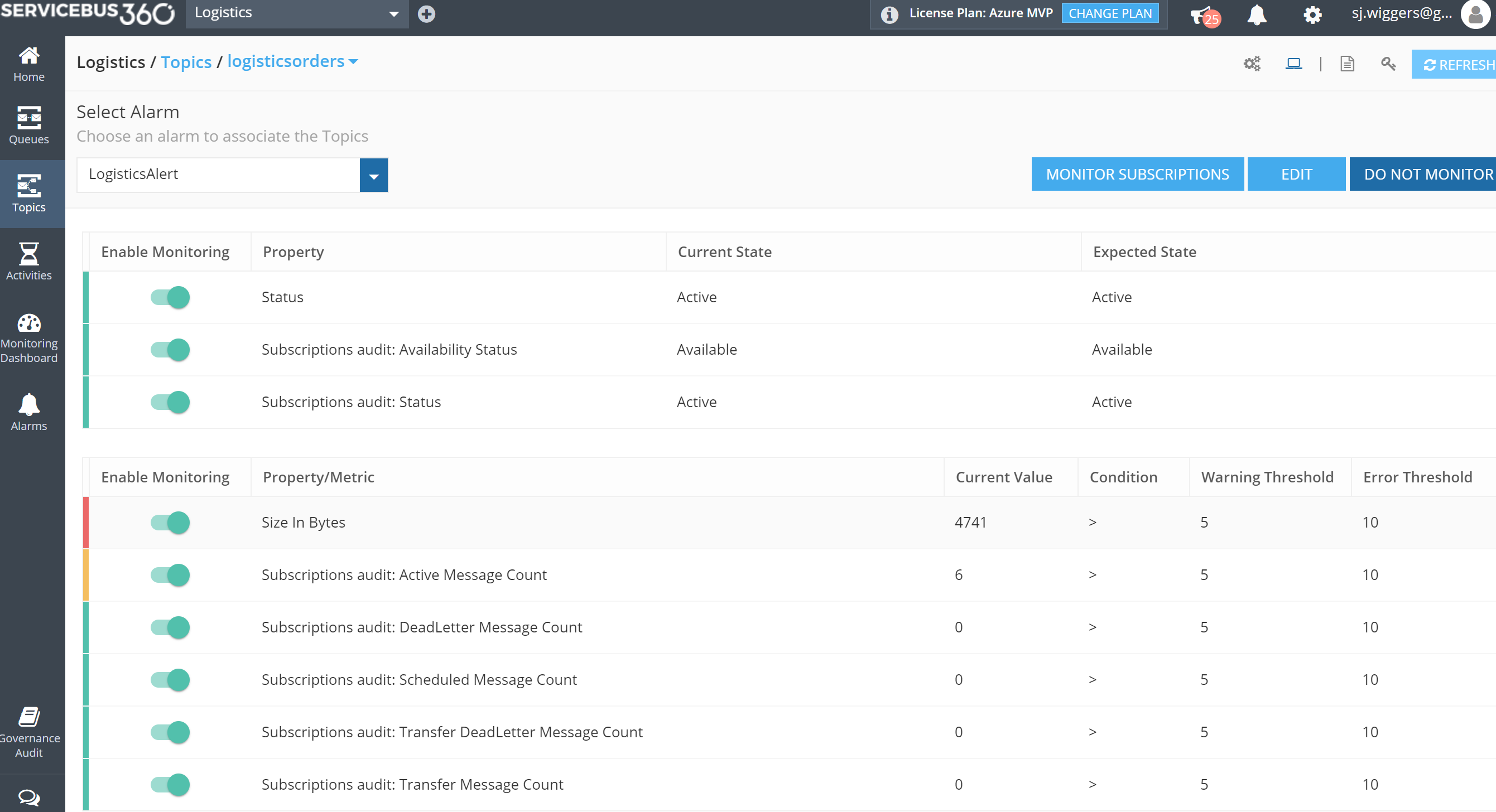

Each solution provides monitoring capabilities. With ServiceBus360 you can monitor and manage the Service Bus entities: Queues, Topics, Relays and Event Hubs. This cloud solution is developed by the same company/team that built BizTalk360. The solution has Paolo Salvatori’s Service Bus Explorer as a foundation and extends it with new features like alarms, activities (for testing purposes) and managing multiple namespaces.

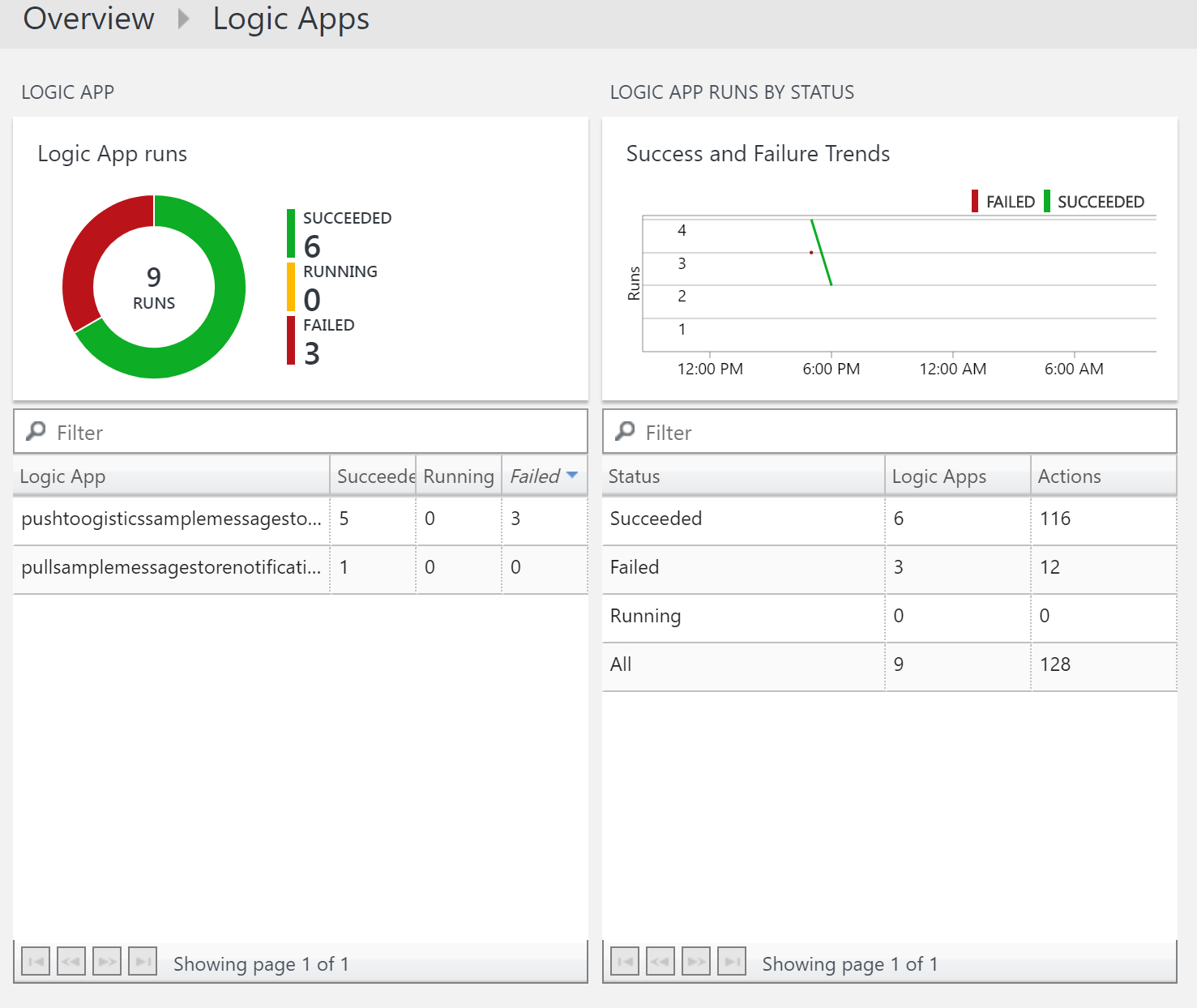

Microsoft Operations Management Suite (OMS) offers a collection of management services. And within OMS you can add solutions like the Logic Apps Management (Preview), see my blog post Logic Apps solution for Log Analytics (OMS) strengthens Microsoft iPaaS monitoring capability in Azure.

Power BI is used in our solution to create a report on delivered orders that are damaged. A report on this particular data could give the business a view of damaged orders. Below is a screenshot of a simple report generated from data of the Logic App.

The streaming dataset configured in Power BI receives data from the Logic App. This dataset is the basis for building a report like the one shown.

These are three different services, each having its own characteristics and place in this scenario.

Considerations

The implementation of the serverless solution shows several services, including monitoring and management. Of the monitoring services I only touched on three, excluding Kudu and Application Insights. The challenge in efficiently monitoring and managing this solution, or any solution built from serverless or multiple Azure services, is that there are many moving parts, each with its own features for diagnostics, monitoring (metrics) and hooks into either OMS or other services. Designing the functionality to solve a business problem with Azure Services can be just as complex as setting up proper operations.

Supporting your Azure solution means having the appropriate processes and tooling or solutions in place, which brings the cost factor into the mix. Usage of tools (services) is not free, designing the process and configuring the services will likely bring consultancy costs, and finally the operations staff who need to manage the solution cost money too. These are some of my thoughts while building this solution in Azure. To conclude, serverless is great, but do not forget aspects like monitoring.

What’s next

My intention with this blog post was to show the challenges with monitoring and management of a serverless cloud solution like our scenario. When you design a solution with multiple Azure Services you will face this challenge. You really need to take operations seriously when you design as they determine the running costs of supporting the solution. And there will be costs involved in the services you use like ServiceBus360, OMS, PowerBI or Application Insights. These services provide you the means to monitor your solution, yet none covers all the bases when it comes to monitoring and management of a complete solution to our scenario. Therefore, one overall solution to plug in the monitor/management of each service would be welcome.


by Steef-Jan Wiggers | Aug 16, 2017 | BizTalk Community Blogs via Syndication

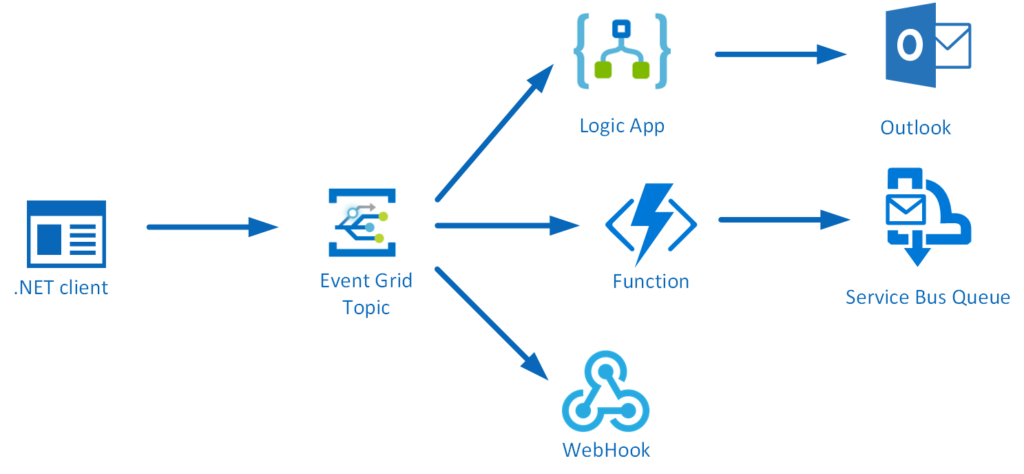

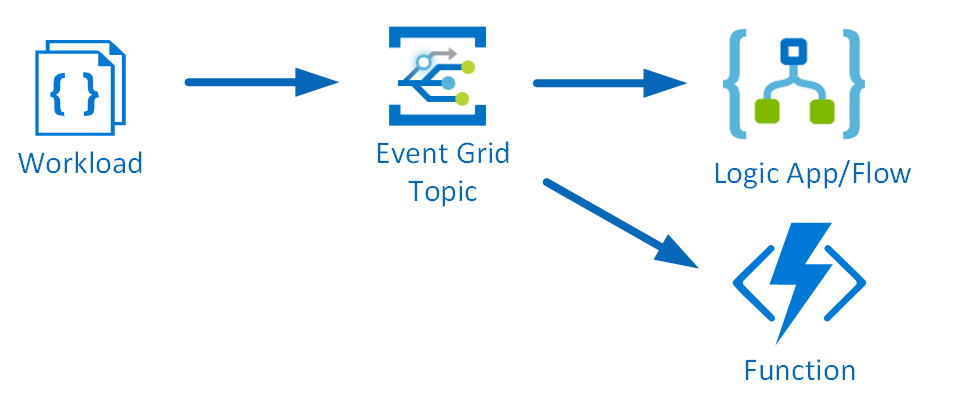

Microsoft has released yet another service in its Azure Platform, named Event Grid. It enables you to build reactive, event-driven applications around this service’s routing capabilities. You can receive events from multiple sources or have events pushed (fan-out) to multiple destinations, as the picture below shows.

New possible solutions with Event Grid

With this new service some nifty serverless solution architectures are possible, in which the service has its role and value. For instance, you can run image analysis when, let’s say, a picture of someone is added to blob storage. The addition of a new picture to blob storage can be pushed as an event to Event Grid, where a Function or Logic App can handle the event by picking up the image from blob storage and sending it to the Cognitive Services Face API. See the diagram below.

Another solution could involve creating an Event Topic to which you can push a workload, so that an Azure Function, a Logic App or both can process it. See the diagram below.

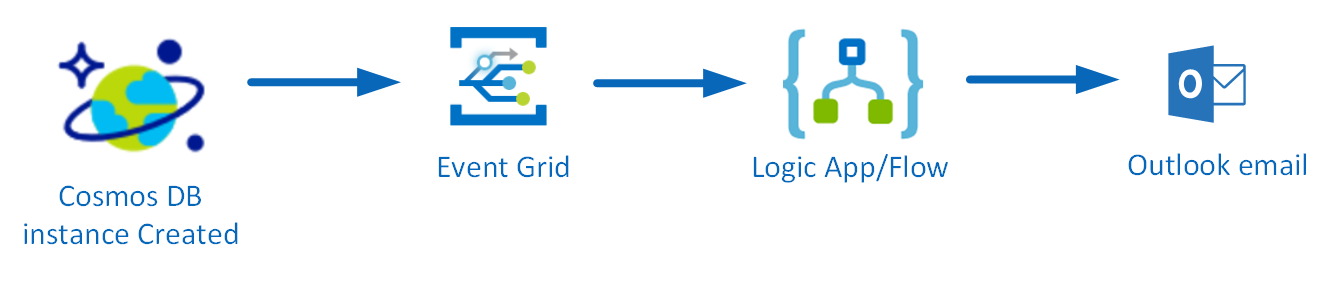

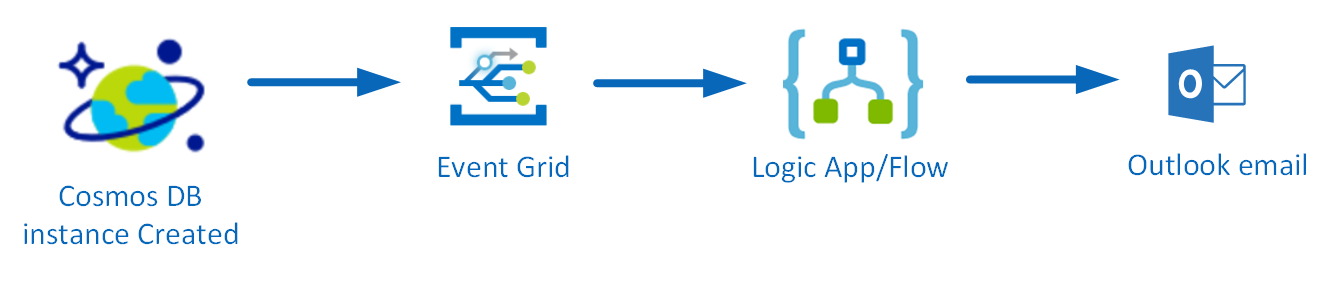

And finally, Event Grid helps professionals working on the operations side of Azure to make their work more efficient when automating deployments of Azure services. For instance, a notification is sent once one of the Azure services is ready. Let’s say a notification needs to be sent once a Cosmos DB instance is ready.

The last sample solution is something we will build using Event Grid, based on the only walkthrough provided in the documentation.

Send a notification when Cosmos DB is provisioned

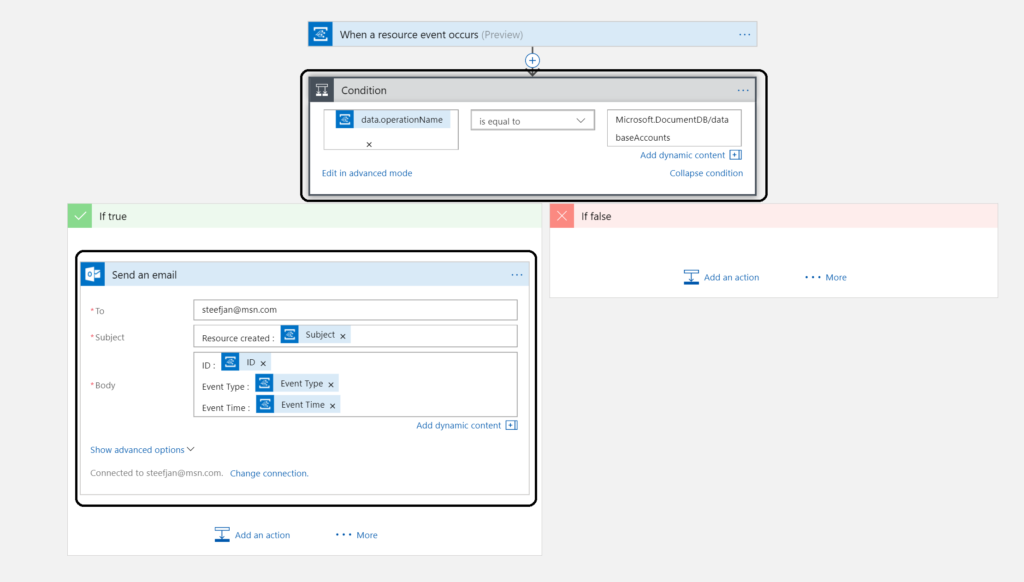

To have a notification sent to you by email once an Azure service is created, a Logic App is triggered by an event (raised once the service is created in a certain resource group). The Logic App acts upon the event by sending an email. The trigger and action are the logic, and this is easy to implement. The Logic App subscribes to the event that is raised within the resource group when a new Azure service is ready.

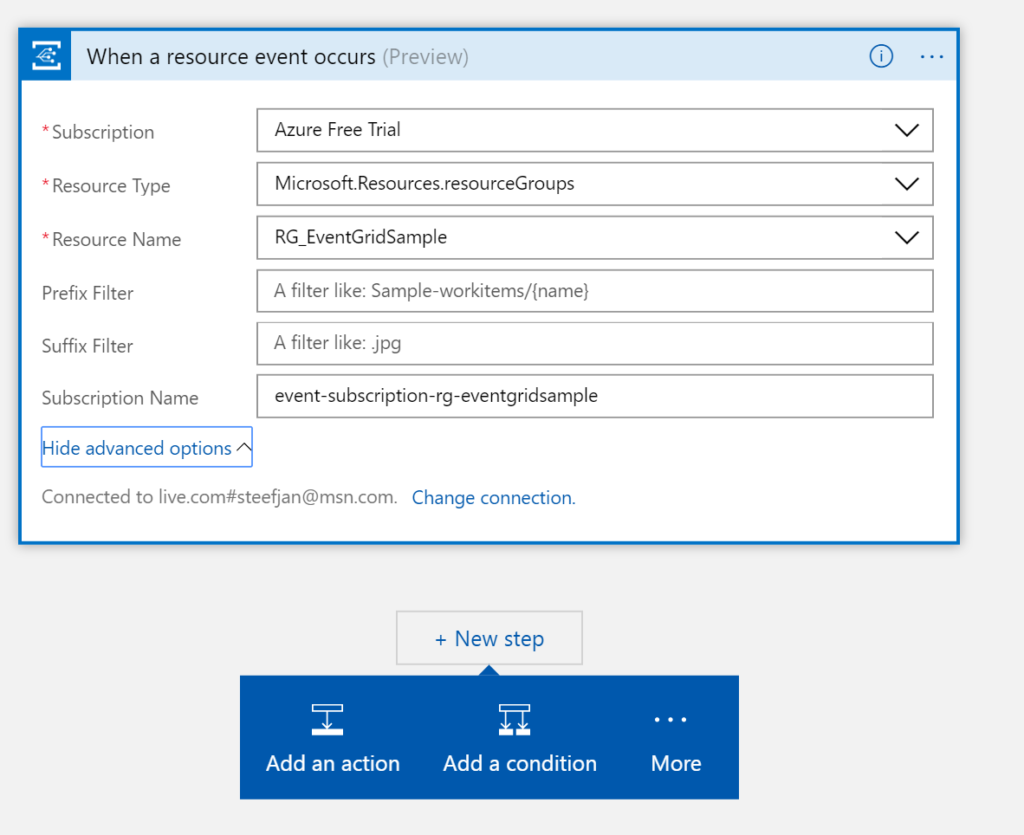

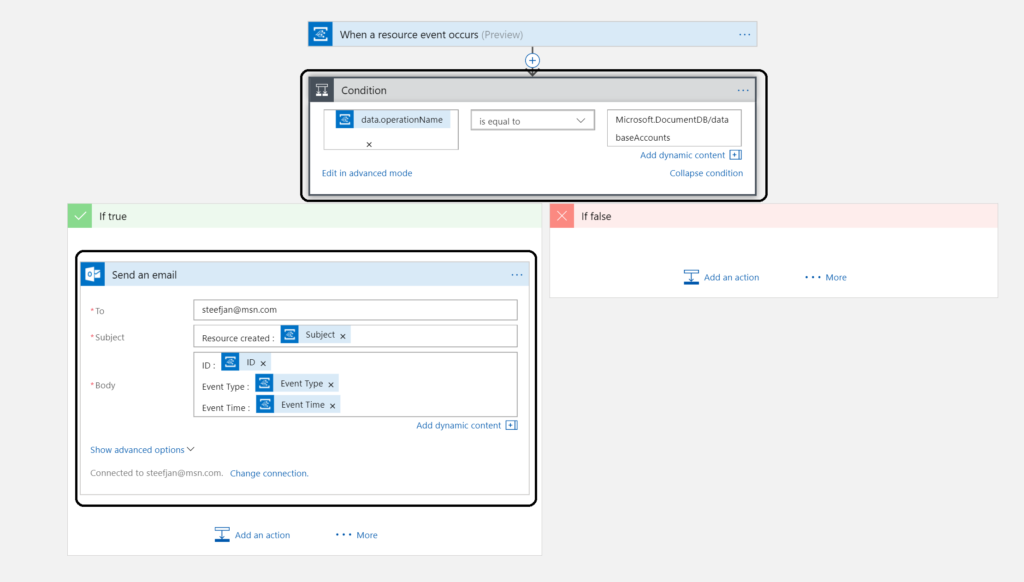

Building a Logic App is straightforward, and once it is provisioned you can choose a blank template. Subsequently, you add a trigger; for our solution it’s the Event Grid trigger that fires when a resource event occurs (the only available trigger currently).

The second step is adding a condition to check the event in the body. In this condition, in advanced mode, I created: @equals(triggerBody()?['data']['operationName'], 'Microsoft.DocumentDB/databaseAccounts')

This expression checks the event body for a data object whose operationName property is the Microsoft.DocumentDB/databaseAccounts operation. See also Event Grid event schema.
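To make the condition a bit more tangible, here is a minimal Python sketch of the same comparison. It is only an illustration: the sample event is made up, the function name is my own, and the real evaluation happens inside the Logic App engine, not in code like this.

```python
from typing import Any, Mapping, Optional

# Value the condition in the Logic App compares against.
EXPECTED_OPERATION = "Microsoft.DocumentDB/databaseAccounts"


def matches_condition(event_body: Optional[Mapping[str, Any]],
                      expected: str = EXPECTED_OPERATION) -> bool:
    """Mimic @equals(triggerBody()?['data']['operationName'], expected).

    A missing body or a missing data object simply yields False here,
    which is a simplification of how the workflow engine treats nulls.
    """
    data = (event_body or {}).get("data") or {}
    return data.get("operationName") == expected


# Hypothetical, heavily trimmed event body for illustration only.
sample_event = {
    "eventType": "Microsoft.Resources.ResourceWriteSuccess",
    "data": {"operationName": "Microsoft.DocumentDB/databaseAccounts"},
}

if __name__ == "__main__":
    print(matches_condition(sample_event))
```

Only when the comparison holds does the Logic App continue into the true branch.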

The final step is to add an action in the true branch: an action that sends an email to an address with a subject and body.

To test this, create a Cosmos DB instance, then wait until it’s provisioned and the email notification arrives.

Note: Azure Resource Manager, Event Hubs Capture, and Storage blob service are launch publishers. Hence, this sample is just an illustration and will not actually work!

Call to action

Getting acquainted with this new service was a good experience. My feeling is that this service will be a game changer with regard to building serverless event-driven solutions. In conjunction with services like Logic Apps, Azure Functions, and Storage, it brings a whole new set of capabilities not matched by any other cloud vendor. I am looking forward to the evolution of this service, which is currently in preview.

If you work in the integration/IoT space, then this is definitely a service you need to be aware of and research. A good starting point is Introducing Azure Event Grid – an event service for modern applications, along with this InfoQ article.

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field combining it with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 7 years.

by Steef-Jan Wiggers | Jul 29, 2017 | BizTalk Community Blogs via Syndication

July is the holiday month, or at least that’s when the summer holiday season starts in the Netherlands. This year I went on holiday with the family to Portugal (Porto) and France (Montréal, Midi-Pyrénées).

Month July

For me this is a special month as the MVP renewal cycle starts, which is now yearly. I have always been a July MVP, hence nothing really changed for me. Anyway, I got renewed, a great start of the month!

I shared the picture above on LinkedIn with the text: “Awarded for the eight time! Thanks Microsoft. Coolest Technology!!!”. And to my surprise this post got more than 8000 views in a week. Awesome!

At the beginning of July I consolidated my talk at Integrate 2017 London into a blog post: Building sentiment analysis solution with Logic Apps. This year’s Integrate was a success, as many of you might have read in the various blog posts that recap the event. And there will be a US Integrate later this year in October, where I will be one of the speakers too.

What else did I do this month? Well, I worked together with Kent on one of his Middleware Friday shows: INTEGRATE 2017 Highlight Show. We recorded our interview session in Dublin. And I wrote a blog post about Azure Functions, go serverless!

Another thing I’d like to mention is that for a customer I worked hard with a team on a POC with Cosmos DB, the Graph model and Azure Search. We have achieved some important milestones, and I will share some of the learnings in the upcoming months.

Holiday

As mentioned already it’s summer holiday season in the Netherlands and I went with my family to Porto in Portugal to visit Sandro and his family.

No surprises here: we went to have lunch, visited Porto and had an ice cream of course (Santini).

Books

Since I was on holiday I was able to read a few books. And during the long road trip to Porto I saw a few movies related to AI, digitalization and IoT:

And what I found interesting about seeing these movies is how they relate to these books I read:

The world is changing around us with sensors, devices and huge amounts of data. Moreover, this makes us more aware of everything around us and smarter, or at least gives us more insights.

Blockchain

During Integrate 2017, my buddy Kent talked a lot about Blockchain and Cryptocurrencies. And I got intrigued, yet I did not fully understand both. Therefore, I bought and read these two books to get a better understanding:

Both are recently published books, relevant and up to date.

Relaxing books

Besides some technical books I read two thrillers to relax and chill:

The first is definitely an amazing, well-written thriller, and if you like the movie Se7en then this is for you!

Music

My favorite albums that were released in July were:

- Decapitated – Anticult

- Prong – Zero Days

- Wintersun – The Forest Seasons

Hence, another month gone by. Next month I will continue to work on the POC and prepare for sessions in September and October.

Cheers,

Steef-Jan


by Steef-Jan Wiggers | Jul 19, 2017 | BizTalk Community Blogs via Syndication

Serverless is hot and happening. It is not just a buzzword, but an interesting new part of Computer Science, which is amazing and also a driver of the second machine age that we are currently experiencing. I recently read two books in sequence: Computer Science Distilled and The Second Machine Age.

The first book deals with the concepts of Computer Science. A few aspects in it caught my attention, like breaking a problem into smaller pieces. Hence, in Azure I could use functions to solve part of a complete problem or to process parts of a large workload. The second book discusses the second machine age around automation, robotics, artificial intelligence and so on. Little repetitive tasks can be built using Functions, Azure Functions to be precise, which can automate those little tasks. Thus, why not consolidate my little research into the current state of Azure Functions into a blog post, with the context of both books in the back of my mind?

Serverless

Serverless computing is a reality and Microsoft Azure provides several platform services that can be provisioned dynamically. Resources are allocated without you worrying about scale, availability and security. And the beauty of it all is that you only pay for what you use.

Azure Functions is one of Microsoft’s serverless capabilities in Azure. Functions enable you to run pieces of code in Azure. Cool eh! They can be run independently, in an orchestration or flow (durable functions), or as part of a Logic App definition or Microsoft Flow.

You provision a Function App, which acts as a container for one or more functions. Subsequently, you either attach an App Service plan to it, when you want to share resources with other services like a web app, or you choose a consumption plan (pay as you go).

Finally, you have the Function App available and you can start adding functions to it, using either Visual Studio, which has templates for building a function, or the Azure Portal (browser). Both provide features to build and test your function. However, Visual Studio also gives you IntelliSense and debugging features.

Function Types

Functions can be built using your language of choice, like C#, F#, or JavaScript (Node.js). Furthermore, there are several types of functions you can build, such as a WebHook + API function or a trigger-based function. The latter can be used to integrate with the following Azure Services and SaaS solutions:

- Cosmos DB

- Event Hubs

- Mobile Apps (tables)

- Notification Hubs

- Service Bus (queues and topics)

- Storage (blob, queues, and tables)

- GitHub (webhooks)

- On-premises (using Service Bus)

- Twilio (SMS messages)

The integration is based upon a binding and trigger, key concepts with Azure Functions. Bindings provide a way to connect to in- and outputs of earlier mentioned services and solutions, see Azure Functions triggers and bindings concepts.

WebHook + API function

A popular quick start template for Azure Functions is WebHook + API function. This type of function is supported through the HTTP/WebHook binding and enables you to build autonomous functions that can be (re)used in various types of applications like a Logic App.

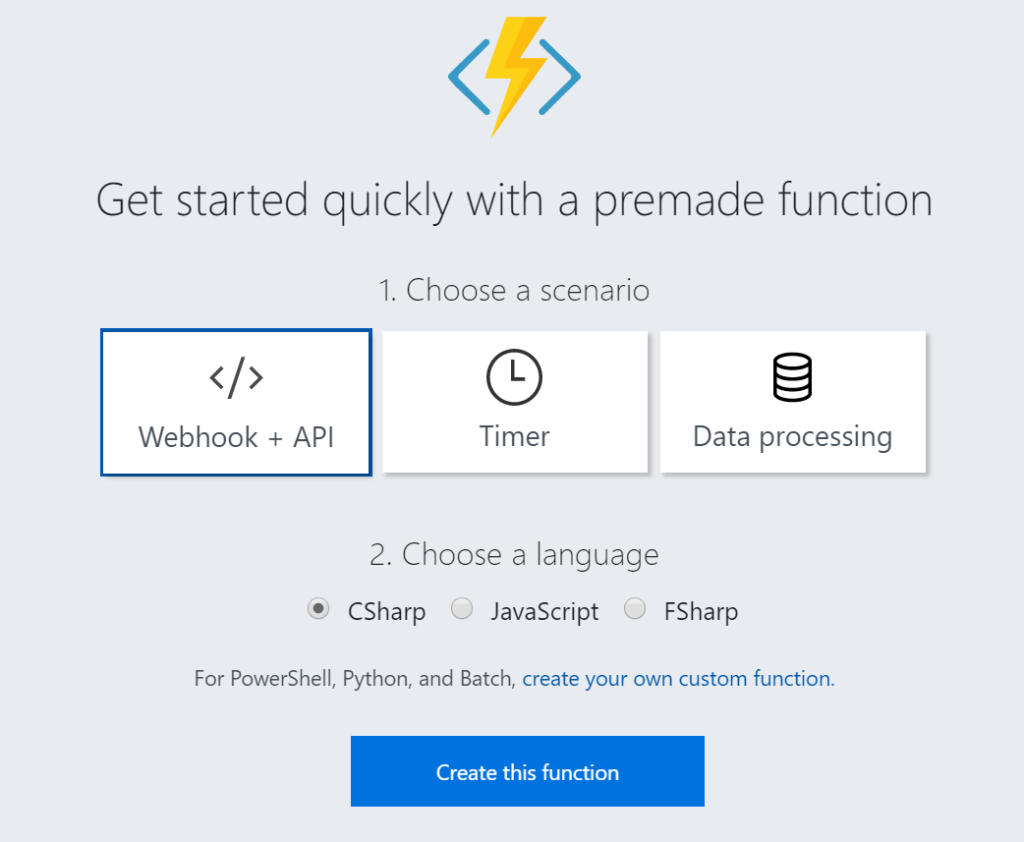

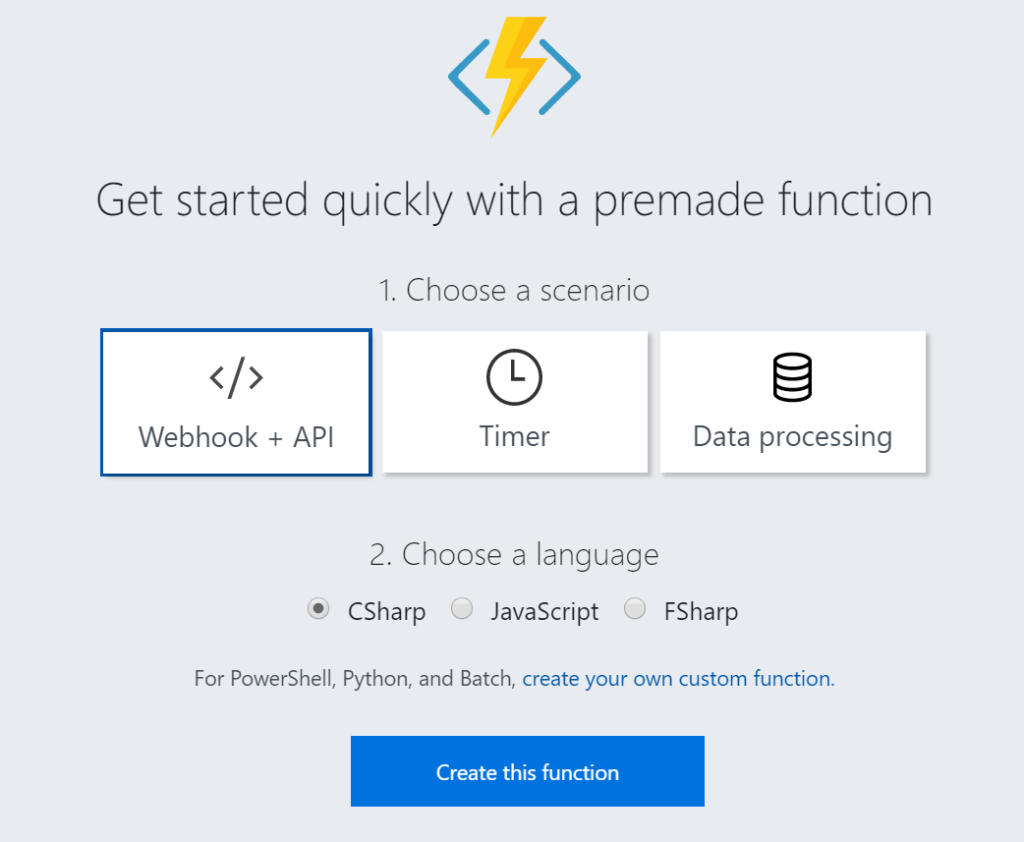

After provisioning a Function App you can add a function easily. As shown below you can select a premade function, choose CSharp and click Create this function.

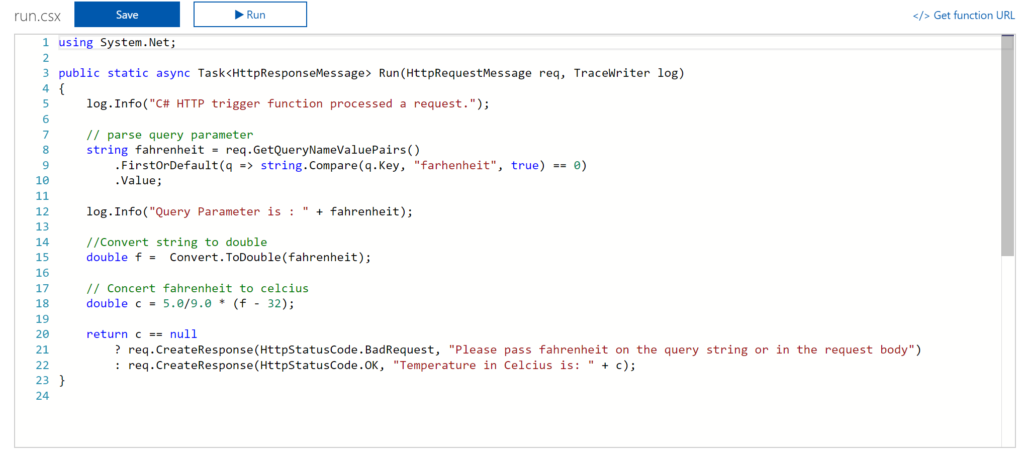

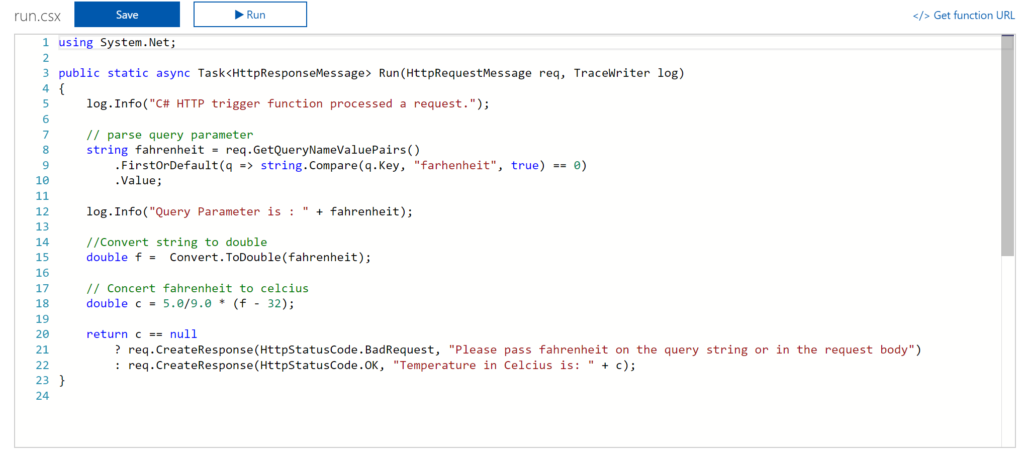

A function named HttpTriggerCSharp1 will be made available to you. The sample is easy to experiment with. I changed the given function to something new like the screenshot below.

And now it gets interesting. You can click Get Function URL, as the function is publicly accessible, that is, if you know the function key. By clicking Get Function URL you’ll receive a URL that looks like this:

https://myfunctioncollection.azurewebsites.net/api/HttpTriggerCSharp1?code=iaMsbyhujlIjQhR4elcJKcCDnlYoyYUZv4QP9Odbs4nEZQsBtgzN7Q==

The code parameter is the default function key, which you can change through the Manage pane in the Function App blade.
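As a small illustration of how such a URL is put together, the Python sketch below composes it from the Function App name, the function name and the key. The helper name and the placeholder key are my own; it only mirrors the URL shape shown above.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_function_url(app_name: str, function_name: str, function_key: str) -> str:
    """Compose an HTTP-triggered function URL with the key in the 'code' query parameter."""
    query = urlencode({"code": function_key})  # percent-encodes characters such as '='
    return f"https://{app_name}.azurewebsites.net/api/{function_name}?{query}"


# Placeholder key: never put a real function key in source code.
url = build_function_url("myfunctioncollection", "HttpTriggerCSharp1", "<your-function-key>")

if __name__ == "__main__":
    print(url)
    print(parse_qs(urlsplit(url).query)["code"])
```

Instead of the query string, the key can also be sent in the x-functions-key request header, which keeps it out of the URL.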

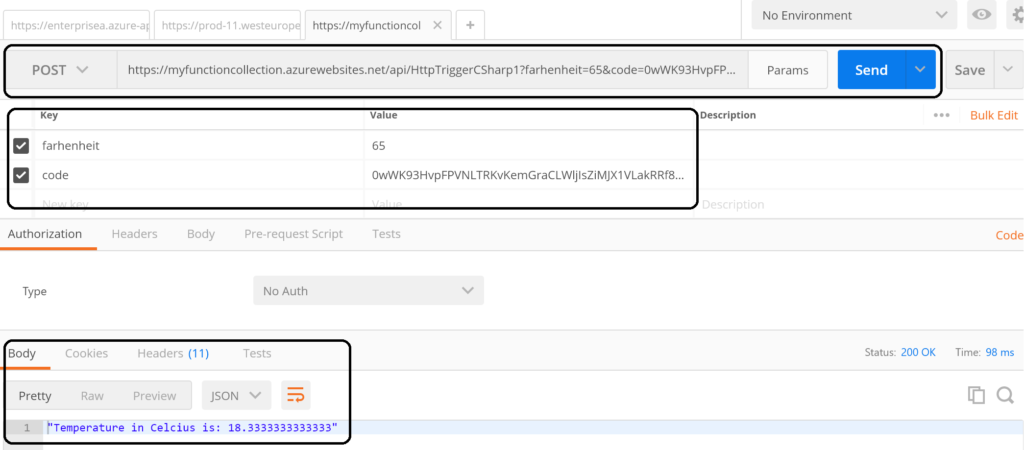

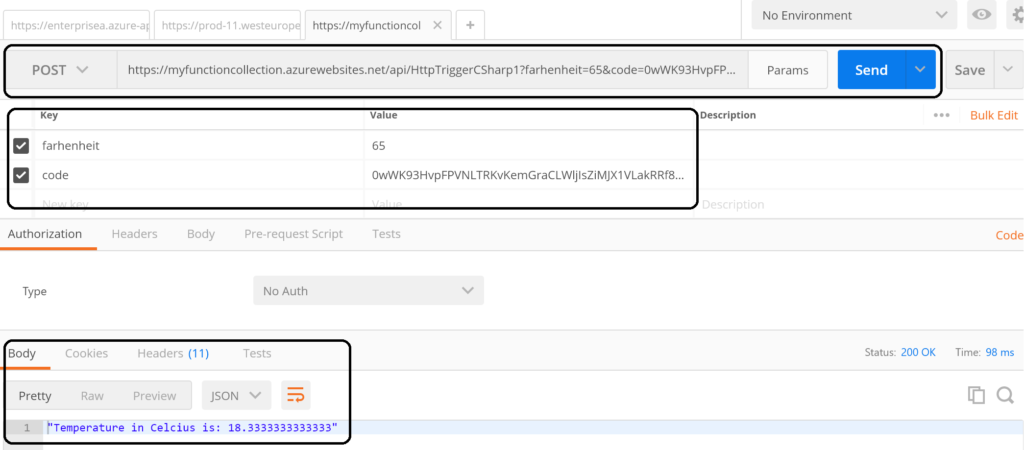

Since your function is accessible, you can call it using, for instance, Postman.

The screenshot above shows an example of a call to the function endpoint. The request includes the function key (code). However, a call like the one above might not be as secure as you need. Hence, you can secure the function endpoint by using the API Management service in Azure. See the Using API Management to protect Azure Functions (Middleware Friday) blog post, which explains how to do that.

Integrate and Monitor

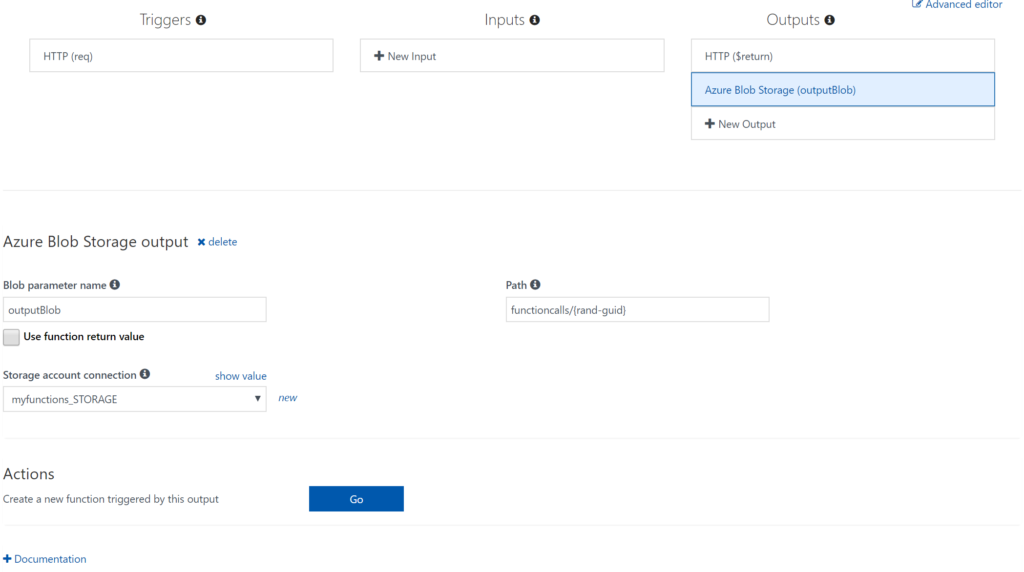

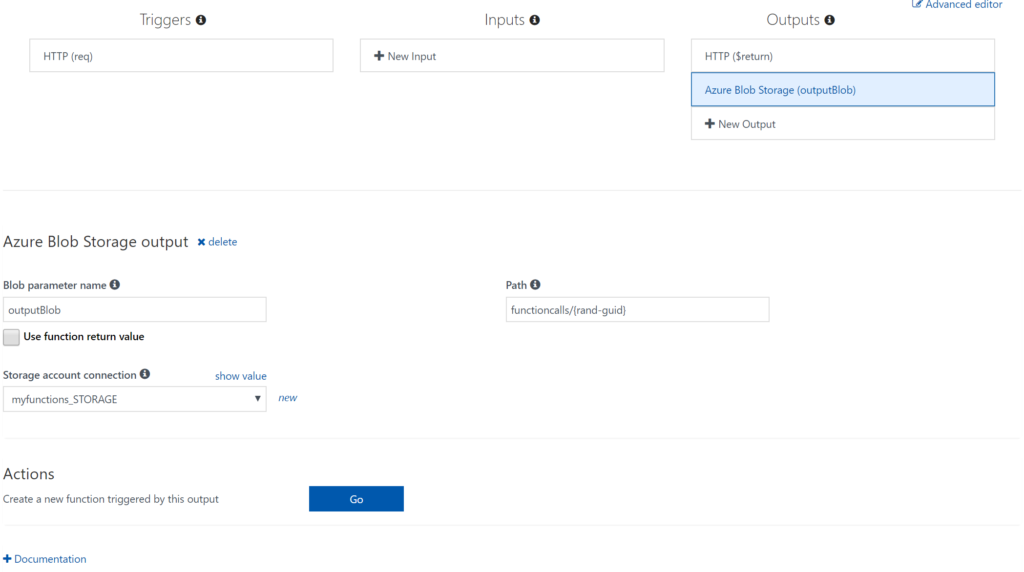

You can bind Azure Storage as an extra output channel for a function. Through the Integrate pane I can add an extra output to the function. Configure the new output by choosing Azure Blob Storage, set Storage Account Connection and specify the path.

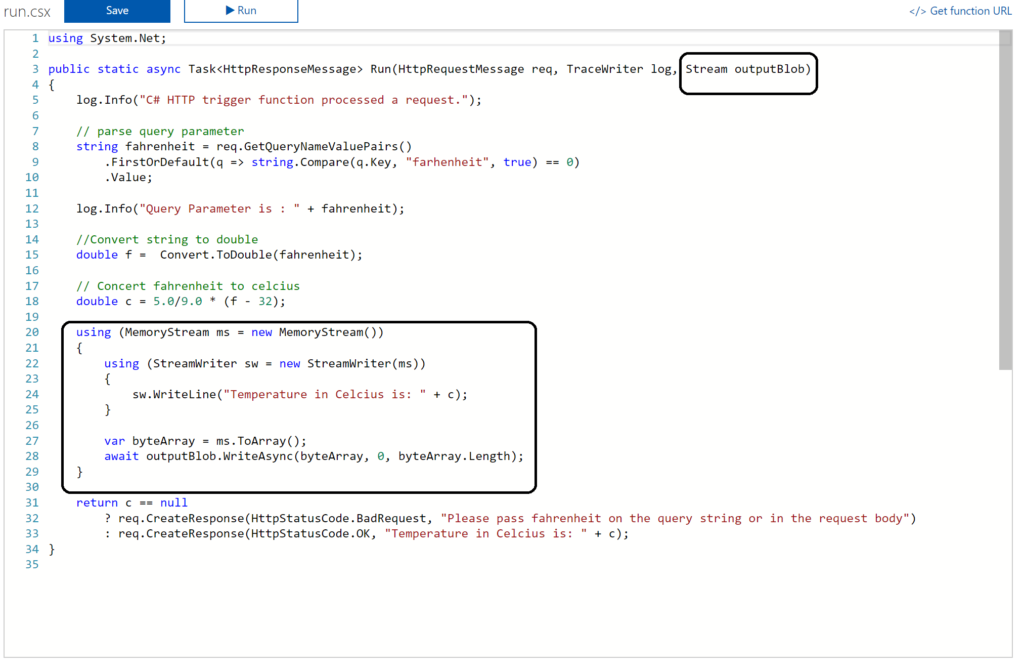

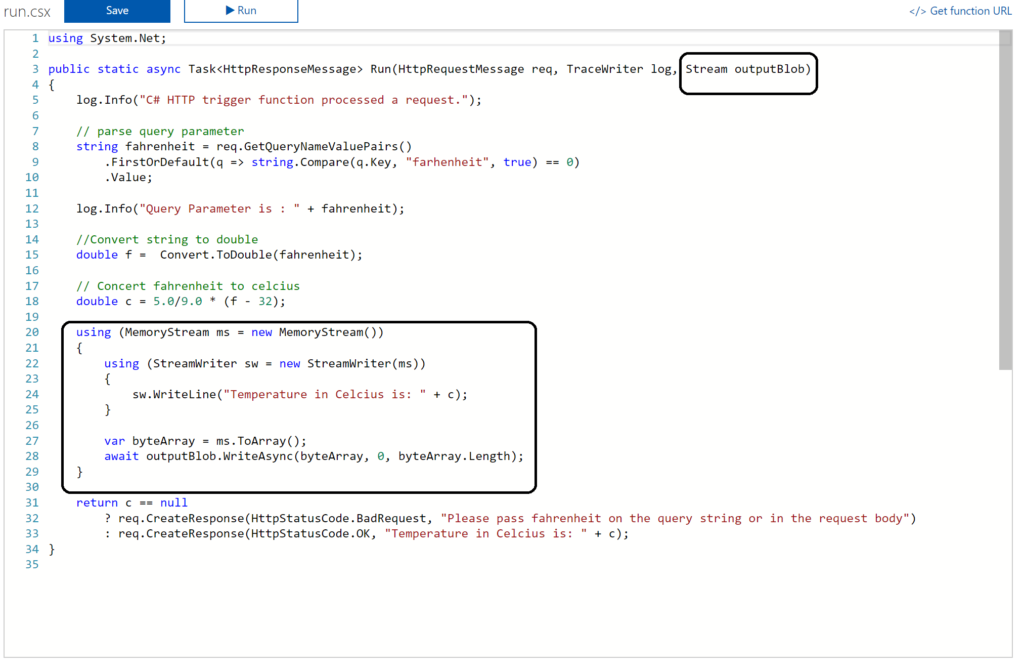

Next, you have to update the function signature with an outputBlob parameter and write to outputBlob in the function body.

Finally, you can monitor your functions through the Monitor pane, which provides you with some basic insights (logs). For a richer monitoring experience, including live metrics and custom queries, Microsoft recommends using Azure Application Insights. See also Monitoring Azure Functions.

Visual Studio Experience

Azure Functions can be built with Visual Studio. However, the templates are not available after a default installation of Visual Studio; you need to download them. For Visual Studio 2017, the templates for Azure Functions are available on the marketplace. For Visual Studio 2015, read this blog post, which includes the steps I followed for my Visual Studio 2015 installation.

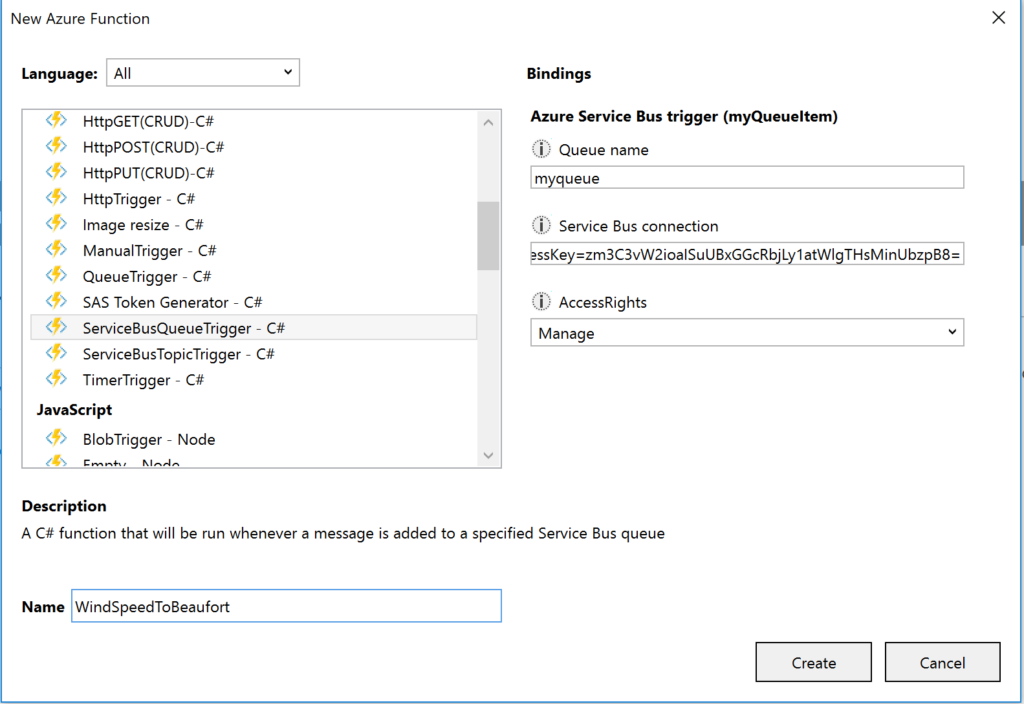

Once the templates are available in your Visual Studio version (2015 or 2017) you can create a FunctionApp project. Within the created FunctionApp project you can add functions. Right click the project and select Add –> New Azure Function. Now you can choose what type of function you can build. You will have a similar experience as with the portal.

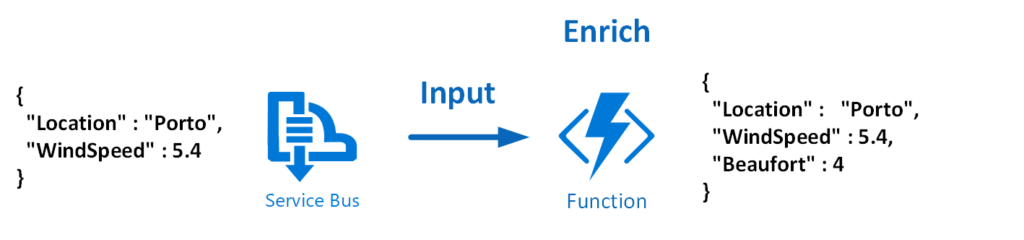

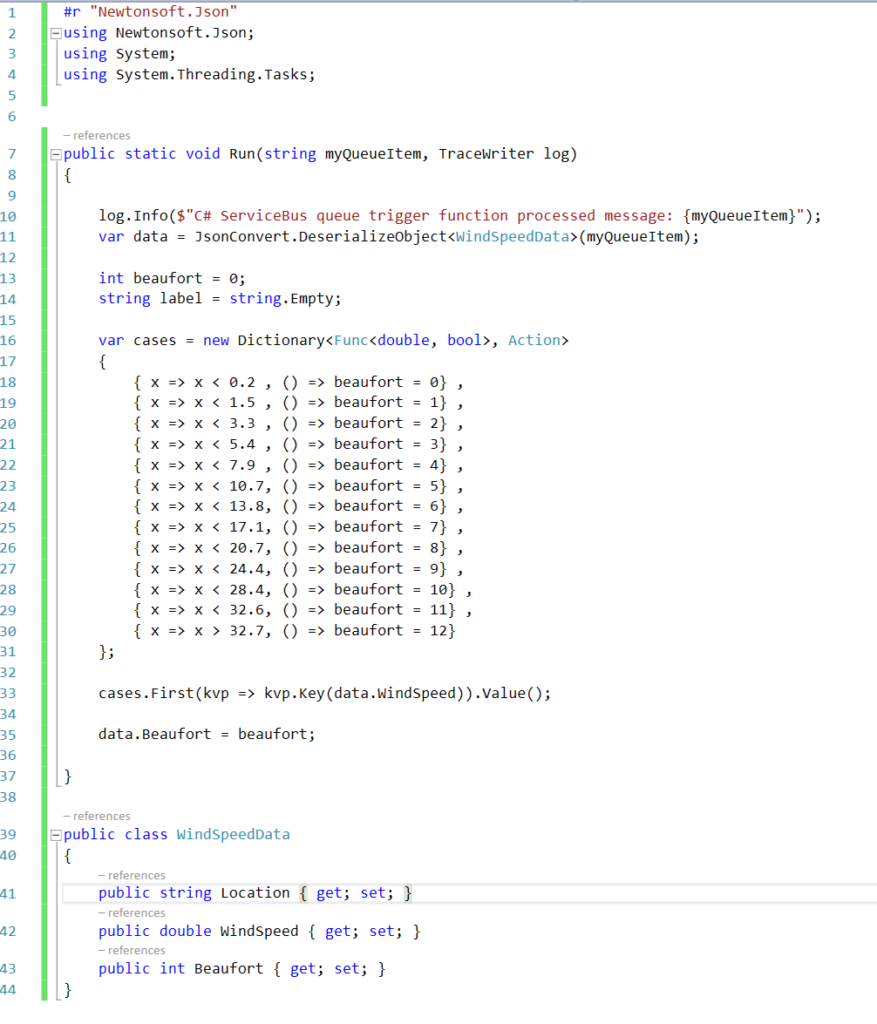

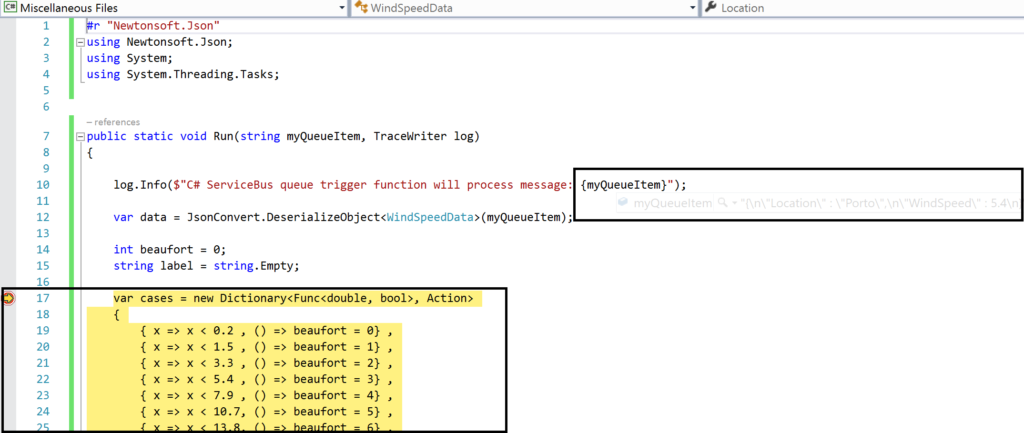

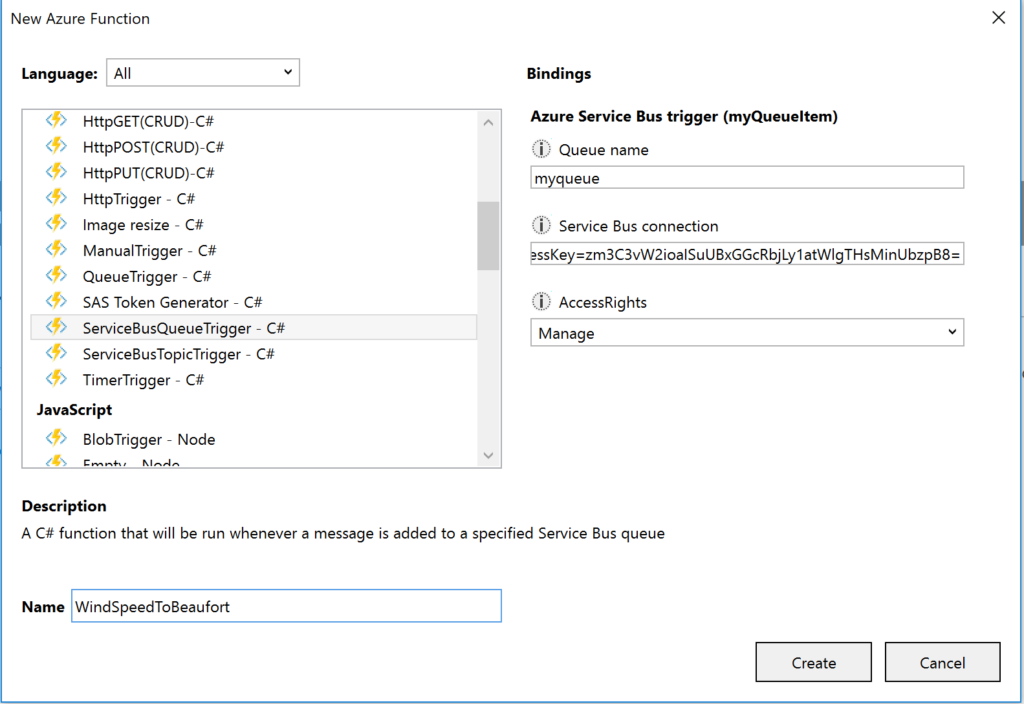

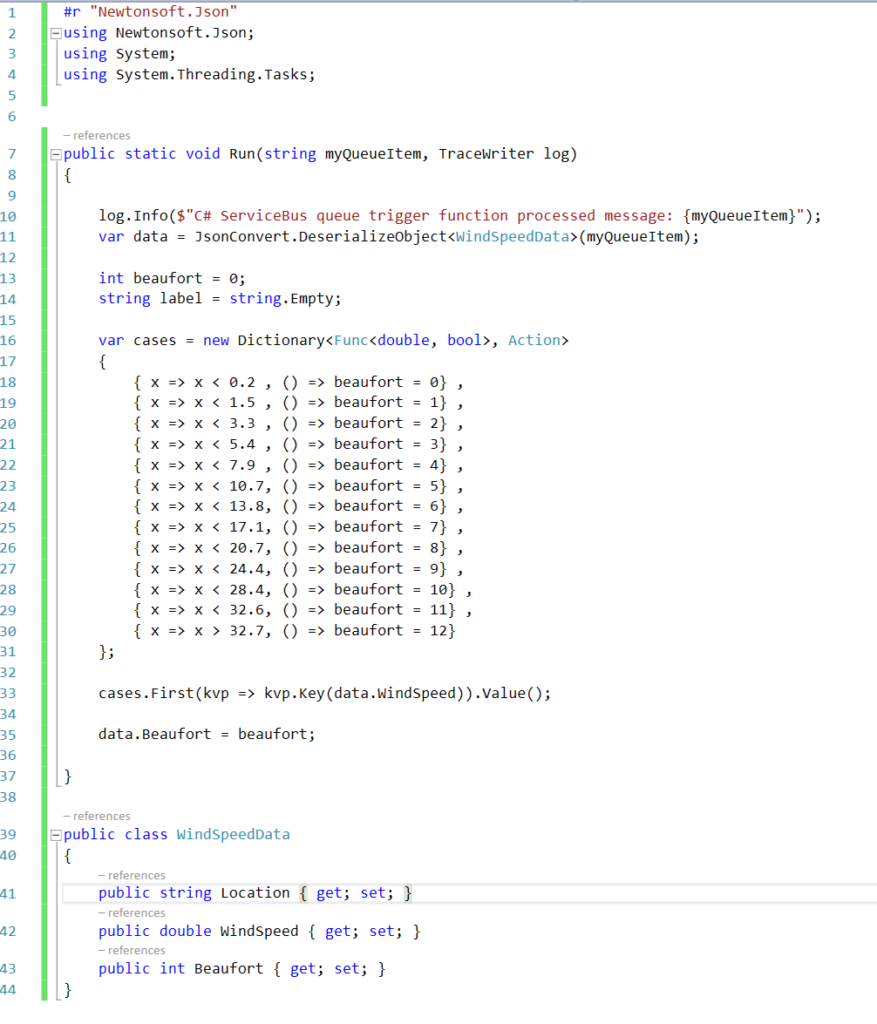

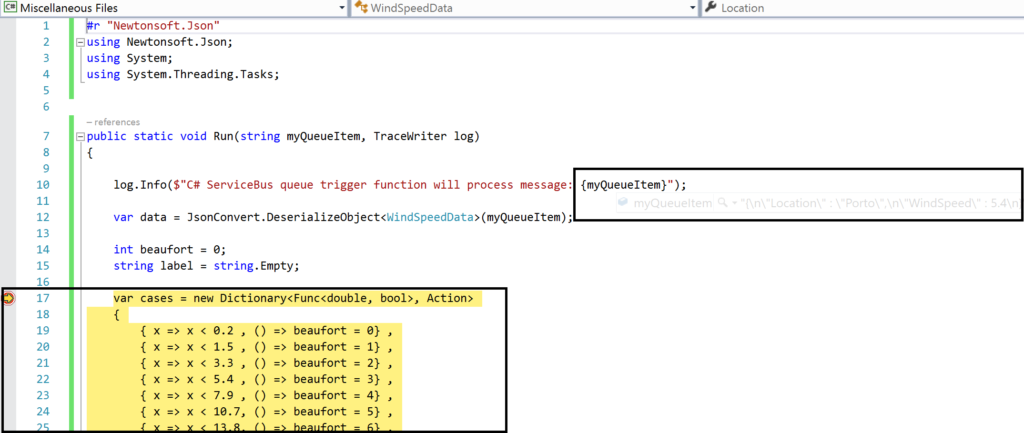

For instance you can create a ServiceBusTrigger Function (WindSpeedToBeaufort), which will be triggered once a message arrives on a queue (myqueue).

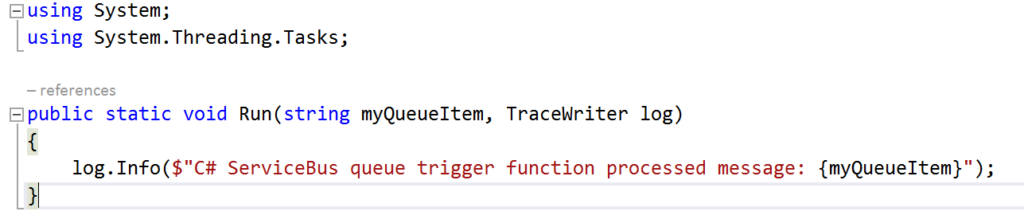

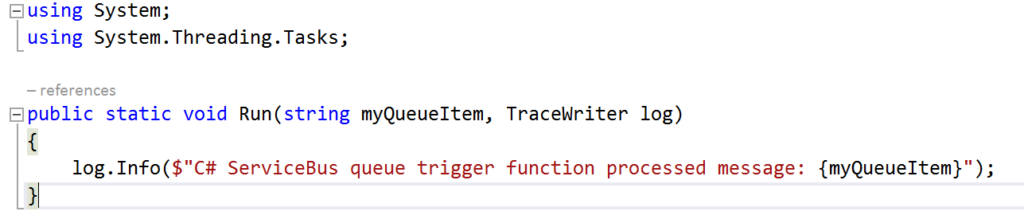

As a result you will see the following code once you hit Create:

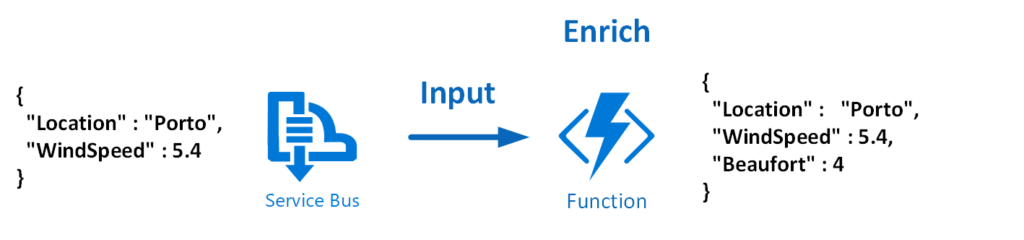

Now let’s work on the function so it will resemble the diagram below:

To modify the function that does the above the necessary code is shown below:

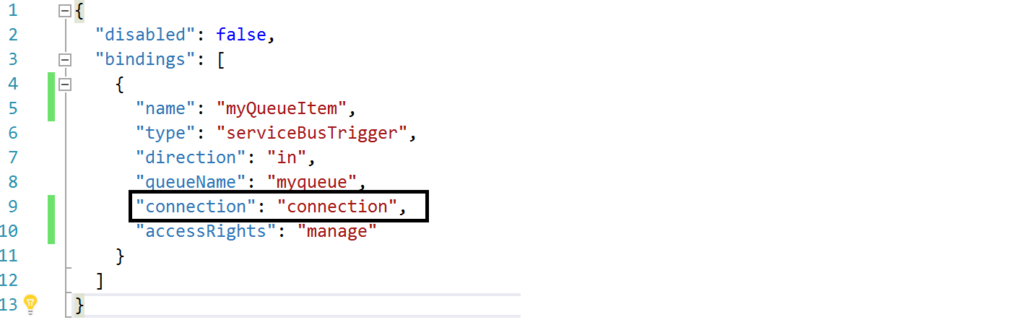

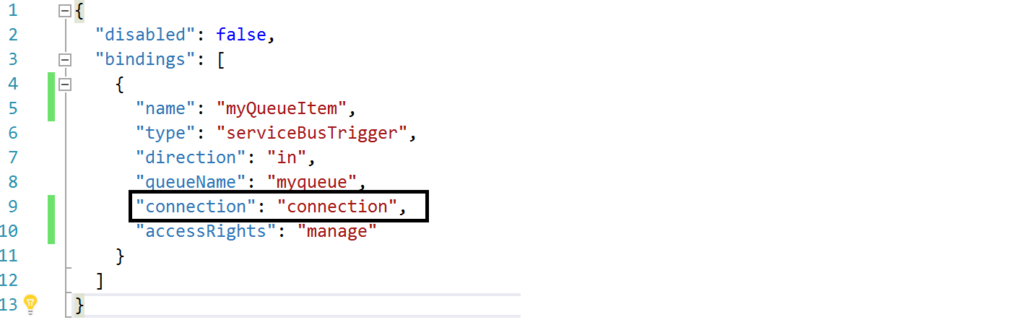
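Since the function code itself only lives in the screenshots, here is a rough Python sketch of what a wind speed to Beaufort conversion could look like. This is not the code from my project: the function name, the choice of metres per second as input unit and the threshold table (the commonly published Beaufort lower bounds) are assumptions for illustration.

```python
from bisect import bisect_right

# Lower bounds in m/s for Beaufort numbers 1 through 12 (assumed input unit: m/s).
_BEAUFORT_LOWER_BOUNDS_MS = [0.3, 1.6, 3.4, 5.5, 8.0, 10.8,
                             13.9, 17.2, 20.8, 24.5, 28.5, 32.7]


def wind_speed_to_beaufort(speed_ms: float) -> int:
    """Return the Beaufort number (0-12) for a wind speed in metres per second."""
    if speed_ms < 0:
        raise ValueError("wind speed cannot be negative")
    # Count how many lower bounds the speed has reached; that count is the Beaufort number.
    return bisect_right(_BEAUFORT_LOWER_BOUNDS_MS, speed_ms)


if __name__ == "__main__":
    for speed in (0.0, 4.2, 10.0, 40.0):
        print(speed, "m/s ->", wind_speed_to_beaufort(speed), "Bft")
```

In the actual function, a conversion like this would run on the body of the message that arrives on myqueue.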

And the json.setting needs to be renamed to local.settings.json, and the function.json needs to be modified to:

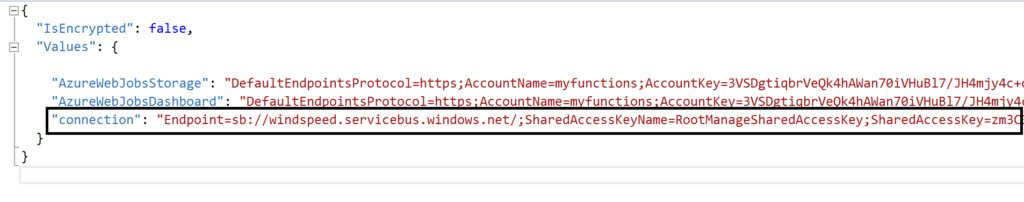

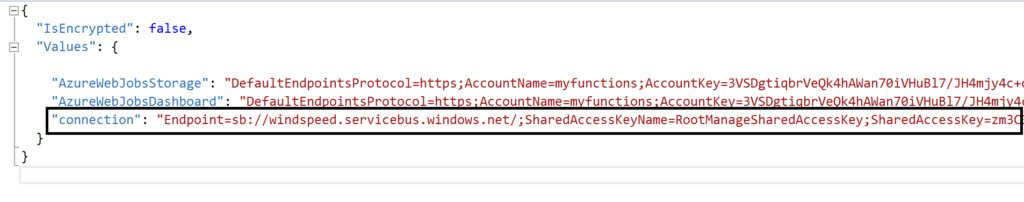

The connection string is moved to the local.settings.json as depicted below:

This change is important; otherwise you will run into errors.

Debugging with Visual Studio

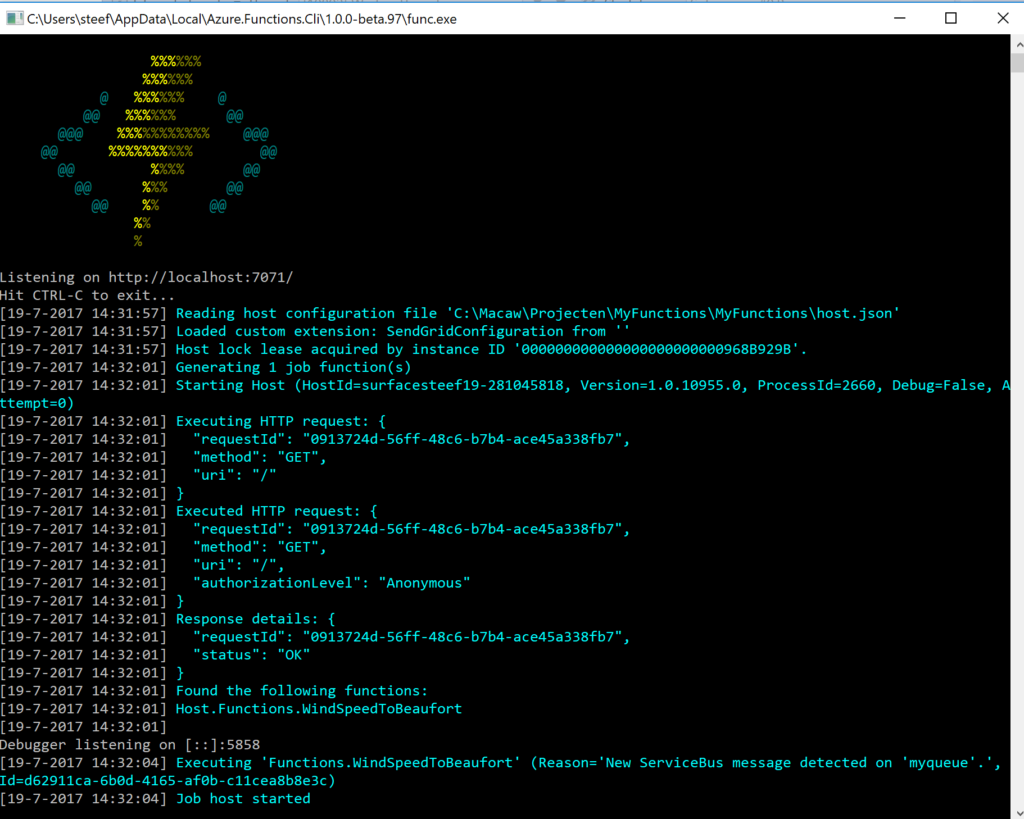

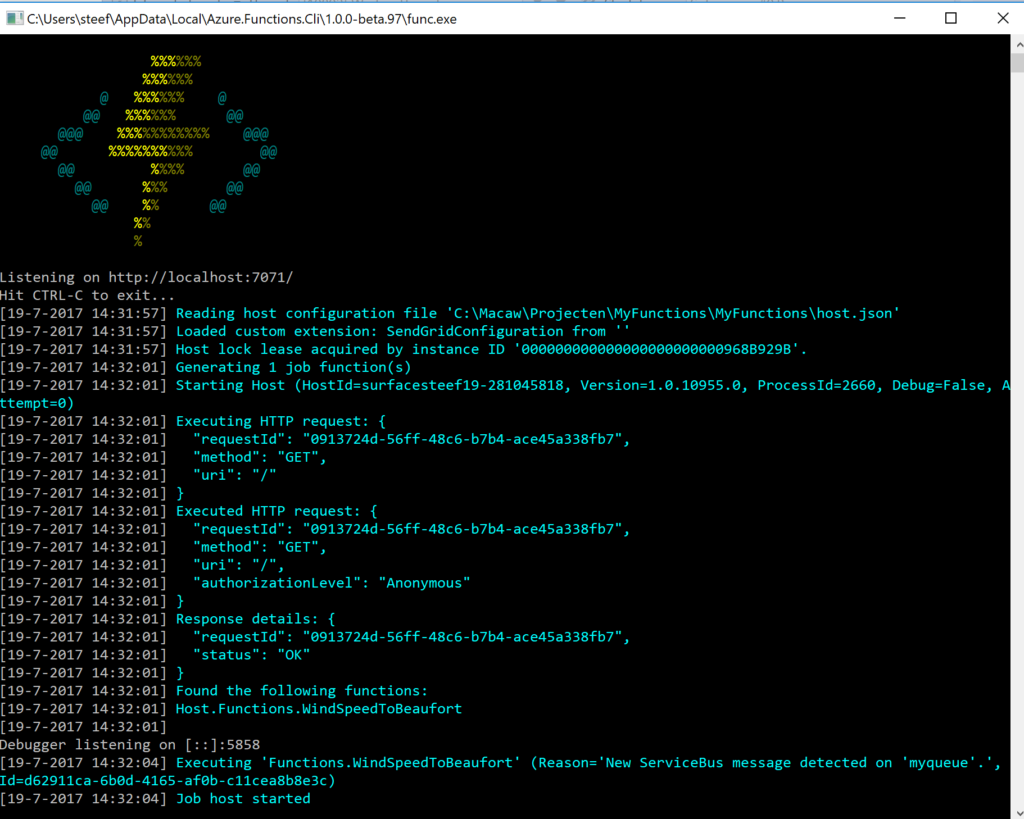

Visual Studio provides the capability to debug your custom function. Compile and start a debug instance. A command line dialog box will appear and your function is running (i.e. hosted and running).

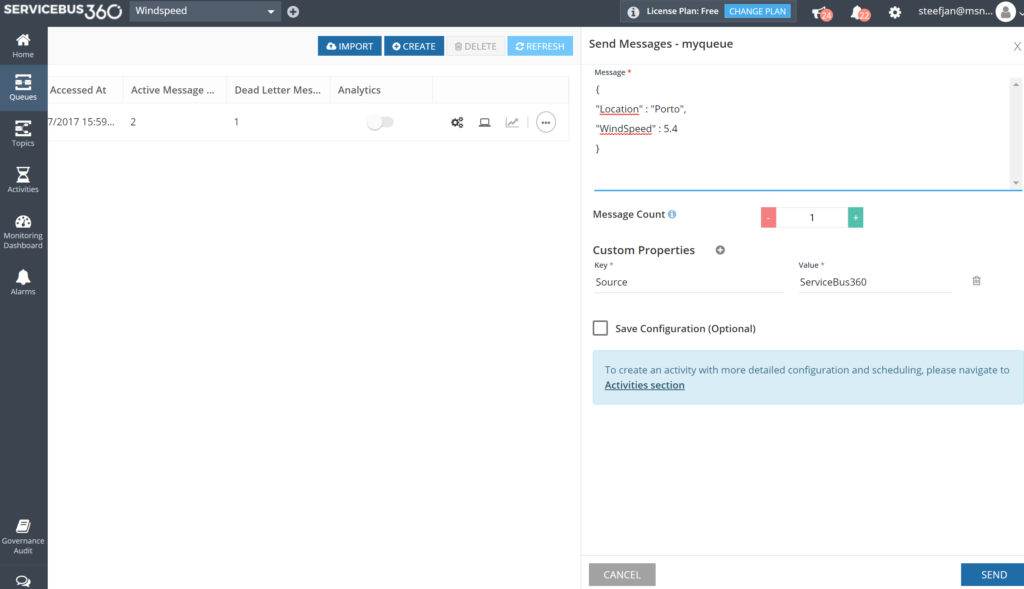

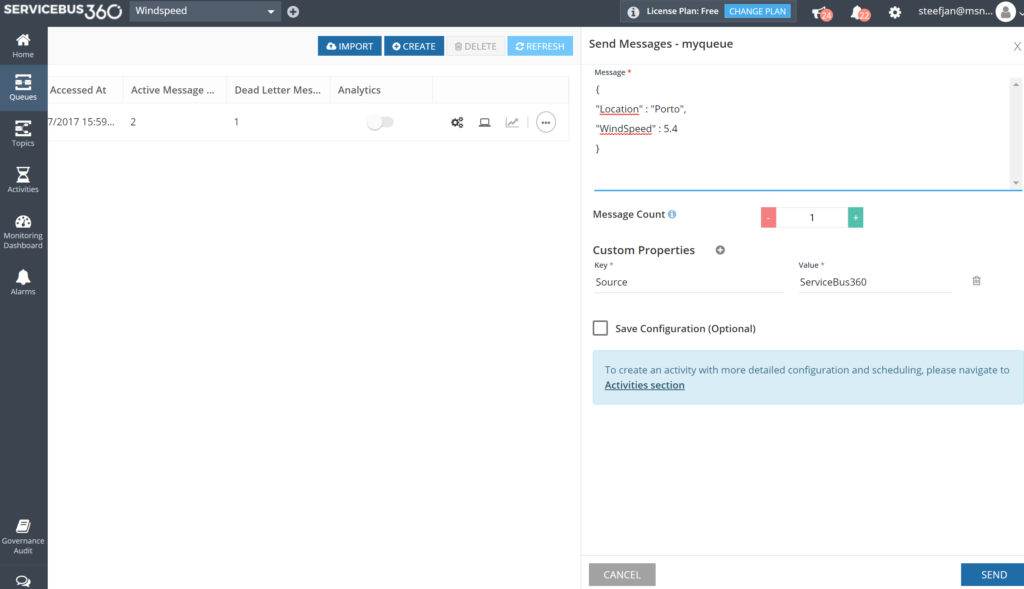

To debug our function in this blog a message is sent to myqueue using the ServiceBus360 service.

Once the message arrives at the queue it will trigger the function. Hence, debugging can start at the position in the code where a breakpoint has been set.

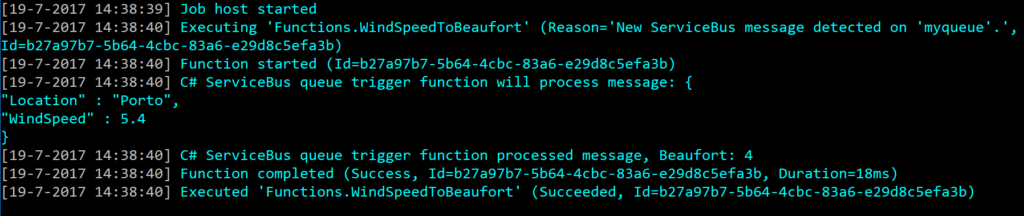

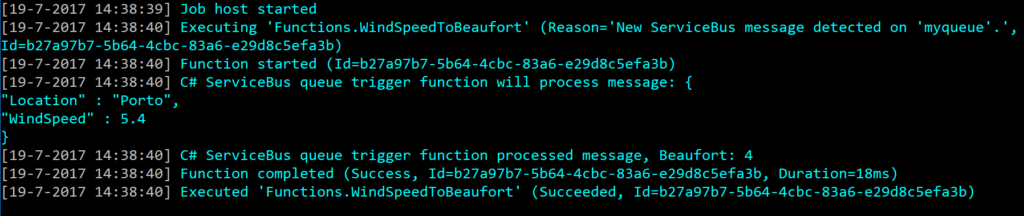

And the result of execution will be visible in the command line dialog box:

In conclusion, this is the debugging experience you will have with Visual Studio, combined with having IntelliSense while developing your function.

Deployment

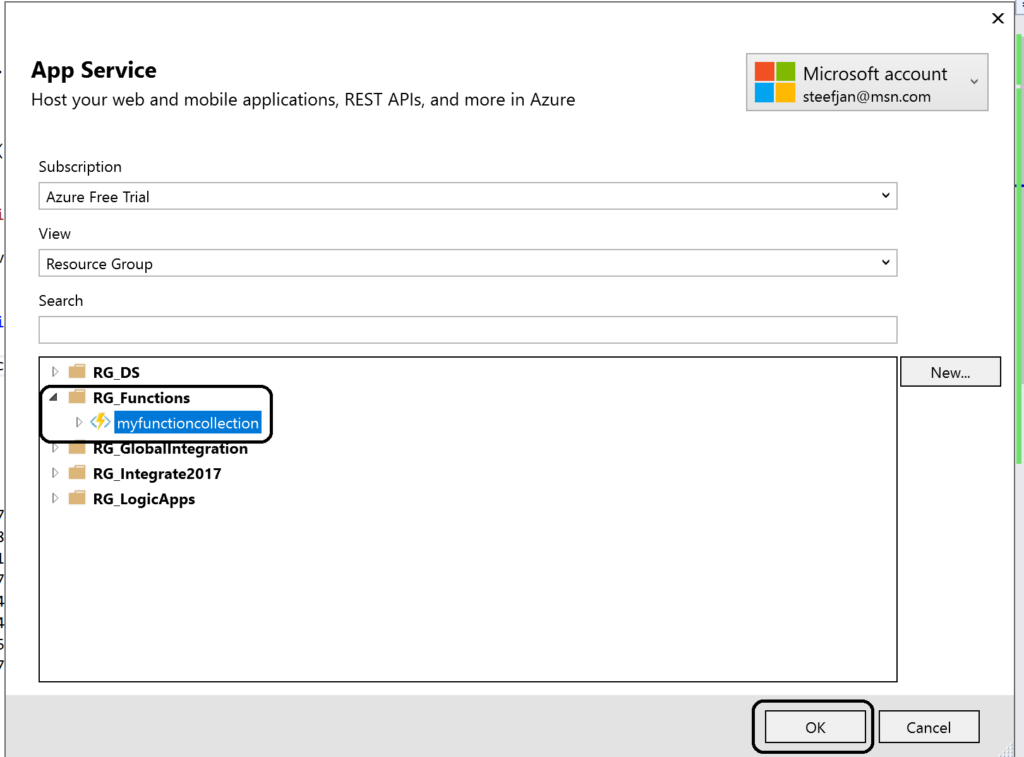

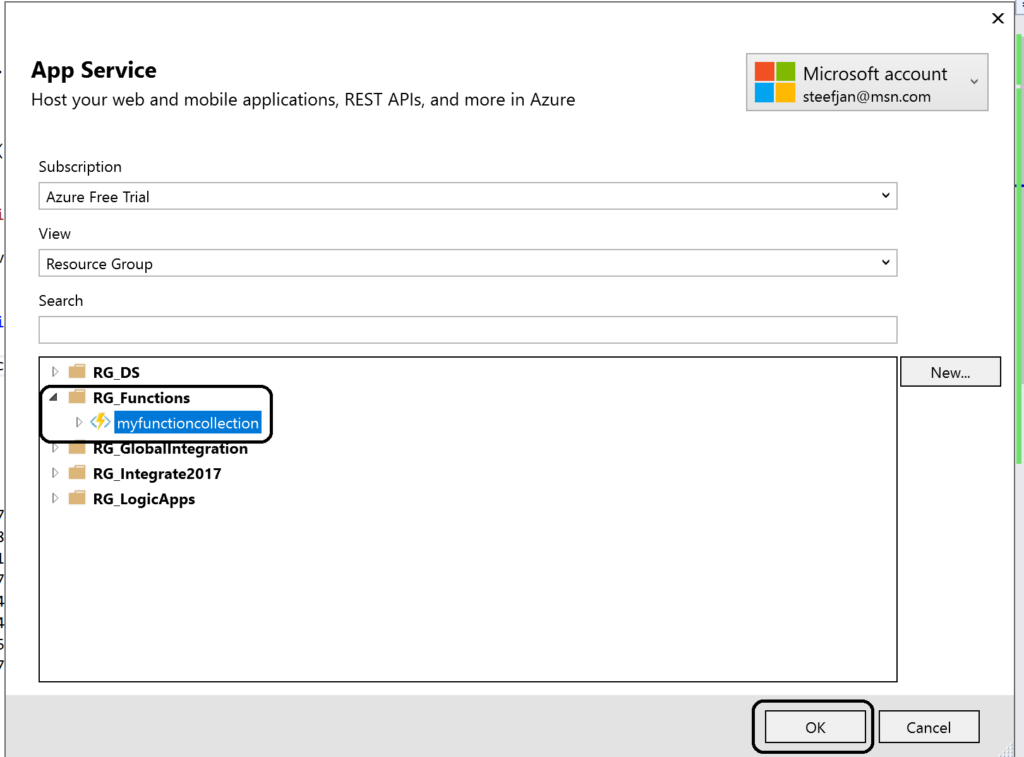

You have built and tested your function to your satisfaction in Visual Studio. Now it’s time to deploy it to Azure: right-click the project and choose Publish. A dialog will appear and you can choose AppService. Subsequently, if you are logged in with your Azure credentials, you will see one or more resource groups, based on the subscription.

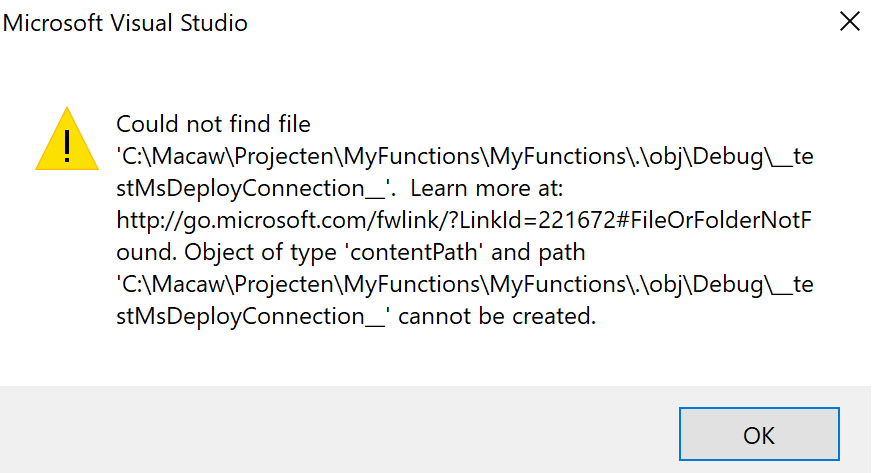

You can click OK and proceed with next steps to publish your function to the desired resource group –> function app. However, this will in the end not work!

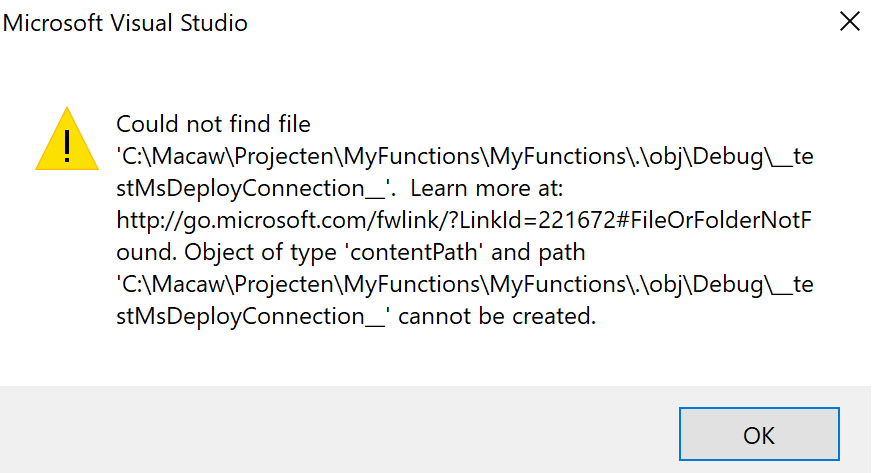

As a result you will need a workaround, as explained in Publishing a .NET class library as a Function App; at least that’s what I found online. In the end, I was able to deploy it. However, I stumbled on another error in the portal:

Error:

Function ($WindSpeedToBeaufort) Error: Microsoft.Azure.WebJobs.Host: Error indexing method ‘Functions.WindSpeedToBeaufort’. Microsoft.Azure.WebJobs.ServiceBus: Microsoft Azure WebJobs SDK ServiceBus connection string ‘AzureWebJobsconnection‘ is missing or empty.

Hence, not a truly positive experience. In the end it’s missing a setting i.e. application setting of the Function App.

Anyway, another workaround is to add a new function to the existing Function App. Choose the ServiceBusTrigger template, create it, and finally copy the code from the local project into the template, over the existing code. This works, as you now see a setting for the Service Bus connection string in the application settings and the reference in the function.json file.
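The error boils down to a connection name in function.json that has no matching application setting. The Python sketch below shows the kind of check you could run before publishing. It is a hypothetical helper, and it assumes the runtime accepts a setting named either exactly like the connection value or with an AzureWebJobs prefix, which is what the error message above suggests.

```python
from typing import Any, Dict, List


def missing_connection_settings(function_json: Dict[str, Any],
                                app_settings: Dict[str, str]) -> List[str]:
    """List connection names used by bindings that have no non-empty application setting."""
    missing = []
    for binding in function_json.get("bindings", []):
        name = binding.get("connection")
        if not name:
            continue  # binding does not reference a connection setting
        # Assumption: either the plain name or the AzureWebJobs-prefixed name is accepted.
        candidates = (name, "AzureWebJobs" + name)
        if not any(app_settings.get(candidate) for candidate in candidates):
            missing.append(name)
    return missing


# Illustrative binding definition for the Service Bus triggered function.
function_json = {
    "bindings": [
        {
            "type": "serviceBusTrigger",
            "name": "myQueueItem",
            "direction": "in",
            "queueName": "myqueue",
            "connection": "connection",
        }
    ]
}

if __name__ == "__main__":
    print(missing_connection_settings(function_json, {}))
```

An empty result means every binding can find its connection string; anything else points at the setting you still have to add to the Function App.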

Considerations

There are some considerations around Azure Functions you need to be aware of. First of all, there is the cost of execution, which determines whether you will choose a consumption or app plan. See Function Pricing and use the calculator to get a better indication of costs. Also consider some of the best practices around functions. These practices are:

- Azure Functions should do just one task,

- finish as quickly as possible,

- be stateless

- and be idempotent.

See also Optimize the performance and reliability of Azure Functions.
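To illustrate the stateless and idempotent practices, here is a minimal Python sketch. The names are hypothetical and the dictionary stands in for an external store such as a table or blob; the point is that the function keeps no state of its own and that delivering the same message twice has the same effect as delivering it once.

```python
from typing import Dict


def handle_message(message_id: str, reading: float, store: Dict[str, float]) -> bool:
    """Record a reading once per message id.

    Returns True when the reading was stored and False when the message
    had already been processed, so a redelivery changes nothing.
    """
    if message_id in store:
        return False  # duplicate delivery: leave the stored value untouched
    store[message_id] = reading
    return True


if __name__ == "__main__":
    external_store: Dict[str, float] = {}  # stand-in for external storage
    print(handle_message("msg-1", 7.5, external_store))
    print(handle_message("msg-1", 7.5, external_store))
    print(external_store)
```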

Finally, be aware of the fact that some features of Azure Functions are still in preview, like Proxies, Slots and the Visual Studio Tools.

Resources

This blog contains several links to resources you might like to explore. An excellent starting point for researching Azure Functions is https://github.com/Azure/Azure-Functions. And if you are interested in how Functions can play a role in Logic Apps, have a look at this blog post: Building sentiment analysis solution with Logic Apps.

Explore Azure Functions, go serverless!

Cheers,

Steef-Jan


by Steef-Jan Wiggers | Jul 7, 2017 | BizTalk Community Blogs via Syndication

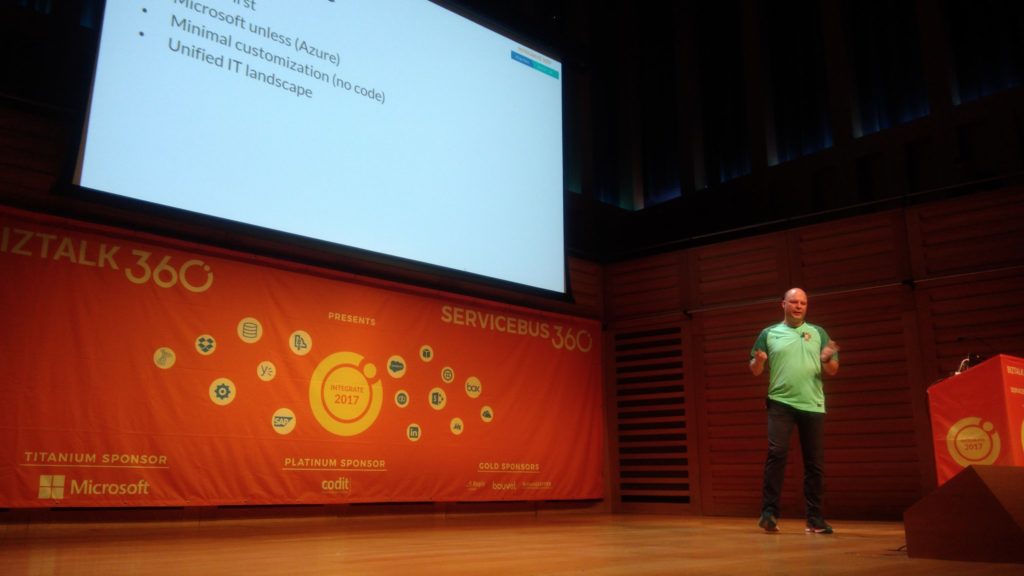

Integrate 2017, a well-organized Microsoft Integration focused event, took place from 26 to 28 June at Kings Place in London. It attracted 380-plus attendees from 50 different countries and had 28 speakers from around the globe, including the Microsoft Product Group. I did a session around Logic Apps from the consumer, end user, and business perspective and used sentiment analysis for my demo.

Context

To provide you with some context: the Logic Apps service was the most prominent technology during the three-day event. This Azure service became generally available a year ago and is starting to build momentum as a premier cloud integration capability. Most of all, the service fits rather well in the complete Azure Platform with its connectors to a wide variety of other Azure services, and in addition it connects with SaaS solutions such as Twitter, Zendesk, Salesforce, ServiceNow, PagerDuty, and Slack.

During Integrate 2017 I talked about empowering business with Logic Apps. My goal was to show the audience the value of Logic Apps for the business. The service is a true iPaaS according to the definition Wikipedia provides online. It is a part of Azure, which is multi-tenant; it has a subscription model or, in the case of Logic Apps, is consumption based (micro-billing); it provides pre-built, readily available connectors; and deployment, management and monitoring happen through the platform.

iPaaS

If you look at how for instance Gartner describes iPaaS then again Logic Apps are a true cloud-native integration platform. Consumers of Logic Apps in Azure can implement data, application, API and process integration projects spanning cloud-resident and on-premises endpoints. I will quote the Gartner report here:

“This is achieved by developing, deploying, executing, managing and monitoring “integration flows” (aka “integration interfaces”) — that is, integration applications bridging between multiple endpoints so that they can work together.”

And the iPaaS capabilities typically include according to Gartner:

• Communication protocol connectors (FTP, HTTP, AMQP, MQTT, Kafka, AS1/2/3/4, etc.)

• Application connectors/adapters for SaaS and on-premises packaged applications

• Several data formats (XML, JSON, ASN.1, etc.)

• Data standards (EDIFACT, HL7, SWIFT, etc.)

• Mapping and transformation of data

• Quality of data

• Routing and Orchestration

• Integration flow development and lifecycle management tools

• Integration flow operational monitoring and management

• Full lifecycle API management

Looking at the capabilities above, Logic Apps in combination with an Integration Account and API Management provides them.

Gartner Quadrant

Logic Apps is positioned in the Visionaries box of the Gartner Quadrant, which means that the vendor of the service has a lower ability to execute than the leaders (in the Quadrant, vendors like Dell Boomi and Informatica), a smaller install base, a certain immaturity, timid marketing, a reactive sales operation and a lack of strategic commitment to the market.

My take on that is that Logic Apps is relatively new in the iPaaS market.

- A year ago it became generally available. And it is maturing at a fast pace with new feature releases every two weeks and an expanding set of connectors.

- There was sales representation from Microsoft at Integrate 2017.

- And finally, the commitment is strong, with the Pro Integration Product Group present at various conferences throughout 2017. This year they have attended or will attend Ignite, Build, Integrate 2017 Europe, Inspire (formerly WPC), Integrate 2017 US, Integration Bootcamp, Global Integration Bootcamp, Global Azure Bootcamp, and smaller User Group meetings worldwide.

Hence I struggle a bit with the classification of the current state of Logic Apps. I strongly feel the service is close to the border between visionary and leader. It has the promise to become a true iPaaS leader.

Benefits

Businesses can reap the benefits of this service, as the attention goes towards solving the problem(s) they are facing. Logic Apps is a part of a large platform, and it can deliver solutions fast, as there’s no need for procuring servers or other infrastructure-related capabilities. This applies to businesses that have moved to the cloud and require cloud-native solutions; that is where Logic Apps is fit for purpose. The costs are lower and the time to market of your solutions is short.

Use Cases

The connectors provided by Logic Apps can help you build solutions for various enterprise scenarios. For instance, you can leverage Cognitive Services to identify a person and subsequently grant them access to resources, start an onboarding process, or provide access to a facility. Another example of leveraging Cognitive Services is to perform text analysis on tweets, which I will explain in further detail later in this post.

The text analysis can be useful to detect sentiment in a tweet, particularly around a #hashtag for, for instance, a person like Trump, a product or a service. I mention President Trump here as the current US President uses this social media service quite extensively, and the tweets he produces are evaluated intensively for stock trading.

Dynamics 365

Other conceivable use cases revolve around the Dynamics 365 CRM Online connector. This connector provides connectivity to Dynamics CRM, which offers various features like customer service automation, marketing campaigns, and social engagement.

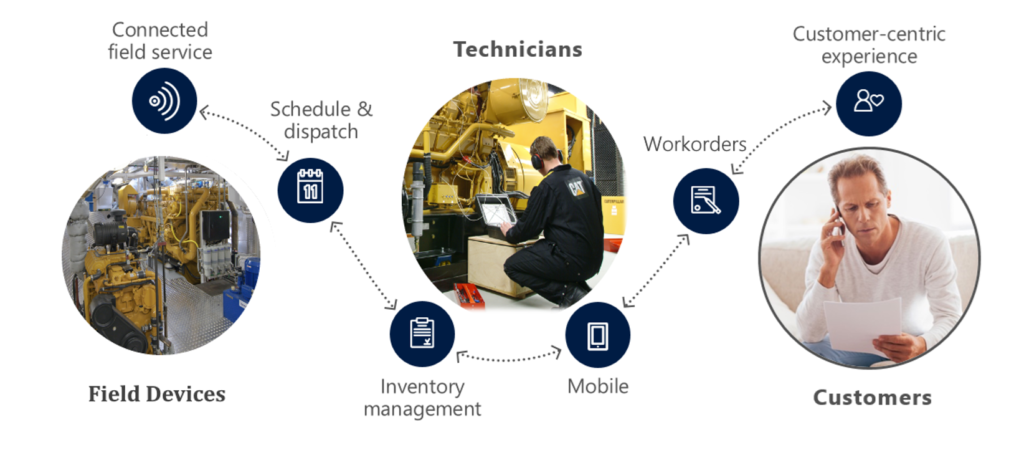

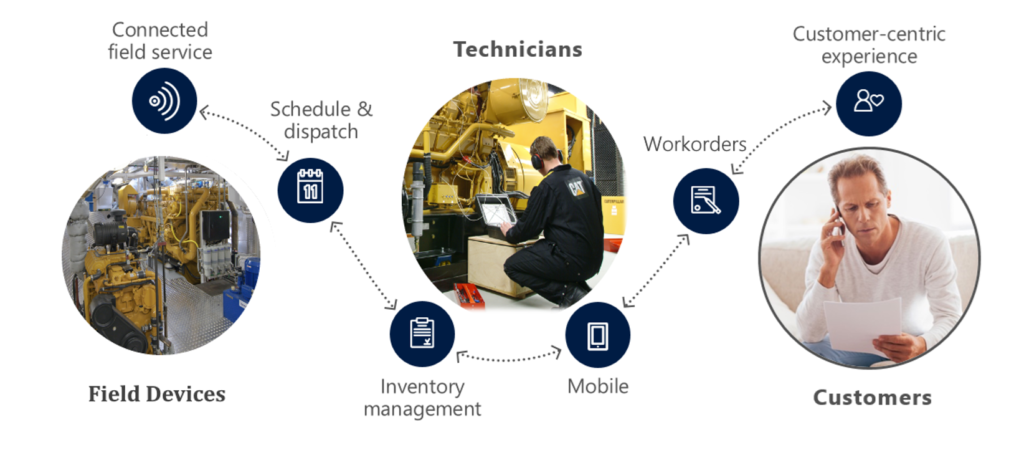

Dynamics 365 has several capabilities or flavors; one is Dynamics for Field Service, which provides a complete Field Service management solution, including service locations, customer assets, preventative maintenance, work order management, resource management, product inventory, scheduling and dispatch, mobility, collaboration, customer billing, and analytics. Therefore, during Integrate I talked about leveraging this solution in combination with IoT devices. The picture below shows the data flow from device to the Dynamics Field Service features.

Data from a device can be consumed by the IoT Hub service in Azure and pushed to a Service Bus queue, which can be read by a Logic App. The Logic App forwards the data into Dynamics Field Service through the CRM connector. In conclusion, a Logic App, or a number of them, can be part of an end-to-end solution for various field services.

The previous paragraph discussed one of the many possible use cases for Logic Apps. Moreover, many other scenarios are conceivable since Logic Apps are part of a bigger platform, which means you can leverage them with other Azure services or create flows to move data around. With sentiment analysis, you can detect sentiment within a text using one of the Cognitive Services APIs. The sentiment analysis API returns a numeric score between 0 and 1 for a given text. Scores close to 1 indicate positive sentiment and scores close to 0 indicate negative sentiment; a score of 0.5 is neutral. With Logic Apps, you can receive tweets at a certain interval (recurrence) based on a filter, i.e. a hashtag, and feed the body into the Detect Sentiment action.

Sentiment Analysis Solution

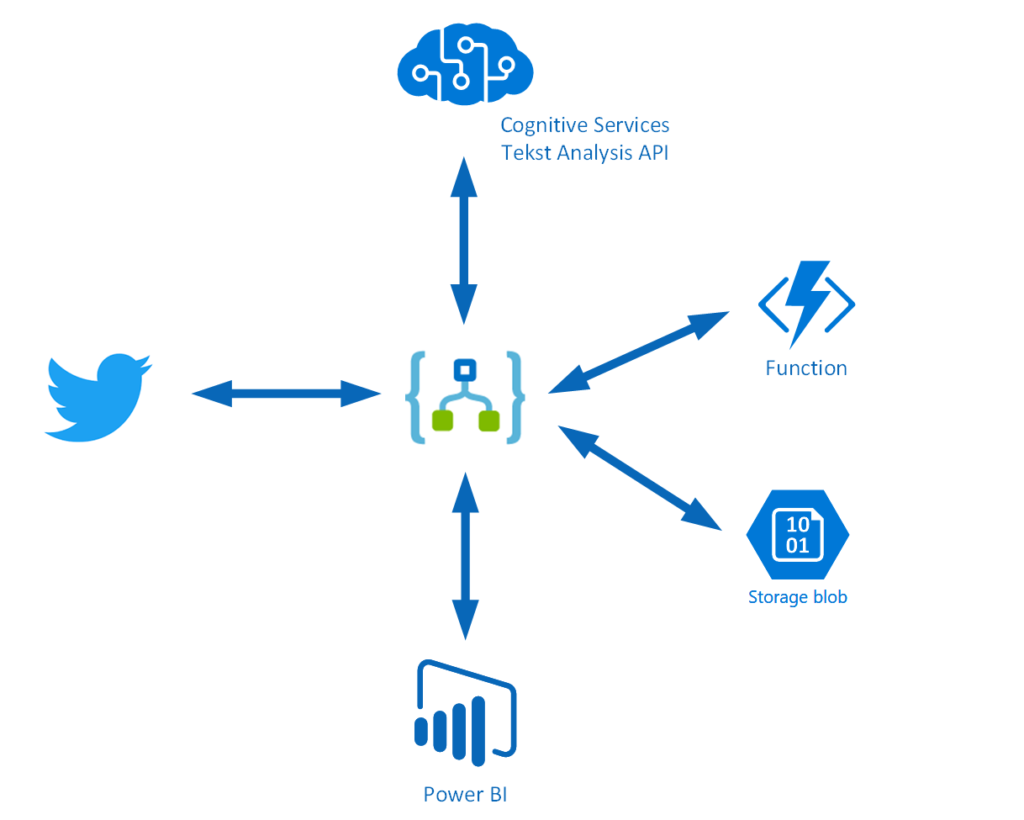

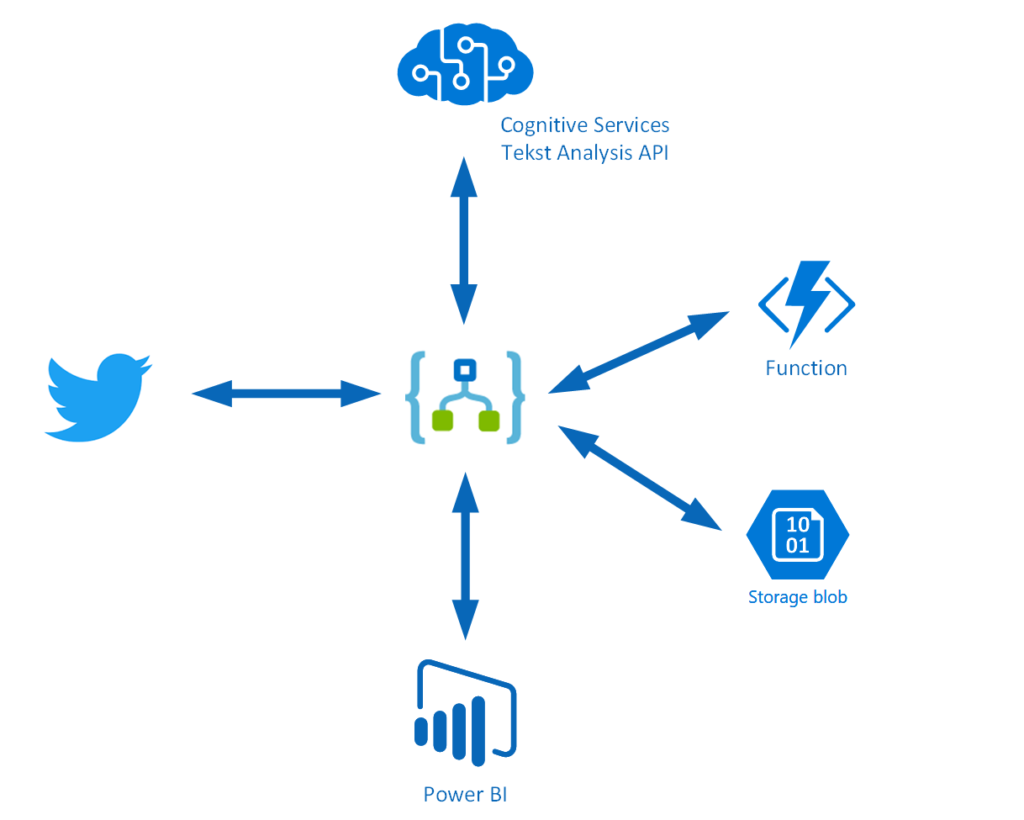

To build a solution leveraging the capabilities Cognitive Services deliver, together with a Logic App, Azure Storage Account, Azure Function and Power BI, you need to set these services up.

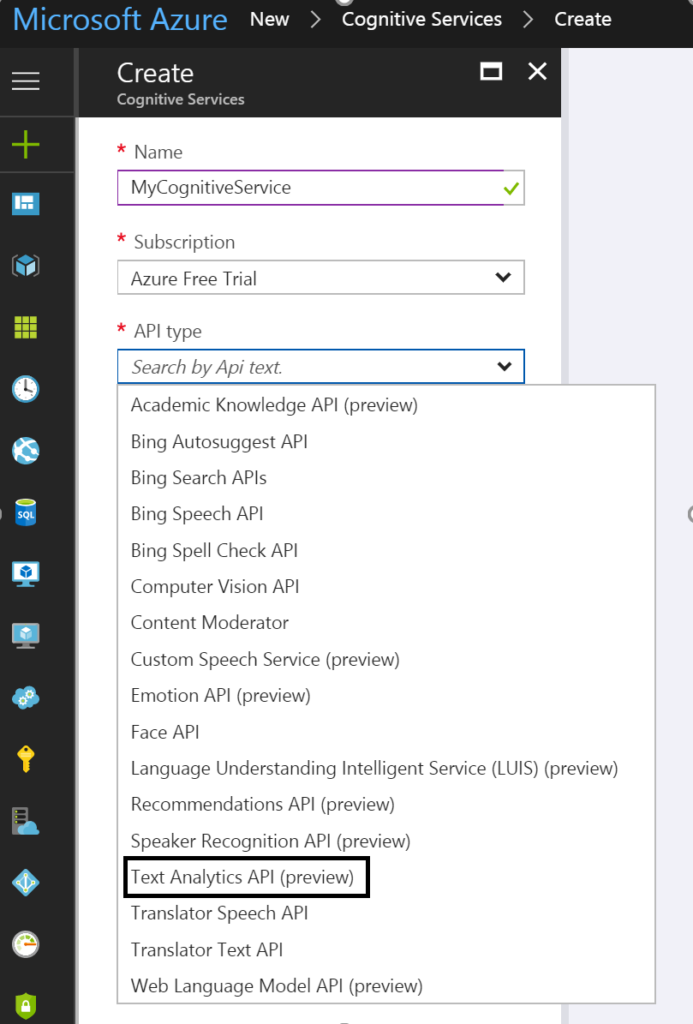

Cognitive Service

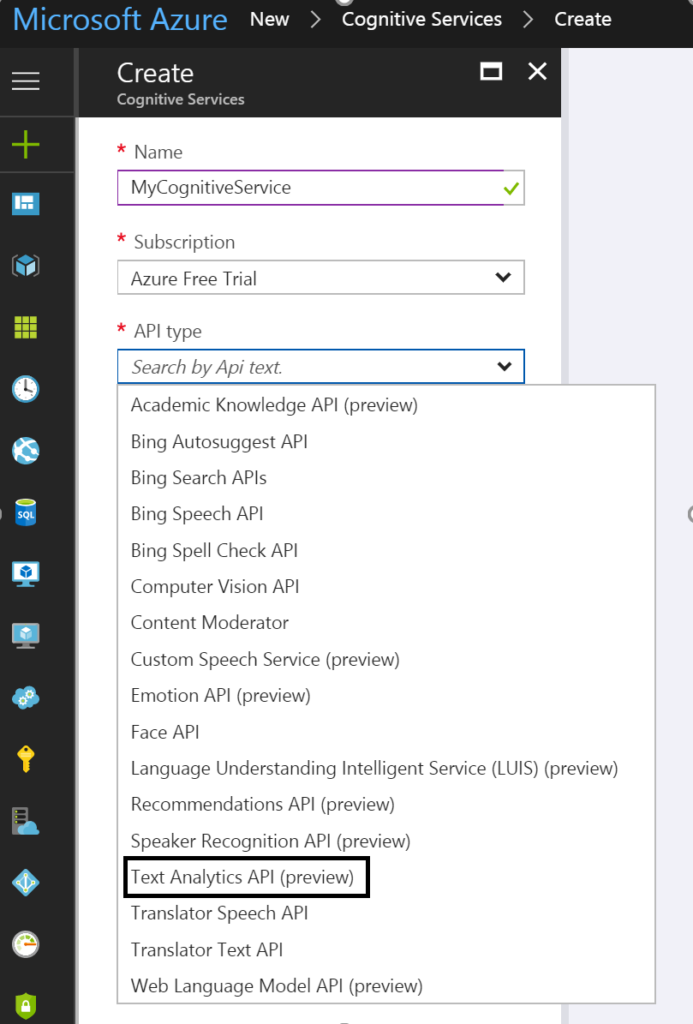

The setup of the first is basically the provisioning of a Cognitive Service instance, i.e. an API. In the Azure Portal, you find the Cognitive Service in the marketplace. Subsequently, you click on the service, specify a name, choose a subscription, and then select the API you would like to use.

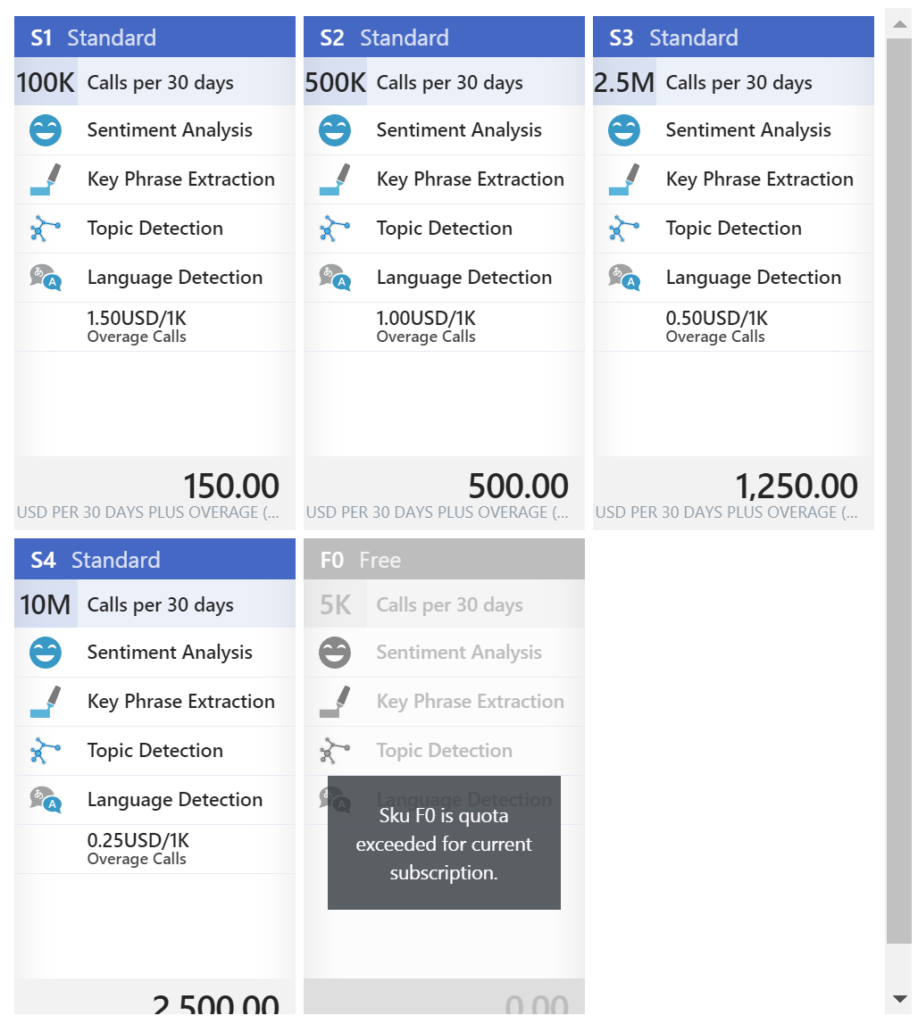

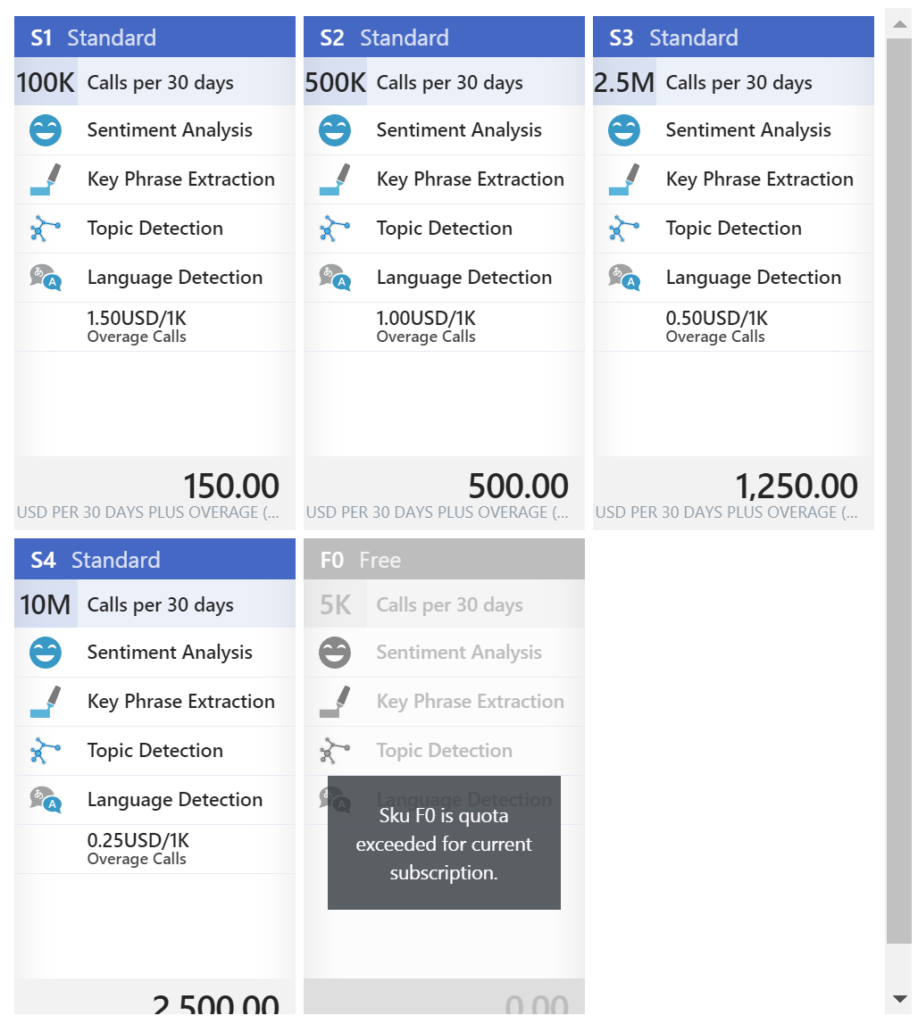

To detect sentiment in a text you need to choose the Text Analytics API, which at the time of writing is still in preview. The Text Analytics API is only available in the West US region, and the pricing of the service varies depending on the tier you require. Below you can see the different pricing options.

As you can see in the picture above the Cognitive Service provides four features:

• Sentiment Analysis

• Key Phrase Extraction

• Topic Detection

• Language Detection

Once you have chosen the required tier you can create this service.

Power BI

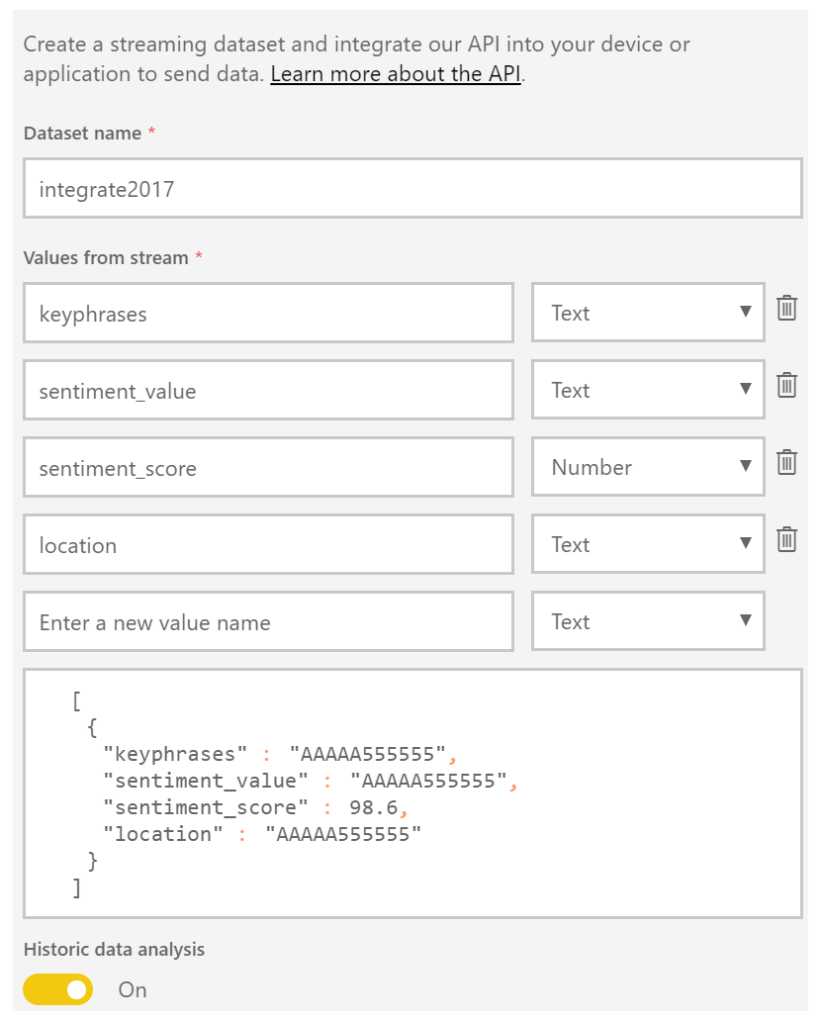

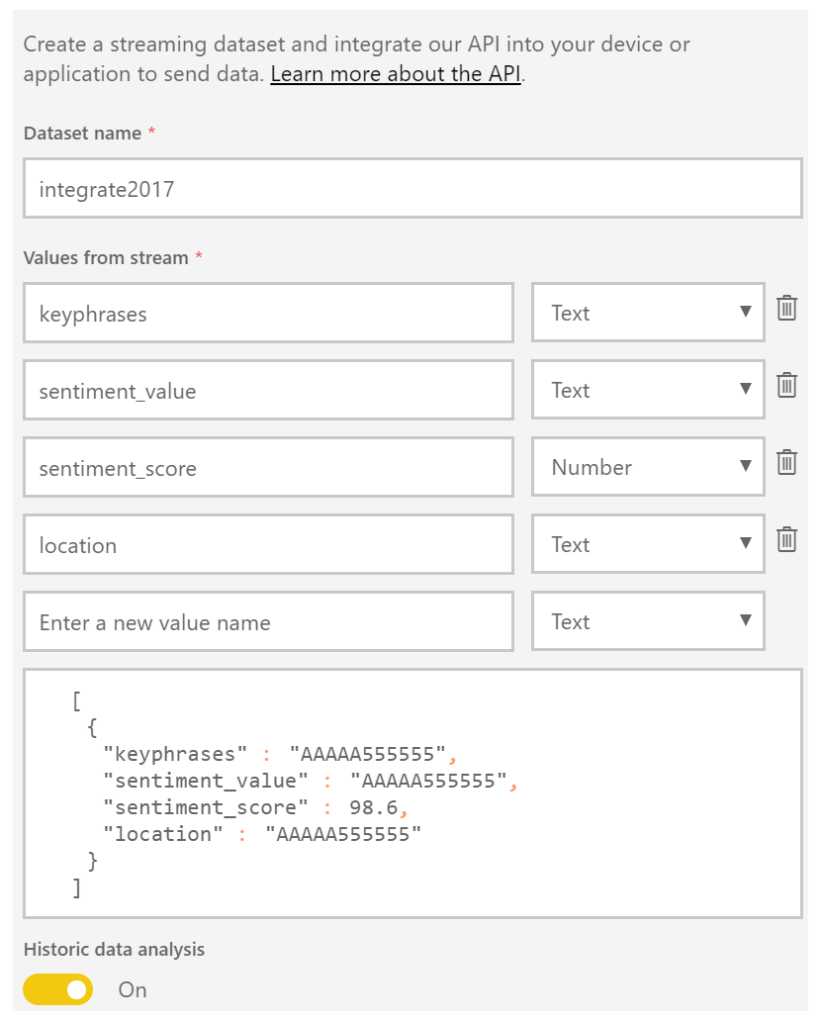

The next service is Power BI, which is a part of the Office365 offering and can be found here: https://powerbi.microsoft.com/. You can sign in and start building datasets, dashboards, and reports. For a solution to visualize sentiment you can create a streaming dataset. Go to powerbi.com and “Streaming datasets”, create a dataset of type API, click Next, name the dataset, and add fields to the streaming dataset as shown below.

The Solution

In the solution I built, I created four text fields and one number field. Historic data analysis was enabled to build up a collection of data to be used for a report.
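A row for such a dataset can be pictured as a small JSON object with four text values and one number. The Python sketch below builds and validates a payload of that shape. The field names are hypothetical (the real ones are in the screenshot), and the assumption is that rows are posted to the dataset as a JSON array of row objects.

```python
import json
from typing import Any, Dict, List

# Hypothetical field names: four text fields and one number field.
TEXT_FIELDS = ("user", "tweet", "keyPhrases", "sentiment")
NUMBER_FIELD = "score"


def build_rows_payload(rows: List[Dict[str, Any]]) -> str:
    """Validate the rows against the assumed dataset shape and return a JSON array."""
    for row in rows:
        for field in TEXT_FIELDS:
            if not isinstance(row.get(field), str):
                raise TypeError(f"field '{field}' must be text")
        value = row.get(NUMBER_FIELD)
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"field '{NUMBER_FIELD}' must be a number")
    return json.dumps(rows)


if __name__ == "__main__":
    payload = build_rows_payload([
        {"user": "someuser", "tweet": "Great session at #integrate2017",
         "keyPhrases": "Great session", "sentiment": "good", "score": 0.9},
    ])
    print(payload)
```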

Now that both the Cognitive Service and Power BI have been set up, the next step is to create a storage account in Azure. This account will archive tweets in a blob storage container named tweets. Provisioning a storage account is an easy and straightforward process. In the marketplace find storage account, select it, and specify name, deployment model, purpose (choose blob storage), replication, access tier (cool), secure transfer, subscription, resource group, and location.

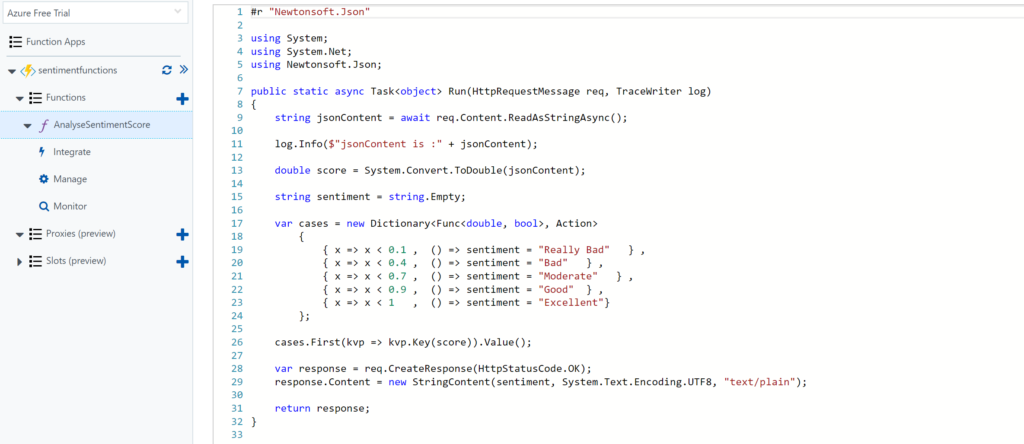

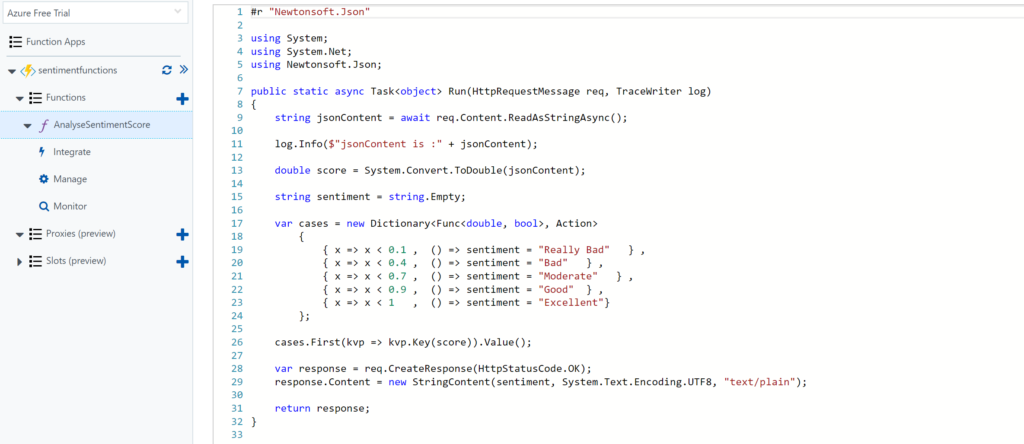

The final service required for the solution is a function. The Function in our solution will be provided with input from the Cognitive Service API response (the score). Azure Functions provide a serverless coding capability using a browser, and the pieces of code you write run in Azure, i.e. within a Function App.

For our solution, we add a GenericWebHook-CSharp. We will rename the function to AnalyseSentimentScore. And in the Develop tab, we see some generic default code, which we will change to the code below.
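The idea behind the function is simply to translate the numeric score into a text label. The Python sketch below shows that idea; it is not the C# code of AnalyseSentimentScore. The labels follow the categories used in the report later in this post, while the thresholds are assumptions I picked for illustration.

```python
def evaluate_sentiment_score(score: float) -> str:
    """Map a sentiment score between 0 and 1 to a text label (assumed thresholds)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.8:
        return "excellent"
    if score >= 0.6:
        return "good"
    if score >= 0.4:
        return "moderate"  # around 0.5 the service cannot tell positive from negative
    return "bad"


if __name__ == "__main__":
    for value in (0.95, 0.65, 0.5, 0.1):
        print(value, "->", evaluate_sentiment_score(value))
```

Keeping this logic in a separate function means the thresholds can be tuned without touching the Logic App definition.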

Architecture

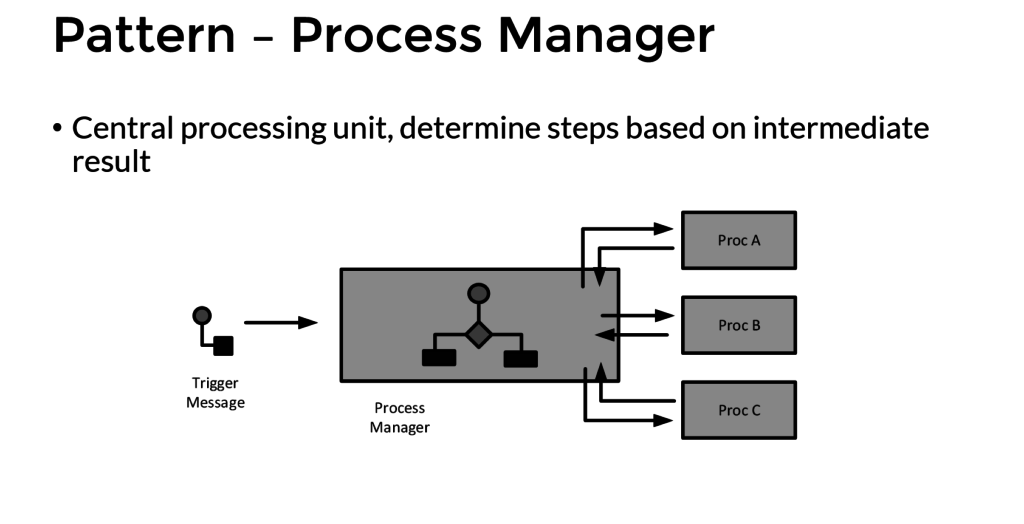

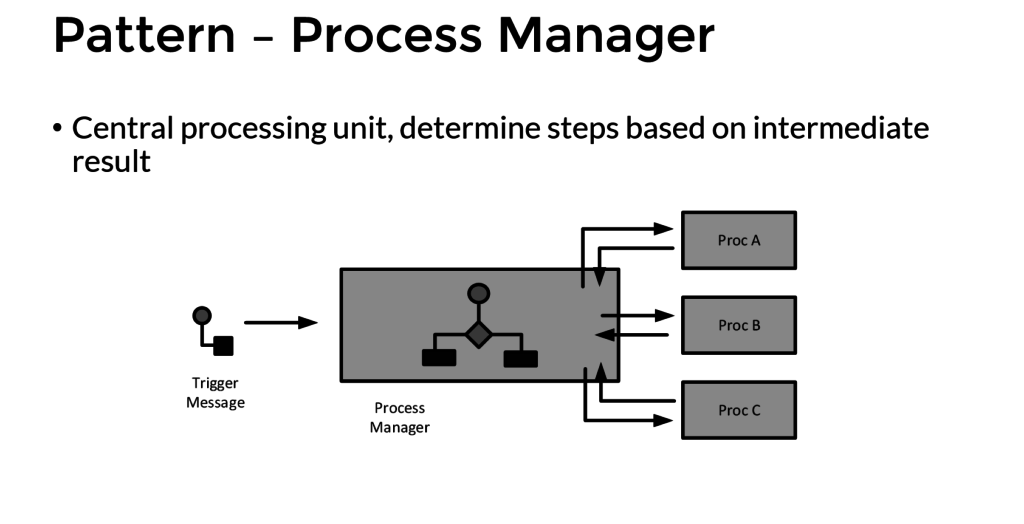

The solution architecture I built looks like the diagram below and resembles a process manager pattern.

This pattern implies that a trigger message is sent to a process manager (Logic App). The process manager is a central processing unit and determines steps based on intermediate results. A tweet is the trigger message that starts a flow in a Logic App. The body is sent to Cognitive Service (Proc A) and the score is sent to a Function (Proc B), which will evaluate the score. The Tweet is stored in blob storage and a few fields are sent to Power BI to fill the dataset. A diagram of a process manager is depicted below.

Implementation

The implementation of the solution differs slightly from the pattern, as after the second intermediate step the tweet data is sent to Azure Blob Storage and a Power BI dataset.

The Logic App is implemented with a Twitter trigger, authorized to use my twitter account, with the search text #integrate2017 and interval (frequency) of 5 minutes i.e. every 5 minutes tweets with #integrate2017 will be picked up. Subsequently, this trigger is followed by several actions.

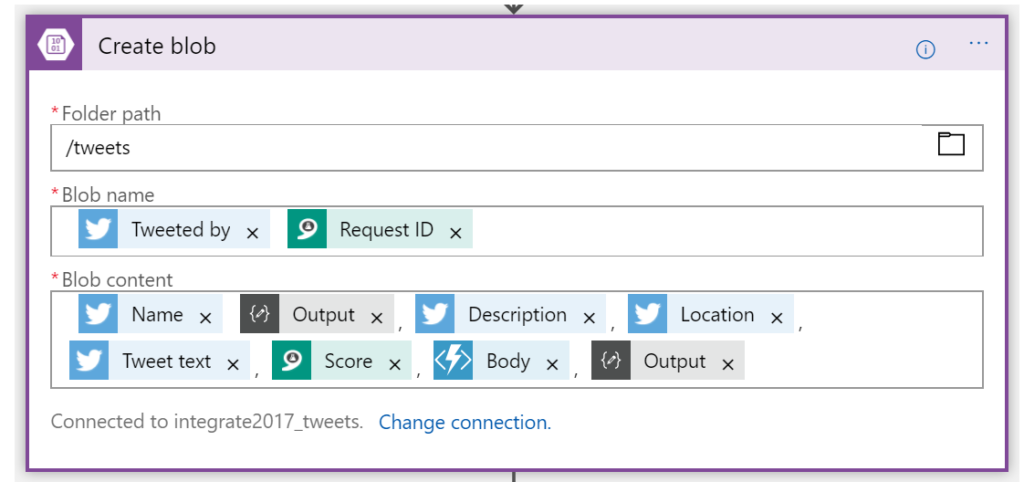

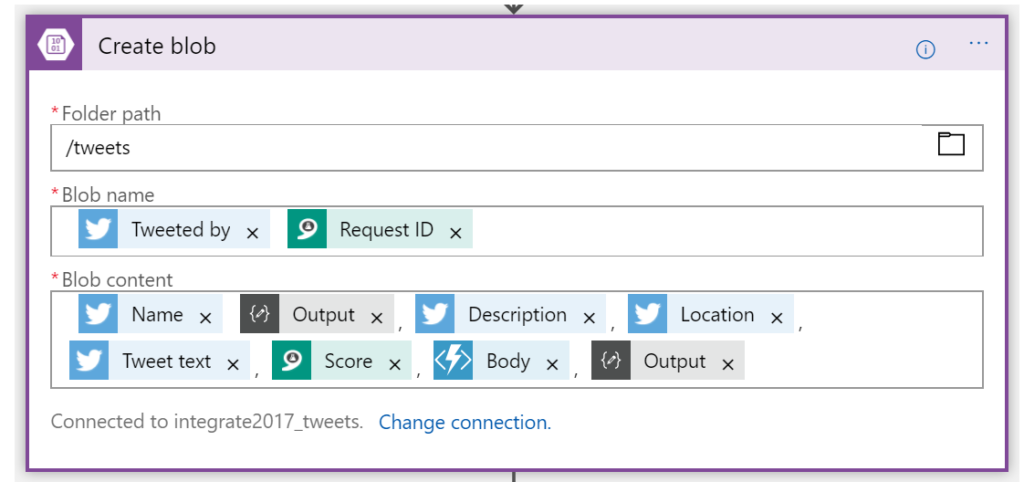

The picture above shows the flow of the Logic App. First comes the Twitter trigger, then a compose action that creates an element containing the username of the tweet, followed by the detect sentiment and detect key phrases actions. Then a second compose action creates a JSON array of the key phrases. After the second compose, the score of the detected sentiment is sent to the function, which returns a string (text) for the evaluated score (see also the function). Several tokenized elements are then sent to blob storage (see picture below).
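The order of the actions can be summarized in a few lines of Python. This is a sketch of the flow only, with every service call passed in as a stand-in function; the names and record fields are hypothetical, and the real work is done by the Logic App connectors.

```python
from typing import Any, Callable, Dict, List


def process_tweet(
    tweet: Dict[str, str],
    detect_sentiment: Callable[[str], float],
    detect_key_phrases: Callable[[str], List[str]],
    evaluate_score: Callable[[float], str],
    archive: Callable[[Dict[str, Any]], None],
    push_row: Callable[[Dict[str, Any]], None],
) -> Dict[str, Any]:
    """Run one tweet through the steps in the same order as the Logic App."""
    user = tweet["user"]                             # first compose: username element
    score = detect_sentiment(tweet["text"])          # Cognitive Service: sentiment
    phrases = list(detect_key_phrases(tweet["text"]))  # key phrases, second compose
    label = evaluate_score(score)                    # Function: score to text
    record = {"user": user, "text": tweet["text"],
              "keyPhrases": phrases, "score": score, "sentiment": label}
    archive(record)                                  # blob storage
    push_row({"user": user, "sentiment": label, "score": score})  # Power BI row
    return record


# Usage with in-memory stand-ins instead of real services.
archived: List[Dict[str, Any]] = []
rows: List[Dict[str, Any]] = []
result = process_tweet(
    {"user": "someuser", "text": "Great session at #integrate2017"},
    detect_sentiment=lambda text: 0.9,
    detect_key_phrases=lambda text: ["Great session"],
    evaluate_score=lambda score: "good" if score >= 0.5 else "bad",
    archive=archived.append,
    push_row=rows.append,
)
```

Passing the steps in as functions keeps the sketch testable without any Azure resources.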

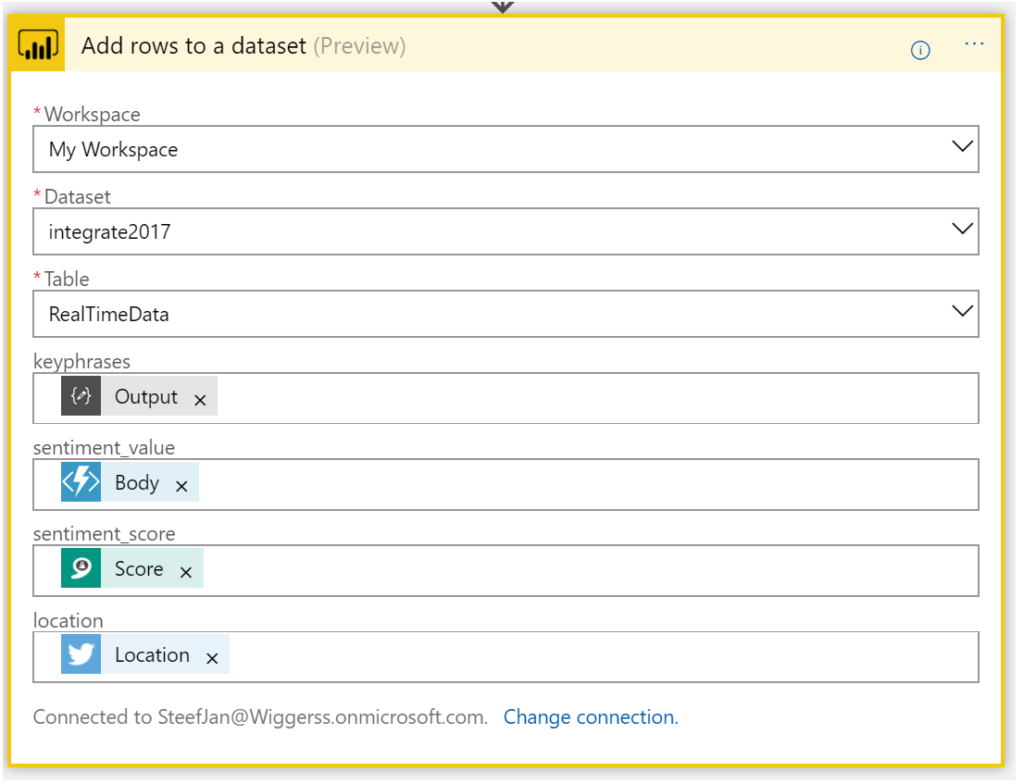

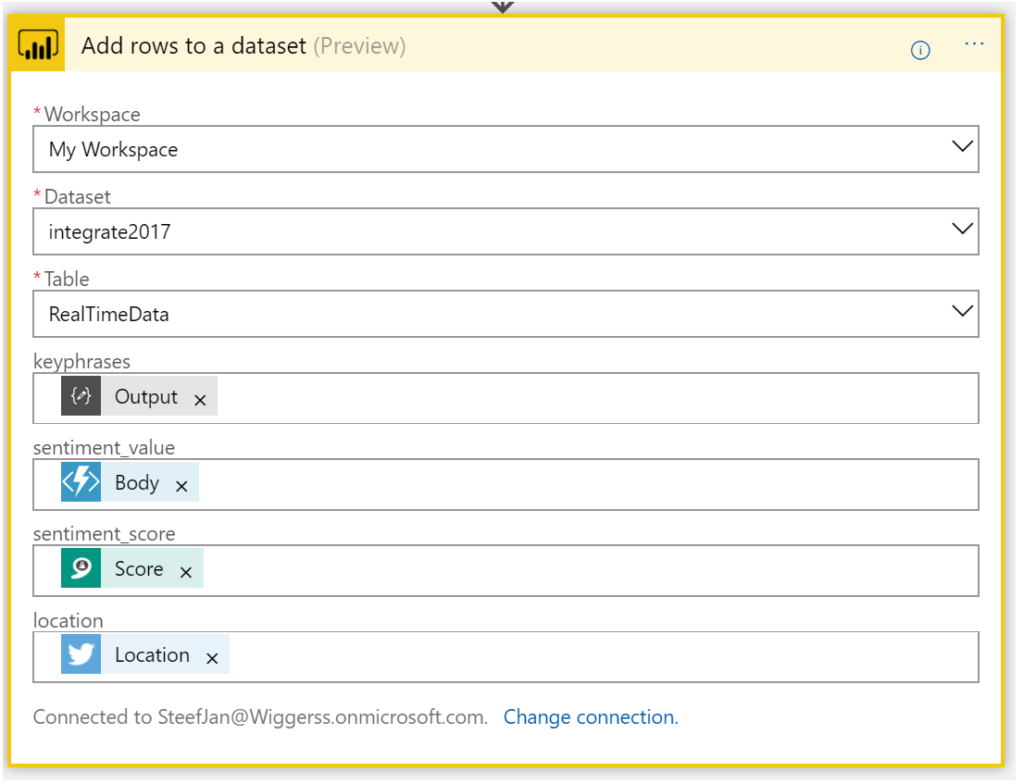

And the final step of this solution (Logic App definition) is sending some of the tokenized elements to a dataset row in Power BI dataset.

Now we have walked through the complete Logic App definition and the key actions of the solution.

Integrate 2017 Report

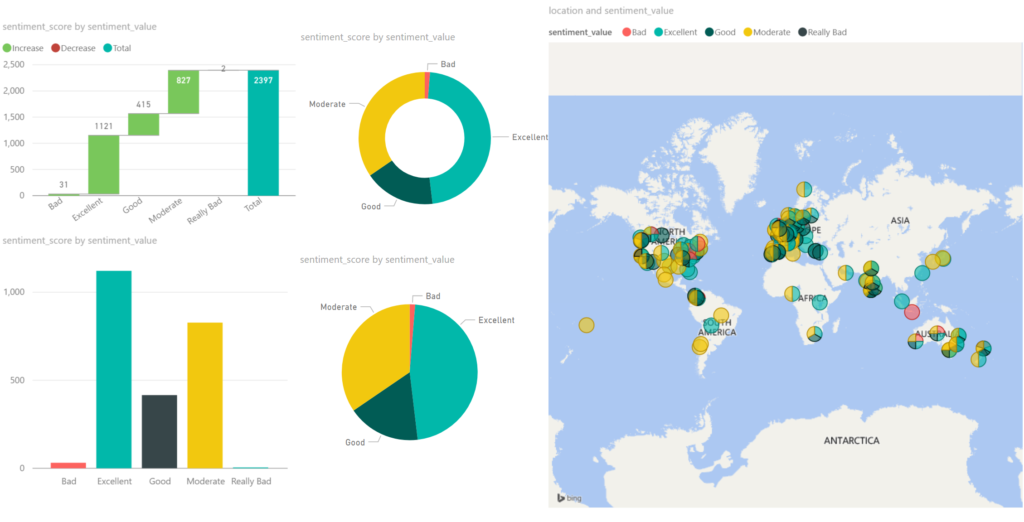

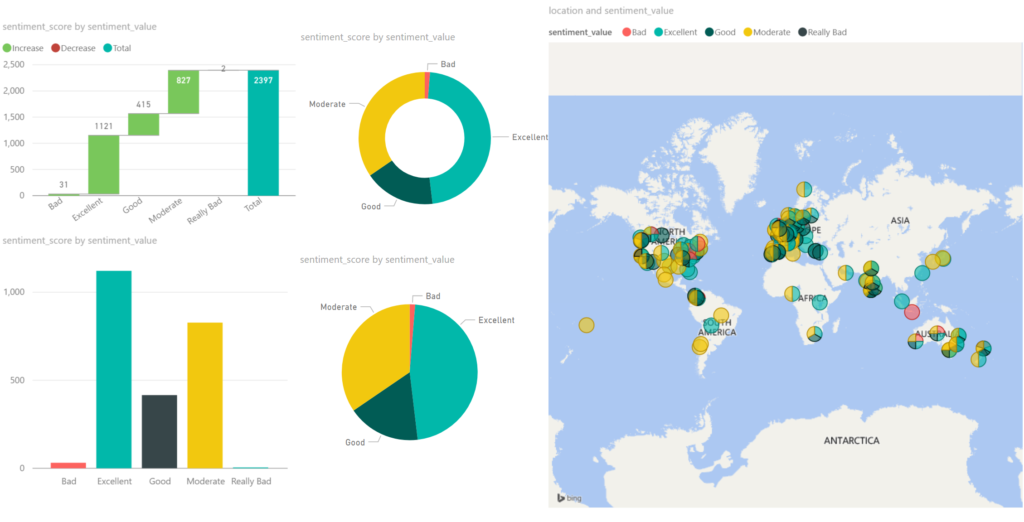

For Integrate 2017 I ran the Logic App from the 17th of June until the 1st of July; the event took place from the 26th to the 28th of June in London. Every 5 minutes the Logic App collected tweets from Twitter with the hashtag integrate2017. Over this period of 15 days, 3500 tweets were aggregated around this event. It started slowly with around 50 tweets, until the event started on the 26th with a burst of tweets. Below you can see a report created in Power BI with some visualizations of the sentiment measured in the tweets.

For around 2/3 of all the tweets, the sentiment was excellent/good, which can be viewed as positive. 1/3 of the tweets were evaluated as moderate, meaning the Cognitive Service Text Analysis capability was unable to determine whether they were negative or positive. And finally, a very small percentage was negative (bad). Hence you can conclude that the event was a great event given the sentiment score.

The benefit of building a solution like the one described above is that, with a relatively simple Logic App, sentiment can be analyzed by leveraging several abilities provided by the Cognitive Service. When a business wants to measure sentiment through a social media channel, it can use Logic Apps to get quick insights at low cost. There are no servers necessary, and a pro-integration professional can build this type of solution within a few hours, depending on the complexity. Hence it provides a quick time to market.

The costs

The interesting part of this solution is the cost. The breakdown of costs for this solution is:

– Logic App (Consumption)

– Function (Consumption)

– Cognitive Service (Tier)

– Storage Account (Volume)

– Power BI (Enterprise Plan)

The Logic App and Function are consumption based and are billed per execution of an action or function. In general, it can be hard to predict the workload these services need to process, so you need to be aware of this. A good reference with regard to costs with Logic Apps is a post by Rene Brauwers, Tips & Tricks: Cost savings using Logic Apps.

For the Logic App in this solution, 3500 tweets were processed, and the Logic App consists of 8 actions (including the trigger). Hence 28K action calls cost, based on the pricing (first 250K actions = €0.000675 / action), approximately 19 euro. The executions of the Function cost less than a euro.

Next, the costs for the Cognitive Service depend on the tier. The free tier could be an option; however, if the workload is too high you run into rate limiting issues. The S1 Standard tier can be sufficient and costs 150 euro a month. Yet you can turn it off after your campaign of measuring sentiment, which could be a few days. In this solution, the costs are 75 euro. Storage of less than 4 MB of tweets is negligible. This leaves the costs for Power BI. For the solution I built, I used the Pro version, which is around 10 euro per month. Thus, in total, a sentiment analysis solution costs around 100 euro.
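The arithmetic above can be checked with a small Python sketch. It only uses the figures quoted in this post; the names `logic_app_cost`, `total_cost` and `costs` are illustrative and not part of the solution. Two values are assumptions: the Function cost is only known to be less than a euro, so 1 euro is used as an upper bound, and storage is treated as zero.

```python
# Rough cost check using the figures quoted in this post.
TWEETS = 3500                     # Logic App runs (one per tweet)
ACTIONS_PER_RUN = 8               # including the trigger
PRICE_PER_ACTION_EUR = 0.000675   # price for the first 250K actions


def logic_app_cost(runs, actions_per_run, price_per_action):
    """Cost of a consumption-based Logic App: runs x actions x price."""
    return runs * actions_per_run * price_per_action


def total_cost(costs):
    """Sum the individual cost lines."""
    return sum(costs.values())


costs = {
    "logic_app": logic_app_cost(TWEETS, ACTIONS_PER_RUN, PRICE_PER_ACTION_EUR),
    "function": 1.0,            # assumption: upper bound for "less than a euro"
    "cognitive_service": 75.0,  # S1 tier, as stated in the post
    "storage": 0.0,             # assumption: under 4 MB is treated as free
    "power_bi": 10.0,           # Pro version, one month
}

if __name__ == "__main__":
    for name, amount in costs.items():
        print(f"{name}: {amount:.2f} EUR")
    print(f"total: {total_cost(costs):.2f} EUR")
```

With these inputs the Logic App line comes to roughly 19 euro and the total to about 105 euro, which matches the "around 100 euro" estimate.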

Conclusion

Depending on what the requirements are and what the perceived value is, Logic Apps combined with other Azure Services and Office365 (Power BI) can be a good fit for purpose because of low costs, agility and time to market. Logic Apps are becoming a leader in iPaaS. In the short term, they will be able to cross the border from visionary to leader in the Gartner Magic Quadrant. The Product Group is cranking out enhancements to the service and new connectors every two weeks, and they have kept this pace since the General Availability of the service a year ago. The competition is strong; however, I am confident Logic Apps will be amongst the leaders.

Author: Steef-Jan Wiggers

Steef-Jan Wiggers has over 15 years’ experience as a technical lead developer, application architect and consultant, specializing in custom applications, enterprise application integration (BizTalk), Web services and Windows Azure. Steef-Jan is very active in the BizTalk community as a blogger, Wiki author/editor, forum moderator, writer and public speaker in the Netherlands and Europe. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 5 years.

by Steef-Jan Wiggers | Jul 3, 2017 | BizTalk Community Blogs via Syndication

It has been an amazing month, June 2017, with Integrate2017 and the momentum towards it. Team BizTalk360 and Saravana did an excellent job. Bhavana Nambiar wrote a great post about it.

Month June

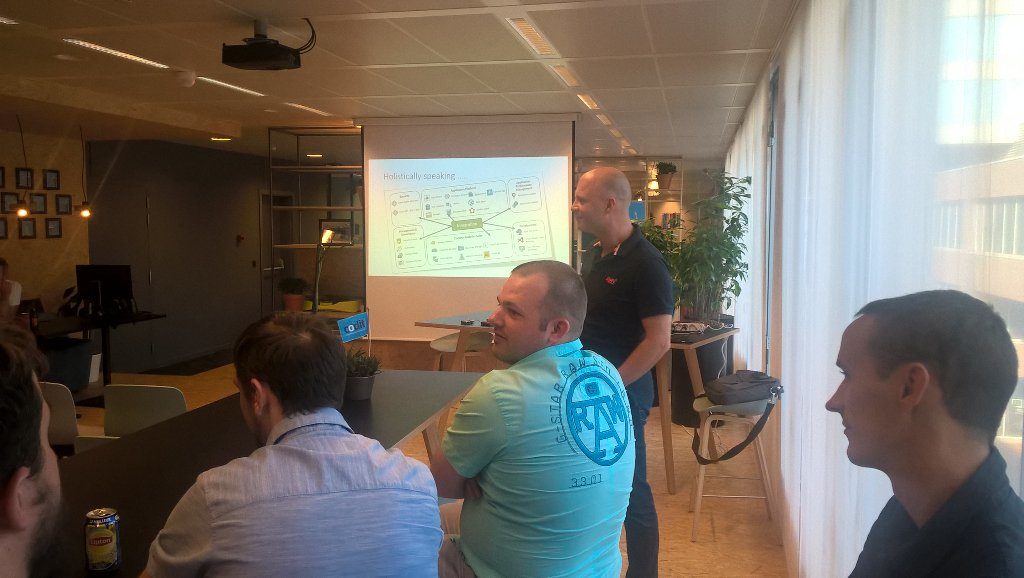

This month I did two talks: one for the BizTalk User Group in Belgium and one at Integrate 2017. For the BTUG.be session in Ghent at Codit I did a Logic App session.

During the month I prepared my session for Integrate 2017 and delivered it on the 2nd day of the event. It was focused on the end user/consumer of Logic Apps, i.e. the business. I interviewed 30 people around the world, who shared their views with me.

The event was, in my view, a huge success. The Pro-Integration team, the Service Bus team and the MVPs were present to give an awesome show!

Around Integrate 2017 and BTUG.be I interviewed several people for my “Talking with Integration Pros” YouTube videos:

Music

My favorite albums in June were:

- Elder – Reflections Of A Floating World

- SikTh – The Future In Whose Eyes?

- Anathema – The Optimist

- Iced Earth – Incorruptible

- Vintersorg – Till Fjälls Del II

Dublin

After Integrate I went with Kent and Melissa to Dublin. We had to unwind a bit from all the excitement in London.

That’s all folks for this month. Next month I will be on holiday to Portugal (visiting Sandro) and France!

Cheers,

Steef-Jan

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field, combining them with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 6 years.

by Steef-Jan Wiggers | May 27, 2017 | BizTalk Community Blogs via Syndication

The month of May went by quicker than I realized. Almost half of 2017 is over and I must say I have enjoyed it to the fullest: speaking, travelling, working on an interesting project with the latest Azure Services, and recording another Middleware Friday show. It was the best, it was amazing!

Month May

In May I started off by working on a recording for Middleware Friday. I recorded a demo to show how one can distinguish Flow from Logic Apps. You can view the recording named Task Management Face off with Logic Apps and Flow.

The next thing I did was prepare for TUGAIT, where I had two sessions. The first was on Friday in the Azure track, where I talked about Azure Functions and WebJobs.

The second was on Saturday in the integration track, about the number of options for integration with Azure.

I enjoyed both and was able to crack a few jokes, especially on Saturday, where I kept using Trump and his hair as a running joke.

TUGAIT 2017 was an amazing event and I enjoyed the event, hanging out with Sandro, Nino, Eldert and Tomasso and the food!

During the TUGA event I did three new interviews for my YouTube series “Talking with Integration Pros”. And this time I interviewed:

I will continue the series next month.

Books

In May I was able to read a few books again. I started with a book about genes. Before I started my career in IT I was a Biotech researcher and worked in the field of DNA, BioTechnology and Immunology. The book is called The Gene by Siddhartha Mukherjee.

I loved the story line and went through the 500 pages pretty quickly (still two weeks of evenings). The other book I read was Sapiens by Yuval Noah Harari. This book is a good follow-up to the previous one!

The final book I read this month was about Graph databases. In my current project we have started with a proof of concept/architecture on Azure Cosmos DB, Graph and Azure Search.

The book helped me understand Graph databases better.

Music

My favorite albums that were released in May were:

- God Dethroned – The World Ablaze

- Voyager – Ghost Mile

- Sólstafir – Berdreyminn

- Avatarium – Hurricanes And Halos

- The Night Flight Orchestra – Amber Galactic

There you have it, Stef’s fourth Monthly Update, and I can look back again with great joy. Not much running this month as I was recovering a bit from the marathon in April. I am looking forward to June as I will be speaking at the BTUG June event in Belgium and at Integrate 2017 in London.

Cheers,

Steef-Jan
