This post was originally published here

…a belated Happy New Year! I know it has been quiet on this blog for quite some time, but I’ll clear this up in the near future once things are 100% certain.

Recently a colleague of mine, André Ruiter (@AndreRuiter67), asked for my view on a particular challenge involving flat files. In short, the challenge was:

How would one, based on a flat file, output x files, where each file contains the data grouped on a specific value in the original flat file?

My response, like most of my responses since I enjoy using OOB (out-of-the-box) functionality (thus no code), was: use a flat file disassembler in a custom receive pipeline and add a map on the receive port in which the inbound file is transformed to an internal format. Within this internal document, promote the field(s) you want to group on (correlate). Then use an orchestration which subscribes to the internal message(s), implements the sequential convoy pattern, aggregates the results to an output format and lastly stores the end result to disk.

Reading the above, you might think: do what? So for the reader’s convenience I will walk through an example and explain the required steps. In case it already makes sense, well, you now know how to implement it, so go ahead and move on… nothing more to see here.

Before we start: I assume you have at least a basic understanding of BizTalk, so I will not explain everything, although I will give step-by-step instructions for the flat file schema generation as well as the sequential convoy. Having said this, you ought to be able to follow and reproduce all the steps by yourself, and in case that doesn’t work out for you, I’ve added the source which you can download here.

The scenario

In our example we will receive a comma-delimited file containing data received from smart energy readers. Each line contains data such as:

-customer id

-date of the energy reading

-energy consumption value since last reading

-name of the energy company (to which the reader belongs and sends out the bills)

Example file contents

Based on this file we need to split the source file into separate files grouped by energy company.
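
Stripped of everything BizTalk, the requirement boils down to something like the Python sketch below. This is only meant to make the goal concrete, it is not part of the BizTalk solution. The column names and sample rows are made up for illustration (the example file itself is not reproduced in this text).

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample data; the column names and values are assumptions.
SAMPLE = """CustomerId,ReadingDate,Consumption,CompanyName
1001,2015-01-02,12.5,Company X
1002,2015-01-02,7.25,Company Y
1003,2015-01-03,3.0,Company X
"""


def group_by_company(text, key="CompanyName"):
    """Return {company: [row, ...]}, keeping the order of the input lines."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(text)):
        groups[row[key]].append(row)
    return dict(groups)


groups = group_by_company(SAMPLE)
for company, rows in groups.items():
    # Each group would end up in its own output file.
    print(company, len(rows))
```

The rest of this post achieves the same grouping with out-of-the-box BizTalk parts: debatching, a promoted property and a sequential convoy.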

Sounds easy doesn’t it? Well let’s get to it!

Create the schemas to use [Flat file header]

First off, we will start with creating an XML representation of the source flat file header. For this we will use the BizTalk Flat File Wizard.

Step 1

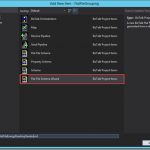

In the Solution Explorer of Visual Studio, select your BizTalk Project and add a new item [ Right Click -> Add -> New Item -> Flat File Schema Wizard ]. Enter a descriptive name for the flat file header schema you are about to create and click on the [ Add button ].

Step 2

The BizTalk Flat File Wizard will appear. Now press the [ Next button ] until you see the [ Flat File Information Screen ]. On this screen, [ browse ] to the csv file in question. Enter a name for the record in the [ Record Name ] input field. Leave the other options intact and press the [ Next button ].

Step 3

You should now be on the [ Select Document Screen ]. On this screen, select the header [ The first line ] and press the [ Next button ].

Step 4

At this point you should be on the [ Select Record Format Screen ]. On this screen, ensure you select that the record is delimited by means of a [ Delimiter Symbol ]. Once you’ve selected this item press the [ Next button ].

Step 5

The next screen which pops up allows you to select the [ Child Delimiter ]. Ensure that you select the [ {CR/LF} ] option. Now press the [ Next Button ].

Step 6

Now you will be presented with the [ Child Elements ] screen. On this screen ensure that you change the [ Element Type ] from [ Field Element ] to [ Record ]. Once done press the [ Next Button ].

Step 7

So far all we have done is define our record; the next few steps will define our header elements (our columns if you prefer). The screen which you will be presented with at this stage is the start of this process. In order to start press the [ Next Button ].

Step 8

The screen [ Select Document Data ] allows you to select the actual data (header elements). If you followed all the steps so far it should suffice to press the [ Next Button ]. In case you’re not sure, ensure that you have only selected the actual data, excluding the [ New line characters ].

Step 9

Once again you will be presented with the [ Select Record Format Screen ]. On this screen, ensure you select that the record is delimited by means of a [ Delimiter Symbol ]. Once you’ve selected this item press the [ Next button ].

Step 10

The next screen which pops up allows you to select the [ Child Delimiter ]. Ensure that you select the [ , ] (Comma) option. Now press the [ Next Button ].

Step 11

You will now be presented with the [ Child Elements ] screen, which actually allows us to define the columns of the header. In our example we will make a few modifications to the [ Element Name ]; we will not change the [ Data Type ], as we are currently only defining the header (column) names. For brevity, see the screenshot below which depicts all changes I’ve made. Once you have made the changes press the [ Next Button ].

Before changes

After changes

Step 12

Congratulations, at this point you have created your header structure. The end result should look similar to the image depicted below. (Note: I’ve selected the Flat File tab to display the non-xsd view.)


Create the schemas to use [Flat file non-header data]

Now that we have defined the XML representation of our flat file header, it is time to define an XML representation of the non-header data. For this we will once again use the BizTalk Flat File Wizard. The steps 1 to 12 we went through earlier will have to be repeated with a few [ Changes in Configuration ]. As such I will only list those steps which are different. Yeah, you are allowed to call me lazy.

Step 2

The BizTalk Flat File Wizard will appear. Now press the [ Next button ] until you see the [ Flat File Information Screen ]. On this screen, [ browse ] to the csv file in question. Enter a name for the record in the [ Record Name ] input field. Leave the other options intact and press the [ Next button ]. Note: I’ve named the [ Record Name ] EnergyReadings.

Step 3

You should now be on the [ Select Document Screen ]. On this screen, select [ The second line ], which contains the (repeating) data, and press the [ Next button ].

Step 6

Now you will be presented with the [ Child Elements ] screen. On this screen ensure that you change the [ Element Type ] from [ Field Element ] to [ Repeating Record ]. Once done press the [ Next Button ].

Step 11

You will now be presented with the [ Child Elements ] screen, which actually allows us to define the column values. In our example we will make a few modifications to the [ Element Name ] and the [ Data Type ]. For brevity, see the screenshot below which depicts all changes I’ve made. Once you have made the changes press the [ Next Button ].

After changes

Congratulations, at this point you have created your data structure; however, we will need to make some manual changes to the generated schema. These changes instruct BizTalk to [ Auto Debatch ] the inbound records into single records (in case there are multiple data lines).

Step 12

In order to ensure that [ Auto Debatching ] will happen we will need to do the following. [ Select the Schema Element ] of the newly generated schema and then in the [ Properties ] window ensure to change the following setting: [ Allow Message Breakup at InFix Root ] from [ False ] to [ True ]

Step 13

The last step we need to perform to enable [ Auto Debatching ] consists of changing the [ Max Occurs ] [ Property ] of the [ Repeating ‘Element’ ] from [ unbounded ] to [ 1 ].
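
To picture what these two settings achieve together with the separate header schema, here is a rough Python analogy. It is a sketch of the observable behaviour only, not of how the flat file disassembler is implemented, and the field names are hypothetical stand-ins for the elements of the document schema.

```python
# Hypothetical field names standing in for the document schema's elements.
DOCUMENT_FIELDS = ["CustomerId", "ReadingDate", "Consumption", "CompanyName"]


def debatch(flat_file_text, delimiter=","):
    """Split a flat file into one message (a dict) per data line.

    The first line plays the role of the header schema: it is parsed off
    and not emitted as a message of its own.
    """
    lines = [line for line in flat_file_text.splitlines() if line.strip()]
    _header, body = lines[0], lines[1:]
    return [dict(zip(DOCUMENT_FIELDS, line.split(delimiter))) for line in body]


messages = debatch(
    "CustomerId,ReadingDate,Consumption,CompanyName\n"
    "1001,2015-01-02,12.5,Company X\n"
    "1002,2015-01-02,7.25,Company Y\n"
)
# Two data lines in, two individual messages out.
print(len(messages))
```

Each of these single-record messages is what the receive port map later turns into an internal Reading message.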

Create the schemas to use [Other]

Now that we’ve created the schemas which represent the flat file definition, we can move on to creating the other schemas we need. I will not go over the details of how to create these ‘normal’ schemas; instead I’ll list the schemas required.

Property schema

We start off with the definition of a simple property schema. This schema will only hold one field, which will be named EnergyCompany.

If you need more information with regards to property schemas please click on this link.

Internal schema: Reading

This schema is our internal representation of an energy reading, and looks as depicted below. Please note that the element named [ CompanyName ] has been promoted, so that we can use it later on when we implement our sequential convoy.

Internal schema: EnergyReading

This schema is the actual representation of the XML we will output and contains multiple readings on a per energy company basis. Note that this schema is a composite schema and as such it [ Imports ] the schema [ Reading ] (see 1). Also note that the [ Reading ] element has its [ Max Occurs ] value set to unbounded.

Creation of the Receive Pipeline

Now that all schemas have been created we can go ahead with the creation of a receive pipeline. Once again I will not dive into the nitty gritty details, but if you require more information please click on this link

So create a [ Receive Pipeline ] and give it a meaningful name, drag a [ Flat File Disassembler Component ] to the [ Design Surface ] and drop it in the [ Disassemble stage (1) ]. Now [ Click ] on the just added component and go to the [ Properties Window ]. In this window, select the earlier created [ Flat File Header Schema ] for the [ Header Schema Property (2) ] and select the [ Flat File Schema ] for the [ Document Schema Property (2) ].

Transformations

At this point we can start with the mappings we need. In total we will need 3 maps, which are listed below.

Please note: if you want to learn more about mappings and advanced patterns (in my example everything is kept quite basic), I can only recommend that you download and read the ebook titled “BizTalk Mapping Patterns and Best Practices”, which a friend of mine, Sandro Pereira (@sandro_asp), a Microsoft Integration MVP, put together and made available for free. Go here to download it.

EnergyReadingFF_TO_Reading

This mapping will be used on the receive port and will map the generated inbound flat file xml structure to our single reading file.

Reading_TO_EnergyReadings

This mapping will be used in our orchestration, which implements a sequential convoy, and maps the single reading message to the energy readings message.

Reading_Readings_TO_AggregatedEnergyReadings

This mapping will be used in the same orchestration as well, and merges a follow-up reading with the energy readings collected so far.

Sequential Convoy

Before we can deploy our BizTalk Application there is one more thing we need to implement, and that’s a mechanism to output the grouped files. The way to do this is to use an orchestration which implements the [ Sequential Convoy ] pattern. Below is a screenshot of the end result; I’ll go into the basic details using steps which refer to this screenshot. In case you want to know more about the [ Sequential Convoy ] pattern please click on this link.

Step 1: rcvReading

This receive shape subscribes to messages with the message type http://FlatFileGrouping.Reading#Reading. These are the single reading messages mentioned earlier. Note that we initialize a [ Correlation Set ] here; this set ensures that we create a single process (singleton) per company, which subscribes not just to messages of the aforementioned message type but specifically to messages which contain the same value for the element CompanyName within the reading message.

Click on this link for more information on the [ Receive shape ]

Click on this link for more information on [ Correlation Sets ]

Step 2: Init Timeout boolean

This expression shape is used to initialize a boolean which is used later on in the process to indicate whether the convoying process should be ended. The initial value here is set to [ False ].

Click on this link for more information on the [ Expression Shape ]

Step 3: Construct Energy Readings

This construction block is used to host the [ Reading_TO_EnergyReadings ] transformation, and as such initializes the actual message we will write to disk, containing the grouped energy readings on a per company basis.

Click on this link for more information on the [ Construct Message Shape ]

Step 4: Loop until timeout

This loop ensures that the contained logic is repeated as long as the previously initialized boolean is False. In our specific case the boolean is set to True once we have not received any more reading messages for 30 seconds.

Click on this link for more information on the [ Looping Shape ]

Step 5: Listen

This shape will enable us to receive other messages for a given time window.

Click on this link for more information on the [ Listen Shape ]

Step 6: rcvSubsequentReadings

This receive shape subscribes to messages with the message type http://FlatFileGrouping.Reading#Reading. These are the single reading messages mentioned earlier. Note that we follow a [ Correlation Set ] here; this ensures that we receive any follow-up messages without starting a new service instance of this orchestration. I.e., if an instance of this orchestration has been initiated for Company Y and another message which has the value Company Y for the element CompanyName is received, it will enter the process at this point (and be processed further).

Click on this link for more information on [ Correlation Sets ]

Step 7: Aggregate following reading to initial reading

This construction block is used to host the composite transformation [ Reading_Readings_TO_AggregatedEnergyReadings ]. This map takes both the follow-up reading message and the Energy Readings message constructed in step 3, and combines them into a temporary message called AggregatedEnergyReadings.

Click on this link for more information on the [ Construct Message Shape ]

Click on this link for more information on [ Multi Part Mappings ]

Step 8: Copy to EnergyReadings

This message assignment shape is used to copy the output of the previous mapping (step 7) over to the original Energy Readings message.

Click on this link for more information on the [ Message Assignment Shape ]
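
The reason for the detour via a temporary message in steps 7 and 8 is that a message in an orchestration cannot be changed once it has been constructed, so 'appending' a reading really means building a new message and assigning it back. A minimal Python sketch of that pattern follows; the dictionaries are hypothetical stand-ins for the Reading and EnergyReadings messages, and the function names merely echo the maps.

```python
def reading_to_energy_readings(reading):
    """Step 3: start the outbound message from the first reading."""
    return {"CompanyName": reading["CompanyName"], "Readings": [reading]}


def aggregate(energy_readings, reading):
    """Step 7: build a *new* aggregated message from both inputs."""
    return {
        "CompanyName": energy_readings["CompanyName"],
        "Readings": energy_readings["Readings"] + [reading],
    }


# Hypothetical readings for one company.
first = {"CompanyName": "Company Y", "Consumption": "7.25"}
second = {"CompanyName": "Company Y", "Consumption": "3.10"}

energy_readings = reading_to_energy_readings(first)
aggregated = aggregate(energy_readings, second)  # step 7: temporary message
energy_readings = aggregated                     # step 8: copy back
print(len(energy_readings["Readings"]))
```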

Step 9: Wait 30 seconds

This delay shape will be activated once the listen shape has not received any messages for 30 seconds.

Click on this link for more information on the [ Delay Shape ]

Step 10: Set bHasTimeout

This expression shape is used to set the bHasTimeout boolean to [ True ], ensuring that we exit the loop and are able to end the process after sending out the energy readings message.

Click on this link for more information on the [ Expression Shape ]

Step 11: sndAggregation

This shape will actually send out the energy readings message, which at this time only contains data relating to a specific company.

Click on this link for more information on the [ Send Shape ]
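
To tie the eleven steps together, here is a small Python simulation of the convoy behaviour. It is a sketch under assumptions, not BizTalk code: arrival times are plain numbers in seconds, readings are dictionaries with a CompanyName key, and the names ConvoyInstance and run_convoys are made up for this illustration.

```python
from dataclasses import dataclass, field

TIMEOUT_SECONDS = 30  # mirrors the 30-second delay shape (step 9)


@dataclass
class ConvoyInstance:
    company: str      # the correlated CompanyName value
    last_seen: float  # arrival time of the most recent reading
    readings: list = field(default_factory=list)


def run_convoys(arrivals, timeout=TIMEOUT_SECONDS):
    """Simulate the convoy over (timestamp, reading) pairs sorted by time.

    Returns the aggregated messages in the order they would be sent out.
    """
    active = {}  # company -> running instance (one per correlation value)
    sent = []    # (company, readings) pairs handed to the send shape

    def flush_expired(now):
        # Instances that have been idle for `timeout` seconds leave the loop
        # (steps 9 and 10) and send their aggregate (step 11).
        expired = sorted(
            (i for i in active.values() if now - i.last_seen >= timeout),
            key=lambda i: i.last_seen,
        )
        for instance in expired:
            del active[instance.company]
            sent.append((instance.company, instance.readings))

    for timestamp, reading in arrivals:
        flush_expired(timestamp)
        company = reading["CompanyName"]
        instance = active.get(company)
        if instance is None:
            # Step 1: the first reading for a company starts a new instance.
            instance = active[company] = ConvoyInstance(company, timestamp)
        # Steps 3, 7 and 8: add the reading to the aggregate.
        instance.readings.append(reading)
        instance.last_seen = timestamp

    flush_expired(float("inf"))  # no more input: every instance times out
    return sent


arrivals = [
    (0, {"CompanyName": "Company X", "CustomerId": "1001"}),
    (1, {"CompanyName": "Company Y", "CustomerId": "1002"}),
    (2, {"CompanyName": "Company X", "CustomerId": "1003"}),
    (40, {"CompanyName": "Company X", "CustomerId": "1004"}),
]
sent = run_convoys(arrivals)
for company, readings in sent:
    print(company, len(readings))
```

Note the last reading in the example: it arrives more than 30 seconds after the previous reading for Company X, so it starts a fresh instance and ends up in a second output message for that company. That is worth keeping in mind when choosing the timeout.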

Final Configuration

At this point you will have created all the required artifacts, so you can deploy the application and configure it. Below I’ve listed the items which need to be configured.

Receive Port and Location

In order to start processing the inbound flat file we need to set up a receive port and receive location. Once this has been configured using the File Adapter we can simply start the processing of a readings flat file by dropping such a file in the folder to which the file adapter listens. The initial processing includes debatching the inbound flat file structure into separate messages using the earlier defined [ Receive Pipeline ] and the [ Transformation ] of the XML representation of the flat file energy reading to the internal reading format.

Below are the settings I used for configuring the receive port and location:

Receive Port Type [ One Way ]

Receive Port Inbound Map [ EnergyReadingFF_TO_Reading]

Receive Location Transport Type [ FILE Adapter ]

Receive Location Receive Pipeline [Flat File Pipeline created earlier]

Send Port

The send port which needs to be configured will subscribe to messages which are sent out by the orchestration and ensures that these messages are written to disk.

Below are the settings I used for configuring the send port:

Send Port Transport Type [ FILE Adapter ]

Send Port Filter [BTS.Operation = name of the send port operation name in the orchestration]

Et voila

So I hope you enjoyed this post. Feel free to give me a shout on Twitter @ReneBrauwers or in the comments below, and as a reminder, you can download the source here (including bindings).

Please note: the bindings might not import. This is most likely due to the fact that I use different Host Instance names (Processing_Host for the orchestration, Receive_Host and Send_Host for receiving and sending the files).

Cheerio

René