by stephen-w-thomas | Jun 3, 2020 | BizTalk Community Blogs via Syndication

This blog post summarizes the session “BizTalk360 – The past, the present, and the future”, presented by Saravana Kumar, CEO of Kovai.co, at Integrate 2020.

It gives an overview of how BizTalk360 started, how it has addressed BizTalk Server challenges over the years, and what the future holds for the product.

About Saravana

Saravana is a hard-core technical person and has been a Microsoft MVP since 2007. After working in the BizTalk Server integration space for a decade, he found it challenging to manage and monitor applications and other components in BizTalk Server projects. Hence the birth of BizTalk360.

The origin story of BizTalk360

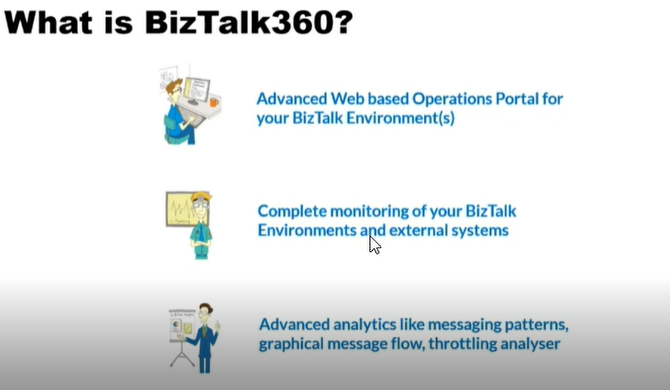

BizTalk360 was founded in 2011, almost 10 years ago. Saravana started it as a hobby project, based on an idea that took shape at an MVP Summit in 2010. Today it is an essential tool for enterprises that use Microsoft BizTalk Server. BizTalk360 is a completely web-based admin console that provides operations, monitoring, and analytics for your BizTalk Server environment.

Topics covered

- BizTalk360 – 10 years journey (Past)

- New features (Present)

- What’s next? BizTalk360 v10 (Future)

What is BizTalk360?

Businesses that use BizTalk Server usually rely on the standard admin console to manage their day-to-day activities, but it is not sufficient for complete operations, monitoring, and analytics. Other options, such as SCOM and custom-developed solutions, are not mature enough to fill the gaps in BizTalk Server. Moreover, from an analytics perspective, BizTalk admins have no clear view of what is happening in the system. BizTalk360 is a single tool for operations, monitoring, and analytics for Microsoft BizTalk Server.

A single tool to improve your productivity

BizTalk360 brings several key capabilities together in one place. Without BizTalk360, managing a BizTalk Server environment typically means using at least seven of the following tools:

- BizTalk admin Console

- SQL Management Studio

- Event viewer

- Performance Monitor

- BAM portal

- ESB Portal

- BizTalk Health Monitor

- Monitoring Consoles

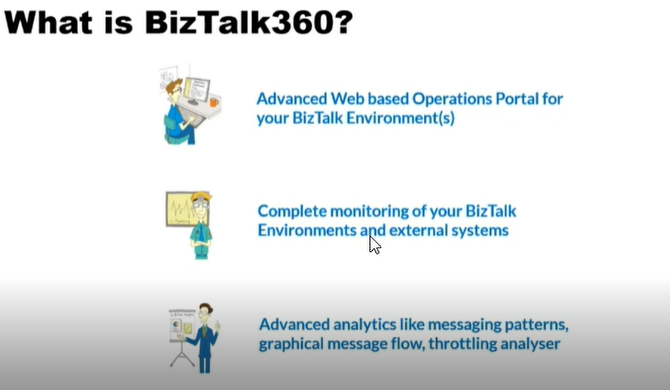

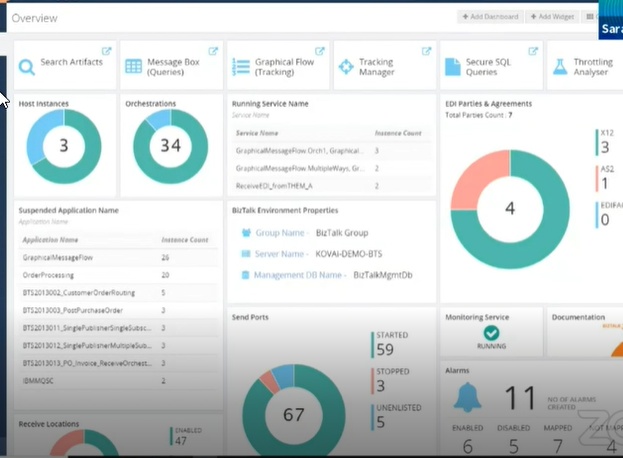

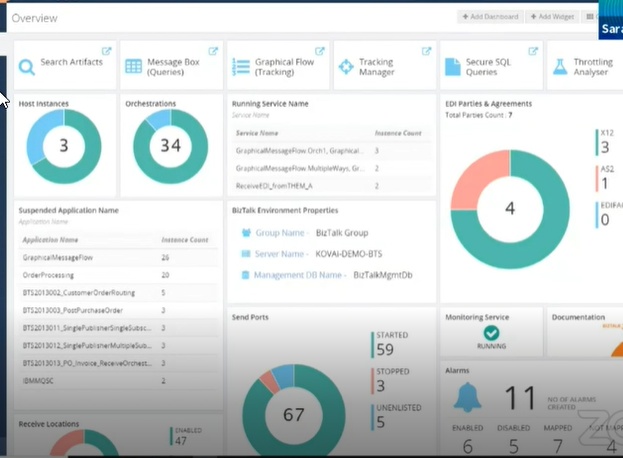

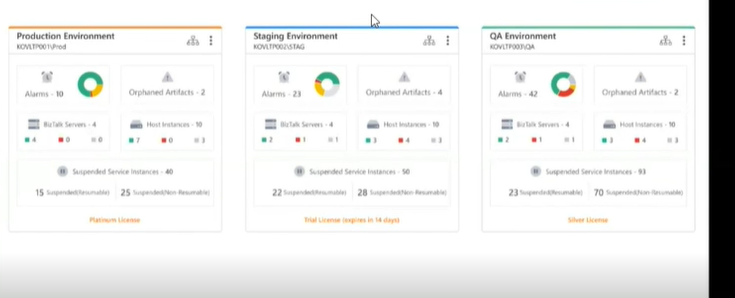

Dashboard

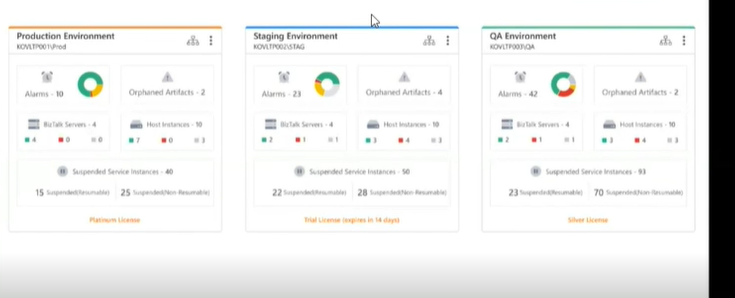

The main challenge with the standard admin console is that there is no efficient way to get an overview of the status of your BizTalk Server environment. BizTalk360, in contrast, offers a customizable dashboard that shows the overall health of your BizTalk Server environment in a single view. This way, you can even bring in business users to support your BizTalk environments.

Event Viewer

When it comes to troubleshooting operational problems in BizTalk Server, the first place admins and support staff look is the admin console. If they can’t find anything obvious, their next stop is the Event Viewer. To address these challenges, BizTalk360 introduced the “Advanced Event Viewer”, which stores all the events from all the BizTalk and SQL servers in a central database.

Performance Tools

BizTalk360 aims to offer an out-of-the-box tool with capabilities similar to the Performance Monitor tool on Windows servers. Analytics offers a visual display of the most important performance counters, consolidated and arranged on a single screen so that the information can be monitored at a glance.

Business Activity Monitoring (BAM)

BizTalk360 comes with an integrated BAM portal that allows business users to query BAM views, perform activity searches, and view user permissions and activity time windows.

ESB exception management framework

Even though the ESB Exception Management Framework itself is a stable offering and fully supported by Microsoft, it still lacks some important capabilities, such as:

- Bulk Edit/Resubmit – This matters because a single failure typically produces tens to hundreds of failed messages for the same reason.

- Auditing – There is no way to trace activities such as edit and resubmit actions.

To address these challenges, BizTalk360 provides a rich ESB exception dashboard with full search, filter, and display of exception details.

BizTalk Health Monitor

BHM is a tool that can be used to analyze and fix issues in the BizTalk Server environment. The predecessor of the BHM tool, Message Box Viewer (MBV), was initially built as a hobby project by a Microsoft support engineer.

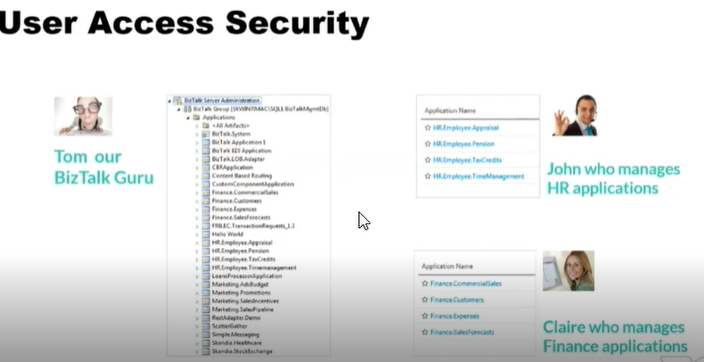

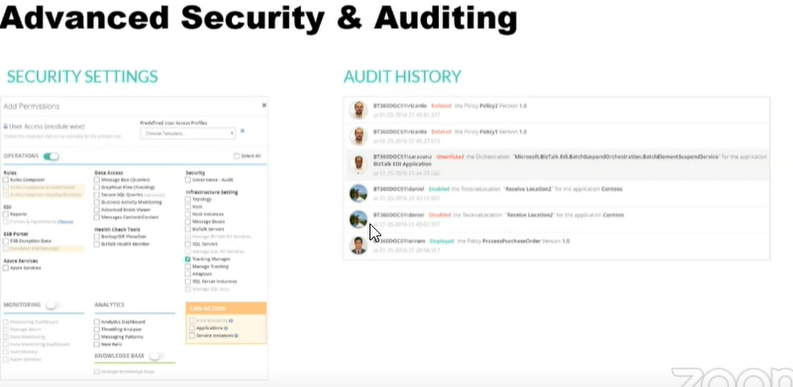

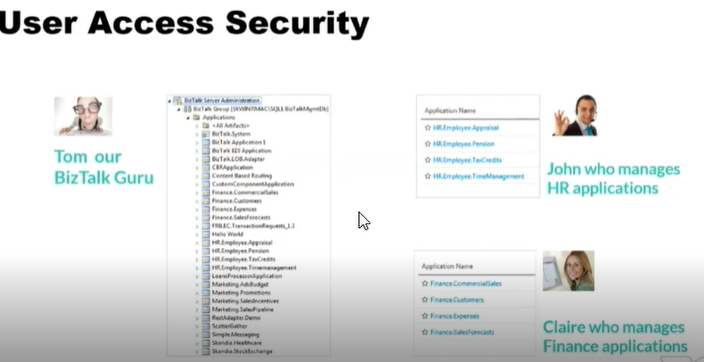

User Access Policy

On the left-hand side of the image is the standard admin console, which provides no access control or security. There are no restrictions on users: once users get access to the BizTalk admin console, they can view everything in it.

In the example shown, Tom is the BizTalk360 admin with access to all the integrations in BizTalk Server. John from HR and Claire from Finance belong to different teams and must not be given the same permissions as Tom.

BizTalk360 provides this kind of application isolation security.

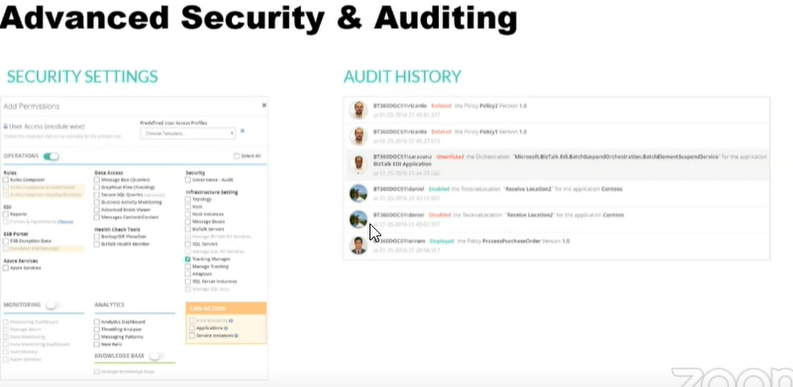

Governance and Audit

The standard BizTalk Server Admin Console doesn’t come with any built-in auditing of user activities. Anyone with access to the BizTalk Admin Console, which is usually the entire BizTalk support team, is free to perform any activity without leaving a trace. For example, if someone stops a host instance, or a host instance goes down, BizTalk Server does not record that activity. In BizTalk360, auditing is a built-in capability that traces what has been done through the console.

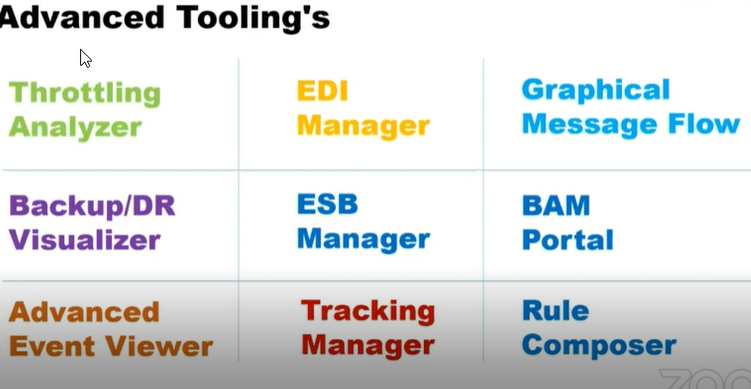

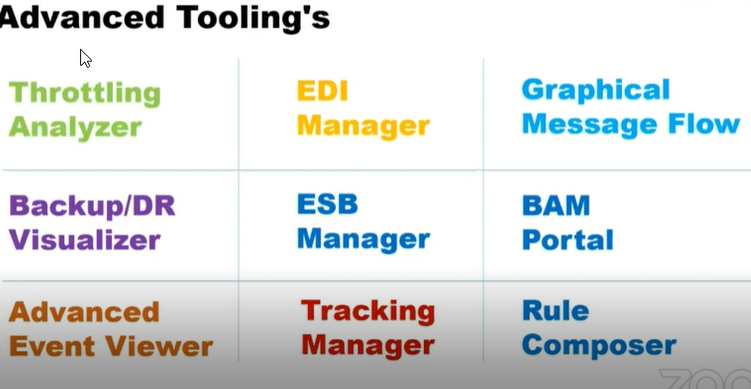

Advanced Tooling

Here are some of the advanced tools developed in BizTalk360 over the past 10 years:

- Throttling Analyser

- EDI Manager

- Graphical Message Flow

- Backup/DR visualizer

- ESB manager

- BAM Portal

- Rules Composer

- Tracking Manager

Throttling analyzer

The idea behind the BizTalk360 throttling analyzer is to simplify the complexity of understanding the BizTalk throttling mechanism and to provide a simple dashboard view. This helps people understand the throttling conditions in their BizTalk environment, even when they do not have deep technical expertise.

Graphical Message Flow

In the standard admin console, following a message flow is time-consuming because everything is presented in a flat format. If you have a more complex, loosely coupled system, diagnosing and understanding the message flow gets even harder. Another disadvantage is that when somebody has access to the tracking queries, confidential information might be revealed to unauthorized people.

BizTalk360 helps you visualize the entire message flow in a single view, which includes:

- All service instances

- Send port

- Orchestration

- Receive port details

Rules Composer

For example, if business users want to roll out a rule change for Christmas, they need help from the development team, which can be a time-consuming process. The Rules Composer is a simplified tool that empowers business users to manage Business Rules Engine (BRE) rules in complex business processes.

Monitoring

Another key capability of BizTalk360 is monitoring. The tool provides extensive monitoring to ensure the health of your BizTalk Server environment. Because BizTalk is middleware, it is always connected to various systems, so BizTalk360 makes sure that all the connected systems are healthy too. BizTalk360 can monitor integrated systems such as:

- SQL

- MSMQ

- IBM MQ

- Web endpoints

- Azure Service Bus

- WebJobs

- SFTP locations

- Disks

We are proud to say that no other product on the market provides complete, 100% monitoring coverage for BizTalk Server. The monitoring features draw on over 20 years of experience in the field and are built to meet customer requirements.

BizTalk360 can be integrated with your current enterprise monitoring stack, such as:

- ServiceNow

- AppDynamics

- Dynatrace

- New Relic

BizTalk360 – 10 years of innovation

For the last 9 years, there have consistently been 4-5 releases every year.

We are consistently filling the gaps Microsoft has left for global BizTalk Server customers.

To align with the migration of BizTalk applications to the cloud, BizTalk360 has added cloud capabilities as well.

BizTalk360 has become the de facto tool for BizTalk Server customers and is used by over 600 large enterprises, including Microsoft.

The Future

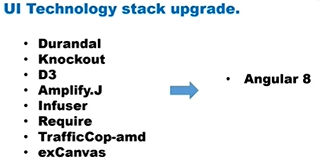

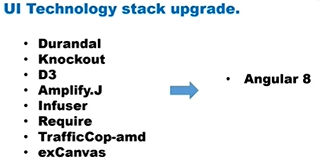

One of the biggest changes underway is a complete rewrite of the UI. The backend APIs are solid, but the front end was originally built with Silverlight. In the coming years, the UI will move entirely to Angular 8.

Currently, the development team is working on upgrading the BizTalk360 UI.

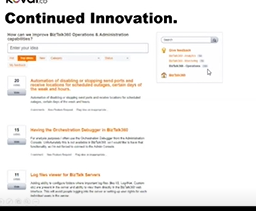

Continued Innovation

The image above shows the user forum. Based on customer feedback there, suggestions are added to the product in each release where appropriate.

Wrap Up

In this blog post, we discussed the past, present, and future of BizTalk360. There are still lots of announcements and feature enhancements to come. Stay tuned for further updates. Happy Learning!

The post BizTalk360 – The past, present and the future appeared first on BizTalk360.

by stephen-w-thomas | May 22, 2020 | BizTalk Community Blogs via Syndication

Author Credits: Martin Peters, Senior Consultant at Codit

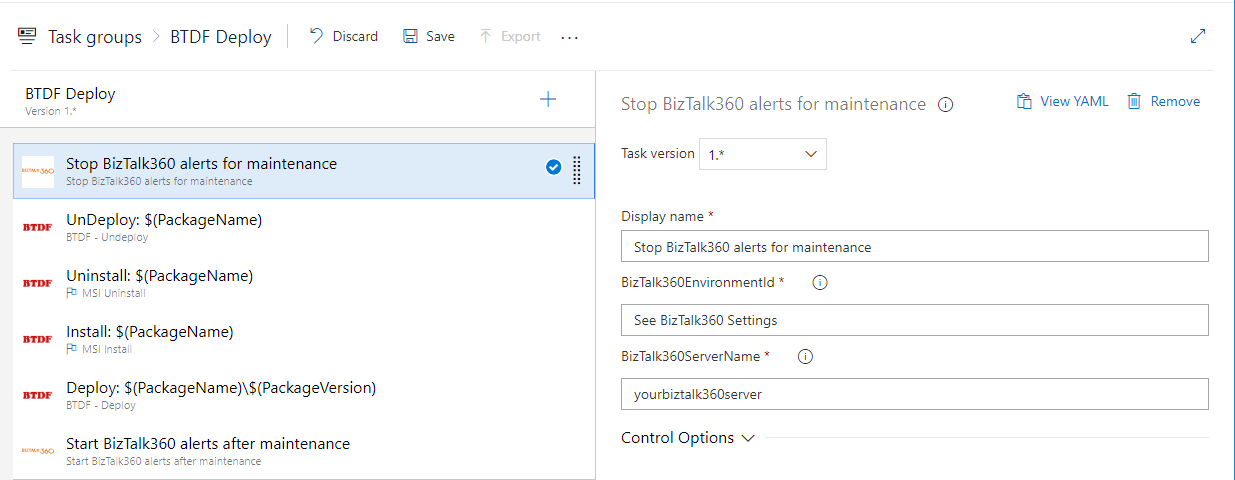

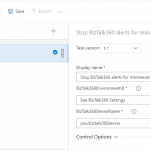

During deployments of BizTalk applications, it is common practice to put BizTalk360 in maintenance mode before the deployment and switch back to normal mode after the deployment.

This avoids alerts being sent to various people due to stopping and starting of BizTalk during the deployment. If you are using Azure DevOps to deploy BizTalk applications automatically, you do not want to have a manual process to put BizTalk360 in and out of maintenance mode.

The good news is that BizTalk360 provides a set of APIs which allow you to automate this.

An article from Senthil Palanisamy named BizTalk Application Deployment Using Azure Pipeline with BizTalk360 API’s inspired me to implement this for a customer. In Senthil’s blog, PowerShell scripts are used to access the BizTalk360 APIs and turn maintenance mode on and off. However, as more and more customers use Azure DevOps and BizTalk360, each of them needs a copy of the PowerShell scripts. Because the scripts might change over time (changes, bug fixes), the next step is to put them under version control and create a DevOps extension.

The DevOps extension is maintained on GitHub, and compatible changes are automatically distributed to all organizations using this extension.

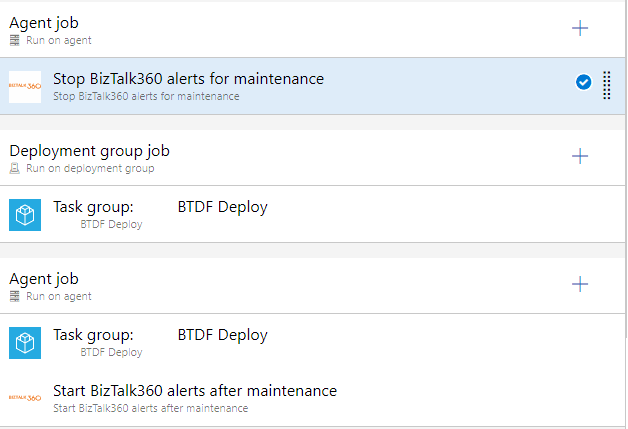

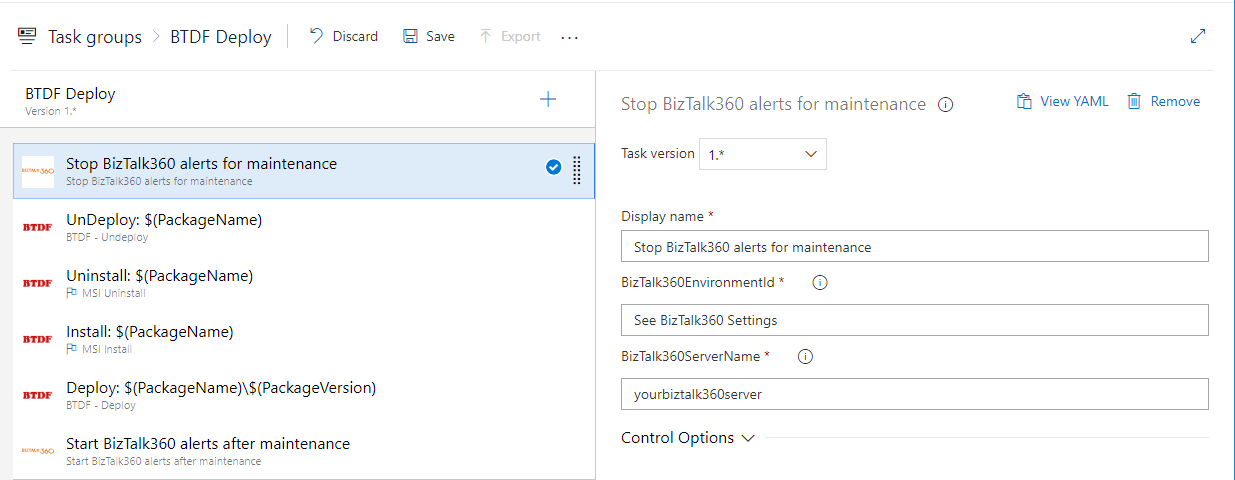

Single Server Scenario

When your Test, Acceptance, and Production environments each consist of a single server, you can create a task group and use it in the release pipeline. Note that in this example, the task group uses the BTDF extension, which is useful if you are using the BizTalk Deployment Framework for deployments.

The BizTalk360 tasks require the hostname of the server where BizTalk360 is installed and the Environment ID. You can find the Environment ID in http://<yourbiztalk360server>/BizTalk360/Settings#api.

Note: You must have a license to use the BizTalk360 API.

If you do not have a license, please contact your BizTalk360 representative. The BizTalk360 API is extensive and allows you to automate other tasks as well.

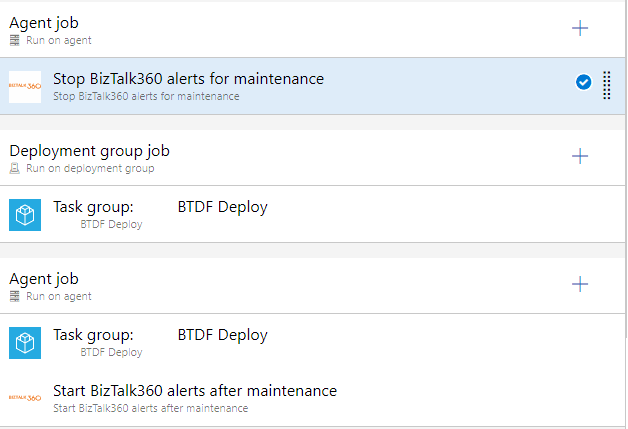

Multi-Server Scenario

In a multi-server scenario, you want to put BizTalk360 into maintenance mode before deploying to the first server and take it out of maintenance mode after deploying to the last server. In this case, the maintenance-mode tasks in your release pipeline wrap the whole set of server deployments rather than each individual deployment.
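The ordering described above can be sketched in Python. This is a minimal illustration, not the extension's code: `deploy_with_maintenance`, `deploy`, `set_maintenance`, and the server names are hypothetical. In a real pipeline the two callbacks would wrap the deployment task and the BizTalk360 maintenance-mode API call, which needs the BizTalk360 host name and Environment ID.

```python
from typing import Callable, Iterable


def deploy_with_maintenance(
    servers: Iterable[str],
    deploy: Callable[[str], None],
    set_maintenance: Callable[[bool], None],
) -> None:
    """Deploy to every server inside a single maintenance-mode window.

    Hypothetical helper: `deploy` and `set_maintenance` stand in for the
    pipeline's deployment task and the BizTalk360 maintenance-mode call.
    """
    set_maintenance(True)        # once, before the first server
    try:
        for server in servers:
            deploy(server)       # deploy to each server in order
    finally:
        set_maintenance(False)   # once, after the last server (even on failure)


# Usage with hypothetical server names, recording the order of calls.
calls = []
deploy_with_maintenance(
    ["btsapp01", "btsapp02"],
    deploy=lambda server: calls.append(f"deploy {server}"),
    set_maintenance=lambda on: calls.append("maintenance on" if on else "maintenance off"),
)
```

The `finally` clause is a design choice: it switches maintenance mode off even when a deployment fails, so alerting resumes. If you would rather keep alerts suppressed while you investigate a failed release, move that call out of `finally`.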

You can find the DevOps extension on the Visual Studio Marketplace.

The source code is available on GitHub. Please feel free to make any improvements, enhancements, etc.

The post BizTalk360 Maintenance Mode with BizTalk Deployments via Azure DevOps appeared first on BizTalk360.

by stephen-w-thomas | May 20, 2020 | BizTalk Community Blogs via Syndication

As the title of this session suggests, you will learn about the benefits of event-based integration and how it can help modernize your applications to be reactive, scalable, and extensible. The star of the show here is Event Grid, a lynchpin capability offered as part of Azure Integration Services.

Event Grid offers a single point for managing events sourced from inside and outside Azure, intelligently routing them to any number of interested subscribers. It not only supports first-class integration with a large number of built-in Azure services but also supports custom event sources and routing to any accessible webhook. On top of that, it boasts low latency, massive scalability, and exceptional resiliency. It even supports the CloudEvents specification for describing events, as well as your own custom schemas.
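To make the CloudEvents option concrete, here is a minimal Python sketch that builds an event in the CloudEvents 1.0 structured JSON form, whose required attributes are `specversion`, `id`, `source`, and `type`. The function name, the `source` path, the event type, and the payload are hypothetical; publishing the body to an Event Grid topic (endpoint, authentication, content type) is deliberately left out.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Optional

# Required context attributes in the CloudEvents 1.0 specification.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def make_cloud_event(source: str, event_type: str, data: dict,
                     subject: Optional[str] = None) -> dict:
    """Build a CloudEvents 1.0 event as a dict (hypothetical helper)."""
    event = {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),                         # unique per event from this source
        "source": source,                                # URI-reference identifying the producer
        "type": event_type,                              # what happened
        "time": datetime.now(timezone.utc).isoformat(),  # RFC 3339 timestamp
        "datacontenttype": "application/json",
        "data": data,
    }
    if subject is not None:
        event["subject"] = subject                       # optional: the resource the event is about
    missing = [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]
    if missing:
        raise ValueError(f"missing required CloudEvents attributes: {missing}")
    return event


# Hypothetical monitoring event, serialized as a structured-mode JSON body.
event = make_cloud_event(
    source="/demo/monitoring",
    event_type="com.example.resource.stateChanged",
    data={"state": "Stopped"},
    subject="vm-01",
)
body = json.dumps(event)
```

Because the envelope is standard, a subscriber can route on `type` and `subject` without knowing anything about the producer's payload.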

My talk will feature a demo showing how Event Grid easily enables real-time monitoring of Azure resources – but this is only one of many possible scenarios that are supported.

Why should I attend INTEGRATE 2020 Remote?

With INTEGRATE 2020 Remote, we are consolidating all Microsoft Integration-focused content in a single place, covering on-premises (BizTalk Server), cloud (Azure Logic Apps, Functions, API Management, Service Bus, Event Grid, Event Hubs, Power Platform), and hybrid, in an intense 3-day conference with its own keynote.

If you are a Microsoft Integration professional, even if you attend only parts of the conference, you’ll still get significant value in educating and preparing yourself for the future. Please go ahead and register now.

The post Integrate 2020 Remote Session Spoiler – Building Event-Driven Integration Architectures appeared first on BizTalk360.

by stephen-w-thomas | May 18, 2020 | BizTalk Community Blogs via Syndication

Messaging and eventing activities are at the core of most integration solutions. Although conceptually those two architectures differ on how they deal with the information they need to deliver to end systems, they share a number of patterns – and mastering those patterns, knowing when to apply them and having easy “recipes” for implementation can accelerate lots of integration projects.

Azure Integration Services (AIS) provides all the components required to create robust integration solutions, including not only messaging and eventing components – Azure Service Bus and Event Grid Topics – but also components to support orchestration and API mediation – Azure Logic Apps and API Management. Usually, clients understand the components, but sometimes struggle to translate the knowledge from years of using an integrated service, like BizTalk Server, into more streamlined components.

In this talk, I will select 3 of the most widely used messaging patterns and show how to implement them using AIS components. And, since some of those patterns can also be implemented in an event-based solution, I will show how to implement the same patterns using those components.
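As a flavor of what such a pattern looks like, here is a minimal in-memory Python model of publish-subscribe with filtered subscriptions, the idea behind Service Bus topics and subscription rules. It is a conceptual sketch, not the Azure SDK and not necessarily one of the three patterns covered in the talk; the `Topic` and `Message` classes, subscription names, and the `region` property are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A subscription rule: a predicate over the message's properties.
Filter = Callable[[dict], bool]


@dataclass
class Message:
    body: str
    properties: dict = field(default_factory=dict)  # similar to application properties


class Topic:
    """In-memory model of a topic with filtered subscriptions (hypothetical)."""

    def __init__(self) -> None:
        self._subscriptions: Dict[str, Tuple[Filter, List[Message]]] = {}

    def subscribe(self, name: str, rule: Filter) -> None:
        self._subscriptions[name] = (rule, [])

    def publish(self, message: Message) -> List[str]:
        """Deliver the message to every subscription whose rule matches; return their names."""
        matched = []
        for name, (rule, queue) in self._subscriptions.items():
            if rule(message.properties):
                queue.append(message)
                matched.append(name)
        return matched

    def receive_all(self, name: str) -> List[Message]:
        """Drain and return the messages waiting on one subscription."""
        _, queue = self._subscriptions[name]
        drained = list(queue)
        queue.clear()
        return drained


orders = Topic()
orders.subscribe("eu-orders", lambda p: p.get("region") == "EU")  # content-based rule
orders.subscribe("audit", lambda p: True)                          # matches every message
```

The publisher never knows who is listening: adding a consumer means adding a subscription with its own rule, which is what makes this pattern a good fit for replacing BizTalk's MessageBox-style routing.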

I am looking forward to sharing this session with you and having some good conversations about how you solve those problems in your organization.

So, come and join me on Integrate 2020 Remote in June!

The post Integrate 2020 Remote Session Spoiler – Messaging Patterns with Azure AIS appeared first on BizTalk360.

by stephen-w-thomas | May 14, 2020 | BizTalk Community Blogs via Syndication

Data is the new gold! It’s a phrase you might have heard in a discussion or presentation you have attended. Our economies are indeed more data-driven, and decisions are made based on data. In many cases, that data originates from observations, i.e. monitoring. For instance, tollgates monitor passing cars, websites monitor traffic, and cameras in machines monitor the assembly of products.

In the talk, I would like to explain what Artificial Intelligence, Machine Learning, Integration, and Monitoring are from an Azure perspective.

Furthermore, AI/ML is trending, while integration and monitoring are standard work for integrators. What will it mean for integrators once they join a project with an AI/ML component? I would like to share my vision on that!

Lastly, I will share my experience with Artificial Intelligence, Machine Learning, Integration, and Monitoring by going through some real-world use cases. While I am not a data scientist, as an integrator I have a role and responsibility in ML/AI projects that require data from different places.

The general message from the talk: There is a relation between the monitoring, ingestion of data, and use for machine learning or artificial intelligence purposes.

The post Integrate 2020 Remote Session Spoiler – AI/ML, Integration and Monitoring appeared first on BizTalk360.

by stephen-w-thomas | May 12, 2020 | BizTalk Community Blogs via Syndication

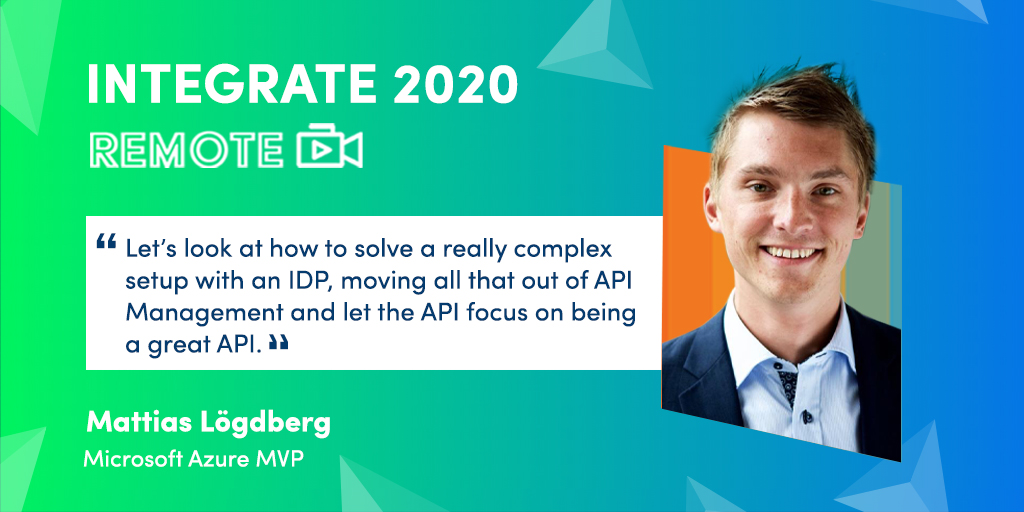

Exposing APIs is all about making it easy for consumers to consume and understand your APIs. But there is a lot more to consider when creating a robust, maintainable, and long-lived API. One of those things is security, which I will discuss during my session at Integrate 2020. When mixing security and usability, we often end up in a situation where one or more operations in the API need more security than others. On top of that, multiple consumers need access, and that access needs to be granted in an easy manner. All of this leads to a situation where the built-in security options in Azure API Management are not granular enough, so we end up adding specific code, combined with workarounds such as groups. Soon we have a really complex setup that is hard to understand. So let’s look at how to solve this with an identity provider (IDP) instead, moving all of that out of API Management and letting the API focus on being a great API.

In this session, we will use Auth0 as an IDP and let API Management use OAuth validation to make sure the token provided is coming from Auth0.

We will then go through how the trust is set up to connect the API Management instance to my Auth0 instance, and then how to work with RBAC and permissions. In the end, we will have a solution where permissions and access are managed in the IDP (Auth0), and restrictions are enforced per operation in API Management based on those permissions.
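The per-operation permission check can be illustrated with a small Python sketch. It assumes Auth0 is configured to add a `permissions` array to access tokens (RBAC enabled for the API, with permissions added to the access token); the operation-to-permission map and permission names are hypothetical. In the actual setup this check would live in API Management's `validate-jwt` policy, which also validates the token signature against Auth0's OpenID configuration. This sketch does **not** verify signatures and only shows the decision logic.

```python
import base64
import json
from typing import Set


def _b64url_decode(segment: str) -> bytes:
    """Decode a base64url JWT segment, restoring the stripped '=' padding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def token_permissions(jwt: str) -> Set[str]:
    """Read the 'permissions' claim from a JWT payload.

    WARNING: no signature validation happens here; in the Auth0 + API
    Management setup, validate-jwt must do that before this check.
    """
    parts = jwt.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated segments")
    claims = json.loads(_b64url_decode(parts[1]))
    return set(claims.get("permissions", []))


# Hypothetical mapping of API operations to the permission each requires.
REQUIRED_PERMISSION = {
    "GET /orders": "read:orders",
    "DELETE /orders/{id}": "delete:orders",
}


def is_allowed(jwt: str, operation: str) -> bool:
    """Allow the call only if the token carries the operation's permission."""
    required = REQUIRED_PERMISSION.get(operation)
    if required is None:
        return False  # deny operations that have no permission mapped
    return required in token_permissions(jwt)
```

Denying unmapped operations by default keeps a newly added operation locked down until someone decides which permission it needs, so access management stays in the IDP.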

Come and join me to see how this is achieved!

Ask tons of questions and bring in your thoughts so we can discuss and share experience and knowledge!

Hope to see you there!

The post Integrate 2020 Remote Session Spoiler – Improve your API’s with RBAC security appeared first on BizTalk360.

by stephen-w-thomas | Jan 15, 2020 | Stephen's BizTalk and Integration Blog

I was alerted by fellow Integration MVPs that BizTalk Server 2020 is now available!

Sure enough, it is available for download on MSDN. All editions of BizTalk Server 2020 are available, including Developer, Branch, Standard, and Enterprise.

The interesting takeaway is support for Visual Studio 2019! So we skipped over Visual Studio 2017 altogether. There is also support for SQL Server 2019 and Windows Server 2019.

There is a nice, long list of What’s New in BizTalk 2020 along with some notable deprecations including the POP and SOAP adapters.

You can see the full list of what’s new here.

Let me know what you think about the new features of BizTalk Server 2020!

by stephen-w-thomas | Nov 9, 2017 | Stephen's BizTalk and Integration Blog

Interested in learning more about Azure Logic Apps? What are they used for? What business problems can they solve?

Take a look at my new course “Azure Logic Apps: Getting Started” available on Pluralsight that offers training on working with and creating Azure Logic Apps.

It is a quick 1-hour-18-minute overview of the basics of Logic Apps. The content is broken down into 3 modules.

- Introduction to Microsoft Azure Logic Apps

- Design and Development of Logic Apps

- Building a Production Ready Logic App

If you are short on time, you can watch Pluralsight content in up to 2x speed!

Give the course a try and I look forward to any feedback!

You can view the course here.

by stephen-w-thomas | Aug 8, 2017 | Stephen's BizTalk and Integration Blog

My new course titled “BizTalk Server administration with BizTalk360” is now live on Pluralsight!

BizTalk Server Administration with BizTalk360

Here is the Introduction to the course:

“BizTalk Server is a complex enterprise integration server that typically requires daily administration. This course will teach you how to use BizTalk360 to streamline your administration tasks and provide valuable insight into daily operations.”

This course is packed full of over 3 hours of content covering 11 modules.

Here is the module list:

- Introduction

- Introduction to BizTalk360

- Installing and Configuring BizTalk360

- Operational Dashboards

- Security & Governance

- Monitoring and Notifications

- Day-to-day Operational Activities

- Day-to-day Infrastructure Activities

- Insight into BizTalk Through BizTalk360

- Working with Rules, ESB, and EDIs

- BizTalk360 for Managed Services

If you do not have a Pluralsight account, you can start your Free Trial today!

Enjoy and I welcome any feedback.

by stephen-w-thomas | May 4, 2017 | Stephen's BizTalk and Integration Blog

We all know that the Azure Logic Apps team is coming out with amazing new connectors all the time!

As new ones are rolled out, we often get to use them in Preview mode. One thing to keep in mind is that key details of a connector can change during the preview period.

Here is an example for the SendGrid Send Email Connector.

We are using it in a few Logic Apps to send emails. When I open one of these Logic Apps in the web designer or in Visual Studio, all I see is the connection information rather than the To, From, Subject, and Body fields.

In JSON it looks like this:

```json
{
  "path": "/api/mail.send.json",
  "body": {
    "from": "alert@someclient.com",
    "to": "[parameters('sendgrid_1_sendToEmail')]",
    "subject": "Critical File Transmission Error",
    "body": "@concat('TEXT, TEXT')",
    "ishtml": false
  }
}
```

This seems to be only a UI issue: the Send Email actions still work and send emails. But if I want to make a change, I need to do it in the code rather than in the designer.

How to fix it?

Option 1: Delete the shape and re-add it. Simple enough. Make sure you copy out the parameter values before you delete it so you can set them again.

Option 2: Edit the JSON to adjust the changed values. Two fields are different now: path and body.

If you want to manually fix this do the following:

1. Change the path to "/mail/send"

2. Rename the "body" property inside the "body" object to "text"

Once complete, you will see all the properties available through the UI again.
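If several Logic Apps use the old action shape, the two manual edits can be scripted. The Python sketch below applies them to an action's inputs, assuming those inputs contain `path` and `body` exactly as in the JSON snippet above; the function name is hypothetical, and reading or writing the Logic App definition itself is left out.

```python
import copy


def migrate_sendgrid_inputs(inputs: dict) -> dict:
    """Apply the two manual fixes to a SendGrid action's inputs (hypothetical helper).

    Returns a new dict; the original inputs are left untouched.
    """
    migrated = copy.deepcopy(inputs)
    migrated["path"] = "/mail/send"                 # step 1: new path
    body = migrated.get("body")
    if isinstance(body, dict) and "body" in body:
        body["text"] = body.pop("body")             # step 2: email body field is now "text"
    return migrated


# The inputs from the snippet above, as a Python dict.
old_inputs = {
    "path": "/api/mail.send.json",
    "body": {
        "from": "alert@someclient.com",
        "to": "[parameters('sendgrid_1_sendToEmail')]",
        "subject": "Critical File Transmission Error",
        "body": "@concat('TEXT, TEXT')",
        "ishtml": False,
    },
}
new_inputs = migrate_sendgrid_inputs(old_inputs)
```

Working on a deep copy keeps the original definition available, so you can diff the two before saving the change.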

Enjoy.