by Richard | Sep 2, 2011 | BizTalk Community Blogs via Syndication

2012-09-30: I’ve renamed the whole project and moved it to GitHub. Hope to see you there!

Like most BizTalk developers and architects, you have probably been in a situation where documentation of your integration solution has been discussed. And you were probably just as happy as I was when you first found BizTalk Documenter. BizTalk Documenter is a great tool for extracting all the configuration data that is so vital to BizTalk and creating nice technical documentation from it.

There are of course several levels of documentation in a BizTalk Server based solution, where the technical information is at the lowest level. Above that you would have your integration process descriptions, and possibly another level above that where you fit the integration processes into bigger business processes, and so on. But let’s stick to the technical documentation for now.

BizTalk Documenter does a nice job of automating the creation of technical documentation, instead of basically having to do all that configuration work a second time when documenting. Documenting by hand also usually breaks down after a while, as the solution setup and configuration change a lot and the work is really time consuming. And as documentation that isn’t up to date is frankly worthless, people soon lose interest in both reading and maintaining it.

Using BizTalk Documenter does, however, have a few problems:

- The tool generates either a CHM file or a Word file with the documentation. As more and more organizations move their documentation online, new output formats are needed.
- The latest 3.4 version of the tool throws OutOfMemory exceptions on bigger solutions.

BizTalk Config Explorer Web Documenter

As we started looking for ways to solve the issues above, we had a few ideas about what the new solution should handle better.

- Web based documentation: Web based documentation is both much easier to distribute and more accessible to read. You send a link to a web page rather than sending around a CHM file.
- An easy way to see changes between versions: Sometimes one wants to see how things have changed and how they used to be configured. The tool should support “jumping back in time” to see previous versions.

Check out an example of Config Explorer yourself.

Getting started

Config Explorer is an open source tool published on GitHub, which is a good place to start for understanding the workflow of creating documentation and for downloading the tool itself.

Contribute!

This is a 1.0 release and there are many features we haven’t had time for, but we’ve planned a few of them for future releases. Unfortunately there will also be bugs and obvious things we’ve missed. Please use the GitHub issue tracker to let us know what you would like us to add and what issues you’ve found.

I’ll of course also check for any comments here! 😉

by Richard | Jun 2, 2011 | BizTalk Community Blogs via Syndication


Update to BAM Service Generator

Jun 03, 2011

I have previously written about how to generate a typed set of WCF services to achieve end-to-end BAM based tracking, making BAM logging possible from outside potential firewalls and/or from non-.NET clients.

In that post I referenced an open source tool that generates the services from the BAM definition file, making it possible to make typed BAM API calls. I called the tool BAM Service Generator and it’s published on CodePlex.

The first version of the tool only supported simple activity tracking calls and even lacked the option of defining a custom activity id. Advanced scenarios like continuation, activity and data references, or even updating an activity, weren’t possible. That has now been fixed, and the second version of the tool supports the following operations.

- LogCompleteActivity: Creates a simple logging line and is the most efficient option if one only wants to begin, update and end an activity as quickly as possible.
- BeginActivity: Starts a new activity with a custom activity id.
- UpdateActivity: Updates an already started activity and accepts a typed object as a parameter. The typed object is of course based on what has been defined in the BAM definition file. Multiple updates can be made until EndActivity is called.
- EnableContinuation: Enables continuation. This is used if you have multiple activity loggings that belong together but cannot use the same activity id. It lets you tie those related activities together using some other id (for example the invoice id).
- AddActivityReference: Adds a reference to another activity, which for example lets you display a link to it, or read that whole activity, when reading the main one.
- AddReference: Adds a custom reference to something that isn’t necessarily an activity, for example a link to a document of some sort. Limited to 1024 characters.
- AddLongReference: Similar to AddReference, but also lets you store a blob of data using the LongReferenceData parameter, limited to 512 KB.
- EndActivity: Ends a started activity.
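To make the lifecycle rules in the list a bit more concrete, here is a hypothetical in-memory model of them. It does not call BAM and it is not the contract the tool generates; the class and member names are made up for illustration. It only encodes what the list says: updates need a started activity, any number of updates are allowed until EndActivity, and AddReference data is limited to 1024 characters.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the activity lifecycle, NOT the generated WCF service.
public class ActivityLifecycleModel
{
    public const int MaxReferenceDataLength = 1024; // AddReference limit (characters)

    // Activity id -> typed update objects received so far (open activities only).
    private readonly Dictionary<string, List<object>> _openActivities =
        new Dictionary<string, List<object>>();

    public void BeginActivity(string activityId)
    {
        _openActivities[activityId] = new List<object>();
    }

    // Any number of updates are accepted while the activity is open.
    public void UpdateActivity(string activityId, object typedData)
    {
        List<object> updates;
        if (!_openActivities.TryGetValue(activityId, out updates))
            throw new InvalidOperationException("Activity not started or already ended: " + activityId);
        updates.Add(typedData);
    }

    // activityId only mirrors the operation's shape; this model checks the length limit.
    public void AddReference(string activityId, string referenceData)
    {
        if (referenceData.Length > MaxReferenceDataLength)
            throw new ArgumentException("Reference data is limited to 1024 characters.", "referenceData");
    }

    // Closes the activity and returns how many updates it received.
    public int EndActivity(string activityId)
    {
        List<object> updates;
        if (!_openActivities.TryGetValue(activityId, out updates))
            throw new InvalidOperationException("Activity not started: " + activityId);
        _openActivities.Remove(activityId);
        return updates.Count;
    }
}
```

Beginning an activity, updating it twice and ending it returns 2; any further UpdateActivity call on the same id throws, which matches "multiple updates can be made until EndActivity is called".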

Let me know if you think something is missing, if something isn’t working as you would expect, etc.

by Richard | Jan 18, 2011 | BizTalk Community Blogs via Syndication

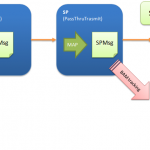

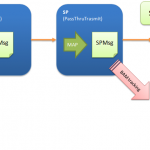

An integration between systems can often be viewed as a chain of systems and services working together to move a message to its final destination.

One problem with this loosely coupled way of dealing with message transfers is that it’s hard to see if and where something has gone wrong. Usually all we know is that the sending system has sent the message but the final system never received it. The problem could then be in any of the components in between. Tracking messages within BizTalk is one thing, but achieving end-to-end tracking across the whole “chain” is much harder.

One way of solving the end-to-end tracking problem is to use BAM. BAM is optimized for these kinds of scenarios, where we might have to deal with huge amounts of messages and data; several of the potential problems around these issues are solved out of the box (write-optimized tables, partitioning of tables, aggregated views, archiving jobs and so on).

And even though BAM in theory isn’t tied to BizTalk, it’s still easier to use in the context of BizTalk than outside of it, due to tools like the BizTalk Tracking Profile Editor and the built-in BAM interceptor patterns within BizTalk. It is, however, fully possible to track BAM data and take advantage of the BAM infrastructure outside of BizTalk using the BAM API.

The BAM API is a .NET based API used for writing tracking data to the BAM infrastructure from any .NET based application. There are however a few issues with this approach.

The application sending the tracking data has to be .NET based and use the loosely typed, string-based API. So, for example, writing to the “ApprovedInvoices” activity would look something like this, with lots of untyped strings:

```csharp
using System;
using Microsoft.BizTalk.Bam.EventObservation;

public void Log(ApprovedInvoicesServiceType value)
{
    string approvedInvoicesServiceActivityID = Guid.NewGuid().ToString();

    // Writes directly to the BAM Primary Import database (flushThreshold = 1)
    var es = new DirectEventStream("Integrated Security=SSPI;Data Source=.;Initial Catalog=BAMPrimaryImport", 1);

    es.BeginActivity("ApprovedInvoices", approvedInvoicesServiceActivityID);

    // Field names and values are passed as untyped name/value pairs
    es.UpdateActivity("ApprovedInvoices", approvedInvoicesServiceActivityID,
        "ApprovedDate", "2011-01-18 12:02",
        "ApprovedBy", "Richard",
        "Amount", 122.34,
        "InvoiceId", "Invoice123");

    es.EndActivity("ApprovedInvoices", approvedInvoicesServiceActivityID);
}
```

One way of getting around the problem with strings is to use the “Generate Typed BAM API” tool. The tool reads the BAM definition file and generates a dll containing strong types that correspond to the fields in the definition. By referencing the dll in the sending application we get a strongly typed .NET call. It does, however, still require a .NET based application.
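The idea behind a typed API can be sketched without any BizTalk references. Below is a hypothetical class for the “ApprovedInvoices” activity (the class and method names are made up here, not what the Generate Typed BAM API tool actually emits). It exposes typed properties and flattens them into the alternating name/value pairs that the string-based call takes, so the field names are written in one place only.

```csharp
using System;

// Hypothetical typed representation of the "ApprovedInvoices" activity.
// A generator would derive these properties from the BAM definition file.
public class ApprovedInvoice
{
    public DateTime ApprovedDate { get; set; }
    public string ApprovedBy { get; set; }
    public decimal Amount { get; set; }
    public string InvoiceId { get; set; }

    // Flattens the typed properties into alternating field name / value
    // entries, the shape the string-based UpdateActivity call expects.
    public object[] ToNameValuePairs()
    {
        return new object[]
        {
            "ApprovedDate", ApprovedDate,
            "ApprovedBy", ApprovedBy,
            "Amount", Amount,
            "InvoiceId", InvoiceId
        };
    }
}
```

Assuming an event stream `es` and an activity id as in the example above, the update could then be written as `es.UpdateActivity("ApprovedInvoices", activityId, invoice.ToNameValuePairs());`, since UpdateActivity takes its data as a `params object[]`.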

The obvious way to remove the limitation of a .NET based client, and to get one step closer to an end-to-end tracking scenario, is of course to wrap the call to the API in a service.

BAM Service Generator

BAM Service Generator is very similar to the Generate Typed BAM API tool mentioned above, with the difference that instead of generating a .NET dll it generates a WCF service for each activity.

```
c:\Tools\bmsrvgen.exe /help

BAM Service Generator Version 1.0
Generates a WCF service based on a BizTalk BAM definition file.

-defintionfile: Sets path to BAM definition file.
-output: Sets path to output folder.
-namespace: Sets namespace to use.

Example: bmsrvgen.exe -defintionfile:c:\MyFiles\MyActivityDef.xml
-output:c:\temp\services -namespace:MyCompanyNamespace
```

The BAM Service Generator is a command line tool that will read the BAM definition file and generate a compiled .NET 4.0 WCF service. The service is configured with a default basicHttp endpoint and is ready to go straight into AppFabric or similar hosting.

This service approach makes it possible to take advantage of the BAM infrastructure from all kinds of systems, even in cases where they aren’t behind the same firewall! As shown in the figure, this could take us one step closer to the end-to-end tracking scenario.

“BAM Service Generator” is open-source and can be found here.

by Richard | Apr 23, 2010 | BizTalk Community Blogs via Syndication

I’ve been doing a lot of EDI-related work in BizTalk lately and I have to say that I’ve really enjoyed it! EDI takes a while to get used to (see the example below), but once I started to understand it I found it to be a really nice, strict standard, with some cool features built into BizTalk!

```
UNB+IATB:1+6XPPC+LHPPC+940101:0950+1'
UNH+1+PAORES:93:1:IA'
MSG+1:45'
IFT+3+XYZCOMPANY AVAILABILITY'
ERC+A7V:1:AMD'
IFT+3+NO MORE FLIGHTS'
ODI'
TVL+240493:1000::1220+FRA+JFK+DL+400+C'
...
```

There are, however, some things that don’t work as expected …

Promoting values

According to the MSDN documentation the EDI Disassembler by default promotes the following EDI fields: UNB2.1, UNB2.3, UNB3.1, UNB11; UNG1, UNG2.1, UNG3.1; UNH2.1, UNH2.2, UNH2.3.

There are, however, situations where one would like other values promoted.

In my case I wanted the C002/1001 value in the BGM segment promoted. This value identifies the purpose of the document, and I needed to route the incoming message based on it.
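To make “C002/1001” concrete: C002 is the first composite element of the BGM segment and 1001 (the document name code) is its first component. The sketch below pulls that value out of a raw EDIFACT interchange. It is not a pipeline component (after the EDI Disassembler a component would work on the XML instead), the class and method names are hypothetical, and it assumes the default separators while ignoring the UNA segment and the ? release character.

```csharp
using System;

// Hypothetical helper: reads component 1001 of composite C002 in the BGM segment.
// Assumes default separators: ' (segment), + (element), : (component).
public static class EdifactBgmReader
{
    public static string GetDocumentNameCode(string interchange)
    {
        foreach (string rawSegment in interchange.Split('\''))
        {
            string segment = rawSegment.Trim();             // drop line breaks between segments
            if (!segment.StartsWith("BGM+", StringComparison.Ordinal))
                continue;

            string[] elements = segment.Split('+');          // elements[0] is the tag "BGM"
            if (elements.Length < 2 || elements[1].Length == 0)
                return null;                                 // C002 not present

            return elements[1].Split(':')[0];                // first component of C002 = 1001
        }
        return null;                                         // no BGM segment found
    }
}
```

For a hypothetical invoice fragment containing `BGM+380+12345+9'` the method returns "380"; for the PAORES sample above, which has no BGM segment, it returns null.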

The short version is that creating a property schema, promoting the field in the schema and having the EDI Disassembler promote the value will not work (as it would with the XML Disassembler). To do this you’ll need a custom pipeline component that promotes the value. Rikard Alard seems to have come to the same conclusion here.

Promote pipeline component to use

If you don’t want to spend time writing your own pipeline component, you can find a nice “promote component” by Jan Eliasen on CodePlex here.

If you, however, expect to receive lots and lots of big messages, you might want to look into changing the component to use XPathReader and the custom stream implementations in Microsoft.BizTalk.Streaming.dll. You can find more detailed information on how to do that in this MSDN article.

by Richard | Apr 12, 2010 | BizTalk Community Blogs via Syndication

Update 2010-04-13: Grant Samuels commented and made me aware that inline scripts might in some cases cause memory leaks. He has some further information here, and you’ll find a KB article here.

I’ve posted a few times before on how powerful I think it is, in complex mapping, to be able to replace the BizTalk Mapper with a custom XSLT script (here’s how). The BizTalk Mapper is nice and productive in simpler scenarios, but in my experience it breaks down in more complex ones and maintaining a good overview is hard. I’m, however, looking forward to the new version of the tool in BizTalk 2010, but until then I’m using custom XSLT when things get complicated.

Custom XSLT, however, lacks a few things one gets used to having, such as scripting blocks and clever functoids. In some previous posts (here and here) I’ve talked about using EXSLT as a way to extend the capabilities of custom XSLT when used in BizTalk.

Bye, bye external libraries – heeeello inline scripts 😉

Another way to achieve much of the same functionality even more easily is to use the embedded scripting supported by the XslTransform class. Using a script block in XSLT is easy, and it is also how the BizTalk Mapper makes it possible to include C# snippets right in your maps.

Have a look at the following XSLT sample:

```xml
<?xml version="1.0" encoding="utf-8"?>
<xsl:stylesheet
    version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
    xmlns:msxsl="urn:schemas-microsoft-com:xslt"
    xmlns:code="http://richardhallgren.com/Sample/XsltCode"
    exclude-result-prefixes="msxsl code">

  <xsl:output method="xml" indent="yes"/>

  <xsl:template match="@* | node()">
    <Test>
      <UniqueNumber>
        <xsl:value-of select="code:GetUniqueId()" />
      </UniqueNumber>
      <SpecialDateFormat>
        <xsl:value-of select="code:GetInternationalDateFormat('11/16/2003')" />
      </SpecialDateFormat>
      <IncludesBizTalk>
        <xsl:value-of select="code:IncludesSpecialWord('This is a text with BizTalk in it', 'BizTalk')" />
      </IncludesBizTalk>
    </Test>
  </xsl:template>

  <msxsl:script language="CSharp" implements-prefix="code">
    //Gets a unique id based on a guid
    public string GetUniqueId()
    {
      return Guid.NewGuid().ToString();
    }

    //Formats US based dates to standard international
    public string GetInternationalDateFormat(String date)
    {
      return DateTime.Parse(date, new System.Globalization.CultureInfo("en-US")).ToString("yyyy-MM-dd");
    }

    //Use regular expression to look for a pattern in a string
    public bool IncludesSpecialWord(String s, String pattern)
    {
      Regex rx = new Regex(pattern);
      return rx.Match(s).Success;
    }
  </msxsl:script>
</xsl:stylesheet>
```

All one has to do is to define a code block, reference the xml-namespace used and start coding! Say goodbye to all those external library dlls!

It’s possible to use a few core namespaces without the full .NET namespace path, but all namespaces are available as long as they are fully qualified. MSDN has a great page with all the details here.
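Since the script functions are plain C#, one way to test them is to copy them into an ordinary class and call them directly, outside the map. The sketch below does that; the class name simply mirrors the `code` prefix and is otherwise arbitrary. It deviates from the script block in two small ways: it uses regular using directives instead of relying on the script block’s default imports, and it formats the output date with the invariant culture so the result doesn’t depend on the machine’s regional settings.

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

// The three script functions, moved into a plain class for unit testing.
public class XsltCode
{
    // Gets a unique id based on a GUID.
    public string GetUniqueId()
    {
        return Guid.NewGuid().ToString();
    }

    // Parses a US formatted date (month first) and returns it as yyyy-MM-dd.
    public string GetInternationalDateFormat(string date)
    {
        return DateTime.Parse(date, new CultureInfo("en-US"))
                       .ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);
    }

    // Uses a regular expression to look for a pattern in a string.
    public bool IncludesSpecialWord(string s, string pattern)
    {
        return Regex.IsMatch(s, pattern);
    }
}
```

For example, `new XsltCode().GetInternationalDateFormat("11/16/2003")` returns "2003-11-16", which is the value the map writes to the SpecialDateFormat element.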

by Richard | Mar 17, 2010 | BizTalk Community Blogs via Syndication

Update 2012-03-28: This bug was fixed in the CU2 update for BizTalk 2006 R2 SP 1. Further details can be found here and here.

Update 2010-04-12: It seems there is a patch coming that should fix all the bugs in SP1; I’ve been told it should be public within a week or two. I’ll make sure to update the post when I know more. Our problem is still unsolved.

Late Thursday night last week we decided to upgrade one of our largest BizTalk 2006 R2 environments to the recently released Service Pack 1. The installation went fine and everything looked good.

… But after a while we started to see loads of error messages like the one below.

```
Unable to cast COM object of type 'System.__ComObject' to interface type
'Microsoft.BizTalk.PipelineOM.IInterceptor'. This operation failed because the
QueryInterface call on the COM component for the interface with IID
'{24394515-91A3-4CF7-96A6-0891C6FB1360}' failed due to the following error: Interface not
registered (Exception from HRESULT: 0x80040155).
```

After lots of investigation we found out that we got the errors on ports matching the following criteria:

- Send port
- Uses the SQL Server adapter
- Has a map on the port
- Has a BAM tracking profile associated with the port

In our environment the tracking on the port is on “SendDateTime” from the “Messaging Property Schema”. We haven’t looked further into whether any BAM tracking associated with the port causes the error, or whether it only has to do with some specific properties.

Reproduce it to prove it!

I’ve set up a really simple sample solution to reproduce the problem. Download it here.

The sample receives an XML file and, on the send port, maps it to a schema made to match the stored procedure. It also uses a dead simple tracking definition and profile to track a milestone on the send port.

Sample solution installation instructions

- Create a database called “Test”.
- Run the two SQL scripts (“TBL_CreateIds.sql” and “SP_CreateAddID.sql”) in the solution to create the necessary table and stored procedure.
- Deploy the BizTalk solution using a simple deploy from Visual Studio.
- Apply the binding file (“Binding.xml”) found in the solution.
- Run the BM.exe tool to deploy the BAM tracking definition. It should look something like: `bm.exe deploy-all -definitionfile:BAMSimpleTestTrackingDefinition.xml`
- Start the Tracking Profile Editor, open “SimpleTestTrackingProfile.btt” that you’ll find in the solution and apply the profile.
- Drop the test file (“InSchema_output.xml”) in the receive folder.

The sample solution fails on an environment with SP1 but works just fine on a “clean” BizTalk 2006 R2 environment.

What about you?

I haven’t had time to test this on a BizTalk 2009 environment but I’ll update the post as soon as I get around to it.

We also currently have a support case with Microsoft on this, and I’ll make sure to let you know as soon as something comes out of it. Until then, I’d be really grateful to hear from any of you who see the same behavior in your BizTalk 2006 R2 SP1 environment.

by Richard | Feb 26, 2010 | BizTalk Community Blogs via Syndication

Pure XSLT is very powerful, but it definitely has its weaknesses (I’ve previously written about how to extend XSLT using mapping and BizTalk here) … One of them is handling numbers that use a decimal separator other than a point (“.”).

Take for example the XML below

```xml
<Prices>
  <Price>10,1</Price>
  <Price>10,2</Price>
  <Price>10,3</Price>
</Prices>
```

Just using the XSLT sum() function on these values will give us “NaN”, since a value like “10,1” isn’t a valid XPath number. To solve it we’ll have to use recursion, something like the sample below.

The sample selects the node-set to summarize and sends it to the “SummarizePrice” template. The template then adds the value of the first Price element, after translating the comma to a point. It then checks if it’s the last value and, if not, calls itself recursively with the next value. It keeps adding to the total amount until it reaches the last value of the node-set.

```xml
<xsl:template match="Prices">
  <xsl:call-template name="SummarizePrice">
    <xsl:with-param name="nodes" select="Price" />
  </xsl:call-template>
</xsl:template>

<xsl:template name="SummarizePrice">
  <xsl:param name="index" select="1" />
  <xsl:param name="nodes" />
  <xsl:param name="totalPrice" select="0" />
  <!-- Replace the comma decimal separator with a point so the value can be used as a number -->
  <xsl:variable name="currentPrice" select="translate($nodes[$index], ',', '.')"/>
  <xsl:choose>
    <!-- Last node: output the accumulated total -->
    <xsl:when test="$index=count($nodes)">
      <xsl:value-of select="$totalPrice + $currentPrice"/>
    </xsl:when>
    <!-- Otherwise: call the template again with the next index and the new running total -->
    <xsl:otherwise>
      <xsl:call-template name="SummarizePrice">
        <xsl:with-param name="index" select="$index + 1" />
        <xsl:with-param name="totalPrice" select="$totalPrice + $currentPrice" />
        <xsl:with-param name="nodes" select="$nodes" />
      </xsl:call-template>
    </xsl:otherwise>
  </xsl:choose>
</xsl:template>
```

Simple but a bit messy and nice to have for future cut and paste 😉
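For readers more at home in C#, here is the same recursion written as a C# method. It mirrors the recursive template step by step rather than being how one would normally sum values in C#. The class and method names are hypothetical, and it uses decimal, while XPath 1.0 numbers are doubles, so the XSLT result may show small floating-point rounding differences.

```csharp
using System;
using System.Globalization;

// Mirror of the recursive XSLT template. XSLT 1.0 variables cannot be
// reassigned, so the running total travels as a parameter.
public static class PriceSummarizer
{
    public static decimal SummarizePrice(string[] nodes, int index = 1, decimal totalPrice = 0m)
    {
        // Guard added in this sketch: with an empty node-set the template's
        // $index=count($nodes) test is never true, so it keeps recursing.
        if (nodes.Length == 0)
            return 0m;

        // translate($nodes[$index], ',', '.'); XPath positions are 1-based.
        decimal currentPrice = decimal.Parse(nodes[index - 1].Replace(',', '.'), CultureInfo.InvariantCulture);

        if (index == nodes.Length)
            return totalPrice + currentPrice;   // last node: output the total

        // Otherwise recurse with the next index and the new running total.
        return SummarizePrice(nodes, index + 1, totalPrice + currentPrice);
    }
}
```

With the prices from the sample XML, `PriceSummarizer.SummarizePrice(new[] { "10,1", "10,2", "10,3" })` returns 30.6.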

by Richard | Feb 22, 2010 | BizTalk Community Blogs via Syndication

Recently a few really good resources on streaming pipeline handling have been published. You can find some of them here and here.

The Optimizing Pipeline Performance MSDN article has two great examples of how to use some of the Microsoft.BizTalk.Streaming.dll classes. The Execute method of the first example looks something like below.

```csharp
public IBaseMessage Execute(IPipelineContext context, IBaseMessage message)
{
    try
    {
        ...
        IBaseMessageContext messageContext = message.Context;

        if (string.IsNullOrEmpty(xPath) && string.IsNullOrEmpty(propertyValue))
        {
            throw new ArgumentException(...);
        }

        IBaseMessagePart bodyPart = message.BodyPart;
        Stream inboundStream = bodyPart.GetOriginalDataStream();

        // VirtualStream keeps data in memory up to thresholdSize, then switches to a temporary file
        VirtualStream virtualStream = new VirtualStream(bufferSize, thresholdSize);
        ReadOnlySeekableStream readOnlySeekableStream = new ReadOnlySeekableStream(inboundStream, virtualStream, bufferSize);

        XmlTextReader xmlTextReader = new XmlTextReader(readOnlySeekableStream);
        XPathCollection xPathCollection = new XPathCollection();
        XPathReader xPathReader = new XPathReader(xmlTextReader, xPathCollection);
        xPathCollection.Add(xPath);

        bool ok = false;

        // Stream through the whole document, promoting only the first match
        while (xPathReader.ReadUntilMatch())
        {
            if (xPathReader.Match(0) && !ok)
            {
                propertyValue = xPathReader.ReadString();
                messageContext.Promote(propertyName, propertyNamespace, propertyValue);
                ok = true;
            }
        }

        // Rewind and hand the seekable stream back to the message
        readOnlySeekableStream.Position = 0;
        bodyPart.Data = readOnlySeekableStream;
    }
    catch (Exception ex)
    {
        if (message != null)
        {
            message.SetErrorInfo(ex);
        }
        ...
        throw; // rethrow without resetting the stack trace
    }

    return message;
}
```

We used this example as a base when developing something very similar in a recent project. At first everything worked fine, but after a while we started getting an error saying:

Cannot access a disposed object. Object name: DataReader

It took us a while to figure out the real problem. Everything worked fine when sending in simple messages, but as soon as we used the code in a pipeline where we also debatched messages, we got the “disposed object” problem.

It turns out that when we debatched messages, the Execute method of the custom pipeline component ran multiple times, once for each sub-message. This forced the .NET garbage collector to run.

The GC found the XmlTextReader that we used to read the stream unreferenced and decided to destroy it.

The problem is that this also disposes the readOnlySeekableStream that we had assigned to the message body part’s Data property!

It’s then the BizTalk End Point Manager (EPM) that throws the error as it hits a disposed stream object when trying to read the message body and save it to the BizTalkMsgBox!

ResourceTracker to the rescue!

Turns out that the BizTalk pipeline context object has a nice little class connected to it called the ResourceTracker. This object has an AddResource method that makes it possible to add an object; the context then holds a reference to this object, which tells the GC not to dispose it!

So when adding the line below before the end of the method, everything works fine, even when debatching messages!

```csharp
context.ResourceTracker.AddResource(xmlTextReader);
```

by Richard | Dec 11, 2009 | BizTalk Community Blogs via Syndication

As all of you know, the number one time-consuming task in BizTalk is deployment. How many times have you worked your way through the steps below (and, even more interesting, how much time have you spent on them)?

- Build
- Create application
- Deploy schemas
- Deploy transformations
- Deploy orchestrations
- Deploy components
- Deploy pipelines
- Deploy web services
- Create the external databases
- Change config settings
- GAC libraries
- Apply bindings on applications
- Bounce the host instances
- Send test messages
- Etc, etc …

Not only is this time consuming, it’s also drop-dead boring and therefore very error prone: small mistakes that take ages to find and fix.

The good news, however, is that the steps are quite easy to script. We use a combination of a couple of different open-source MsBuild libraries (like this and this) and have created our own little build framework. There is also the BizTalk Deployment Framework by Scott Colestock and Thomas F. Abraham, which looks great and is very similar to what we have (ok, ok, it’s a bit more polished …).

Binding files problem

Keeping the binding files in a source control system is of course a super-important part of the whole build concept. If something goes wrong and you need to roll back, or even rebuild the whole solution, having the right version of the binding file is critical.

A problem, however, is that if someone has made changes to the configuration via the administration console and forgotten to export the bindings to source control, we’ll deploy an old version of the binding when redeploying. This can be a huge problem when, for example, addresses have changed on ports and we redeploy old configurations.

What!? I fixed that configuration issue in production last week and now it’s back …

So how can we make sure that the binding file is up to date when deploying?

One solution is to export the current binding file and compare it to the one we’re about to deploy, in a “pre-deploy” step using a custom MsBuild task.

Custom build task

Custom build tasks in MsBuild are easy to write; a good explanation of how to write one can be found here. The custom task below does the following:

- Requires a path to the binding file being deployed.
- Requires a path to the currently deployed binding file to compare against.
- Uses a regular expression to strip out the time stamp in the files, as this is the time the file was exported and will otherwise differ between the files.
- Compares the content of the files and returns a boolean saying whether they are equal or not.

```csharp
using System.IO;
using System.Text.RegularExpressions;
using Microsoft.Build.Framework;
using Microsoft.Build.Utilities;

namespace My.Shared.MSBuildTasks
{
    public class CompareBindingFiles : Task
    {
        // Matches the export time stamp, which differs between any two exports
        private static readonly Regex TimestampPattern = new Regex("<Timestamp>.*</Timestamp>");

        [Required]
        public string BindingFileToInstallPath { get; set; }

        [Required]
        public string BindingFileDeployedPath { get; set; }

        // True if the files are equal once the time stamps are removed
        [Output]
        public bool Value { get; set; }

        public override bool Execute()
        {
            Value = GetStrippedXmlContent(BindingFileDeployedPath)
                .Equals(GetStrippedXmlContent(BindingFileToInstallPath));
            return true; // the task itself succeeded; the comparison result is in Value
        }

        private static string GetStrippedXmlContent(string path)
        {
            // File.ReadAllText opens, reads and closes the file in one call
            string content = File.ReadAllText(path);
            return TimestampPattern.Replace(content, string.Empty);
        }
    }
}
```
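When the build breaks, the task only tells you that the files differ. A hypothetical helper along the lines below (not part of the task above; the names are made up for illustration) strips the time stamp the same way and reports the first differing line, which makes it quicker to find what was changed in the administration console. Unlike the task, it also normalizes line endings before comparing.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical diagnostic helper for binding file contents (not file paths).
public static class BindingFileDiff
{
    private static readonly Regex TimestampPattern = new Regex("<Timestamp>.*</Timestamp>");

    // Returns the 1-based number of the first differing line, or 0 if the
    // contents are equal once time stamps are stripped.
    public static int FirstDifferentLine(string deployedContent, string toInstallContent)
    {
        string[] deployed = SplitLines(TimestampPattern.Replace(deployedContent, string.Empty));
        string[] toInstall = SplitLines(TimestampPattern.Replace(toInstallContent, string.Empty));

        int common = Math.Min(deployed.Length, toInstall.Length);
        for (int i = 0; i < common; i++)
        {
            if (!string.Equals(deployed[i], toInstall[i], StringComparison.Ordinal))
                return i + 1;
        }

        // One file is a prefix of the other: the first extra line is the difference.
        return deployed.Length == toInstall.Length ? 0 : common + 1;
    }

    private static string[] SplitLines(string content)
    {
        return content.Replace("\r\n", "\n").Split('\n');
    }
}
```

A result of 0 corresponds to the task returning true; any other value points at the line to look at in the exported Temp_ file.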

Using the build task in MsBuild

After compiling the task above, we have to reference the dll in a UsingTask element like the one below.

```xml
<UsingTask AssemblyFile="My.Shared.MSBuildTasks.dll" TaskName="My.Shared.MSBuildTasks.CompareBindingFiles"/>
```

We can then do the following in our build script!

```xml
<!--
  This target exports the current binding file, saves it as a temporary binding file and uses
  the custom task to compare the exported file against the one we're about to deploy.
  A boolean value is returned as IsValidBindingFile telling us if they are equal or not.
-->
<Target Name="IsValidBindingFile" Condition="'$(ApplicationExists)'=='True'">
  <Message Text="Comparing binding file to the one deployed"/>
  <Exec Command='BTSTask ExportBindings /ApplicationName:$(ApplicationName) "/Destination:Temp_$(BindingFile)"'/>
  <CompareBindingFiles BindingFileToInstallPath="$(BindingFile)"
                       BindingFileDeployedPath="Temp_$(BindingFile)">
    <Output TaskParameter="Value" PropertyName="IsValidBindingFile" />
  </CompareBindingFiles>
  <Message Text="Binding files are equal: $(IsValidBindingFile)" />
</Target>

<!--
  This pre-build step runs only if the application already exists. If so, it checks whether the
  binding file we try to deploy is equal to the one deployed. If not, this step breaks the build.
-->
<Target Name="PreBuild" Condition="'$(ApplicationExists)'=='True'" DependsOnTargets="ApplicationExists;IsValidBindingFile">
  <!-- Break the build if the deployed binding file doesn't match the one being deployed -->
  <Error Condition="'$(IsValidBindingFile)' == 'False'" Text="Binding file is not equal to the deployed one" />
  <!-- All other pre-build steps go here -->
</Target>
```

So we now break the build if the binding file being deployed isn’t up to date!

This is far from rocket science but can potentially save you from making some stupid mistakes.

by Richard | Nov 13, 2009 | BizTalk Community Blogs via Syndication

There are a few really good blog posts that explain BAM, like this one from Saravana Kumar and this one by Andy Morrison. They both do a great job explaining the complete BAM process in detail.

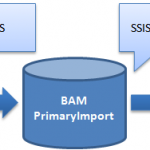

This post will however focus on some details in the last step of the process that has to do with archiving the data. Let’s start with a quick walk-through of the whole process.

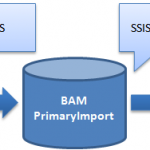

BAM Tracking Data lifecycle

- The tracked data is intercepted in the BizTalk process and written to the “BizTalk MsgBox” database.
- The TDDS service reads the messages and moves them to the correct table in the “BAM Primary Import” database.
- The SSIS package for the current BAM activity has to be triggered (this is a manual job or something one has to schedule). When executing, the job does a couple of things:
  - Creates a new partitioned table with a name that is a combination of the active table name and a GUID.
  - Moves data from the active table to this new table. The whole point is of course to keep the active table as small and efficient as possible for writing new data to.
  - Adds the newly created table to a database view definition. It is this view we can then use to read all tracked data (including data from the active and partitioned tables).
  - Reads from the “BAM Metadata Activities” table to find out the configured time to keep data in the BAM Primary Import database. This value is called the “online window”.
  - Moves data that is older than the online window to the “BAM Archive” database (or deletes it if you have that option).

Sounds simple, doesn’t it? I was, however, surprised to see that my data was not moved to the BAM Archive database, even though it was clearly outside of the configured online window.

So, what data is moved to the BAM Archive database then?

Below there is a deployed tracking activity called “SimpleTracking” with an online window of 7 days. Ergo, all data that is older than 7 days should be moved to the BAM Archive database when we run the SSIS job for the activity.

If we then look at the “BAM Completed” table for this activity we see that all the data is much older than 7 days as today’s date is “13-11-2009”.

So if we run the SSIS job, these rows should be moved to the archive database. Right? Let’s run the SSIS job.

But after executing the SSIS job, the BAM Archive database is still empty! All we see are the partitioned tables that were created as part of the first steps of the SSIS job. All data from the active table has been moved to the new partitioned table, but none of it has been moved to the archive database.

It turns out that the SSIS job does not look at the “Last Modified” values of each row at all, but at the “Creation Time” of the partitioned table in the “BAM MetaData Partitions” table that is shown below.

The idea behind this is of course to avoid reading from potentially huge tables to find the rows that should be moved. But it also means that it will take another 7 days before the data in the partitioned table is actually moved to the archive database.

This might be a problem if you haven’t scheduled the SSIS job to run from day one, your BAM Primary Import database is starting to get too big and you quickly have to move data over to the archive. All you then have to do is of course to change the “Creation Time” value in the BAM Metadata Partitions table so that it is outside of the online window for the activity.
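The difference between what one might expect and what the SSIS job does can be summed up in a small model. This is a reading of the behavior described in this post, not code taken from the generated SSIS package; the class and method names are hypothetical, and treating the window boundary as “strictly older than” is an assumption.

```csharp
using System;

// Hypothetical model of the two archiving rules (not the SSIS package's code).
public static class BamArchiveRule
{
    // What one might expect: each row leaves the online window based on
    // its own "Last Modified" value.
    public static bool IsRowOutsideWindow(DateTime lastModified, TimeSpan onlineWindow, DateTime now)
    {
        return lastModified < now - onlineWindow;
    }

    // What the job actually does: a whole partition is archived only when
    // the partition's "Creation Time" is outside the online window,
    // however old the rows inside it are.
    public static bool IsPartitionArchived(DateTime partitionCreationTime, TimeSpan onlineWindow, DateTime now)
    {
        return partitionCreationTime < now - onlineWindow;
    }
}
```

With the 7 day online window above, rows last modified weeks ago are outside the window, but a partition created on 13-11-2009 stays in the BAM Primary Import database until after 20-11-2009, which is why moving the “Creation Time” back makes the data eligible for archiving right away.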