by Nick Hauenstein | Jul 31, 2017 | BizTalk Community Blogs via Syndication

We’re living in exciting times, where releases of new functionality for all of our favorite software (e.g., Logic Apps, Visual Studio Team Services, and BizTalk Server) aren’t happening every 2 years; they’re happening every 2 weeks. For all of the benefits that such a release cadence brings, it also introduces the dilemma of how to both be productive with the technology and keep up to date with all of the new features.

For example, while I was at the Integrate conference this year in London, many asked how it’s possible to keep our live 5-day Logic Apps class up-to-date. “Certainly it must be out-of-date if it’s not small recorded videos, right?” Actually, we update the class before each run to incorporate the latest product updates.

In this post, I want to share a strategy that we at QuickLearn have found works to stay on top of the latest technology changes, and give you the tools to implement it yourself.

Enabling Continuous Education

The first step in working towards staying up-to-date with the latest and greatest a technology offers will be locating the ever-evolving list of what’s new. Microsoft maintains a feed of new features for Logic Apps, and even a feed of new features for Visual Studio Team Services (another technology that we teach here). The feed for Logic Apps will be updated less frequently than the listing of features in the Azure Portal, however.

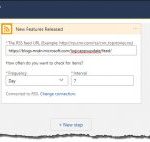

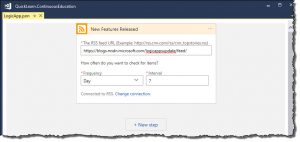

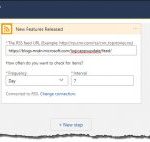

Let’s use one of those feeds, and build a Logic App that notifies us when there is a change by using the RSS trigger to queue up an instance of that Logic App whenever a new item is published to the RSS feed.
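The RSS trigger does the change detection for us, but the underlying idea is simple enough to sketch: remember which feed items you have already seen, and treat anything else as new. Below is a minimal Python sketch of that idea, assuming a standard RSS 2.0 layout. The sample feed, its item ids, and the `new_items` helper are all made up for illustration; this is not how the connector itself is implemented.

```python
import xml.etree.ElementTree as ET

# Made-up sample feed for illustration; a real run would fetch the feed URL.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Product updates</title>
    <item><guid>update-1</guid><title>Feature A</title></item>
    <item><guid>update-2</guid><title>Feature B</title></item>
  </channel>
</rss>"""


def new_items(feed_xml, seen_ids):
    """Return (guid, title) pairs for items whose guid is not in seen_ids."""
    root = ET.fromstring(feed_xml)
    fresh = []
    for item in root.iter("item"):
        # Fall back to the link when a feed item carries no guid.
        guid = item.findtext("guid") or item.findtext("link")
        if guid and guid not in seen_ids:
            fresh.append((guid, item.findtext("title", default="")))
    return fresh


seen = {"update-1"}  # ids that were already turned into backlog items
for guid, title in new_items(SAMPLE_FEED, seen):
    print(f"New backlog item: {title}")
    seen.add(guid)
```

Each new item found this way corresponds to one run of the Logic App, and therefore to one new work item on the backlog.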

Creating a Learning Backlog

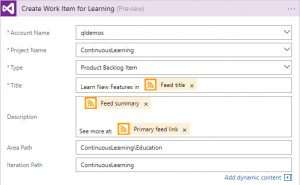

At QuickLearn, we add research items for continuous education as Product Backlog Items in VSTS. They sit alongside the core work of building products (in our case, courseware), but serve to carve out some capacity to build up the team itself. Each item carries with it the responsibility to quickly research and/or build a proof of concept using the new feature, or features, whilst also taking extensive notes to share with the team. I made all of my notes public for the release of BizTalk Server 2013 and BizTalk Server 2013 R2, and I wrote them directly in Live Writer to facilitate this. These days, I use OneNote for the same purpose.

Your organization might not want to allocate company time for such efforts. If so, you can always set up a personal VSTS account and do such research and experimentation on your own time.

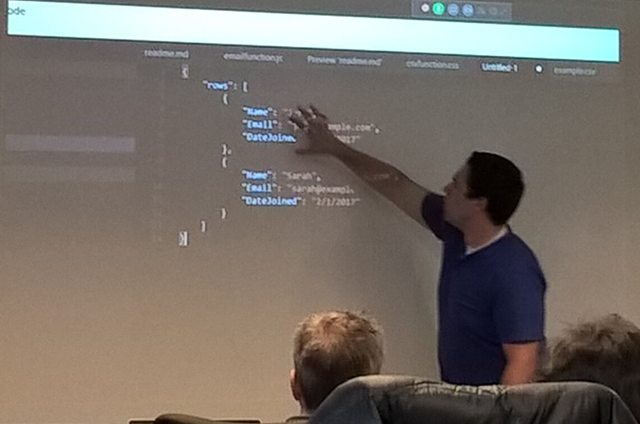

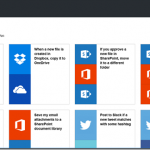

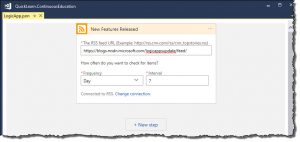

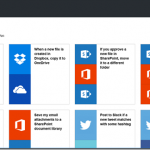

Thankfully, Logic Apps has a connector for VSTS, which makes building up a learning backlog easy. The work items themselves will serve as our notifications of new product features.

Working from the Continuous Learning Backlog

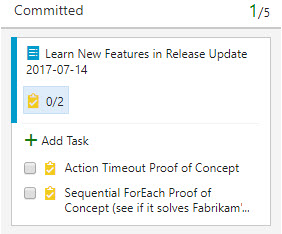

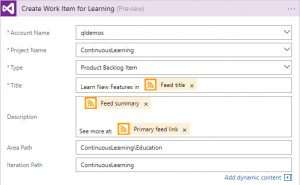

Once the Logic App runs (just the single trigger and single action), it produces backlog items to learn about new features in Logic Apps. In the way that I’ve configured it, it runs every 7 days and will automatically populate your backlog with any new features it finds. Your team can decide whether or not to investigate these features further, depending on what they are and whether they will help in future efforts on your projects.

If any features in the release sound helpful and warrant further investigation, you can break out as many tasks as are required to investigate applicable features to see what value you can derive from them. I like taking the approach of building a 30-60 minute proof of concept for each feature, rather than just reading about it. Each team member can take a relevant feature for a test drive, and present it during sprint review.

For QuickLearn, backlog items like this are a common occurrence. We update our Logic Apps class before each delivery to incorporate all of the latest and greatest features that we can fit. I always love the week of class, being able to share all of the fun and fresh goodies that the product team has cooked up.

I Need to Get Up to Speed Now

If you want a leg up, and a way to get up-to-speed quickly, there are some good opportunities coming up to do just that:

I’ll be speaking at each of those, and hope to see you there! Good luck on your continuously expanding cloud integration journey!

by Nick Hauenstein | Mar 28, 2017 | BizTalk Community Blogs via Syndication

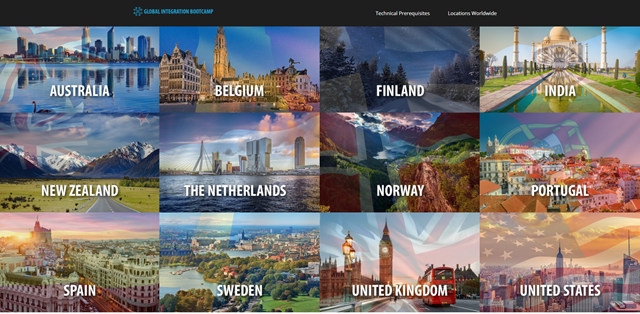

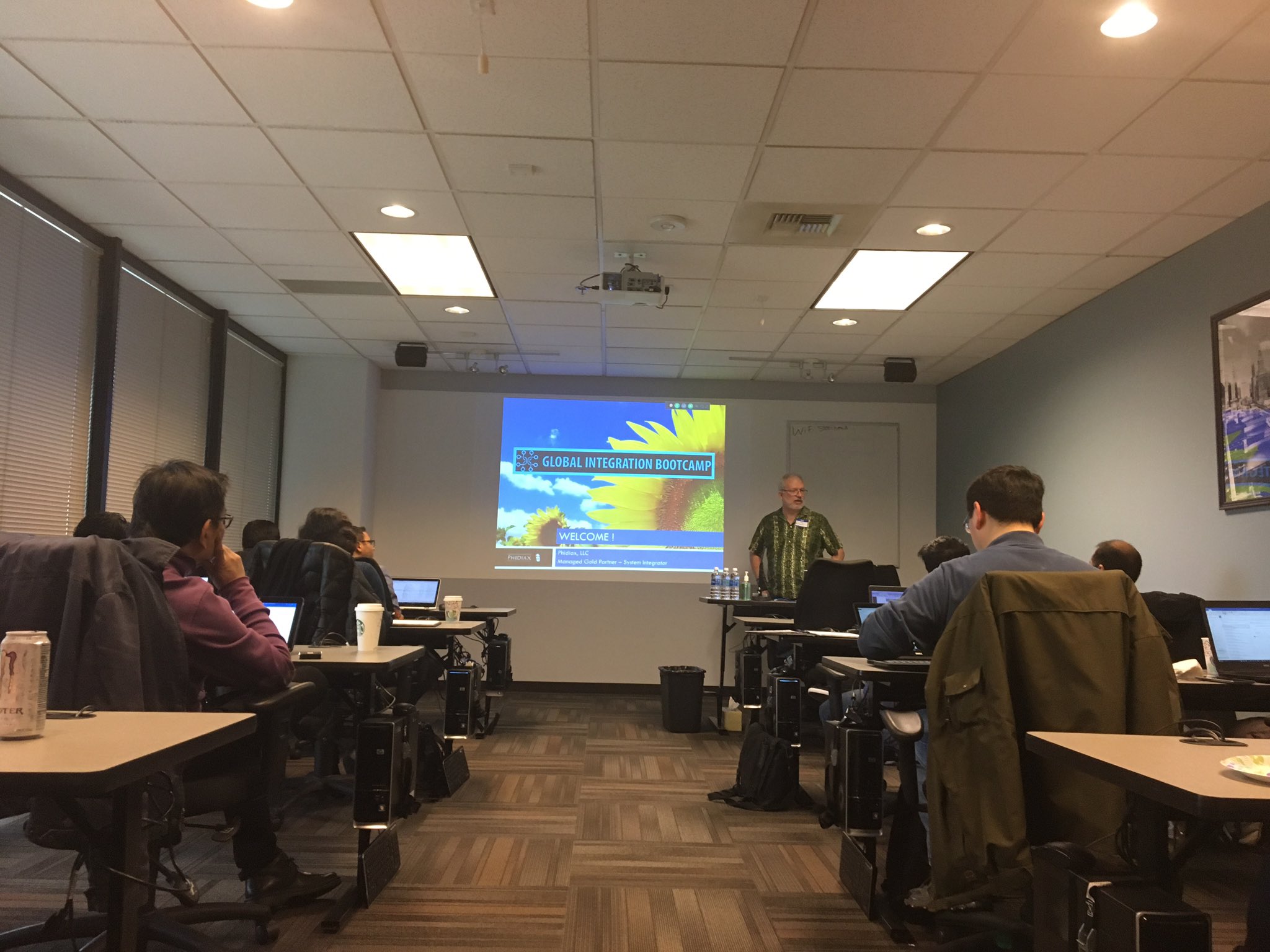

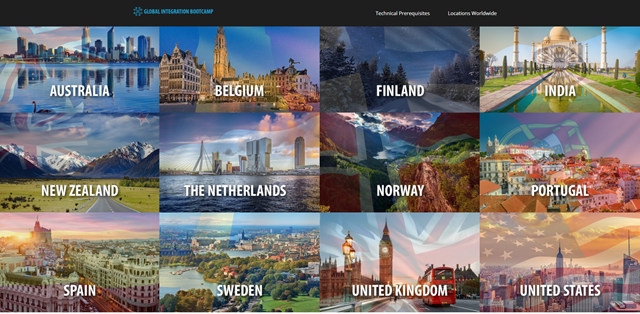

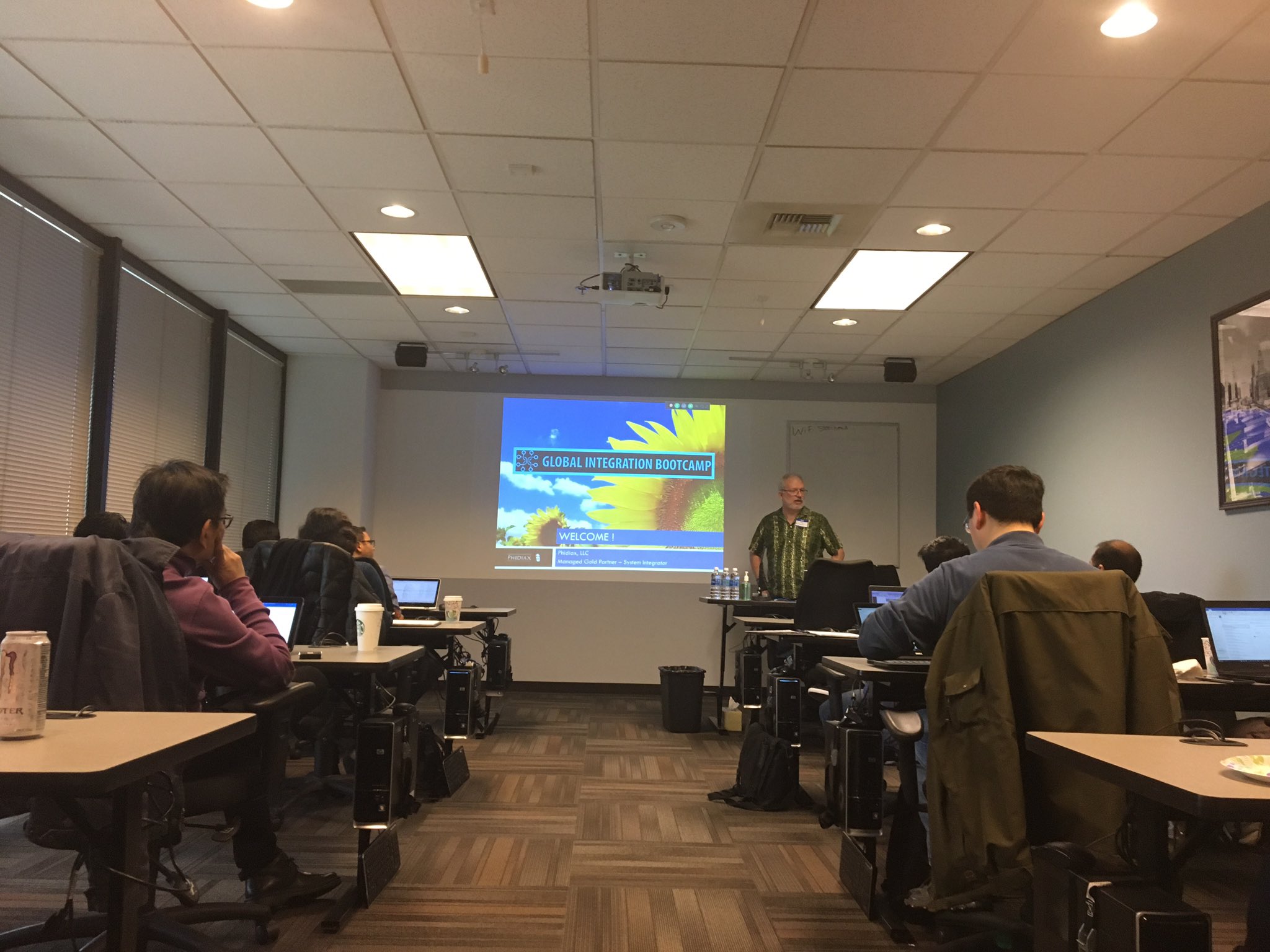

The Global Integration Bootcamp was held for the first time this last week, with events spanning 12 countries and 16 locations, and over 650 attendees. If you went to either the Seattle, WA location (here at QuickLearn Training’s headquarters) or the New York location, then you may have even run into one of our instructors!

In the weeks leading up to the day of the bootcamp, Tom Canter with Phidiax arranged a speaker line-up and refreshments, and got the word out about the event, while over here at QuickLearn Training, we prepared to transform our classrooms into an event space. When the day arrived, all were in good spirits and ready to share knowledge and get deep into real-world possibilities for hybrid cloud integrations using BizTalk Server and Logic Apps.

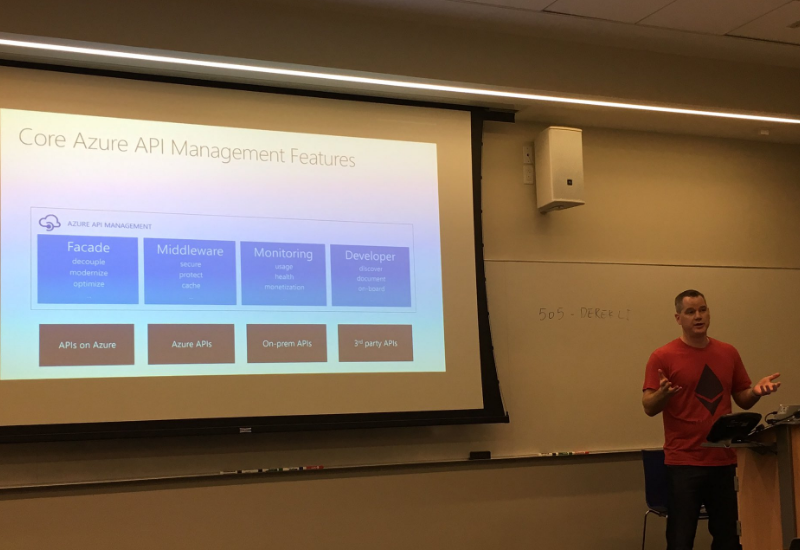

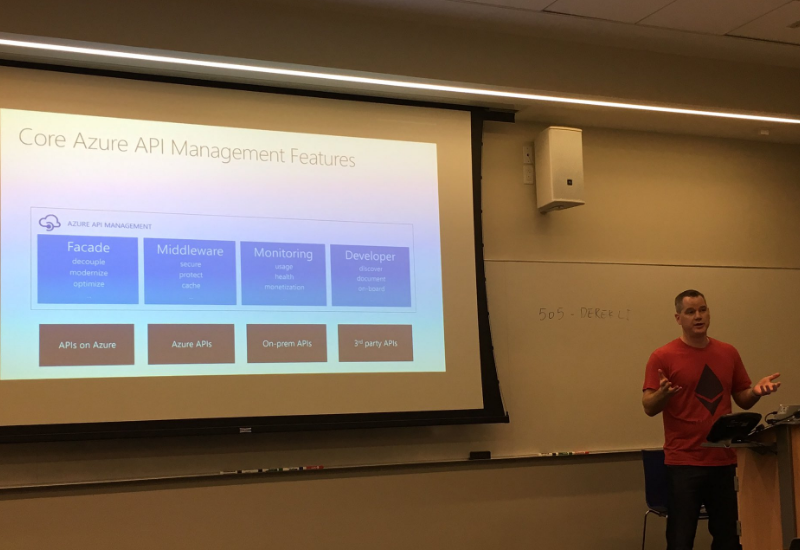

Tom kicked off the event with a keynote and introductions, and got everyone primed and excited for the day. Next up was Tord showing off some of the latest and greatest features in BizTalk Server 2016 when used in concert with API Management, along with a few surprises. I’m not sure what I’m allowed to share and what I’m not, so I will just leave that short, sweet, and to the point.

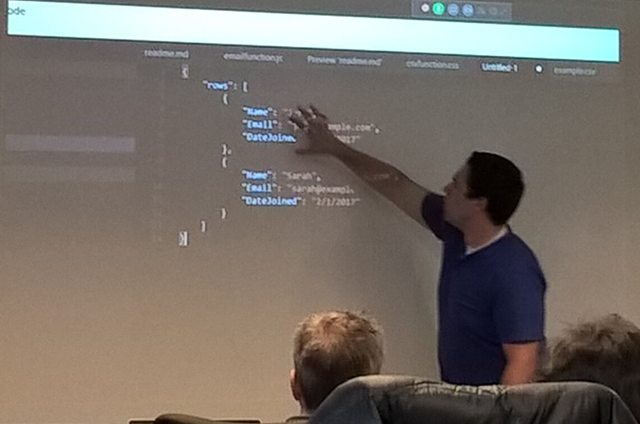

Gyanendra Gautam teamed up with Ashish Bhambhani (co-authors of the freshly published Robust Cloud Integration with Azure) to show some really slick B2B scenarios with Logic Apps and the Enterprise Integration Pack. Trading Partner and Agreement configuration were shown, along with a special surprise that no one had ever seen before – the world’s smallest X12 834 interchange! It was both a fun and informative session, and if you haven’t at the very least experimented with EDI in Logic Apps – do it. You’ll find your BizTalk Server experience in the same will serve you quite well.

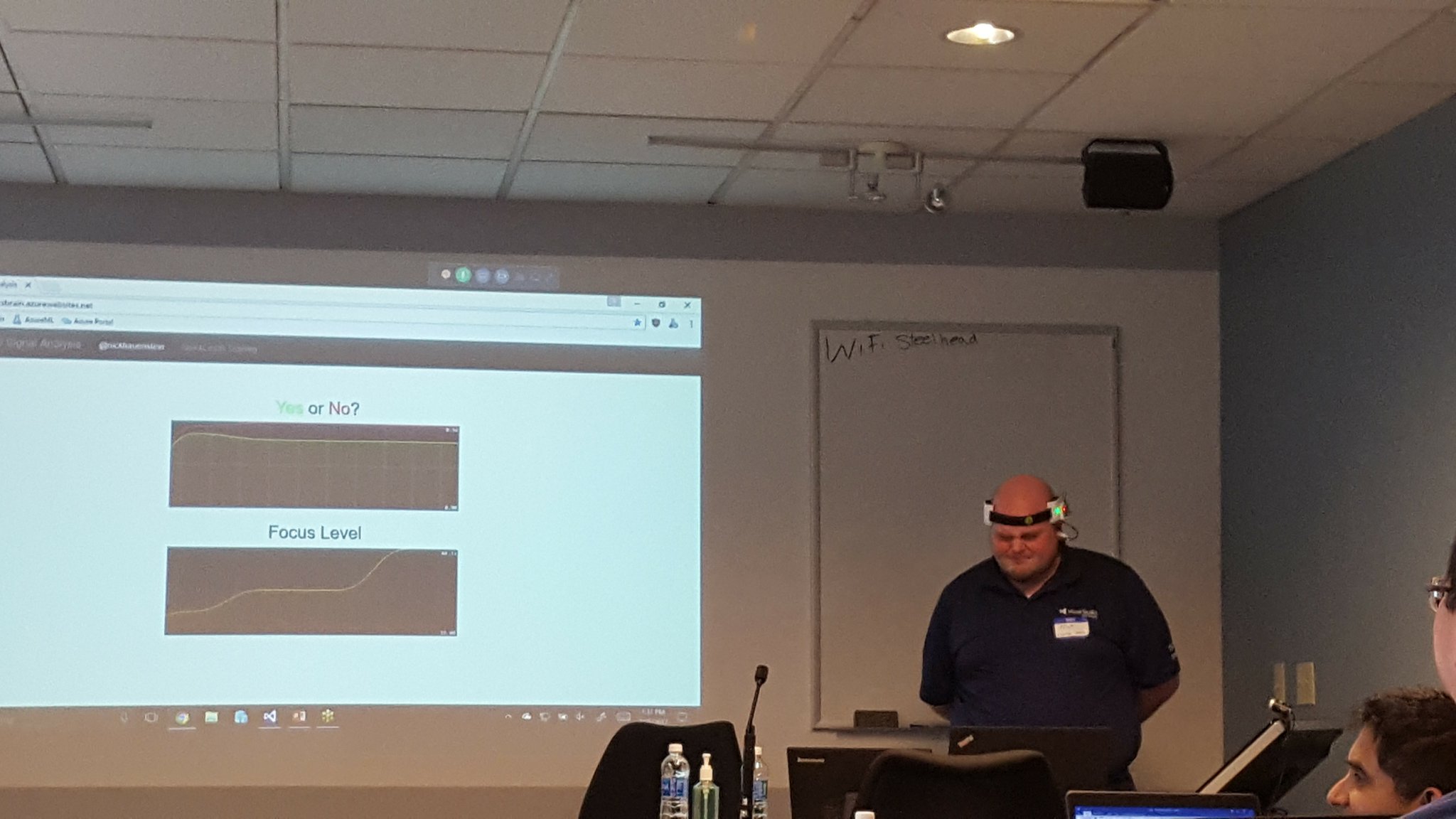

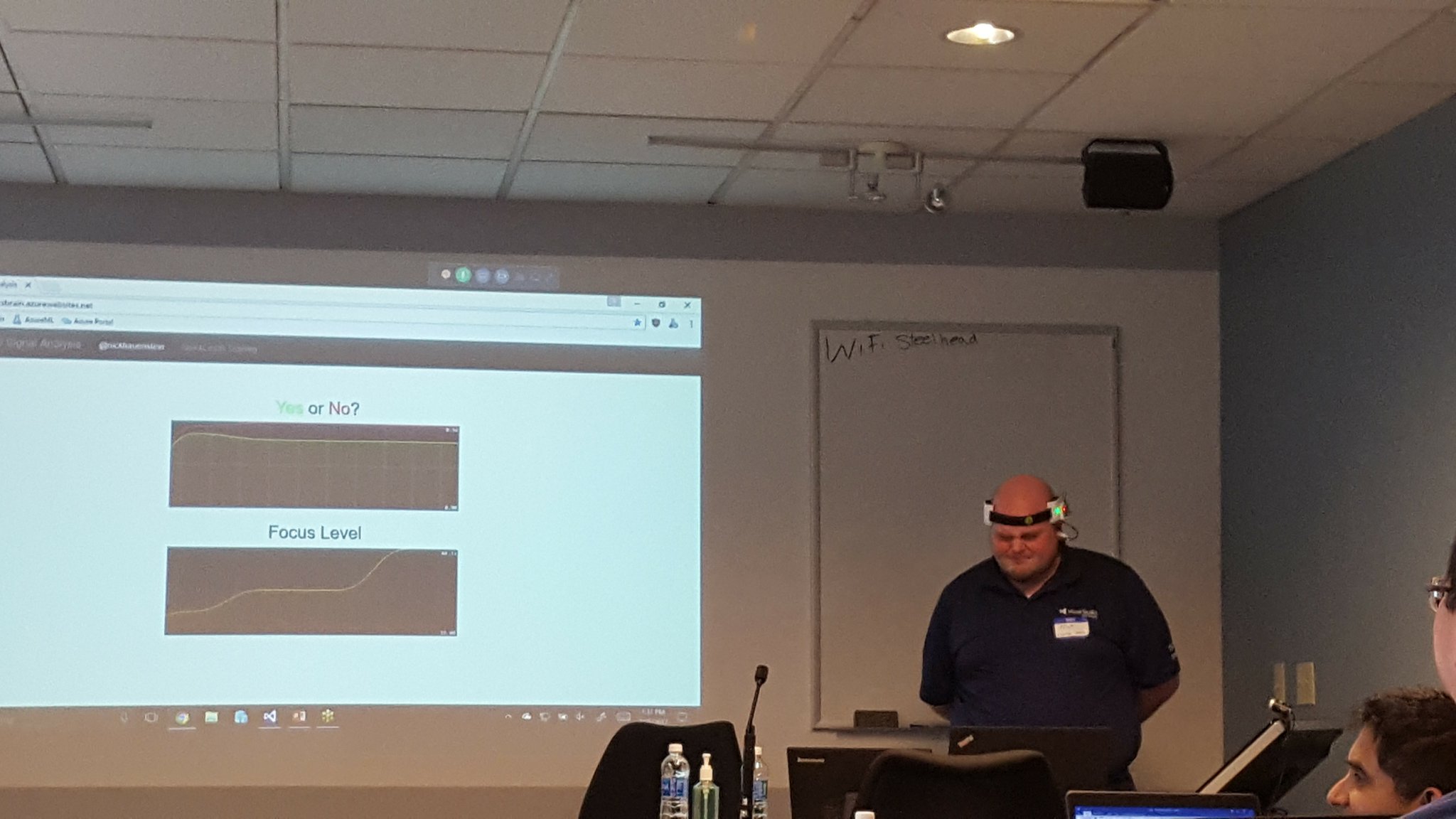

I was up next, wearing a contraption to be explained at a later date. The focus of my talk was to demystify machine learning – and to demonstrate that it’s not just for the sexy applications (e.g., self-driving cars, HoloLens, whatever it is that I’m wearing, etc…). I spent the bulk of my session walking through a simple Hello Azure ML world demo that showed how one could train, operationalize, and then call Azure ML models from within Azure Logic Apps. It is my intention to further refine the models used in this talk and share the full talk, sample code, hardware diagrams, etc. in the summer of 2017.

After I was carted away in a straitjacket, Richard Seroter gave a really cool talk on the intersection between microservices and messaging – and how, when using both, one can realize seamless multi-cloud scenarios. It was a very well executed talk with fairly complex demos involving Node.js services, Java services (built using Spring Boot), and Logic Apps.

Undeterred by a ruthless cold that had claimed his voice, Jeff Hollan gave an excellent talk on the concept of serverless applications. He opened with an analogy comparing owning/renting/hiring a car with the equivalent on the server-side. He then looked to where serverless would lead the development of applications (i.e., API composition).

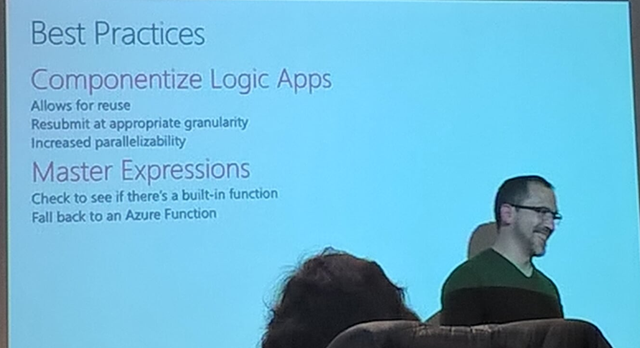

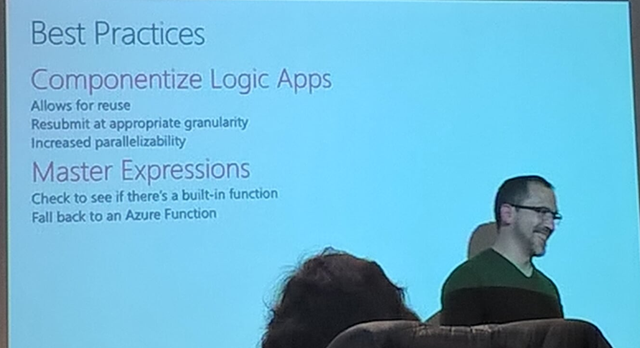

Kevin Lam wrapped up the day by going through a list of Enterprise Integration Patterns and the implementation required to make each happen on the Logic Apps side. He also addressed how to increase throughput for Service Bus connections, how to control parallelism, advanced scheduling, and other fun goodies that I will likely put to quick use (and maybe follow up with some blog posts on later). One thing did come as quite the surprise though – Sequential Convoys!

It was a great time, and I hope to be able to share more when I can. Thanks to everyone who attended, and I really hope you all had as great a time as we did.

QuickLearn Training’s offices were just one of many locations for the event. Below is a short gallery of photos gathered from Twitter of other venues.

Belgium

Brisbane

Chicago

Finland

London

Melbourne

New York

(I haven’t been able to find a picture with the camera pointed the other way, but I get it, @wearsy is a model now after all).

New Zealand

Oslo

Portugal

Rotterdam

Sweden

by Nick Hauenstein | Jan 25, 2017 | BizTalk Community Blogs via Syndication

At the end of last week, a few of us from QuickLearn Training hosted a webinar with an overview of a few of the new features in BizTalk Server 2016. This post serves as a proper write-up of the feature that I shared and demonstrated – Shared Access Signature Support for Relay Adapters. If you missed it, we’ve made the full recording available on YouTube here. We’ve also clipped out just the section on Shared Access Signature Support for Relay Adapters over here – which might be good to watch before reading through this post.

While that feature is not the most flashy or even the most prominent on the What’s New in BizTalk Server 2016 page within the MSDN documentation, it should come as a nice relief for developers who want to host a service in BizTalk Server while exposing it to consumers in the cloud — with the least amount of overhead possible.

Shared Access Signature (SAS) Support for Relay Adapters

You can now use SAS authentication with the following adapters:

- WCF-BasicHttpRelay

- WCF-NetTcpRelay

- WCF-BasicHttp*

- WCF-WebHttp*

* = Used only for sending messages as a client

Why Use SAS Instead of ACS?

Before BizTalk Server 2016, our only security option for the BasicHttpRelay and NetTcpRelay adapters was the Microsoft Azure Access Control Service (ACS).

One of the main scenarios that the Access Control Service was designed for was Federated Identity. For simpler scenarios, wherein I don’t need claims mapping, or even the concept of a user, using ACS adds potentially unnecessary overhead to (1) the deployed resources (inasmuch as you must set up an ACS namespace alongside the resources you’re securing), and (2) the runtime communications.

Shared Access Signatures were designed more for fine-grained and time-limited authority delegation over resources. The holder of a key can sign and distribute small string-based tokens that define a resource a client can access and the timeframe within which they are allowed to access it.
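To make "small string-based tokens" a bit more concrete, here is a minimal Python sketch of signing one, following the Service Bus / Relay SAS format as I understand it: an HMAC-SHA256 signature over the URL-encoded resource URI plus an expiry time. The namespace, relay name, and key value are placeholders, and the `now` parameter is only there so the expiry can be pinned for testing.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def create_sas_token(resource_uri, key_name, key, ttl_seconds=3600, now=None):
    """Sign a token granting access to resource_uri until now + ttl_seconds."""
    expiry = str(int((time.time() if now is None else now) + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signed string is the encoded URI and the expiry, separated by a newline.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest))
    return (
        f"SharedAccessSignature sr={encoded_uri}"
        f"&sig={signature}&se={expiry}&skn={key_name}"
    )


# Placeholder namespace, relay, and key; substitute values from your own policy.
print(create_sas_token(
    "https://example.servicebus.windows.net/myrelay",
    key_name="biztalkhost",
    key="not-a-real-key",
))
```

The token names the resource (`sr`), the expiry (`se`), and the policy whose key signed it (`skn`), so the service can recompute the signature and decide whether to honor the request without any per-user state.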

Hosting a Relay Secured by Shared Access Signatures

In order to expose a BizTalk-hosted service in the cloud via Azure Relay, you must first create a namespace for the relay – a place for the cloud endpoint to be hosted. It’s at the namespace level that you can generate keys used for signing SAS tokens that allow BizTalk Server to host a new relay, and tokens that allow clients to send messages to any of that namespace’s relays.

The generated keys are associated with policies that have certain associated claims / rights that each is allowed to delegate.

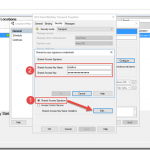

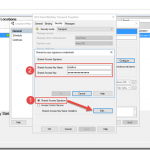

In the example above, using the key associated with the biztalkhost policy, I would be able to sign tokens that allow applications to listen at a relay endpoint within the namespace, but I would not be able to sign tokens allowing applications to Send messages to the same relays.

Clicking a policy reveals its keys. Each policy has 2 keys that can be independently refreshed, allowing you to roll over to new keys while giving a grace period in which the older keys are still valid.

Either one of these keys can be provided in the BizTalk Server WCF-BasicHttpRelay adapter configuration to host a new relay.

Configuring the Security Settings for the WCF-BasicHttpRelay Adapter

When configuring the WCF-BasicHttpRelay adapter, rather than providing a pre-signed token with a pre-determined expiration date, you provide the key directly. The adapter can then sign its own tokens that will be used to authorize access to the Relay namespace and listen for incoming connections. This is configured on the Security tab of the adapter properties.

If you would like to require clients to authenticate with the relay before they’re allowed to send messages, you can set the Relay client authentication type to RelayAccessToken:

From there it’s a matter of choosing your service endpoint, and then you’re on your way to a functioning Relay:

Once you Enable the Receive Location, you should be able to see a new WCF Relay with the same name appear in the Azure Portal for your Relay namespace. If not, check your configuration and try again.

Most importantly, your clients can update their endpoint addresses to call your new service in the cloud.

The Larger Picture: BizTalk Hybrid Cloud APIs

One thing to note about this setup, however, is that the WCF-BasicHttpRelay adapter is actually not running in the Isolated Host. In other words, rather than running as part of a site in IIS, it’s running in-process within the BizTalk Server Host Instance itself. While that provides far less complexity, it also sacrifices the ability to run the request through additional processing before it hits BizTalk Server (e.g., rate limiting, blacklisting, caching, URL rewriting, etc…). If I were hosting the service on-premises I would have this ability right out of the box. So what would I do in the cloud?

Using API Management with BizTalk Server

In the cloud, we have the ability to layer on other Azure services beyond just using the Azure Relay capability. One such service that might solve our dilemma described in the previous section would be Azure API Management.

Rather than having our clients call the relay directly (and thus having all message processing done by BizTalk Server), we can provide API Management itself a token to access our BizTalk-hosted service. The end users of the service wouldn’t know the relay address directly, or have the required credentials to access it. Instead they would direct all of their calls to an endpoint in API Management.

API Management, like IIS and like BizTalk Server, provides robust and customizable request and response pipelines. In the case of API Management, the definitions of what happens in these pipelines are called “policies.” There are both inbound policies and outbound policies. These policies can be configured for a whole service at a time, and/or only for specific operations. They enable patterns like translation, transformation, caching, and rewriting.

In my case, I’ve designed a quick and dirty policy that replaces the headers of an inbound message so that it goes from being a simple GET request to being a POST request with a SOAP message body. It enables caching, and at a base level implements rate-limiting for inbound requests. On the outbound side it translates the SOAP response to a JSON payload — effectively exposing our on-premises BizTalk Server hosted SOAP service as a cloud-accessible RESTful API.
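The real work here is done by API Management policy XML, not by code you host yourself. Still, the two translations are easy to picture as a pair of functions, so here is a rough Python stand-in for them. The operation name, namespace, and field names are hypothetical, and the sketch deliberately ignores caching, rate limiting, and the security token.

```python
import json
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def get_to_soap(query_params, operation="GetOrder", ns="http://example.com/orders"):
    """Inbound: wrap GET query parameters in a SOAP 1.1 envelope."""
    fields = "".join(
        f"<{name}>{escape(str(value))}</{name}>"
        for name, value in query_params.items()
    )
    return (
        f'<s:Envelope xmlns:s="{SOAP_NS}"><s:Body>'
        f'<{operation} xmlns="{ns}">{fields}</{operation}>'
        "</s:Body></s:Envelope>"
    )


def soap_to_json(soap_xml):
    """Outbound: flatten the first element inside the SOAP body into JSON."""
    body = ET.fromstring(soap_xml).find(f"{{{SOAP_NS}}}Body")
    payload = body[0]
    # Strip the "{namespace}" prefix ElementTree adds to tag names.
    result = {child.tag.split("}")[-1]: child.text for child in payload}
    return json.dumps(result)


request = get_to_soap({"OrderId": "42"})
response = request.replace("GetOrder", "GetOrderResponse")  # fake echo service
print(soap_to_json(response))
```

The client only ever sees the GET request and the JSON response; the SOAP envelope exists purely between API Management and the relay.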

So what does it look like in action? Below, you can see the submission of a request from the client’s perspective:

How does BizTalk Server see the input message? It sees something like this (note that the adapter has stripped away the SOAP envelope at this point in processing):

What about on the outbound side? What did BizTalk Server send back through the relay? It sent an XML message resembling the following:

If you’re really keen to dig into the technical details of the policy configuration that made this possible, they’re all here in their terrifying glory (click to open in a new window, and read slowly from top to bottom):

The token was generated with a quick and dirty purpose-built simple console app (the best kind).

Tips, Tricks, and Stumbling Blocks

Within the API Management policy shown above, you may have noticed the CDATA sections. They are mandatory wherever they appear. You’ll end up with some sad results if you forget to escape any XML input you have, or the security token itself, which contains characters (such as the ampersand) that must be escaped in XML.
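If the reason for all of that escaping isn’t obvious, a quick Python experiment shows it. A SAS token separates its fields with ampersands, and a bare ampersand is not legal inside XML text. The token below is a made-up example, and the `value` element is just a placeholder for wherever the token ends up in your policy.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# Hypothetical token; real SAS tokens separate their fields with '&'.
token = "SharedAccessSignature sr=https%3A%2F%2Fexample&sig=abc&se=1060&skn=biztalkhost"

# Option 1: escape the XML special characters (& becomes &amp;).
escaped = escape(token)

# Option 2: wrap the raw value in a CDATA section, which works as long as
# the value does not itself contain the sequence "]]>".
cdata = f"<![CDATA[{token}]]>"

# Either form parses back to the original token.
for fragment in (escaped, cdata):
    assert ET.fromstring(f"<value>{fragment}</value>").text == token

# The raw token, pasted in as-is, is rejected by the parser.
try:
    ET.fromstring(f"<value>{token}</value>")
except ET.ParseError as error:
    print("Unescaped token rejected:", error)
```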

Another interesting thing with the policy above is that the WCF-BasicHttpRelay adapter might choke while creating a BizTalk message out of the SOAP message constructed via the policy (which includes heaps of whitespace so as to be human readable), failing with the following message: The adapter WCF-BasicHttpRelay raised an error message. Details “System.InvalidOperationException: Text cannot be written outside the root element.

This can be fixed quite easily by adjusting the adapter properties so that they’re looking for the message body with the expression set to “*”.

Questions and Final Thoughts

During the webinar the following questions came up:

- Q: Is https supported?

- A: Yes, for both the relay itself and the API Management endpoint.

- Q: Maximum size is 256KB; I was able to get a response about 800 KB; Is that because BizTalk and Azure apply the compression technology and after compression the 800KB response shrinks to about 56KB?

- A: The size limit mentioned applies to brokered messages within Service Bus. Azure Relay is a separate service that isn’t storing the message for any period of time – messages are streamed to the service host, which means that if BizTalk Server disconnects, the communication is terminated. There’s a nice article comparing the two communication styles over here.

I hope this has been both helpful and informative. Be sure to keep watching for more of QuickLearn Training’s coverage of New Features in BizTalk Server 2016, and our upcoming BizTalk Server 2016 training courses.

by Nick Hauenstein | Jul 27, 2016 | BizTalk Community Blogs via Syndication

Today the Logic Apps team has officially announced the general availability of Logic Apps! We’ve been following developments in the space since it was first unveiled back in December of 2014. The technology has come a long way since then, and is certainly becoming capable of being a part of enterprise integration solutions in the cloud. A big congratulations is in order for the team that has carried it over the finish line (and that is already hard at work on the next batch of functionality that will be delivered)!

Along with hitting that ever important GA milestone, Logic Apps has recently added some new features that really improve the overall experience in using the product. The rest of this post will run through a few of those things.

Starter Templates

When you go and create a new Logic App today, rather than being given an empty slate and a dream, you are provided with some starter templates with which you can build some simple mash-ups that integrate different SaaS solutions with one another and automate common tasks. If you’d still rather roll up your sleeves and dig right into the code of a custom Logic App, there is nothing preventing you from starting from scratch.

Designer Support for Parallel Actions

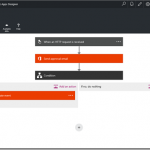

Ever since the designer went vertical, it has been very difficult to visualize the flow of actions whenever there were actions that could execute in parallel. No longer! You can now visualize the flow exactly as it will execute – even if there are actions that will be executing in parallel!

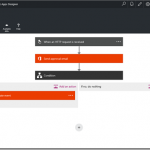

Logic Apps Run Monitoring

Another handy improvement to the visualization of your Logic Apps is the new runtime monitoring visualization provided in the portal. Instead of seeing a listing of each action in your flow alongside their statuses – with tens of clicks involved in taking in the full state of the flow at any given time – a brand new visualizer can be used to see everything in one shot.

The visualization captures essentially the same thing that you see in the Logic App designer, but shows both the inputs and the outputs on each card along with a green check mark (Success), red X (Failure), or gray X (skipped) in the top-right corner of the cards.

Additionally, if you have a for each loop within your flow, you can drill into each iteration of the loop and see the associated inputs/outputs for that row of data.

Visual Studio Designer

There is one feature that you won’t see in the Azure portal. In fact, it’s designed for offline use – the Visual Studio designer for Logic Apps. The designer can be used to edit those Logic App definitions that you’d rather manage in source control as part of an Azure Resource Group project – so that you can take advantage of things like TFS for automated build and deploy of your Logic Apps to multiple environments.

Unfortunately, at the moment you will not experience feature parity with the Azure Portal (i.e., it doesn’t do scopes or loops), but it can handle most needs and sure is snappy!

That being said, do note that at the moment, the Visual Studio designer is still in preview and the functionality is subject to change, and might have a few bugsies still lingering.

Much More

These are just a few of the features that stick out immediately while using the GA version of the product. However, depending on when you last used the product, you will find that there are lots of runtime improvements and expanded capabilities as well (e.g., being able to control the parallelism of the for each loops so that they can be forced to execute sequentially).

Be Prepared

So how can you be prepared to take your integrations to the next level? Well, I’m actually in the middle of teaching all of these things right now in QuickLearn Training’s Cloud-based Integration using Logic Apps class, and in my humble and biased opinion, it is the best source for getting up to speed in the world of building cloud integrations. I highly recommend it. There are still a few slots left in the September run of the class if you’re interested in keeping up with the cutting edge, but don’t delay too long as we expect to see these classes fill up through the end of the year.

As always, have fun and do great things!

by Nick Hauenstein | May 22, 2016 | BizTalk Community Blogs via Syndication

In this post, I am going to try to capture in text the presentation that I gave at the Integrate 2016 conference over in London. Text is likely the worst medium in which to capture such a session, but, alas, I do realize that sometimes it is the best medium for proper digestion of such content. If you’d rather see it in video form, click here.

So with that, let’s pretend that you are sitting among fellow professionals in a beautiful room on the 3rd floor of ExCeL London – complete with bright colored lights to set the mood. A wild American then appears, flailing his arms and babbling about how it’s actually 3 AM, and we’ve all been deceived. Then he starts talking about food.

Getting Our Priorities Straight

The world of software development might be a better place if we approached our tasks in that world the way that we approach each meal. We don’t really start each meal by a trip to the racks or shelves that hold our appliances – thinking, “Well, I have a vegetable peeler, and a fondue pot, I guess that means we’re eating some melted Gruyère and Emmentaler mixed with white wine, and carrot strings for every meal.”

Usually, the way it actually works out is that I’m thinking about what I’m craving, the ingredients that I have on hand and their flavor/nutritional value relative to my needs. From there, I look to proven recipes that satisfy those things, and finally, reach for the specific tools needed to do the job. If I don’t have them, I acquire them, or fashion a workable approximation.

We have to be really careful that we, when approaching software development and integration, take the same approach as we would when crafting an excellent meal. An approach that looks first to the needs and constraints, then to proven patterns/recipes, and allows the tools used to flow from the rest – even to the point of crafting/buying new tools that we haven’t used before if necessary.

Business Challenges / Constraints

So, let’s imagine that we all work together now. We want to take the approach outlined above – one in which we have to consider the business need and the constraints that we may very well simply be stuck with. From there, we can consider proven patterns that might help us overcome, and then finally identify/acquire/create the tools required to get the job done.

Our company makes custom bobbleheads.

The way that it works is that a customer uploads a 3D model of their face, and then selects a pre-built body from the gallery. The 3D print of their face starts immediately so that the order can be shipped as soon as possible. The customer is permitted to take as long as they need to select the body from the gallery of pre-built bodies. Once they select a body, we attach the printed face to the chosen body and ship the assembled dolls to the customer.

So what happens behind the scenes that we can’t escape?

Well, sadly we don’t do greenfield development here. It’s not really brownfield development either. It’s more like a house haunted by ghost IT – shadow IT that has left.

Whenever a customer uploads their 3D face model, an XML notification message is created that contains the order id and a reference to their face model. At the time it was built, our developers emulated the BizTalk Server demos of the day and built distributed, fault-tolerant XML file copy operations whilst applying the wisdom of Chris Maden who has been quoted saying, “XML is like violence. If it doesn’t solve your problem, you’re not using enough of it.”

These same developers learned while attending conferences over the past few years that Dropbox is the next big thing in enterprise integration. They may have been wrong, but it’s now up to us to Make Dropbox Great Again™.

Once the customer selects a body for their doll, another XML message is created and dropped into the same folder in Dropbox with the same file naming conventions. Ultimately, we need to pair up the two components of the order – the head and body – in order to complete the processing.

Proven Patterns

It’s at this point that we would consult the great oracles of all wisdom and knowledge in the world of integration, Gregor Hohpe and Bobby Woolf. We will search through the patterns to find pieces that help solve each piece of the puzzle.

Which patterns might we find? Well, to handle the communication with Dropbox, we might utilize the adapter pattern in hopes that one day the data will be sourced from a different system. We could apply the pipes and filters pattern and build a reusable translation/transformation pipeline made up of reusable independent steps organized in the proper order to provide the required translation/transformation/message enrichment for each interface.
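If pipes and filters is new to you, the shape of it fits in a few lines of Python: each filter is a small, independent step that takes a message and returns a message, and the pipeline is nothing more than those steps applied in order. The filters and message fields below are invented for illustration and have nothing to do with the real demo code.

```python
from functools import reduce


def strip_whitespace(message):
    return {**message, "body": message["body"].strip()}


def add_message_type(message):
    # Hypothetical enrichment step: tag the message for later routing.
    return {**message, "message_type": "PrintJob"}


def uppercase_order_id(message):
    return {**message, "order_id": message["order_id"].upper()}


def build_pipeline(*filters):
    """Compose independent filters into one callable, applied left to right."""
    return lambda message: reduce(lambda msg, f: f(msg), filters, message)


pipeline = build_pipeline(strip_whitespace, add_message_type, uppercase_order_id)
print(pipeline({"order_id": "abc-1", "body": "  <Order/>  "}))
```

Because no filter knows about its neighbors, the same steps can be reordered or reused to build a different pipeline for each interface.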

From there, we could apply the publish and subscribe pattern to enable loosely coupled communication between the source system and any number of downstream subscribers – maybe routing the message in a content-based fashion. We could also layer on top of this a process manager to enable content-based correlation.
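Here is a toy, in-memory Python sketch of publish and subscribe with content-based routing, assuming messages carry a dictionary of properties alongside their body. The `Topic` class is a stand-in I made up for illustration; it is not a real messaging client, and the property names are only examples.

```python
class Topic:
    """In-memory stand-in for a topic with filtered subscriptions."""

    def __init__(self):
        self._subscriptions = []

    def subscribe(self, predicate, handler):
        self._subscriptions.append((predicate, handler))

    def publish(self, properties, body):
        # The publisher never names a receiver; each subscription decides
        # for itself based on the message properties.
        delivered = 0
        for predicate, handler in list(self._subscriptions):
            if predicate(properties):
                handler(properties, body)
                delivered += 1
        return delivered


topic = Topic()
received = []
topic.subscribe(lambda p: p.get("MessageType") == "PrintJob",
                lambda p, b: received.append(("printer", b)))
topic.subscribe(lambda p: p.get("OrderId") == "1001",
                lambda p, b: received.append(("order-1001", b)))

topic.publish({"MessageType": "PrintJob", "OrderId": "1001"}, "<head/>")
topic.publish({"MessageType": "BodySelection", "OrderId": "1001"}, "<body/>")
print(received)
```

The second subscription is the interesting one: a filter on a shared value such as an order id is essentially what content-based correlation boils down to.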

Tools

How would we use these patterns in concert with the tools we have/don’t have? BizTalk Server might seem like a natural fit.

It already provides for us the concept of a port that begins with an adapter, which delivers a byte stream to a pipes and filters style pipeline responsible for translation of the message and promotion of context properties used for routing. This is followed by a transform before publishing to the message box. From there, we have process managers in the form of BizTalk Orchestrations that understand the concept of content-based correlation of published messages, allowing them to be reunited by the messaging engine. You get adapters, pipelines, maps, and orchestrations out of the box – and publish subscribe whether you want it or not!

It’s already bringing to the table everything I need. Everything but a handy Dropbox adapter. Now, I know that we could always build our own, or use out of the box adapters with ungodly amounts of WCF extensions to make some magic happen, but maybe that won’t be our best bet here.

So, let’s set that aside for a moment, and consider what might become possible if we started using Logic Apps like this? It’s really the same question I posed before about MABS.

In this case, we’re marrying Logic Apps and Service Bus. We have some Logic Apps that act in a similar fashion as BizTalk Ports. They provide adaptation and message enrichment and transformation through the use of relevant API apps for those concerns. Others act more like BizTalk Server orchestrations, coordinating the sends and receives of messages and operating on the content.

The messages are routed to the “orchestration” style Logic Apps through Service Bus. Each flow is triggered by subscribing to messages that arrive on a given Service Bus topic subscription (pre-created). Correlation can then be enabled by subscriptions dynamically created mid-flow.

At this point, you may have the following thought (which I humbly share indeed):

Demo Walkthrough

This isn’t all just a pipe dream – it’s real. I’ve built it. So, let’s see how it can fit together. The flow kicks off with an XML message. For this message, I have created a BizTalk Server 2016 schema (i.e., a regular XML schema with special notations about properties that should be promoted to the message context for routing purposes). The message looks like this:

The message contains a promoted OrderId property that we should be able to correlate on. In other words, the second message that will show up in Dropbox for the order should also contain the same OrderId value – which allows us to determine that they are indeed related messages. The first message also contains a reference to the head for the bobblehead doll that we will be printing.

When this message is uploaded to Dropbox, it will be picked up by our “Port” style Logic App that looks like this:

The first API App after the Dropbox receive is a custom API app that essentially builds a context property bag when it is passed an XML payload. It does this by comparing the document to a BizTalk schema, and using the instructions in the schema to “promote” properties by extracting the relevant content. It takes two inputs to operate.

The first input is a URL to the root of an Azure Blob Storage container that contains BizTalk schemas. It will use these schemas to perform message type resolution and property promotion. The second input is a string containing the entirety of an XML message. Not exactly the screaming performance of a forward-only streaming pipeline component, but it gets the job done, considering we’re already taking on latency to get to the cloud in the first place.
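The gist of that property promotion step can be sketched in a few lines of Python. This is not the API app’s actual code: a plain dictionary stands in for the promotion instructions that a BizTalk schema would carry, and the namespace, element names, and paths are all hypothetical. The message type follows the namespace + # + root node name convention.

```python
import xml.etree.ElementTree as ET

# Stand-in for the promotion instructions a BizTalk schema would carry:
# message type -> {property name: path relative to the root element}.
PROMOTIONS = {
    "http://example.com/bobbleheads#PrintJob": {
        "OrderId": "{http://example.com/bobbleheads}OrderId",
    },
}


def build_property_bag(xml_text):
    """Resolve the message type and extract the promoted properties."""
    root = ET.fromstring(xml_text)
    # ElementTree reports namespaced tags as "{namespace}localname".
    if root.tag.startswith("{"):
        namespace, local_name = root.tag[1:].split("}", 1)
    else:
        namespace, local_name = "", root.tag
    message_type = f"{namespace}#{local_name}"
    bag = {"MessageType": message_type}
    for name, path in PROMOTIONS.get(message_type, {}).items():
        bag[name] = root.findtext(path)
    return bag


message = (
    '<PrintJob xmlns="http://example.com/bobbleheads">'
    "<OrderId>1001</OrderId><HeadModel>faces/1001.stl</HeadModel>"
    "</PrintJob>"
)
print(build_property_bag(message))
```

Like the API app, this sketch reads the whole document into memory rather than streaming it, which is fine for small notification messages like these.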

The output of that API app looks like this (click to enlarge):

The next API app takes the payload, along with the property bag (which it treats as a set of brokered message properties) and publishes the message to an Azure Service Bus Topic. This is just the out of the box connector using the outputs of our custom API app. The call out to that app looks like this:

This published message is picked up by our Logic App that is acting like an Orchestration. That Logic App has a pre-defined subscription on the same service bus topic for any message with a Message Type of Print Job.

After the message is received, the Logic App must quickly set up a subscription for any related messages that come in for that order. Unfortunately, the out of the box connector for Service Bus doesn’t yet have a way to create a new subscription – only ways to subscribe for messages on an existing subscription.

Thus, we will have to use a custom API app to create a subscription unique to this running instance of the Logic App – one that is based on the OrderId property of the received message. To provide this capability, we have a custom API app called CreateInstanceSubscription.

It requires quite a few inputs to function since we don’t yet have the capability of reading details from a stored API connection in a custom API app.

The API takes in a property named Correlation Property, which contains the name of the property shared between the message that triggered the Logic App instance and the message that will be correlated with this running instance.

It also takes in the Message Type (in the namespace + # + root node name format) of the next expected message. Both of these properties will be used to create a new subscription on the Service Bus topic referenced by the last two configuration properties (Service Bus Topic, and Service Bus Connection String).
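As a rough illustration of what such an API might compute, the Python sketch below builds a subscription name and a SQL-style filter from those inputs. The `MessageType` property name, the message type value, and both function names are assumptions made for the example; the real CreateInstanceSubscription code may look quite different.

```python
import uuid


def sql_literal(value):
    """Quote a string for a SQL-style filter, doubling embedded quotes."""
    return "'" + str(value).replace("'", "''") + "'"


def build_instance_subscription(correlation_property, correlation_value,
                                next_message_type):
    """Return a (name, filter) pair for a per-instance subscription."""
    # A random name keeps each running instance's subscription unique.
    name = uuid.uuid4().hex
    rule = (
        f"MessageType = {sql_literal(next_message_type)} "
        f"AND {correlation_property} = {sql_literal(correlation_value)}"
    )
    return name, rule


# Hypothetical values: the OrderId comes from the message that started
# the instance, and the message type names the next expected message.
name, rule = build_instance_subscription(
    "OrderId", "12345", "http://example.org/bobblehead#PrintBody"
)
print(name)
print(rule)
```

The filter only matches messages of the expected type that carry the same OrderId, which is what ties the second message to this particular running instance.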

After it executes, we might expect to see a subscription like the following:

Now that we have the subscription created, we can take our time with the rest of the process until we absolutely require the second message. In this case, we’re calling another custom API which provides a visualization of the received messages. In order to read the content, we can either use the xpath() function of Logic Apps to read the XML directly, or we can convert it to JSON first using the json() function, and then simply dot into it. I decided to use the json() function since I hadn’t attempted to use it in a situation like this yet. It was okay (it was pretty darn verbose). xpath() would have been a better choice here – and the more natural choice given an XML payload.
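The two styles of reading the payload can be compared with a small Python analogy. This is not the Logic Apps xpath() or json() functions themselves, and the sample message and its namespace are hypothetical; it only contrasts querying the XML directly with converting it first and then indexing into the result.

```python
import xml.etree.ElementTree as ET

# Hypothetical message; element names and namespace are illustrative only.
SAMPLE = """<PrintJob xmlns="http://example.org/bobblehead">
  <OrderId>12345</OrderId>
  <Head>head-07</Head>
</PrintJob>"""

root = ET.fromstring(SAMPLE)
ns = {"b": "http://example.org/bobblehead"}

# Option 1: query the XML directly with a path expression.
head_via_path = root.find("b:Head", ns).text


def local_name(tag):
    """Strip the "{namespace}" prefix ElementTree puts on tags."""
    return tag.split("}", 1)[-1]


# Option 2: convert to a nested dictionary first, then index into it.
as_dict = {local_name(root.tag): {local_name(c.tag): c.text for c in root}}
head_via_dict = as_dict["PrintJob"]["Head"]

print(head_via_path, head_via_dict)
```

Both routes reach the same value; the second simply adds a conversion step before the lookup.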

This yields the following visualization (a body-less bobblehead awaiting its correlated message containing information about which body to use):

And we would expect that there is both an instance subscription in Service Bus and a Logic App that is still actively processing – waiting for Service Bus to re-activate it with a new message.

At this point, the new message can arrive at any moment in time. It will land in the same Dropbox folder, and process through the same Logic App serving as a “Port” – with the same XML property promotion, and Service Bus publishing action. It will land in the same Service Bus topic as before, and with a matching order id to the originally submitted message.

This second message carries some new information, however. In this case, it contains the body that the customer selected for their custom bobblehead doll.

Once published to the topic, the instance subscription previously created by the second Logic App in the process will be matched by a listening Service Bus connector.

The connector uses the topic name and instance subscription name passed to it from the Create Instance Subscription API App. The name of the subscription will be a randomly generated id for that running instance of the Logic App.

Now that we have the message, it’s time to ensure that we don’t bring the problem of zombies into the world of Logic Apps. There is a step that follows which will clean up the instance subscription for the Logic App before continuing with the final bits of the process.

Again, since the OOTB Service Bus connector does not contain any operations for managing subscriptions, the custom API App is called to delete the instance subscription using the details returned from the original call to the API which created it.
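The full lifecycle (create the instance subscription, receive the correlated message, delete the subscription) can be sketched with a toy in-memory topic in Python. Nothing here talks to Service Bus: the `Topic` class, the subscription names, and the message values are all hypothetical stand-ins used only to show the order of operations.

```python
from collections import deque


class Topic:
    """A toy in-memory stand-in for a publish-subscribe topic."""

    def __init__(self):
        self._subscriptions = {}  # name -> (predicate, queue)

    def create_subscription(self, name, predicate):
        self._subscriptions[name] = (predicate, deque())

    def delete_subscription(self, name):
        self._subscriptions.pop(name, None)

    def publish(self, properties, body):
        # Every subscription whose filter matches gets its own copy.
        for predicate, queue in self._subscriptions.values():
            if predicate(properties):
                queue.append((properties, body))

    def receive(self, name):
        queue = self._subscriptions[name][1]
        return queue.popleft() if queue else None

    def subscription_names(self):
        return sorted(self._subscriptions)


topic = Topic()

# Pre-defined subscription that activates the orchestration-style Logic App.
topic.create_subscription(
    "print-jobs", lambda p: p.get("MessageType") == "PrintJob"
)

# 1. The first message arrives and starts an instance.
topic.publish({"MessageType": "PrintJob", "OrderId": "12345"}, "head-07")
first_props, first_body = topic.receive("print-jobs")

# 2. The instance creates a subscription correlated on its OrderId.
order_id = first_props["OrderId"]
topic.create_subscription(
    "instance-1",
    lambda p: p.get("MessageType") == "PrintBody"
    and p.get("OrderId") == order_id,
)

# 3. Messages for other orders are ignored; the related one is delivered.
topic.publish({"MessageType": "PrintBody", "OrderId": "99999"}, "body-02")
topic.publish({"MessageType": "PrintBody", "OrderId": "12345"}, "body-11")
second_props, second_body = topic.receive("instance-1")

# 4. Clean up so no subscription outlives the instance that created it.
topic.delete_subscription("instance-1")
print(first_body, second_body, topic.subscription_names())
```

Skipping step 4 would leave a subscription that keeps collecting messages nobody will ever read, which is the leftover state the cleanup step is there to prevent.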

After that, it’s back off to the bobblehead visualizer with the details from the correlated message received.

Call to Action

So that’s pretty cool. We can now stand with confidence and proclaim that content-based correlation is possible with Logic Apps! However, it was built out of necessity, and required custom crafted components – as is often the case with anything worth doing.

You may be wondering why this talk was titled API Apps 101 for BizTalk Developers. I didn’t really tell you how to create API apps. Instead, I showed that API apps behave in a fashion similar to different components within BizTalk Server (adapters, pipeline components, orchestration shapes, etc…). I don’t want to leave you hanging though, because we are at a point in time where there is a golden opportunity to make your mark in the foundations of this new world.

This is the ground floor of Logic Apps and API Apps. As BizTalk Server developers, we know the required ingredients of enterprise integrations. We know the recipes for success. It’s just a matter of crafting some additional tools for use in the world of Logic Apps, and for the first time we have a unified marketplace to share and even sell these components.

From working on BizTalk Server integrations, we know that we will need custom API apps that can serve as adapters, pipeline components, and pattern utility apps (e.g., content-based correlators). In fact, you may have built such things before. It’s honestly not that difficult to port those things over into this new world of integration (where it makes sense) and reap the rewards. If you need inspiration, check out the listings of such components that have already been created for BizTalk Server. Each component represents a solution to a specific integration challenge – many of which are timeless challenges.

We write BizTalk components and API Apps in the same languages, though with different techniques, and targeting a different runtime.

How do we make that all happen? Well, today we are providing the world with “the goods”: all of the slides from this talk, a sample module from the February 2016 version of our Cloud-based Integration Using Azure App Service course, and all of the code involved in the demo. With those combined resources, you should be set on the right track to start building custom API Apps for use in Logic Apps – leveraging skills and work you’ve already accomplished.

If you’re ready to get started, click the image below to download the resources:

Until Next Time

That’s all for now! Again, go forth and create API apps and come visit us in our Cloud-based Integration Using Azure App Service course if you’d like to learn more.

I’ll leave Simon Young with the final word – and dining tip!

by Nick Hauenstein | May 4, 2016 | BizTalk Community Blogs via Syndication

I’m happy to announce that I’ve been asked to speak at the BizTalk 360 Integrate 2016 conference May 11th-13th in London. When brainstorming for the session topics I had lots of ideas of fun things that could be accomplished with Logic Apps and API Apps, but decided to take things in a slightly different direction.

Over the last few years, I’ve seen BizTalk Server developers who are skilled as general .NET developers, but who may not have the time or energy to keep up-to-date with the evolution of Logic Apps, API Apps – and all of the things that come with them (Web API, node.js, Swagger, etc.). That is perfectly understandable because as a developer building enterprise integrations exchanging X12 or EDIFACT data using BizTalk Server, you might not have needed to interact with JSON serialization in the past.

This Will Make Your Dreams Come True

This last year, my team and I have been working hard to stay on top of changes to Microsoft’s cloud-based integration technologies. Time and again we’ve fallen into the trap (and seen others do it as well) of looking at a tool and then trying to figure out how it can solve all of our problems – even to the point of searching out problems (imagined or otherwise) that it could tackle. It happens anytime there’s a sufficiently impressive tool, or sufficiently impressive salesperson (or both). Go ahead, click the link, I’ll wait. But if you do click that link, you will end up trying to find how you too could buy a 10 pack of vegetable peelers. Then you would be trying to figure out how to incorporate zucchini strands into dessert.

Getting Back on Track

Whenever that happens we’ve been able to course correct by forgetting about the tool and focusing on the problem we want to solve, or even better, the ideal solution to the problem. When describing the solution using Enterprise Integration Patterns as our vocabulary, we can quickly model an answer that works without assigning a particular tool or technology. Maybe a given solution needs a content enricher, or a resequencer, or guaranteed delivery, or whatever – not necessarily a specific technology applied.

As a BizTalk Developer, I know how to implement these patterns using BizTalk; the real question that we keep running into is can we implement these patterns using API Apps and/or Logic Apps – and better yet should we?

My session is designed to help BizTalk Developers learn how to answer those questions for themselves. Specifically, identifying the capabilities of the new additions to our toolset, and then showing how to use them without assumptions of in-depth knowledge of the underpinnings. In the talk, you will see an API App that creates promoted properties from an XML document (just like BizTalk Server) so that we could then potentially reach out to other Azure capabilities and implement publish-subscribe in concert with content-based routing and correlation.

by Nick Hauenstein | Mar 9, 2016 | BizTalk Community Blogs via Syndication

Much has happened in the world of Logic Apps and API Apps since the original announcement back in December of 2014. We have seen the continued development of SaaS connectivity within the product, along with the overall expansion of integration capabilities. We have also seen the team responding to customer feedback actively while maintaining transparency in the process, and even providing a roadmap to give insight into what is coming and when we can expect to see the sweet moment that is GA.

Sometimes, customer feedback causes fairly large shifts in the underlying product. Such is the case seen in the latest updates for the product in the form of a completely overhauled designer, new feature support for triggering flows (i.e., any action can be a trigger), and an API deployment model that is more consistent with the rest of App Service and does not require a dedicated gateway.

New Designer

One of the most obvious changes that will stick out immediately as you go out to create a Logic App is the new designer that moved over into App Service from Power Apps.

The new designer supports editing workflows built using the updated workflow language (schema version: 2015-08-01-preview). It sports a vertical layout rather than a horizontal one, and conditions that appear to wrap around actions instead of being embedded inside them (though the code view demonstrates that the underlying behavior is similar).

You might also notice that the experience of adding actions is much quicker, as this act no longer provisions a new instance of an API App within your own subscription. Instead, in an interesting reversal, Microsoft hosts managed instances of out-of-the-box API Apps. The result is that configuration information is sent as part of the request, instead of stored inside the API App container, and you will have far more simplified ARM deployment templates given that your deployment will no longer need to take into account each API App used by your Logic Apps.

So how do my own custom API Apps end up in the list? Well, you can apply to have them registered in the Azure Marketplace, or you can use the Http + Swagger action in order to point to a custom API App that already exists. Of course that brings us to the question of what it looks like to actually build a custom API App in this refresh of the preview.

New API App Development Model

In the preview refresh, the process to develop and consume a custom API using the designer is quite a bit different. You still have the ability to use swagger extensions for a clean designer experience – but there are new extensions intended to take advantage of new designer capabilities. These capabilities include things like dynamic schemas for parameters / return types of API (imagine a different object shape depending on the type of entity within a CRM system, or a table within a database), and dynamic values for enumerations.

The biggest change here though is that we no longer have a gateway managing authentication, internal storage, or configuration for our APIs, and get to manage that ourselves, but as a side effect, we’re no longer constrained by where our APIs live – all APIs get the same first class experience.

I would definitely recommend taking some time to read each link within this article before starting out on building a new API. I’m working on building out updates to T-Rex to help with the metadata – while also providing a few example APIs to take advantage of all of the new capabilities – but if you want a head start, the knowledge is out there!

New Triggering Capabilities

What other changes are under the hood that you should know about? Well, you may have noticed the announcement of the availability of Webhooks for Logic Apps for one, or even seen the x-ms-trigger extension called out in the article linked above. The end result of this is that any action within a Logic App can have a polling trigger style behavior, or even an async push style behavior, and the Logic App itself can be triggered manually at an endpoint that isn’t tied to a specific Azure subscription.

We can see some of these changes in action as we look at actions like the Send approval email action from the Office 365 connector/API. The action sends an email, and then notifies the Logic App what the response is when the response is available – without polling.

It even includes the shape of the notification as part of the swagger metadata that is exposed, so that the designer can support using the shape of that async output in later steps. The result is that as a developer, I can use the action to build what looks like a synchronous flow without the complexity of an async flow, and yet I’m benefitting from the performance characteristics of the async implementation (i.e., immediate notification when the event happens rather than polling at a fixed or variable interval).

What Are We Doing About It?

Reading about all of this might have you wondering what QuickLearn Training is doing about all of this, and/or what you should do about all of this.

Well, I (Nick Hauenstein) am hard at work on an update to QuickLearn’s T-Rex metadata library that takes into account the new way to build API Apps. I’m on target to wrap up the core code by end of week, and hopefully have some decent sample apps out there shortly thereafter.

We’re all busy learning everything we can about the new functionality so that we can rapidly integrate those changes into our Cloud-Based Integration Using Azure App Service course.

In the meantime, keep an eye out for announcements from the BizTalk 360 folks about Integrate 2016 Europe. You might be able to meet up with me (Nick Hauenstein), Rob Callaway, or John Callaway to talk about BizTalk Server, or any of the things in this post. Also watch for the next release of T-Rex on NuGet which will include support for all of the new goodies we have available in Logic Apps.

In the meantime, take care, and have fun building great things!

by Nick Hauenstein | Jan 10, 2016 | BizTalk Community Blogs via Syndication

I’m finally settling back into the swing of things as we kick off the year 2016! It has been quite a relaxing break, spending Christmas and New Year’s with my family out in the woods of Snohomish, WA. Since getting back to the office, I’ve been catching up on quite the backlog of emails. Among them was an email that called out a file that was uploaded to the Microsoft download site at the end of last month – the long awaited BizTalk Server Roadmap for 2016 or should I say the Microsoft Integration Roadmap (more on that below).

Continued Commitment to BizTalk Server

The document opens up with a bullet pointed summary of the core takeaways (I for one appreciate that it leads with the TLDR):

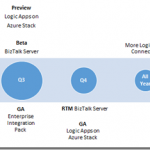

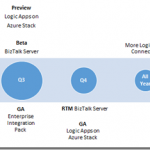

- Continuing commitment to BizTalk Server, with our 10th release of BizTalk Server in Q4 2016.

- Expansion of our iPaaS vision to provide a comprehensive and compelling integration offering spanning both traditional and modern integration requirements. Preview refresh in January 2016 and General Availability (GA) in April 2016.

- Deliver our iPaaS offering on premises through Logic Apps on Azure Stack in preview around Q3 2016 and GA around end of the year.

- Strong roadmap and significant investments to ensure we continue to be recognized as a market leader in integration.

- The next release of Host Integration Server is planned on the same timeline as BizTalk Server below.

That’s right; 2016 is the year where we start to see Microsoft’s integration investments in the cloud start to pay dividends on-premises – with two complementary offerings that each offer their own approach to solving integration challenges while still ensuring that you can build mission critical BizTalk Server integrations on the latest Microsoft platform. Though Microsoft is expanding the integration toolbox beyond just BizTalk Server, the focus is still firm on Integration, and the tools are built on proven platforms with a proven infrastructure.

BizTalk Server 2016 New Features

So what can we expect in BizTalk Server 2016?

- Platform alignment – SQL 2016, Windows Server 2016, Office 2016 and latest release of Visual Studio.

- BizTalk support for SQL 2016 AlwaysOn Availability Groups both on-premises and in Azure IaaS to provide high availability (HA).

- HA production workloads supported in Azure IaaS.

- Tighter integration between BizTalk Server and API connectors to enable BizTalk Server to consume our cloud connectors such as SalesForce.Com and O365 more easily.

- Numerous enhancements including

  - Improved SFTP adapter

  - Improved WCF NetTcpRelay adapter with SAS support

  - WCF-SAP adapter based on NCo (.NET library)

  - SHA2 support

- Host Integration Server “2016”

  - New and improved BizTalk adapters for Informix, MQ & DB2

  - Improvements to PowerShell integration, and installation and configuration

I don’t know about you, but I’m fairly excited to see this listing. With the death of SHA1 certificates this year, it’s good to see SHA2 support finally coming into BizTalk Server 2016. If for nothing else than SHA2, a BizTalk Server 2016 upgrade is going to be a must.

Also, notice the tighter integration between BizTalk Server and API connectors. That’s fantastic! One thing that Logic Apps do really well is provide friendly connectivity to SaaS endpoints. One thing they don’t do as well is content-based correlation and long-running transactions. One thing that BizTalk Server doesn’t do too well is provide friendly connectivity to SaaS endpoints (there is generic REST connectivity, but you’re going to be wishing that you would have built/bought/downloaded an adapter once you start going down that road). One thing that BizTalk Server does really well is content-based correlation and long-running transactions. Here we’re seeing the best of Azure App Service Logic Apps meeting the best of BizTalk Server. That should make anyone happy.

An Integration Taxonomy

One interesting thing found in the roadmap is a brief discussion of an integration taxonomy that makes a distinction between “Modern Integration” – which is usually SaaS and web-centric, based in the cloud, and within the realm of Web and mobile developers — and “Enterprise Integration” – which includes support for industry standards (e.g., X12, EDIFACT, etc…), targets mission critical workloads, and caters more towards enterprise integration specialists.

In a way, this sets the context for the two core integration offerings of BizTalk Server and Logic Apps – defining the persona that might gravitate towards each. However, Logic Apps will offer an Enterprise Integration Pack for the pro developer that wants the power of BizTalk Server with the elasticity of a PaaS offering.

Where Is This Going?

Well, you might be reading this because you’re passionate about Logic Apps; you might be reading this if you’ve been working with BizTalk Server since the year 2000. Either way, you’re in the business of doing integration. Microsoft isn’t interested in building up cliques of developers, but instead catering to all while providing an easy-to-use, location-agnostic (cloud/on-prem), rock-solid, highly scalable platform for mission critical integration.

The focus is on evolving capabilities. It doesn’t matter what brand name is slapped on the side of it (whether it’s Logic Apps, Power Apps, or BizTalk Server); Microsoft is committed to making the world of enterprise integration a better place!

by Nick Hauenstein | Nov 1, 2015 | BizTalk Community Blogs via Syndication

This blog post serves as a quick recap of and expansion on my October 19th Integration Monday talk titled Building Push Triggers for Logic Apps. You can view the session and look through the slides over at integrationusergroup.com.

In the talk, I explored the bare minimum requirements for building push triggers, expanding on my AzureCon 2015 talk about a specific push trigger for dealing with NFC tag reads. I showed how you could use the QuickLearn Push Trigger Tools and QuickLearn Push Trigger Client Tools to implement a simple interface for storing callbacks, and build a re-usable set of callback storage mechanisms.

I also introduced the Push-Button Push Trigger: a push trigger that responds to a button press on a Windows 10 IoT device (in this case a Raspberry Pi 2), relying on Azure Storage for callback storage. In the remainder of this post, I’m going to show you how to get your own Push-Button Push Trigger up and running.

Where Do I Get a Push-Button Push Trigger?

At the moment, there are 2 ways that you can get one. You can come out to QuickLearn’s 5-day Cloud-Based Integration using Azure App Service course (or attend remotely), or you can build one for yourself!

Even if you’ve never worked with anything like this before — don’t panic. You can’t really get something simpler than this.

Essentially you’ll need a Windows 10 IoT device (Raspberry Pi 2, DragonBoard 410C, MinnowBoard Max, etc…), a momentary switch (button), some wiring to wire the button up to a GPIO port and ground, and optionally a breadboard for even more fun later. I went with a Raspberry Pi 2 for mine, but if I could do it again, I would have chosen a DragonBoard 410C given its built-in Wi-Fi capabilities that don’t require an additional accessory or external module.

To get started with developing, you will need some software on your own machine (Visual Studio, Windows 10 IoT Project Templates, etc…) and you will need to get Windows 10 IoT Core onto your device. Microsoft has provided a pretty decent write-up of that part of the procedure over here.

Assembling Your Push-Button Push Trigger

You may or may not have a case to go along with your Push-Button Push Trigger. I ended up buying the cheapest case I could find on the internet. This was likely a poor choice as it quickly disintegrated and pieces chipped off. Since then, I’ve had a lot better luck with this one. Of course, you could always print/build your own custom enclosure as well.

First things first, make sure your device has Windows IoT by inserting a prepared MicroSD card into the device.

Next, place your device in its case (if applicable). Mine has a mini-breadboard mounted on top for easier portability of the device.

Now, on to the wiring. We’re going to connect one wire to GPIO pin 4 (chosen randomly), and another wire to ground. Eventually we’re going to put a momentary switch in between so that we can quickly toggle that connection between high/low.

Let’s get that switch up on the breadboard (and make sure we put on a nice and colorful cover).

You can see in the image above how the posts are reaching down into the board. To connect wires to those pins, you will simply plug in one of the jumper wires to the same row as one of the pins.

One connection down, one to go.

At this point, everything is wired up, and it’s time to get power and internet to the device.
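With the button wired between a GPIO pin and ground, detecting a press comes down to spotting a change in the pin level. The Python sketch below shows only that logic on a list of sampled levels; it does not touch real hardware, and it assumes the pin idles high (pulled up) and reads low while the button is held, which may not match the sample project's configuration.

```python
def count_presses(levels):
    """Count high-to-low transitions in a sequence of sampled pin levels.

    Assumes the pin idles high (pull-up) and reads low while the button
    connects it to ground; flip the comparison for the opposite wiring.
    """
    presses = 0
    previous = True  # assume the pin starts released (high)
    for level in levels:
        if previous and not level:
            presses += 1  # falling edge: the button was just pressed
        previous = level
    return presses


# Hypothetical samples: True = high (released), False = low (pressed).
samples = [True, True, False, False, True, False, True, True]
print(count_presses(samples))  # prints 2
```

Holding the button down produces a run of low samples but only one falling edge, so a long press still counts once.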

How Do I Make The Sample Code Work?

First of all, you can find the sample code over at https://github.com/nihaue/PushButtonPushTrigger. You can either download it as a ZIP file if you’re not comfortable with Git, or if you are comfortable with Git, you can clone it directly from here: https://github.com/nihaue/PushButtonPushTrigger.git

Once you have the sample downloaded, you should immediately Build the code to restore NuGet packages, and make all of the references happy. Next, you should take some time to look through the CallbacksController class for the Push Trigger (the part actually hosted in Azure with which the Logic App registers its interest in certain data), and the StartupTask class for the Universal Windows App (the part that actually looks for and handles the button press):

After you have a decent understanding of what’s going on, you’ll realize that the CallbacksController is storing callbacks from interested Logic Apps in Azure Table Storage, and the StartupTask (think background service on the device) is reading callbacks from Azure Table Storage when the button is pressed (moving this code to initialization and caching/polling for updates would be a better choice – and something you’re free to implement). So in order to get this thing working, you’re going to need an Azure Storage account.
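The division of labor between the two halves can be sketched in a few lines of Python. This is an illustration of the pattern only, not a translation of CallbacksController or StartupTask: the in-memory store stands in for Azure Table Storage, and the class name, function names, payload shape, and URL are all hypothetical.

```python
import json


class InMemoryCallbackStore:
    """Stand-in for the table-backed store shared by cloud and device."""

    def __init__(self):
        self._callbacks = {}  # Logic App name -> callback URL

    def write_callback(self, logic_app_name, callback_url):
        # Re-registration simply overwrites the previous entry.
        self._callbacks[logic_app_name] = callback_url

    def read_callbacks(self):
        return list(self._callbacks.values())


def on_button_pressed(store, post):
    """Notify every registered Logic App that the button was pressed.

    post is any callable taking (url, body); injecting it keeps the
    sketch runnable without a network connection.
    """
    body = json.dumps({"event": "ButtonPressed"})
    urls = store.read_callbacks()
    for url in urls:
        post(url, body)
    return len(urls)


store = InMemoryCallbackStore()

# Cloud side: a Logic App registers its interest (URL is a placeholder).
store.write_callback("my-logic-app", "https://example.invalid/callback/1")

# Device side: the button press fans out to the stored callbacks.
sent = []
notified = on_button_pressed(store, lambda url, body: sent.append((url, body)))
print(notified, sent)
```

Reading the store on every press keeps the sketch short, which is the same shortcut the sample takes; caching the callbacks at startup and refreshing them periodically would avoid a lookup per button press.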

If you don’t already have an Azure Storage account, head over to the Azure Portal and create one.

The only thing you need from this storage account will be the connection string, which you can find after it’s created over here:

With those credentials in hand, you’ll need to visit the two files in the solution responsible for storing configuration. They’re both named AzureStorageConfig.cs.

Inside that file, you will see a line of code with a TODO comment indicating that you should paste your connection string for your Azure Storage account in that location. This is indeed your next step (make sure to do it in both the code for the API App that lives in Azure, and the code for the device itself).

Ultimately, this is a terrible way to handle configuration. You can get the sample working with a simple copy/paste in that file, but the intent is that you would simply decide for yourself how you’d like to manage the configuration and creation of the CloudStorageAccount instance, and make that instance available through the StorageAccount property of the AzureStorageConfig class. This instance is used in both the AzureStorageCallbackStore and the AzureStorageClientCallbackStore classes.
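One common alternative to pasting the connection string into source is to read it from the environment at startup. The Python sketch below shows that approach under stated assumptions: the variable name `STORAGE_CONNECTION_STRING` and both function names are made up for the example, and the values shown are placeholders rather than a real account.

```python
import os


def parse_connection_string(connection_string):
    """Split 'Key=Value;Key=Value' settings into a dictionary."""
    settings = {}
    for part in connection_string.split(";"):
        if not part.strip():
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only; account keys are base64 and may
        # themselves end in '=' padding.
        key, _, value = part.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def load_storage_settings(env=os.environ,
                          variable="STORAGE_CONNECTION_STRING"):
    """Read the connection string from the environment, not source code."""
    raw = env.get(variable)
    if not raw:
        raise RuntimeError(f"Set the {variable} environment variable first.")
    return parse_connection_string(raw)


# Placeholder values only; never commit a real key.
fake_env = {
    "STORAGE_CONNECTION_STRING":
        "DefaultEndpointsProtocol=https;AccountName=demo;AccountKey=abc123==;"
}
settings = load_storage_settings(fake_env)
print(settings["AccountName"])
```

Failing loudly when the variable is missing makes a misconfigured deployment obvious at startup instead of surfacing later as a storage error.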

Publishing the Code to Azure

We’re now ready to get this code all in place and running. The first step toward that goal will be to publish the API App project. You can do this by right-clicking the QuickLearn.ButtonPress.PushTrigger project, and then clicking Publish.

Make sure to select Microsoft Azure API Apps (Preview) as the target.

In the Microsoft Azure API Apps window, select your Azure Subscription, and then click New… Fill out the form to create a new API App container into which you can deploy your code.

Once the creation of the container is complete, you will see the following status message appear in Visual Studio.

Then, you will once again right-click the project and then click Publish… This time, the form will be pre-filled with the settings from the publish profile of the Azure API App container that you just provisioned. You might find it helpful to deploy the Debug configuration of your API App (Settings > Configuration > Debug – Any CPU), but that is entirely up to you. Once you click Publish, your code will be deployed to the API App container, and the API App will be usable within a Logic App.

Next up, we will deploy code to the device, and configure it to run in the background.

Deploy the Code to the Device

First of all, you will need to edit the project properties for the QuickLearn.ButtonPress.App project so that it attempts deployment to the correct device. In this case, that will mean navigating to the Debug tab, setting the Target device to Remote machine, the Remote machine to the name of your Windows IoT Core device (default: minwinpc), and then unchecking the Use authentication box.

You will want to make sure to save the project properties, and get your device connected to the same network as your development machine (laptop in my case). Next, you can right-click QuickLearn.ButtonPress.App, and click Deploy.

Once deployment is complete, head over to the Windows IoT Core Watcher utility that ended up on your system after installing everything that you needed to get your device set up initially. If you can’t find it, reboot your system and it will be there waiting for you. The Windows IoT Core Watcher utility finds IoT Core devices on your network and provides quick links to gain access and configure them.

In the utility, right-click your device, and then click Web Browser here.

Log in using your user name and password (default is Administrator / p@ssw0rd).

Next, head over to the Apps tab, and verify that QuickLearn.ButtonPress shows up in the list. You will want to note the full name as it appears here because you will need it in a few minutes.

Since this app was created as a Startup Task rather than as a graphical application, you will need to register it with the device to be run on startup. At the moment, this is not something that you can accomplish in the browser. Instead, you will need to fire up PowerShell for this next bit.

In PowerShell, you will need to enter a remote session on your device. You can do this using the Enter-PSSession cmdlet like this:

The connection process will take a while. Just get a cup of coffee, and when you have it ready, the session should be connected. Once connected, you are in PowerShell on the device, and are executing commands against the device (not your own local machine).

On the device is a utility called iotstartup. This utility provides access to configure what tasks run at device startup. In this case, you want to configure the device to constantly be running the Push-Button Push Trigger code in the background.

At the prompt, type iotstartup add headless "QuickLearn.ButtonPress.*"

Verify that the app that was added matches exactly the name that appeared in the list on the device web page that you examined earlier. Then, at the prompt, type shutdown /r /t 0

This will cause the device to reboot and your application to start up. It may take 60-90 seconds for the reboot to complete.

Building and Testing a Logic App using the Push-Button Push Trigger

In the Azure Portal, create a new empty Logic App in the same resource group in which you deployed the Push Button Push Trigger API App (otherwise the API App won’t be available to select in the designer). In the Logic App designer, in the API Apps pane (which you may have to expand in order to see), click QuickLearn.ButtonPress.PushTrigger.

Configure the Push Trigger as shown, and then click the green check mark to save the settings.

After the push trigger, add any other actions to your Logic App that you wish. Maybe this triggers a build in TFS, maybe it connects to a device that opens a door, maybe it brews you coffee remotely, maybe it posts a message in a chat service, maybe it closes out the latest support ticket that you were working on in your help desk system – it’s up to you. For me, I’m going to add a simple HTTP action (since it’s built into the runtime), and have it POST a message to a requestb.in indicating that the button has been pressed.

Save the Logic App, and it’s all ready to go!

PUSH THE BUTTON

There’s only one thing left to do – push the button. If everything has been set up correctly, the Logic App’s callback should be invoked and magic should happen in the cloud.

What If It Didn’t Work?

Well, there are a few troubleshooting steps you can try. Using the Cloud Explorer window (part of the Azure SDK) in Visual Studio, you can navigate to your API App, right-click it, and then click Attach Debugger. You can set breakpoints within the callback registration method of the controller class, and step through looking for problems as the Logic App registers the callback. This only happens when the trigger is first added to the Logic App (after clicking Save), and then every hour or so after that (assuming it worked on the first try).

You can see past registrations of the callback by navigating to the trigger history for the Logic App. If you see a string of failures there, it’s likely a bug in the callback registration code, or a problem with your storage account credentials.

Clicking any one of those line items will bring up the details (inputs/outputs) for debugging. If you want to attempt a callback registration manually (so that you can do it on demand), you can use the Swagger UI page for the API App, and manually fire the callback registration method.

The above screenshots were generated by replacing the configuration details for the Cloud Storage account with completely invalid data.

If everything looks good as far as the API App in Azure is concerned, you may want to debug the Windows IoT Core task from within Visual Studio. This can be done by right-clicking the project and then clicking Start Debugging (nothing special there).

The End

That’s all for now. Stay tuned for more samples, and course updates!

by Nick Hauenstein | Sep 8, 2015 | BizTalk Community Blogs via Syndication

This post is designed to serve as a companion to my AzureCon 2015 talk titled Processing NFC Tag Reads in a Logic App. As a result, I will be recapping the talk and digging a little bit deeper into the code behind the talk. If you’d like to jump straight into the code, you can find the completed source code here, or head down to the section titled Writing the Device Code.

By the time we’re through, we’ll see what it would look like to build a solution that reads vCard data (virtual business cards) from conference attendee badges, and uses those tag read events to trigger a Logic App which imports that information as Sales Lead records into both SugarCRM and Salesforce. Are you ready?

Let’s Start with a Story

Let’s imagine for a moment that you are working for a hot tech start-up and you’re showing off the full capabilities of your products at a trade show. You’re one of many booths on the exhibition floor hoping to have the opportunity to connect with customers so that you can tell them how their lives could be better with your products. What might those customer interactions look like?

You’re going to have attendees approaching the booth, not quite knowing what to expect – maybe hoping to grab some free swag. You might make eye contact, strike up a conversation, and mutually discover that they would benefit greatly from having your product in their lives. So you say “Hey can I scan your conference badge? Not only will I be able to get in touch with you later this week, but you’ll also be entered to win our super awesome contest!” At this point, everything looks really slick as you quickly tap their badge with a mobile device and their data is zapped into position.

But what really happens?

Well, in a lot of cases, after the conference, the data is removed from the scanning devices, loaded up into a CSV file, and returned to the exhibitors via email and/or through a dedicated purpose-built website. From there, it is up to mere mortal human beings to forget that data for a few days, before eventually downloading the attachment, navigating to the CRM website of choice (Dynamics, Salesforce, SugarCRM, etc…), and finally uploading the data where it actually belongs — just in time for it to be too late to make the sale. Your customer has gone with the competitors and will lead a far less fulfilling life.

There’s something a little barbaric in all of this. There’s a lot of ceremony going on over not a lot of bytes of data. While it looks really cool to the customer on the trade show floor, the experience doesn’t translate the second you’ve crammed your life back into two suitcases waiting for the moment that you can be home so that the trees can just stand still for a while.

There must be a better way.

A few years back, at the ALM Summit 3 conference in Redmond, WA, James Whittaker asserted that a new era of computing has begun – the Know and Do Era.

Before this era, we had the Store and Compute era, which drove how we interacted with data all the way up through the late 90’s. In the Store and Compute era, we focused our efforts as developers on building Applications that worked with Files. The early 2000’s through 2012 saw the dominance of the Search and Browse era, in which we built Web Pages, Web Services, and even Apps to extend the reach of that data, and make it more discoverable. The Know and Do era is one that is and will be focused on building Experiences. Experiences allow interactions with data that are painted on a canvas of time and space, agnostic of the device you have at your disposal. It’s an era where our devices become the agents of our will and use available signals to make things happen.

That sounds really cool, but seriously, how do I build it? Do I create an application? A web page? An app? Where’s the Visual Studio project template for “Experience”?

Well, here’s the thing about that – experiences don’t live in one place. They don’t deal with a small slice of data that lives in one place. They are integrations between smart things, with action brokers like Logic Apps, with data normalizers, and enrichers, and rules-based processing. They’re distributed applications that take smart devices with sensors and signals available to them, join them with data repositories (no matter how narrowly focused or curated) and make those devices seem magical.

So, what makes Logic Apps a good fit? Well, for one, they’re hosted in the cloud. I’m not going to write code for every device to be able to integrate with Salesforce, SugarCRM, Dropbox, SharePoint, Oracle databases, and a random file share back at my office – nor am I necessarily going to be able to anticipate the future integration needs of code already deployed. Instead of putting the burden on the device, I’m shifting those concerns to the cloud (you can argue whether or not that’s for better or worse amongst yourselves – in this scenario, I think it’s a good fit).

Second, with Logic Apps, I have an Azure Marketplace full of connectors and actions at my disposal, and if I don’t find something there that meets my needs, then I’m free to write my own.

So, what does the same story we started earlier look like with a Logic App in play? We’re going to throw away all of the ceremony around manually juggling CSV files and instead focus on getting those hot sales leads directly into our CRM system. As the badges are scanned, the device that reads them will trigger a Logic App. That Logic App will do the work of parsing out the information read from the tag, and it will then pass that data along to the CRM system using the out of the box connectors.

How Does the Data Get to the Logic App as it Becomes Available?

That’s an excellent question! Logic Apps start processing whenever they’re triggered. They support manual invocation, invocation through a webhook, and invocation through polling and push trigger API Apps (and yes, I have written about how to build a Polling Trigger API App). In this case, a push-style trigger would be a decent choice (not to discount something like an Event Hub or Service Bus in general, depending on application load).

A Push Trigger API App essentially sits waiting for a Logic App to indicate its interest in some event. The Logic App does this through an HTTP PUT request that includes both the URI and credentials of where to POST data, should it become available, as well as the configuration for the trigger (e.g., don’t send me the same tag read twice in a row, don’t send me tag reads if your GPS location is within the main company office, etc.). As the author of a Push Trigger API App, you get to decide the shape of that configuration data, and you define it simply as a .NET class. The Logic App designer will display this configuration data on the card for your trigger.
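To make the idea of trigger configuration as a plain .NET class concrete, here is a minimal sketch. The class name NfcTriggerConfiguration, its IgnoreRepeatedReads property, and the TriggerFilter helper are all my own assumptions for illustration; they are not taken from the sample code, and they only model the "don’t send me the same tag read twice in a row" example above.

```csharp
using System;

// Hypothetical trigger configuration; the class and property names are
// assumptions for illustration, not the ones used in the original sample.
public class NfcTriggerConfiguration
{
    // When true, a tag read identical to the one just before it is skipped.
    public bool IgnoreRepeatedReads { get; set; }
}

public static class TriggerFilter
{
    // Decides whether a tag read should call the Logic App back,
    // given the configuration that the Logic App registered.
    public static bool ShouldFire(NfcTriggerConfiguration config, bool isRepeatOfPreviousRead)
    {
        if (config == null) throw new ArgumentNullException("config");
        return !(config.IgnoreRepeatedReads && isRepeatOfPreviousRead);
    }
}
```

Keeping the decision in one small method means the device (or whatever calls the Logic App back) can apply each Logic App’s own configuration without knowing how it was registered.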

The Push Trigger API App will then take this information and store it somewhere, so that when data is available, it (or some other app) can use that URL and credentials to call the Logic App back. In the case of our NFC conference badge scenario, it’s going to be a device that will trigger the Logic App.
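As a rough sketch of the "store it somewhere" step, the following keeps registrations in memory, keyed by a trigger id. Everything here is hypothetical: CallbackRegistration, InMemoryCallbackStore, and their members are illustrative names, and a dictionary stands in for whatever real backing store you choose. Credentials are left out entirely to keep the sketch small.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record of one Logic App's registration (names are assumptions).
public class CallbackRegistration
{
    public string TriggerId { get; set; }
    public Uri CallbackUri { get; set; }
}

// In-memory stand-in for a real backing store; for illustration only.
public class InMemoryCallbackStore
{
    private readonly ConcurrentDictionary<string, CallbackRegistration> registrations =
        new ConcurrentDictionary<string, CallbackRegistration>();

    // Adds the registration, or replaces an earlier one with the same trigger id,
    // so that a Logic App re-registering does not create duplicates.
    public void Save(CallbackRegistration registration)
    {
        if (registration == null) throw new ArgumentNullException("registration");
        registrations[registration.TriggerId] = registration;
    }

    // Snapshot of every callback to invoke when new data becomes available.
    public IReadOnlyCollection<CallbackRegistration> GetAll()
    {
        return registrations.Values.ToList();
    }
}
```

Replacing on a matching trigger id is a deliberate choice in this sketch: if the same Logic App registers more than once, the store should still hold a single, current callback for it.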

What about the storage mechanism? What shall we use for that? Well in the spirit of using as much of Azure App Service in a single demo as possible, I decided to go with an Azure Mobile App as my backend for both the Push Trigger API App and the Universal Windows app that will be running across devices.

Writing the Device Code

Let’s take a look at what we’re going to start with. We’re going to start with a set of projects that looks like this:

From top to bottom we have an API App project titled NfcPushTrigger, a Universal Windows 10 app titled NfcClientApp, and a shared portable class library called DataModels that will contain classes representing the shape of data in our Azure Mobile App, and the configuration / output of the trigger (currently empty).

Let’s crack open that device app and build out a really compelling UI:

Well, that’s enough XAML for me, let’s go write some C# instead. I’m going to switch over to the code, while you gaze upon this work of art.

Okay. Over in the code, things are going well. I have all the standard default stuff and I’m ready to start interacting with the NFC reader attached to my laptop. The class that I use to get access to the NFC Reader on my laptop – or really any device that supports it – is the ProximityDevice class that lives in the Windows.Networking.Proximity namespace. That class has a method called GetDefault that I can use to get an instance of the default NFC Reader.

From there, I can subscribe for messages (tag reads) by calling SubscribeForMessage passing it a parameter indicating the type of message and a parameter that serves as a callback for whenever a message (tag) arrives. The type of messages we’re dealing with are NDEF messages where the first record of the message is a vCard record containing conference attendees’ Given (First) name, Family (last) name, and Email Address. In this case, that means we want to use NDEF as the type of message.

So we have our callback readied (we’ll eventually be doing some async work, hence the async lambda), but what are we going to do when we get a tag read?

The raw tag data is available as an IBuffer member named Data on the second callback parameter. I’m going to write some code to convert that in two ways – (1) human readable ASCII text for my own benefit, so that I can see the name on the badge that was scanned while debugging, and (2) a base64 encoded string that can be passed cleanly to a Logic App, and passed around that Logic App further. While I’m at it, I’m also going to write some code to determine if we’re seeing the same tag twice in a row.
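A minimal sketch of those conversions follows. It works on a plain byte array so that it does not depend on the Windows Runtime; in a Universal Windows app, I’m assuming you would first copy the IBuffer into a byte array (for example with the ToArray() extension method from System.Runtime.InteropServices.WindowsRuntime). The TagReadFormatter class and its member names are hypothetical, not the ones from the sample.

```csharp
using System;
using System.Linq;
using System.Text;

// Hypothetical helper; the names are illustrative and not from the original sample.
public sealed class TagReadFormatter
{
    private byte[] lastTag;

    // Human-readable view for debugging; bytes outside ASCII show up as '?'.
    public static string ToAscii(byte[] tagData)
    {
        return Encoding.ASCII.GetString(tagData);
    }

    // Text-safe representation that can be passed to, and around, a Logic App.
    public static string ToBase64(byte[] tagData)
    {
        return Convert.ToBase64String(tagData);
    }

    // Returns true when this read has the same bytes as the previous read,
    // then remembers the current read for the next comparison.
    public bool IsRepeatOfPreviousRead(byte[] tagData)
    {
        bool isRepeat = lastTag != null && lastTag.SequenceEqual(tagData);
        lastTag = (byte[])tagData.Clone();
        return isRepeat;
    }
}
```

Comparing raw bytes rather than the decoded text keeps the repeat check independent of how the vCard happens to be encoded.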

So, let’s set a breakpoint, deploy, and launch this application so that we can see what we have so far when I scan a tag. After launching it and scanning my test conference badge, we hit the breakpoint.

Looking good so far! However, there’s not yet a way for a Logic App to declare its interest in this data collected by the Universal Windows app. As a result, it is time to switch over to the NfcPushTrigger API App, so that we can enable Logic Apps to register their interest in the data, and provide callback details for use in this client app.

Building the Push Trigger API App

In the Push Trigger API App, I’m going to start by adding a few package references. I’m going to add a reference to the latest pre-release of the Mobile App SDK, so that we will have access to the MobileServiceClient class for interaction with our data store. I’m also going to add a package reference to both T-Rex and the QuickLearn Push Trigger Tools.

T-Rex provides us a painless way to decorate properties, methods, and parameters with attributes that help our API Apps look pretty within the Logic App designer without resorting to manually writing a bunch of Swashbuckle filters. The QuickLearn Push Trigger Tools on the server side really only provides us with a single interface, ICallbackStore, which you can see here. Using that, we can write our push trigger code against the interface to make sure we’re doing all the necessary things within the callback registration and then simply implement that interface for our callback storage mechanism.

Once I have those package references added, I’m going to start clearing out the ValuesController, so that it doesn’t contain code that I don’t need. Then, I’m going to write comments to remind myself what it is I’m trying to do:

Let’s start by renaming the ValuesController class to something more meaningful, like CallbacksController. Also, since we have attribute routing, I don’t need to name my method “Put” when its purpose is to register a callback. Let’s adjust that a little bit.

Looks good so far. However, in the Logic App designer, this will show up as an action titled something awful like “CallbacksController_RegisterCallback” – and who will even know that has anything to do with starting the Logic App when an NFC tag is read on a device? We’ll want to use some of those attributes in the T-Rex Metadata Library to address that (also a good opportunity to add the c.ReleaseTheTRex() statement back into the SwaggerConfig.cs file).

You might be looking at that code, and thinking to yourself, “wait a minute, what’s a PushTriggerOutput?” That’s a fair question, we haven’t seen that class yet. It’s one that we actually still need to define. This just needs to be some class that represents the shape of the output that our trigger returns (or rather the shape of the input into the Logic App). In our case, it’s going to be a string that contains base64 encoded NFC tag read data. So, something like this might suffice (T-Rex metadata added for clarity).
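As a minimal sketch of such a class, assuming a single property that I’ve named TagData (the property name is my assumption), it could be as small as this. The T-Rex attributes are left off here so that the sketch has no package dependency.

```csharp
// Shape of the data that the trigger hands to the Logic App.
// The property name TagData is an assumption made for illustration.
public class PushTriggerOutput
{
    // Raw NFC tag bytes, base64 encoded so they travel cleanly through the Logic App.
    public string TagData { get; set; }
}
```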

Let’s go back to the RegisterCallback method now. This method is ultimately going to be receiving information about a URI to call back to the Logic App (with embedded credentials). Right now, that’s not represented in the parameters of the method.