by Dan Toomey | Jun 23, 2017 | BizTalk Community Blogs via Syndication

(This post was originally published on Mexia’s blog on 19th June 2017)

Microsoft recently announced that Azure BizTalk Services (MABS) is officially being retired. This was no great surprise, as those who actually used this service and its VETER pipelines to build integrations were well aware that the tooling was cumbersome, the DevOps story was terrible, scalability was severely limited, and the management capabilities left much to be desired. Logic Apps and the Enterprise Integration Pack have already far surpassed the capabilities of MABS for cloud-based integration and B2B (EDI) scenarios. However, the one really useful feature of MABS was the free Hybrid Connections capability – free because this feature never made it out of preview mode.

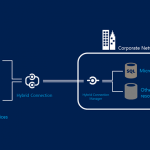

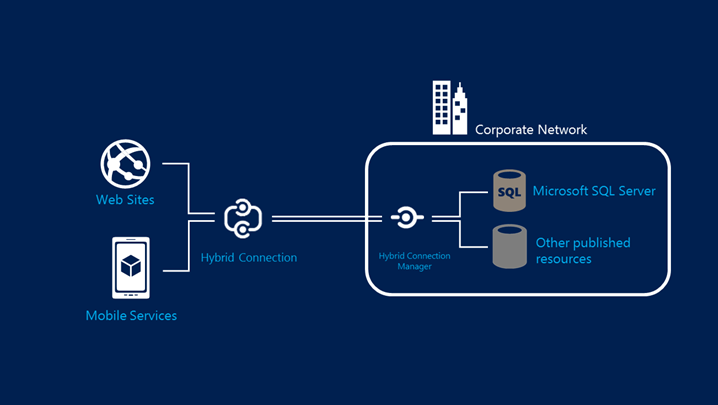

Hybrid Connections allowed you to easily connect your Web App or Mobile Service to an on-premises resource with a purely codeless solution, traversing NATs, routers, firewalls, etc. without making any changes to your corporate network. In fact, you could literally “lift & shift” your existing on-prem website to Azure and not even have to alter the connection string to your database. Moreover, it worked at the transport layer, so there was no dependency on WCF or .NET. I was so intrigued by the capabilities of this service that I authored a Pluralsight course on it, as well as creating a webcast and writing several blog posts.

With the obvious signs over the past year or so that MABS was on its way out, we were left wondering what would happen to Hybrid Connections. Other non-network-related technologies like Service Bus Relay and the newer On-Premises Data Gateway certainly offer some viable alternatives, but nothing that permitted the same flexibility as Hybrid Connections. Fortunately, late last year we got our answer – the new Azure Relay.

A New Offering

Azure Relay became generally available on 27 March 2017, less than five months after the preview was announced. The service actually comprises two capabilities: the WCF Relay (the new name for the existing Service Bus Relay) and the new version of Hybrid Connections. The new Hybrid Connections does everything the previous version did, and much more:

- It is no longer hosted on a sunsetted technology (it now lives in Azure Service Bus)

- A published API means that the capability is no longer confined to Azure Web Apps and Mobile Services

- Reliance on WebSockets means it is truly a cross-platform solution
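Because the new Hybrid Connections runs over plain WebSockets, any platform with a WebSocket client can take part. As a rough illustration, the Python sketch below builds the two URLs involved (one for the on-premises listener, one for the sender), assuming the `wss://{namespace}.servicebus.windows.net/$hc/{path}` layout and the `sb-hc-action` / `sb-hc-token` query parameters described in Microsoft's Hybrid Connections protocol documentation. The helper name, the namespace `contoso-relay` and the path `devdan10` are hypothetical.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def hybrid_connection_url(namespace: str, path: str, action: str,
                          token: Optional[str] = None) -> str:
    """Build the WebSocket URL a listener ('listen') or sender ('connect') opens.

    Assumes the URL layout from the Hybrid Connections protocol docs;
    hybrid_connection_url itself is a hypothetical helper, not an SDK call.
    """
    if action not in ("listen", "connect"):
        raise ValueError("action must be 'listen' or 'connect'")
    query = {"sb-hc-action": action}
    if token is not None:
        # Assumed: the SAS token travels as the sb-hc-token query parameter.
        query["sb-hc-token"] = token
    host = f"{namespace}.servicebus.windows.net"
    return f"wss://{host}/$hc/{quote(path)}?{urlencode(query)}"


# The on-premises listener and the cloud-side sender meet at the same path.
print(hybrid_connection_url("contoso-relay", "devdan10", "listen"))
print(hybrid_connection_url("contoso-relay", "devdan10", "connect"))
```

Both sides only make outbound connections to the relay, which is why no inbound firewall changes are needed on the corporate network.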

In my Pluralsight course and in my previous webcast, I demonstrated how easy it was to enable a single Azure-hosted web site to talk to two separate on-premises resources (a web service and a SQL Server database). That capability exists in the new Hybrid Connections and can be set up in exactly the same way: a convenient downloadable manager agent can be installed in seconds, which completes the listener setup and allows you to flow messages into your network. I was easily able to recreate the demo scenario from my webcast with the new version.

But even more compelling was the experience of using the API to build a more flexible solution, for example connecting an Azure-hosted VM to an on-premises resource. Here, the supplied samples on GitHub (conveniently for both .NET and Node) really prove the extensive capabilities of this service.

Starting Simple

The simple sample introduces you to the Hybrid Connections API by building a simple relay that bi-directionally exchanges blocks of text over a connection. I had this up & running in minutes just by deploying the server app on my local Hyper-V hosted VM and the client app on an Azure-hosted VM. There were a few minor glitches to get over, for example how to correctly format the SQL Server connection string to talk to the local SQLEXPRESS instance I had running. This post helped me realise that I needed to explicitly include the port number in the connection string, as well as ensure that the TCP/IP settings for SQLEXPRESS used a static port (which it does not by default).

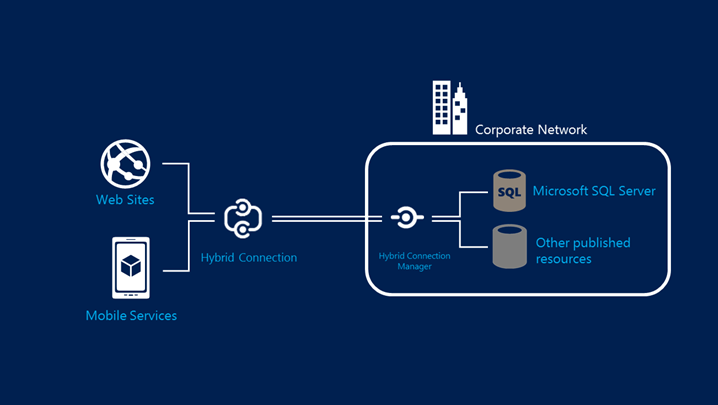

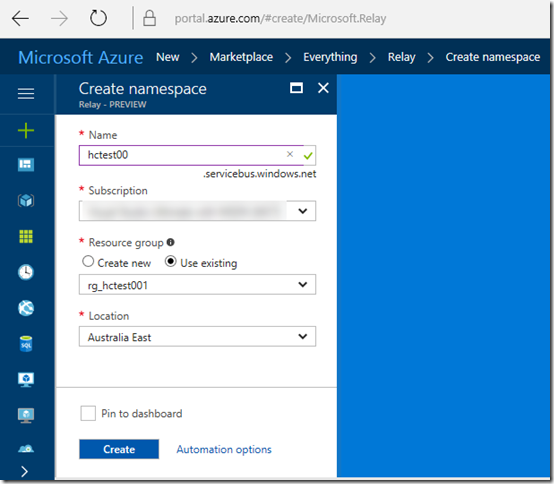

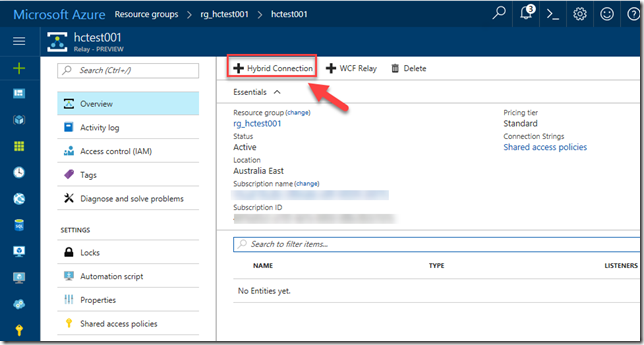

Of course, you first need to create the Hybrid Connection in Azure, which you do in the portal by creating an Azure Relay:

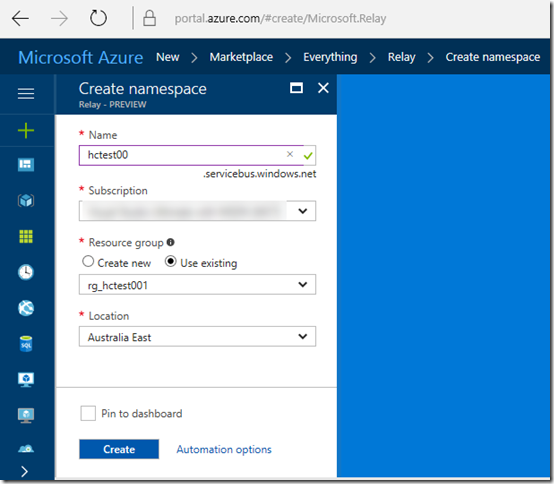

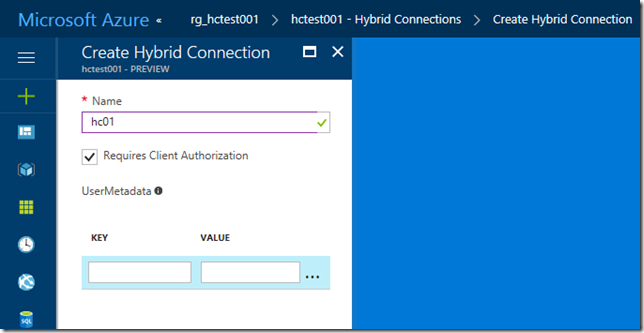

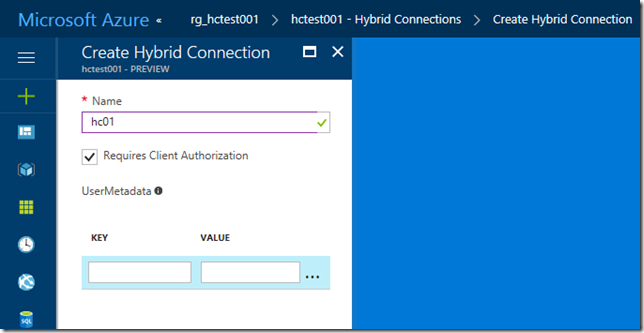

This sets up the Service Bus namespace that is associated with the relay artefacts. Then you can create the Hybrid Connection:

By going into the Shared Access Policies menu item for the Relay, you can copy the Primary Key for the “RootManageSharedAccessKey” – a necessary item for configuring the applications that will use the relay. (Of course you can and should configure your own policies for Manage, Send and Listen – but I didn’t for this example).
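The primary key copied from the policy is what the applications use to sign Shared Access Signature (SAS) tokens for the relay. The Python sketch below follows the HMAC-SHA256 token format Microsoft documents for Service Bus and Relay (sign the URL-encoded resource URI plus an expiry, then append the key name). The resource URI and key shown are placeholders, and in practice the key should come from configuration rather than source code.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, key_name: str, key: str,
                       ttl_seconds: int = 3600,
                       now: Optional[float] = None) -> str:
    """Return a token in the 'SharedAccessSignature sr=...&sig=...&se=...&skn=...' format."""
    issued = time.time() if now is None else now
    expiry = str(int(issued + ttl_seconds))
    # The signed string is the URL-encoded resource URI, a newline, and the expiry.
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={key_name}")


# Placeholder values only: substitute your own namespace, path and key.
print(generate_sas_token("https://contoso-relay.servicebus.windows.net/devdan10",
                         "RootManageSharedAccessKey", "<primary-key>"))
```

Using a dedicated Send or Listen policy instead of RootManageSharedAccessKey only changes the `key_name` and `key` arguments.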

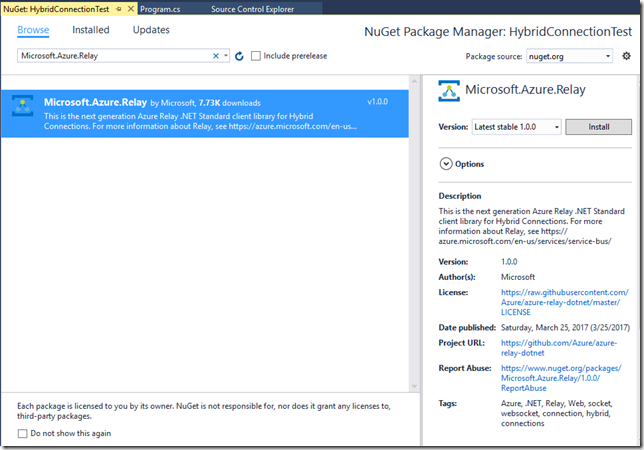

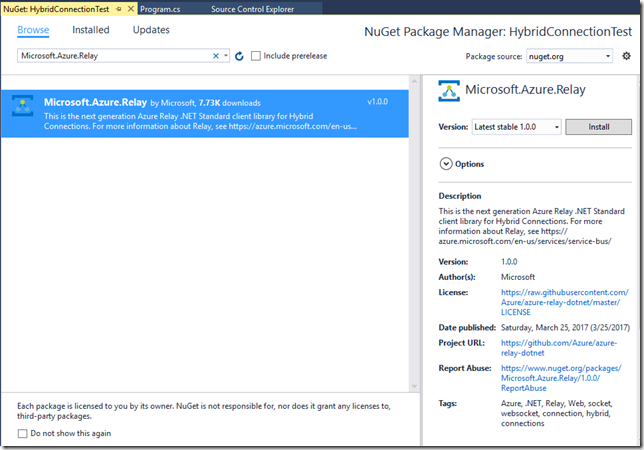

When you open any of the solutions from the GitHub samples, you will need to update the Microsoft.Azure.Relay NuGet package before compiling the solution:

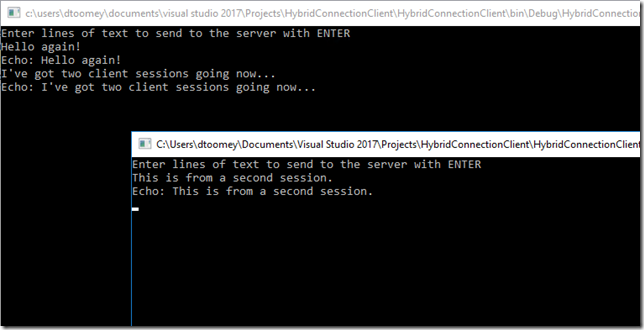

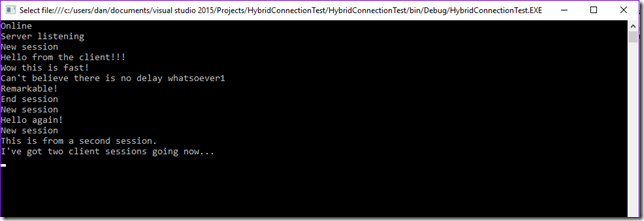

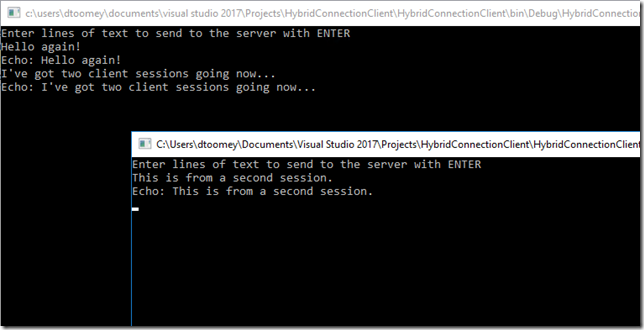

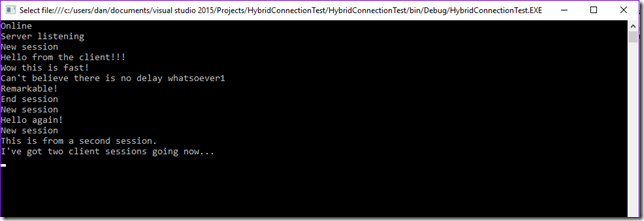

Then it’s a matter of copying the solution to your servers, running the Client app on the Azure-hosted machine and the Server app on the on-prem machine. I was impressed by the speed of the connection. Messages typed into the client appeared instantly in the server console window with absolutely no perceptible delay! Given that under the covers the relay runs through Azure Service Bus, I would have expected and happily tolerated a slight latency here – but there was none! I even opened multiple instances of the client to see how that would work:

As expected, all of the messages were visible in the server-hosted console, along with logging of sessions opening and closing:

Although the application in this format isn’t particularly useful for any production scenarios I can think of, it certainly proves that hybrid connectivity is no longer limited to Web Apps.
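The sample itself uses the Relay SDK, but the behaviour observed above (several clients, one server, sessions opening and closing in the log) can be mimicked on a single machine. The asyncio sketch below is only a stand-in: plain localhost TCP takes the place of the relay, and `run_sessions` is a hypothetical helper.

```python
import asyncio
import itertools
from typing import List, Tuple


async def run_sessions(client_scripts: List[List[str]],
                       timeout: float = 5.0) -> Tuple[List[List[str]], List[str]]:
    """Serve several concurrent clients on localhost; return (replies, server log)."""
    if not client_scripts:
        return [], []
    log: List[str] = []
    session_ids = itertools.count(1)
    remaining = len(client_scripts)
    all_closed = asyncio.Event()

    async def handle_session(reader, writer):
        nonlocal remaining
        session = next(session_ids)
        log.append(f"session {session} opened")
        try:
            # Log each line of text and echo it back (bi-directional exchange).
            while line := await reader.readline():
                log.append(f"session {session}: {line.decode().rstrip()}")
                writer.write(line)
                await writer.drain()
        finally:
            log.append(f"session {session} closed")
            writer.close()
            remaining -= 1
            if remaining == 0:
                all_closed.set()

    server = await asyncio.start_server(handle_session, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]

    async def client(lines: List[str]) -> List[str]:
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        replies = []
        for text in lines:
            writer.write(f"{text}\n".encode())
            await writer.drain()
            replies.append((await reader.readline()).decode().rstrip())
        writer.close()
        await writer.wait_closed()
        return replies

    replies = await asyncio.gather(*(client(lines) for lines in client_scripts))
    # Wait until every server-side session has logged its closure.
    await asyncio.wait_for(all_closed.wait(), timeout)
    server.close()
    await server.wait_closed()
    return list(replies), log


if __name__ == "__main__":
    _, session_log = asyncio.run(run_sessions([["hello"], ["hi", "bye"]]))
    print("\n".join(session_log))
```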

Port Bridging

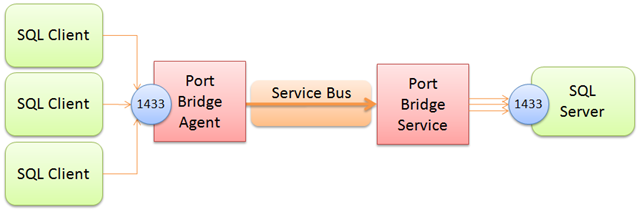

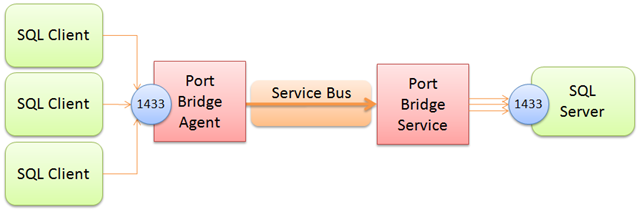

The next step was to try out the Port Bridge sample, which uses a Hybrid Connection to set up multiplexed socket tunnels that can bridge TCP and Named Pipe connections across the relay. This is an amazingly flexible demonstration: once you deploy the code, you can add SSH-like connections through configuration only, including imposing firewall-like restrictions – all without any involvement of your physical network configuration.
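Conceptually, each tunnel pairs a listening port on one side with a target host and port on the other, and refuses ports that are not explicitly allowed. The Python sketch below shows only that forwarding idea on one machine, with no relay and no multiplexing; `start_forwarder` and its `allowed_ports` parameter are hypothetical names, not part of the Port Bridge sample.

```python
import asyncio
from typing import AbstractSet


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then close the destination."""
    try:
        while data := await reader.read(4096):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the other side went away; just tear down
    finally:
        writer.close()


async def start_forwarder(listen_port: int, target_host: str, target_port: int,
                          allowed_ports: AbstractSet[int]) -> asyncio.AbstractServer:
    """Forward 127.0.0.1:listen_port to target_host:target_port (hypothetical helper)."""
    # Firewall-like rule: only explicitly allowed target ports may be bridged.
    if target_port not in allowed_ports:
        raise PermissionError(f"port {target_port} is not allowed")

    async def handle(client_reader, client_writer):
        try:
            target_reader, target_writer = await asyncio.open_connection(
                target_host, target_port)
        except OSError:
            client_writer.close()
            return
        # Pump both directions concurrently until both have finished.
        await asyncio.gather(_pipe(client_reader, target_writer),
                             _pipe(target_reader, client_writer))

    return await asyncio.start_server(handle, "127.0.0.1", listen_port)
```

For example, `await start_forwarder(1433, "devdan10", 1433, {80, 1433})` would expose a SQL Server port locally, while a request for port 3389 would be refused before any listener is opened.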

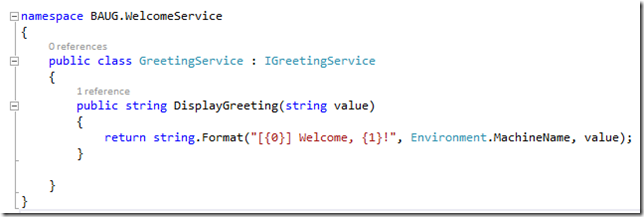

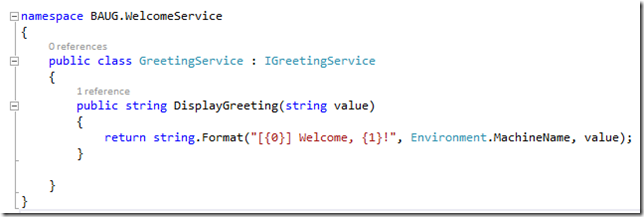

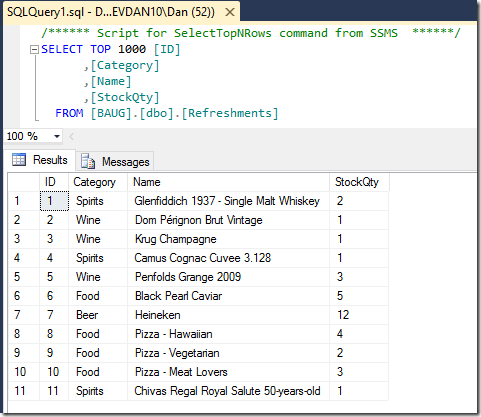

Again, I was keen to prove that this would allow connectivity across multiple nodes and ports, so I resorted again to my previous demo example and set up connections to both a locally hosted WCF service (on port 80) and my SQL Server database (on port 1433). The WCF service was really nothing more than a simple echo function that also outputs the name of the local server (the same one used in my Guestbook demo in the webcast):

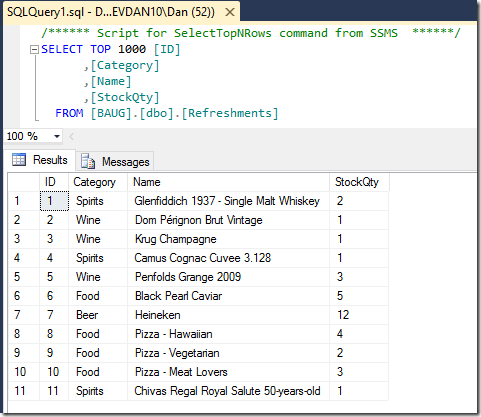
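For readers without a WCF environment to hand, a rough Python stand-in for such an echo-plus-hostname service might look like the sketch below. It is not the original WCF service; the `message` query parameter and the response format are assumptions made for illustration.

```python
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the WCF echo service: echoes ?message=... plus the host name."""

    def do_GET(self) -> None:
        query = parse_qs(urlparse(self.path).query)
        message = query.get("message", [""])[0]
        # Including the host name shows which machine actually answered.
        body = f"{message} (from {socket.gethostname()})".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the console quiet
```

It could be started with `HTTPServer(("0.0.0.0", 8080), EchoHandler).serve_forever()`; binding to port 80 as in the post typically needs elevated rights. When called through a bridge, the host name in the reply should be the on-premises machine's.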

And for the database connectivity, all I needed to do was prove that a simple query would return the appropriate number of rows in a table:

I created two console test apps:

- One that called the WCF service

- One that executed a scalar query of the number of rows in the Refreshments table above
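The second test app boils down to a single scalar query. The sketch below shows the idea using Python's built-in `sqlite3` module as a stand-in for SQL Server so that it runs anywhere; the single `Name` column and the sample rows are made up.

```python
import sqlite3


def count_rows(conn: sqlite3.Connection, table: str) -> int:
    """Return SELECT COUNT(*) for a table (a scalar query, like ExecuteScalar in ADO.NET)."""
    # Table names cannot be bound as parameters, so accept only plain identifiers.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    (count,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    return count


# In-memory stand-in for the on-premises database (rows are made up).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Refreshments (Name TEXT)")
conn.executemany("INSERT INTO Refreshments (Name) VALUES (?)",
                 [("Coffee",), ("Tea",), ("Juice",)])
print(count_rows(conn, "Refreshments"))  # 3
```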

After testing both console apps locally on the Hyper-V VM, I then deployed them to my Azure VM, along with the Port Bridge solution (which was also deployed locally). The next step was to create the Hybrid Connection in Azure, which I did by adding it to the existing Relay. The trick here was to ensure that the name of the Hybrid Connection (normally anything you like) was the same as the target host name (in this case “devdan10”, the name of the VM hosted in my local Hyper-V).

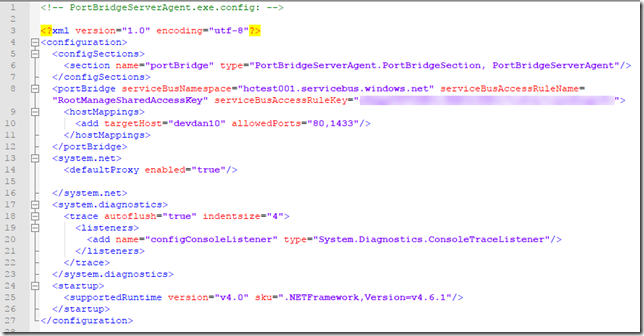

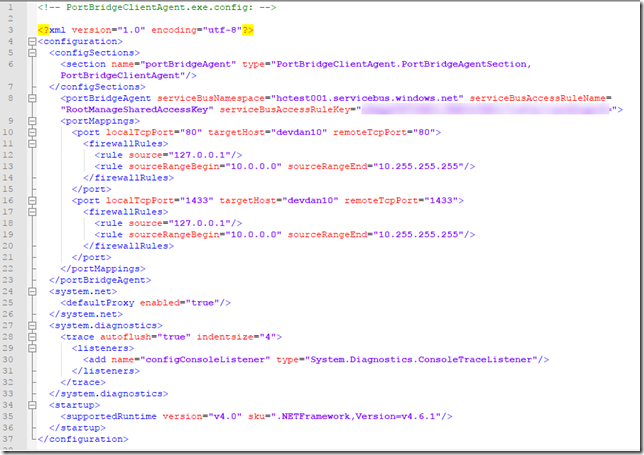

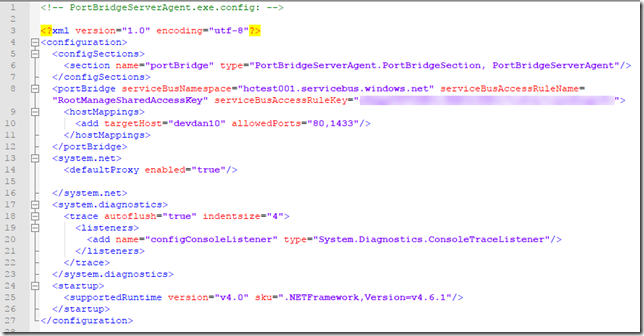

Getting the configuration right took me a few goes, but I eventually got there. Here is the configuration for the on-prem Server Agent console:

Notice on Line 10 that I’ve referenced the name of my target host (“devdan10”) and listed the port numbers which I will allow to be opened to the relay.

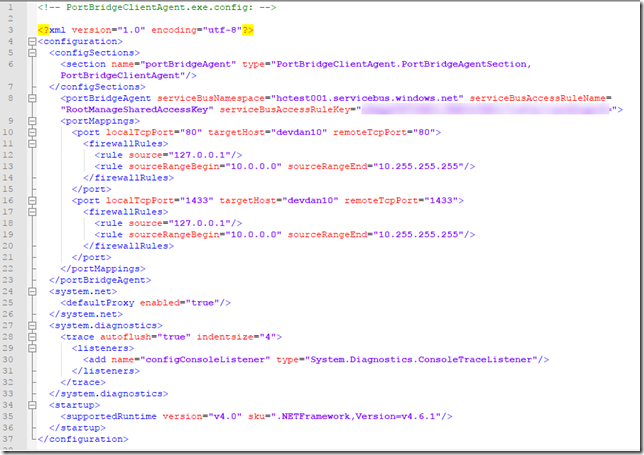

And here is the configuration for the Client Agent service running in Azure:

The local and remote ports happen to be the same here (lines 10 & 16), but they could be different – allowing you to map different ports between the remote client and the target local machine. The firewall rules in this case ensure that the requests come from the client machine – but these can also be adjusted to be as lenient or strict as desired.
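Since the actual agent configuration files appear only in screenshots, here is a hypothetical Python model of the rules they express: the Hybrid Connection is named after the target host, the server agent only opens listed ports, and the client agent maps a local port to a remote one and only accepts connections from permitted source addresses. All class, field and function names are invented for illustration and do not correspond to the Port Bridge configuration schema.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class ServerAgentConfig:
    """Hypothetical model of the on-prem agent settings."""
    target_host: str               # e.g. "devdan10"
    allowed_ports: FrozenSet[int]  # ports the agent will open to the relay


@dataclass(frozen=True)
class ClientPortMapping:
    """Hypothetical model of one mapping in the Azure-hosted client agent."""
    local_port: int                   # port clients connect to on the Azure VM
    remote_port: int                  # port on the target host; may differ from local_port
    allowed_sources: Tuple[str, ...]  # CIDR ranges allowed to use this mapping


def check_names(hybrid_connection_name: str, server: ServerAgentConfig) -> None:
    # The post notes the Hybrid Connection must be named after the target host.
    if hybrid_connection_name != server.target_host:
        raise ValueError("Hybrid Connection name must match the target host name")


def is_allowed(server: ServerAgentConfig, mapping: ClientPortMapping,
               source_ip: str) -> bool:
    """Apply both agents' rules to one incoming connection attempt."""
    if mapping.remote_port not in server.allowed_ports:
        return False
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr, strict=False)
               for cidr in mapping.allowed_sources)
```

Keeping the port allow-list on the on-premises side means a misconfigured client mapping still cannot reach ports the server agent never opened.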

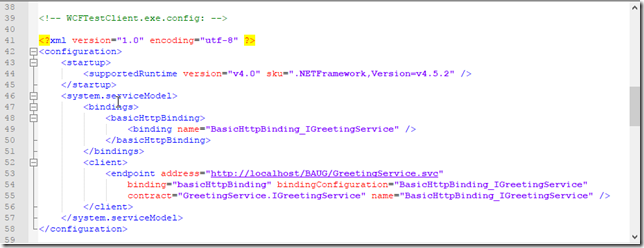

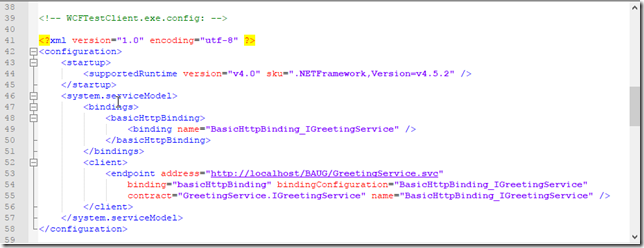

There were no changes at all required for the WCF test client; in fact, I was able to use “localhost” as the hostname in the client endpoint definition (line 53)!

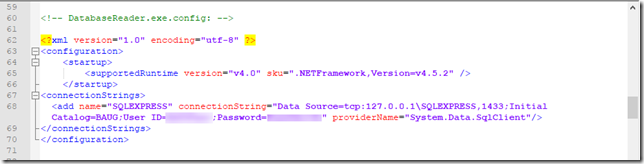

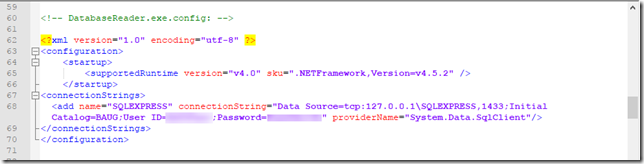

One thing I did need to do was ensure that the connection string in the SQL test client used the TCP protocol and (again) referenced the local machine rather than the target host name (presumably because the Port Bridge client service maps everything from the local machine on that port to the target remote server):

Note that you do need to use SQL Authentication rather than Windows Authentication, as there is no support for Active Directory authentication here.
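Putting those requirements together, a connection string for the bridged database might be assembled as in the sketch below, written in ADO.NET keyword style. The database name, user and password are placeholders; the `tcp:` prefix forces TCP/IP, and the explicit port means the client connects directly instead of asking the SQL Server Browser service for the port.

```python
def bridged_sql_connection_string(database: str, user: str, password: str,
                                  port: int = 1433, host: str = "localhost") -> str:
    """Build an ADO.NET-style connection string for the port-bridged database."""
    settings = {
        # "tcp:" forces TCP/IP; an explicit port avoids the SQL Server Browser lookup.
        "Data Source": f"tcp:{host},{port}",
        "Initial Catalog": database,
        # SQL Authentication, since Windows/AD authentication is not available here.
        "User ID": user,
        "Password": password,
        "Integrated Security": "False",
    }
    for key, value in settings.items():
        if ";" in value:
            # Connection strings can quote such values, but this sketch simply rejects them.
            raise ValueError(f"{key} must not contain ';'")
    return ";".join(f"{key}={value}" for key, value in settings.items()) + ";"


print(bridged_sql_connection_string("GuestbookDb", "demo_user", "<password>"))
```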

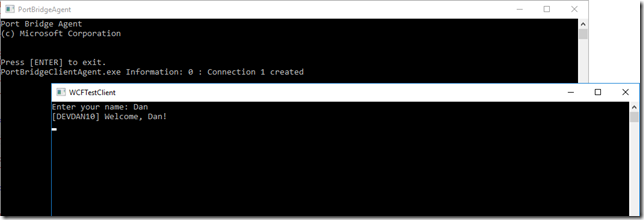

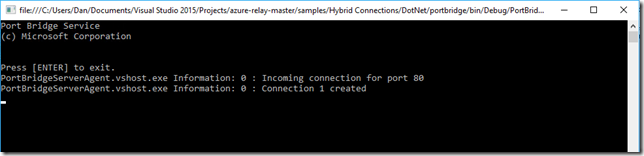

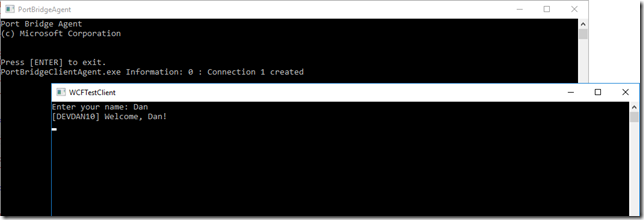

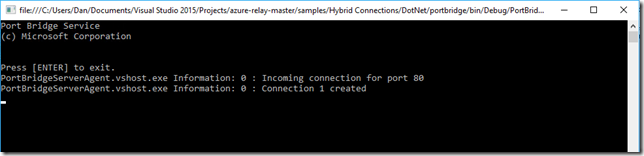

With that in place I was able to run tests with both clients to prove connectivity over the ports I had configured for forwarding. The first test screenshot shows the WCF client test app successfully invoking the service remotely. The window in the background is the console for the Port Bridge Client Agent that runs on the Azure machine, forwarding requests to the remote on-prem machine by way of the Hybrid Connection relay:

The Port Bridge Service Agent console window runs on the on-prem machine and logs the connections:

By the way, it is worth mentioning that both the Port Bridge Service Agent and the Client Agent can be installed and run as a Windows service.

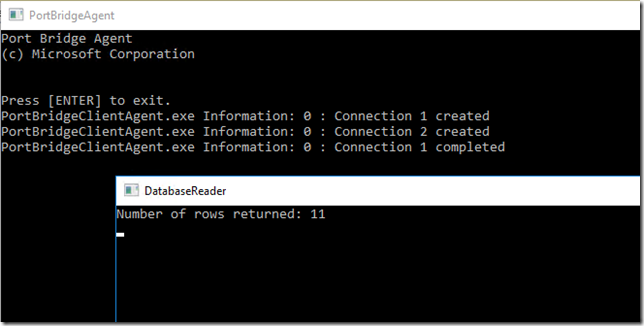

The second test is the same thing but with the SQL Server database test client, correctly retrieving the row count from the on-prem machine:

How good is that?? You now have the capability to open a port-based bridge between cloud and on-premises machines just by adding configuration to the client and server agent services, meaning you can connect your IaaS VMs and cloud services [almost] as easily as you connect your Web Apps and Mobile Apps.

In a week’s time I will be speaking about Hybrid Connectivity at the INTEGRATE 2017 conference in London. Unfortunately there won’t be time for demos, but this blog post might make for a handy follow-up reference.

by Dan Toomey | Apr 7, 2017 | BizTalk Community Blogs via Syndication

(This post was originally published on Mexia’s blog on 07 April 2017)

Last weekend I had the great privilege of attending my first Microsoft MVP event – the MVP Community Connection held in Sydney. This was an invitation to all Australian MVPs to come together over two days to network, receive central communication from the program managers, and learn some best practices in how we can better serve the community.

First I should explain what an MVP is. The Microsoft Most Valuable Professional award is presented to recognised experts in the technical community who regularly and voluntarily share their passion and knowledge with others, including Microsoft itself. The award celebrates an individual’s deep commitment to serving the community and promoting awareness of Microsoft’s great products through a variety of channels – be it running user groups, speaking at events, blogging, mentoring, answering questions on forums, creating and sharing free software, etc. Aside from recognition, the award includes a number of benefits and privileges, including direct access to the Microsoft product teams via email distribution lists and Yammer, invitations to early previews and Product Group Interaction (PGI) meetings, exam vouchers, free software and subscriptions, and more. In return, Microsoft expects us to use these benefits and privileges to facilitate our continued involvement in the community and also to provide valuable feedback to Microsoft to help them design and build better products. The award lasts for one year, after which it may be renewed if the candidate has continued to demonstrate exemplary commitment and service.

The award is always granted in a particular Microsoft technological area or product for which the candidate has demonstrated expertise. My award was presented in February this year in the category of Azure – one of the broadest areas which happens to include enterprise integration. This was presumably in recognition of my efforts in running two user groups, speaking at multiple events (including Ignite Australia), writing posts on both Mexia’s and my personal blog, and promoting Microsoft events and announcements on social media. Yet, I always feel that I should be doing much more to serve an integration community that is always so willing to share and so appreciative of the efforts of those who do share!

This latest MVP event in Sydney was designed to allow us to come together and share ideas about how to improve our community leadership. It began with some fun networking activities on Friday afternoon & evening, followed by a more formal schedule of sessions on Saturday. It was a nice touch to arrive at the venue on Saturday morning and be presented with a personalised appreciation card complete with a custom Lego figurine. It quickly became evident that each Lego figurine was carefully selected to match the physical characteristics of the recipient in terms of hair colour, gender, etc!

After a warm welcome by Community Program Manager Lana Montgomery and a program update by Chris Olson from the DX team, there was a keynote about Digital Transformation by Technical Evangelist Vaughan Knight. This was followed by a series of enlightening “Skills Sessions” presented by prominent members of the MVP community including Troy Hunt, Orin Thomas, Marc Kean, Robert Crane, Leon Tribe and David Gardiner, spanning topics from developing your personal brand to blogging like a pro to recharging your user group. These were followed by some round table discussions around various topics relating to community involvement such as growing technical communities, embracing emerging technologies, supporting entrepreneurs and start-ups, and assisting young student technologists. The day wrapped up with some closing remarks by Lana and then a trip to the Helm Bar in Darling Harbour for some refreshing drinks before heading to the airport.

The event was not only well organised, informative and enjoyable, but I also found it truly inspiring to meet up with so many other enthusiastic technologists! While you might expect it to be intimidating to be a newbie in the company of so many extraordinarily brilliant and accomplished individuals, I actually found everyone to be extremely friendly and welcoming. I made many new friends that day as well as catching up with some old friends like Bill Chesnut, Mick Badran, Shane Hoey and Martin Abbott. Kudos to Lana for her exceptional organisational skills and her contagious positive energy! Really looking forward to more occasions like these, as well as the continuing support from Microsoft which helps us MVPs better support the wider community.

Photos courtesy of Lana Montgomery

by Dan Toomey | Feb 24, 2017 | BizTalk Community Blogs via Syndication

Last week I had the opportunity to attend Microsoft Ignite on the Gold Coast, Australia. Even better – I had a free ticket on account of agreeing to serve as a Technical Learning Guide (TLG) in the hands-on labs. This opportunity is only open to Microsoft Certified Trainers (MCTs) and competition was evidently keen this year – so I am glad to have been chosen. Catching up with fellow MCTs like Mark Daunt and meeting up with new ones such as Michael Schmitz was a real pleasure. Of course, the downside was that I missed quite a few breakout sessions during the times I was rostered. Nevertheless, I still got to see some of the most important sessions to me, particularly those that centred around Azure and integration technologies. Please have a read of my summary of these on my employer’s blog.

By far this was my best Australian Ignite/Tech-Ed event experience for many reasons, including:

- The Pro-Integration team from Redmond came all the way out to Australia to show everyone what the product group is doing with Logic Apps, Flow, Service Bus, and BizTalk Server

- I was chosen to present an Instructor-Led Lab in Service Fabric – my first ever speaking engagement at Ignite

- I had the rare opportunity to catch up with some fellow MVPs from Perth and Europe.

It was truly phenomenal to see enterprise integration properly represented at an Australian conference, as it is typically overlooked at these events. In addition to at least four breakout sessions on hybrid integration, Scott Guthrie actually performed a live demo of Logic Apps in his keynote! This was a good shout-out to the product team that has worked so hard to bring this technology up to the usability level it now enjoys. I’m glad that Jim Harrer, Jeff Holland, Jon Fancey and Kevin Lam were there to see it!

Teaching the lab in Service Fabric was a thrilling experience, but not without some challenges. The lab itself was broken and required a re-write of the second half, which I had pre-prepared and uploaded to OneDrive here so the students could progress. The main lab content is only available to Ignite attendees; however, if you want to have a go at a similar lab you can try these ones available from Microsoft:

Despite the frustration that some attendees expressed about the lab errata and the poor performance of the environment, I was pleased that all the submitted feedback relating to the speaker was very positive!

Finally, perhaps the best part of events like these is the ability to catch up with old friends and meet some new ones. It was a pleasure to hang out with Azure MVP Martin Abbott from Perth and meet a few of his colleagues. It was also great to see Eldert Grootenboer and Steef-Jan Wiggers from the Netherlands, who happened to travel to Australia this month on holidays and to speak at some events. Steef-Jan also took time to include me in a V-Log series he’s been working on with various integration MVPs, recording his 3-minute interview with me at the top of Mount Coot-tha on a sunny Brisbane Saturday! And Mexia’s CEO Dean Robertson and I got to enjoy a nice dinner out with the Microsoft product group and the MVPs.

All good things must come to an end, but it was definitely a memorable week! Now it’s time to start getting ready for the Brisbane edition of the Global Integration Bootcamp on Saturday, 25th March, to be followed not long after by the Global Azure Bootcamp on Saturday 22nd April! I’ve got a few demos and presentations to prepare – but now with plenty of inspiration from Ignite!

by Dan Toomey | Feb 10, 2017 | BizTalk Community Blogs via Syndication

“When it rains, it pours.”

Well, I must say I’ve had a pretty remarkable run the past few weeks! I can’t remember any point in my professional life where I’ve enjoyed so much reward and recognition in such a short time span.

For starters, after six weeks and probably 50-60 hours of study, I managed to pass my MS 70-533 Implementing Azure Infrastructure Solutions exam. Since I’d already passed the MS 70-532 Developing Azure Solutions exam a couple of months earlier, this earned me the coveted Microsoft Certified Solutions Associate (MCSA) qualification in Cloud Platform. I now have just one more exam to pass to earn the Microsoft Certified Solutions Expert (MCSE) qualification.

Then just last week I was granted my first Microsoft Most Valuable Professional (MVP) Award in Azure! This is incredibly exciting to say the least, and not only a surprise to myself to have been identified with so many incredibly accomplished leaders in the industry, but also to many others because of the timing. Microsoft has revamped the MVP program so that now awards will be granted every month instead of every quarter, and everyone will have their review date on July 1st. I’m looking forward to using my newfound benefits to contribute more to the community.

And if that wasn’t enough, this week Mexia promoted me to the position of Principal Consultant! Aside from the CEO (Dean Robertson), I am the longest serving employee, and the journey over the last seven and a half years has been nothing short of amazing. Seeing the company grow from just three of us into the current roster of nearly forty has been remarkable enough, but I’ve also watched us become a Microsoft Gold Partner and win a plethora of awards (including ranking #10 in Australia’s Great Place to Work competition) along the way. Working with such an awesome team has afforded me unparalleled opportunities to grow as an IT professional, and I will strive to serve both our team and our clients to the best of my ability in this role.

So what else is on the horizon? Well for starters, next week I join the speaker list at Ignite Australia for the first time delivering a Level 300 Instructor-Led Lab in Azure Service Fabric. I’m also organising and presenting for the first ever Global Integration Bootcamp in Brisbane on 25th March, and doing the same for the fifth Global Azure Bootcamp on 22nd April. And somewhere in there I need to fit in that third Azure exam… It’s going to be a busy year!

by Dan Toomey | Dec 6, 2016 | BizTalk Community Blogs via Syndication

You may have noticed that I haven’t been too active on the social media / blogging front of late. It certainly isn’t because there isn’t much to write about…especially when you consider the release of BizTalk Server 2016 (including the Logic Apps Adapter), the General Availability of Azure Functions, and many other integration events leading up to these! And for those on the certification path, there’s news of the refresh of the Azure exams as well.

In fact, it is that very last item that accounts for a good deal of my scarcity in the blogging world of late. My employer is keen for as many of us as possible to earn the Microsoft Certified Solutions Expert (MCSE) accreditation in Azure. I’ve already passed the first of three required exams, MS 70-532 Developing Microsoft Azure Solutions, after several weeks of after-hours study (hours that might have been spent blogging). That accomplishment has earned me this nice little badge:

I’m now currently studying for the next exam, MS 70-533 Implementing Microsoft Azure Infrastructure Solutions. Passing this exam will earn me a Microsoft Certified Solutions Associate (MCSA) qualification in Cloud Platform. However, it won’t stop there as I’ll need to pass one more exam – MS 70-534 Architecting Microsoft Azure Solutions in order to attain the coveted MCSE in Cloud Platform and Infrastructure. All I can say is that I’ll be doing a lot of studying over the Christmas holidays…

Aside from studying for exams, I’ve also been heavily tasked at work as Mexia has had a profoundly successful sales year in 2016 – which translates into an overload of work! No wonder we’re heavily recruiting right now, looking for those “unicorns” that can help us remain the best integration consultancy in Australia. There has been a fair amount of travel lately, and as Mexia’s only Microsoft Certified Trainer (MCT) I will continue to deliver courses in BizTalk Server Development, BizTalk Server Administration, BizTalk360, and Azure Readiness. That means many more hours preparing all of that content.

But it hasn’t stopped me from speaking, at least not entirely. Aside from regular presentations at the Brisbane Azure User Group (including this one on Microsoft Flow), I’ve also been a guest presenter at Xamarin Dev Days in Brisbane where I talked about Connected and Disconnected Apps with Azure Mobile Apps.

Looking forward to writing posts more regularly again after this exam crunch is over. There are a lot of exciting things happening in the integration world right now!

by Dan Toomey | Aug 15, 2016 | BizTalk Community Blogs via Syndication

One of the many challenges with an integration project is typically the mapping of messages from one API to another. The difficulty most often lies not with the technical implementation (although some former projects mapping SAP iDocs to EDI X12 are still giving me nightmares), but rather with forming the specification of the mapping itself, including understanding the semantic meaning behind each element. This is difficult because it requires expert knowledge of both the source and target systems, as well as an analyst who can correctly “draw the connecting line” between the two. The correct end result is only achieved through significant collaboration amongst the relevant parties.

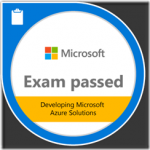

The BizTalk Mapper goes a long way towards facilitating this task with its graphical mapping interface. Aside from providing the developer a means of rapidly implementing a transformation, it also serves as a visual representation of the mapping that can be understood by a business analyst (if not too complex):

(image courtesy of MSDN)

There are two problems with this approach, however:

- It requires BizTalk Server, which is not only expensive, but also may be overkill for a solution that can easily be implemented in WCF, REST, or another platform;

- The mapping must be implemented by a developer before it can be shown to analysts and business users for discussion and validation. This usually entails a number of iterative cycles until the mapping is correct.

Enter api-map.com – a new free online tool created by my colleague Joseph Cooney specifically to address these particular challenges. api-map provides a medium to formulate, display and share mapping documentation which can eventually be handed over to a developer for implementation on any chosen platform.

As a first step, the tool allows you to upload schemas (either JSON or XML) with the ability to display, edit and annotate them:

These schemas can then be used to define mappings, even providing automatic hints along the way using very clever heuristics. You can specify direct mappings or indicate that a transformation is required – including a description of the necessary condition and/or logic that defines the transformation. You can also map multiple source elements to a single target, and specify constant values to be assigned where appropriate.

Once this is completed, you can then display the mapping in a clear visual diagram that is easily understood by any analyst. Even better, you can combine multiple diagrams into one composite “end-to-end” view – providing a traceability which you cannot achieve within BizTalk maps. This is incredibly useful in the situation where canonical business schemas are employed within an ESB (a common scenario for most of my projects). And by selecting any element involved in a mapping, you get an independent end-to-end view of all elements involved in a mapping:

Finally, when everything has been sorted, you can export the mapping to a handy Excel spreadsheet, serving as documentation within a source repository for developers to work from:

A few other nifty features include the ability to tag items to make them searchable, join teams in order to share project artefacts, and an option to attach images of a user interface to clarify the association of an element with a system control.
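To make the idea of composite end-to-end mappings concrete, the sketch below models a mapping specification as a list of rules (direct mappings, many-to-one mappings with a described transformation, and constants), chains two of them (source to canonical, canonical to target), and exports the result. This is a hypothetical data model, not api-map's internal format; the element names are made up, and CSV stands in for the Excel export.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class MappingRule:
    """One line of a mapping specification (hypothetical model)."""
    sources: Tuple[str, ...]          # source element paths; empty for a constant
    target: str                       # target element path
    transform: Optional[str] = None   # description of required logic, if any
    constant: Optional[str] = None    # constant value assigned to the target


def compose(first: List[MappingRule], second: List[MappingRule]) -> List[MappingRule]:
    """Chain source->canonical and canonical->target maps into an end-to-end view."""
    by_target: Dict[str, List[MappingRule]] = {}
    for rule in first:
        by_target.setdefault(rule.target, []).append(rule)

    composed = []
    for rule in second:
        sources: List[str] = []
        notes: List[str] = []
        constant = rule.constant
        for canonical in rule.sources:
            upstream = by_target.get(canonical)
            if not upstream:
                sources.append(canonical)  # unmapped: keep it visible as a gap
                continue
            for up in upstream:
                sources.extend(up.sources)
                if up.transform:
                    notes.append(up.transform)
                constant = constant or up.constant
        if rule.transform:
            notes.append(rule.transform)
        composed.append(MappingRule(tuple(sources), rule.target,
                                    "; ".join(notes) or None, constant))
    return composed


def to_csv(rules: List[MappingRule]) -> str:
    """Export rules as CSV (standing in for the spreadsheet export)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Source", "Target", "Transformation", "Constant"])
    for rule in rules:
        writer.writerow([" + ".join(rule.sources), rule.target,
                         rule.transform or "", rule.constant or ""])
    return buffer.getvalue()


order_to_canonical = [
    MappingRule(("Order/CustNo",), "Canonical/CustomerId"),
    MappingRule(("Order/First", "Order/Last"), "Canonical/Name", "join with a space"),
]
canonical_to_invoice = [
    MappingRule(("Canonical/CustomerId",), "Invoice/Customer"),
    MappingRule(("Canonical/Name",), "Invoice/Contact", "upper-case"),
    MappingRule((), "Invoice/Currency", constant="AUD"),
]
print(to_csv(compose(order_to_canonical, canonical_to_invoice)))
```

Composing through the canonical schema is what gives the end-to-end traceability: each target element ends up listed against the original source elements and every transformation applied along the way.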

Watch Joseph’s video to see a live demonstration of the tool. It is still in beta and Joseph is continually adding new features, but already I believe this will be a handy utility on many of my upcoming projects!

by Dan Toomey | Jun 17, 2016 | BizTalk Community Blogs via Syndication

Last week I had the privilege of presenting a short session on Microsoft Flow to the Brisbane Azure User Group. The group meets every month, and at this particular event we decided to have an “Unconvention Night” where instead of one or two main presentations, we had several (four in this case) shorter sessions to introduce various topics. This has been a popular format with the group and one that we will keep repeating from time to time.

Wrapping up the evening was my session, called Easy Desktop Integration with Microsoft Flow. Flow is a new integration tool built into Office365; it allows business users (yes, I really mean “business users” – no code required) to build automated workflows using 35+ connectors to popular SaaS systems like DropBox, Slack, SharePoint, Twitter, Yammer, MailChimp, etc. The full list of connectors can be found here.

Even better is that Flow comes with over 100 pre-built templates out of the box, so you don’t even need to construct your own workflows unless you want to do something very customised! All you need to do is select a template, configure the connectors, publish the workflow – and off it goes! In fact, it is so simple that I built my first Flow during Charles Lamanna’s presentation at the Integrate 2016 conference in London; I decided to capture all tweets with the #Integration2016 hashtag to a CSV file in DropBox.

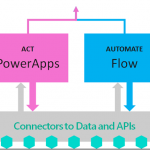

Flow is built upon Azure Logic Apps, and it uses the same connectors as PowerApps – so you can leverage both of these great utilities to create simple but powerful applications:

Because it is built on Logic Apps, this means you can easily migrate a Flow workflow to an Azure Logic App when it becomes mission critical, requires scalability, or begins to use more sensitive data that requires greater security and auditing.

Feel free to view the recording of my session at https://youtu.be/sd1AhZpPsBw:


Microsoft Flow presentation to the Brisbane Azure User Group

You can also download the slides (which came mostly from Charles Lamanna’s deck, used with permission of course). But most importantly, get started using Flow! I’m sure you’ll find plenty of uses for it.

by Dan Toomey | May 20, 2016 | BizTalk Community Blogs via Syndication

Last week I had the privilege of attending the world’s largest integration event this year, Integrate 2016 in London. A big thanks to my employer Mexia for sending me. As is typical for events organised by BizTalk360, it was on an especially grand scale (27 sessions with 25+ speakers) and did not disappoint in the content presented by members of the Microsoft product team and the MVP community.

Day 1 of the three-day event featured a number of announcements from Microsoft that clarified their vision and direction for integration, even more so than the Integration Roadmap delivered at the end of last year. Microsoft’s commitment to BizTalk Server as the on-premises integration platform and Logic Apps as the cloud platform provided some much-needed reassurance and comfort to the community. “BizTalk and Logic Apps better together” is the mantra, underpinned by the addition of a Logic Apps adapter in the upcoming BizTalk 2016 CTP2 release and the new BizTalk Connector soon to be introduced in Logic Apps.

Although Microsoft did not state it explicitly, it also became rather apparent what is “on the outs” in the integration space:

- Microsoft Azure BizTalk Services (MABS) is likely to be deprecated as both the VETER pipelines and the EDI/B2B functionality move into Logic Apps by way of the Enterprise Integration Pack;

- Azure Stack is no longer being touted as the on-premises integration platform; rather BizTalk Server will continue to be king of that domain.

I’ve already posted an article on Mexia’s blog giving my rundown on all the sessions presented by Microsoft and the significant announcements. Soon after I followed up with a summary of the many MVP sessions that rounded out the conference. In addition, there are plenty of other blog posts from the community giving their thoughts and recaps of the event; here are just a few:

Besides Microsoft’s clear roadmap message and the excellent presentations, perhaps the best thing about this conference was the opportunity to catch up with colleagues and friends from around the world – and meet new ones as well!

(photo by Thomas Canter)

(photo courtesy of BizTalk360)

(photo by Tara Motevalli)

(photo by Steef-Jan Wiggers)

Kudos again to Saravana Kumar, BizTalk360, Microsoft and all the sponsors for making this such an outstanding event! Looking forward to Integrate 2017!

by Dan Toomey | Mar 31, 2016 | BizTalk Community Blogs via Syndication

Many people have written about Azure Service Bus Relays in the past, and a summary can be found here. Dan Rosanova recently tweeted: “….We’re trying to discourage ACS for security. SAS is our preferred model.” The ACS security pattern is described here and the SAS pattern is described here. This article attempts to summarise BizTalk adapter support for using SAS tokens.

Most BizTalk Server examples use ACS tokens rather than SAS tokens, probably because the BizTalk adapters only allowed configuration with ACS tokens when Service Bus relay support first shipped with BizTalk 2013. BizTalk 2013 R2 has limited support for configuring SAS tokens, and most adapters only allow ACS tokens out of the box (OOTB). If you want to use a SAS token, you have to be very inventive. I hope that BizTalk vNext will add SAS token support for all WCF adapters.
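For readers unfamiliar with what a SAS token actually contains, here is a minimal sketch that builds one following the publicly documented Service Bus format. The URL-encoded resource URI and an expiry time (Unix epoch seconds) are joined by a newline, signed with HMAC-SHA256 using the shared access key, and assembled into a `SharedAccessSignature sr=<uri>&sig=<signature>&se=<expiry>&skn=<key name>` string. It is illustrative only. The function name `make_sas_token` is hypothetical, as are the namespace URI, key name and key in the usage example. Python is used purely for readability (BizTalk itself is .NET), and the sketch does not show how to wire the token into any BizTalk adapter.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def make_sas_token(resource_uri: str, key_name: str, key: str,
                   ttl_seconds: int = 3600,
                   now: Optional[float] = None) -> str:
    """Build a Service Bus style SAS token (hypothetical helper, a sketch only)."""
    issued_at = time.time() if now is None else now
    # Expiry is expressed as whole seconds since the Unix epoch.
    expiry = str(int(issued_at + ttl_seconds))
    # The resource URI is URL-encoded both in the string to sign and in "sr".
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = (encoded_uri + "\n" + expiry).encode("utf-8")
    # HMAC-SHA256 keyed with the UTF-8 bytes of the shared access key.
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    # Base64-encode the signature, then URL-encode it ('+', '/' and '=' included).
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={key_name}")


if __name__ == "__main__":
    # Hypothetical namespace, relay path, key name and key: substitute your own.
    token = make_sas_token(
        "https://contoso.servicebus.windows.net/myrelay",
        "RootManageSharedAccessKey",
        "replace-with-your-key",
    )
    print(token)
```

Because the token embeds its own expiry, a long-running host has to regenerate it before the `se` time passes; that refresh logic is left out of this sketch.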

After a warm welcome by Community Program Manager
