by Gautam | Apr 29, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to reach out and let me know your feedback on this Integration weekly series.

by Eldert Grootenboer | Apr 25, 2018 | BizTalk Community Blogs via Syndication

Last week, the new Gartner Magic Quadrant for Enterprise Integration Platform as a Service (EiPaaS) was published, placing Microsoft in the coveted leader space. Having worked with Azure’s iPaaS products for a long time now, I wholeheartedly agree with this decision and congratulate all the teams within Microsoft who have worked so hard to get to where we are today. The complete report, with all requirements and results, can be found here.

Source: Gartner (April 2018)

Looking at the definition of an integration platform as a service, we can see how important this space is in the modern world, where data and system integration is more important than ever.

An integration platform as a service (iPaaS) solution provides capabilities to enable subscribers (aka “tenants”) to implement data, application, API and process integration projects involving any combination of cloud-resident and on-premises endpoints. This is achieved by developing, deploying, executing, managing and monitoring integration processes/flows that connect multiple endpoints so that they can work together.

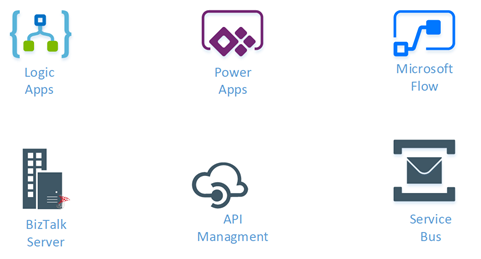

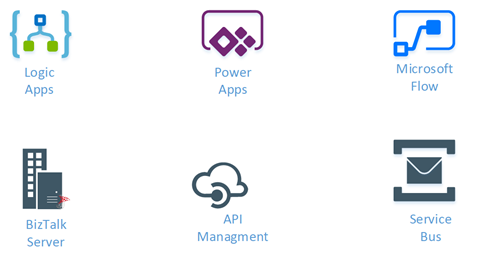

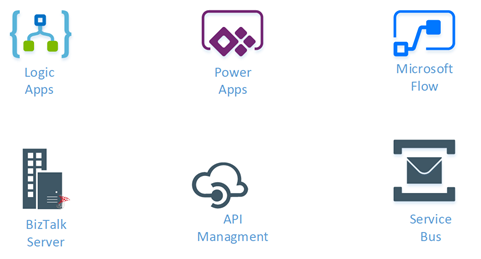

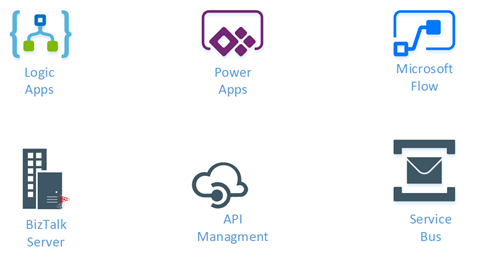

Microsoft provides various services, like Logic Apps, Service Bus, API Management and more, which we can use to build a true iPaaS platform. Each has its own strengths, and they can be combined to fit any scenario. In 2018, these services will be branded together under the new name Azure Integration Services.

From 2018, Azure Integration Services will be the collective name for a number of integration-related components, including Logic Apps, API Management, Service Bus and Event Grid. Data Factory rounds off the EiPaaS offerings for extraction, transformation and loading (ETL)-type workloads. Microsoft Flow, built on top of Logic Apps, enables citizen integrators.

In my opinion, this is a good move to help customers understand how important it is to have these components working together. Before Azure, integration solutions were often built with only one or two products, like BizTalk or WCF, but nowadays it’s much more important to break down our problem and work out how to solve it using all the services we have access to.

Making it to the leader space wouldn’t have been possible without the great efforts from the Program Managers and their teams, like Dan Rosanova, Jon Fancey, Kevin Lam, Kent Weare, Vlad Vinogradsky, Matt Farmer and all others. These are the true driving forces behind these services, who keep adding new features, bring out new services and keep making the offering ever more awesome.

The other driving force behind the success of Azure, and especially its iPaaS offering, is, I think, the community. By sharing knowledge, giving feedback to the product teams and engaging with new and existing customers, we can and do make a difference. Events like Integrate, the Global Integration Bootcamp, Integration Monday, Middleware Friday and the many user groups and meetups really help in spreading the message about these great services.

Microsoft has been moving forward steadily, bringing new services like Event Grid and expanding and improving existing ones like Logic Apps. Looking at where we were two years ago and one year ago, we can see how fast progress is being made, giving us some amazing tools in our daily work. Our customers agree, as pretty much every new project I do these days is built on Azure iPaaS, showing how much trust they have in Azure and its services. Bringing the different services together under the Azure Integration Services name will also help new customers find their way around more easily. With that, my prediction is that next year Microsoft will have climbed even further in the leader space on the quadrant.

by Lex Hegt | Apr 24, 2018 | BizTalk Community Blogs via Syndication

This blog is a part of the series of blog articles we are publishing on the topic “Why we built XYZ feature in BizTalk360”.

Why do we need this feature?

Being able to track messages and processes in middleware software like BizTalk Server is useful for a few reasons, among them:

- making sure all processes run as expected

- analysis of issues

- debugging during the development/test phase

However, especially for live environments, the best practice is to turn on only limited tracking, to prevent a performance penalty. Therefore, there needs to be a balance between the amount of tracking that is turned on and the BizTalk administrator’s ability to investigate issues.

In general, when message context/content tracking is turned off and only the default tracking is switched on, you should be fine.

To prevent performance penalties, it helps to have a good overview of all the tracking settings in the BizTalk environment.

What are the current challenges?

All BizTalk Server related tracking settings can be maintained in the BizTalk Server Administration console. However, the tracking settings are found in different parts of the console. For example:

- the Group Level Tracking property is found in the BizTalk Group Settings Dashboard

- the BizTalk artifact settings are found at application/artifact level

- each artifact type (receive ports, send ports, orchestrations, pipelines, etc.) comes with its own UI to manage tracking

As a result, the standard BizTalk Server Administration console offers neither a good overview nor easy maintenance of the tracking settings.

How BizTalk360 solves this problem?

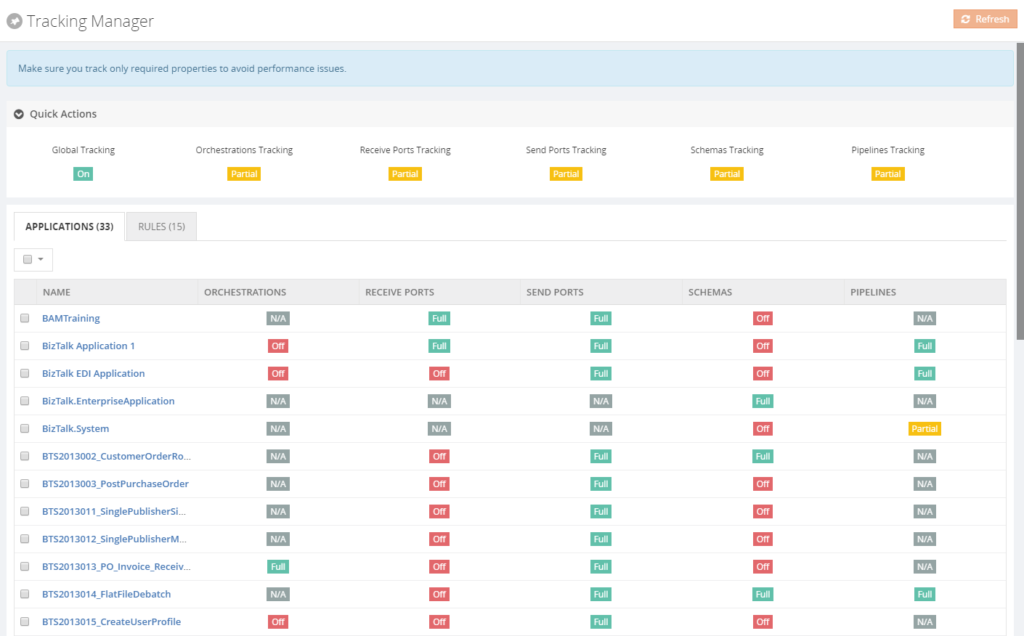

At BizTalk360, we decided to design one screen for all tracking settings, giving a good overview of them while also serving as a central hub to configure them.

We came up with the Tracking Manager screen, which can be found in BizTalk360 under Operations / Infrastructure Settings.

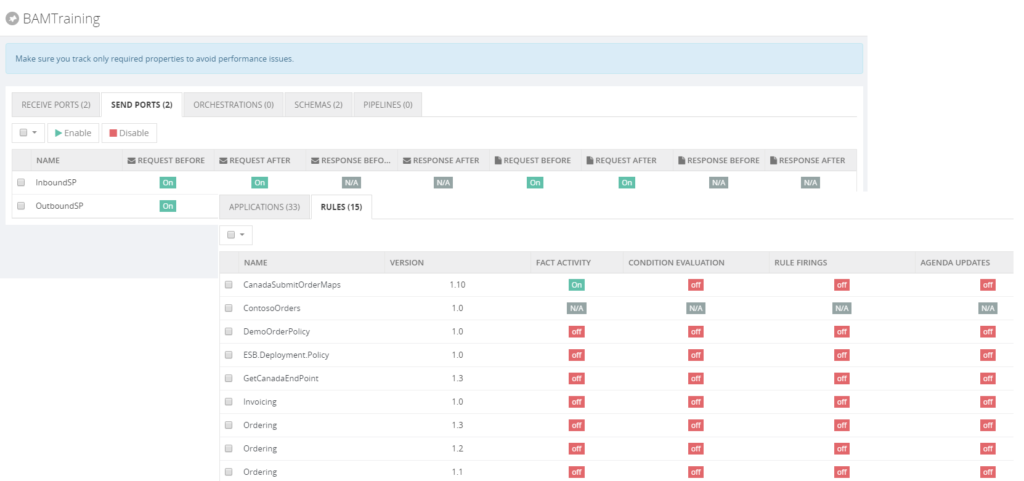

As you can see from the screen, it contains an overview of all tracking settings. On a high level, the screen is divided into two parts:

- Quick actions

- Applications and Rules

The Quick actions allow you to easily turn on/off tracking on a global level for the BizTalk artifact types, but also to turn on/off group level tracking.

The second part of the screen allows you to view/configure tracking down to the application level. If a more fine-grained configuration is needed, you can simply click on the application and set the desired tracking for one or multiple artifacts at once. The overall result of the settings is reflected in the screen above.
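To make the idea of a single overview more concrete, here is a minimal Python sketch that rolls per-artifact tracking flags up to application level and lists the artifacts that track message bodies, which the text above suggests keeping to a minimum in live environments. The `TrackingSetting` record, its fields and both helper functions are hypothetical illustrations; they do not reflect how BizTalk360 or BizTalk Server store or read tracking settings.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingSetting:
    """Hypothetical record of one artifact's tracking configuration."""
    application: str
    artifact_type: str  # e.g. "ReceivePort", "SendPort", "Orchestration"
    name: str
    body_tracking: bool
    property_tracking: bool


def summarize_by_application(settings):
    """Count, per application, the artifacts and how many track bodies/properties."""
    summary = defaultdict(lambda: {"artifacts": 0, "body": 0, "properties": 0})
    for s in settings:
        row = summary[s.application]
        row["artifacts"] += 1
        row["body"] += int(s.body_tracking)
        row["properties"] += int(s.property_tracking)
    return dict(summary)


def with_body_tracking(settings):
    """List (application, artifact) pairs that track message bodies, sorted."""
    return sorted((s.application, s.name) for s in settings if s.body_tracking)


# Hypothetical sample data
settings = [
    TrackingSetting("Orders", "SendPort", "SP_Orders", True, False),
    TrackingSetting("Orders", "Orchestration", "ProcessOrder", False, True),
    TrackingSetting("Invoices", "ReceivePort", "RP_Invoices", False, False),
]
print(summarize_by_application(settings))
print(with_body_tracking(settings))
```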

Perhaps less well known, Business Rules can also be tracked. BizTalk360 enables you to easily configure these settings from the Rules tab page.

All in all, we can conclude that the Tracking Manager in BizTalk360 gives BizTalk administrators easy access to, and maintenance of, all the tracking settings.

Get started with a Free Trial today!

Download and try BizTalk360 on your own environments free for 30 days. The installation will not take more than 5-10 minutes.

Author: Lex Hegt

Lex Hegt has worked in the IT sector for more than 25 years, mainly in roles as developer and administrator. He has worked with BizTalk since BizTalk Server 2004. Currently he is a Technical Lead at BizTalk360.

by Gautam | Apr 22, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to reach out and let me know your feedback on this Integration weekly series.

by Lex Hegt | Apr 18, 2018 | BizTalk Community Blogs via Syndication

This blog is a part of the series of blog articles we are publishing on the topic “Why we built XYZ feature in BizTalk360“.

Why do we need this feature?

Microsoft BizTalk Server is a powerful middleware product that allows integrating all kinds of information systems, whether these systems are on-premises or in the cloud. BizTalk Server uses Receive and Send ports to communicate with a broad set of on-premises/cloud systems, both at the technology level (FILE, FTP, POP3, SMTP, etc.) and at the business level (ERP, CRM, etc.).

Besides just receiving and transmitting messages, BizTalk solutions might also contain Orchestrations, which can process all kinds of business logic, for example order processing, credit card validation, claims processing, etc.

As BizTalk Server is very scalable, a BizTalk environment can contain many deployments for all kinds of integrations. Altogether, a BizTalk environment may contain hundreds of ports and orchestrations. The well-being of these artifacts is vital for the integrations to work properly. When they are not running properly, the resulting interruptions may cause serious damage to the business processes they support.

What are the current challenges?

To be well aware of the state of the BizTalk artifacts, the BizTalk administrator needs to consult the BizTalk Administration console multiple times per day. However, there are a few challenges when depending on the BizTalk Administration console to check the state of these artifacts.

Access to the Administration console – Often, the BizTalk Administration console is only installed on the BizTalk servers. Besides the risk of unintentionally damaging a BizTalk server while working on it, you need to be authorized to access the servers. Not to be forgotten is the time it takes, again and again, to set up Remote Desktop connections to the BizTalk server and open the Administration console; time that could easily be spent on something more tangible.

Not suited for less experienced administrators – The Administration console is a powerful tool, which is great when you are an experienced BizTalk Administrator. However, in the hands of less experienced administrators, a lot of damage can be done unintentionally. Think, for example, of stopping ports or terminating processes!

Not an efficient way of monitoring – Checking the state of the ports and orchestrations manually, multiple times per day, is just not efficient. It can easily be forgotten in the rush of the day, thereby putting the wellbeing of your integrations at stake.

Too many artifacts to monitor efficiently – BizTalk environments can contain hundreds of ports and orchestrations. In most scenarios, the artifacts should be in the Enabled/Started state. But there can be several reasons why certain artifacts are not in the expected state. Just to mention a few:

- After an update of a BizTalk application is deployed, the artifacts may be in the wrong state

- Due to a temporary maintenance window, some of the artifacts should not be in the Enabled/Started state, to prevent suspended instances

- Artifacts might be Disabled as a result of a temporary outage

- Certain artifacts should only be Enabled/Started as a fallback scenario

These examples make it clear that constantly keeping track of the desired state of all the artifacts can be very challenging, which makes it hard to monitor their state manually in an efficient way.

Instead of monitoring BizTalk manually, it is much more efficient to have automated, state-bound artifact monitoring. Automated BizTalk monitoring saves you from constantly having to check the state manually, so you can spend your time on more important activities. In the next section, we’ll show which BizTalk360 features allow you to monitor your BizTalk artifacts automatically.

How BizTalk360 solves this problem?

To give you that comforting feeling of being in control of the state of your ports and orchestrations, BizTalk360 provides multiple features.

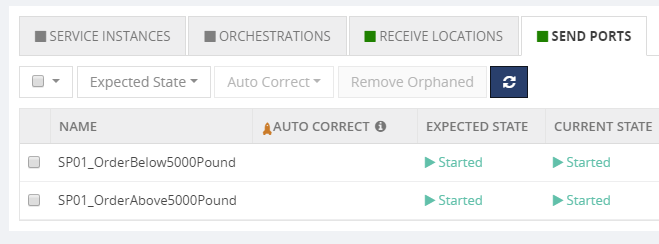

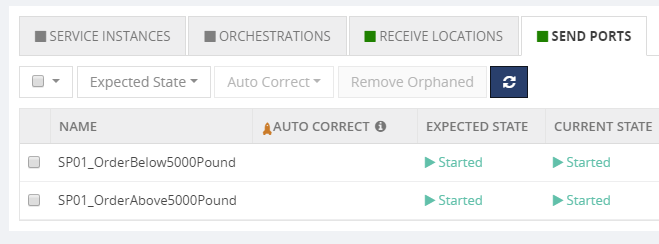

Threshold Monitoring – Using the concept of Threshold Monitoring, BizTalk360 allows you to set up state-bound monitoring. This means that you can easily have the product check the Expected State of your ports and orchestrations.

In the screenshot above, you can see how some Send Ports are being monitored against their Expected State. Once the Current State deviates from the Expected State, a threshold violation has occurred and BizTalk360 will notify you.

Positive and Negative monitoring – In most scenarios, the Expected State of your Ports and Orchestrations will be Enabled/Started; this is called Positive Monitoring. However, there might be valid reasons why certain ports/orchestrations should NOT be Enabled/Started. Setting up that kind of monitoring is called Negative Monitoring. BizTalk360 supports both Positive and Negative Monitoring.
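State-bound monitoring boils down to comparing each artifact's Current State with its Expected State. The minimal Python sketch below assumes a simplified state model (`State`, `MonitoredArtifact` and the helper names are hypothetical, not part of BizTalk360) and shows that Positive and Negative Monitoring are the same comparison with a different expected value.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    # Simplified artifact states; real BizTalk artifacts have more (e.g. Unenlisted).
    ENABLED = "Enabled"
    DISABLED = "Disabled"
    STARTED = "Started"
    STOPPED = "Stopped"


@dataclass
class MonitoredArtifact:
    name: str
    expected: State
    current: State


def monitoring_kind(artifact):
    """Positive monitoring expects the artifact to run; negative expects it not to."""
    running = {State.ENABLED, State.STARTED}
    return "positive" if artifact.expected in running else "negative"


def find_violations(artifacts):
    """Return the artifacts whose Current State deviates from their Expected State."""
    return [a for a in artifacts if a.current != a.expected]


# Hypothetical artifacts
artifacts = [
    MonitoredArtifact("SP_Orders", State.STARTED, State.STARTED),
    MonitoredArtifact("SP_Fallback", State.STOPPED, State.STARTED),  # negative monitoring
    MonitoredArtifact("RL_FTP", State.ENABLED, State.DISABLED),      # positive monitoring
]
for a in find_violations(artifacts):
    print(f"{a.name} ({monitoring_kind(a)}): expected {a.expected.value}, got {a.current.value}")
```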

Receiving Notifications via multiple channels – Once a threshold violation has occurred, by default you will receive notifications by email. In addition, BizTalk360 allows you to receive notifications via multiple other channels.

We are constantly considering new ways to send notifications, but currently, the following Notification Channels are provided out-of-the-box: Email, SMS, Event Log, Slack, Microsoft Teams, ServiceNow, HP Operations Manager, Webhook. In addition, you can build your own custom notification channel, for example one that writes to a database or calls an internal system.
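A custom channel essentially only needs to know how to deliver one notification. The following Python sketch illustrates that pattern with a hypothetical `NotificationChannel` interface and a dispatcher that keeps notifying the remaining channels when one of them fails; the class and function names are assumptions for illustration and do not reflect the actual BizTalk360 extension API.

```python
from abc import ABC, abstractmethod


class NotificationChannel(ABC):
    """Hypothetical interface: a channel only knows how to deliver one notification."""

    @abstractmethod
    def send(self, alarm, message):
        ...


class ListChannel(NotificationChannel):
    """Stand-in for a custom channel, e.g. one that writes rows to a database."""

    def __init__(self):
        self.sent = []

    def send(self, alarm, message):
        self.sent.append((alarm, message))


def notify_all(channels, alarm, message):
    """Deliver to every channel; a failing channel does not stop the others.

    Returns a list of (channel class name, error text) for the failures.
    """
    failures = []
    for channel in channels:
        try:
            channel.send(alarm, message)
        except Exception as exc:  # report and continue with the next channel
            failures.append((type(channel).__name__, str(exc)))
    return failures
```

Collecting failures instead of stopping at the first one means that, say, an unreachable mail server does not also silence the Teams or database channel.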

Auto Correct – BizTalk360 does not just monitor your artifacts; it can also try to bring them back to their Expected State once there is a mismatch between the Current State and the Expected State. Think, for example, of an FTP Receive Location that goes down at night or during the weekend.

The Auto-Correct feature works for the following artifacts:

- Receive Locations

- Orchestrations

- Send Ports

- BizTalk Host Instances

- Windows NT Services

- SQL Server Agent Jobs

- Azure Logic Apps
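Conceptually, auto-correct is a retry loop on top of the state comparison. Below is a minimal Python sketch, assuming a hypothetical `set_state` callback that performs the action (for example enabling a Receive Location) and returns the resulting state; the attempt limit and the result labels are also assumptions, not documented BizTalk360 settings.

```python
def auto_correct(artifacts, set_state, max_attempts=3):
    """Try to bring each deviating artifact back to its Expected State.

    artifacts: dict of name -> (expected_state, current_state)
    set_state: hypothetical callback set_state(name, state) that performs the
               action and returns the state observed afterwards
    Returns a dict of name -> "ok" | "corrected" | "failed".
    """
    result = {}
    for name, (expected, current) in artifacts.items():
        if current == expected:
            result[name] = "ok"
            continue
        for _ in range(max_attempts):
            if set_state(name, expected) == expected:
                result[name] = "corrected"
                break
        else:  # every attempt left the artifact in the wrong state
            result[name] = "failed"
    return result
```

Capping the attempts matters: an endpoint that stays down should end up as a notification for a human rather than an endless restart loop.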

There is an extensive article on BizTalk360 Auto Correct, which also explains the kinds of scenarios in which this feature comes in handy. You can find that article here:

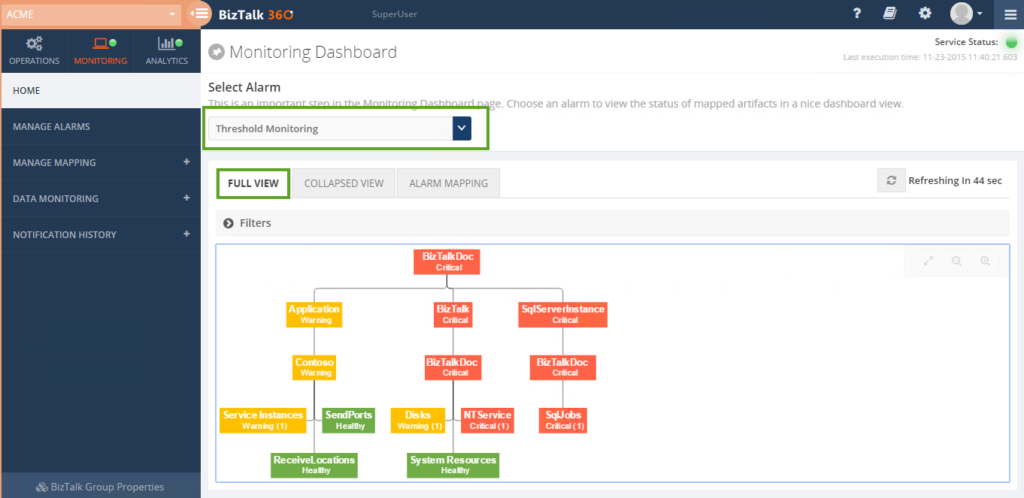

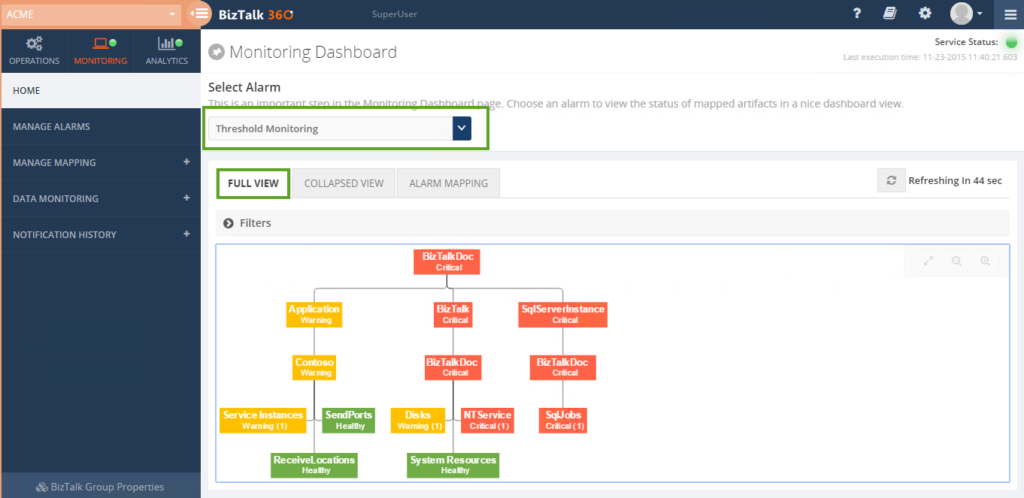

Monitoring Dashboard – Although receiving notifications of any mismatch between the Current State and the Expected State is very handy, it is equally handy to have a large screen, for example at the Support Desk, that simply shows the current health of your artifacts. For this, we offer the Monitoring Dashboard, which does exactly that.

As you can see from the screenshot below, it provides an expandable/collapsible and filterable tree view with automatic refresh, constantly showing the current state of the artifacts.

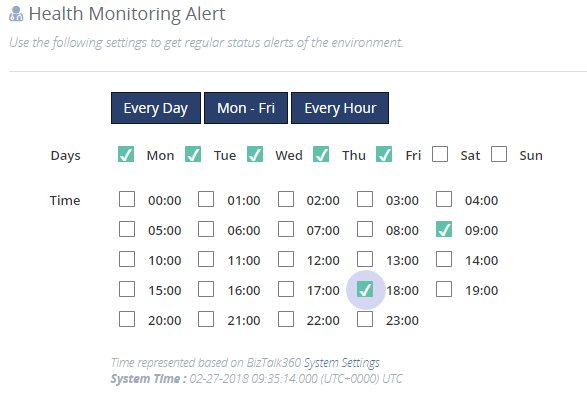

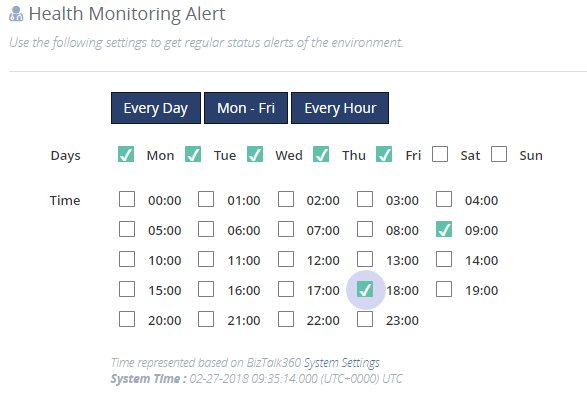

Health Check Reports – Last but not least, there are the Health Check Reports, which you can receive at a time that suits you. These reports function as your daily health check, providing an overview of the state of all the artifacts in your alarm. Very handy to receive, for example, at the beginning of your working day!

Conclusion

In this article, we have seen why being aware of the state of your ports and orchestrations is important. We have also seen why manually monitoring that state is very inefficient and sometimes very complex. As a company that knows these challenges from our own experience, we have brought multiple features that help BizTalk administrators stay in control without having to check the state manually.

Get started with a Free Trial today!

Download and try BizTalk360 on your own environments free for 30 days. The installation will not take more than 5-10 minutes.

Author: Lex Hegt

Lex Hegt has worked in the IT sector for more than 25 years, mainly in roles as developer and administrator. He has worked with BizTalk since BizTalk Server 2004. Currently he is a Technical Lead at BizTalk360.

by Sandro Pereira | Apr 17, 2018 | BizTalk Community Blogs via Syndication

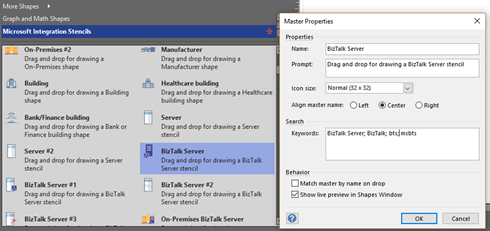

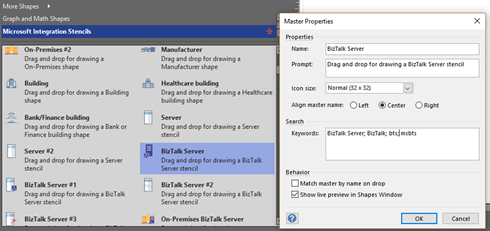

Microsoft Integration, Azure, BAPI, Office 365 and much more Stencils Pack is a Visio package that contains fully resizable Visio shapes (symbols/icons) that will help you visually represent On-premises, Cloud or Hybrid Integration and Enterprise architecture scenarios (BizTalk Server, API Management, Logic Apps, Service Bus, Event Hub…), solution diagrams and features or systems that use Microsoft Azure and related cloud and on-premises technologies in Visio 2016/2013:

- BizTalk Server

- Microsoft Azure

  - Azure App Service (API Apps, Web Apps, Mobile Apps and Logic Apps)

  - Event Hubs, Event Grid, Service Bus, …

  - API Management, IoT, and Docker

  - Machine Learning, Stream Analytics, Data Factory, Data Pipelines

  - and so on

- Microsoft Flow

- PowerApps

- Power BI

- PowerShell

- Infrastructure, IaaS

- Office 365

- And many more…

What’s new in this version?

With the growing number of stencils in this package, it was becoming hard to find the right shape/representation. Based on feedback and tips I received from the community, I focused most of the work in this new version on adding search capabilities to the package, but that wasn’t the only change:

- Search Capabilities: Defining the correct metadata and keywords for all the shapes for better search functionality.

- New shapes: as in all previous versions, new shapes were added, in particular Generic, Microsoft Flow and PowerApps shapes

You can download Microsoft Integration, Azure, BAPI, Office 365 and much more Stencils Pack for Visio from:

Microsoft Integration, Azure, BAPI, Office 365 and much more Stencils Pack for Visio (18,6 MB)

GitHub

Or from:

Microsoft Integration and Azure Stencils Pack for Visio 2016/2013 v3.1.0 (18,6 MB)

Microsoft | TechNet Gallery

The post New version of Microsoft Integration, Azure, BAPI, Office 365 and much more Stencils Pack for Visio is now available on GitHub appeared first on SANDRO PEREIRA BIZTALK BLOG.

by Gautam | Apr 15, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Microsoft Announcements and Updates

Community Blog Posts

Videos

Podcasts

Feedback

I hope this is helpful. Please feel free to reach out and let me know your feedback on this Integration weekly series.

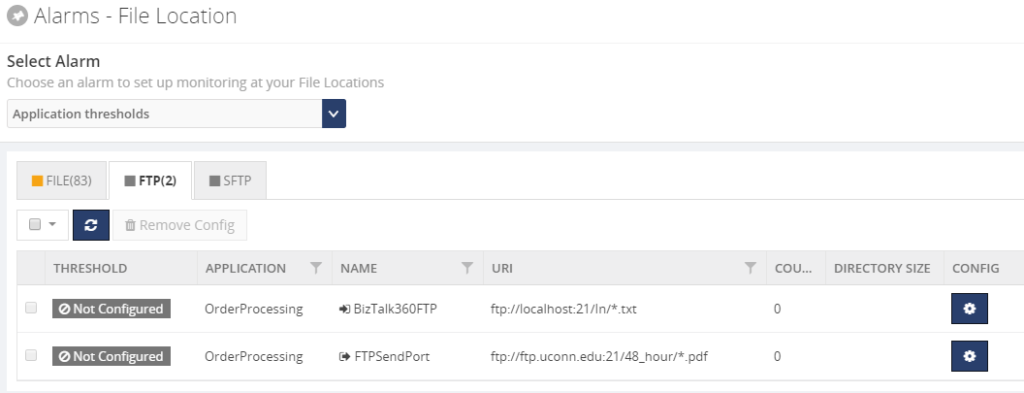

by Lex Hegt | Apr 11, 2018 | BizTalk Community Blogs via Syndication

This blog is a part of the series of blog articles we are publishing on the topic “Why we built XYZ feature in BizTalk360”.

Why do we need this feature?

In the day-to-day activities of a BizTalk administrator, you might come across integrations where FTP sites are used for receiving and transmitting messages. FTP sites are often used for cross-platform integrations. For example, when an SAP system on Unix has to be integrated, via BizTalk Server, with other systems, you might use FTP for receiving and transmitting messages.

SFTP and FTPS are secure alternatives to plain FTP: FTPS adds TLS encryption to FTP, while SFTP transfers files over an SSH connection. Either way, data is encrypted in transit, so your data transmission is secure.

To keep the business process going, it can be of vital importance that the FTP/SFTP sites are online and that messages are being picked up. To be constantly aware of whether the FTP/SFTP sites are online and working properly, a BizTalk administrator needs to monitor the sites and the activities that take place on them.

What are the current challenges?

BizTalk Server offers no monitoring capabilities, neither for Receive Locations/Send Ports nor for endpoints like FTP, SFTP and FTPS sites. So, using just the out-of-the-box features of BizTalk Server, a BizTalk administrator has to check manually whether the FTP sites are online and whether all (appropriate) files are being picked up for further processing.

Manual monitoring

This kind of manual monitoring can be quite cumbersome and time-consuming. The administrator will probably use multiple pieces of software to perform these tasks: for example, the BizTalk Administration console to check whether the Receive Locations/Send Ports are up, and an FTP client to check whether files are being picked up.

Clearly, this is not a very efficient way of working, and it could easily be automated by setting up monitoring.

Maintaining scripts for monitoring FTP sites

In our experience, BizTalk administrators create their own scripts to monitor FTP sites and all kinds of other resources, to reduce their workload. Although such scripts can certainly help, we think they do not fully solve the problem.

For example, such scripts often need maintenance when FTP sites are added to, changed in or removed from monitoring, and this kind of task is easily forgotten.

Also, from a knowledge transfer perspective, it’s easy to forget to tell new colleagues that such scripts exist, as they will probably be installed on some (monitoring) server.

Another challenge with solving these problems with scripts is that not every administrator is able to write such scripts, which makes knowledge transfer even harder.

To keep an overview, we think it is easier to use software like BizTalk360, which puts everything in one easily accessible place, with good visibility of all the features/capabilities, fine-grained security/auditing and no need to maintain custom scripts.

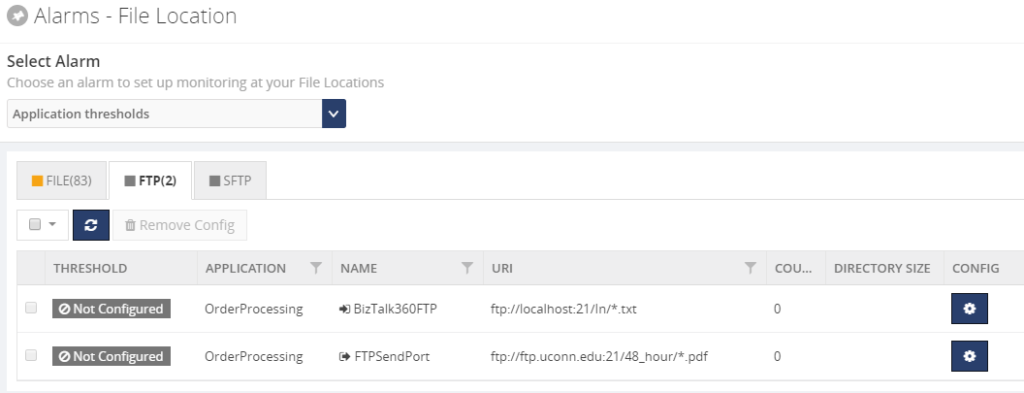

How BizTalk360 solves this problem?

With BizTalk360, we make monitoring FTP/SFTP/FTPS sites a lot easier. For a very long time, the product has offered monitoring of Receive Locations and Send Ports, and for some time now, BizTalk360 has also offered monitoring of the physical FTP/SFTP/FTPS endpoints.

We wanted to make setting up this kind of endpoint monitoring as seamless as possible and therefore we simply show all the ports in the current BizTalk group which make use of the FTP/SFTP/FTPS adapter.

In BizTalk360, you can find FTP monitoring under Monitoring => Manage Mapping => File Locations (File, FTP, SFTP).

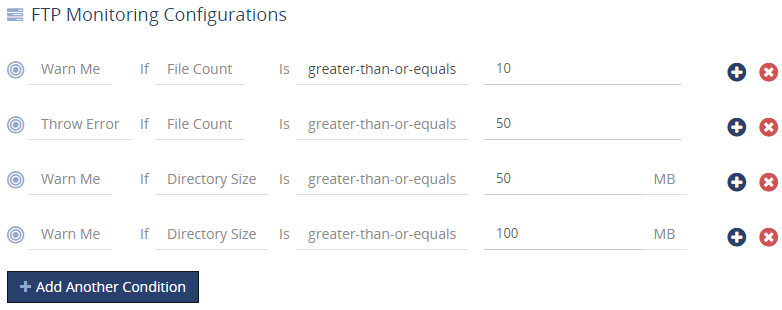

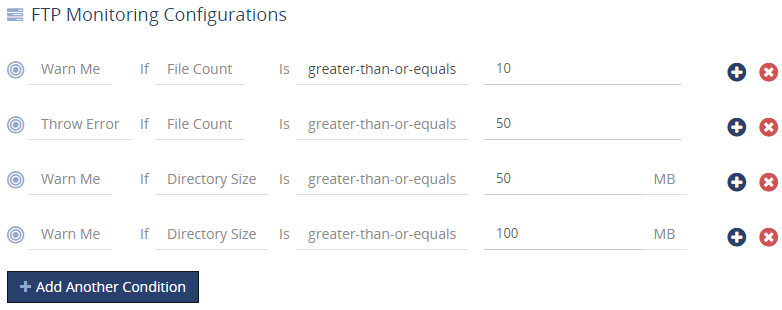

Next, you can set up monitoring rules based on File Count and Directory Size and have BizTalk360 send Warning or Error notifications through the notification channels which are configured on the associated alarm.

A fully monitored FTP endpoint might look like the one shown in the screenshots.

Of course, besides the greater-than-or-equal-to operator, other common operators are also available.
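The rule evaluation itself is simple. This Python sketch assumes the File Count and Directory Size values have already been collected from the endpoint (for example from a directory listing) and that each rule is a hypothetical `(metric, operator, threshold, level)` tuple; the most severe triggered level wins, so an Error outranks a Warning. The metric names and thresholds are illustrative only.

```python
import operator

# Comparison operators a rule may use (greater-than-or-equal-to and friends).
OPERATORS = {
    ">=": operator.ge,
    ">": operator.gt,
    "<=": operator.le,
    "<": operator.lt,
    "==": operator.eq,
    "!=": operator.ne,
}


def evaluate(metrics, rules):
    """Return "Error", "Warning" or "Healthy" for one endpoint.

    metrics: e.g. {"file_count": 12, "directory_size_kb": 340}
    rules:   list of (metric, operator, threshold, level) tuples
    """
    triggered = {
        level
        for metric, op, threshold, level in rules
        if OPERATORS[op](metrics[metric], threshold)
    }
    if "Error" in triggered:
        return "Error"
    if "Warning" in triggered:
        return "Warning"
    return "Healthy"


# Hypothetical rules for one FTP receive folder
rules = [
    ("file_count", ">=", 10, "Warning"),        # files are piling up
    ("file_count", ">=", 50, "Error"),          # nothing seems to be picked up
    ("directory_size_kb", ">", 1024, "Error"),
]
```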

Conclusion

As a final point, we see from time to time that administration teams maintain an administrators’ handbook, which contains all the tasks a (BizTalk) administrator should take care of. We think that by using software like BizTalk360, the number of pages in such handbooks can be reduced, as the scripts we mentioned no longer have to be described in them.

This description could be replaced by, for example, a general guideline on how FTP sites should be monitored with BizTalk360 and which monitoring rules to use.

As a result, we hope to make the work of BizTalk administrators a bit easier, so that the team can focus on the more exciting parts of the job instead of constantly having to update their handbooks.

So, we think that with this feature we make the day-to-day life of a BizTalk administrator who needs to monitor the well-being of FTP sites a little bit easier.

If you want to read about FTP/SFTP monitoring in much more detail, you can check the following article:

FTP, FTPS, SFTP Location monitoring

BizTalk360 also offers an advanced monitoring capability called “Data Monitoring”, which allows monitoring the traffic/volume of messages going through the ports for a given period, e.g. an expected 50 purchase orders (POs) from our partner via FTP/SFTP. Please check out this article: Introducing BizTalk Server Data Monitoring in BizTalk360
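At its core, this kind of check compares an observed message count with an expected volume for a time window. Here is a minimal Python sketch of that comparison, assuming you already have the receive timestamps of the relevant messages; the function name, the half-open window and the `tolerance` parameter are illustrative assumptions, not BizTalk360 settings.

```python
from datetime import datetime, timedelta


def check_volume(timestamps, window_start, window, expected, tolerance=0):
    """Count messages in [window_start, window_start + window) and compare.

    Returns (actual_count, within_expectation).
    """
    window_end = window_start + window
    actual = sum(1 for t in timestamps if window_start <= t < window_end)
    return actual, abs(actual - expected) <= tolerance


# Example: a partner is expected to send 50 purchase orders per day,
# but only 48 arrive (one every 10 minutes from 08:00).
start = datetime(2018, 4, 11, 8, 0)
received = [start + timedelta(minutes=10 * i) for i in range(48)]
print(check_volume(received, start, timedelta(days=1), expected=50))  # (48, False)
```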

Get started with a Free Trial today!

Download and try BizTalk360 on your own environments free for 30 days. Installation will not take more than 5-10 minutes.

Author: Lex Hegt

Lex Hegt has worked in the IT sector for more than 25 years, mainly in roles as developer and administrator. He has worked with BizTalk since BizTalk Server 2004. Currently he is a Technical Lead at BizTalk360.

by Gautam | Apr 8, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Microsoft Announcements and Updates

Community Blog Posts

Videos

Podcasts

Feedback

I hope this is helpful. Please feel free to reach out and let me know your feedback on this Integration weekly series.

by Lex Hegt | Apr 4, 2018 | BizTalk Community Blogs via Syndication

This blog is a part of the series of blog articles we are publishing on the topic “Why we built XYZ feature in BizTalk360”. Read the main article here.

Why do we need this feature?

On a daily basis, our customers use BizTalk360 for many of their activities related to BizTalk operations and monitoring. However, in a number of scenarios, BizTalk administrators are faced with activities that need to be automated, for example during the deployment of BizTalk Applications. It is easy to forget some tasks, and doing them manually can be error-prone. So, it may be better to address such challenges in an automated way.

Here are some example automation scenarios that can occur in your BizTalk Server environment:

BizTalk Server Operations

- You might want to automatically stop certain Ports over the weekend, because of regular or temporary maintenance of internal or external systems

- External systems, like portals, might need BizTalk data/metrics on a regular basis, like information around EDI transactions, BizTalk usage or BizTalk performance metrics

- You want particular users to have easy access to download links for the messages of suspended instances, without having to give them access to BizTalk Server and/or BizTalk360

- You may want to Deploy/Publish a Business Rule Policy at a particular moment (for example, additional discounts for the holiday season, or laws that must be complied with as of a particular date)
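For the first of these scenarios, the core decision is whether the current time falls inside a maintenance window. The Python sketch below assumes a hypothetical weekend window from Friday 22:00 to Monday 06:00 and only computes the desired port state; actually stopping or starting the ports is left to whatever automation tooling you use.

```python
from datetime import datetime


def week_minute(weekday, hour, minute=0):
    """Minutes since Monday 00:00 (Monday = 0, as in datetime.weekday())."""
    return (weekday * 24 + hour) * 60 + minute


# Hypothetical maintenance window: Friday 22:00 until Monday 06:00.
WEEKEND_WINDOW = (week_minute(4, 22), week_minute(0, 6))


def in_window(now, window):
    start, end = window
    t = week_minute(now.weekday(), now.hour, now.minute)
    if start <= end:
        return start <= t < end
    return t >= start or t < end  # the window wraps past Sunday midnight


def desired_port_state(now, window=WEEKEND_WINDOW):
    """State an automation job would set the ports to at time `now`."""
    return "Stopped" if in_window(now, window) else "Started"


print(desired_port_state(datetime(2018, 4, 14, 12, 0)))  # a Saturday: Stopped
```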

BizTalk Applications Maintenance and Deployment

- Before or after deployment of BizTalk Applications, you may want to automatically stop and start Host Instances

- After a deployment, you may want to set certain ports/orchestrations to a particular state

- You want to bring BizTalk down at a given date and time, due to system maintenance

Identity and Access Management

- Your organisation uses tooling for Identity and Access Management and you want to automatically create User Access Policies for accessing BizTalk360 and BizTalk

- For auditing purposes, you need to provide user lists, profiles and audit information on a regular basis

Because of the nature of these scenarios, administrators need programmatic access to BizTalk Server and rely on command files or PowerShell scripts to fulfil the requirements.

What are the current options and challenges?

BizTalk Server comes with different tooling for programmatic access to BizTalk Server. This tooling is described below.

WMI and ExplorerOM

Let’s briefly discuss the purpose of both WMI and ExplorerOM:

- WMI (Windows Management Instrumentation) – an infrastructure that comes with Windows and allows you to automate administrative tasks on (remote) computers. BizTalk Server comes with its own WMI provider for basic management activities like starting/stopping host instances, restarting ports etc.

- ExplorerOM – a DLL (Microsoft.BizTalk.ExplorerOM.dll) used to perform all kinds of BizTalk application-related tasks, like exploring schemas, maps and orchestrations and operating on them (starting/stopping, etc.)

BizTalk PowerShell Provider

There is also a community initiative providing a BizTalk PowerShell provider, so you can execute some of the management tasks using PowerShell (which has become central to automating administrative tasks). However, the project is no longer actively maintained, and it’s not advisable to rely on it for any critical usage.

BizTalk Server 2016 Feature Packs

Starting with BizTalk Server 2016, Microsoft began releasing Feature Packs. With the first Feature Pack (BizTalk Server 2016 Feature Pack 1), a unified REST-based Management API became available for BizTalk Server 2016 (not for earlier versions).

This Management API enables some of the scenarios we mentioned in the first paragraph, but it still lacks APIs for extensive automation; for example, it does not cover stopping and starting host instances.

So, although BizTalk Server comes with multiple capabilities for programmatic access, writing and testing all kinds of scripts is time-consuming, and not all scenarios can be covered.

Even when these custom scripts seem to work nicely, non-functional requirements like security, auditing and logging are not taken into account in them. This leaves you in the dark when something unexpected happens; definitely not a situation you want to be in.

How BizTalk360 solves this problem?

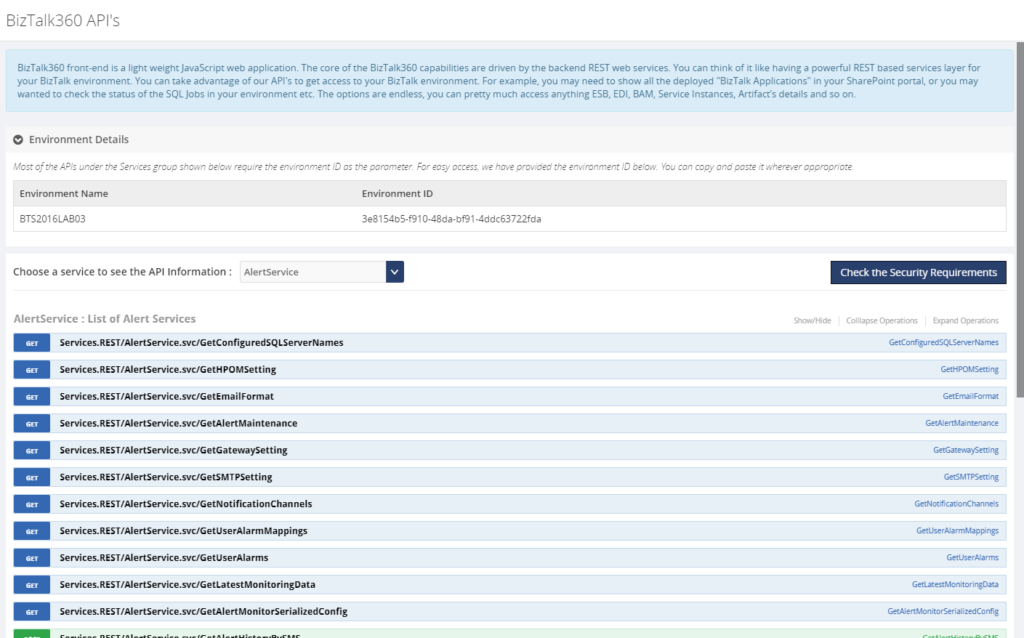

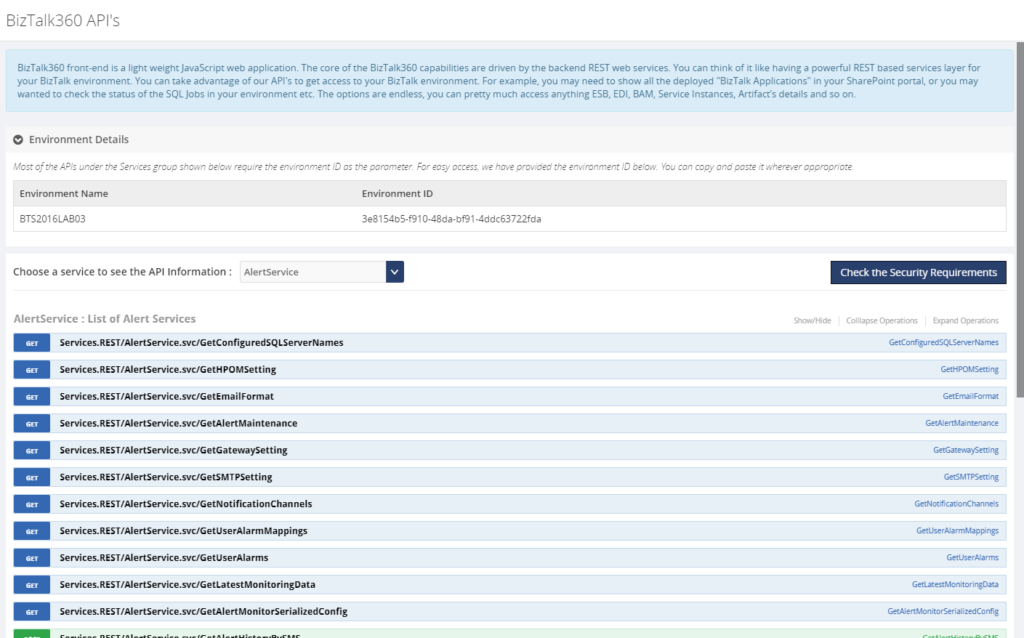

BizTalk360 consists of multiple components: a responsive user interface, a few backend services and a rich API service layer.

The API service layer consists of over 400 REST API operations. The BizTalk360 user interface uses these extremely rich APIs to perform all its tasks for managing the BizTalk environment.

A couple of years ago, we thought that exposing our APIs would greatly help BizTalk Server administrators automate most of the routine activities we mentioned in the beginning. Hence, we documented all the BizTalk360 APIs (which are, of course, the management and monitoring APIs for your BizTalk environment) using Swagger, and made them available for live testing within the BizTalk360 application.

The API currently contains 14 services with more than 400 API operations, covering a broad range of management activities you can perform on your BizTalk environment. To give you a better understanding of the capabilities, these services are:

- ActivityMonitoringService – BAM related services

- BizTalkQueryService – Execute all kinds of MessageBox- and tracking-oriented queries

- AlertService – All kinds of monitoring-related APIs

- BizTalkGroupService – BizTalk platform-oriented APIs

- SchedulerService – Data Monitoring scheduler-related APIs

- ESBManagementService – Exception Management-related APIs

- AdvancedEventViewerService – APIs to query the Event Log entries collected by the Advanced Event Viewer feature

- BizTalkApplicationService – Search for and act on BizTalk application artifacts

- AdminService – Get and maintain all kinds of settings within BizTalk360

- EDIManagementService – Get/Set EDI configuration and get EDI statistics

- EnvironmentMgmtService – APIs mainly for Knowledge Base and Dashboard management

- RulesEngineService – APIs for maintaining Business Rules

- AnalyticsDataService – Analytics, Message Patterns and Throttling-related APIs

- AzureService – Get/Operate on Logic Apps and get Integration Account artifacts

With the API, you can not only retrieve information from BizTalk via GET operations, but also act on BizTalk Server, BizTalk360 and even certain Azure services via POST operations.
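To illustrate the GET/POST split, here is a minimal Python sketch that builds (but does not send) one read request and one action request. Only the service name `BizTalkGroupService` comes from the list above; the base URL, the `<base>/<service>/<operation>` URL layout, the operation names `GetHostInstances` and `StopHostInstance`, and their parameters are hypothetical placeholders, not documented BizTalk360 routes. The Swagger documentation of your own installation shows the real paths.

```python
import json
import urllib.parse
import urllib.request

# ASSUMPTION: hypothetical base URL and URL layout (<base>/<service>/<operation>).
# Look up the real routes in your BizTalk360 API documentation.
BASE_URL = "http://biztalk360-server/BizTalk360/api"


def build_request(service, operation, params=None, payload=None):
    """Build (but do not send) a request for one API operation.

    Without a payload the request is a GET that only reads information;
    with a payload it is a POST that acts on the environment.
    """
    url = f"{BASE_URL}/{service}/{operation}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    if payload is None:
        return urllib.request.Request(url, method="GET")
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Read: list host instances (operation name and parameter are hypothetical).
read = build_request("BizTalkGroupService", "GetHostInstances",
                     params={"environmentId": "env-1"})

# Act: stop a host instance (operation name and payload fields are hypothetical).
act = build_request("BizTalkGroupService", "StopHostInstance",
                    payload={"hostName": "BizTalkServerApplication"})

print(read.get_method(), read.full_url)
print(act.get_method(), act.full_url)
```

To actually send a request, you would pass it to `urllib.request.urlopen` after adding whatever authentication your BizTalk360 installation requires; that part is deliberately left out of this sketch.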

Each API can be tried out from the API documentation, which comes with a Swagger definition file, by using the Try it out! feature. This helps you test the API with the required parameters. All you need to do is choose the API, provide its parameters, click the Try it out! button and view the results.
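Because the documentation ships with a Swagger definition file, you can also inspect the available operations programmatically. The sketch below assumes a Swagger 2.0 JSON definition and lists each operation together with the parameters it requires, which are the values you would fill in before clicking Try it out!. The paths in the sample definition are made up for illustration, and parameters given only as `$ref` are not resolved.

```python
import json

# HTTP methods that can appear as keys of a Swagger 2.0 path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def load_spec(filename):
    """Load a Swagger 2.0 definition from a JSON file."""
    with open(filename, encoding="utf-8") as f:
        return json.load(f)


def list_operations(spec):
    """Return (METHOD, path, operationId, required parameter names) per operation."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        # Path-level parameters apply to every operation on the path; operation-level
        # parameters with the same name and location override them.
        # Parameters given only as a $ref (no "name") are skipped in this sketch.
        shared = {(p["name"], p["in"]): p
                  for p in path_item.get("parameters", []) if "name" in p}
        for method, op in path_item.items():
            if method not in HTTP_METHODS:
                continue
            merged = dict(shared)
            merged.update({(p["name"], p["in"]): p
                           for p in op.get("parameters", []) if "name" in p})
            required = sorted(p["name"] for p in merged.values() if p.get("required"))
            operations.append((method.upper(), path, op.get("operationId"), required))
    return operations


# A tiny, made-up definition standing in for the real BizTalk360 file.
sample = {
    "swagger": "2.0",
    "paths": {
        "/BizTalkGroupService/GetHostInstances": {
            "get": {
                "operationId": "GetHostInstances",
                "parameters": [
                    {"name": "environmentId", "in": "query", "required": True, "type": "string"},
                ],
            },
        },
        "/AdminService/GetAlarms": {
            "parameters": [
                {"name": "environmentId", "in": "query", "required": True, "type": "string"},
            ],
            "get": {"operationId": "GetAlarms"},
        },
    },
}

for method, path, op_id, required in list_operations(sample):
    print(method, path, op_id, required)
```

Against a real file, you would call `list_operations(load_spec("swagger.json"))`, where the file name is a placeholder for wherever you saved the definition.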

Let’s have a look at a few examples of how you could make use of the API.

- Stop and start Host instances before and after deployment of BizTalk applications

- Disable/Enable Receive Locations and/or Stop/Start Orchestrations/Send Ports

- Get a list of currently deployed BizTalk Applications for Support purposes

- Get Event Log data of all the BizTalk servers in one go

- Get Throttling data from the BizTalk Hosts

- Get all kinds of performance metrics of your BizTalk system

- Test, Deploy, Undeploy and Publish Business Rules

- Disable/Enable BizTalk360 alarms during deployments

- Show data in an Operations Dashboard widget, for example, the state of your SQL jobs

- Automatically create BizTalk360 alarms during deployment of BizTalk applications
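Two of the items above, stopping/starting host instances and disabling/enabling alarms around a deployment, fit naturally into one deployment script. The Python sketch below shows the ordering, using nested `try`/`finally` blocks so that host instances are started again and the alarm is re-enabled even if the deployment fails. It assumes a hypothetical `call(service, operation, payload)` function that sends one POST request to the BizTalk360 API; the service names come from the list earlier in this post, but the operation names and payload fields are made up for illustration.

```python
from typing import Callable, Dict, List

# ASSUMPTION: `call(service, operation, payload)` is any function that sends
# one POST request to the BizTalk360 API. Service names come from the list of
# services; the operation names and payload fields are hypothetical.
Call = Callable[[str, str, Dict], None]


def deploy_with_maintenance(
    call: Call, alarm: str, host_instances: List[str], deploy: Callable[[], None]
) -> None:
    """Silence an alarm and stop host instances around a deployment, then restore both."""
    call("AlertService", "DisableAlarm", {"alarmName": alarm})
    try:
        try:
            for host in host_instances:
                call("BizTalkGroupService", "StopHostInstance", {"hostInstance": host})
            deploy()
        finally:
            # Start the host instances again even if stopping or deploying failed.
            for host in host_instances:
                call("BizTalkGroupService", "StartHostInstance", {"hostInstance": host})
    finally:
        # Always re-enable the alarm so monitoring resumes.
        call("AlertService", "EnableAlarm", {"alarmName": alarm})


# Usage with a recording stand-in for the HTTP call (no server needed).
calls = []


def record(service: str, operation: str, payload: Dict) -> None:
    calls.append((service, operation))


deploy_with_maintenance(record, "Production alarm", ["HostA", "HostB"],
                        lambda: calls.append(("deploy", "")))
print(calls)
```

In a real pipeline, the `deploy` callback would be whatever step actually installs the BizTalk applications, for example a BTDF deployment.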

Conclusion

When you use the BizTalk360 API and its documentation, the complexity of WMI and ExplorerOM is hidden from you. Besides being able to act on BizTalk, the API also allows you to act on BizTalk360. You can even show the output of API calls in the Operations Dashboard!

Here are a few examples of how the BizTalk360 APIs can be used in real-world scenarios: Automate Monitoring Alarm creation in BizTalk360

If you are using BTDF (BizTalk Deployment Framework), we have a wrapper console application called BT360Deploy that can be used to automate certain tasks, like creating monitoring alerts during deployment. The project can be accessed here.

Get started with a Free Trial today

Why not give BizTalk360 a try? It takes about 10 minutes to install on your BizTalk environments, and you can see the benefits of auto-healing on your own BizTalk environments. Get started with the free 30-day trial.

The post Why did we expose all of our BizTalk Operations and Management REST API’s? appeared first on BizTalk360.

Next, you can set up monitoring rules based on File Count and Directory Size and have BizTalk360 send Warning or Error notifications through the notification channels which are configured on the associated alarm.