by Saravana Kumar | Dec 30, 2018 | BizTalk Community Blogs via Syndication

One thing I have done consistently on the last day of each year since I started BizTalk360 back in 2011 is donate a small amount to GOSH (Great Ormond Street Hospital) as a way of saying thank you for one more successful year.

Wherever I am in the world, I make a note in my personal calendar to do this first thing on 31st December.

The definition of success differs from person to person. For me, it’s pretty simple: being healthy (both the people around me and myself), moving a few steps forward from where we started, and trying to help others move forward as much as I can. As long as we are not standing still or moving backward, and everyone around me is happy, it’s a successful year.

This year we contributed $10k to GOSH, bringing our total contribution to approximately $47,000 over the past 7 years. Here is the summary.

About GOSH

GOSH is one of the world’s leading children’s hospitals, housing the widest range of specialists under one roof. GOSH opened its doors in 1852 with just 10 beds; today it sees around 600 new patients every day. It was the first hospital in the UK dedicated solely to the treatment of children.

GOSH has been supported by great individuals such as Charles Dickens, Queen Victoria and Diana, Princess of Wales.

We are proud to be associated with such a great organization and such a great cause.

Author: Saravana Kumar

Saravana Kumar is the Founder and CTO of BizTalk360, enterprise software that acts as an all-in-one solution for better administration, operation, support and monitoring of Microsoft BizTalk Server environments.

by Rochelle Saldanha | Dec 27, 2018 | BizTalk Community Blogs via Syndication

As the year draws to a close, it’s time to gather your thoughts and think about all you’ve done, and how every step, no matter how big or small, got you to where you are today.

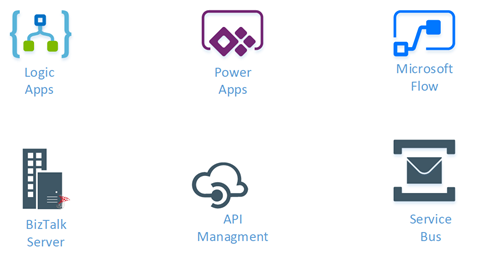

Our parent company, Kovai Ltd., made huge strides this year, and we have 4 active products on the market. We now have the complete package of tools to support hybrid integration scenarios.

- A one-platform solution for your operational, monitoring and analytics needs for your BizTalk environment

- Empower functional support teams and business users by providing end-to-end visibility into Azure Integration Services with rich business context

- The comprehensive way to operate, manage and monitor Azure Serverless Services related to enterprise integration

- The knowledge base software that scales with your product

Customer Happiness Team

Managing all our customers and providing top-notch customer service is at the top of our priorities. Customer service is all about serving and helping at the right time. It isn’t about selling or wanting something from your customers, but about guiding and helping them to get the best from our products.

Being part of the Technical Support and Customer Relationship teams has given us a good base for meaningful conversations with our customers: understanding the crux of their issues, suggesting suitable alternatives or workarounds, or providing helpful information. Our clients really appreciate the time we spend with them to help resolve their issues.

Customers don’t connect with automated bots,

Customers connect with real people.

This year the Technical support team pushed the boundaries of their skills and became part of the new DevOps teams.

DevOps is the practice of operations and development engineers participating together in the entire service lifecycle, from design through the development process to production support.

Everyone on the team contributed by developing a new feature, enhancing an existing feature, testing, documenting, writing blogs and so on. All the product support engineers underwent product training with the developers who built the product features and the QA engineers who tested them, and they evolved from support engineers into combined QA and product support engineers.

Document360 Team

This year was special for us at Kovai again, as we started a non-integration product aimed mainly at customers who, like us, struggle to find a dedicated self-service knowledge base platform that meets their needs.

At Kovai Limited, we needed this platform to provide customer support for our products. In fact, the whole thought process behind Document360 was driven by the pain points we experienced using helpdesk software for documentation.

We attended multiple events around London and Dublin, spreading the word about our knowledge base solution, Document360.

INTEGRATE 2018

Our annual event, INTEGRATE 2018, had more than 420 attendees, speakers and sponsors this year and was a grand success. The event was held at etc.venues in the centre of London.

We had a lot of interesting speakers from Microsoft as well as from across the integration landscape all over the world. We had speakers from as close as the UK, but also from as far away as New Zealand and Australia! All our sponsor booths had heavy footfall as well. It was great to meet old friends and make new ones in the real world, people we normally only meet via email and conference calls!

Each year, we try to bring some entertainment to the event. Last year we had a magician, while a new and interesting idea this year was that BizTalk360 hired Visual Scribing to draw a mural of all the presentations, capturing the key messages throughout the conference.

We also hired some entertainers whose skills the attendees thoroughly enjoyed: a caricaturist who captured the attendees’ true likenesses, and a skilled saxophone player who blew everyone’s socks off!

It was a testament to the hard work of the entire team that we successfully pulled off an event of this scale.

We are surely going to run the INTEGRATE event in 2019 as well! In fact, there will be an event in London and one in Redmond at the Microsoft Campus.

Feel free to join us in 2019; registrations are already open, with an Early Bird offer.

Xmas in Kovai UK

Team Kovai UK had a memorable Christmas lunch, complete with Santa hats, at the Rose & Crown, Orpington. As is tradition, we also held a Secret Santa in the office, had a few laughs and decorated our gorgeous Christmas tree.

The year has indeed come to an end, and we look forward to the new challenges and opportunities the coming year will bring; with each step, we become better and build our strength and character.

So another year has gone by; we are older and wiser and can look forward to a new year of things yet to unfold.

Author: Rochelle Saldanha

Rochelle Saldanha is currently working in the Customer Support & Client Relationship Teams at BizTalk360. She loves travelling and watching movies.

by Gautam | Dec 24, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

The Integration Weekly Update can be your solution. It’s a weekly update on integration topics: enterprise integration, robust and scalable messaging capabilities, and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to reach out to me with your feedback and questions.

by Sivaramakrishnan Arumugam | Dec 20, 2018 | BizTalk Community Blogs via Syndication

A few days back, we received a question from one of our customers about the possibility of installing BizTalk360 on a Windows Azure virtual machine. We replied with suggestions, and he was impressed by the installation options we provide for BizTalk360.

That was the inspiration for this blog post. Here, we look at the types of installers available and the different ways to install BizTalk360.

I recommend that you first have a look at the prerequisites that must be met to install and work with BizTalk360.

Installing BizTalk360 – What are the options

This is one of the common questions customers raise with us, and one we have observed frequently in recent customer engagements. There are various deployment choices available for BizTalk360.

The right deployment depends purely on the organization’s needs and on the architecture of its BizTalk environment. Here are the options BizTalk360 provides:

- Installing BizTalk360 on a BizTalk Server

- Installing BizTalk360 on a Stand-Alone (non-BizTalk) Server

- Installing Just the BizTalk360 Monitoring Service

- Installing Just the BizTalk360 Analytics Service

- Installing BizTalk360 on High Availability BizTalk Servers

How can you achieve the various deployment options?

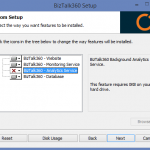

If you are installing BizTalk360 for the first time, you can select the components that you wish to install on the server.

Option 1 – Installation on the BizTalk Server environment

You can install BizTalk360 on one of the BizTalk Servers in your BizTalk Server environments (production, non-production, etc.) and configure all the environments in a single BizTalk360 interface.

Option 2 – Installation on a stand-alone server

This is another common scenario, where customers don’t want to install BizTalk360 directly in their production environment.

BizTalk360 supports this scenario by allowing customers to install it on a standalone server and access various environments from there. In this case, customers need to install only the BizTalk administration components on the standalone server. Any third-party adapters must also be installed on this server.

For customers who don’t want to install BizTalk360 in their BizTalk environment, we recommend installing it on a separate box. The benefit is that if your BizTalk server goes down, your BizTalk360 server won’t go down with it and will still be able to send notifications that your BizTalk server is unavailable.

Option 3 – Install Just the BizTalk360 Monitoring Service

The Monitoring Service plays a key role, as it is responsible for fetching the status of artifacts from the BizTalk server in order to monitor them. Using this option, you can install the BizTalk360 Monitoring Service on one separate box and the BizTalk360 database on another.

This setup is helpful when the customer wants the BizTalk360 web services and user interface (IIS), the BizTalk360 Monitoring Service and the BizTalk360 database all on separate boxes.

This setup can also be used to make the BizTalk360 Monitoring Service highly available. When the Monitoring Service is installed on two servers in high availability mode and one instance goes down, the other takes over, so BizTalk360 continues monitoring your BizTalk environment without interruption.

Option 4 – Install Just the BizTalk360 Analytics Service

Just as with the Monitoring Service, you can also install the BizTalk360 Analytics Service on a separate box.

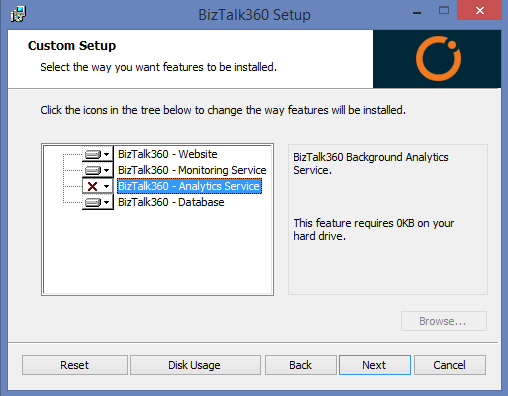

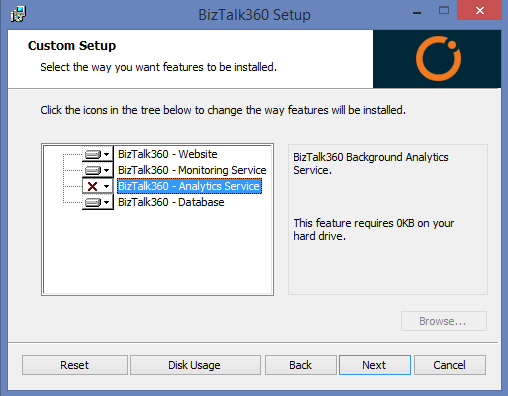

Option 5 – Installing BizTalk360 on High Availability BizTalk Servers

You can install BizTalk360 on BizTalk high availability servers. At the same time, you can make BizTalk360 itself highly available.

Installing BizTalk360 in a single-server environment, where the BizTalk server and database are on the same machine, is straightforward: you can simply run the BizTalk360 MSI from an Administrator command prompt.

In most cases, however, we have seen customers run BizTalk360 in high availability mode so that it is available all the time.

Different ways to install BizTalk360

To meet all our customers’ needs, there are a number of ways to install BizTalk360, and we provide different types of installer:

- Default installation

- Silent Installation

- Azure Marketplace installer

- Azure easy installer

Default installation

The Default installation is the regular way of installing BizTalk360.

Once you have created and configured a machine with all the prerequisites met, download the latest version of BizTalk360 (.msi) and install it on the machine.

In which case will this be helpful?

When you have a physical or virtual server within your organization, with or without internet connectivity.

You can achieve all the deployment choices:

- Installing BizTalk360 on a BizTalk Server

- Installing BizTalk360 on a Stand-Alone (non-BizTalk) Server

- Just the BizTalk360 Monitoring Service

- Just the BizTalk360 Analytics Service

- BizTalk360 on High Availability BizTalk Servers

You can download the latest BizTalk360 installer from http://www.biztalk360.com/free-trial/. You must fill in the registration form before downloading the BizTalk360 MSI.

Silent Installation

This is one of the more interesting cases that came to us. One of our customers was trying to install BizTalk360; although he was from the admin team, he was not a member of the BizTalk groups.

He asked whether there was any option to install BizTalk360 without the installer user interface. We do have silent installation support.

So we provided the customer with the command needed to run the installer without the GUI. Note, however, that with silent installation you can’t achieve all the deployment choices available with the regular installation (refer to the section “Default installation -> In which case will this be helpful?”).

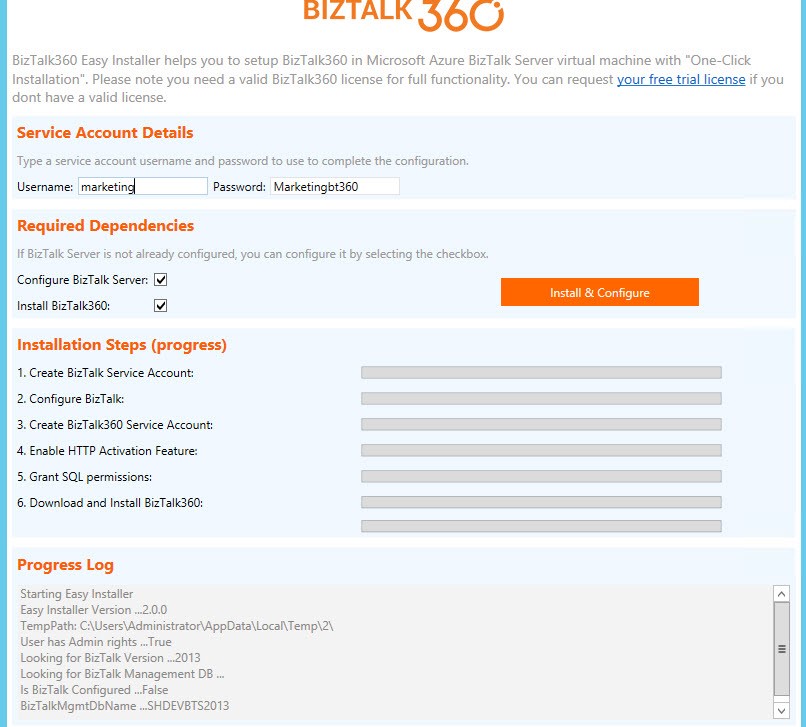

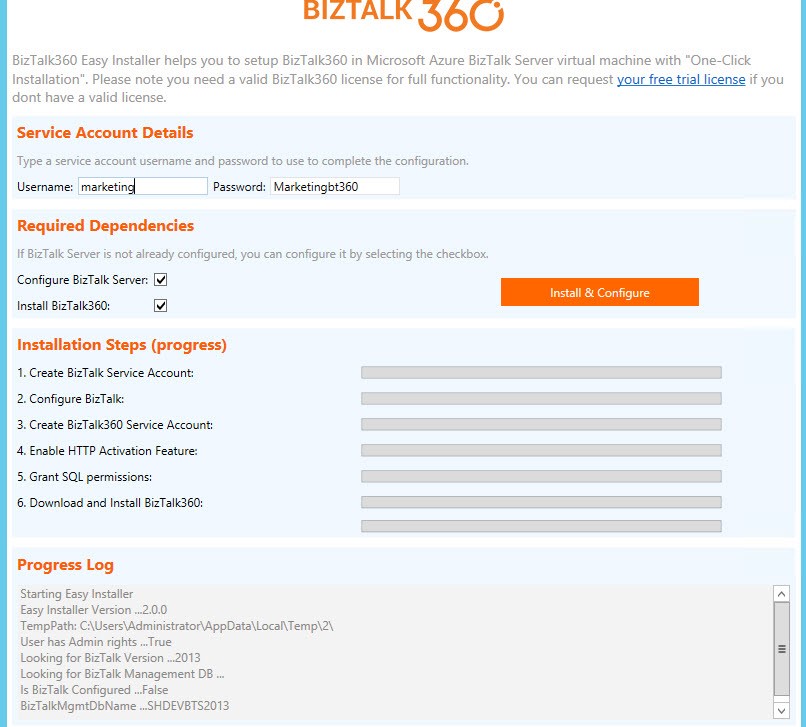

Azure easy installer

The move to the cloud eventually means that customers using BizTalk360 to manage and monitor their BizTalk Server environments will need their BizTalk360 setup to run in Azure too. Without BizTalk360 in their Azure setup, the only way for customers to manage their BizTalk environment (running on Azure) is by logging in to the server through an RDP connection.

There is also a limit on the number of RDP connections (users) that can access the server at the same time. Therefore, having BizTalk360 available becomes an important factor, whether in an on-premises setup or on a remote server in Azure.

In which case will this be helpful?

On any server that has BizTalk Server installed, the BizTalk360 Azure Easy Installer lets you install BizTalk360 by executing a single PowerShell command on that machine.

Why did we introduce this?

As the technology and its usability evolve, we would like to encourage customers who are already using BizTalk Server in Azure to move their BizTalk360 environment to the cloud as well.

The customers using BizTalk360 for managing and monitoring their BizTalk server environments in Azure will need their BizTalk360 setup to run on their Azure setup as well.

How easy to use is it?

With the BizTalk360 Azure Easy Installer, you can easily install BizTalk360 by executing a single PowerShell command on your BizTalk machine. The installer takes care of everything from scratch: creating a service account for BizTalk360, configuring the BizTalk server (optional), enabling the HTTP feature, granting SQL permissions and setting up IIS.

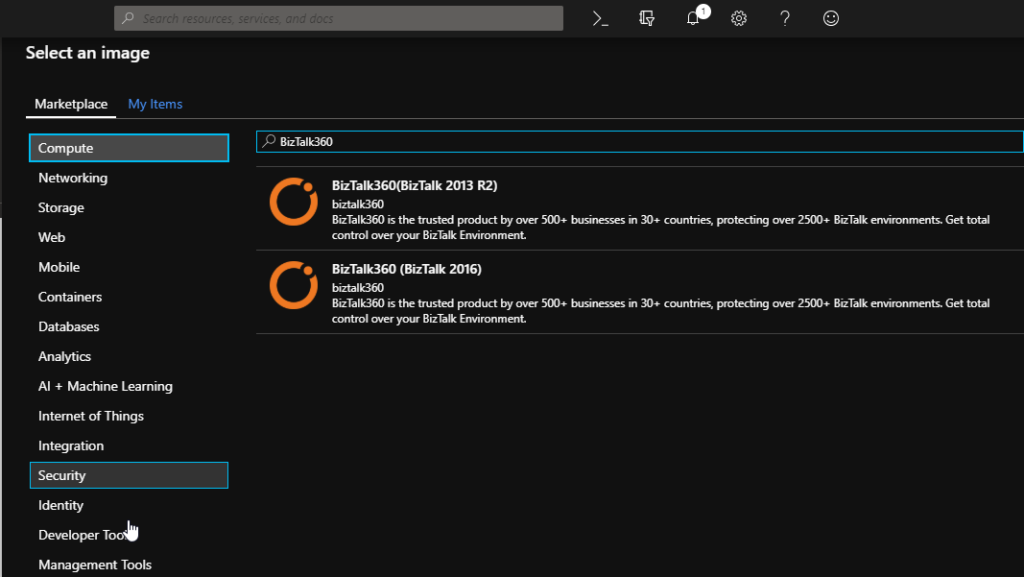

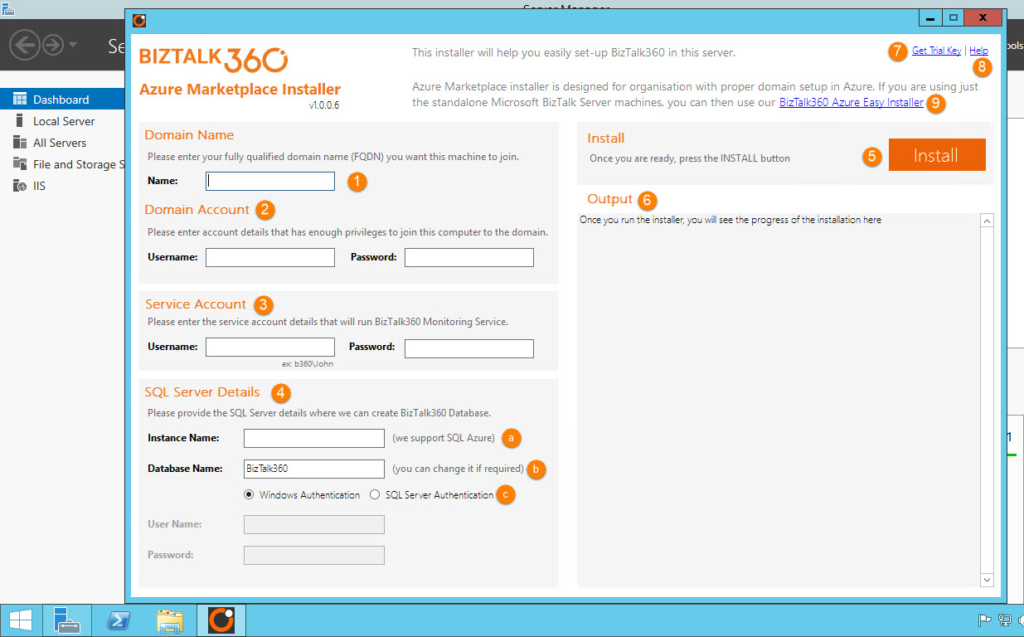

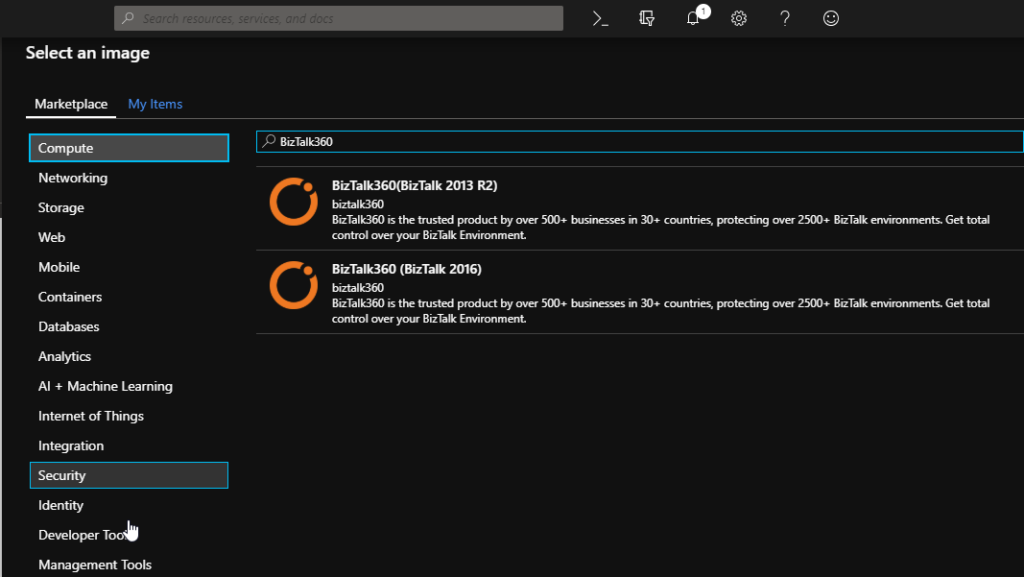

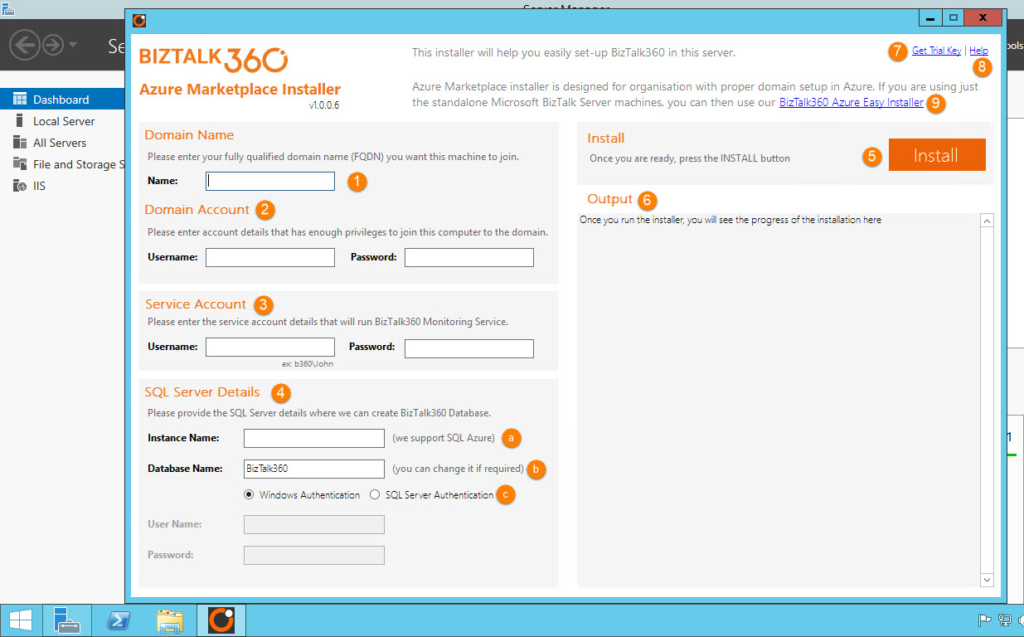

Azure Marketplace installer

This is helpful for customers having a Microsoft Azure account and provisioned BizTalk server machines in the cloud with a more complex setup such as a domain, Active Directory, and one or more BizTalk environments in the network.

With BizTalk360 in Azure, you get a complete deployment that can target your BizTalk environment running on-premises (via VPN or ExpressRoute) or on the Azure IaaS platform.

In which case will this be helpful?

With the help of BizTalk360’s Azure Marketplace installer, you can simply spin up a new BizTalk360 machine and add it to your existing BizTalk environment. We support BizTalk Server 2013 R2 and 2016.

Why did we introduce this?

This tool eases the installation process when the customer’s BizTalk server is set up in a domain-based Azure architecture. In any architecture, adding a new application is a difficult job, because many changes are needed, from granting permissions to meeting other prerequisites.

Likewise, a set of prerequisites must be met before installing BizTalk360. To make this job easier for users, we built the Azure Marketplace installer.

How easy to use is it?

When adding a new machine for BizTalk360, select a new virtual machine in the Marketplace and choose the image that matches your existing environment. As of now, we support BizTalk Server 2013 R2 and BizTalk Server 2016.

It is a simple process: once the new BizTalk360 virtual machine is created, the Azure Marketplace installer opens. After you provide the credentials, the machine is added to the domain (it restarts automatically as part of this process), and the BizTalk360 installation continues.

Upon successful completion, BizTalk360 is installed and a browser window opens with the BizTalk360 screen. Note that both the BizTalk360 Azure Marketplace installer and the Azure Easy Installer are free of charge.

Conclusion

Keeping the user perspective in mind, we have built various deployment choices for BizTalk360 customers to ease installation and provide a user-friendly experience.

Author: Sivaramakrishnan Arumugam

Sivaramakrishnan is our Support Engineer, with quite a few certifications under his belt. He has been instrumental in handling the customer support area. He believes travelling makes anyone happy.

by Gautam | Dec 16, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

The Integration Weekly Update can be your solution. It’s a weekly update on integration topics: enterprise integration, robust and scalable messaging capabilities, and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

Feedback

I hope this is helpful. Please feel free to reach out to me with your feedback and questions.

by Jeroen | Dec 16, 2018 | BizTalk Community Blogs via Syndication

TL;DR: There is no decent .NET support for PCF in the IBM client, but we can use IKVM.NET to convert the JARs to DLLs, so we can still use PCF from .NET instead of Java.

Intro

Programmable Command Formats (PCFs) define command and reply messages that can be used to create objects (queues, topics, channels, subscriptions, …) on IBM WebSphere MQ. For my current project, we wanted to build a custom REST API to automate object creation based on our specific needs. The IBM MQ REST API was not a viable alternative at that time.

The problem

The .NET PCF namespaces are not supported or documented by IBM, and they do not provide a way to inquire about the existing subscriptions on a queue manager. All the other tasks we wanted to automate are possible in .NET. Using Java seemed to be the only alternative if we wanted to build this custom REST API with all its features.

| Action | PCF Command | Result | Info |
| --- | --- | --- | --- |
| Create Local/Alias Queue | MQCMD_CREATE_Q | OK | |
| Delete Queue | MQCMD_DELETE_Q | OK | |
| List Queues | MQCMD_INQUIRE_Q | OK | |
| Purge Queue | MQCMD_CLEAR_Q | OK | |
| Create Subscription | MQCMD_CREATE_SUBSCRIPTION | OK | |
| List Subscriptions | MQCMD_INQUIRE_SUBSCRIPTION | NOK | Link1, Link2 |
| Delete Subscription | MQCMD_DELETE_SUBSCRIPTION | OK | |

Being able to use the .NET platform was a requirement at that time, because the whole build and deployment pipeline was focused on .NET.

IKVM.NET

After some searching I stumbled upon IKVM.NET:

“IKVM.NET is a JVM for the Microsoft .NET Framework and Mono. It can both dynamically run Java classes and can be used to convert Java jars into .NET assemblies. It also includes a port of the OpenJDK class libraries to .NET.”

Based on this description it sounded like it could offer a possible solution!

Using IKVM.NET we should be able to convert the IBM JARs to .NET assemblies and use the supported and documented IBM Java Packages from a .NET application.

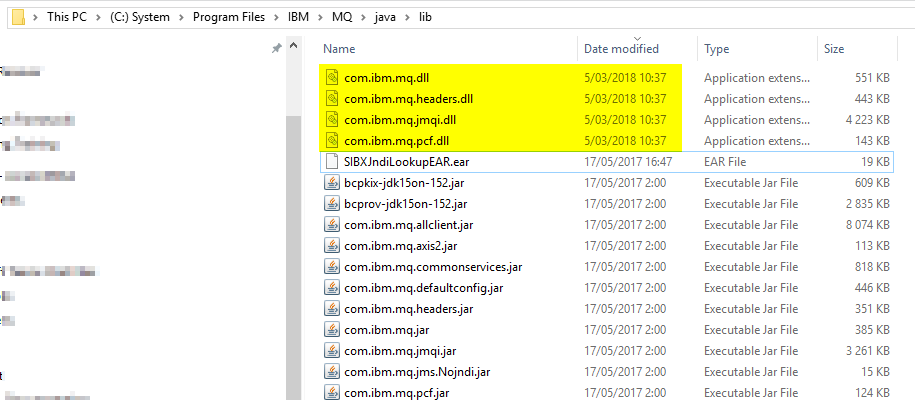

From JAR to DLL

Now I will briefly explain how we put it all together. IKVM.NET is not that easy to use the first time. The whole process basically consists of 3 steps:

- Download (and install) the IBM MQ redistributable client (in order to extract the JAR files)

- Convert the JARs to DLLs with IKVM.NET

- Copy the DLLs (the converted IBM JARs and the IKVM.NET runtime DLLs) and reference them in your .NET project

Convert JAR to DLL

Download IKVM: https://sourceforge.net/projects/ikvm/

Extract the IKVM files (c:\tools\IKVM)

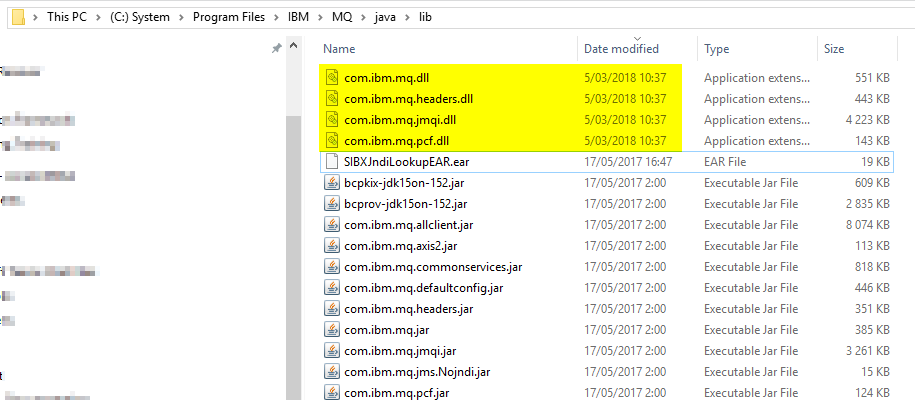

I have the IBM client installed, so the JAR files are in their default installation location (C:\Program Files\IBM\MQ\java\lib).

Open up a Command Prompt:

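```bat
REM Put ikvmc.exe on the PATH and go to the IBM MQ JAR folder
set path=%path%;c:\tools\IKVM\bin
cd "C:\Program Files\IBM\MQ\java\lib"
REM One DLL per { } group; -sharedclassloader lets the DLLs resolve each other's classes
ikvmc -target:library -sharedclassloader { com.ibm.mq.jar } { com.ibm.mq.jmqi.jar } { com.ibm.mq.headers.jar } { com.ibm.mq.pcf.jar }
```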

You will find the output in the source directory of the JAR files:

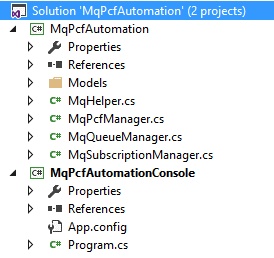

Add References…

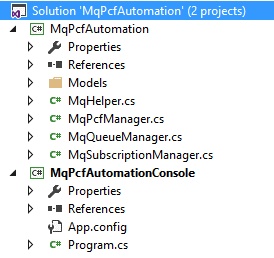

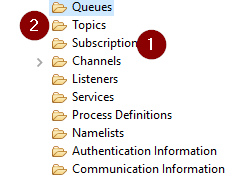

Now that we have our DLLs, we can add them to our .NET project. This was less easy than I thought; I spent a lot of time figuring out which dependencies I needed. In the end, this was my result:

1 = The IBM JARs converted to DLLs

2 = The IKVM.NET runtime DLLs

MqPcfAutomation Sample

To help you get started, I added my sample proof of concept solution to GitHub in the MqPcfAutomation repository.

In the sample, I showcase the functionality described in the table at the beginning of this post.

Conclusion

In the end, I am happy that I was able to build a solution for the problem. The question is whether this approach is advisable…

I don’t think IBM approves of this approach, but it works for what we need. We have been using this solution for more than 6 months now without any issues. In the future, we might be able to move to the IBM MQ REST API as more features are added.

by Jeroen | Dec 13, 2018 | BizTalk Community Blogs via Syndication

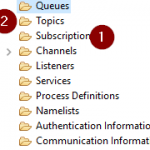

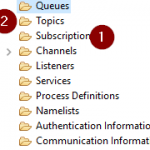

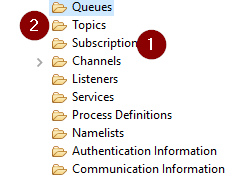

When using the IBM MQ client library for .NET, it is possible to make use of “Subscriptions” (1) on IBM MQ directly, without a topic object (2), by specifying the “TopicString” while sending a message.

The BizTalk MQSC adapter cannot specify a “TopicString”, and it cannot connect directly to a “Topic” (2) object either: it only supports sending to a “Queue” object.

The Solution:

It is, however, perfectly possible to create a Queue Alias that has a Topic as its base object. This Topic can be linked to one or more Subscriptions based on the TopicString.

[Queue Alias, with base object Topic] => [Topic, with TopicString /xyz/] => [Subscriptions, based on TopicString /xyz/]
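To make the chain concrete, here is a small in-memory model of that routing in Python. It is only a sketch of the behaviour described above, not the IBM MQ API: the object names (XYZ.ALIAS, XYZ.TOPIC, APP.A.IN and so on) are hypothetical, and topic strings are matched exactly, whereas real IBM MQ subscriptions can also use wildcards. In MQSC terms, the alias would typically be a QALIAS defined with TARGTYPE(TOPIC).

```python
from dataclasses import dataclass, field


@dataclass
class ToyQueueManager:
    """Toy model of alias -> topic -> subscriptions routing (not the IBM MQ API)."""

    aliases: dict[str, str] = field(default_factory=dict)  # alias queue -> base topic object
    topics: dict[str, str] = field(default_factory=dict)  # topic object -> topic string
    subscriptions: dict[str, tuple[str, str]] = field(default_factory=dict)  # sub -> (topic string, destination queue)
    queues: dict[str, list[str]] = field(default_factory=dict)  # destination queue -> messages

    def put(self, alias: str, message: str) -> list[str]:
        """Put a message on an alias queue and return the queues it was copied to."""
        topic_string = self.topics[self.aliases[alias]]  # resolve alias -> topic -> topic string
        delivered = []
        for sub_topic, destination in self.subscriptions.values():
            if sub_topic == topic_string:  # exact match only in this toy model
                self.queues.setdefault(destination, []).append(message)
                delivered.append(destination)
        return delivered


qm = ToyQueueManager()
qm.topics["XYZ.TOPIC"] = "/xyz/"
qm.aliases["XYZ.ALIAS"] = "XYZ.TOPIC"  # the "queue" a BizTalk MQSC send port would target
qm.subscriptions["SUB.A"] = ("/xyz/", "APP.A.IN")
qm.subscriptions["SUB.B"] = ("/xyz/", "APP.B.IN")
qm.subscriptions["SUB.C"] = ("/other/", "APP.C.IN")
routed = qm.put("XYZ.ALIAS", "<order/>")
print(routed)  # ['APP.A.IN', 'APP.B.IN']
```

From the sender's point of view there is only a queue; the fan-out to every matching subscription happens behind the alias.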

by Sandro Pereira | Dec 13, 2018 | BizTalk Community Blogs via Syndication

Welcome back to another entry in my blog post series about “BizTalk Server Tips and Tricks” for developers, administrators and business users. This time I couldn’t resist speaking about a topic that normally divides BizTalk developers and BizTalk administrators: Tracking Data!

Problem

Developers normally have full tracking enabled in their environments. Why? Because it makes it easier to debug, troubleshoot, analyze and validate that everything is running well, or simply to see what is happening with their new applications.

The important question is: Do Developers remember to disable tracking before they put the resources in production?

No! And actually… they don’t care about that! It is not their task to do it or control it, and if you ask them, tracking should always be enabled! To be fair, sometimes they don’t know the right configuration to apply in production.

This can be an annoying and time-consuming operation. It would be like asking developers to change their way of being: remembering to disable the tracking data properties each time they export an application can be a big challenge… or even impossible!

Solution (or possible solutions)

My advice, if you are a BizTalk administrator: let them be happy thinking they are annoying you, and take back control of your environment yourself.

These tasks can easily be automated and configured with PowerShell.

You should disable all tracking, or enable just the important settings, at the application level. You may lose 1 day developing these scripts, but then you no longer need to worry about it.

As an example, with this script, BizTalk DevOps: How to Disable Tracking Settings in BizTalk Server Environment, you can easily disable all tracking settings for all the artefacts (orchestrations, schemas, send ports, receive ports, pipelines) in your BizTalk Server environment:

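```powershell
# Load the BizTalk Explorer Object Model (installed with the BizTalk administration tools)
[void][System.Reflection.Assembly]::LoadWithPartialName("Microsoft.BizTalk.ExplorerOM")

# Connect to the BizTalk management database (assumed local instance; adjust SERVER as needed)
$Catalog = New-Object Microsoft.BizTalk.ExplorerOM.BtsCatalogExplorer
$Catalog.ConnectionString = "SERVER=.;DATABASE=BizTalkMgmtDb;Integrated Security=SSPI"

# A default (0) TrackingTypes value means no tracking options are selected on a port
$disablePortsTracking = New-Object Microsoft.BizTalk.ExplorerOM.TrackingTypes

foreach ($Application in $Catalog.Applications) {
    # Disable tracking settings in orchestrations
    $Application.Orchestrations |
        %{ $_.Tracking = [Microsoft.BizTalk.ExplorerOM.OrchestrationTrackingTypes]::None }

    # Disable tracking settings in send ports
    $Application.SendPorts |
        %{ $_.Tracking = $disablePortsTracking }

    # Disable tracking settings in receive ports
    $Application.ReceivePorts |
        %{ $_.Tracking = $disablePortsTracking }

    # Disable tracking settings in pipelines
    $Application.Pipelines |
        %{ $_.Tracking = [Microsoft.BizTalk.ExplorerOM.PipelineTrackingTypes]::None }

    # Disable "always track all properties" on document schemas
    $Application.Schemas |
        ?{ $_ -ne $null } |
        ?{ $_.Type -eq "document" } |
        %{ $_.AlwaysTrackAllProperties = $false }
}

# Persist the changes to the management database, or roll them back on failure
try { $Catalog.SaveChanges() } catch { $Catalog.DiscardChanges(); throw }
```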

You can easily edit this script to disable tracking for only one application, or to configure the right tracking settings for your applications and environment.

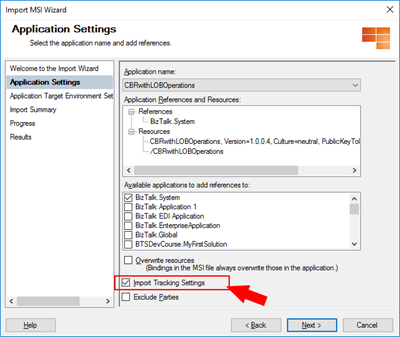

If you are working with BizTalk Server 2016…

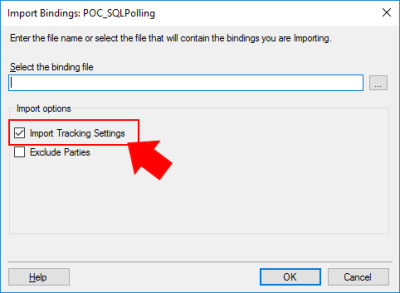

In previous versions of BizTalk Server, tracking settings were automatically imported with the rest of the application bindings. However, BizTalk Server 2016 has a new feature that gives you better control when importing your BizTalk applications: Import Tracking Settings.

If you are importing an MSI file, the “Application Settings” tab has an “Import Tracking Settings” checkbox that lets you say: I don’t want to import the tracking settings from DEV, or from whichever other environment the MSI was generated in.

If you are importing a binding file, you will also have the same option.

Of course, if you want to properly define the correct or minimum tracking settings for your application, you need to do it manually or, once again, with a PowerShell script.

Quick, simple and practical.

Stay tuned for new tips and tricks!

Author: Sandro Pereira

Sandro Pereira is an Azure MVP and works as an integration consultant at DevScope. In recent years, he has been implementing integration scenarios, both on-premises and in the cloud, for various clients, each with different scenarios from a technical point of view, size and criticality, using Microsoft Azure, Microsoft BizTalk Server and different technologies like AS2, EDI, RosettaNet, SAP, TIBCO etc.

by Dan Toomey | Dec 10, 2018 | BizTalk Community Blogs via Syndication

First of all, I’d like to apologise to all grandmothers out there… I mean you no disrespect. It’s just meant to be a catchy title, really. I know grandmothers who are smarter than most of us.

A couple of months ago I had the privilege of speaking at the API Days event in Melbourne. My topic was Building Event-Driven Integration Architectures, and within that talk I felt a need to compare events to messages, as Clemens Vasters did so eloquently in his presentation at INTEGRATE 2018. In a slight divergence within that talk, I highlighted three common messaging patterns using a pizza-based analogy. Given the time constraints, that segment was compressed into less than a minute, but I thought it might be valuable enough to put in a blog post.

Photo courtesy of mypizzachoice.com

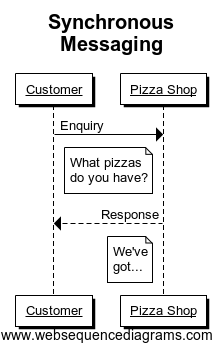

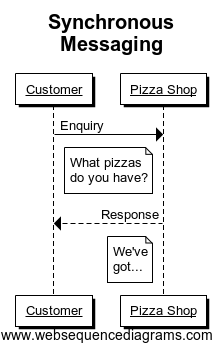

1) Synchronous Messaging

So before you can order a pizza, you need to know a couple of things. First of all, whether the pizza shop is open, and then of course what pizzas they have on offer. You really can’t do anything else without this knowledge, and those facts should be readily available – either by browsing a website, or by picking up the phone and dialling the shop. Essentially you make a request for information and that information is delivered to you straight away.

That’s what we expect with synchronous messaging: a request and response within the same channel, session and connection. And we shouldn’t have time to go and get a coffee before the answer comes back. From an application perspective, this is very simple to implement, as the service provider doesn’t have to initiate or establish a connection to the client; the client does all of that and the service simply responds. However, care must be taken to ensure the response is swift, lest you risk incurring a timeout exception. A timeout creates ambiguity for the client, who doesn’t really know whether the request was processed or not (especially troublesome if it were a transactional command that requires idempotency).
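Here is a minimal Python sketch of that timeout problem, assuming a hypothetical PizzaShop service that deduplicates orders by an idempotency key; the class, the key and the delays are all made up for illustration. The client blocks waiting for the reply, and when the wait exceeds its timeout it cannot tell whether the order went through, so it retries with the same key and the service hands back the original confirmation instead of baking a second pizza.

```python
import concurrent.futures
import threading
import time
import uuid


class PizzaShop:
    """Hypothetical service that deduplicates orders by an idempotency key."""

    def __init__(self, delay_s: float) -> None:
        self.delay_s = delay_s
        self.orders: dict[str, str] = {}
        self._lock = threading.Lock()

    def place_order(self, key: str, pizza: str) -> str:
        time.sleep(self.delay_s)  # simulated processing time
        with self._lock:
            # A repeated key returns the original confirmation instead of a new order
            if key not in self.orders:
                self.orders[key] = f"order-{len(self.orders) + 1}: {pizza}"
            return self.orders[key]


_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def call_sync(fn, timeout_s: float, *args):
    """Block until the reply arrives; raise concurrent.futures.TimeoutError after timeout_s."""
    return _pool.submit(fn, *args).result(timeout=timeout_s)


shop = PizzaShop(delay_s=0.2)
key = str(uuid.uuid4())  # idempotency key chosen by the client
try:
    reply = call_sync(shop.place_order, 0.05, key, "ham & pineapple")
except concurrent.futures.TimeoutError:
    # Ambiguous outcome: the order may or may not have been processed.
    # Retrying with the same key is safe because the service is idempotent.
    reply = call_sync(shop.place_order, 1.0, key, "ham & pineapple")
print(reply)  # order-1: ham & pineapple
```

Without the key, the retry would have created a second order, which is exactly the ambiguity a timeout introduces.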

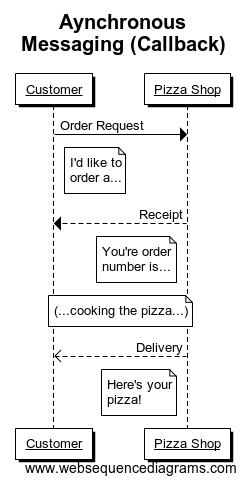

2) Asynchronous Messaging

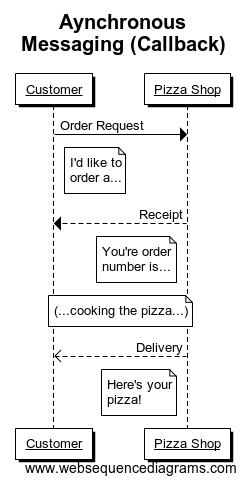

So now you know the store is open and what pizzas to choose from. Great. So you settle on that wonderful ham & pineapple pizza on a traditional crust with a garnish of basil, and you place your order. Now if the shopkeeper hands you a pizza straight away, you’re probably not terribly inclined to accept it. Clearly you expect your pizza to be cooked fresh to order, not just pulled ready-made off a shelf. More likely you’ll be given an order number or a ticket and told your pizza will be ready in 20 minutes or so.

Now comes the interesting part – the delivery. Typically you will have two choices. You can either ask them to deliver the pizza to you in your home. This frees you up to do other things while you wait, and you don’t have to worry about chasing after your purchase. They bring it to you. But there’s a slight catch: you have to give them a valid address.

In the asynchronous messaging world, we would call this address a “callback” endpoint. In this scenario, the service provider has the burden of delivering the response to the client when it’s ready. This also means catering for a scenario where the callback endpoint is invalid or unavailable. Handling this could be as blunt as dropping the response and forgetting about it, or as robust as storing it and sending an out-of-band message through some alternate route to the client to come pick it up. Either way, in most cases this is an easier solution for the client than for the service provider.
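Here is a minimal sketch of the provider's side of the callback pattern, assuming the callback is modelled as a plain Python function (in practice it would more likely be an HTTP endpoint or a queue supplied by the client). `CallbackProvider`, its `undelivered` store and the `unreachable` helper are hypothetical; the sketch takes the more robust of the two options described above and parks any undeliverable response rather than dropping it.

```python
from typing import Callable, Dict, Optional

Callback = Callable[[dict], None]


class CallbackProvider:
    """Hypothetical provider that pushes finished results to a client callback."""

    def __init__(self):
        # Results that could not be delivered, parked for out-of-band pickup.
        self.undelivered: Dict[str, dict] = {}

    def complete(self, order_id: str, result: dict,
                 callback: Optional[Callback]) -> bool:
        """Deliver via the callback; return False if the result was parked instead."""
        if callback is None:  # the client never supplied a valid "address"
            self.undelivered[order_id] = result
            return False
        try:
            callback(result)
            return True
        except ConnectionError:
            # Callback endpoint unreachable: keep the result rather than dropping it.
            self.undelivered[order_id] = result
            return False


def unreachable(_result: dict) -> None:
    # Hypothetical stand-in for a client endpoint that is offline.
    raise ConnectionError("callback endpoint unavailable")


if __name__ == "__main__":
    provider = CallbackProvider()
    inbox = []
    print(provider.complete("A1", {"pizza": "ham & pineapple"}, inbox.append))
    print(provider.complete("A2", {"pizza": "margherita"}, unreachable))
    print(inbox, provider.undelivered)
```

How the client is then told to collect a parked result (email, a status API, and so on) is deliberately left out; that is the "alternate route" part, and it is where most of the provider's extra burden lies.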

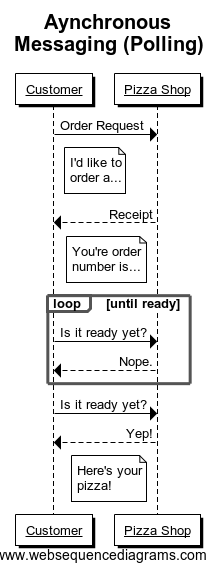

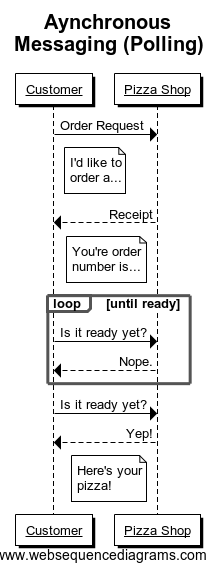

But what if you don’t want to give out your address? In this case, you might say to the pizza maker, “I’ll come in and pick it up.” So they say fine, it’ll be ready in 20 minutes. Only you get there in 10 minutes and ask if it’s ready; they say not yet. You wait a few more minutes and ask again, and get the same response. Eventually it is ready and they hand you your nice fresh piping hot pizza.

This is an example of a polling pattern. The only burden on the service provider is to produce the response and then store it somewhere temporarily. The client has the job of continually asking if it is ready, and needs to cater for a series of negative responses before finally retrieving the result it is after. You might see this as a less favourable approach for the client – but sometimes this is driven by constraints on the client side, such as difficulties opening up a firewall rule to allow incoming traffic.

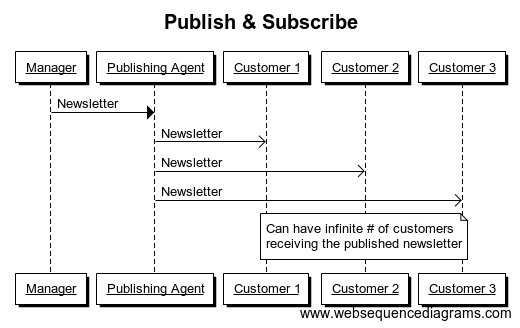
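The polling pattern can be sketched like this, assuming a simulated kitchen that becomes ready after a fixed number of checks. `PizzaCounter` and `poll_until_ready` are hypothetical names; the point is that the client, not the service, owns the loop and must tolerate a run of "not yet" answers, with a cap so that it doesn't ask forever.

```python
import time
from typing import Dict, Optional


class PizzaCounter:
    """Hypothetical service that stores results until the client asks for them."""

    def __init__(self, ready_after_checks: int):
        self._checks_left = ready_after_checks  # simulates cooking time
        self._store: Dict[str, Optional[str]] = {}

    def submit(self, order_id: str) -> str:
        self._store[order_id] = None  # accepted, not ready yet
        return order_id  # the "ticket" the client keeps

    def poll(self, ticket: str) -> Optional[str]:
        # Answer "not yet" (None) until the simulated kitchen is done.
        if self._checks_left > 0:
            self._checks_left -= 1
            return None
        if self._store[ticket] is None:
            self._store[ticket] = "ham & pineapple, basil garnish"
        return self._store[ticket]


def poll_until_ready(service: PizzaCounter, ticket: str,
                     max_attempts: int = 10, interval: float = 0.0):
    """Client-side loop: keep asking, tolerate 'not yet', give up eventually."""
    for attempt in range(1, max_attempts + 1):
        result = service.poll(ticket)
        if result is not None:
            return result, attempt
        time.sleep(interval)
    raise TimeoutError(f"{ticket} not ready after {max_attempts} checks")
```

In a real client `interval` would be measured in seconds rather than zero, and it is common to lengthen it between attempts (backoff) so that a slow kitchen isn't hammered with questions.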

3) Publish & Subscribe

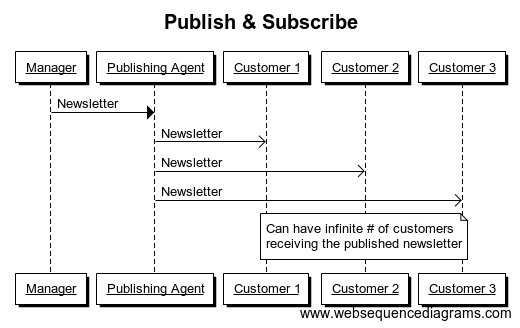

Now let’s say that the pizza was so good (even if a little on the pricey side) that you decide to compliment the manager. He asks if you’d like to be notified when there are special discounts or when new pizzas are introduced. You say “Sure!” and sign up on his mailing list.

Now the beauty of this arrangement is that it costs the manager no extra effort to have you join his mailing list. He still produces the same newsletter and publishes it through his mailing agent. The number of subscribers on the list can grow or shrink; it makes no difference. And the manager doesn’t even have to be aware of who is on that list or how many people are on it (although he may care if the list becomes very, very short!). You as the subscriber have the flexibility to opt in or opt out.

It is precisely this flexibility and scalability that makes this pattern so attractive in the messaging world. An application can easily be extended by creating new subscribers to a message, and this is unlikely to have an impact on the existing processes that consume the same message. It is also the most decoupled solution, as the publisher and subscriber need not know anything about each other in terms of protocols, language, endpoints, etc. (except of course for the publishing endpoint, which is typically distinct and isolated from both the publisher and consumer systems). This is the whole concept behind a message bus, and is the fundamental principle behind many integration and eventing platforms such as BizTalk Server and Azure Event Grid.
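Here is a minimal in-memory sketch of publish/subscribe, assuming a single process and synchronous delivery. `MessageBus` is a hypothetical class and is not how BizTalk Server or Azure Event Grid are implemented; it only shows the decoupling: the publisher calls `publish` with a topic name and never sees the list of subscribers, who can opt in or out at any time.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class MessageBus:
    """Hypothetical in-memory bus; publishers and subscribers share only a topic name."""

    def __init__(self):
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)  # opt in

    def unsubscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].remove(handler)  # opt out

    def publish(self, topic: str, message: dict) -> None:
        # One call from the publisher, whether there are zero subscribers or
        # thousands; the bus, not the publisher, handles the fan-out.
        # Iterate over a copy so a handler may unsubscribe during delivery.
        for handler in list(self._subscribers[topic]):
            handler(message)


if __name__ == "__main__":
    bus = MessageBus()
    alice, bob = [], []
    bus.subscribe("specials", alice.append)
    bus.subscribe("specials", bob.append)
    bus.publish("specials", {"deal": "2-for-1 Tuesdays"})
    bus.unsubscribe("specials", bob.append)
    bus.publish("specials", {"deal": "new truffle pizza"})
    print(len(alice), len(bob))
```

Real platforms layer durable storage, subscription filters and retry policies on top of this basic idea, but the publisher's side stays just as simple.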

The challenge comes when the publisher needs to make a change, as it can be difficult sometimes to determine the impact on the subscribers, particularly when the details of those subscribers are sketchy or unknown. Most platforms come with tooling that helps with this, but if you’re designing complex applications where many different services are glued together using publish / subscribe, you will need some very good documentation and some maintenance skills to look after it.

So I hope this analogy is useful – not just for explaining to your grandmothers, but to anyone who needs to grasp the concept of messaging patterns. And now… I think I’m going to go order a pizza.

by Gautam | Dec 9, 2018 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on topics related to integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities – empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

How to get started with iPaaS design & development in Azure?

Feedback

Hope this is helpful. Please feel free to reach out to me with your feedback and questions.