by Eldert Grootenboer | Oct 30, 2017 | BizTalk Community Blogs via Syndication

This is a new post in the IoT Hub series. In previous posts we saw how to administer our devices and how to send messages from the device and from the cloud. Now that we have all this data flowing through our systems, it is time to help our users actually work with this data.

Going back to the scenario we set in the first post of the series, we are receiving telemetry readings from our ships and getting alerts in case of high temperatures. In the samples we have been using console apps for communication between the systems, but in a real-life scenario you will probably want a better and easier interface. In the shipping business Dynamics CRM is already widely used, so the business would benefit from using the same product for its IoT solutions as well. Luckily it can, by using Microsoft Dynamics 365 for Field Service in combination with the Connected Field Service solution.

Setting Up Connected Field Service

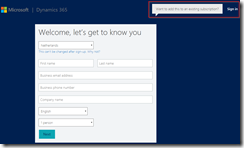

To start working with the Connected Field Service solution, we will first set up a 30-day trial of Dynamics 365 for Field Service. Remember that you need an organizational Microsoft account to sign up for Dynamics 365. If you do not have one, you can create a <your-tenant>.onmicrosoft.com account in your Azure Active Directory for this purpose. If you already have your own Dynamics 365 environment, you can skip ahead to installing Connected Field Service.

Create Dynamics 365 Environment

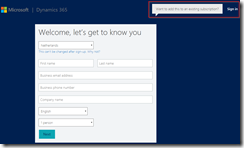

We will start by setting up Dynamics 365. In this post we will be using a trial account, but if you already have an account you can of course use that one instead. Go to the Dynamics 365 trial registration site and click Sign in to log in with an organizational account.

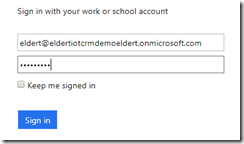

Sign in to Dynamics 365

Use an organizational account to sign in

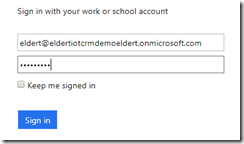

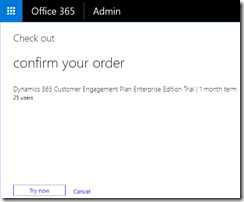

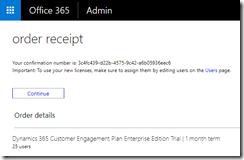

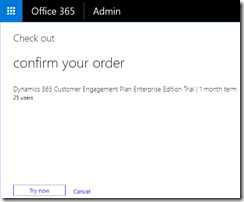

Once signed in, confirm that you want to sign up for the free trial.

Confirm the free trial

Your trial has been accepted

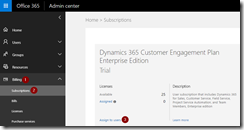

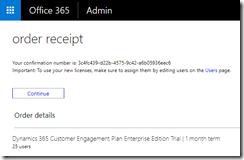

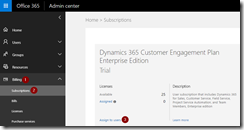

Now that we have created our free trial, we will have to assign licenses to our users. Open the Subscriptions blade under Billing and choose to assign licenses to your users.

Assign licenses to users

You will get an overview of all your users. Select the users to whom you want to assign the licenses, and click Edit product licenses.

Choose users to assign licenses

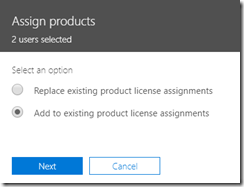

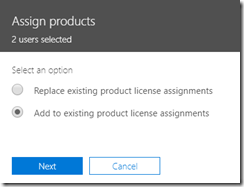

Add the licenses to the users we just selected.

Add licenses to users

Choose the trial license we just created. This will also add the connected Office 365 licenses.

Assign Dynamics 365 trial licenses

Now that we have assigned the Dynamics 365 licenses, we can finish our setup. Go to Admin Centers in the menu, and select Dynamics 365.

Go to Dynamics 365 admin center

As we are interested in Field Service, select this scenario and complete the setup. The Field Service scenario customizes our Dynamics 365 instance to include components like technician scheduling, inventory management, work orders and more, which a shipping company would use to keep track of repairs, maintenance, etc.

Select Field service

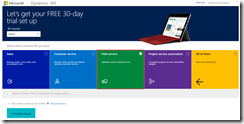

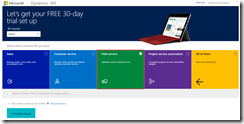

Once we have completed our Dynamics 365 setup, it will be shown in the browser. The address of the page will be in the format <yourtenant>.crm4.dynamics.com. You can also change this endpoint in your Dynamics 365 admin center.

Your Dynamics 365 environment

Security

To be able to install the Connected Field Service solution, we need to add ourselves to the CRM admins. To do this, within your Dynamics 365 portal (in my case https://eldertiotcrmdemoeldert.crm4.dynamics.com/) go to the Settings tab and open Security.

Open Dynamics 365 Security

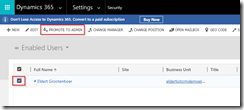

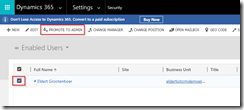

Now open Users, select your user account, and click Promote To Admin.

Promote your user to local admin

Install Connected Field Service Solution

Now that we have Dynamics 365 set up, it’s time to add the Connected Field Service solution, which we will use to manage and interact with our devices from Dynamics 365. Start by going to Dynamics 365 in the menu bar.

Open Dynamics 365

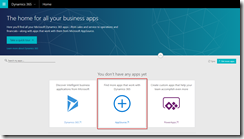

This will lead us to our Dynamics home, where we can install new apps. Click on Find more apps to open the app store.

Open the app store

Search for Connected Field Service, and click on Get it now to add it to our environment.

Add Connected Field Service solution

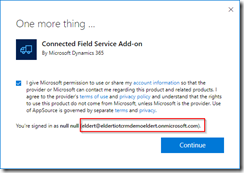

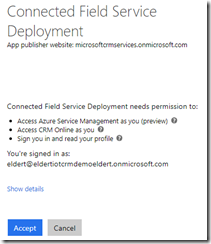

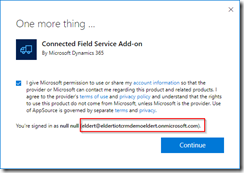

Accept the permissions, and make sure you are signed in with the correct user. The user must have a license and permission to install this solution.

Accept permissions

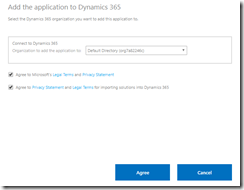

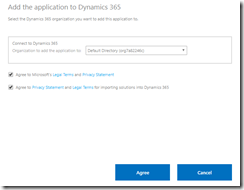

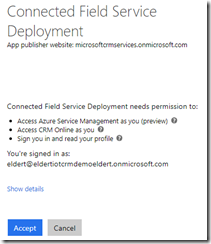

Follow the wizard for the solution and make sure you install it into the correct environment.

Select your Dynamics 365 environment

Accept deployment

On the next pages accept the service agreement and the privacy statement. Make sure you deploy to the correct Dynamics 365 Organization.

Select correct organization

Now we will have to specify the Azure resources where we want to deploy our artefacts like IoT Hub, Stream Analytics etc. If you do not see a subscription, make sure your user has the correct permissions in your Azure environment to create and retrieve artefacts and subscriptions.

Select Azure subscription and resources

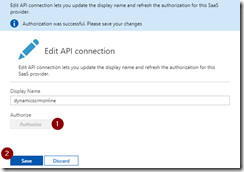

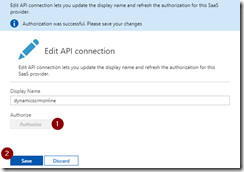

The wizard will now start deploying all the Azure artefacts and will update CRM with new screens and components. You can follow the progress by refreshing the page, or by coming back to the website later. Once this is finished, click the Authorize button, which sets up the connection between your Azure environment and Dynamics 365.

After deployment click on Authorize

This opens the API connection in the Azure portal. Click the message "This connection is not authenticated" to authorize the connection.

Click to authenticate

Authorize the connection

The Azure Solution

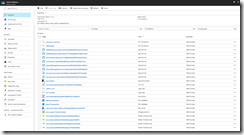

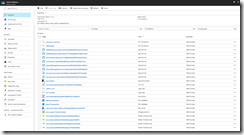

Now let’s go to the Azure portal, and see what has been installed. Open the resource group which we created in the wizard.

Resource group for our connected field service solution

As you can see, we have a lot of new resources. I will explain the most important ones here, along with their purpose in the Connected Field Service solution. After deployment, all resources are set up for the data from the sample application that was deployed along with the solution. If you want to use your own devices, you will need to update these resources. This is also the place to start building your own solution, as your requirements might differ from what you get out of the box. As all these resources can be modified from the portal (except for the API Apps), customizing this solution to your own needs is very easy.

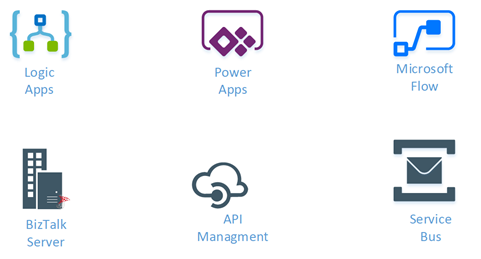

IoT Hub

The IoT Hub which has been created is used for the device management and communication. It uses device to cloud messaging to receive telemetry from our devices, and cloud to device messaging to send commands to our devices. When working with your own devices, you should update them to connect with this IoT Hub.

Service Bus

Four Service Bus queues have been created, which are used for holding messages between systems.

Stream Analytics

There are several Stream Analytics jobs, which are used to process the data coming in from IoT Hub. When working with your own devices, you should update these jobs to process your own data.

- Alerts: this job reads data from IoT Hub and checks it against device rules stored in a blob. If the job detects that it needs to send an alert to Dynamics 365 (in this case, a high temperature), it writes the alert to a Service Bus queue.

- PowerBI: this job reads all incoming telemetry data and sends the maximum temperature per minute to PowerBI.
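To make the two jobs more concrete, here is a minimal Python sketch of the logic they perform. It is not Stream Analytics query language and not the deployed job definitions; the reading fields (`device_id`, `temperature`, `timestamp`) and the per-device maximum-temperature rules are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Tuple


@dataclass
class Reading:
    # Hypothetical telemetry shape; the deployed simulator's payload may differ.
    device_id: str
    temperature: float
    timestamp: datetime


def find_alerts(readings: List[Reading], max_temp_rules: Dict[str, float]) -> List[dict]:
    """Alerts job, roughly: check each reading against its device's rule
    (the role of the reference blob) and collect the alerts that would be
    written to a Service Bus queue."""
    alerts = []
    for r in readings:
        limit = max_temp_rules.get(r.device_id)
        if limit is not None and r.temperature > limit:
            alerts.append({"device_id": r.device_id,
                           "temperature": r.temperature,
                           "time": r.timestamp.isoformat()})
    return alerts


def max_per_minute(readings: List[Reading]) -> Dict[Tuple[str, datetime], float]:
    """PowerBI job, roughly: maximum temperature per device per one-minute
    tumbling window. Windows are keyed here by the minute they start at."""
    maxima: Dict[Tuple[str, datetime], float] = defaultdict(lambda: float("-inf"))
    for r in readings:
        window_start = r.timestamp.replace(second=0, microsecond=0)
        key = (r.device_id, window_start)
        maxima[key] = max(maxima[key], r.temperature)
    return dict(maxima)
```

With a rule of 80 degrees for a device, a reading of 82.5 produces one alert, while every reading still contributes to the per-minute maximum that feeds the chart.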

API Apps

Custom API Apps have been created, which will be used to translate between messages from IoT Hub and Dynamics 365.

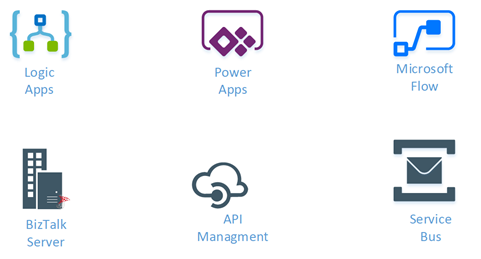

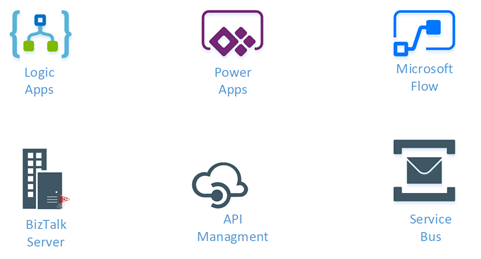

Logic Apps

There are two Logic Apps, which serve as a communications channel between Dynamics 365 and IoT Hub. The Logic Apps use queues, API Apps and the Dynamics 365 connector to send and receive messages between these systems.

Setting Up PowerBI

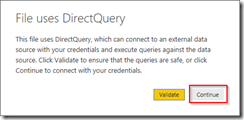

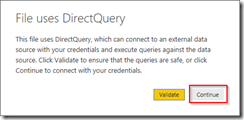

PowerBI will be used to generate charts from the telemetry readings. To use it, we first need to import the reports. Start by downloading the reports, and make sure you have a PowerBI account; it is recommended to use the same user you use for Dynamics 365. Open the downloaded reports file using PowerBI Desktop. The report will open with errors, because it was created with a sample SQL database and user. Update the query with your own SQL database and user, and then publish the report to PowerBI.

Open the downloaded report

Once opened, click on Edit Queries to change the connection to your database.

Select Edit Queries Open Advanced Editor

Replace the source SQL server and database with the resources provisioned in your Azure resource group. The database server and database name can be found through the Azure portal.

Update Azure SQL Server and database names

Enter the credentials of your database user when requested.

Enter login credentials

If you get an error saying your client IP is not allowed to connect, use the Azure portal to add your client IP to the firewall on your Azure SQL Server.

Not allowed to connect

Add client IP to firewall settings

Once done, click on Close & Apply to update the report file.

Close and apply changes

Now we will publish the report to PowerBI, so we can use it from Dynamics 365. Click on the Publish button to start, and make sure to save your changes.

Publish report to PowerBI

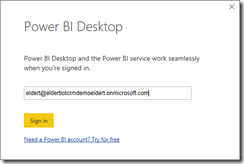

Sign in to your PowerBI account and wait for your report to be published.

Sign in to PowerBI

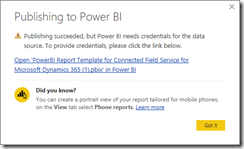

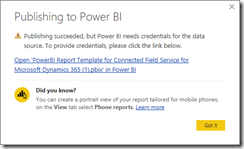

Once published, open the link to provide your credentials.

Publishing succeeded

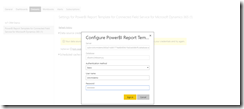

Follow the link to edit your credentials, and update the credentials with your database user login.

Sign in with database user

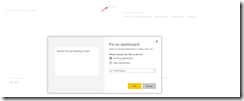

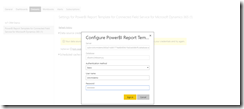

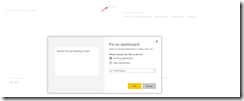

Now pin the tiles to a dashboard, creating one if it does not yet exist.

Pin tiles to dashboard

Managing Devices

In this post we will be using the simulator which has been deployed along with the solution. If you want to use your own (simulated) devices, be sure to update the connections and data for the deployed services. Go to your Dynamics 365 environment, open the Field Service menu, and select Customer Assets.

Open Customer Assets

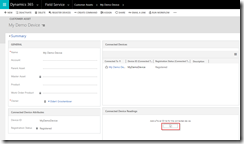

To add a new device, we will create a new asset. This asset will then be linked to a device in IoT Hub.

Create new asset

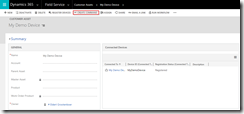

Fill in the details of the asset. Note that we need to set a Device ID here; this is the ID with which the device is registered in IoT Hub. When done, click Save.
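Because this Device ID becomes the device's identity in IoT Hub, it can help to validate it before saving the asset. The sketch below assumes the rules from the IoT Hub identity registry documentation as I understand them (case-sensitive, at most 128 characters, ASCII letters and digits plus a limited set of special characters); check the current documentation before relying on the exact character set.

```python
import re

# Assumption: allowed characters as listed in the IoT Hub identity registry
# docs: ASCII letters, digits and - . + % _ # * ? ! ( ) , : = @ $ '
_DEVICE_ID = re.compile(r"[A-Za-z0-9\-.+%_#*?!(),:=@$']{1,128}")


def is_valid_device_id(device_id: str) -> bool:
    """Return True if device_id matches the assumed IoT Hub device ID rules."""
    return _DEVICE_ID.fullmatch(device_id) is not None
```

Using `fullmatch` rather than `match` ensures the whole string is checked, so an ID with a trailing space or a non-ASCII character is rejected.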

Set asset details

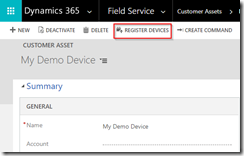

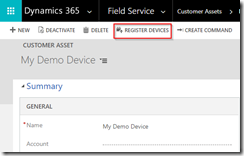

Once the asset has been saved, you will notice a new command in the command bar called Register Devices. This registers the new device in IoT Hub and links it to our asset in Dynamics 365. Click it now.

Register the device in IoT Hub

The device will now be registered in IoT Hub. Once this is done, the registration status will be updated to Registered. We can now start interacting with our device.

Device has been registered in IoT Hub

Receive Telemetry

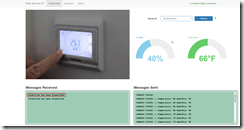

Open the thermostat simulator, which was part of the deployment of the Connected Field Service solution. You can do this by going back to the deployment website and clicking Open Simulator.

Open the simulator

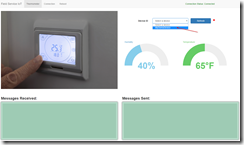

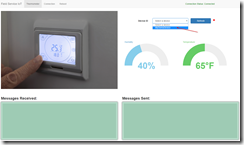

This will open a new website where we can simulate a thermostat. Start by selecting the device we just created from Dynamics 365.

Select device

Once the device has been selected, we will start seeing messages being sent. These are sent to IoT Hub and end up in PowerBI, and alerts are created if the temperature gets too high. Increase the temperature to trigger some alerts.

Generate high temperature

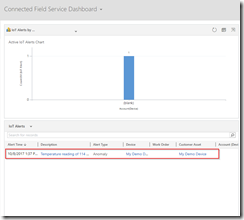

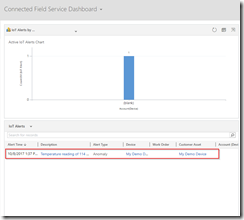

Now go back to Dynamics 365, and open the Field Service dashboard.

Open Field Service dashboard

On the dashboard we will now see a new IoT Alert. You can open this alert to see its details and, for example, create a work order for it. In our shipping scenario, this would allow us to recognize anomalies in the ships' engines in near real time and take immediate action, like arranging repairs.

Alerts are shown in Dynamics 365

Connect PowerBI

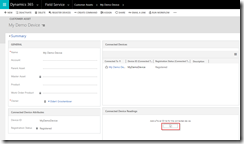

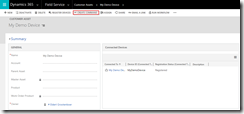

Now let’s set up Dynamics 365 to include the PowerBI graph in our assets, so we have an overview of our telemetry at all times as well. Go back to the asset we created earlier, and click the PowerBI button in the Connected Device Readings area.

Add PowerBI tile to asset

Choose one of the tiles we previously pinned to the PowerBI dashboard and click Save.

Add PowerBI tile

We will now see the recent device readings in our Dynamics 365 asset. This shows up on every asset, with the readings for its registered device, allowing us to keep track of all our devices' readings.

Device readings are now integrated in Dynamics 365

Send Commands

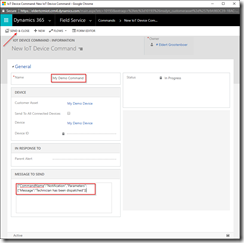

For the final part, we will have a look at how we can send messages from Dynamics 365 to our device. Go back to the asset we created, and click Create Command.

Click Create Command

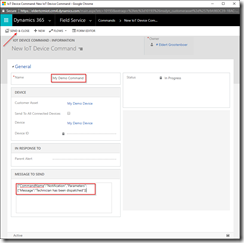

Give the command a name and provide the command itself. This should be in JSON format so that it can be parsed by the device. As we are using the simulator, we will just send a demo command, but for your own device this should be a command your device understands. You can send this command to a particular device or to all your devices. Once you have filled in the fields, click Send & Close to send the command. The command we will be sending is as follows.

{"CommandName":"Notification","Parameters":{"Message":"Technician has been dispatched"}}

Create and send your command

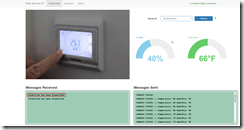

Now when we switch over to our simulator, we will see the command coming in.

Commands are coming in

Conclusion

By using Dynamics 365 in combination with the Connected Field Service solution, we let our users administer and communicate with their IoT devices from an environment they already know well. It allows them to handle alerts and dispatch technicians as soon as needed. With the readings integrated, they are always informed about the status of the devices, and by sending commands back to the devices they can work with them remotely.

IoT Hub Blog Series

In case you missed the other articles from this IoT Hub series, take a look here.

Blog 1: Device Administration Using Azure IoT Hub

Blog 2: Implementing Device To Cloud Messaging Using IoT Hub

Blog 3: Using IoT Hub for Cloud to Device Messaging

Author: Eldert Grootenboer

Eldert is a Microsoft Integration Architect and Azure MVP from the Netherlands, currently working at Motion10, mainly focused on IoT and BizTalk Server and Azure integration. He comes from a .NET background, and has been in IT since 2006. He has been working with BizTalk since 2010 and since then has expanded into Azure and surrounding technologies as well. Eldert loves working in integration projects, as each project brings new challenges and there is always something new to learn. In his spare time Eldert likes to be active in the integration community and get his hands dirty on new technologies. He can be found on Twitter at @egrootenboer and has a blog at http://blog.eldert.net/. View all posts by Eldert Grootenboer

by Gautam | Oct 29, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It’s a weekly update on the topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

On-Premise Integration:

Cloud and Hybrid Integration:

Feedback

Hope this is helpful. Please feel free to let me know your feedback on the Integration weekly series.

by Steef-Jan Wiggers | Oct 28, 2017 | BizTalk Community Blogs via Syndication

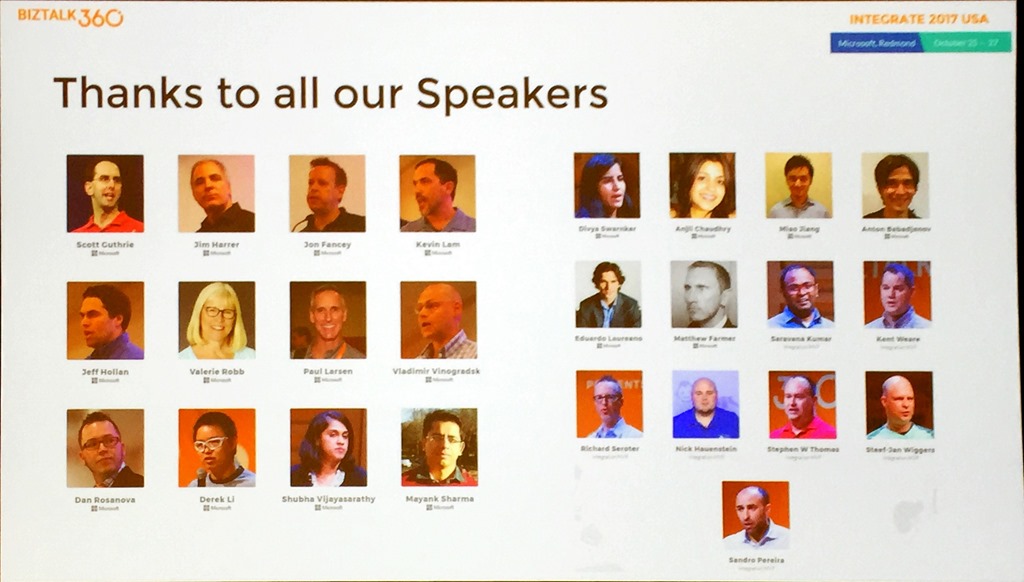

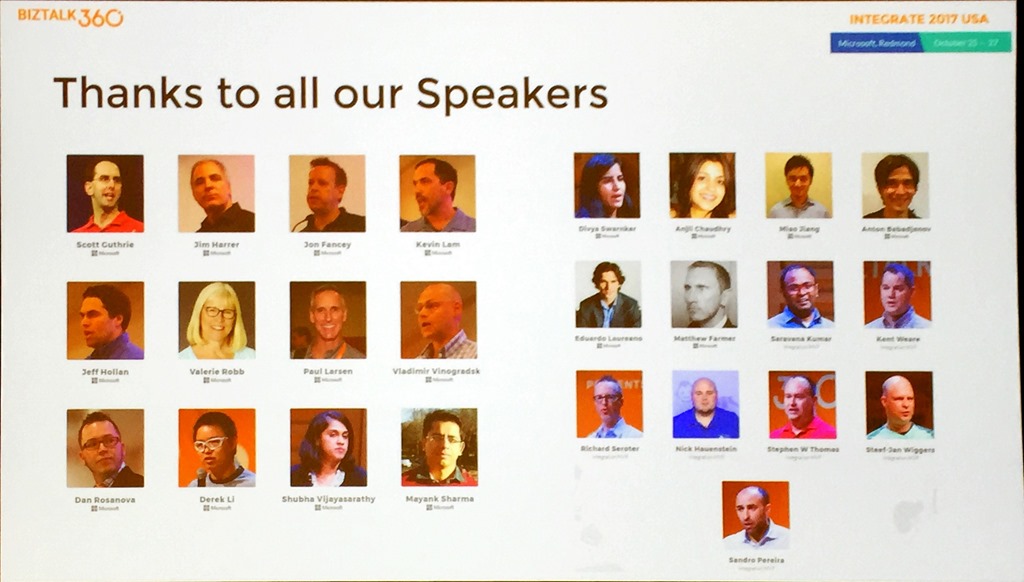

Day 3, the final day of Integrate 2017 USA, at Microsoft Campus building 92. So far the event has been well received, and people were happy to see the innovations, investments, and passion Microsoft is bringing to its customers and pro-integration professionals.

Check out the recap of the events on Day 1 and Day 2 at Integrate 2017 USA.

Moving to Cloud-Native Integration

Richard started the final day of Integrate 2017 USA by stating that the conference actually starts now. He is a great speaker to get the audience pumped about cloud-native integration. Richard talked about what analysts at Gartner see happening in integration: cloud service integration is on the rise. The first two days of this conference made that apparent with the various talks about Logic Apps, Flow, and Functions.

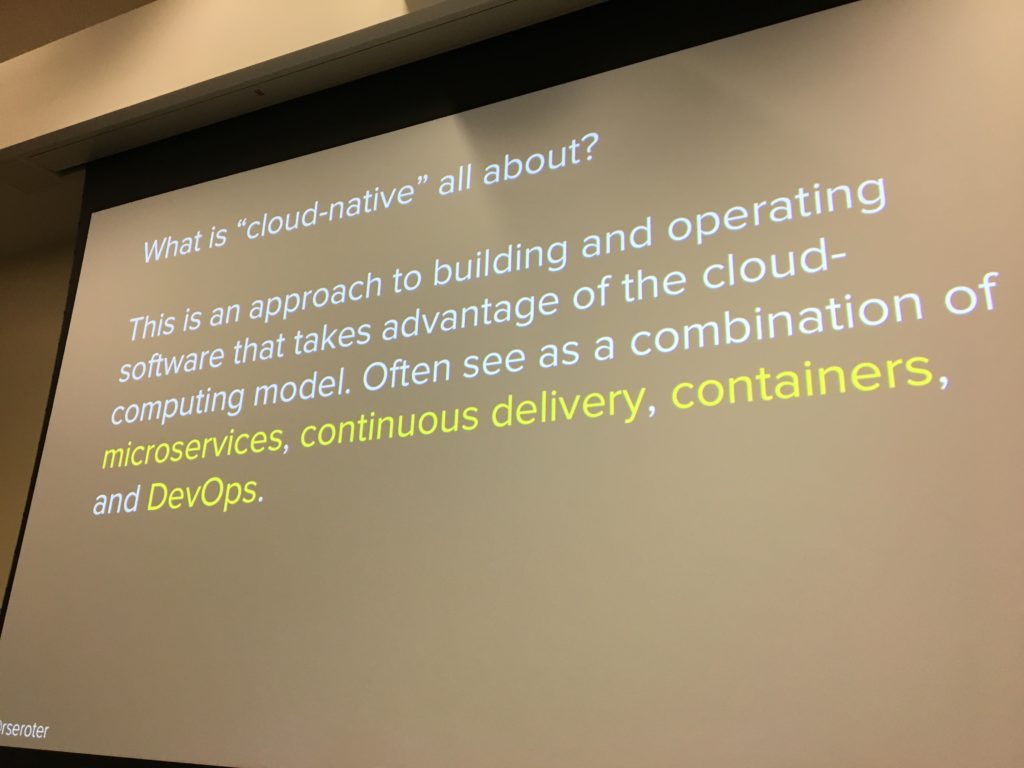

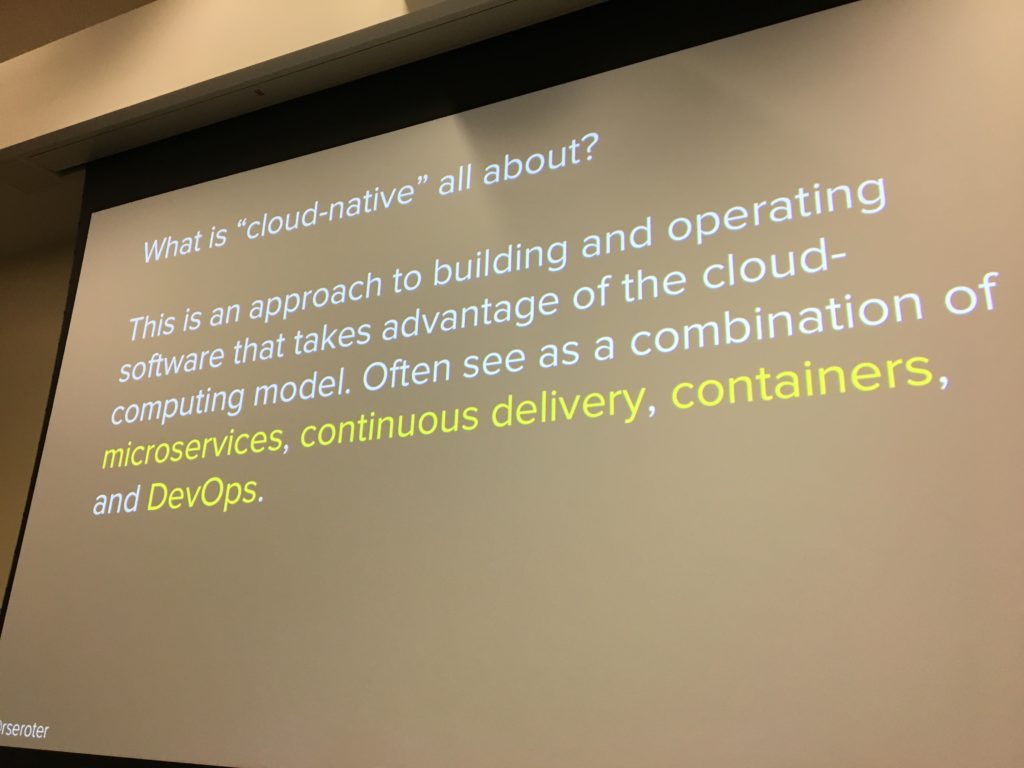

What is “cloud-native”? Richard explained that during his talk.

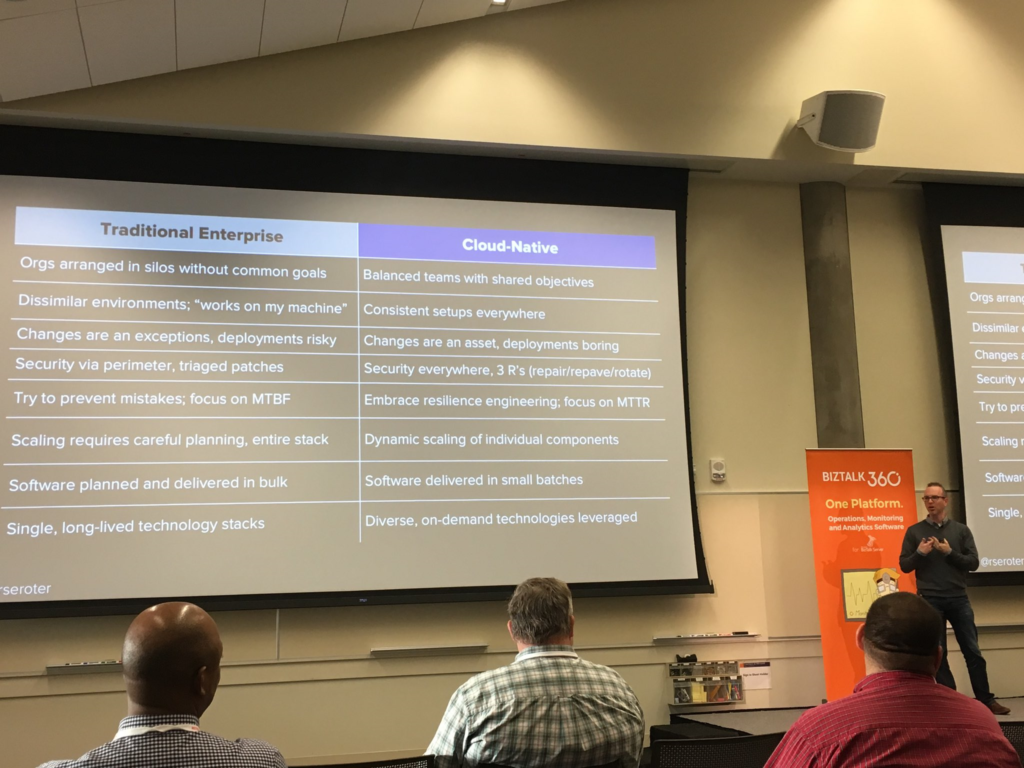

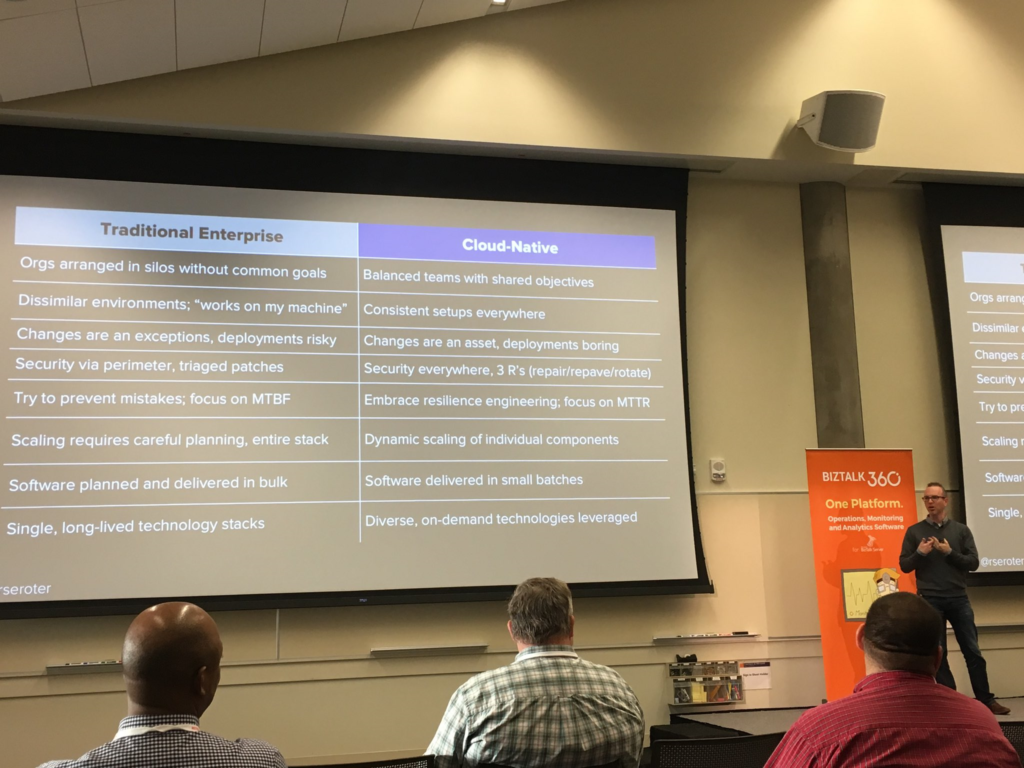

The most interesting part of the session was the comparison between the traditional enterprise and cloud-native. The way going forward is “cloud-native”.

The best way to show what cloud-native really means is with demos. Richard showed how to build a Logic App as a data pipeline, how to use the BizTalk REST API available through the Feature Pack, and how to automate Azure via Service Broker.

The takeaway from this session was a new way of thinking about integration. Finally, a book discussing the topic further is coming out soon.

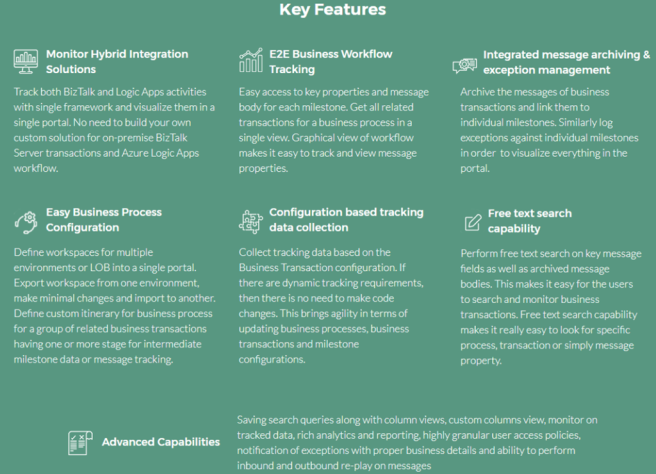

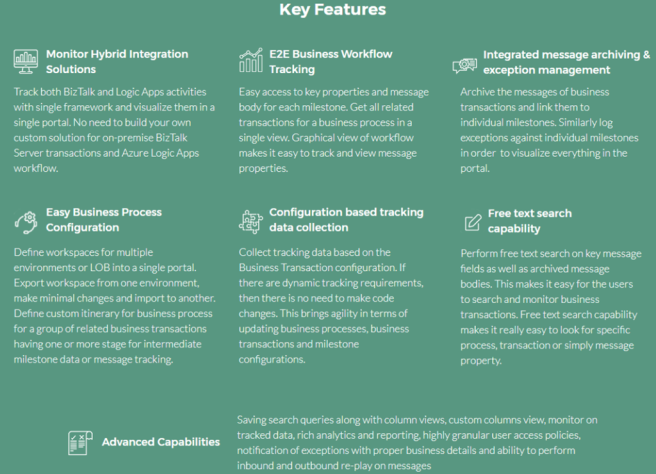

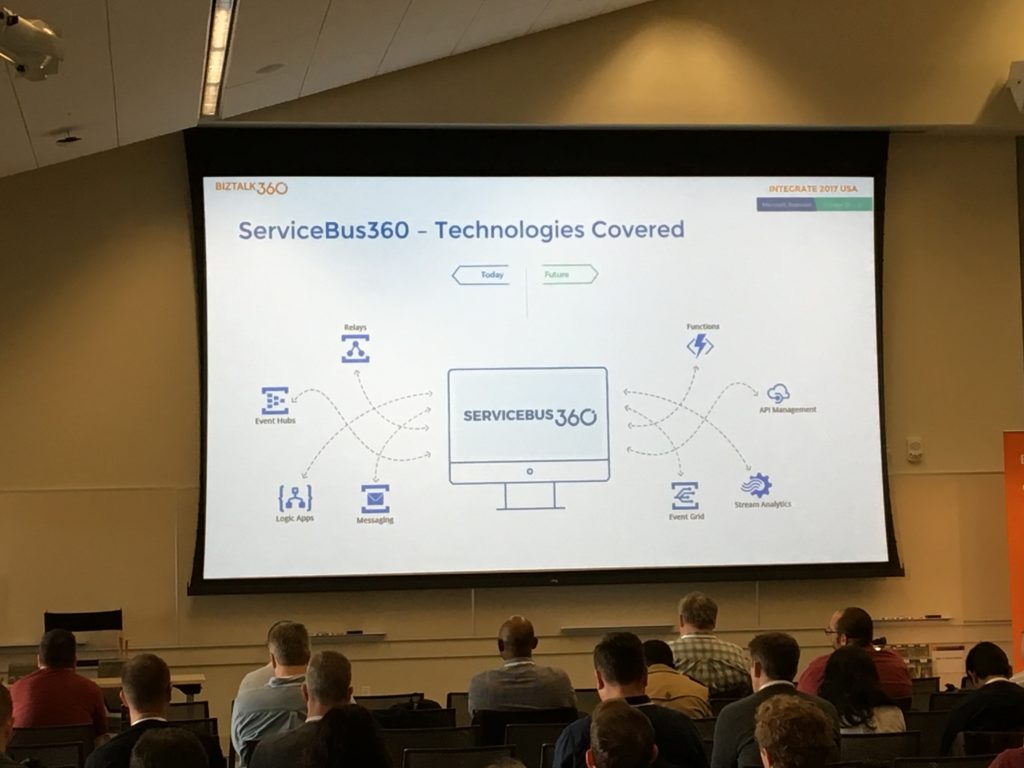

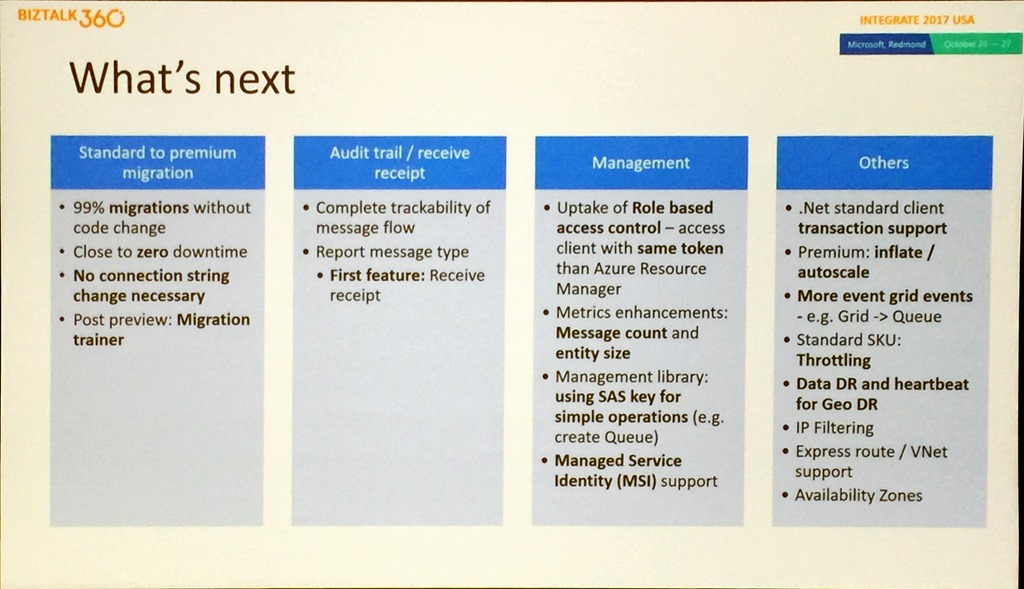

What’s there & what’s coming in ServiceBus360

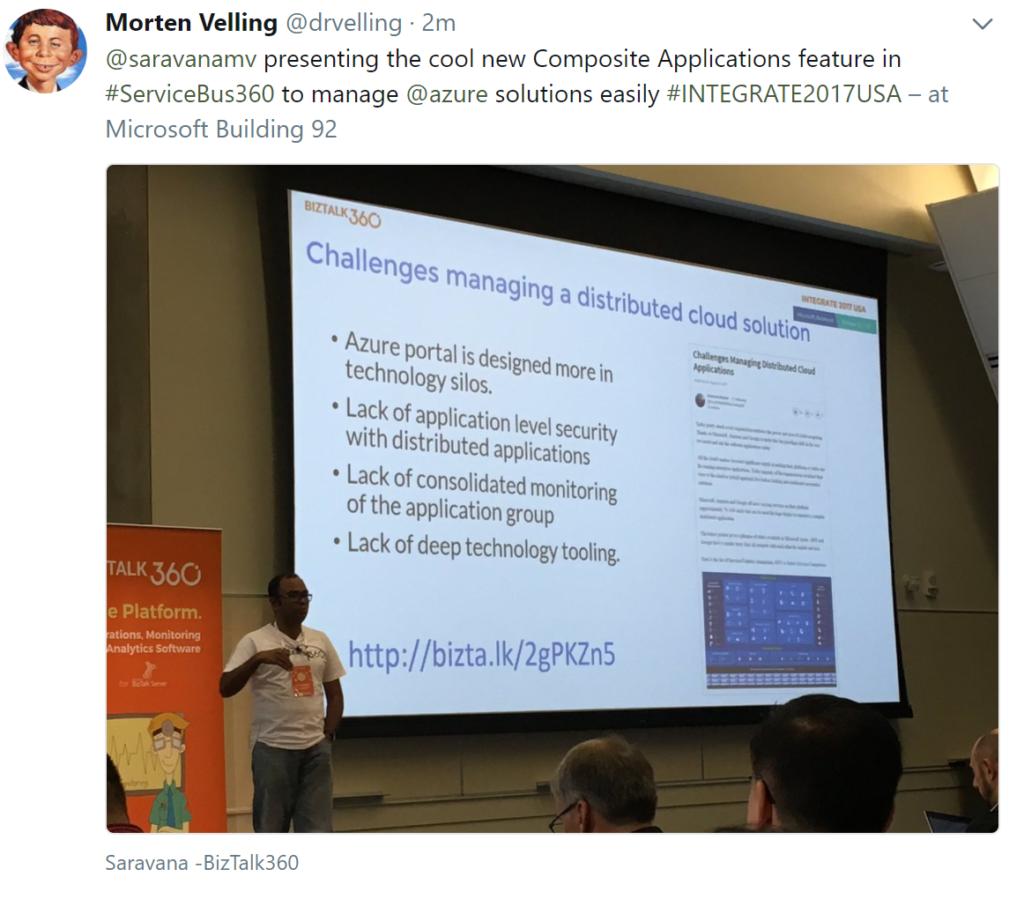

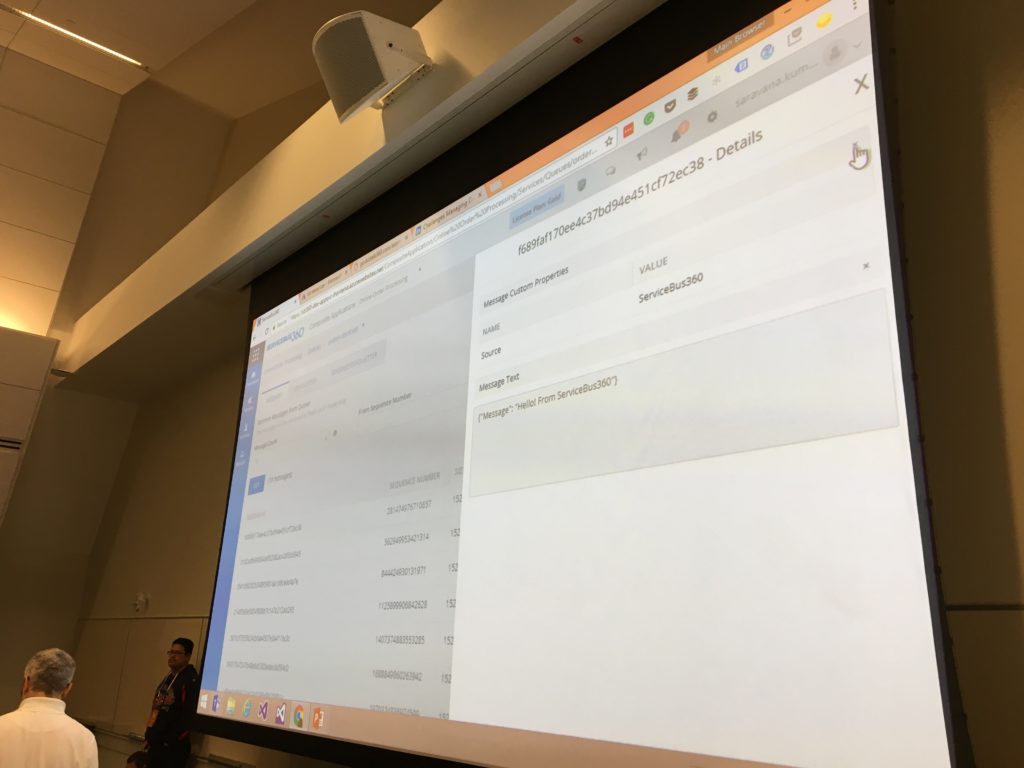

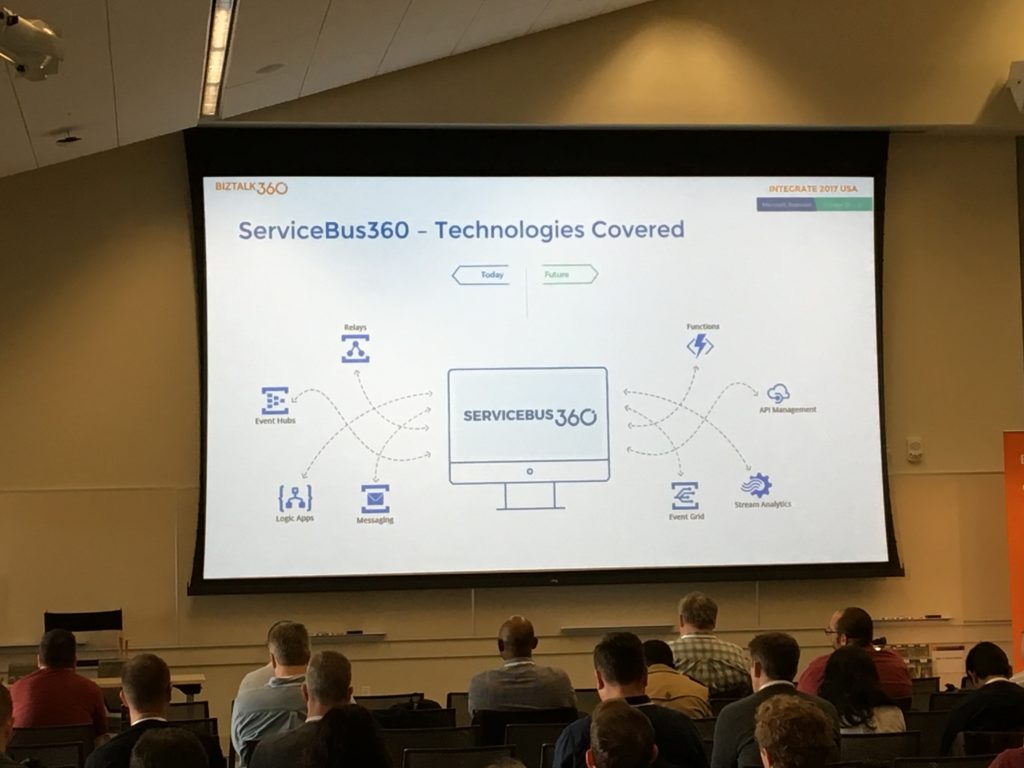

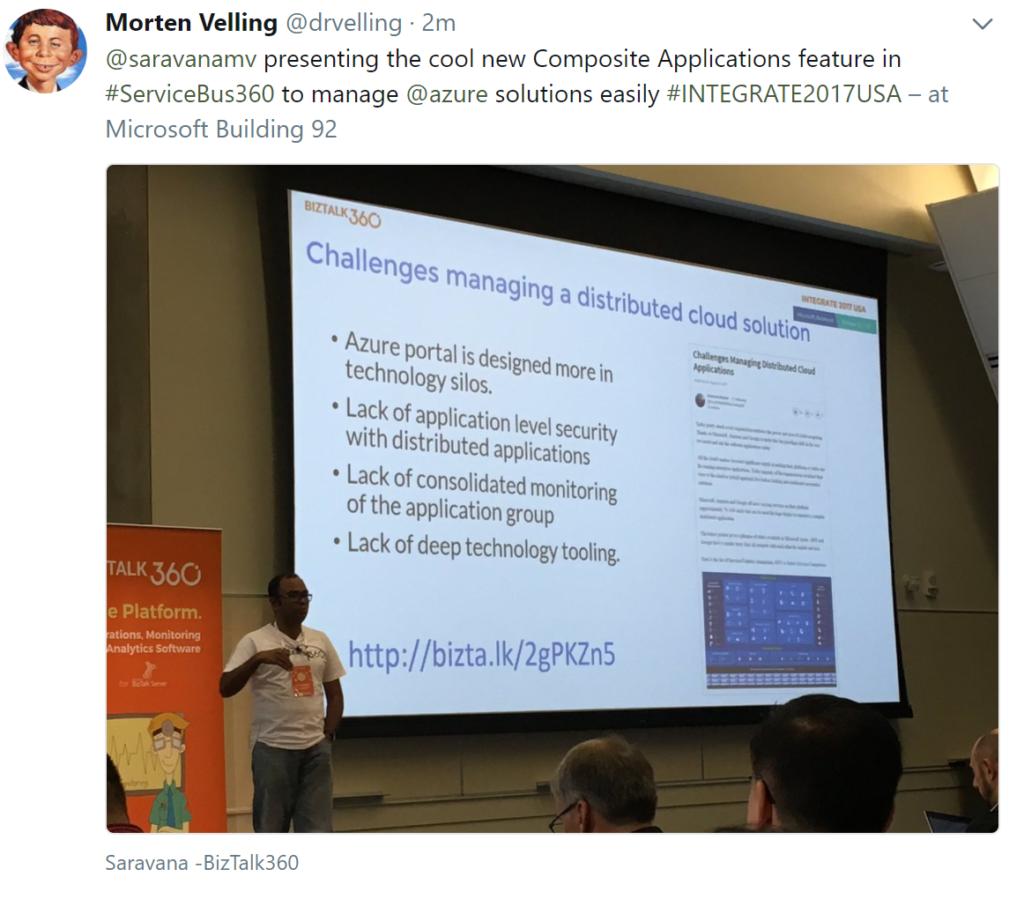

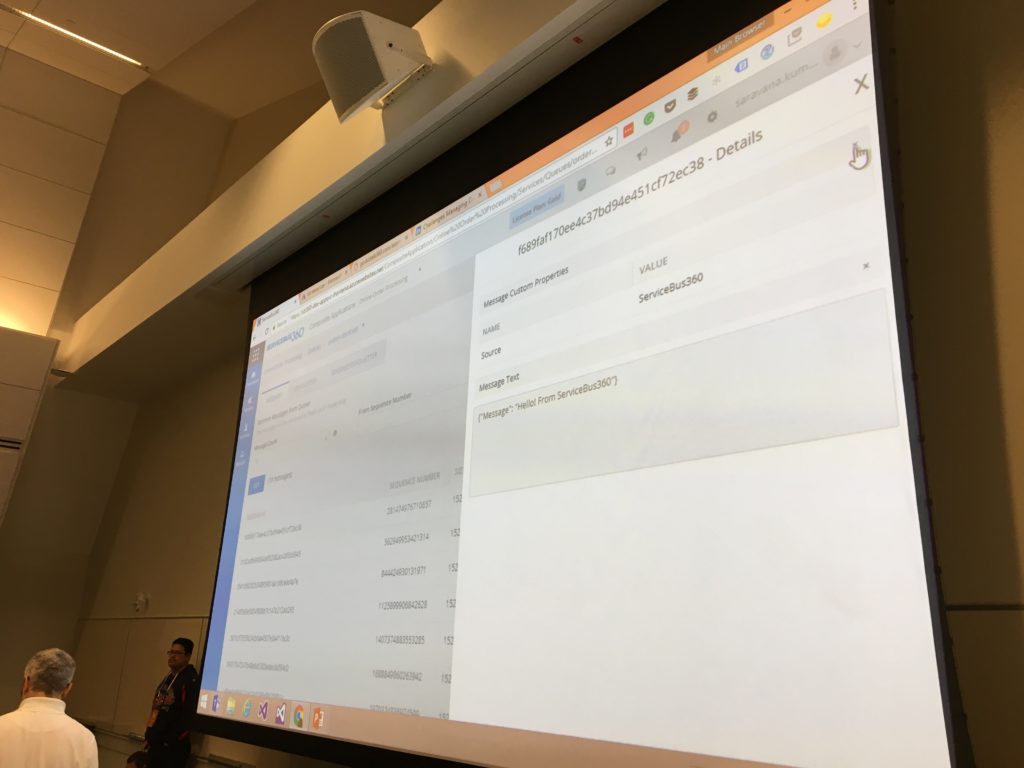

In his session, Saravana talked about the monitoring challenges of a distributed cloud integration solution. He showed the capabilities of ServiceBus360, a monitoring and management service primarily for Service Bus, now expanded with new features. These new features are intended to mitigate the challenges that arise with a composite application.

Saravana demoed ServiceBus360 to the audience to showcase the features and how it can help people with their cloud composite integration solutions.

After the demo, Saravana elaborated on the evolution of ServiceBus360. It is still early days for some of the new capabilities, and he is looking for feedback. Furthermore, he shared the roadmap to show where the service is heading.

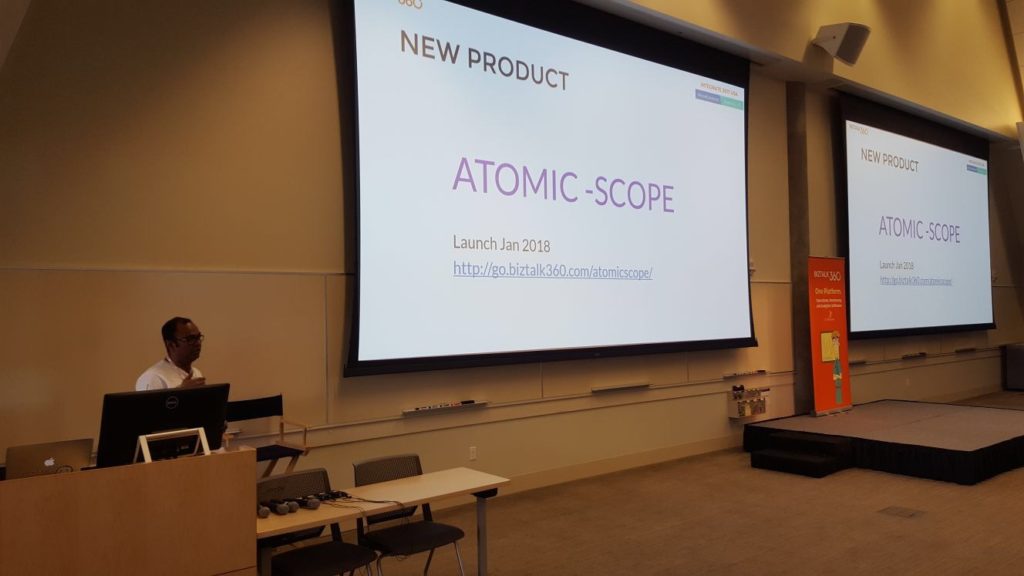

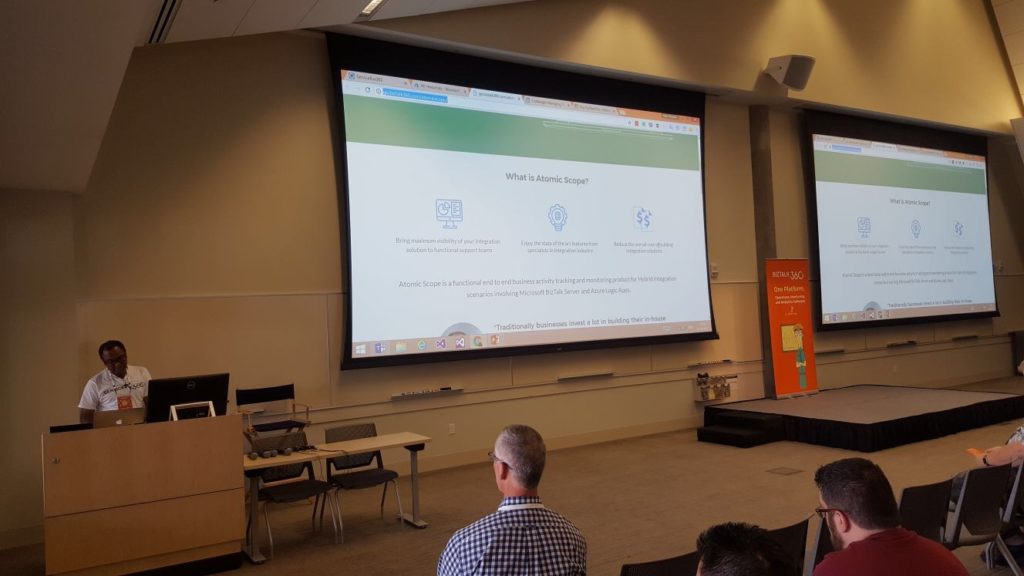

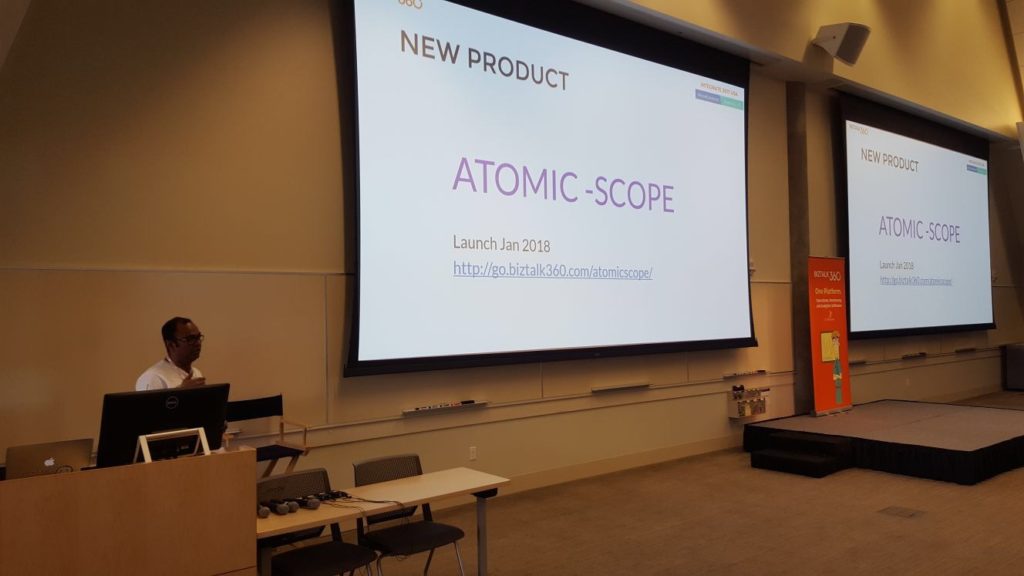

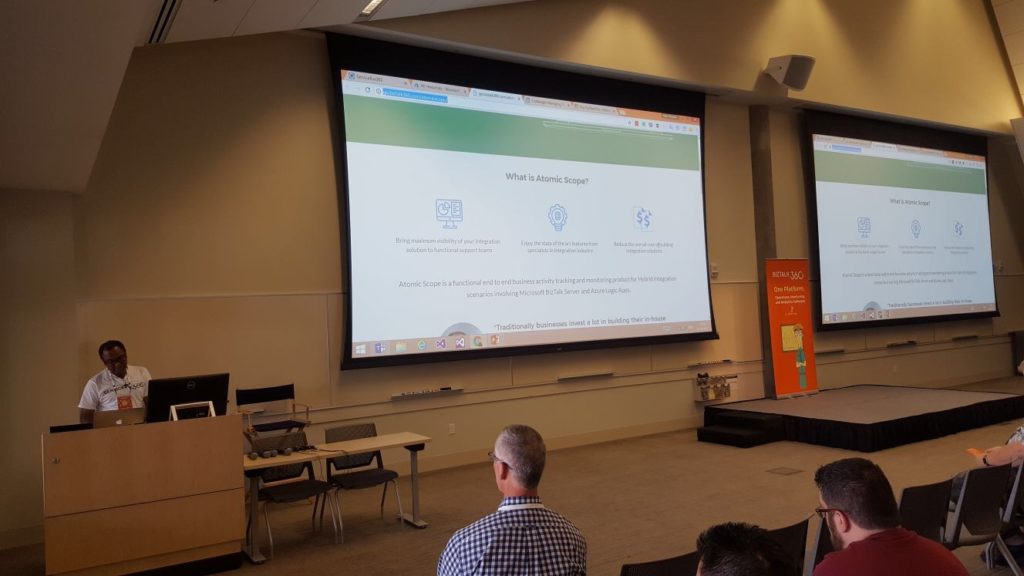

At the end of the presentation, Saravana announced Atomic Scope, an upcoming product. It will be launched in January 2018 and is a functional, end-to-end business activity tracking and monitoring product for hybrid integration scenarios involving Microsoft BizTalk Server and Azure Logic Apps.

Signals, Intelligence, and Intelligent Actions

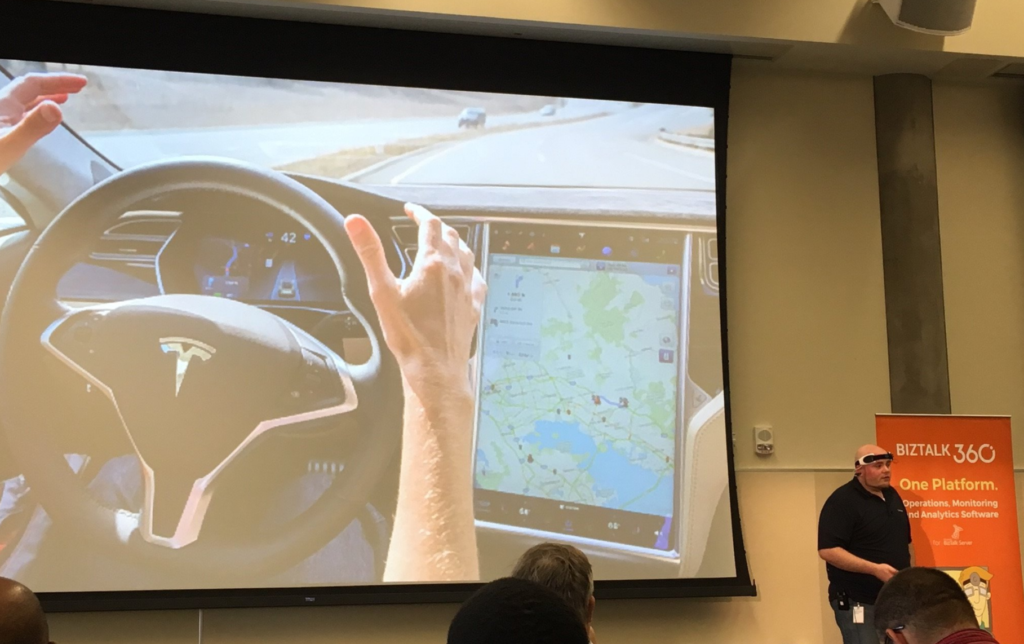

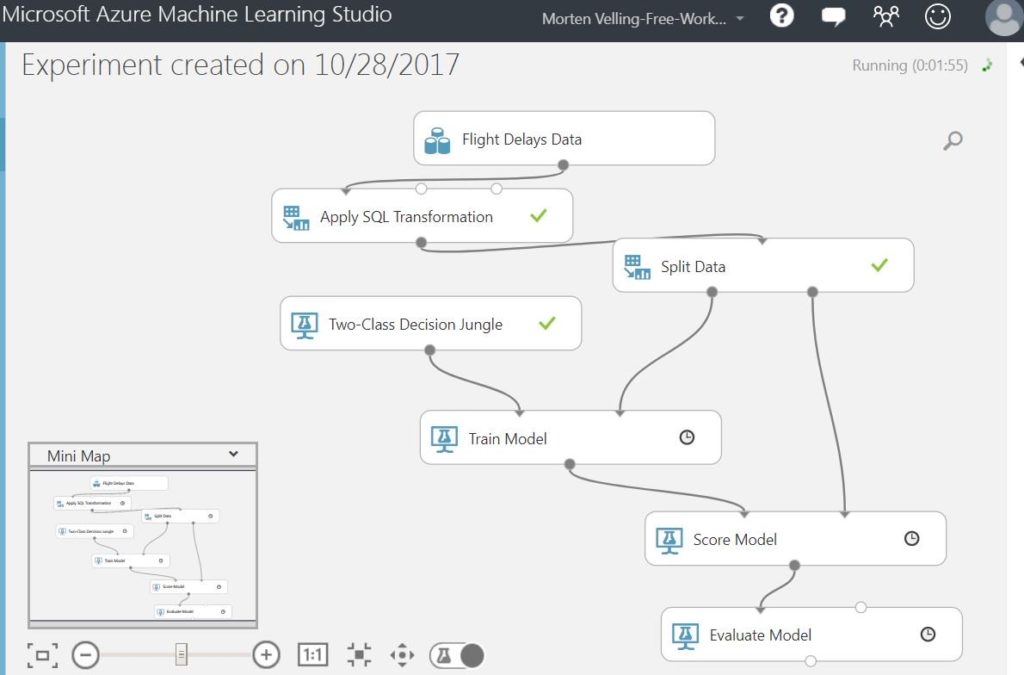

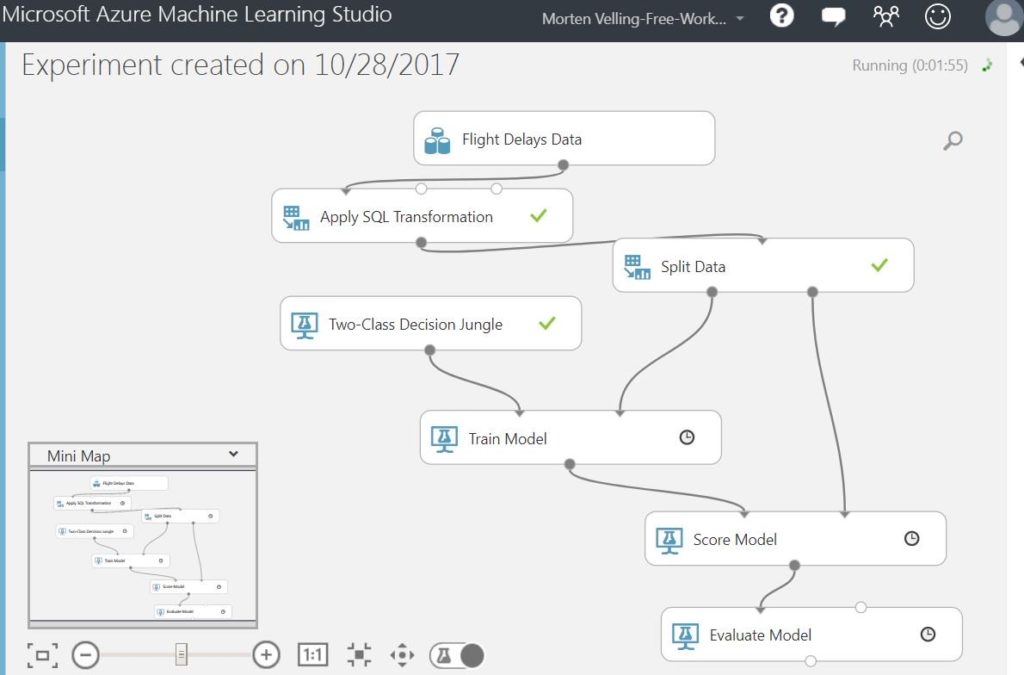

Nick Hauenstein talked about Azure Machine Learning, mind reading and experiments. He promised a fun session!

Nick did a great mind-reading demo, having people ask questions and showing whether his mind was thinking yes or no. For instance: “Will the Astros win the next game against the LA Dodgers in the World Series?”.

After the demo, Nick explained Machine Learning, which is arguably very relevant in our day and age. He followed that up with another demo, teaching the audience how to build and operationalize an Azure ML model and how to invoke it from within either BizTalk Server or Azure Logic Apps. The audience could follow along in Azure ML Studio and build the demo themselves.

To conclude, this was a great session and introduction to Machine Learning. In the past, I followed the edX course on Data Science, which includes hands-on work with ML Studio.
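To give an idea of what invoking such a model involves, here is a minimal Python sketch that builds (but does not send) a scoring request. It assumes the request-response format of classic Azure ML Studio web services as I recall it (an `Inputs` object keyed by input name with `ColumnNames` and `Values`, plus `GlobalParameters`, authenticated with a bearer API key); the URL, key, the input name `input1` and the column names are placeholders, so check the API help page generated for your own web service. From BizTalk or Logic Apps, the same JSON would be posted with an HTTP-capable adapter or action.

```python
import json
import urllib.request
from typing import List, Sequence


def build_scoring_request(url: str, api_key: str, columns: Sequence[str],
                          rows: List[list]) -> urllib.request.Request:
    """Build (but do not send) a request in the assumed classic Azure ML
    Studio request-response format."""
    body = {
        # "input1" is a placeholder for the web service input's name.
        "Inputs": {"input1": {"ColumnNames": list(columns), "Values": rows}},
        "GlobalParameters": {},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": "Bearer " + api_key},
        method="POST",
    )
```

Keeping request construction separate from sending makes the payload easy to inspect or log before it goes out; sending would be a call to `urllib.request.urlopen(req)`.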

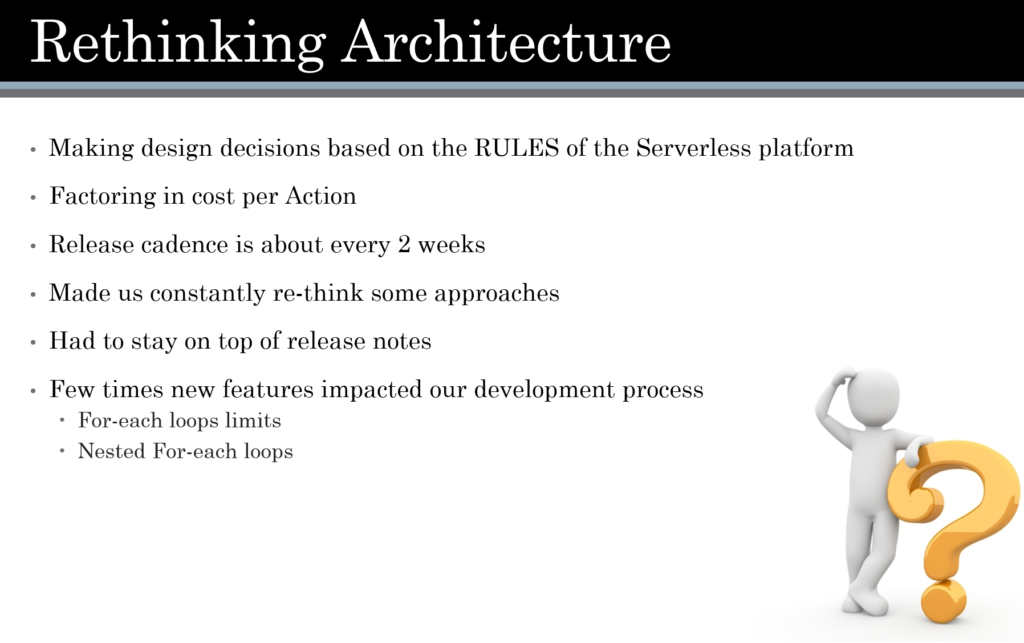

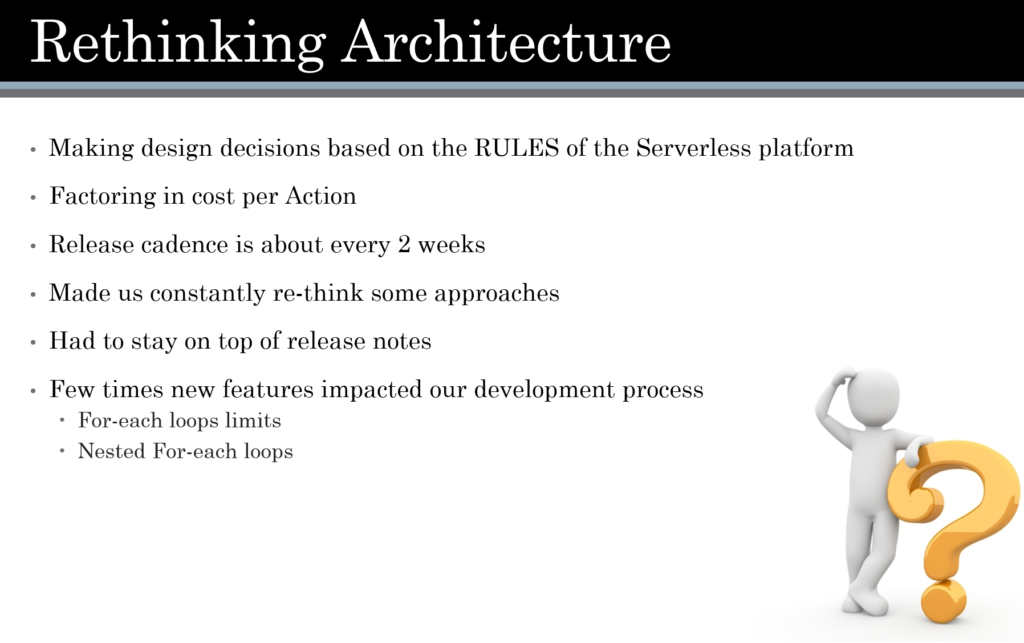

Overcoming Challenges When Taking Your Logic App into Production

Stephen W. Thomas, a long-time Integration MVP, took the stage to talk about getting a Logic App running as a BizTalk guy. During his talk he shared his experience with building Logic Apps.

Moreover, Stephen shared some good tips around Logic Apps:

- Read the available documentation.

- Don’t be afraid of JSON – code view is still needed, especially with new features, although most features soon become available in the designer and Visual Studio. Always save or check in before switching to JSON.

- Make sure to fully configure your actions, otherwise, you cannot save the Logic App.

- Choose action names carefully; they are hard to change afterward.

- Try to use only one MS account.

- If you get odd deployment results, close / re-open your browser.

- Connections – Live at resource group level. The last deployment wins.

- Best practices: define all connection parameters in one Logic App. One connection per destination, per resource group.

- Default retries – all actions retry 4 additional times at 20-second intervals. Control this using retry policies.

- Resource Group artefacts – contain subscription id, use parameters instead.

- For each loop – limited to 100,000 iterations. By default iterations run concurrently; this can be changed to sequential.

- Recurrence – singleton.

- User permissions (IAM) – multiple roles exist like the Logic App Contributor and the Logic App Operator.
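In Logic Apps this behaviour is configured declaratively through an action's retry policy, not written as code. Purely to illustrate the semantics of the default retry tip in the list (one attempt plus 4 retries at a fixed 20-second interval), here is a small Python sketch; the function name and the injectable `sleep` parameter are my own, not part of any Logic Apps API.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_fixed_retry(action: Callable[[], T], retries: int = 4,
                         interval_seconds: float = 20.0,
                         sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `action` once, then retry up to `retries` additional times,
    waiting a fixed interval between attempts. Re-raises the last error."""
    for attempt in range(retries + 1):
        try:
            return action()
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the failure
            sleep(interval_seconds)
    raise AssertionError("unreachable")
```

Injecting `sleep` keeps the example testable without actually waiting 80 seconds for a permanently failing action.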

BizTalk Server Fast & Loud

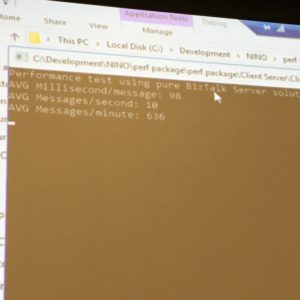

The final session of the day was by Sandro Pereira, who talked about BizTalk performance. After introducing himself, his nicknames and his stickers, he dived into his story: having your BizTalk jobs running, pricing based on the setup of a BizTalk environment, the default installation, and performance.

How to increase performance, how to decrease response times, BizTalk database optimizations, hard drives, networks, memory, CPU, scaling, Sandro went the distance.

Finally, Sandro did a demo showing better BizTalk performance after a lot of tuning.

It was a fast demo and he finished the talk with some final advice: “Do not have more than 20 host instances!”.

Q&A Session

After Sandro’s session came lunch and a Q&A session with the Pro-Integration and Flow Product Group.

It’s a wrap

That was Integrate 2017 USA: two and a half days of integration-focused content, a great set of speakers, and empowered attendees who will go home with a ton of knowledge. Hopefully, BizTalk360 will be able to organize this event again next year and keep the momentum going.

Thanks, Saravana and Team BizTalk360. Job well done!!!

Author: Steef-Jan Wiggers

Steef-Jan Wiggers has over 15 years’ experience as a technical lead developer, application architect and consultant, specializing in custom applications, enterprise application integration (BizTalk), Web services and Windows Azure. Steef-Jan is very active in the BizTalk community as a blogger, Wiki author/editor, forum moderator, writer and public speaker in the Netherlands and Europe. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 5 years. View all posts by Steef-Jan Wiggers

by Eldert Grootenboer | Oct 26, 2017 | BizTalk Community Blogs via Syndication

And so the second day of Integrate 2017 USA is done: another day of great sessions and action-packed demos. We started the day with Mayank Sharma and Divya Swarnkar from Microsoft CSE, formerly Microsoft IT, taking us through their journey to the cloud. Microsoft has an astounding number of integrations running for all its internal processes, communicating with 1000+ partners. With 175+ BizTalk servers running on Azure IaaS, processing 170M+ messages per month, they really need an integration platform they can rely on.

Like most companies, Microsoft is also looking into ways to modernize its application landscape and reduce costs. To accomplish this, they now use Logic Apps at the heart of all their integrations, with BizTalk as the bridge to their LOB systems. By leveraging API Management they can test their systems in production as well as in their UAT environments, ensuring that all systems work as expected. By using the geo-replication options the Azure platform provides, they ensure that their business stays up and running even in case of a disaster.

Adopting a microservices strategy, each Logic App is set up to perform a specific task, and metadata is used to execute or skip specific parts. To me this seems like a great setup, and definitely something to look into when setting up your own integrations.
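To put those numbers in perspective, here is a quick back-of-the-envelope calculation in Python. It assumes a 30-day month and an even spread of traffic over time and across servers, which real workloads certainly do not have, so peak rates will be considerably higher than these averages.

```python
MESSAGES_PER_MONTH = 170_000_000  # "170M+" from the talk, taken as a lower bound
SERVERS = 175                     # "175+" BizTalk servers, also a lower bound
DAYS_PER_MONTH = 30               # assumption: a 30-day month
SECONDS_PER_DAY = 24 * 60 * 60

per_day = MESSAGES_PER_MONTH / DAYS_PER_MONTH
per_second = per_day / SECONDS_PER_DAY
per_server_per_day = per_day / SERVERS  # assumes an even spread across servers

print(f"~{per_day:,.0f} messages/day")
print(f"~{per_second:.1f} messages/second on average")
print(f"~{per_server_per_day:,.0f} messages/day per server")
```

This works out to roughly 5.7 million messages a day, or about 66 messages per second around the clock, before accounting for business-hour peaks.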
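The session did not show the implementation, but the idea of small single-purpose steps switched on or off by metadata can be sketched in a few lines of Python. The step names and metadata flags are hypothetical; in the setup described, each step would be its own Logic App and the check would be a condition on the metadata.

```python
from typing import Callable, Dict, List, Tuple

Step = Callable[[dict], dict]


def validate(msg: dict) -> dict:
    if "order_id" not in msg:
        raise ValueError("missing order_id")
    return msg


def enrich(msg: dict) -> dict:
    return {**msg, "enriched": True}


def transform(msg: dict) -> dict:
    return {**msg, "format": "partner-xml"}


# Each entry is one single-purpose task, in execution order.
PIPELINE: List[Tuple[str, Step]] = [
    ("validate", validate),
    ("enrich", enrich),
    ("transform", transform),
]


def run_pipeline(msg: dict, metadata: Dict[str, bool]) -> dict:
    """Run every step unless the metadata explicitly switches it off."""
    for name, step in PIPELINE:
        if metadata.get(name, True):
            msg = step(msg)
    return msg
```

Defaulting every step to "on" means a partner only needs metadata for the parts it wants to skip, for example `{"enrich": False}`.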

Manage API lifecycle sunrise to sunset with Azure API Management

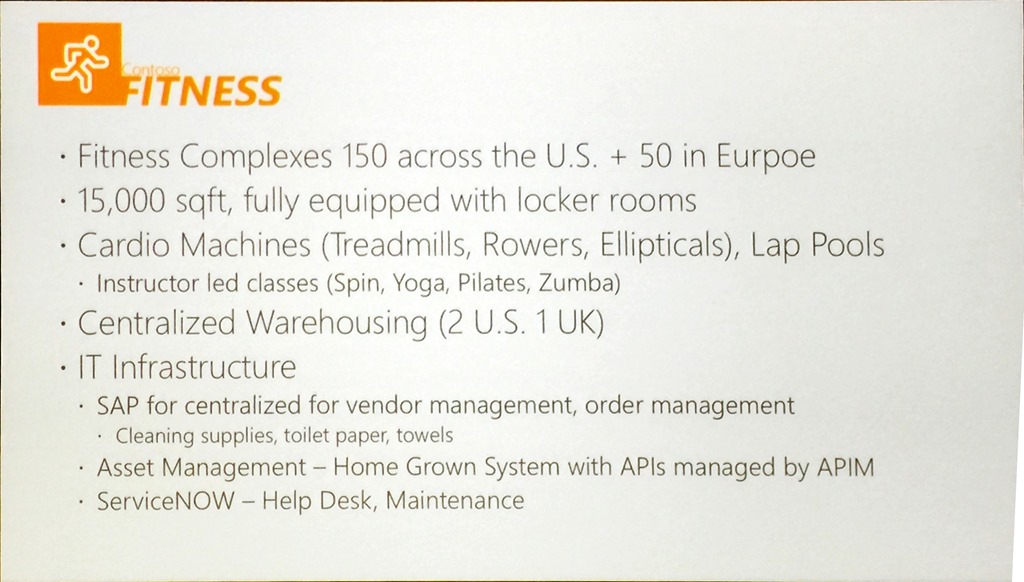

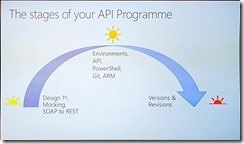

In the second session of the day, Matthew Farmer and Anton Babadjanov showed us how we can use API Management to set up an API using a design-first approach. Continuing with the Contoso Fitness scenario, they set up a situation where you need to onboard a partner to an API that has not been built yet. By using API Management we can set up a façade for the API, adding its methods and mock responses, allowing consumers to start working with the API quickly.

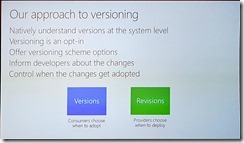

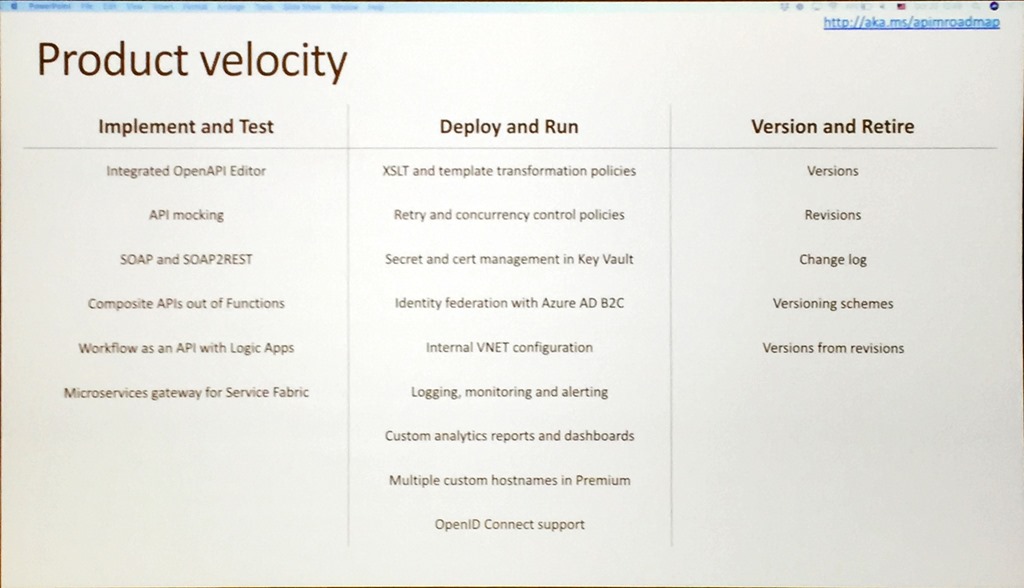

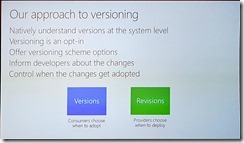

Another important subject is how you handle new versions of your API. Thanks to API Management you can now have versions and revisions of your API. Versions allow different implementations of your API to live next to each other, publicly available to your consumers, while revisions allow you to have a private new version of your API in which you can develop and test changes. Once you are happy with the changes made in a revision, you can publish it with the click of a button, making the new revision the public API. This is very powerful, as it allows us to safely test our changes and easily roll back in case of any issues.

Thanks to API Management we have the complete lifecycle of our APIs covered, from the initial design, through the ALM story, all the way up to updating and deprovisioning.
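To make the difference concrete, here is a toy Python model of one API version and its revisions: callers get the current revision by default, a tester can request a specific revision, and making an older revision current again is the rollback. This is only a conceptual sketch with made-up names, not the API Management API; in API Management itself this is done from the portal. Versions would simply be several such objects living side by side.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ApiVersion:
    """Toy model: one API version with numbered revisions."""
    current: int = 1
    revisions: Dict[int, str] = field(default_factory=lambda: {1: "initial design"})

    def add_revision(self, description: str) -> int:
        # New revisions start out private; consumers keep using `current`.
        rev = max(self.revisions) + 1
        self.revisions[rev] = description
        return rev

    def make_current(self, rev: int) -> None:
        # Publishing (or rolling back) just moves the "current" pointer.
        if rev not in self.revisions:
            raise KeyError(f"unknown revision {rev}")
        self.current = rev

    def resolve(self, requested: Optional[int] = None) -> str:
        """Return the revision a call would hit."""
        return self.revisions[self.current if requested is None else requested]


# Versions live next to each other, each with its own revisions.
versions = {"v1": ApiVersion(), "v2": ApiVersion()}
```

Because publishing only moves a pointer, rolling back is as cheap as publishing, which is what makes revisions a safe place to test changes.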

Azure Logic Apps – Advanced integration patterns

Next up were Jeff Hollan and Derek Li, taking us behind the scenes of Logic Apps. Because of the massive scale Logic Apps needs to run at, there were many new challenges to solve. Logic Apps handles this by reading in the workflow definition and breaking it down into a composition of tasks and dependencies. These tasks are then distributed across various workers, each executing its own piece of the work. This allows for a high degree of parallelism, which is why Logic Apps can scale out indefinitely. It's important to take this knowledge into our own scenarios and think about how it might impact us. This includes keeping in mind that tasks might not be processed in order, and might arrive at high scale, so our receiving systems need to take this into account. Also, as Logic Apps provides at-least-once delivery, we should look into idempotency for our systems.
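A receiving system can protect itself against both effects. The Python sketch below shows one common approach, not something presented in the session: it remembers processed message IDs to drop redeliveries, and uses a sender-supplied sequence number to ignore stale updates that arrive late. The message ID, key and sequence fields are assumptions about what your messages carry.

```python
from typing import Dict, Set, Tuple


class IdempotentReceiver:
    """Apply each message at most once and keep only the newest state.

    Processed IDs are kept in memory here; a real receiver would need
    durable storage, e.g. a table with a unique key on the message ID."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()
        self.state: Dict[str, Tuple[int, float]] = {}  # key -> (sequence, value)

    def handle(self, message_id: str, key: str, sequence: int, value: float) -> bool:
        """Return False for a duplicate delivery, True otherwise."""
        if message_id in self._seen:
            return False  # at-least-once delivery: same message seen again
        self._seen.add(message_id)
        # Messages may arrive out of order: ignore anything older than what we have.
        known = self.state.get(key)
        if known is None or sequence > known[0]:
            self.state[key] = (sequence, value)
        return True
```

Processing the same message twice, or an older message after a newer one, leaves the stored state unchanged, which is exactly the property at-least-once, unordered delivery calls for.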

Derek Li showed us different kinds of patterns which can be used with Logic Apps, including parallel processing, exception handling, looping, timeouts, and the ability to control the concurrency, which will be coming to the portal in the coming week. Using these patterns, Derek created a Logic App which sent out an approval email, and by adjusting the timeout and setting up exception handling on this, escalating to the approver’s manager in case the approval was not processed within the timeout. These kinds of scenarios show us how powerful Logic Apps has become, truly allowing for a customized flow.
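In Logic Apps this escalation is built declaratively, with a timeout on the approval action and a follow-up action configured to run after that action has timed out. Purely to show the control flow, here is a Python sketch using `asyncio.wait_for`; the approver and manager callables and the result strings are hypothetical.

```python
import asyncio
from typing import Awaitable, Callable

# An approver receives the request text and eventually answers True/False.
Approver = Callable[[str], Awaitable[bool]]


async def approve_with_escalation(request: str, approver: Approver,
                                  manager: Approver, timeout: float) -> str:
    """Ask the approver; if there is no answer within `timeout` seconds,
    escalate the same request to the manager."""
    try:
        approved = await asyncio.wait_for(approver(request), timeout)
        decided_by = "approver"
    except asyncio.TimeoutError:
        # The original approval is cancelled; the manager now decides.
        approved = await manager(request)
        decided_by = "manager"
    return ("approved" if approved else "rejected") + " by " + decided_by
```

Catching `asyncio.TimeoutError` works across Python versions, since from 3.11 onwards it is an alias of the built-in `TimeoutError`.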

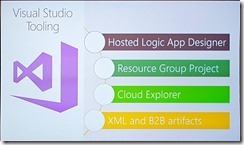

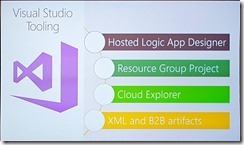

Bringing Logic Apps into DevOps with Visual Studio and monitoring

After some mingling with like-minded people during the break at Integrate 2017 USA, it’s now time for another session by Kevin Lam and Jeff Hollan, who are always a pleasure to see. In this session we dive into the story around DevOps and ALM for Logic Apps. These days Logic Apps is a first-class citizen within Visual Studio, allowing us to create and modify Logic Apps, and to pull in and control existing Logic Apps from Azure.

As the Logic Apps designer creates ARM templates, we can also add these to source control like VSTS. By using the CI/CD possibilities of VSTS, we can then automatically deploy our Logic Apps, allowing for a completely automated deployment process.

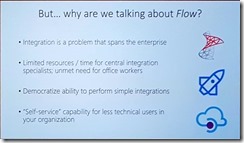

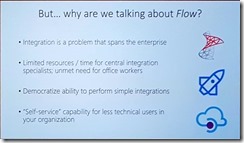

Integrating the last mile with Microsoft Flow

For pro-integrators, Microsoft Flow might not be the first tool that comes to mind, but it is actually a very interesting service. It allows us to create lightweight integrations, giving room to the idea of democratization of integration. There is a plethora of templates available for Flow, allowing users to easily automate tasks, accessing both online and on-premises data. Custom connectors give us the option to expose any system we want. And with templates categorized in verticals, users will be able to quickly find templates that are useful for them.

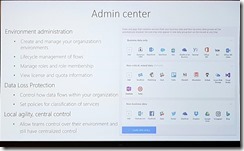

Flow also has the option to use buttons, which can be either physical buttons (from Flic or bttn) or software buttons in the Flow app. This allows on-demand flows to be executed with the click of a button, and to be shared within your company. For those who want more control over the flows that can be built and the data that can be accessed, there is the Admin center, which is available with Flow Plan 2.

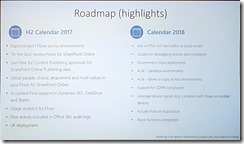

Looking at the updates that have happened over the last few months, it’s clear the team has been working hard, making Flow ready for your enterprise.

And even more great things are about to come, so make sure to keep an eye on this.

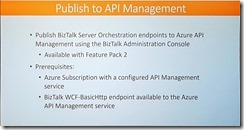

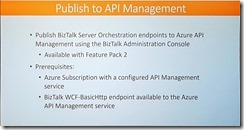

Deep dive into BizTalk technologies and tools

Yesterday (on Day 1 of Integrate 2017 USA), we heard the announcement of Feature Pack 2 for BizTalk. For me, one of the coolest features that will be coming is the ability to publish BizTalk endpoints through Azure API Management. This will allow us to easily expose the endpoint, either via SOAP pass-through or even with SOAP to REST, and take advantage of all the possibilities API Management brings us, like monitoring, throttling, authentication, etc. And all this with just a right-click on the port in BizTalk. Pretty amazing.

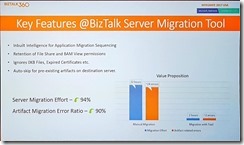

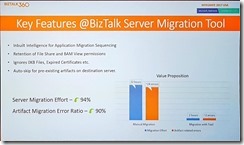

As we had seen in the first session of today, Microsoft has a huge integration landscape. With that many applications and artifacts, migration to a new BizTalk version can become quite the challenge. To overcome this, Microsoft IT created the BizTalk Server Migration Tool, and published the tool for us as well. The tool takes care of migrating your applications to a new BizTalk environment, taking care of dependencies, services, certificates and everything else.

Looking at the numbers, we can see how much effort this saves, while minimizing the risk of errors. The tool supports migration to BizTalk 2016 from any version from BizTalk 2010 onwards, and is certainly a great asset for anyone looking into migration. So if you are running an older version of BizTalk, remember to migrate in time, to avoid running out of the support timelines we saw yesterday.

What’s there & what’s coming in BizTalk360

Next up we had Saravana Kumar, CEO of BizTalk360 and founding father of Integrate, guiding us through his top 10 features of BizTalk360. Having worked with the product since its first release, I can only say it has gone through an amazing journey, and has become the de facto monitoring solution for BizTalk and its surrounding systems. It helps solve the challenges anyone who has administered BizTalk will recognize, giving insight into your environment, adding monitoring and notifications, and providing fine-grained security control.

So Saravana’s top 10 of BizTalk360 is as follows, and I pretty much agree with all of them.

1. Rich operational dashboards, showing you the health of your environment in one single place

2. Fine-grained security and auditing, so you can give your users access to only those things they need, without needing to open up your complete system

3. Graphical message flow, providing an end to end view of your message flows

4. Azure + BizTalk Server (single management tool), because Azure is becoming very important in most integrations these days

5. Monitoring – complete coverage, allowing us to monitor and, even more importantly, be notified of any issue in your environment

6. Data (no events) monitoring, giving us monitoring on what’s not happening as well, for example expected messages not coming in

7. Auto healing – from failures, to make sure your environment keeps running, automatically coming back up after issues, either from mechanical or human causes

8. Scheduled reporting, which will be coming in the next version, creating reports about your environment on a regular basis

9. Analytics & messaging patterns, giving even more insight into what is happening using graphical charts and widgets

10. Throttling analyser, because anyone who has ever needed to solve a throttling problem knows how difficult it can be to keep track of the various performance counters; this feature provides a nice graphical overview and historical insights

11. Team knowledge base, a bonus feature that really deserves a mention: the knowledge base is used to link articles to specific errors and faults, making sure this knowledge is readily available in your company

Of course, this is not all; BizTalk360 has a lot more great features, and I recommend anyone go and check it out.

Give your Bots connectivity, with Azure Logic Apps

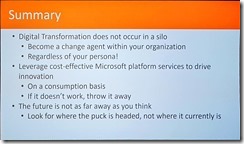

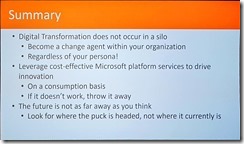

Kent Weare, former MVP and now Principal Program Manager within Microsoft on the Flow team, takes us on a journey into bots and how to give them connectivity with Logic Apps and Flow. First setting the stage: we have all heard about digital transformation, but what is it all about? Digital transformation has become a bit of a buzzword, but the idea behind it is actually quite intriguing: using digital means to provide more value and new business models. The following quote shows this quite nicely.

“Digital transformation is the methodology in which organizations transform, create new business models and culture with digital technologies” – Ray Wang, Constellation Research

An important part here is that the culture in the organization will need to change as well, so go out and become a change agent within your organization.

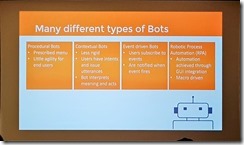

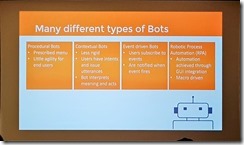

Next we go on to bots, which can be used to reduce barriers and empower users through conversational apps. With the rise of various messenger applications like WhatsApp and Facebook Messenger, there is a huge market to be reached here. There are many different kinds of bots, but they all share a common way of working, often incorporating cognitive services like Language Understanding Intelligent Service (LUIS) to make the bot more human-friendly.

When we want to build our own bots, we have different possibilities here as well, depending on your background and skills. Kent had a great slide on this, making it clear you don’t have to be a pro integrator anymore to make compelling bots.

In his demos, Kent showed different ways to build a bot. The first used the Bot Framework with Logic Apps and Cognitive Services to make a complex, completely tailored bot. For the other two demos, he used Microsoft Flow in combination with Bizzy, a very cool connector which allows us to create a “question-answer bot”, analyzing the input from the user and making decisions on it. Finally, the ability to migrate Flow implementations to Logic Apps was demonstrated, allowing users to start a simple integration in Flow and seamlessly migrate it to Logic Apps when more complexity is needed over the lifecycle of the integration.

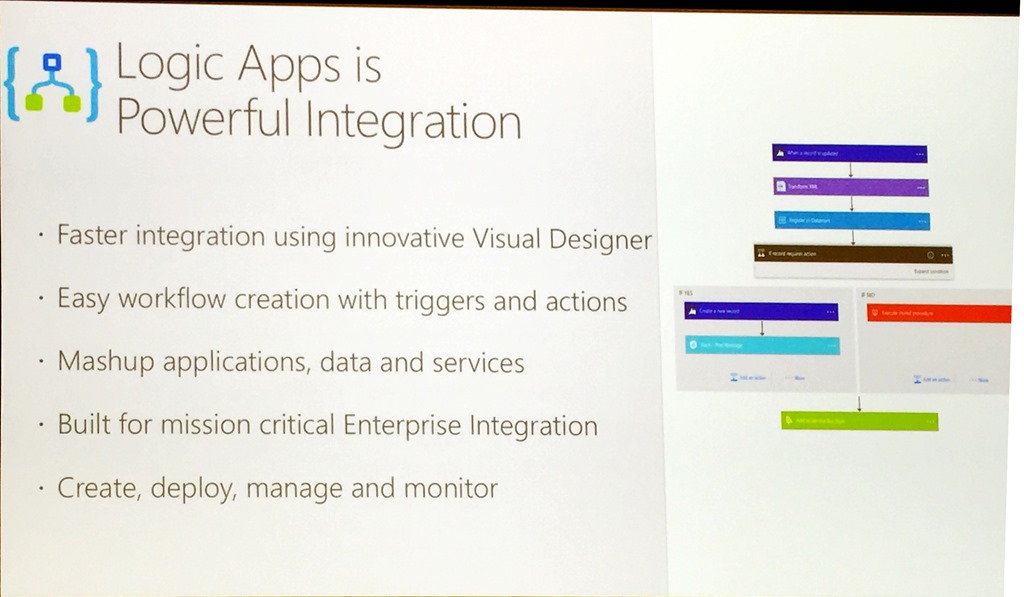

Empowering the business using Logic Apps

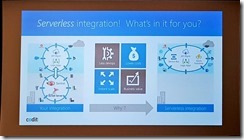

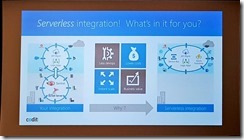

And closing this second day of Integrate 2017 USA, we had Steef-Jan Wiggers, with a view from the business side on Logic Apps. A very interesting session, as instead of just going deep down into the underlying technologies, he actually went and looked for the business value we can add using these technologies, which in the end is what it is all about. Serverless integration is a great way to provide value for your business, lowering costs and allowing for easy and massive scaling.

Steef-Jan went out to several companies who are actually using and implementing Azure, including Phidiax, MyTE, Mexia and ServiceBus360. The general consensus among them is that Logic Apps and the other Azure services are indeed adding value to their business, as they give them the ability to set up powerful new scenarios quickly and easily.

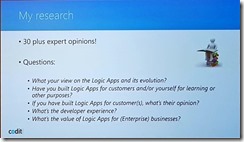

With several great demos and customer cases, Steef-Jan made it very clear how he has already helped many customers add value to their business with these integrations. Integration platform as a service is here to stay, and according to Gartner, iPaaS will actually be the preferred option for new projects by 2019. And once again he went out, this time to the community leaders and experts, to get their take on Logic Apps. Their conclusion: these days Logic Apps is a mature and powerful iPaaS integration platform.

So that was the end of the second day at Integrate 2017 USA, another day full of great sessions, inspiring demos, and amazing presenters. With one more day to go, Integrate 2017 USA is again one of the best events out there.

Check out the recap of Day 1 and Day 3 at Integrate 2017 USA.

Author: Eldert Grootenboer

Eldert is a Microsoft Integration Architect and Azure MVP from the Netherlands, currently working at Motion10, mainly focused on IoT and BizTalk Server and Azure integration. He comes from a .NET background, and has been in IT since 2006. He has been working with BizTalk since 2010 and since then has expanded into Azure and surrounding technologies as well. Eldert loves working in integration projects, as each project brings new challenges and there is always something new to learn. In his spare time Eldert likes to be active in the integration community and get his hands dirty with new technologies. He can be found on Twitter at @egrootenboer and has a blog at http://blog.eldert.net/. View all posts by Eldert Grootenboer

by Martin Abbott | Oct 26, 2017 | BizTalk Community Blogs via Syndication

And we’re off, the USA leg of the Integrate conference started today in Building 92 on the Microsoft campus in Redmond.

Saravana kicked off proceedings by setting the scene and giving us an indication of who we’re going to see over the next two and a half days.

It’s a good line up with speakers from the Microsoft integration teams and some great community speakers.

There was a shout out for Integration Monday and Middleware Friday, two awesome community efforts supported by Saravana and BizTalk360.

Saravana was followed by Duncan Barker from BizTalk360 who explained that BizTalk360 has now grown to 50 people and spoke about ServiceBus360 and how that has grown and continues to be developed.

Duncan also teased 2 new products that are coming in 2018, so that’s definitely something to look out for. He mentioned that work is already underway for Integrate 2018, so watch your mailboxes for more information as the plans begin to take shape.

With the introductions and scene setting done, it was time for the leader of the awesome integration team to take the stage.

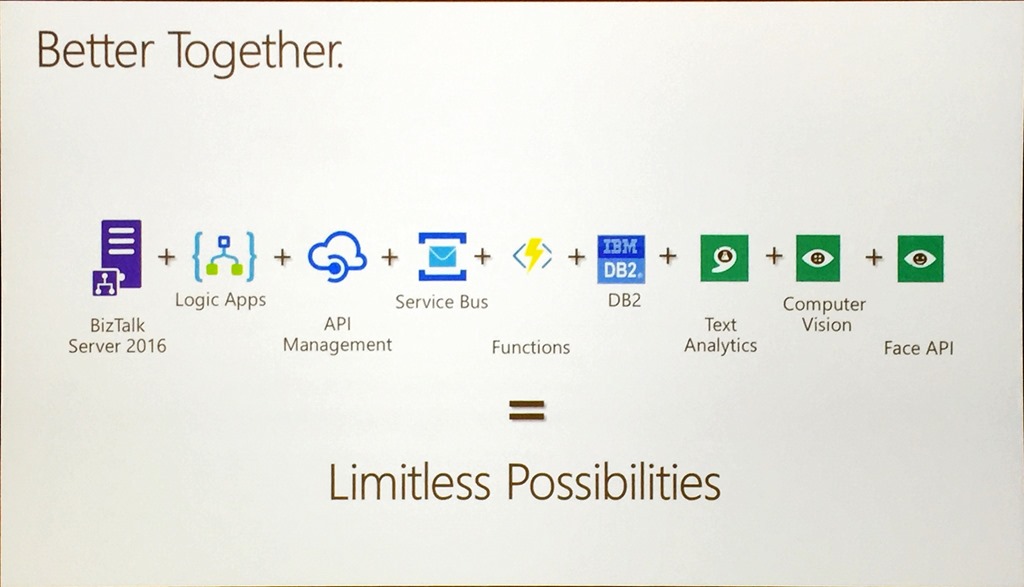

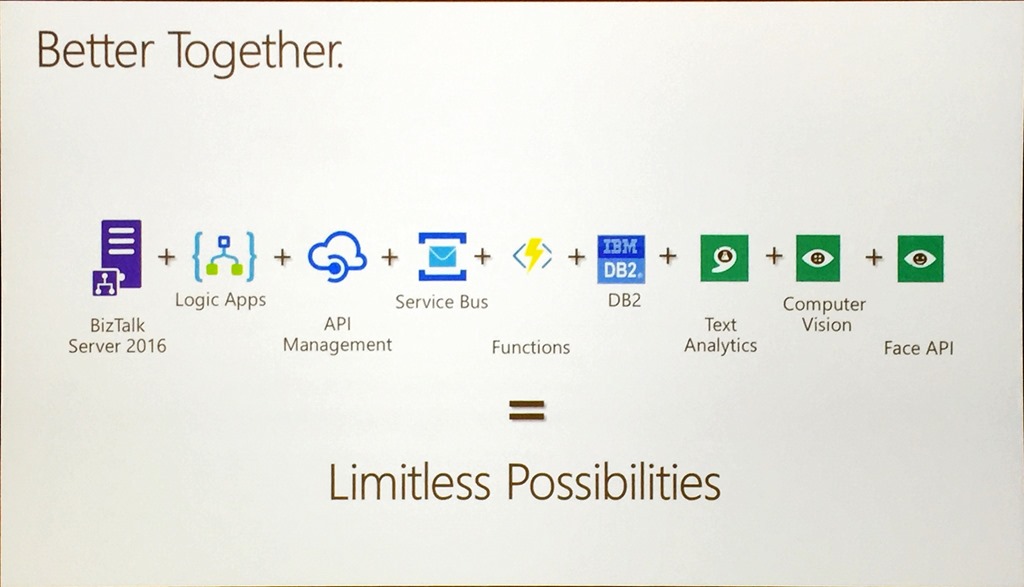

Jim Harrer – Limitless Possibilities with Azure Integration Services

Jim’s message was very much one of integration being the connective tissue that all solutions need to tie things together, reinforcing that there is ongoing investment in BizTalk Server and the story that Logic Apps and BizTalk Server are Better Together.

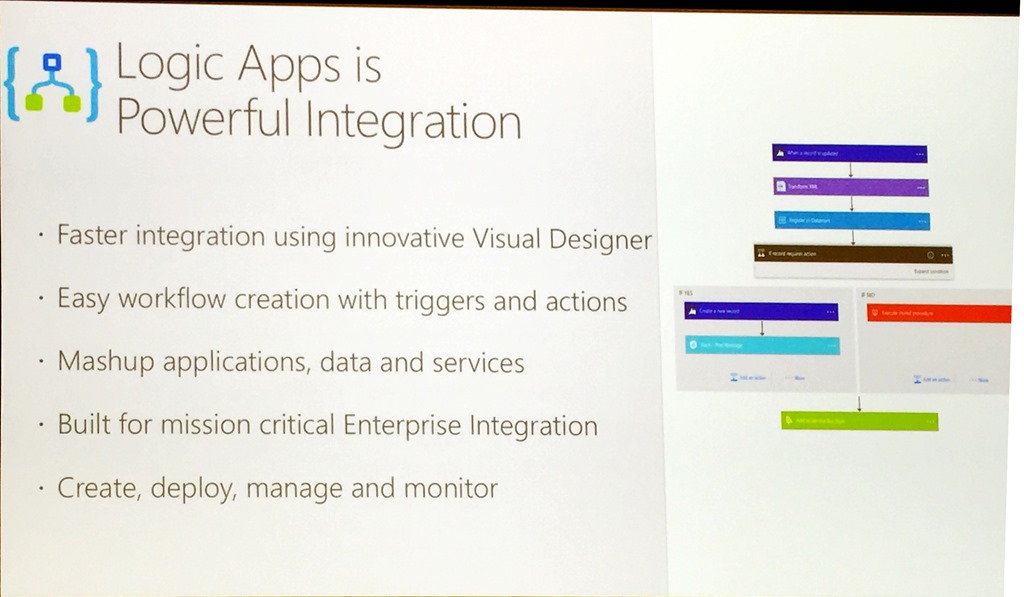

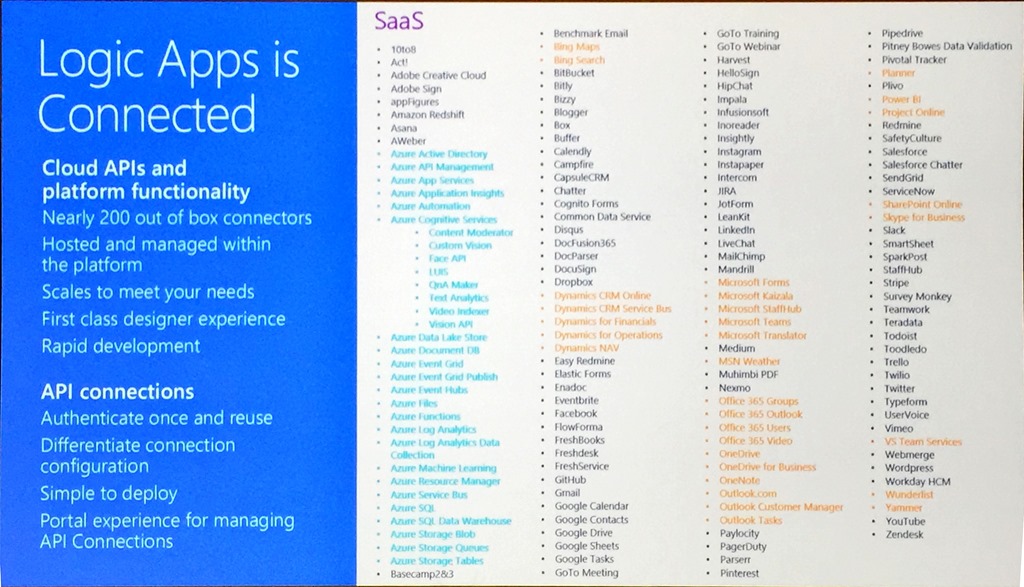

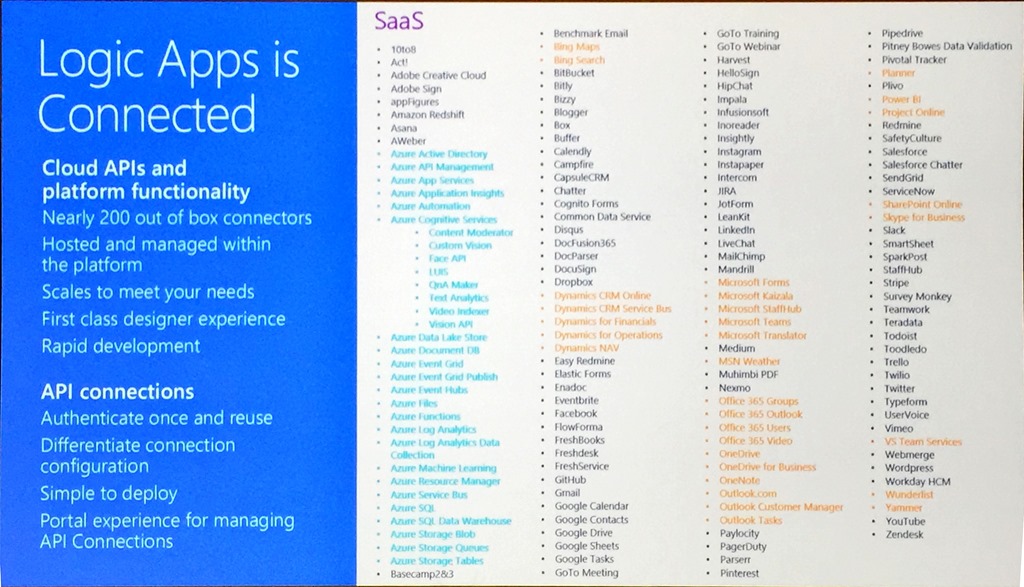

With over 180 connectors now in Logic Apps, including many that integrate directly with Azure Services, it is possible to build integration solutions that span on-premises and cloud more effectively, and to really accelerate adoption through hybrid integration. Taking an API-first approach is a great way to unlock business value.

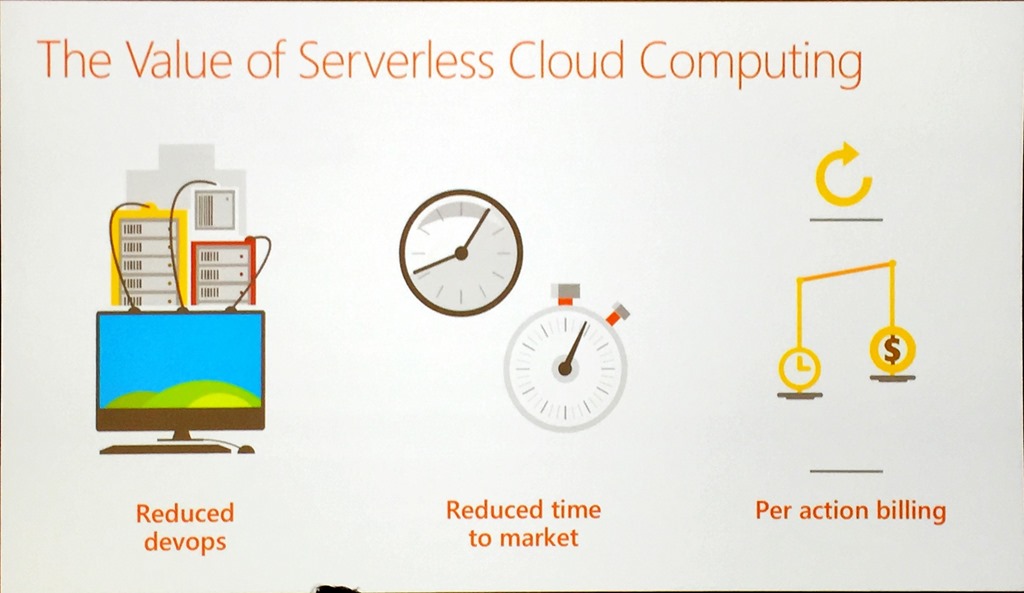

Jim then moved on to serverless, a platform that is just there ready for you to use when you need it.

With serverless, you get improved build and delivery, reduced time to market and per-action billing, and it really flips traditional development on its head.

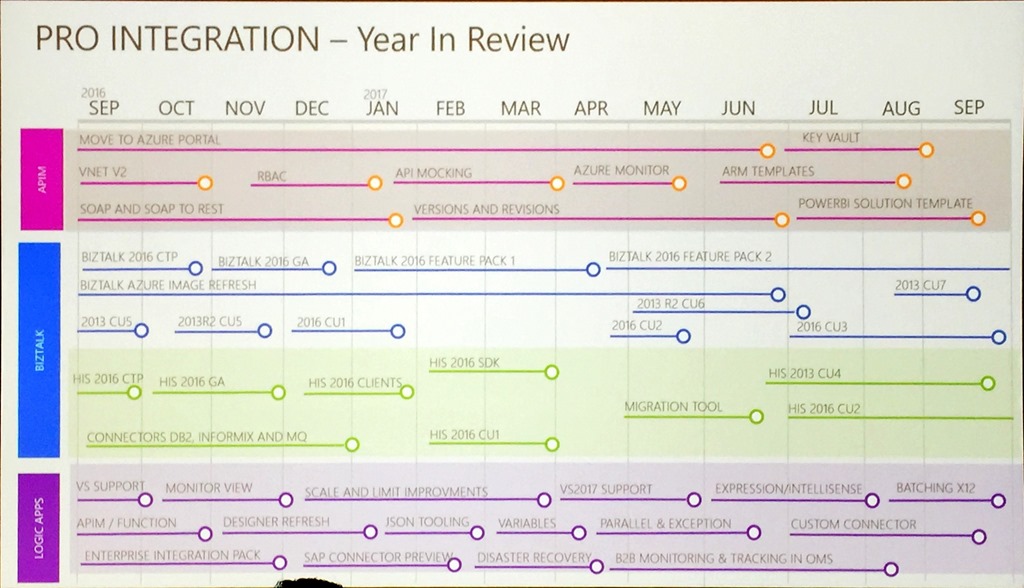

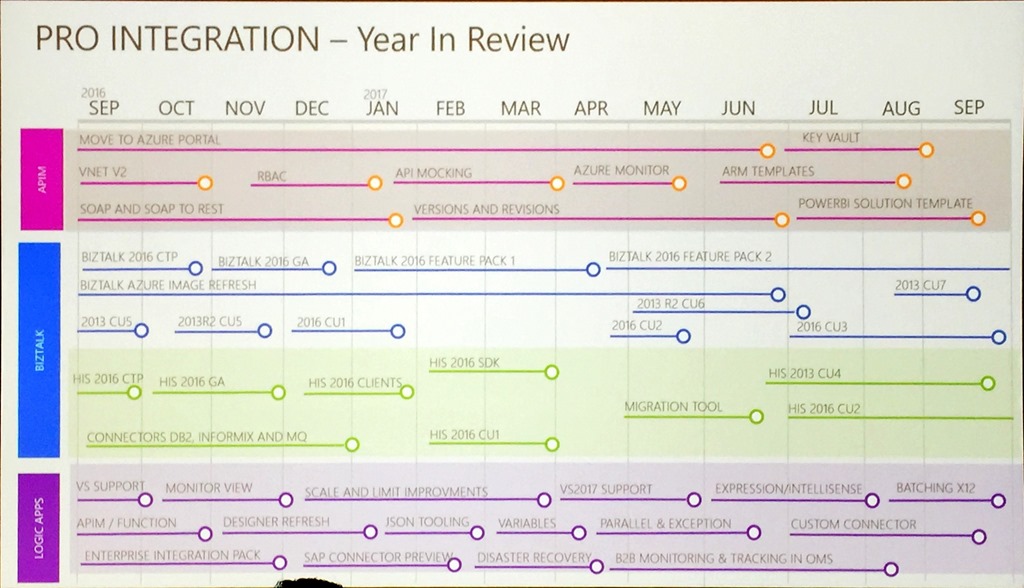

The Pro Integration team has had a busy year, and this was shown in a single slide.

This shows just how quickly things are changing and evolving and has included things like Logic Apps going GA, feature packs being introduced for BizTalk and API Mocking which has allowed teams to be more agile and progress at greater speed, making it possible to deliver integration solutions in weeks rather than months.

This agility has led to integration getting a seat at the table instead of being an afterthought.

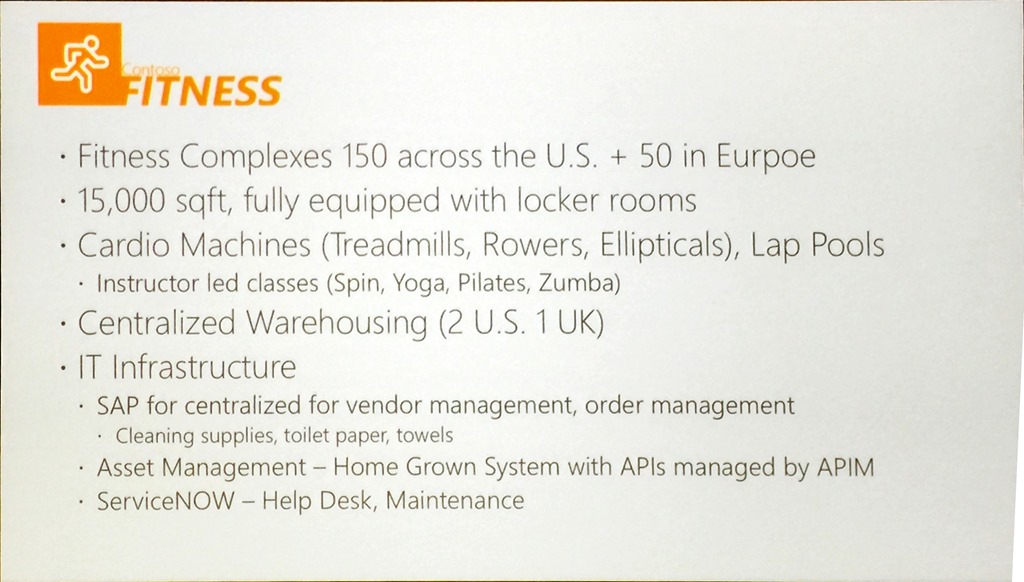

We then had some great demos from Jon Fancey, Kevin Lam and Jeff Hollan who introduced the demo scenario that would be used throughout the conference, Contoso Fitness.

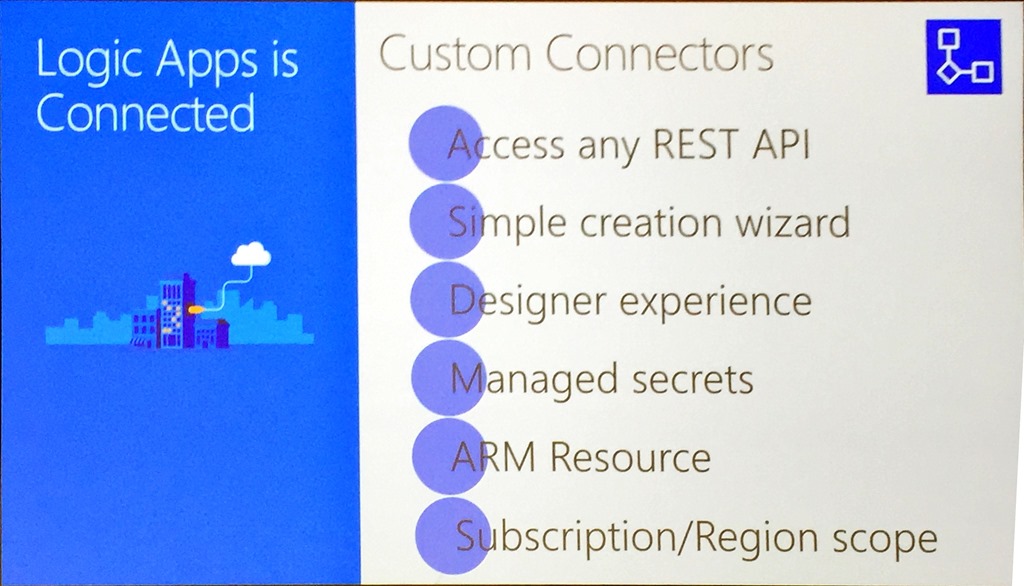

Jon kicked off the demos with a Logic App calling Spotify. This allowed him to show the new Custom Connector and a great resource, https://apis.guru/browse-apis/.

Kevin followed up looking at Azure Security Center and showed the tooling that was introduced at Microsoft Ignite recently. This provides integration directly between Azure Security Center and Logic Apps, including playbooks, which are templates that integrate directly into typical service management tools such as ServiceNow.

Jeff did the last demo on Logic Apps and Cognitive Services. This showed the power of using the Video Indexer API and the ability to spin up a Docker container through a connector that will be released shortly. This container used FFmpeg, an open source tool, to take the transcript generated by the indexer and apply the information as subtitles in the video.

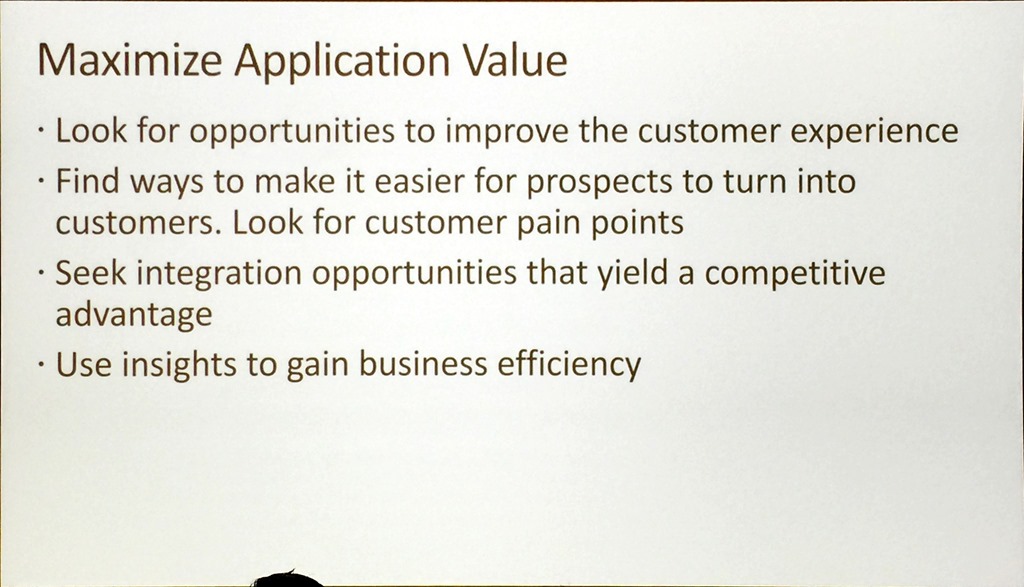

We finished with Jim urging everyone to maximise the value of their projects using integration.

Final message:

“Now is the time for integrators to unlock the impossible”

Paul Larsen – BizTalk – Connecting line-of-business applications across the Enterprise

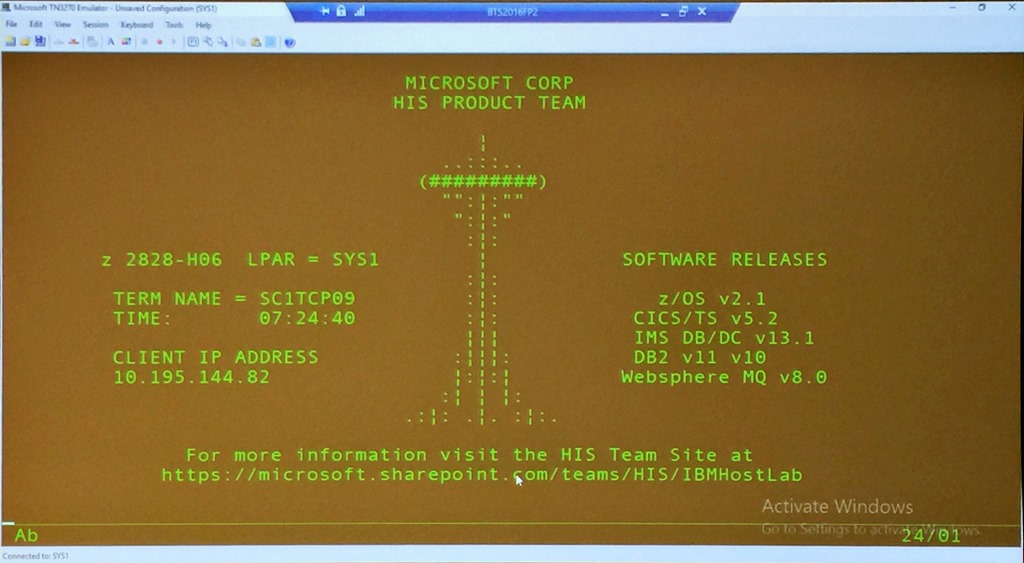

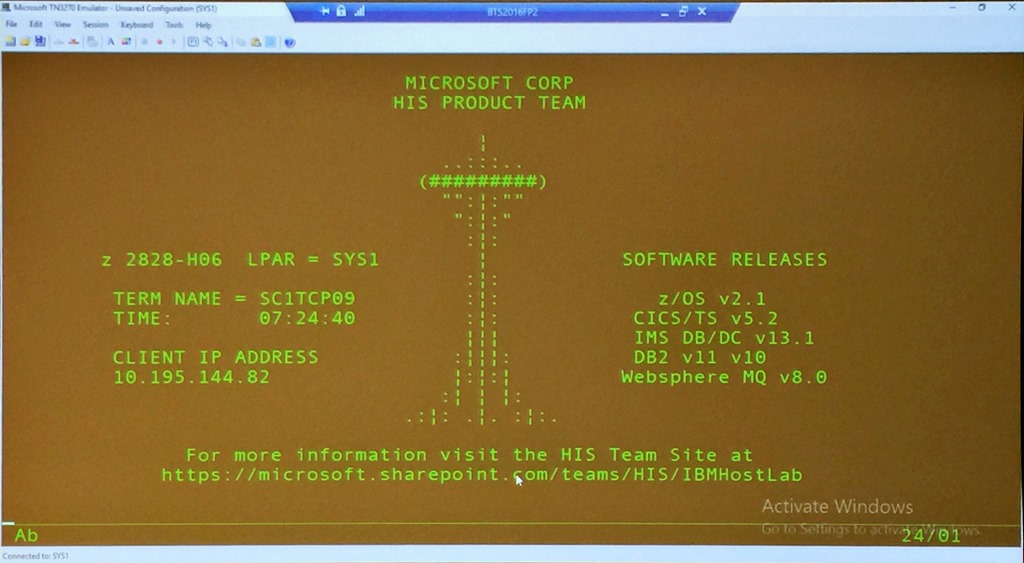

Paul opened his presentation with a great image of a green screen, a mainframe that is running on campus.

This set the scene for a great presentation and dive into BizTalk and heritage systems. Paul insisted on calling them heritage rather than legacy, as heritage is something you celebrate and love whilst legacy has a number of negative connotations!

Paul again emphasized the importance of hybrid integration between BizTalk and the cloud, and the message really started resonating. He spent some time positioning BizTalk and how it had changed along with Host Integration Server over the years he has been on the team.

For me, his demo involving Contoso Fitness showcasing mobile applications, Logic Apps, virtual machines, HL7 and a mainframe was one of the best of the day. It showcased hybrid integration with the Logic Apps adapter, and the real breadth and depth of the Microsoft integration story.

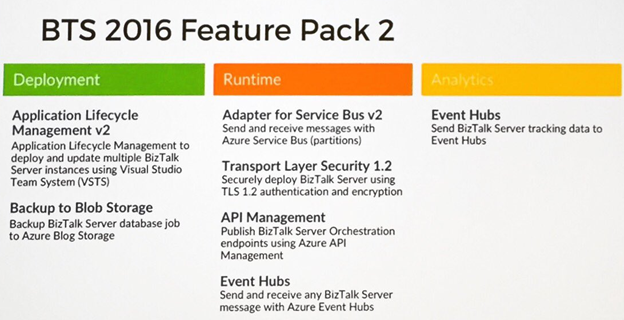

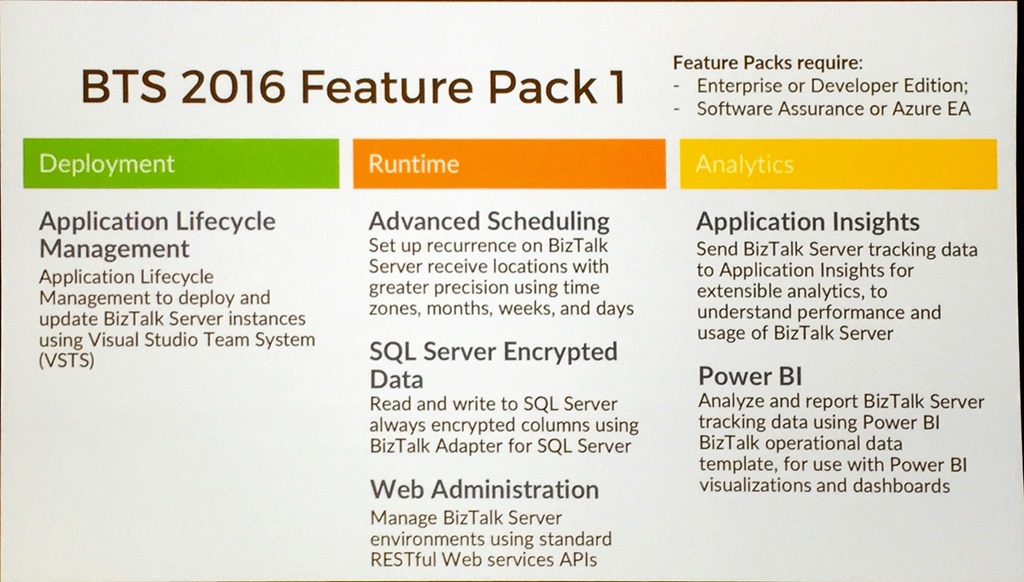

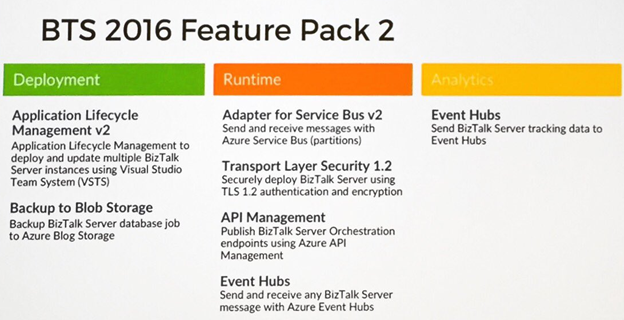

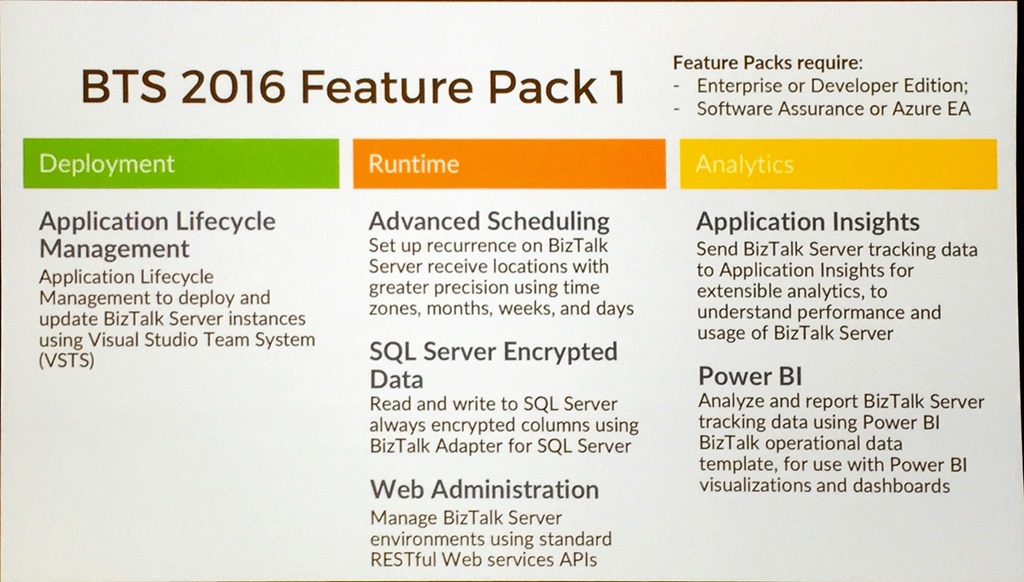

Paul explained the reasoning behind the Feature Pack releases: they make it possible to deliver new value at a quicker cadence by introducing non-breaking changes. He then reviewed what had been delivered in Feature Pack 1.

The information was split between Deployment – application lifecycle management; Runtime – advanced scheduling, SQL encryption columns and web admin; and Analytics – AppInsights for tracking and the Power BI template.

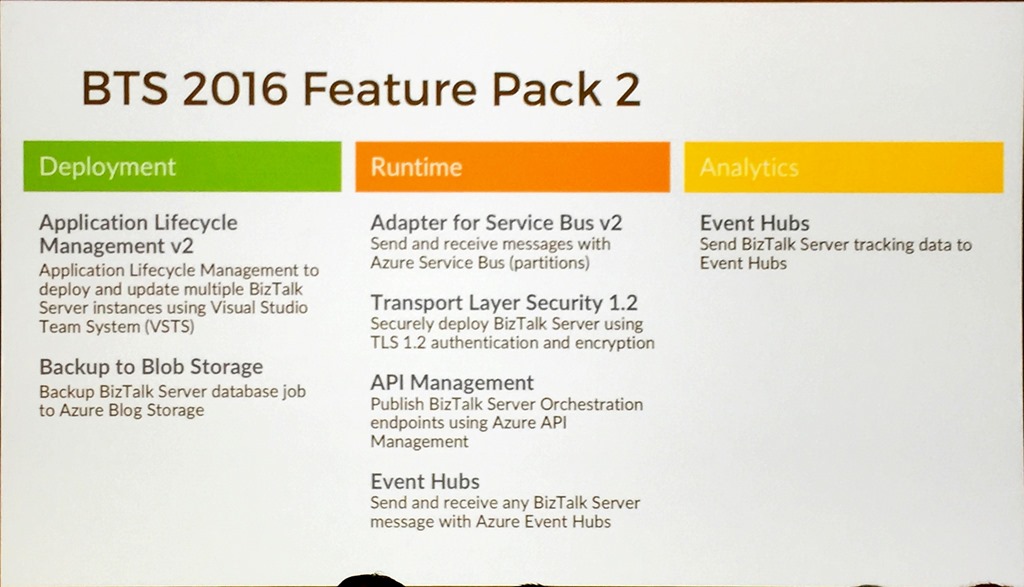

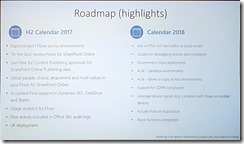

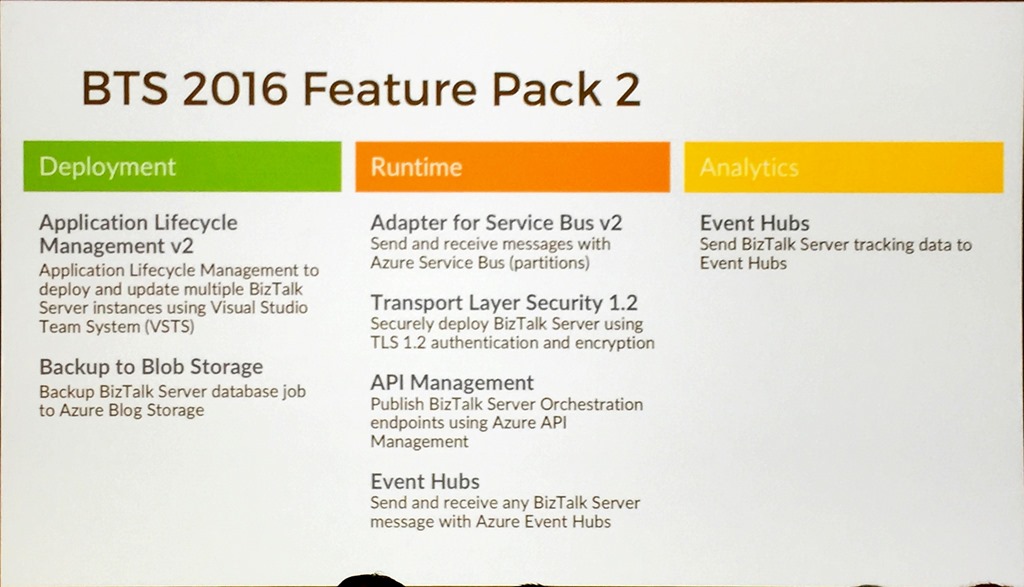

He then mentioned that Feature Pack 2 would be released next month!

Split the same way, the new features were: Deployment – application lifecycle management for multiple servers and backup to Blob Storage; Runtime – Adapter for Service Bus v2, TLS 1.2 (although this may be in the next Cumulative Update as it is a critical update), using API Management to expose Orchestration endpoints, and sending/receiving from Event Hub; Analytics – sending data to Event Hub for tracking.

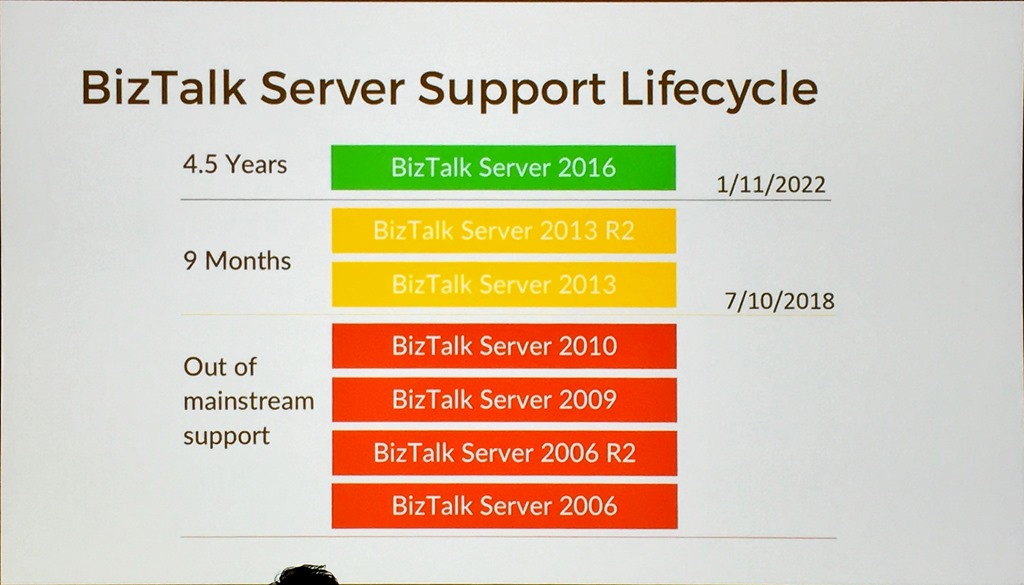

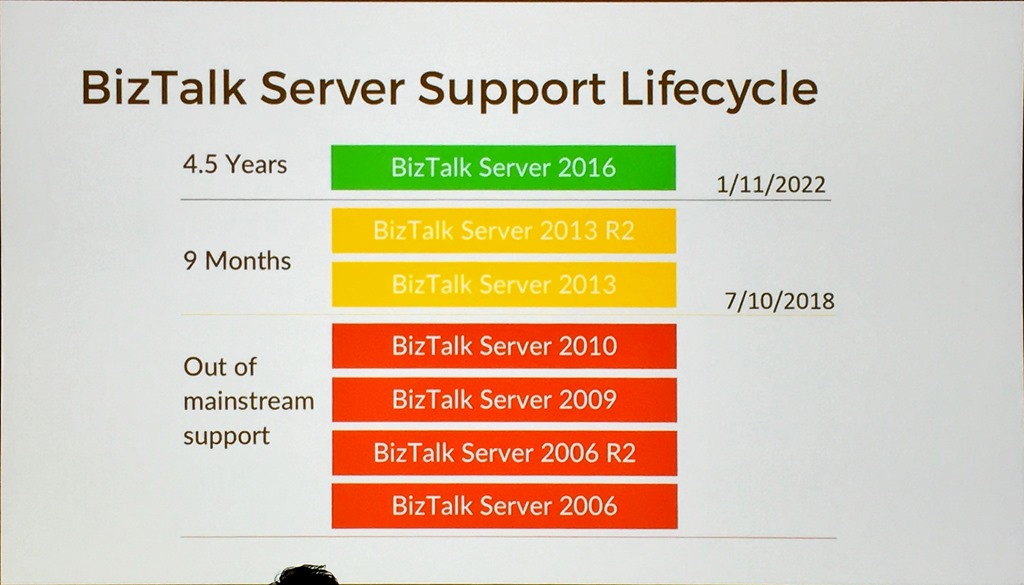

He walked through the BizTalk Server Support Lifecycle.

This shows that BizTalk Server 2013/2013 R2 goes out of mainstream support in 9 months and that people should at least start thinking about migrating. NOTE: A cool tool to help with this migration was presented by Microsoft IT on Day 2 and is available for use.

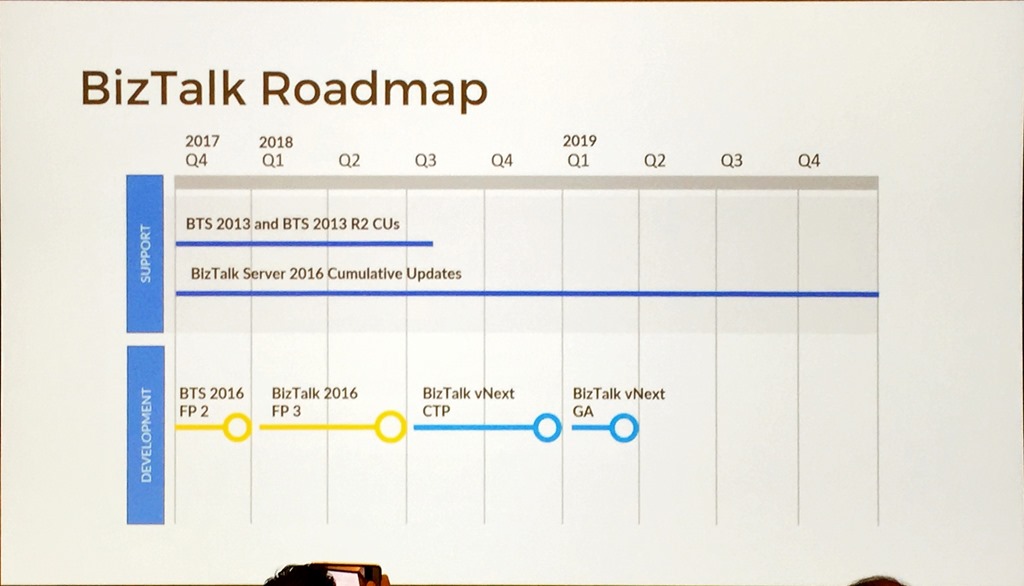

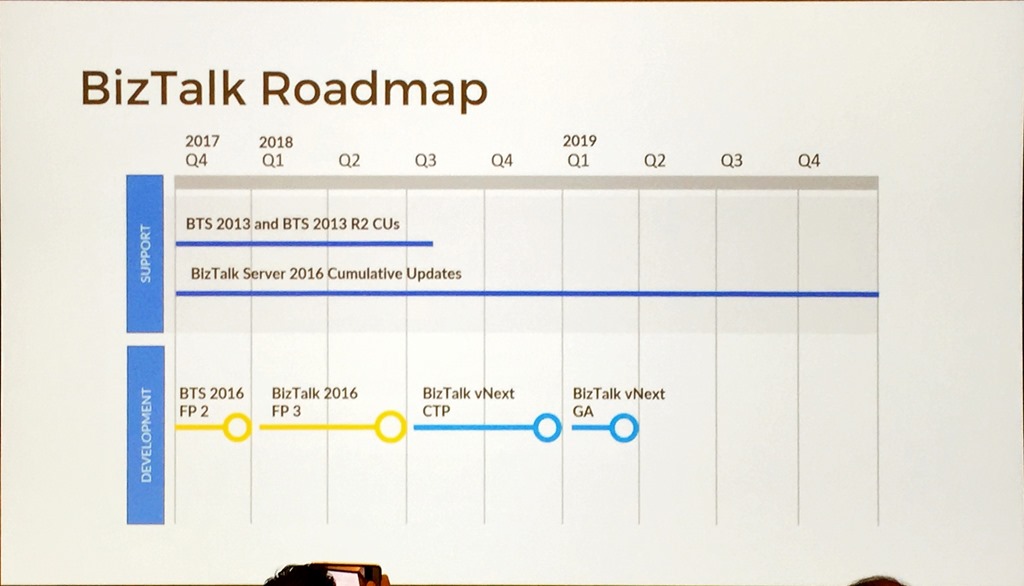

The most important slide was the BizTalk Roadmap.

This clearly shows an ongoing commitment to the product with a timeline for CUs, Feature Packs, and BizTalk vNext.

With that, Paul wrapped up and we had a break, followed by Jeff and Kevin.

Jeff Hollan/Kevin Lam – Azure Logic Apps – build cloud-scale integrations faster

You always know you’re in for a great session when these two stand up, and this session did not disappoint.

It was intended as a level-setting session to get people across Logic Apps: what they are and why you’d use them.

To help emphasize the growth of the service, Kevin mentioned that at GA in June 2016 there were about two dozen connectors, now there are nearly 200!

Connectors provide a canonical form for integration that scales to meet the needs of the customer.

A slide was shown that had an animation of the current connectors that went on for a few pages and included colours to indicate connectors to Azure Services (blue) and those to other Microsoft services (orange), along with a list of others, really showing how much coverage Logic Apps has.

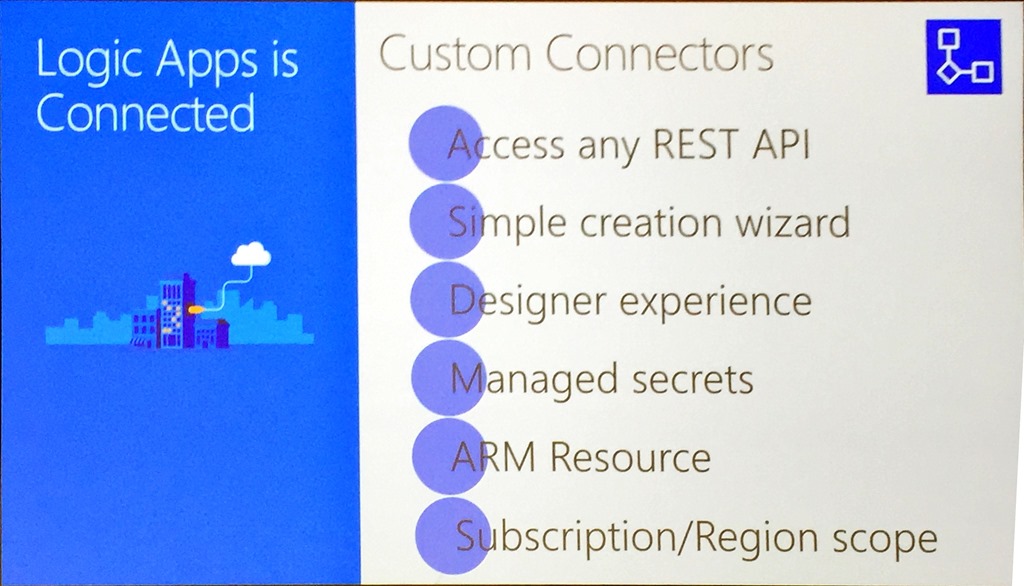

One of the new features was shown – custom connectors.

Custom connectors are available now and treated just the same as any other connector, including storing secrets in the Logic Apps secret store just like regular connectors.

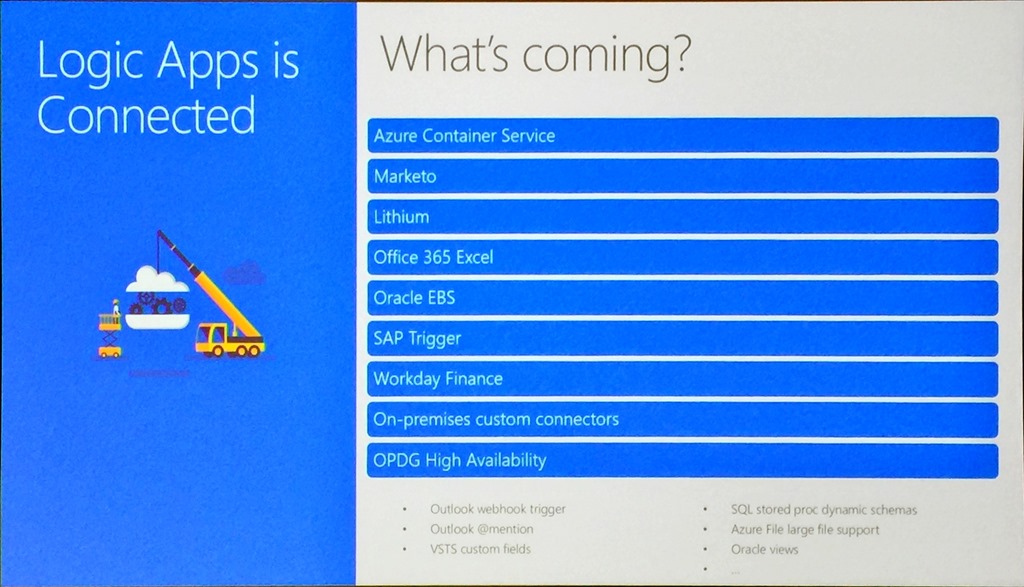

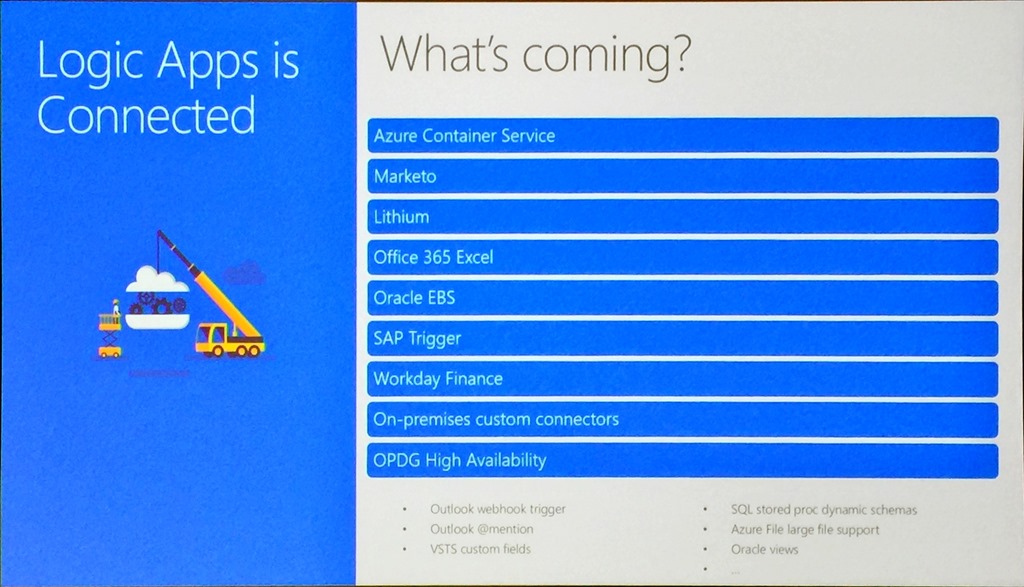

These conferences are great on their own, but when the teams share what’s next and any roadmap information it is particularly interesting. With that, we were teased with what connectors and services are coming soon.

These include the ability to initialize and destroy containers within the Azure Container Service, Oracle EBS and high availability for the on-premises data gateway. I am particularly interested in the container story and can see this as a great way of running transient compute workloads easily and only when required.

We then moved on to more level setting and to how agile the Logic Apps team is, highlighted by a slide that showed what they have shipped this year, including Visual Studio tooling, nested foreach loops and Ludicrous Mode that allows sharding across the infrastructure to improve performance. Currently, the cadence is roughly a release every two weeks!

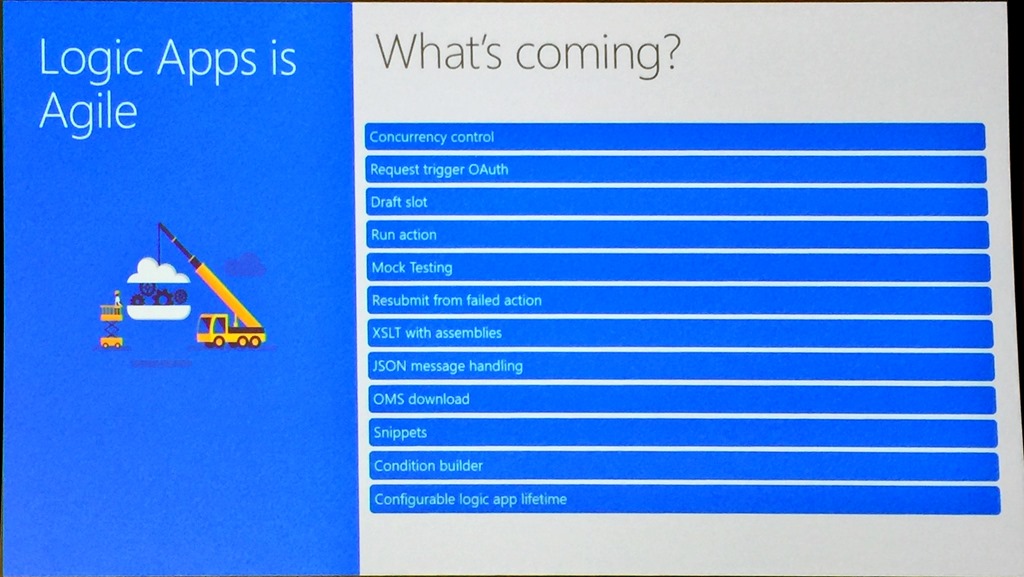

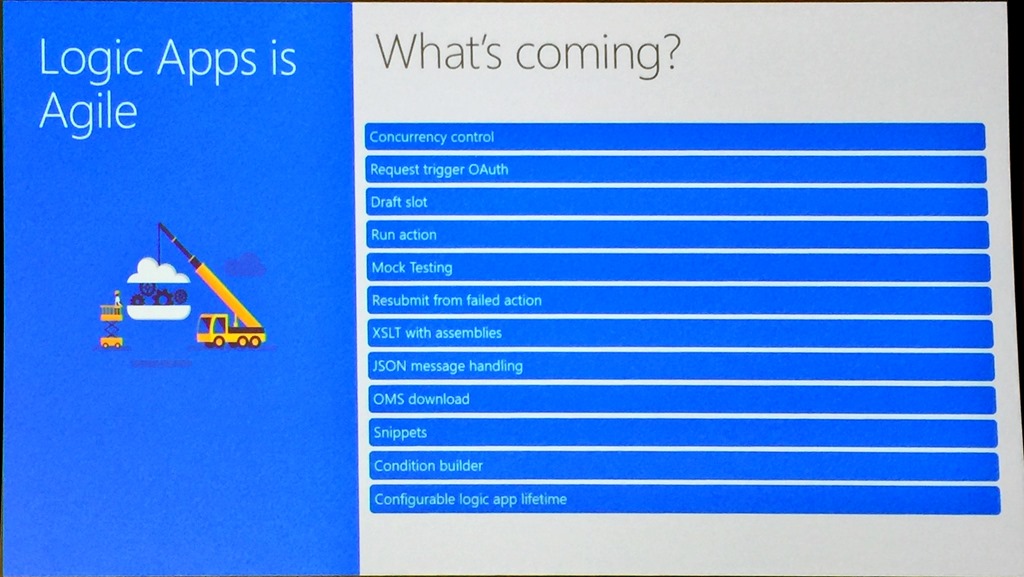

To highlight this agility even more, they showed what was coming soon to the service.

Particularly interesting are mock testing, which allows you to stub out connectors that are still being built; the ability to resubmit from a failed action rather than an entire run; concurrency control, which lets you control how parallel foreach loops run and can be important in ordered delivery scenarios; and snippets, which allow you to create some reusability across your Logic Apps.
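Concurrency control for a foreach loop comes down to capping how many iterations run at once. The following is a hypothetical Python simulation using `asyncio.Semaphore`, not the Logic Apps setting itself; `process_item` and its 10 ms delay are stand-ins for real actions. With a limit of 1, items are processed one at a time in their original order, which is why the setting matters for ordered delivery.

```python
import asyncio
from typing import List, Sequence, Tuple


async def foreach_with_concurrency(items: Sequence[str], limit: int) -> Tuple[List[str], int]:
    """Run one simulated action per item, with at most `limit` running at the same time.

    Returns the order in which items finished and the peak number of concurrent actions.
    """
    semaphore = asyncio.Semaphore(limit)
    finished: List[str] = []
    active = 0
    peak = 0

    async def process_item(item: str) -> None:
        nonlocal active, peak
        async with semaphore:  # wait here until one of the `limit` slots is free
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)  # hypothetical stand-in for a connector call
            finished.append(item)
            active -= 1

    await asyncio.gather(*(process_item(item) for item in items))
    return finished, peak


if __name__ == "__main__":
    orders = [f"order-{n}" for n in range(6)]
    print(asyncio.run(foreach_with_concurrency(orders, limit=1)))  # sequential, in input order
    print(asyncio.run(foreach_with_concurrency(orders, limit=3)))  # up to 3 in parallel
```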

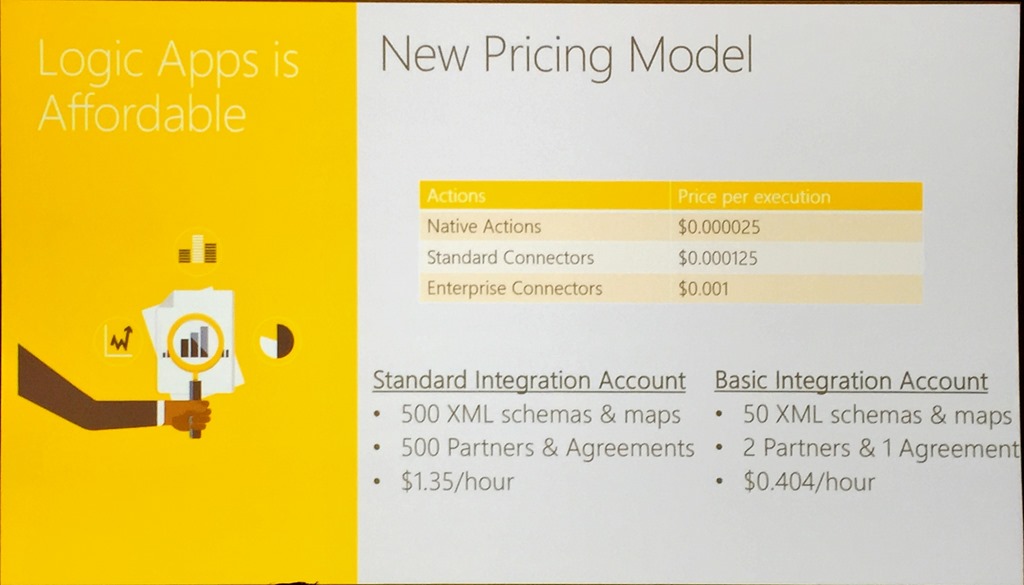

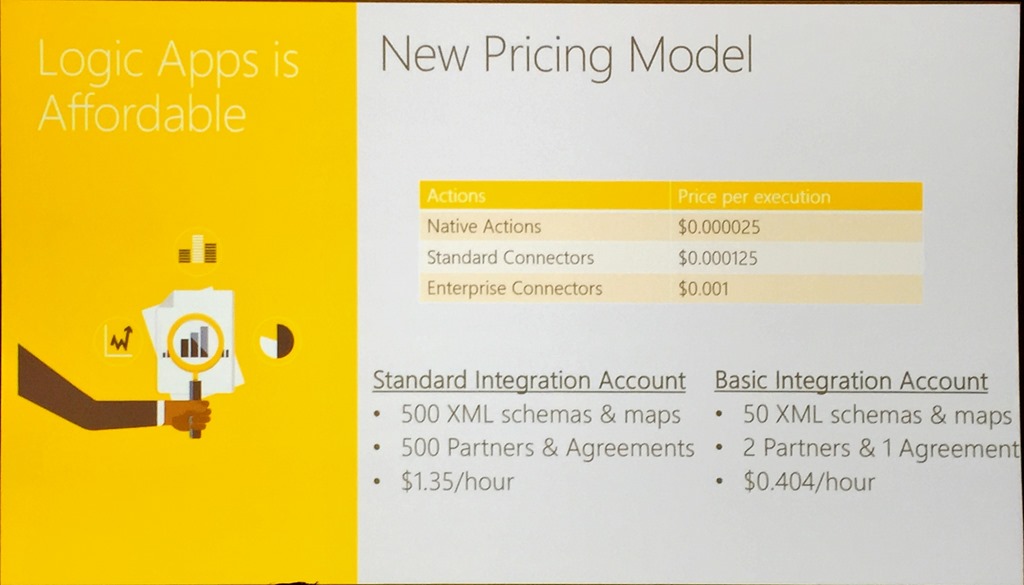

The new pricing model that comes into effect on 1st November was shown. This brings a 32x reduction in the cost of native actions and a 6.5x reduction in the cost of standard connectors, and brings enterprise connectors in line with other connectors, based on pay-per-execution.

The pricing changes also apply to Integration Accounts, which come down to a third of their previous price.

With that Jeff wrapped up with another great demo for Contoso Fitness showing how to integrate a Flic button to emulate a customer pushing a button on a fitness machine when it needed maintenance or cleaning, sending an alert via an HTTP trigger to ServiceNow.

We then had a change in presentation order, with Vlad and Miao covering API Management.

Vladimir Vinogradsky/Miao Jiang – Bolster your digital transformation with Azure API Management

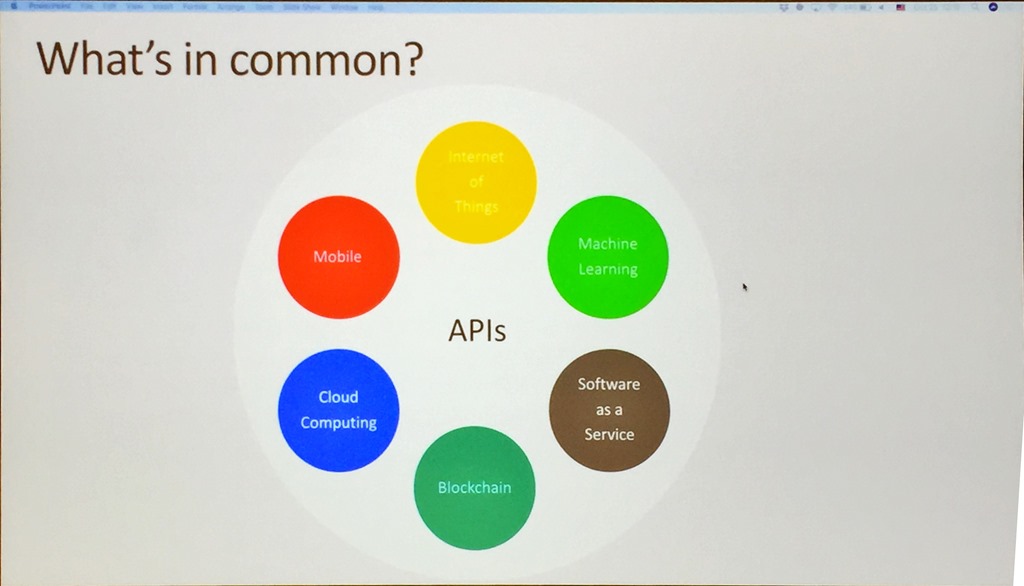

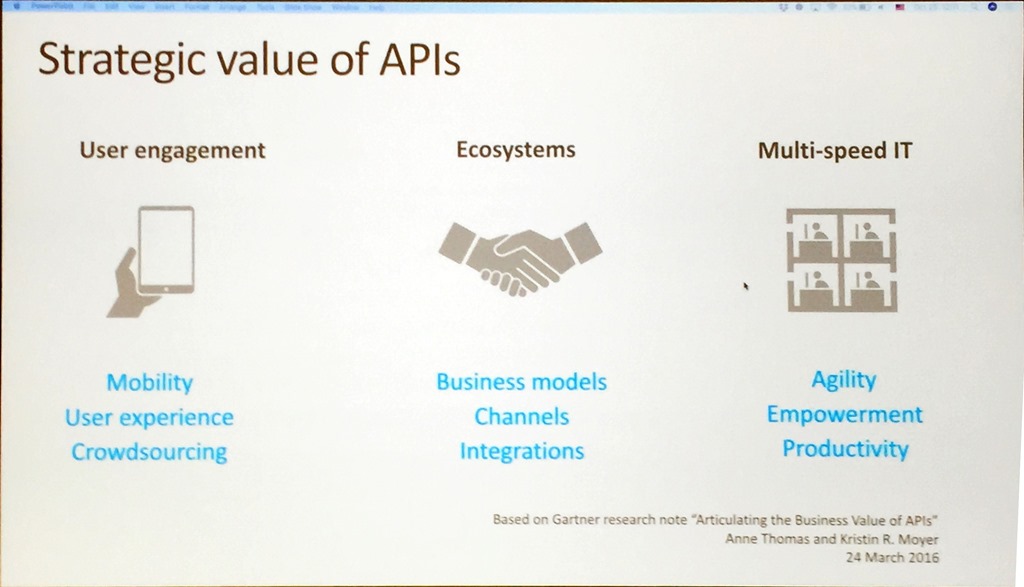

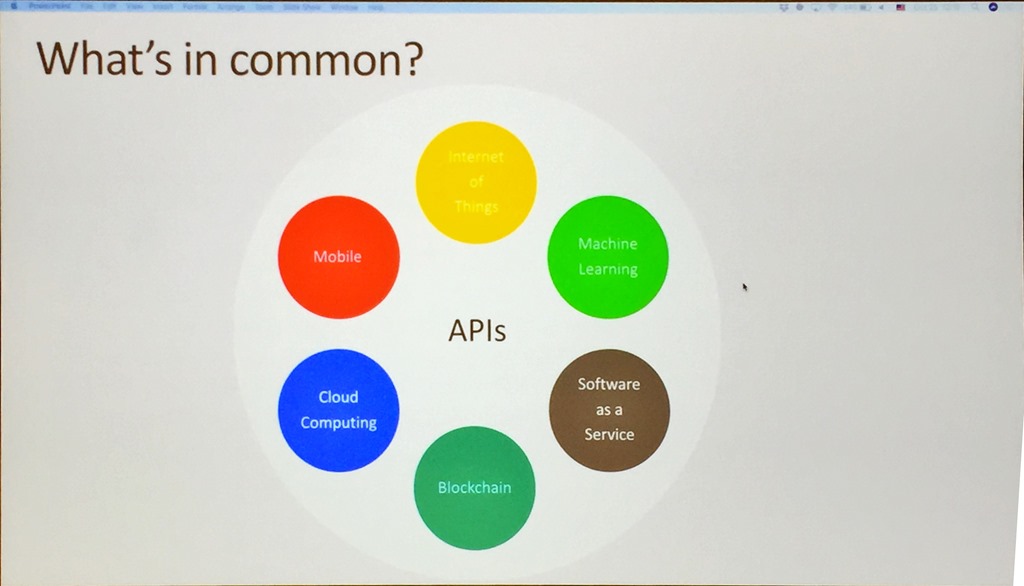

Vlad provided a great overview of API Management and showed how APIs, in general, are really the common component of any solution, whether that is a Software as a Service product or the Internet of Things.

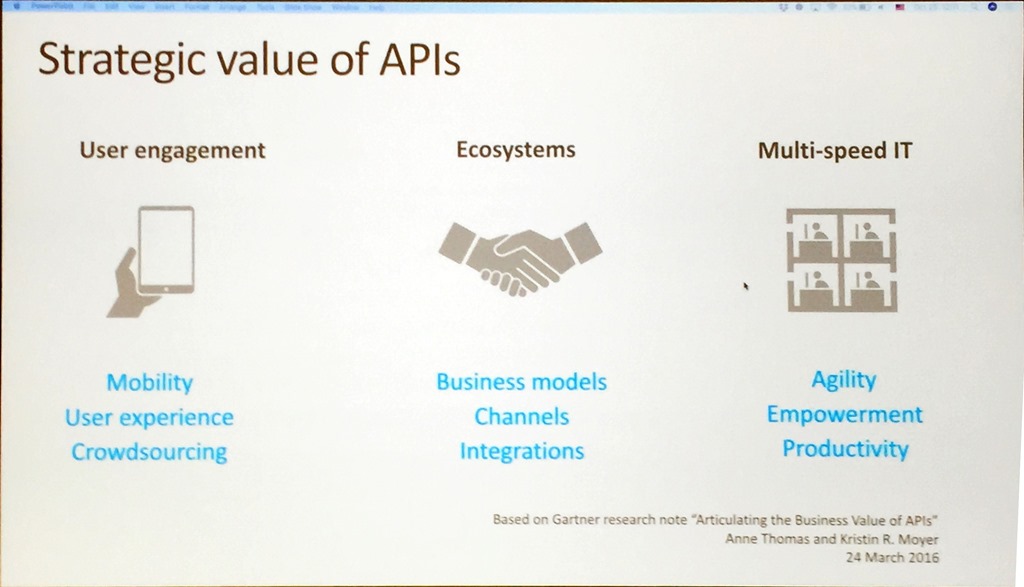

He continued by explaining how API Management is positioned and how it can be used to drive loyalty, build new services and channels to market and how it can help cope with multi-speed IT where not every part of a solution or business wants the same pace of change.

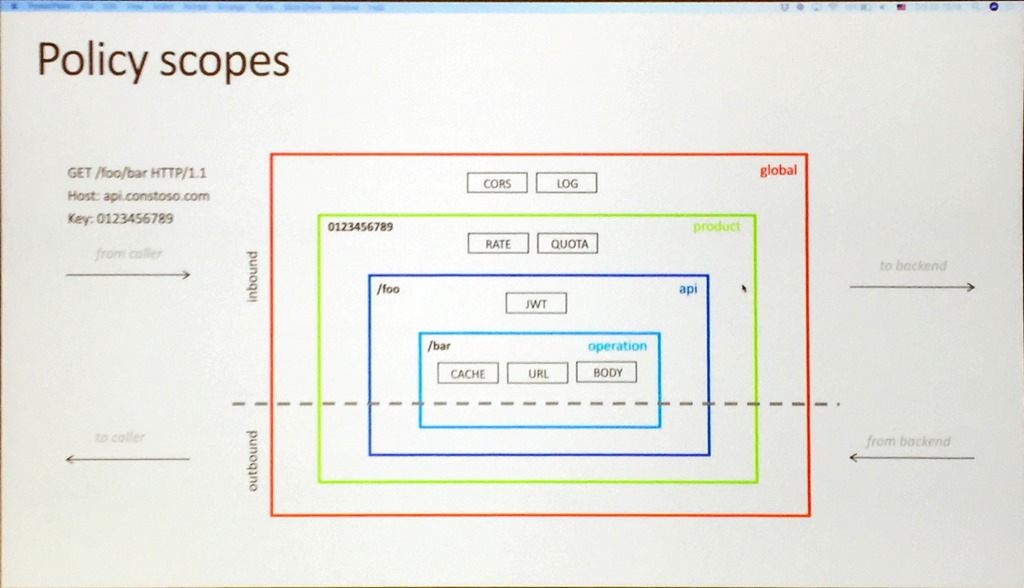

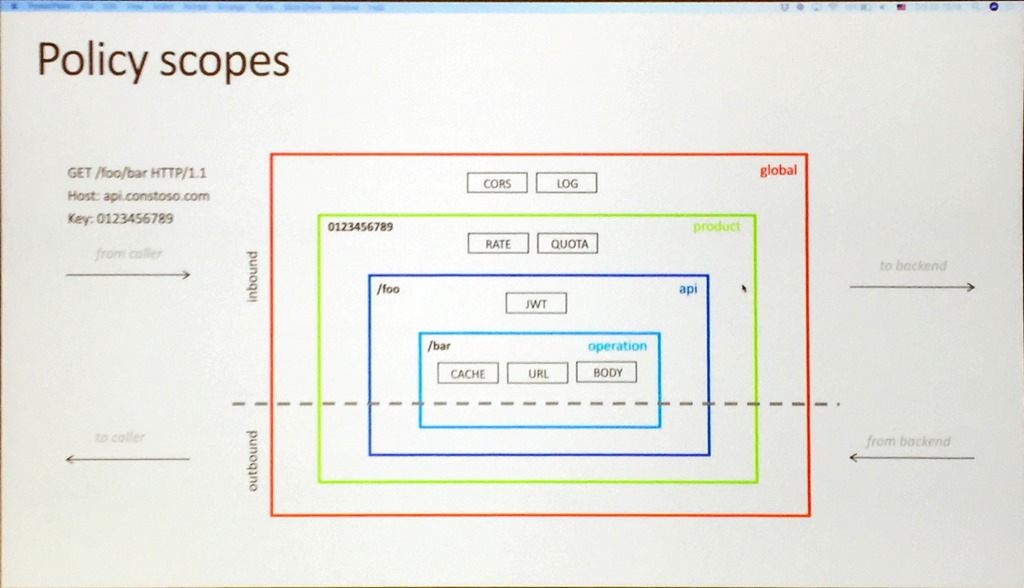

Vlad continued with a general overview of policies and how to use them to enforce certain things like access control and rate limits, and how you can chain them together by explaining the scope and the cumulative nature of policies.
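As a rough illustration of what a rate limit policy enforces, here is a minimal fixed-window limiter in Python. API Management's own `rate-limit` policy is configured declaratively in the policy XML (with `calls` and `renewal-period` attributes) rather than written as code, and its exact windowing behaviour may differ; the class, the per-subscription key, and the explicit `now` timestamps are assumptions chosen to keep the sketch deterministic.

```python
from typing import Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `calls` requests per key in each window of `renewal_period` seconds."""

    def __init__(self, calls: int, renewal_period: float) -> None:
        self.calls = calls
        self.renewal_period = renewal_period
        # key (e.g. a subscription id) -> (window start time, calls counted in that window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, key: str, now: float) -> bool:
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.renewal_period:
            start, count = now, 0  # the window has expired, so start a fresh one
        if count >= self.calls:
            return False  # over the limit; a gateway would answer 429 Too Many Requests
        self._windows[key] = (start, count + 1)
        return True


if __name__ == "__main__":
    limiter = FixedWindowRateLimiter(calls=3, renewal_period=60)
    print([limiter.allow("subscription-a", t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
    print(limiter.allow("subscription-a", 61))  # True: a new window has started
    print(limiter.allow("subscription-b", 3))   # True: each key is counted separately
```

Keying the counter by subscription mirrors the idea that limits apply per caller, so one noisy consumer cannot use up another consumer's allowance.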

After a discussion about security, the conversation moved on to the inclusion of VNets to help control access to on-premises APIs, and then to multi-region support and scaling that is available as a premium feature. This allows you to deploy units of scale across regions, includes request caching out of the box, allows incremental growth of APIs, and allows different scales in different regions. It is a great way to grow your APIs as your business grows.

Miao then did a great demo, showing the key features of the service, firstly showing how to create an API, including SOAP to REST to allow more modern access to heritage APIs.

Using the Developer Portal to test the APIs, he showed how to apply a number of policies such as removing headers, replacing backend URLs and rate limiting, followed by using the tracing feature to gain insight into the information passed to and from an API call and which policies are applied.

Any enterprise solution requires in-depth insight, so Miao moved on to monitoring, using Metrics in the Azure Portal to set alerts and using those alerts to call a Logic App, followed by the Diagnostic settings and Log Search.

We then moved to looking at the new Power BI template that can be deployed with a single click.

This looks like a great way of delivering insight into an API Management deployment and has been created based on customer asks. It uses Event Hubs, Stream Analytics, and SQL Database.

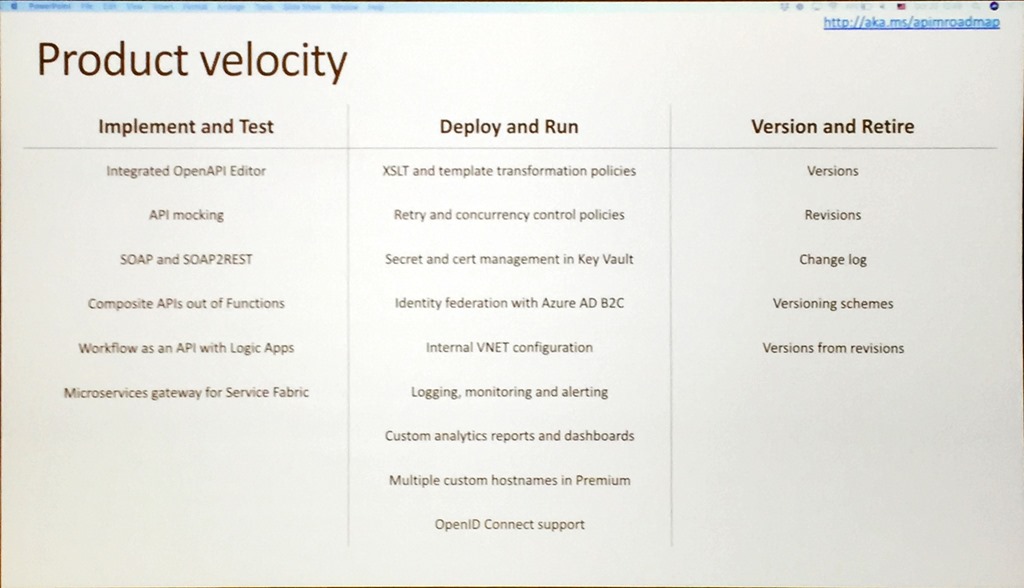

After a slide that showed the growth in API Management, Vlad then showed how much work has been done in the last 12 months.

Like the other presentations, this shows just how agile and engaged the team is and how they are really delivering value to us as users of their service.

With that Vlad provided a list of resources and closed out the morning session.

After lunch, we had 3 presentations on the messaging services within Azure that took proceedings up to the afternoon break.

Dan Rosanova – Messaging yesterday, today and tomorrow

Dan kicked off the messaging sessions with his own presentation about tools and how Microsoft is really a tools company.

Using a hammer for illustration, Dan gave a great presentation on where a hammer is the right tool and where it is not. This included an unusual demo that showed how to open a beer with a hammer live on stage!

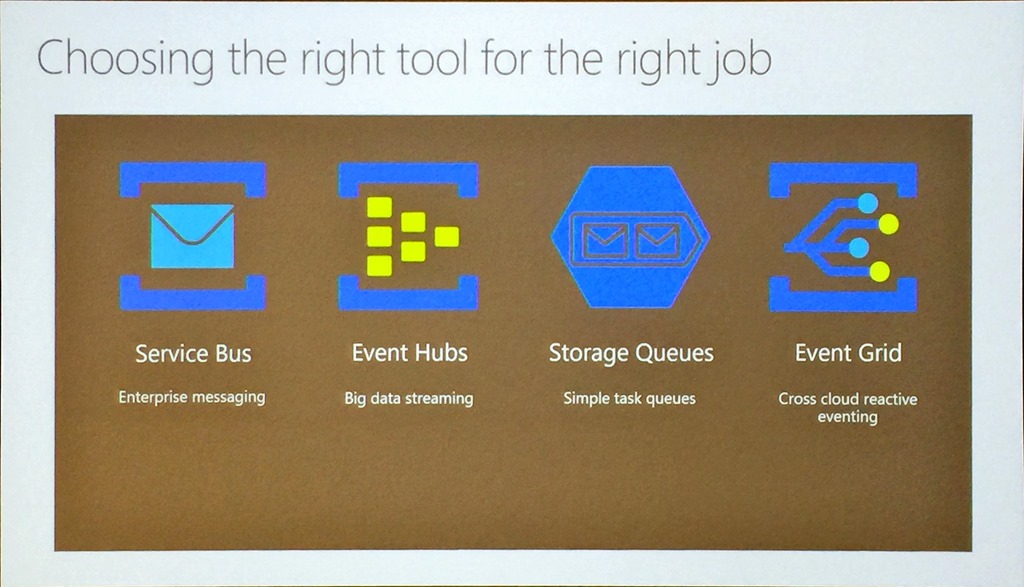

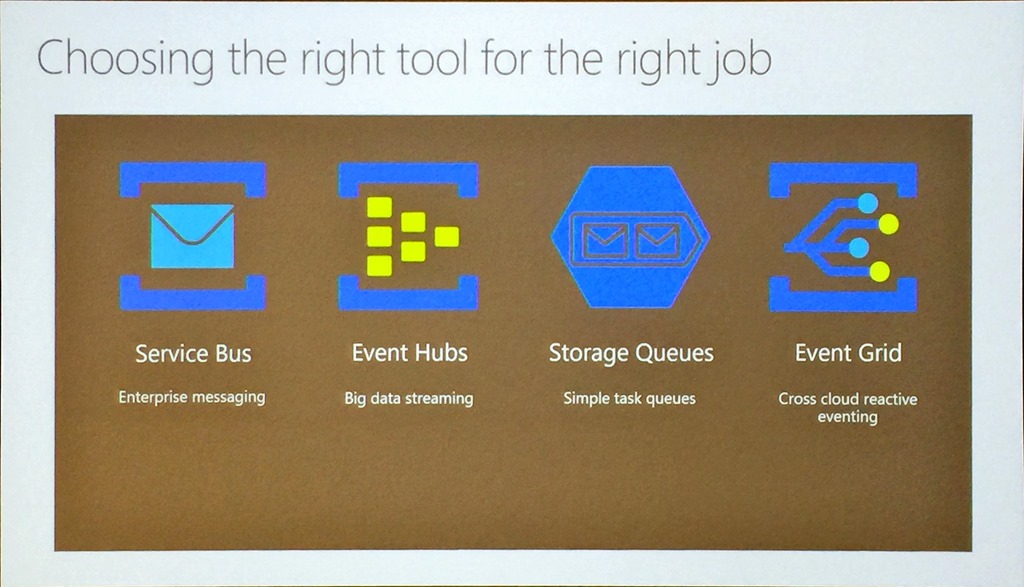

And really that was the main thrust of the presentation, that with Azure messaging being such a large set of tools, it is important to choose the right tool for the job.

To further hammer home the point, he talked about four scenarios to fit these tools:

- Task Queue using a Storage Queue to coordinate simple tasks across compute

- Big data streaming using Event Hub to flow and process data and telemetry in real-time

- Enterprise Messaging using Service Bus to manage business process state transitions

- Eventing using Event Grid to provide a reactive programming model

Dan summed up by saying that Event Grid will reach general availability soon and indicated that some new services outside Azure are coming.

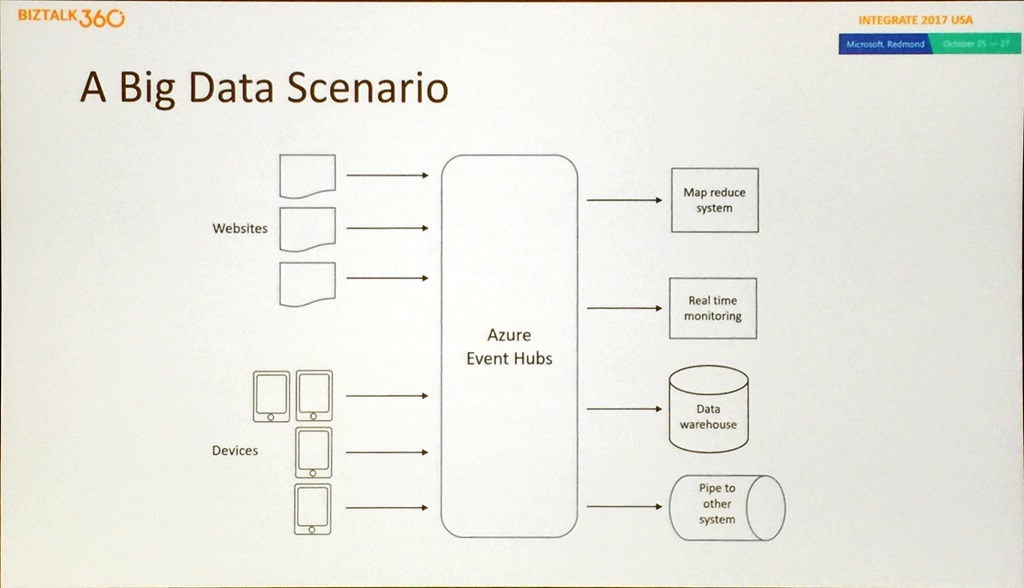

Shubha Vijayasarathy – Azure Event Hubs: the world’s most widely used telemetry service

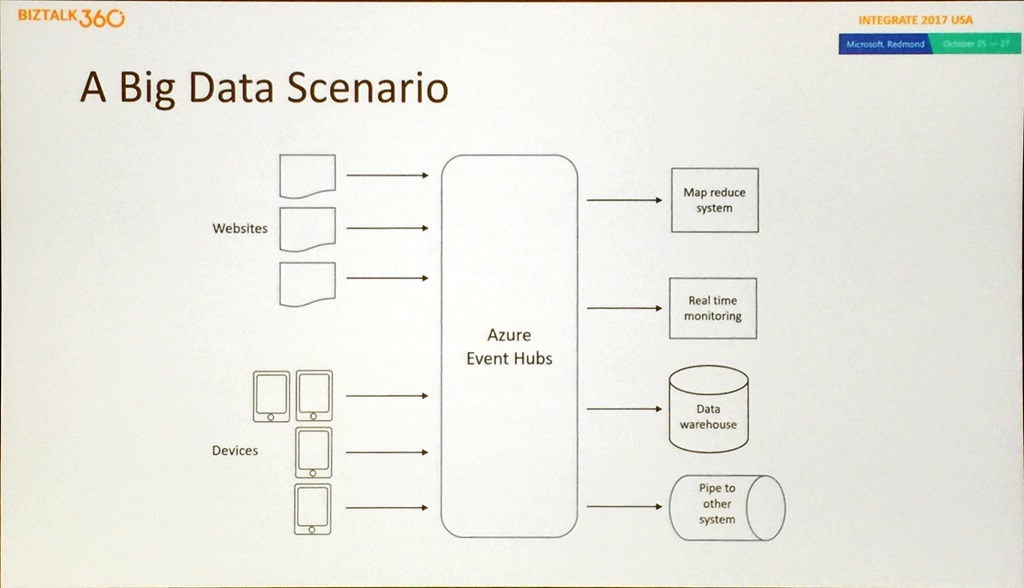

Shubha set the scene using a big data scenario and how Event Hub can be used to provide a single service solution to common problems around telemetry and data pipelines.

She moved on to how Event Hub answers all the typical questions asked about big data solutions, such as how do you handle data that has velocity, volume, and variety, can you deal with regional disasters and do real-time streaming as well as batch capture, what can Event Hub integrate with, and how can you handle support. Again, for any production solution, it is important to be able to lift the covers and see what is happening and how a solution is performing.

Shubha did a great demo showing how to use Event Hubs and Event Grid to move stream data into SQL Data Warehouse using the Capture feature of Event Hubs, which allows you to persist the telemetry data into a storage account. The demo used an Azure Function, triggered by the Event Grid event fired when a capture file was created in storage, to process the data into SQL DW.
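The reactive step in this demo, code that wakes up when a capture file lands in storage, can be sketched as a plain Python handler over an Event Grid payload. This is an illustration, not the demo's code: it assumes the Event Grid event schema (a JSON array of events with `eventType` and `data` fields) and reacts to either `Microsoft.EventHub.CaptureFileCreated` (file URL in `data.fileUrl`) or `Microsoft.Storage.BlobCreated` (URL in `data.url`); `load_into_warehouse` is a hypothetical placeholder for the SQL DW load. A real endpoint would also need to answer Event Grid's subscription validation handshake, which this sketch skips.

```python
import json
from typing import Callable, Dict, List

# Event types this sketch reacts to, mapped to the data field holding the new file's URL.
URL_FIELD_BY_EVENT_TYPE: Dict[str, str] = {
    "Microsoft.EventHub.CaptureFileCreated": "fileUrl",
    "Microsoft.Storage.BlobCreated": "url",
}


def handle_event_grid_payload(body: str, load_into_warehouse: Callable[[str], None]) -> List[str]:
    """Parse an Event Grid request body and pass every newly created file URL to the loader."""
    processed: List[str] = []
    for event in json.loads(body):
        url_field = URL_FIELD_BY_EVENT_TYPE.get(event.get("eventType", ""))
        if url_field is None:
            continue  # ignore event types this handler does not care about
        file_url = event["data"][url_field]
        load_into_warehouse(file_url)  # hypothetical: read the capture file, insert rows into SQL DW
        processed.append(file_url)
    return processed


if __name__ == "__main__":
    sample = json.dumps([
        {"eventType": "Microsoft.EventHub.CaptureFileCreated",
         "data": {"fileUrl": "https://example.blob.core.windows.net/capture/0/part.avro"}},
        {"eventType": "Microsoft.Storage.BlobDeleted",
         "data": {"url": "https://example.blob.core.windows.net/old.avro"}},
    ])
    print(handle_event_grid_payload(sample, lambda url: None))
```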

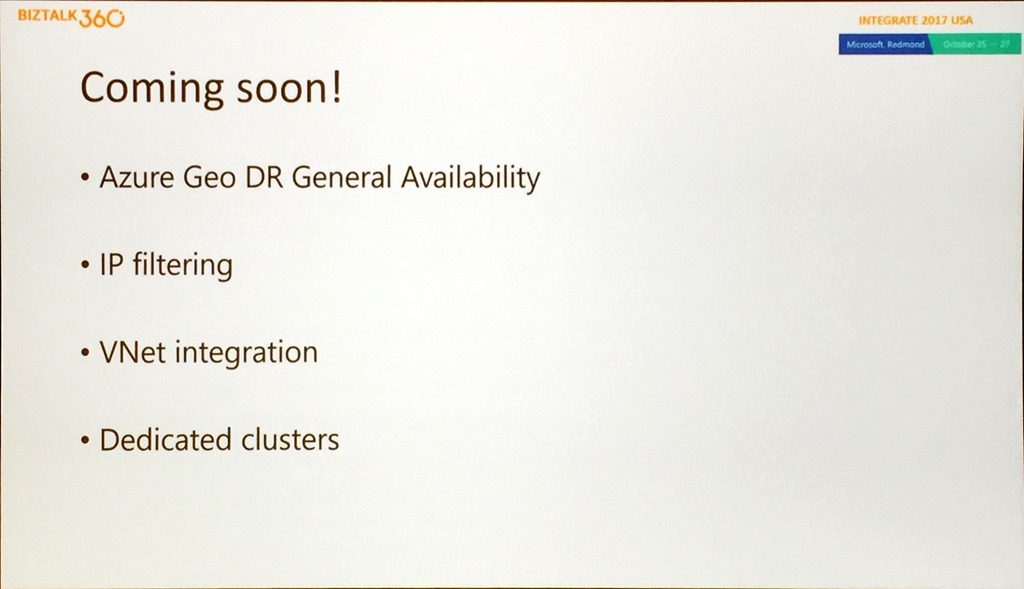

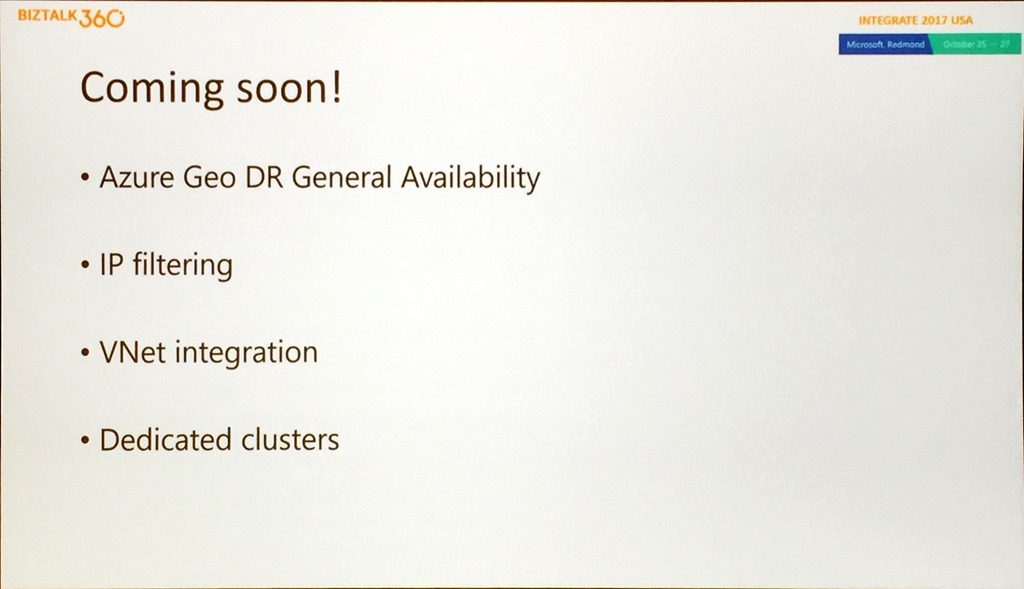

Leaving the best until last, Shubha gave some indication of what was coming soon from the team.

This includes the general availability of Geo DR, IP filtering and VNet support and a portal experience for creating dedicated clusters.

We then had a bonus session for the day that was not scheduled.

Christian Wolf – Azure Service Bus: Who doesn’t know it?

So Dan covered the messaging services available, Shubha covered Event Hubs, and Christian was on to cover one of the oldest services in Azure.

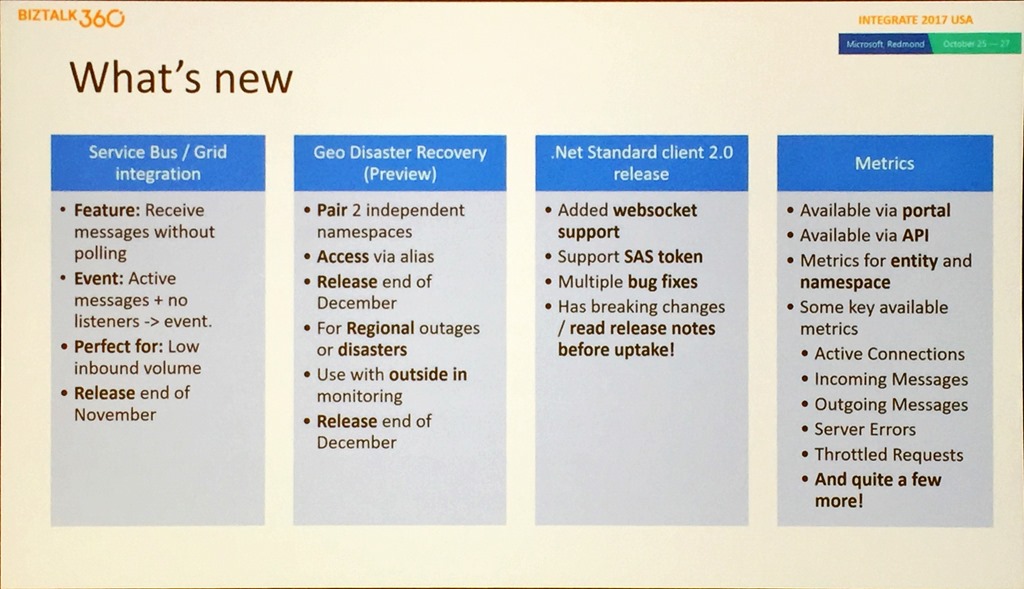

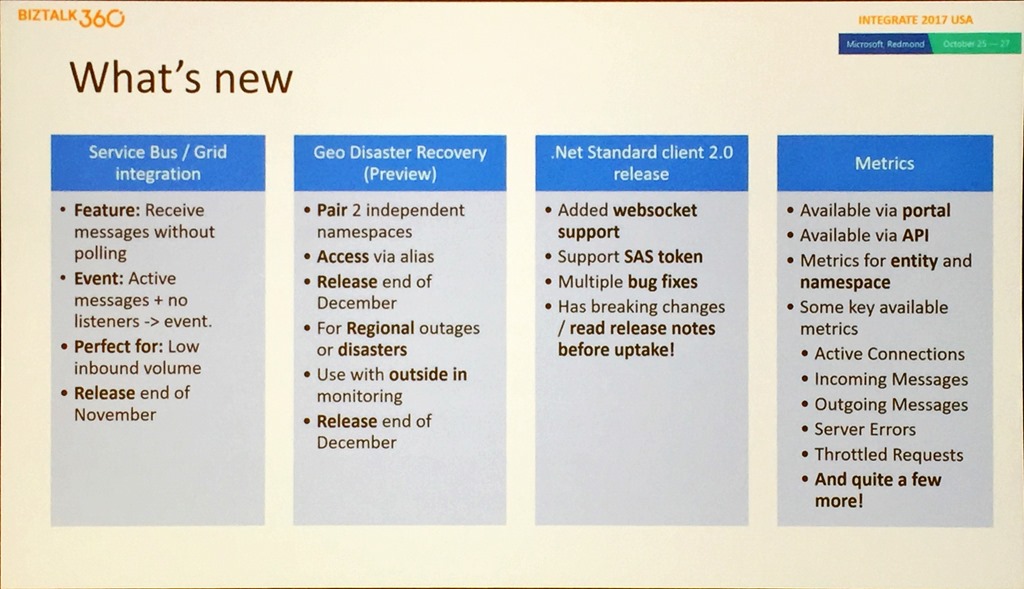

This was a shorter but highly focussed session that started with what is new and soon to be released in Service Bus.

He went through the important points of the slide, including that the Event Grid scenario is for lower volumes and not millions of messages. They are introducing Geo DR for Service Bus that will allow you to pair 2 independent namespaces and access them through an alias. NOTE: In this first release it is only metadata that is failed over between regions, not the data that is on any Service Bus asset.
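To make the metadata-only caveat concrete, here is a small in-memory Python model of the alias behaviour described above. It is purely illustrative: `Namespace`, `GeoDrAlias`, and their methods are hypothetical and are not the Service Bus SDK or management API. Entity definitions (queues) are mirrored to the secondary namespace, messages stay in the primary, and a failover repoints the alias at the secondary.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Namespace:
    name: str
    queues: Dict[str, List[str]] = field(default_factory=dict)  # queue name -> messages

    def create_queue(self, queue: str) -> None:
        self.queues.setdefault(queue, [])


class GeoDrAlias:
    """Hypothetical model: clients talk to the alias, never to a namespace directly."""

    def __init__(self, alias: str, primary: Namespace, secondary: Namespace) -> None:
        self.alias = alias
        self.primary = primary
        self.secondary: Optional[Namespace] = secondary

    def create_queue(self, queue: str) -> None:
        self.primary.create_queue(queue)
        if self.secondary is not None:
            self.secondary.create_queue(queue)  # metadata is replicated to the paired namespace

    def send(self, queue: str, message: str) -> None:
        self.primary.queues[queue].append(message)  # message data is NOT replicated

    def failover(self) -> None:
        if self.secondary is None:
            raise RuntimeError("no paired secondary namespace to fail over to")
        # The alias now resolves to the former secondary; in this model the pairing ends.
        self.primary, self.secondary = self.secondary, None


if __name__ == "__main__":
    east, west = Namespace("contoso-east"), Namespace("contoso-west")
    alias = GeoDrAlias("contoso", east, west)
    alias.create_queue("orders")
    alias.send("orders", "order-1")
    alias.failover()
    print(alias.primary.name, alias.primary.queues)  # contoso-west {'orders': []}
```

After the failover the queue exists in the new primary, but the message sent before the failover is still only in the original namespace.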

A good point was made about the .NET Standard Client. It has breaking changes, so Christian urged anyone wanting to adopt it to spend time in the release notes and testing.

Christian then did a couple of good demos, the first using Service Bus and Event Grid to simulate Clemens Vasters wanting to buy an airplane (so a likely scenario!), and using Dynamics 365 to react to a new sales opportunity. The second demo showed the Geo DR capabilities and showed that monitoring is not entirely straightforward. Christian used ServiceBus360 to help drive the demo.

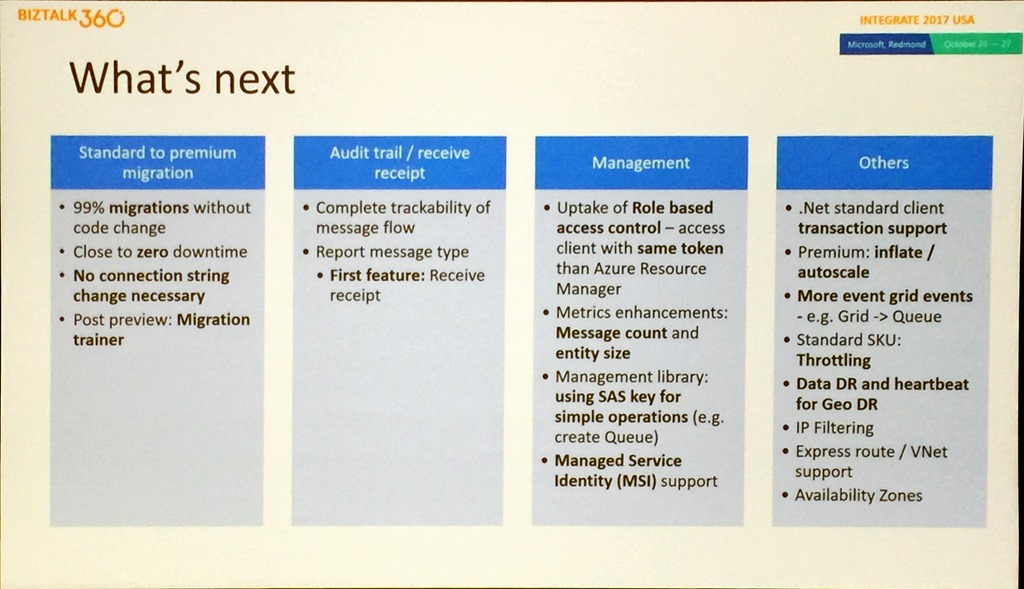

Christian finished with what’s next for Service Bus.

This includes a capability to allow migration between standard and premium SKUs, a new management library, the introduction of throttling in the standard SKU, which is not dedicated, to eliminate noisy neighbours and Data DR as a broader part of the disaster recovery strategy.

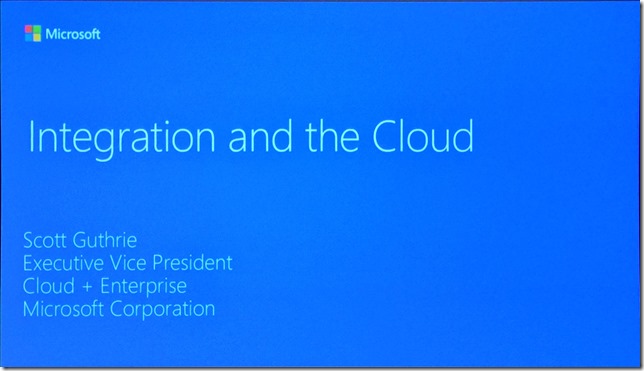

This led to the final break of the day, with 2 more presentations standing between attendees and Scott Guthrie’s keynote.

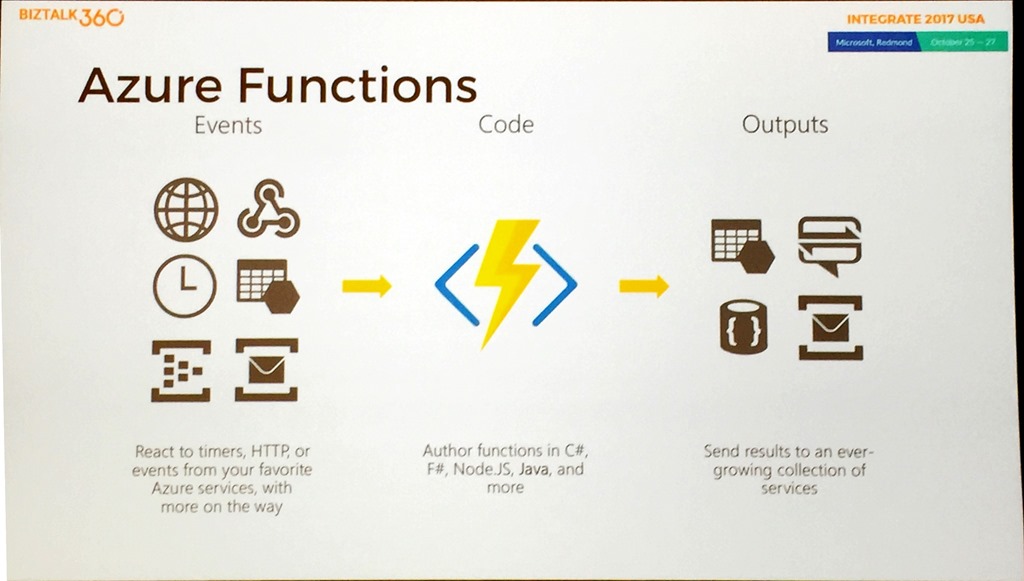

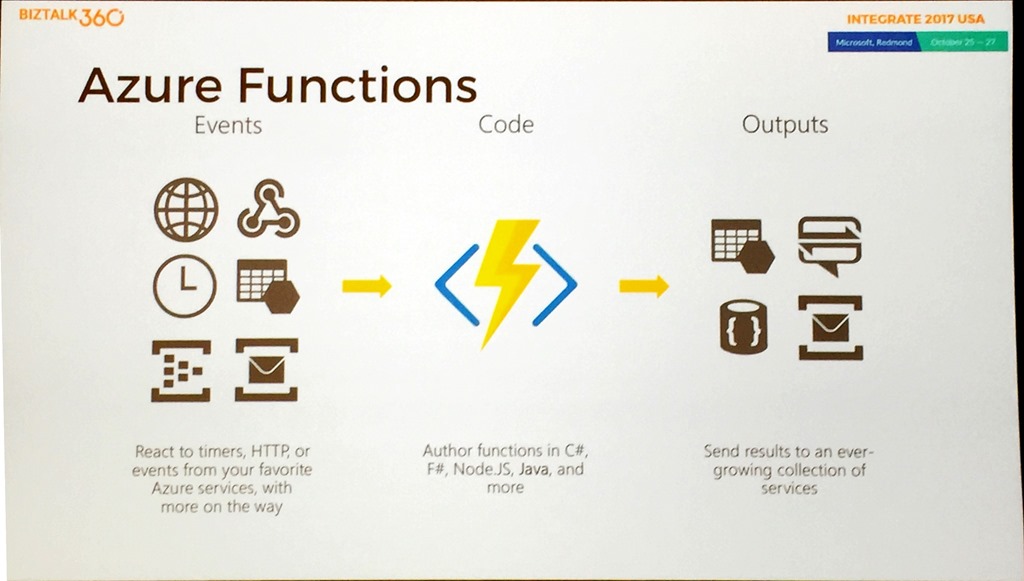

Eduardo Laureano – Azure Functions – Serverless compute in the cloud

We started with an overview of Functions and the components of the service.

Eduardo explained how Functions evolved: it came from App Service, so HTTP has always been a native part of the service.

Eduardo showed the bindings and triggers, but directed people to the documentation for an up-to-date list.

Following a discussion about developer tooling, the conversation turned to Functions by the numbers. The key takeaway was that once customers move to Functions, they continue to move more things over time as they evolve their ecosystems.

Eduardo did a demo that really showed the power of bindings by walking through the Function creation process for a Blob Storage trigger, performing a simple file upload, changing the input from Stream to byte[] and showing that it still works exactly the same way.
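The point of the Stream-to-byte[] change is that the binding, not the function body, decides how the blob is handed over. A toy Python version of that idea inspects the type annotation of the function's first parameter and converts the blob contents to match. This is a hypothetical illustration of the concept, not how the Functions host is implemented; `invoke_with_blob` and the three supported types are assumptions.

```python
import inspect
import io
from typing import Any, Callable


def invoke_with_blob(func: Callable[..., Any], blob: bytes) -> Any:
    """Call `func` with the blob converted to the type its first parameter declares."""
    first_param = next(iter(inspect.signature(func).parameters.values()))
    target = first_param.annotation
    if target is bytes:
        arg: Any = blob
    elif target is str:
        arg = blob.decode("utf-8")
    elif target is io.BytesIO:
        arg = io.BytesIO(blob)  # a readable stream over the same bytes
    else:
        raise TypeError(f"no conversion from blob to {target!r}")
    return func(arg)


def size_from_stream(data: io.BytesIO) -> int:
    return len(data.read())


def size_from_bytes(data: bytes) -> int:
    return len(data)


if __name__ == "__main__":
    upload = b"hello, integrate"
    # The function body changes its parameter type, yet the result is the same.
    print(invoke_with_blob(size_from_stream, upload), invoke_with_blob(size_from_bytes, upload))
```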

After speaking about the difference between Function bindings and Logic Apps connectors (low code v no code, 23 bindings v 180+ connectors, ideal for data flow v ideal for workflow orchestration, data type in code v fully managed) Eduardo explained that as Functions is open source, anyone can go and create a new custom binding, and that he’d be happy to discuss having more community contributed bindings in the service.

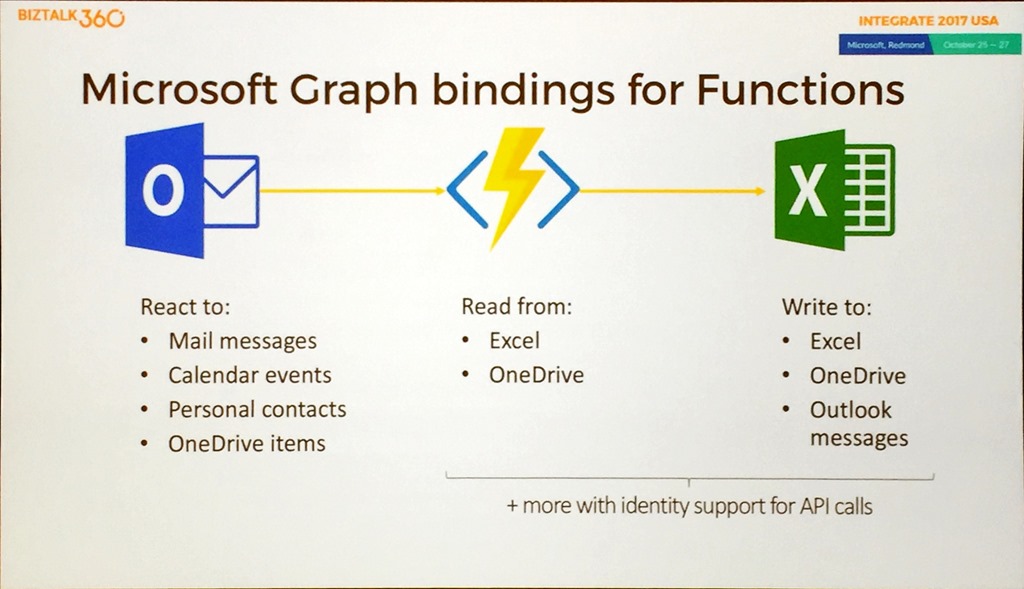

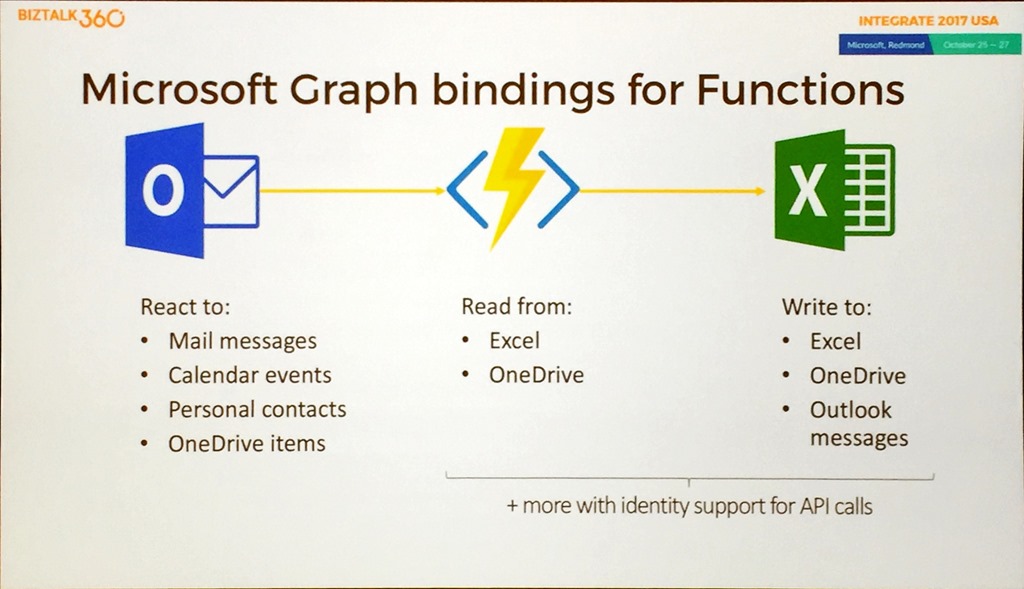

We then moved on to the new Microsoft Graph binding announced at Ignite.

This provides a way of finding correlations across different data sets, but the real magic is that it incorporates identity so you don’t have to.

We had 2 demos, the first showing the Graph binding, and the second showing the new Excel binding with data being added to an Excel file.

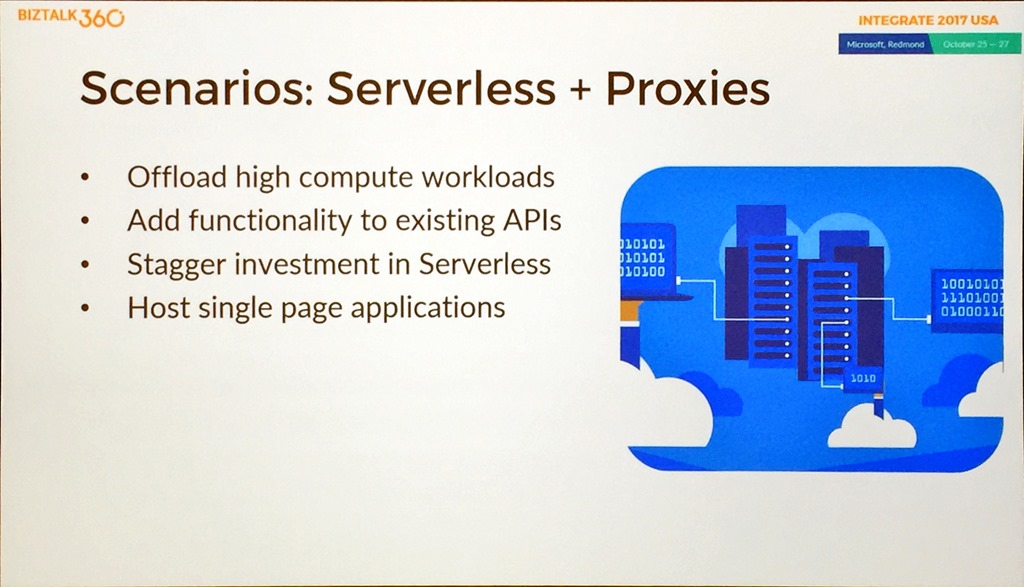

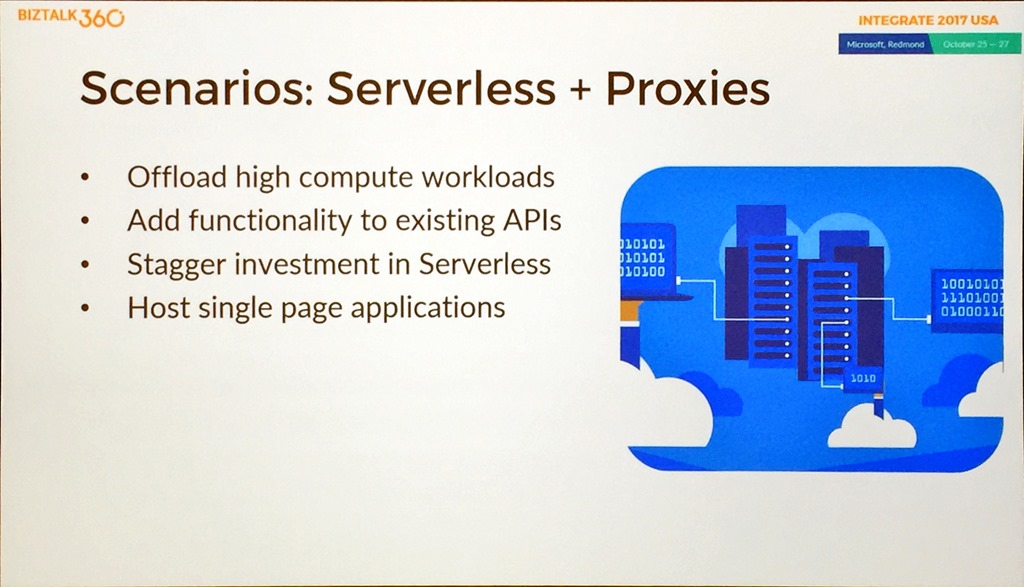

Proxies is a recently added feature that will be going GA soon, so Eduardo spent some time explaining how it works and did a great demo showing how you can use proxies for URL redirection and mocking of responses since you can specify a response payload. He then gave some scenarios where you may want to use proxies.

Like most of the presentations during the day, he finished with a list of takeaways and resources.

The final presentation of the day before Scott was delivered by Jon Fancey.

Jon Fancey – Enterprise Integration with Logic Apps

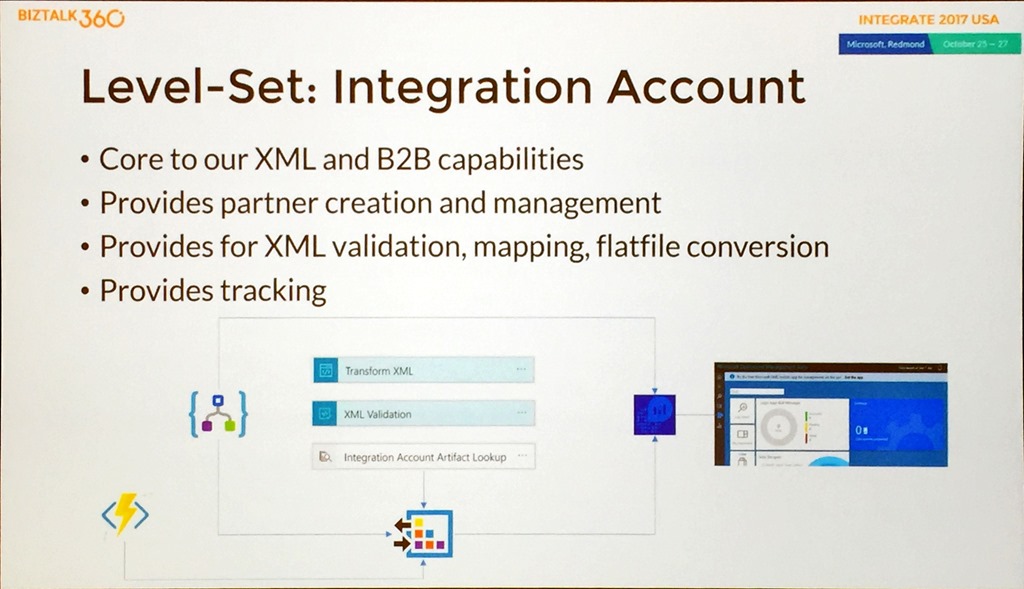

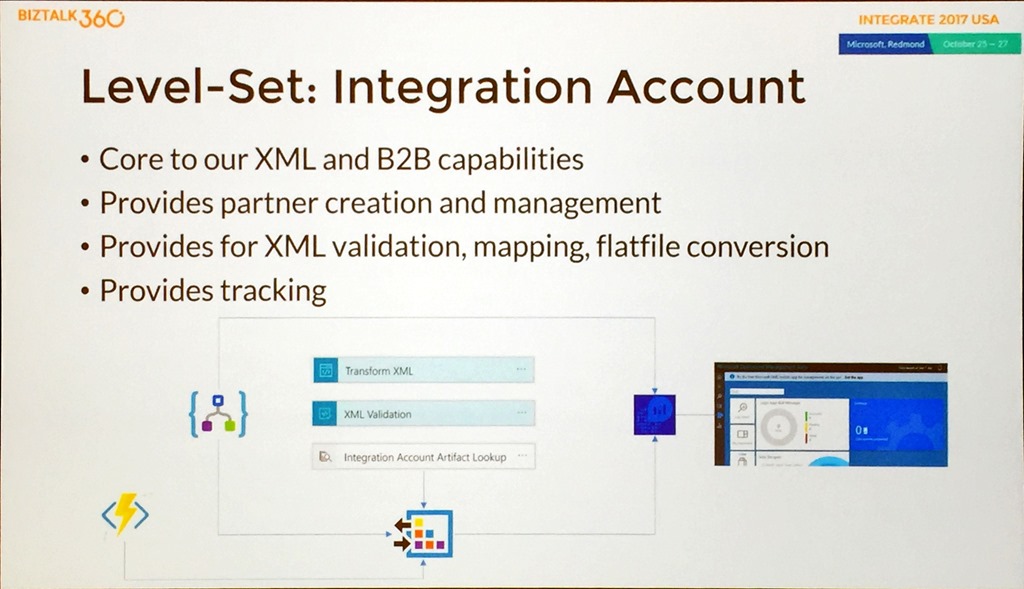

Jon started by level setting and explaining that the Integration Account in Azure is the basic unit of work for Enterprise Integration.

He explained about the XML and B2B capabilities that are provided with the Integration Account and talked about DR scenarios which are important to consider as Integration Accounts hold stateful information. DR is achieved by having a Primary and (multiple) Secondary Integration Accounts in different regions, and the service uses Logic Apps to keep Integration Account states in sync.

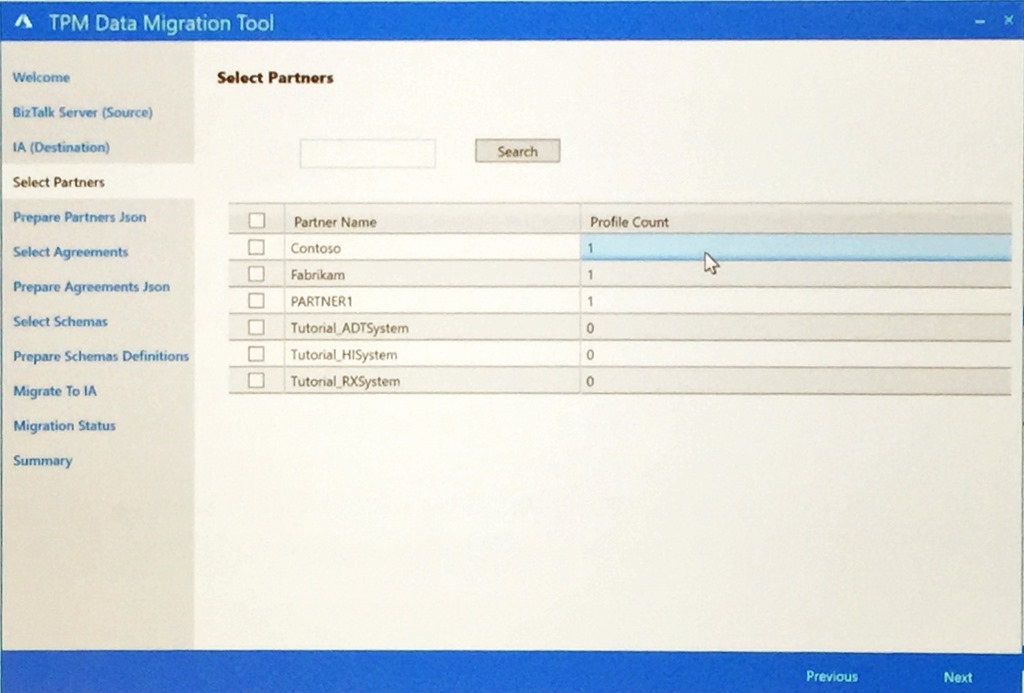

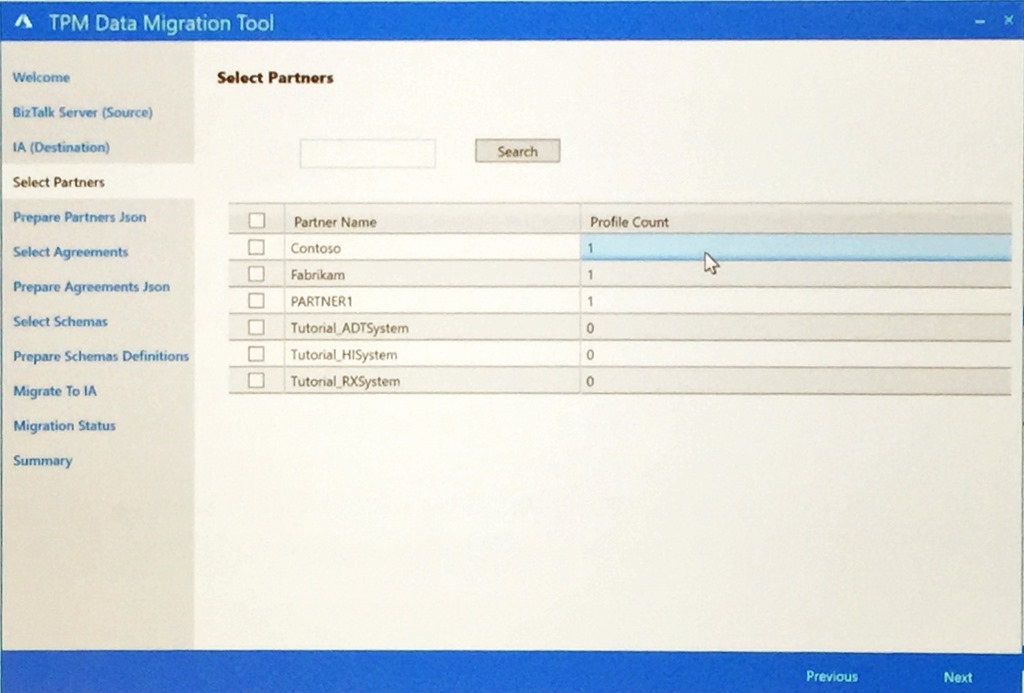

Jon moved on to trading partner migration and a tool (TPM) that has been written to allow customers to easily move trading partners and agreements between BizTalk Server and Logic Apps.

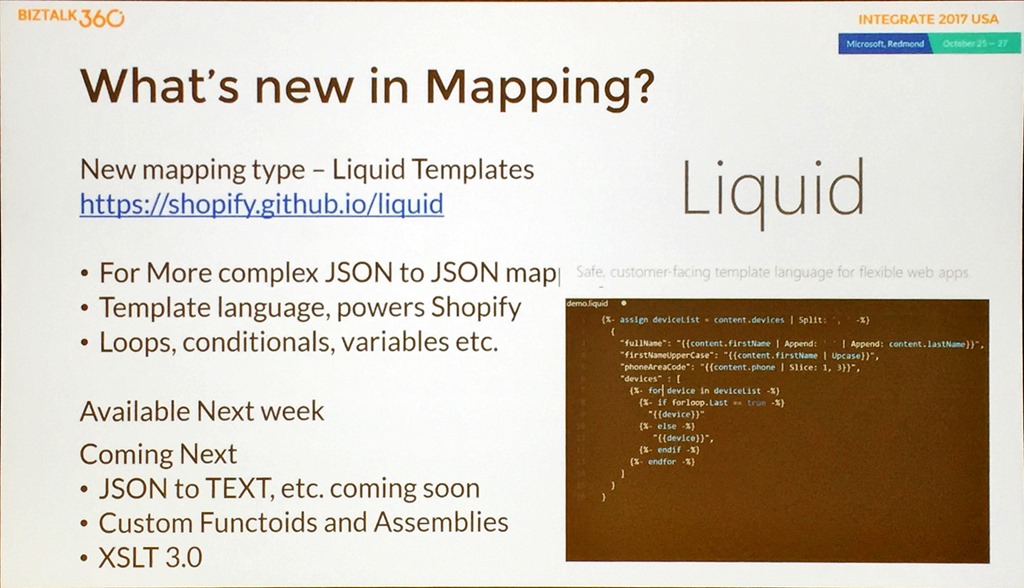

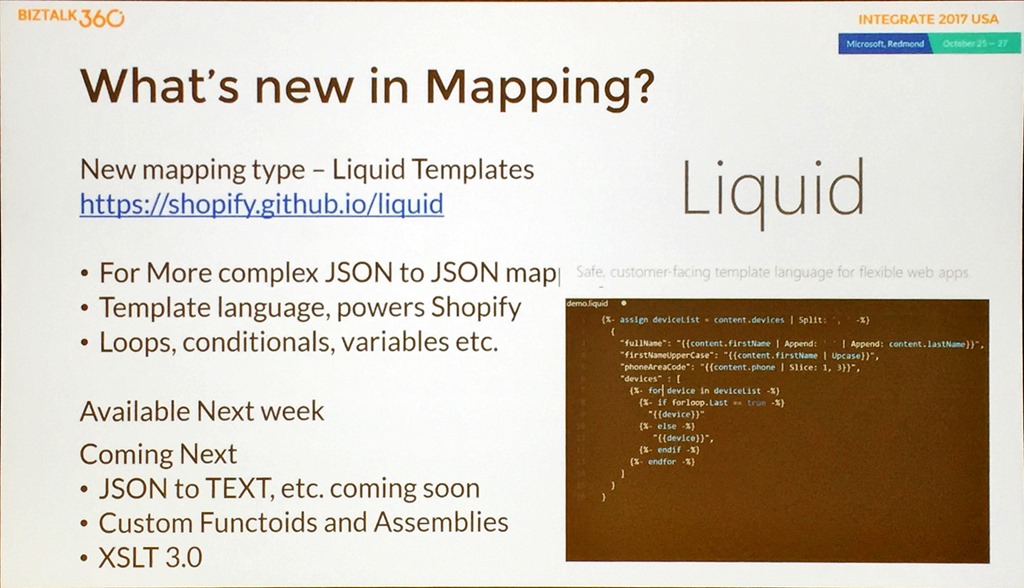

Jon gave an explanation of the traditional VETER pipeline and then moved to what is new in mapping.

With this, he introduced Liquid, which allows mapping between different entity types using a DSL, and did a demo of it using Visual Studio Code.

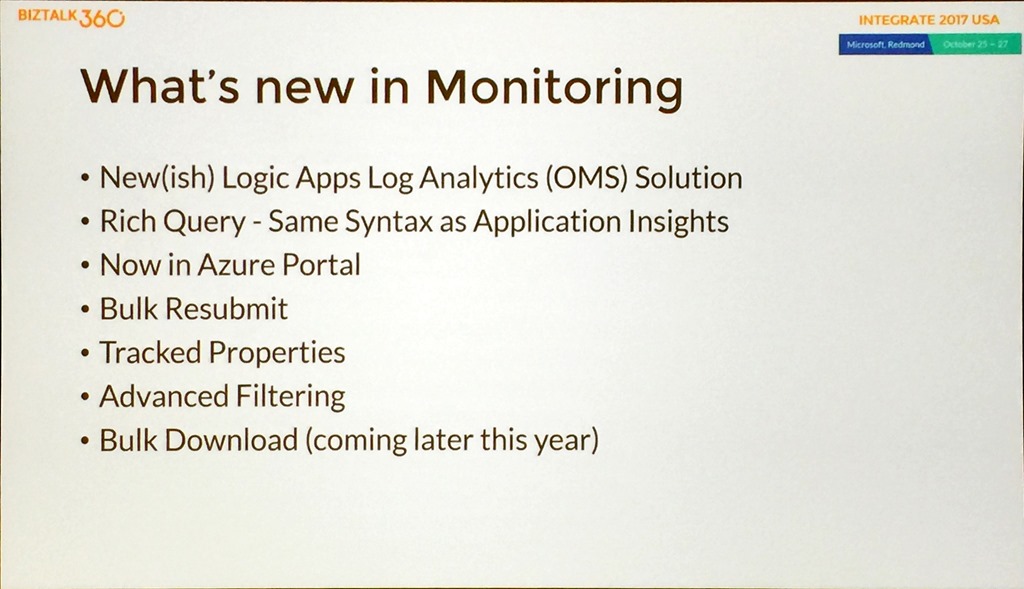

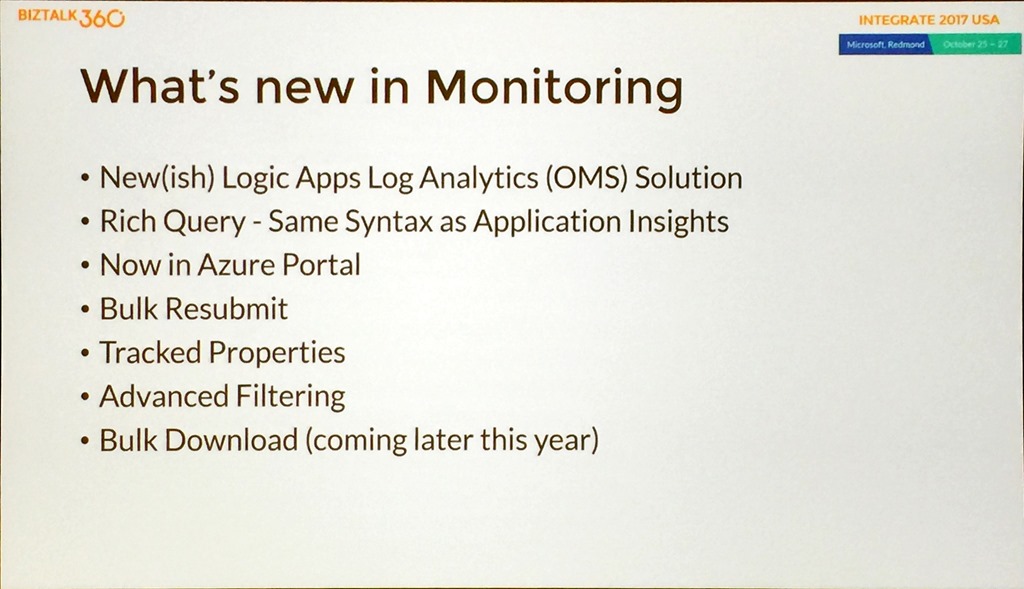

After talking about the tracking features in Logic Apps, Jon gave us a glimpse of what was coming in Monitoring.

Key takeaways from this list are the OMS template and the work around harmonizing the querying capabilities to bring them in line with AppInsights.

Jon did a demo to highlight these features showing OMS in the portal, drilling through the data, showing batch resubmit by looking at Runs and selecting, and tracked properties containing your own tracking, then showed taking a Query and creating a custom tile in the OMS workspace.

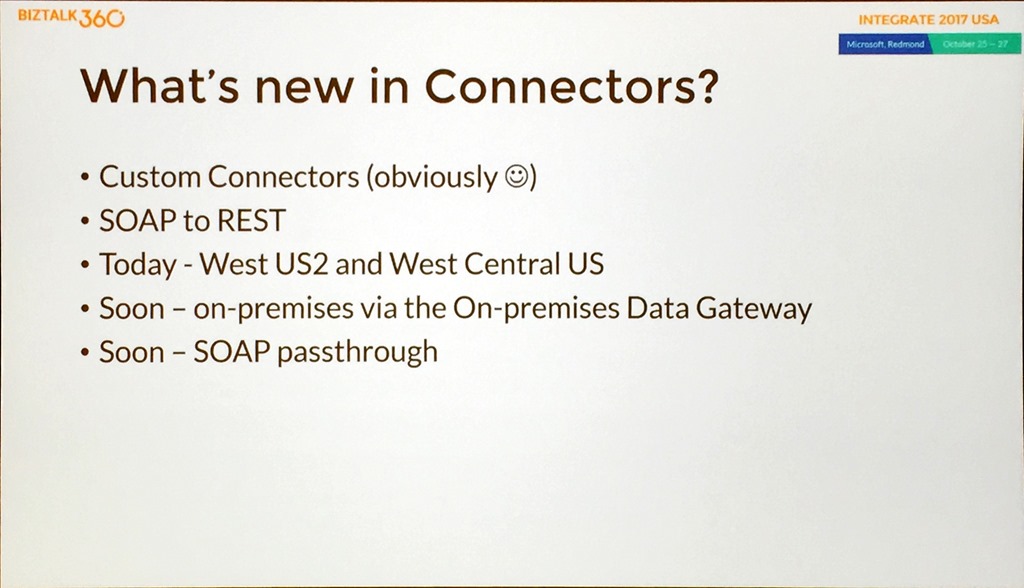

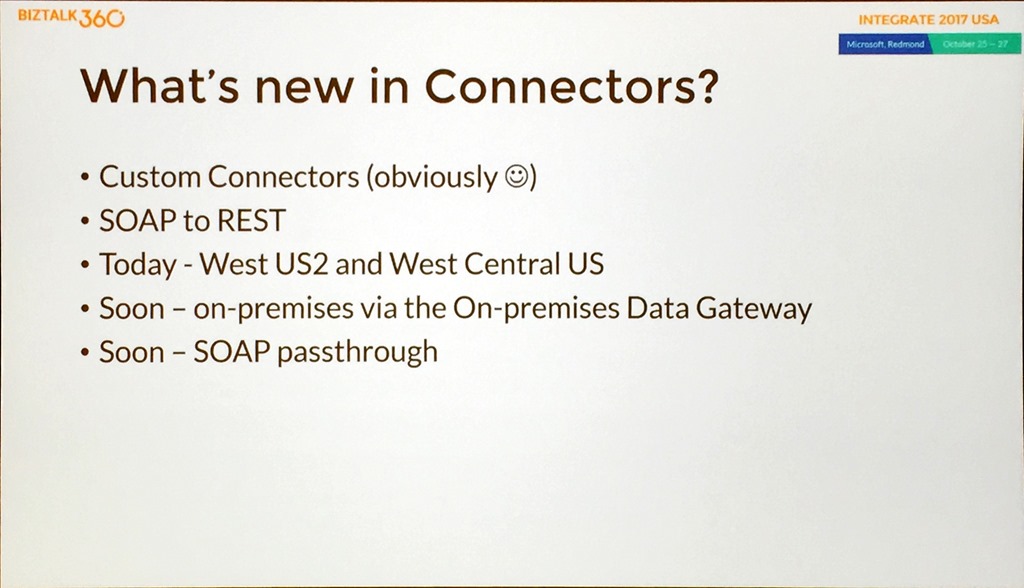

Next up for the “new” treatment was connectors.

There had already been the discussion about custom connectors earlier in the day but it was great to see SOAP to REST, which shipped the same day, to allow even more opportunities to leverage current investments.

Time for another demo, this time looking at SOAP to REST using a custom connector. This was a great demo that involved Jon changing a SOAP app on the fly, adding a new custom connector, then running the service, with a great He-Man reference, “By the Power of Grayskull”, always a bonus!

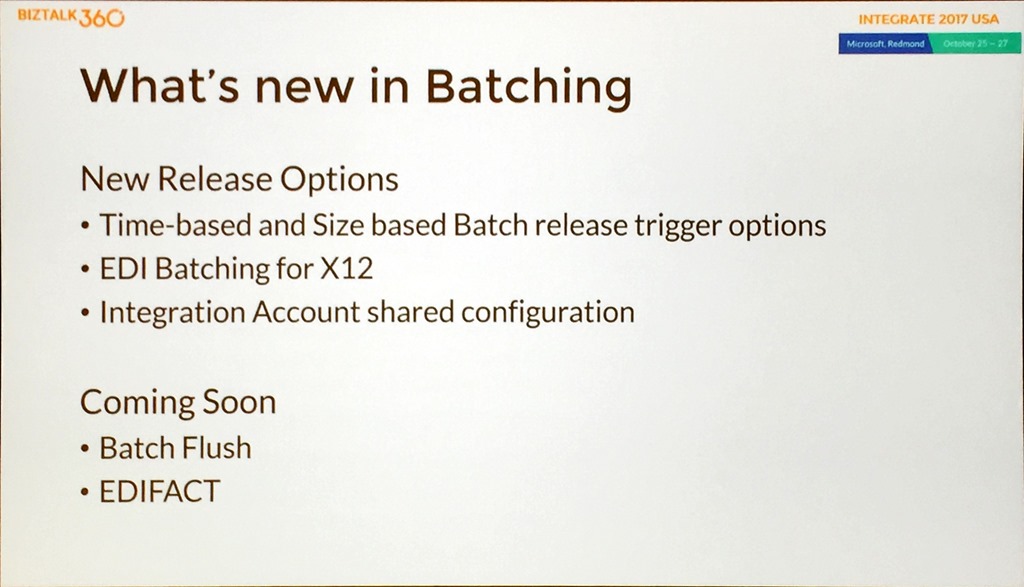

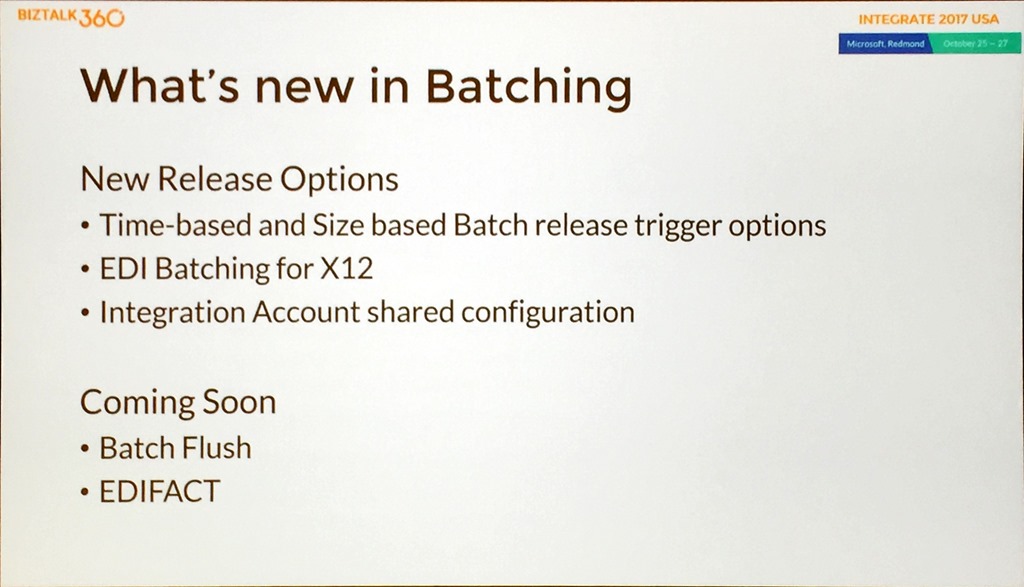

Jon talked about the new batching feature and then gave us a view of what was new and coming.

The last demo of the day showed off the batching feature before Jon did a quick recap and showed some resources.

That was the end of the first day prior to the keynote, and what a great day it was. There was plenty of information for people who had some knowledge but wanted to learn more, and the presenters were very good at gauging the level of the audience.

With great demos, great presentations and great presenters, the conference started with a real bang.

The only thing that was left after this was the man in the red polo shirt, but let’s cover that in its own post!

by Martin Abbott | Oct 25, 2017 | BizTalk Community Blogs via Syndication

After a great start and some great content on Day 1 at Integrate 2017 USA, it was time for the keynote: Jim Harrer returned to introduce the man known as ScottGu, the man in the red shirt.

Fresh off the back of his Red Shirt Tour, Scott Guthrie took to the stage to deliver a presentation to wrap up Day 1 of Integrate 2017 USA.

He started by asking the question, what are the big opportunities for integration?

He echoed Jim’s sentiment earlier in the day that integration is the glue and an essential part of any enterprise solution.

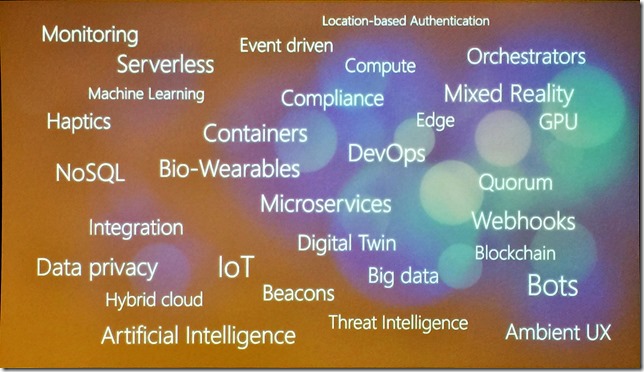

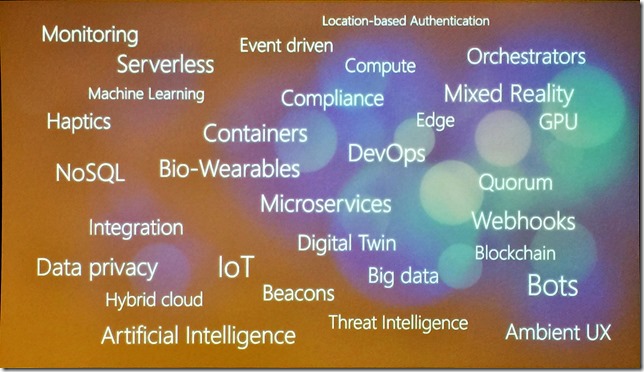

As Integrate 2017 USA is a technical conference, he wanted to show some buzzwords and terms.

Integration has a part to play everywhere; he said now is the time to build new things and new solutions.

Furthermore, he went as far as to say that integration is now transformational, creating new revenue streams and services, reinventing the way we do business, but security is critical.

We need to be using Azure to do things differently: in a productive way, a hybrid way, an intelligent way and a trusted way.

He spoke about the reach of Azure, with 42 Azure regions providing an unparalleled capability to reach a global audience for global business. A great fact: 20% of all the power used in Ireland goes to the North Europe data centre!

He showed a great video about what a data centre looks like, which gave a glimpse into just how impressive the Azure cloud is.

But Azure is also a Trusted Cloud.

Azure has more certifications than any rival cloud provider, and provides a guarantee that regional data stays in the region and fails over across paired regions. Germany and China have specific data requirements so their data has even more protections.

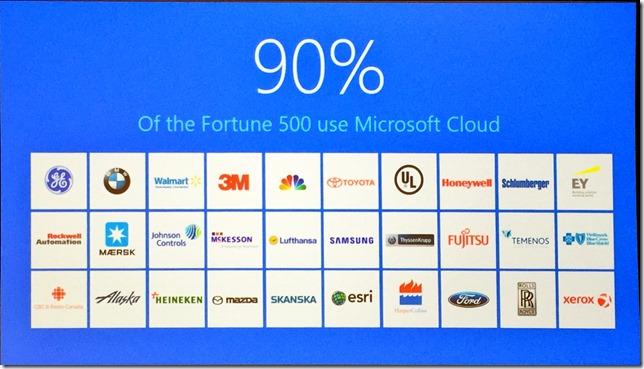

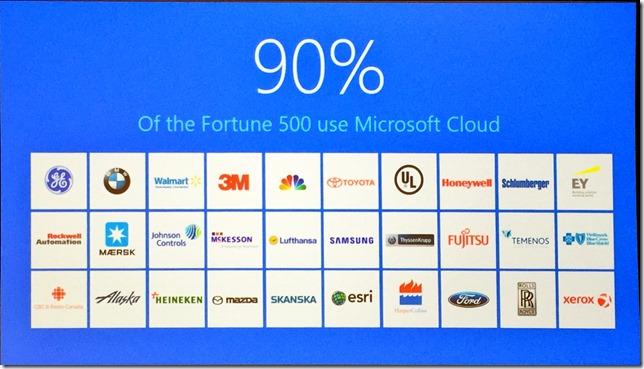

Scott then shared that 90% of Fortune 500 companies use Azure.

Time for another video, this one on customers using Azure, including ASOS, Domino's, Rockwell Automation and Geico.

Scott called out integration again as the enabler of all these scenarios, one of the most critical components of the overall stack that delivers the value.

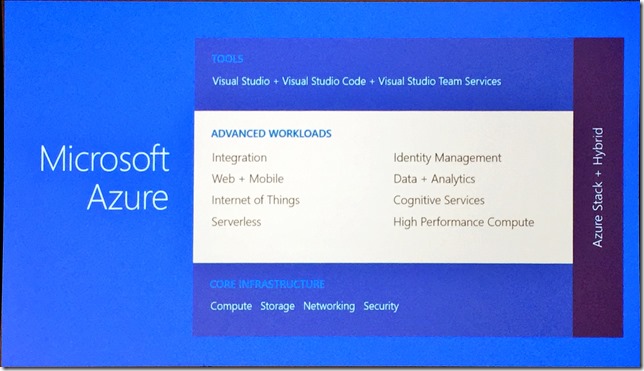

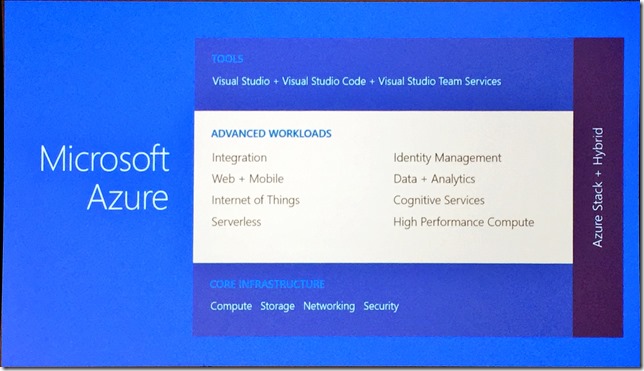

Next up is a great summary slide that shows the technology at play in Microsoft Azure across tools, advanced workloads and core infrastructure.

Integration is an important part of this, as is hybrid cloud.

Time for Scott to do some demos and showcase some integration scenarios.

The first demo was a backend solution driven by AI and data to create workflows. In this demo we saw a Twitter analysis Logic App that monitors social networks and uses Cognitive Services to detect sentiment and perform key phrase extraction. The phrases are then analysed in a Function, and rows are added to a Power BI dataset.

The final part of the puzzle is sending a message to Microsoft Teams if the sentiment score is below 0.3 and creating a case in Dynamics 365 for support follow-up.
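The routing rule in this demo is simple enough to sketch. The Python below is a minimal illustration, not the actual Logic App: it assumes each tweet already carries a sentiment score between 0 and 1 from Cognitive Services, and the `Tweet` type and `route_tweets` function are hypothetical names. Every tweet becomes a dataset row, and only scores below the 0.3 threshold are flagged for the Teams message and Dynamics 365 case.

```python
from dataclasses import dataclass

# Threshold used in the demo: scores below 0.3 count as negative.
NEGATIVE_THRESHOLD = 0.3


@dataclass
class Tweet:
    """Hypothetical shape of an analysed tweet."""
    text: str
    sentiment: float  # assumed range 0.0 (negative) to 1.0 (positive)


def route_tweets(tweets):
    """Return (dataset_rows, follow_ups) for a batch of analysed tweets."""
    dataset_rows = []
    follow_ups = []
    for tweet in tweets:
        # Every tweet is recorded for reporting (the Power BI step).
        dataset_rows.append({"text": tweet.text, "sentiment": tweet.sentiment})
        # Only clearly negative tweets need a Teams message and a support case.
        if tweet.sentiment < NEGATIVE_THRESHOLD:
            follow_ups.append(tweet)
    return dataset_rows, follow_ups


rows, follow_ups = route_tweets([
    Tweet("Loving the new Logic Apps designer", 0.92),
    Tweet("Deployment failed again", 0.08),
])
print(len(rows), [t.text for t in follow_ups])
```

In the real solution each flagged item would drive two Logic App actions (post to Teams, create a case); here they are simply collected into a list so the decision logic stays visible.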

This solution is live and in use right now: Azure Support is currently able to reach out within three and a half minutes to follow up when negative sentiment is detected.

One curiosity came up when the Microsoft Teams channel was shown: it clearly demonstrated that not everything that seems negative actually is negative!

The second demo was based on a customer visit, a fitness organisation, and was built within the customer meeting and demonstrated Azure’s ability to solve real world problems and address pain points.

The demo used a PowerApp to take a picture, then used the Face API in Cognitive Services to handle gym sign-up and check-in.

Back to the slides: integration combined with other services provides many more possibilities and is better than a pure integration play.

Another quick demo showed how to create a new database, followed by a discussion of the capabilities of SQL Database, including Point In Time recovery and recommendations for optimising and auto-tuning.

This then extended to SQL Injection: the SQL Database service has threat analytics built in and can automatically block or take other actions as required when it perceives a threat.

Scott moved on to Virtual Machines and showed how to manage them, including managing multiple computers at once. You can use Update Management (patch management) and check the patching compliance of your machines, covering Windows, Linux and non-Azure computers.

VM Inventory gives visibility into which VMs there are and their capabilities, and Change Tracking shows what has changed in files, registry settings and software. Both also support managing multiple VMs at once, making operators more efficient.

All of these demos really allowed Scott to show how rich and broad Azure's set of features is.

Back to the core message to finish, integration is at the centre for connecting things together, and we can do it productively to deliver quickly, we can do it in a hybrid way to join cloud and on-premises, we can do it intelligently using AI and Cognitive Services and we can do it in a trusted way on a cloud that is compliant and secure.

With that Scott wrapped up and the first day concluded at Integrate 2017 USA, a day full of knowledge, information and humour.

by Sandro Pereira | Oct 23, 2017 | BizTalk Community Blogs via Syndication

INTEGRATE (formerly known as BizTalk Summit) is the premier, and maybe the biggest, integration conference in the world focused on Microsoft Integration, and a must-go event for anyone working in this area. So, if you missed the chance to attend the London event this year, this is your last chance to meet and interact with the Microsoft Product Group, Microsoft Integration MVPs and almost 250 experts from the field.

Since the first BizTalk Summit event, I have been a constant presence as a speaker at these events, but unfortunately, due to several professional and personal factors, I didn't have the pleasure of attending the first INTEGRATE USA event. I'm thrilled to be part of this year's edition… not only am I presenting as a speaker, but my company DevScope is also a proud sponsor of this event!

But if all of this was not enough, I can also say that:

- I will be the only non-Microsoft employee with a dedicated session about BizTalk Server: I cannot imagine such a big event focused on the Microsoft integration space without a proper session about BizTalk Server;

- I will be responsible for closing this amazing event, so we can apply the following proverb here: “it is the cherry on top of the cake, or the best is always saved for last”.

You still have time to register for the conference here.

About my session

Session Name: BizTalk Server Fast & Loud

Session Overview: In this session, I will talk about a hardcore BizTalk topic that addresses the following question: how can you optimize/tune your BizTalk environment for performance? Optimizing your BizTalk Server installation is not an easy thing to do because it affects several layers and skills. This topic is very well documented by the product group, but the problem is that the documentation is very extensive and complex. This presentation aims to guide you through the most important steps, operations or tasks you need to perform or be aware of in order to boost the performance of your BizTalk Server environment, which you can adjust or follow according to your needs because, depending on your infrastructure, this can be a straightforward operation or a very extensive and hard one. But I will try to keep it as simple as possible so everyone can understand and follow.

Bringing gifts

As I did at the last two INTEGRATE events in London, I will bring gifts for the attendees, in this case my latest BizTalk Server sticker version: BizMan, The BizTalk Server SuperHero Sticker.

So, feel free to reach out to me and ask for a BizTalk sticker.

BizTalk Mapping Patterns and Best Practices book

Last but not least, it will also be a good opportunity for you to grab a physical copy of my book about BizTalk mapping: BizTalk Mapping Patterns and Best Practices. The book is a reference guide mainly intended for BizTalk developers to make their day-to-day lives easier. It offers insights into how maps work, the most common patterns in real-world scenarios, and the best practices for carrying out transformations, and its technical reviewers are Steef-Jan Wiggers, Nino Crudele, Michael Stephenson and José António Silva. Some of the key patterns addressed in this book are:

- Direct Translation Pattern: Simply move data to a different semantic representation without any manipulation or transformation.

- Data Translation Pattern: Similar to Direct Translation Pattern with the additional step of data manipulation or transformation to match the target system format.

- Content Enricher Pattern: Set up access to an external data source (say, a database) to enhance the message with missing information.

- Aggregator Pattern: Similar to Content Enricher Pattern but a different mapping technique. Multiple inbound requests mapped to a single outbound request.

- Content Filter Pattern: Opposite of Content Enricher Pattern; remove unnecessary items from the message (even based on a condition) and send exactly what is required.

- Splitter Pattern: Opposite of Aggregator Pattern; a single inbound request is mapped to several outbound requests.

- Grouping Pattern: For example, a shopping catalog where items are grouped into categories like Sports, Women Cosmetics, Electronics, Computers, and so on.

- Sorting Pattern: In most scenarios, Grouping Pattern and Sorting Pattern will be bound together.

- Conditional Pattern: To receive only a portion of the data from the message, apply a conditional statement to filter the result set at the source.

- Looping Pattern: For instance, a record in the source system may occur multiple times in the input file, and each occurrence needs to be transformed according to the target system.

- Canonical Data Model Pattern: Ensures loose coupling between applications; if a new application is added, only the transformation between that application and the Canonical Data Model has to be created.

- Name-Value Transformation Pattern: The target system requires a Name-Value Pair (NVP) structure, or the source system has an NVP structure and the target requires a hierarchical schema.
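In BizTalk these patterns are normally implemented in the Mapper (which compiles to XSLT), but the logic behind a few of them is easy to see in plain code. The following Python sketch is purely illustrative and not taken from the book: `group_and_sort` combines the Grouping and Sorting patterns for the shopping-catalog example, and `to_name_value_pairs`/`from_name_value_pairs` show both directions of the Name-Value Transformation Pattern. The field names (`name`, `category`, `value`) are assumptions.

```python
from collections import defaultdict


def group_and_sort(items):
    """Grouping + Sorting patterns: bucket items by category, then sort
    the categories and the item names inside each category."""
    groups = defaultdict(list)
    for item in items:
        groups[item["category"]].append(item["name"])
    # dicts keep insertion order, so building from sorted keys yields sorted categories
    return {category: sorted(names) for category, names in sorted(groups.items())}


def to_name_value_pairs(record):
    """Name-Value Transformation: flatten a record into name/value entries."""
    return [{"name": key, "value": str(value)} for key, value in record.items()]


def from_name_value_pairs(pairs):
    """Reverse direction: rebuild a keyed record from name/value entries."""
    return {pair["name"]: pair["value"] for pair in pairs}


catalog = [
    {"name": "Tennis Racket", "category": "Sports"},
    {"name": "Laptop", "category": "Computers"},
    {"name": "Football", "category": "Sports"},
]
print(group_and_sort(catalog))
print(to_name_value_pairs({"orderId": 42, "city": "Porto"}))
```

Note that converting to NVP loses type information (everything becomes a string), which is one reason the reverse mapping usually needs the target schema to restore types.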

Click here to download your free digital copy of the book.

And since I will be there, if you want, reach out to me and I will sign your copy of the book.

Author: Sandro Pereira

Sandro Pereira lives in Portugal and works as a consultant at DevScope. In recent years, he has been implementing integration scenarios, both on-premises and in the cloud, for various clients, each with different scenarios in terms of technical approach, size and criticality, using Microsoft Azure, Microsoft BizTalk Server and different technologies like AS2, EDI, RosettaNet, SAP, TIBCO etc. He is a regular blogger, international speaker, and technical reviewer of several BizTalk books, all focused on integration. He is also the author of the book “BizTalk Mapping Patterns & Best Practices”. He has been a Microsoft MVP since 2011 for his contributions to the integration community.

by Gautam | Oct 22, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date with all the frequent updates and announcements in the Microsoft Integration platform?

Integration weekly update can be your solution. It's a weekly update on topics related to Integration: enterprise integration, robust and scalable messaging capabilities, and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

On-Premise Integration:

Cloud and Hybrid Integration:

Feedback

I hope this is helpful. Please feel free to share your feedback on the Integration weekly series.

by Praveena Jayanarayanan | Oct 19, 2017 | BizTalk Community Blogs via Syndication

As the below quote says,

Quality in a service or product is not what you put into it. It is what the client or customer gets out of it – Peter Drucker

We, the product support team at BizTalk360, always put in our best efforts to solve customer problems and make sure customers are satisfied. Our team gets a wide variety of problems, related to functionality, performance and data, and we make sure each issue is resolved within proper timelines. Recently, a customer was facing an exception in Data Monitoring. In this blog, I am going to share my experience of how we resolved this problem.

The Message Box Data Monitoring:

How many times a day does a support person have to watch for suspended instances in a particular application and take appropriate action, or look out for ESB exceptions with a particular fault code? Wouldn't it be nice if there was a way to set up monitoring on a particular data filter, get notified when there is a violation, and take actions automatically depending on the actual situation? Yes, that's exactly what BizTalk360 achieves through the concept of data monitoring, which was a result of customer feedback.

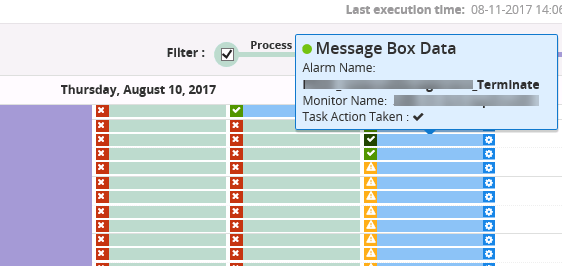

In MessageBox Data Monitoring, we can identify the number of suspended instances and the messages flowing through the system, and take appropriate action: suspend, resume or terminate the instances. This can be done from BizTalk360 itself, provided the user has the appropriate permissions. In my previous blog, I explained the permissions a user needs to resume/suspend/terminate service instances. Let's look into the customer's case.
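Conceptually, each data monitoring run applies a filter, compares the result against a threshold and, on a violation, takes the configured action. The Python sketch below models that loop under stated assumptions: `ServiceInstance`, `DataMonitorRule` and `evaluate` are hypothetical names, not BizTalk360's configuration model or API, and the action is only planned and returned rather than executed.

```python
from dataclasses import dataclass


@dataclass
class ServiceInstance:
    """Hypothetical view of a MessageBox service instance."""
    instance_id: str
    application: str
    status: str  # e.g. "Suspended" or "Active"


@dataclass
class DataMonitorRule:
    """Hypothetical rule: filter + threshold + action (not BizTalk360's schema)."""
    application: str
    status: str
    max_allowed: int
    action: str  # "resume", "suspend" or "terminate"


def evaluate(rule, instances):
    """Return (violated, planned_actions) for one monitoring run."""
    matches = [
        i for i in instances
        if i.application == rule.application and i.status == rule.status
    ]
    violated = len(matches) > rule.max_allowed
    # Only plan actions when the threshold is breached; executing them
    # (and checking the user's permissions) is outside this sketch.
    planned = [(rule.action, i.instance_id) for i in matches] if violated else []
    return violated, planned


instances = [
    ServiceInstance("a1", "Orders", "Suspended"),
    ServiceInstance("a2", "Orders", "Suspended"),
    ServiceInstance("b1", "Invoices", "Suspended"),
]
rule = DataMonitorRule("Orders", "Suspended", max_allowed=1, action="terminate")
print(evaluate(rule, instances))
```

Keeping evaluation separate from execution makes it easy to record what was planned versus what actually succeeded, which is exactly the kind of result the customer's dashboard was reporting.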

Customer’s case:

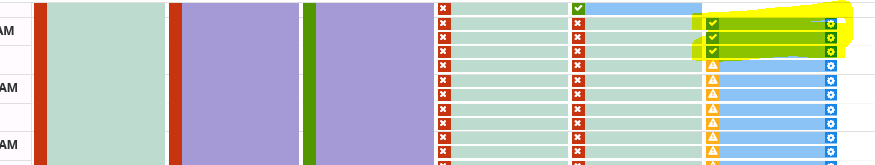

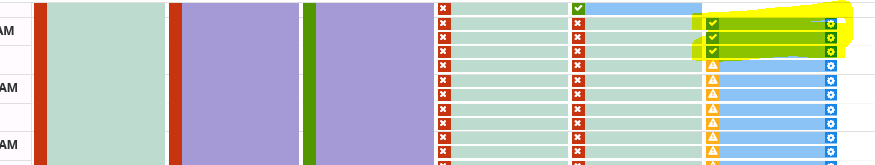

The customer raised a support ticket because, although they had configured the auto-termination action for suspended instances, the messages were neither getting archived nor terminated, while the Data Monitoring dashboard still showed the details of a successful run.

Our investigation starts:

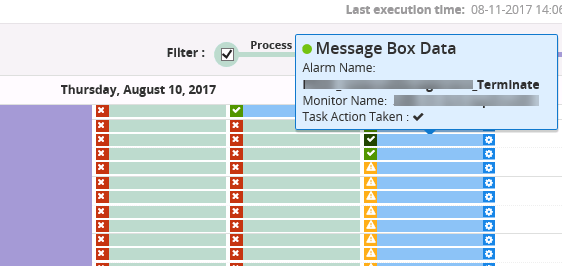

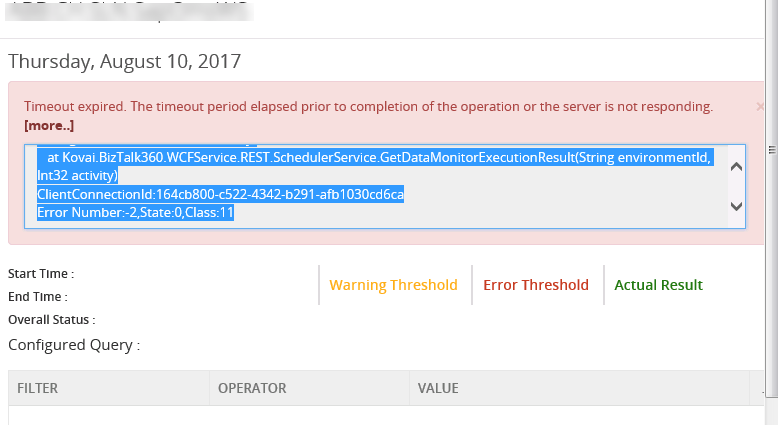

For a support ticket related to the Data Monitoring section, the first things we check are the alarm configuration details and then the logs for exceptions. So, we started the investigation by checking the alarm details, and they seemed to be fine. However, when we checked the details of the task action in the Data Monitoring dashboard, we found that an exception had been captured there.

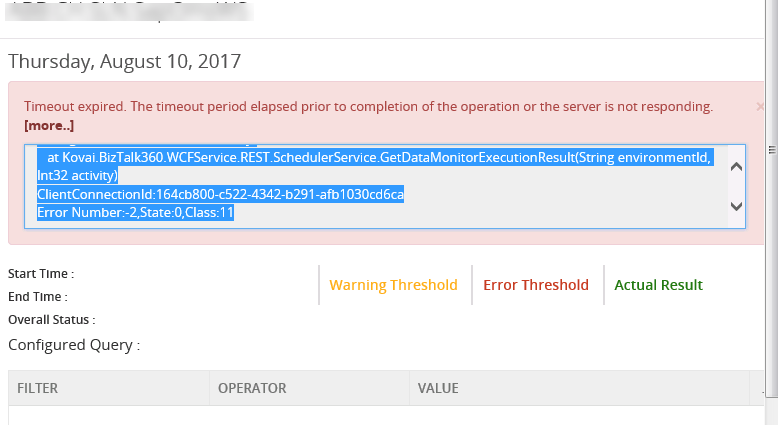

System.Data.SqlClient.SqlException (0x80131904): Timeout expired. The timeout period elapsed prior to completion of the operation or the server is not responding. ---> System.ComponentModel.Win32Exception (0x80004005): The wait operation timed out at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

The real cause for the Timeout exception:

The customer also told us that they were not able to delete the existing MessageBox data alert from BizTalk360, even though the BizTalk360 service account was a superuser. The same timeout exception was displayed while deleting the alarm. Meanwhile, the suspended instances could be terminated from the BizTalk Administration Console without any issues. Since this was an issue in the production environment, we immediately got on a call with the customer to probe further.

There were four BizTalk360 servers configured in High Availability mode in the production environment: three passive and one active. We checked the status of the monitoring sub-services and found that the status of the Purging sub-service was not getting updated properly.

A timeout exception usually happens when there is a large volume of data. All the permissions and configurations checked out fine, so the next step was to check the data in the BizTalk360 database. But where did the large volume of data come from?

BizTalk360 communication with other BizTalk databases:

It’s a well-known fact that BizTalk360 is a one-stop solution for monitoring BizTalk Server. To monitor the BizTalk artefacts and the messages flowing through the receive and send ports, BizTalk360 polls data from the BizTalk databases, namely BizTalkDTADb and BizTalkMsgBoxDb, and inserts the required data into the BizTalk360 database according to the alarm configurations. For MessageBox Data Monitoring, the data is fetched from BizTalkMsgBoxDb. If any action (resume/terminate) is configured for the suspended instances in Data Monitoring, the data is inserted into the following tables in the BizTalk360 database:

- b360_st_DataMonitorResults

- b360_st_DataMonitorTaskActionResults

When we checked the number of records in the b360_st_DataMonitorTaskActionResults table, the SELECT query just kept spinning and took a long time to return results, because there were 8 million records in that table. This was clearly the cause of the timeout exception in BizTalk360.

Purging in BizTalk360:

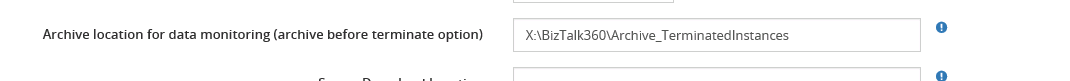

BizTalk360 comes out of the box with the ability to set a purging duration, and the background monitoring service automatically purges data older than the specified period. Administrators/Superusers can set up the “Purge duration” under “Settings”. This controls database growth so that the performance of BizTalk360 is not affected. The default purging settings in BizTalk360 can be seen in the screenshot below.
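A common way to purge a large table is in small batches, so that no single delete runs long enough to hit a timeout like the one above. The Python sketch below shows age-based batched purging under loud assumptions: it uses `sqlite3` only so it runs standalone (BizTalk360 itself uses SQL Server), the `task_action_results` table and its `created_at` column are hypothetical, and this is not how BizTalk360's monitoring service actually purges data.

```python
import sqlite3
from datetime import datetime, timedelta


def purge_older_than(conn, table, days, batch_size=1000, now=None):
    """Delete rows whose created_at is older than `days`, one batch at a time.

    `table` is interpolated into the SQL, so it must be a trusted name.
    created_at is assumed to hold ISO-8601 text, which sorts chronologically.
    """
    now = now or datetime.now()
    cutoff = (now - timedelta(days=days)).isoformat()
    total = 0
    while True:
        cur = conn.execute(
            f"DELETE FROM {table} WHERE rowid IN "
            f"(SELECT rowid FROM {table} WHERE created_at < ? LIMIT ?)",
            (cutoff, batch_size),
        )
        conn.commit()  # short transactions keep locks brief
        total += cur.rowcount
        if cur.rowcount < batch_size:  # last (partial or empty) batch
            return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE task_action_results (id INTEGER, created_at TEXT)")
old = [(i, "2017-01-01T00:00:00") for i in range(2500)]
recent = [(i, "2017-10-18T00:00:00") for i in range(10)]
conn.executemany("INSERT INTO task_action_results VALUES (?, ?)", old + recent)
deleted = purge_older_than(conn, "task_action_results", days=30, now=datetime(2017, 10, 19))
print(deleted)
```

On SQL Server the same idea is commonly written as a `DELETE TOP (n)` loop; the batch size trades the number of round-trips against how long each statement holds its locks.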