by Gautam | Jun 30, 2017 | BizTalk Community Blogs via Syndication

I am proud to be part of Synegrate, which is now certified as a Gold Partner for Microsoft’s Azure Cloud Platform. Synegrate was already a Managed Microsoft Gold Certified Partner in Application Integration and a Microsoft Silver Certified Partner in Cloud Competency.

Today Synegrate achieved the Microsoft Gold Cloud Platform competency, demonstrating best-in-class ability and competence on the Azure platform.

The Cloud Platform competency is designed for partners to capitalize on the growing demand for infrastructure and software as a service (SaaS) solutions built on Microsoft Azure.

This is the highest attainable partnership level and is earned after achieving the defined competency requirements.

To earn a Microsoft gold competency, partners must successfully complete exams (resulting in Microsoft Certified Professionals) to prove their level of technology expertise, and then designate these certified professionals uniquely to one Microsoft competency, ensuring a certain level of staffing capacity. They must also submit customer references that demonstrate successful projects, meet a performance (revenue and/or consumption/usage) commitment, and pass technology and/or sales assessments.

Achieving the Microsoft Gold Cloud Platform competency showcases Synegrate’s expertise in and commitment to today’s cloud technology market and demonstrates deep knowledge of Microsoft’s Cloud Platform.

Synegrate has proven to be a reliable partner for customers globally. Over the years the company has successfully assisted customers in various Microsoft solution-based endeavors that created value propositions ranging from reduced cost or complexity to increased availability and security. Here are some stories and examples of our loyal customers.

About Synegrate

Synegrate’s core focus is data. We have data coursing through our veins; it is in our DNA. We specialize in the storing, integration, dissemination, visualization and analytics of data. We create modern data driven applications, BPM (Business Process Management) processes to orchestrate data and dashboards for data analysis.

Synegrate is a 100% Microsoft focused company that is fully committed to the Microsoft Azure cloud services and solutions.

We utilize the platforms, products and tools provided by Microsoft, to provide our customers with innovation, analytics and insight. We’re a front runner in helping our customers realize their future state architectures on the Microsoft Azure cloud.

We have our head office in California and development centers in different regions, allowing us to service the US from coast to coast.

by michaelstephensonuk | Jun 29, 2017 | BizTalk Community Blogs via Syndication

When Logic Apps was first announced at the Integrate summit in Seattle a few years ago, one of my first comments was that I felt this could be an integration game changer for small business in due course. The reason I said this is that if you look across the vendor estate for integration products, you have 2 main areas. The first is the traditional integration broker and ESB type products such as BizTalk, Oracle Fusion, WebSphere and others. The 2nd area is the newer generation of iPaaS such as MuleSoft, Dell Boomi, etc. While the technicalities of how they solve problems have changed and their deployment models are different, they all have 1 key thing in common. They all view integration as a high value premium thing that customers will pay a lot of money to do well.

This has always ruled them out of the equation for small business and meant that over the years many SME companies have typically implemented integration solutions with custom code from their small development teams. This was often their only choice, and it made things difficult because as they grew they would reach a certain size and find that their integration estate was a mess of custom scripts, components and other things.

What excites me with Logic Apps is that Microsoft have viewed the cost model in a different way. While it is possible to spend a lot of money on some premium features, it is also possible to create complex integration solutions that have zero up front cost and a running cost of less than a cup of coffee. This mindset that integration is a commodity, not a premium service, can be put forward by Microsoft because they have a wide cloud platform, and offering low cost integration will increase the compute usage by customers across their cloud platform. For vendors other than the big cloud players such as AWS and Google, it’s much harder to think of integration in this way because the vendor doesn’t have the other cloud features to offer. Likewise AWS and Google, who do have the platform play, don’t have any integration heritage so this puts

Outside of the integration companies, small business has looked at products like Zapier and IFTTT for a few years but these products can only go so far in terms of complexity of processes you want to implement.

Microsoft is in a unique position where they have an integration offering with something for everyone, from the biggest enterprise right down the scale to a one man band.

In the Microsoft world, if you’re a small company the likelihood is you’re using Office 365. There are some great features available on that platform, and for my own small business I’ve been an Office 365 user for years. One example of how I use it is for my accounts and business finance. While I use it a lot, I do have one legacy solution in place from my pre-Office 365 days. I had a Microsoft Access database, for which I wrote a small console app that would load transactions from my bank’s CSV files into it so I could process them and keep my accounts up to date. I’ve hated this Access solution for years but it did the job.

I have decided that now is a good opportunity to migrate this to Office 365 along with the rest of my accounts and finance info, and this will let me get rid of the console app.

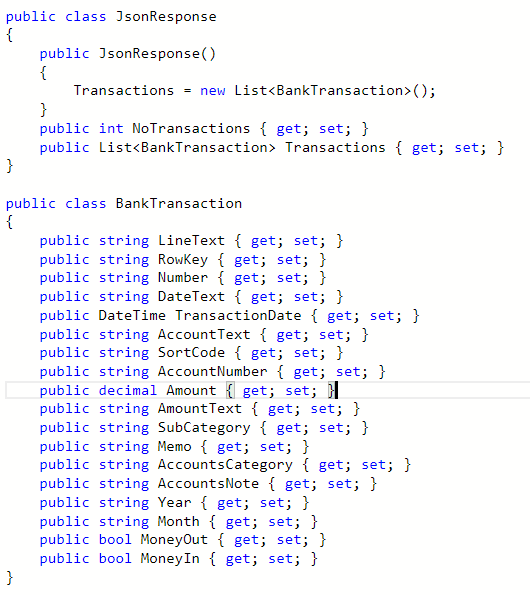

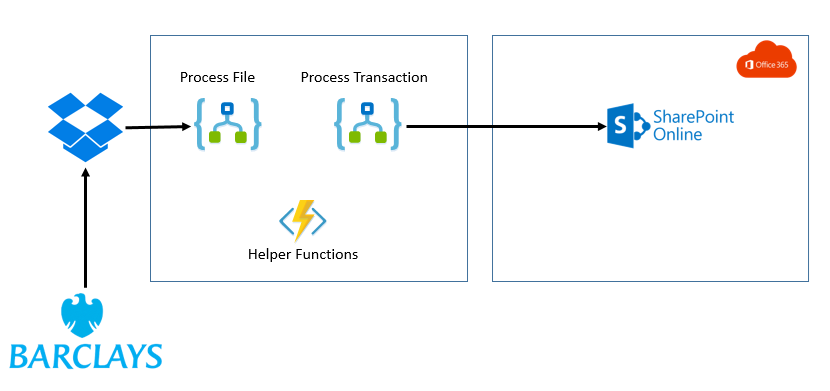

The plan for this new interface was to use Logic Apps to pick up the CSV file I can download from my bank, load the transactions into an Office 365 SharePoint list, and then copy the file into a SharePoint document library for backup.

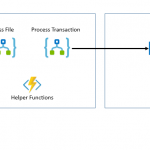

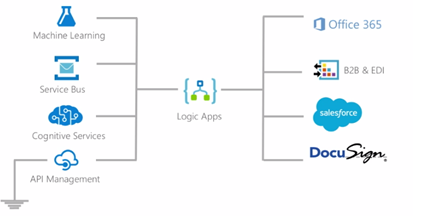

At a high level the architecture will look like the below picture.

While this integration may seem relatively straightforward there were a few hoops to jump through so I thought it might be interesting to share the journey.

Issues with Barclays File

First off, the thing to think about would be the Barclays file. I will always have to download this manually (it would be nice if I could get them to deliver it to me monthly). The file was a pretty typical CSV file but there are a few things to consider.

First, there is no unique id for each transaction!! – I found this very strange, but the problem it causes is that each time I download the file the same transaction may be in multiple files. A file would typically have around 3 months of data in it. This means I need to check for transactions which have already been processed.

2nd, there is a number field in the file but this is not populated, so I’ll ignore this for now.

3rd, and most awkwardly, it’s possible to have 2 or more rows in the file which are exactly the same but refer to different transactions. This happens if you pay the same place 2 or more times with the same amount on the same day. I’d have to figure out how to handle this.

Logic App – Load Bank Transactions

I was going to implement the solution with 2 Logic Apps. The first one collects the file, processes it and loops over each record, but each record is processed individually by a separate Logic App. I like this separation as it makes it easier to test the Logic Apps.

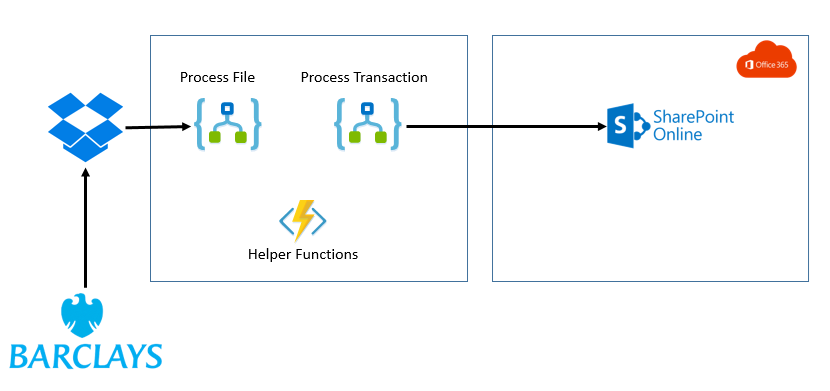

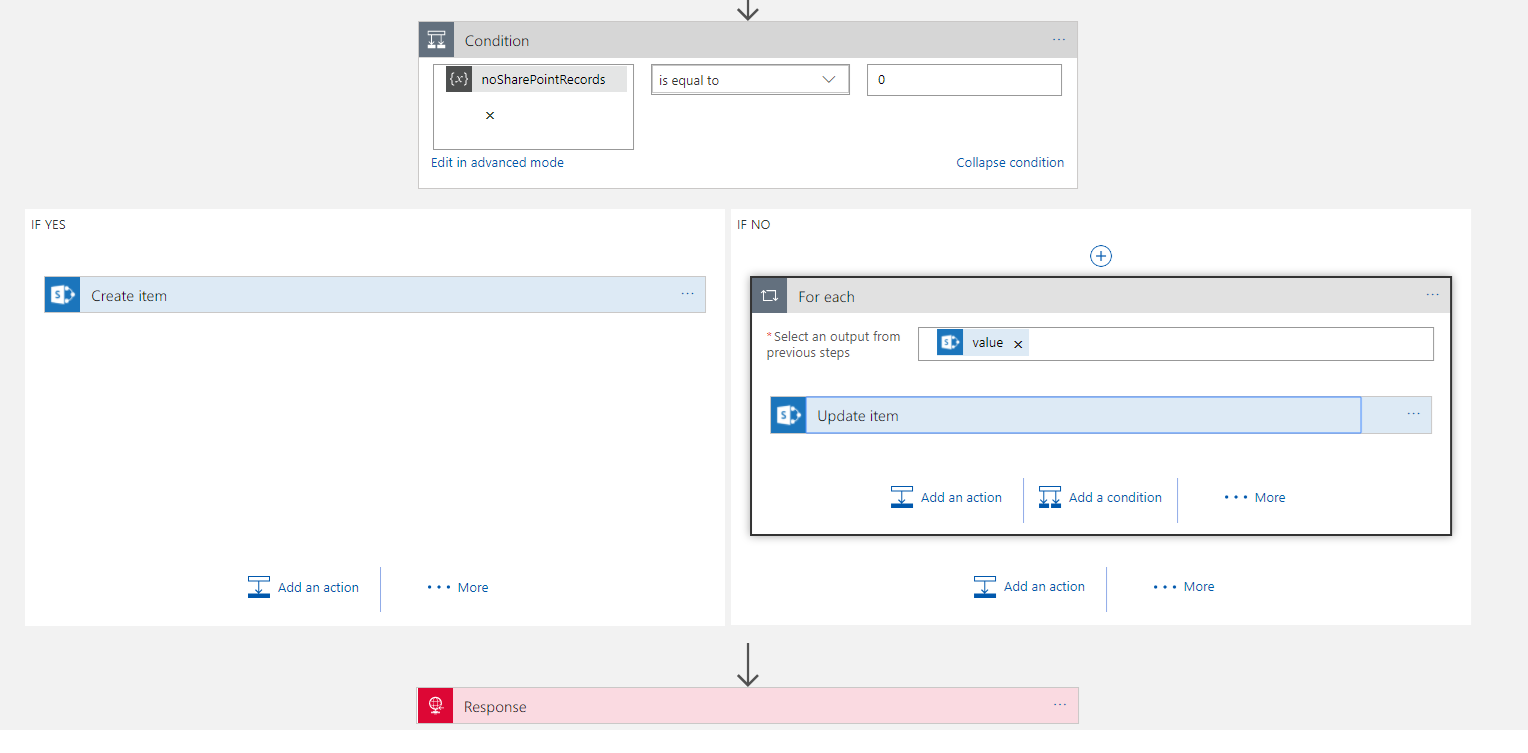

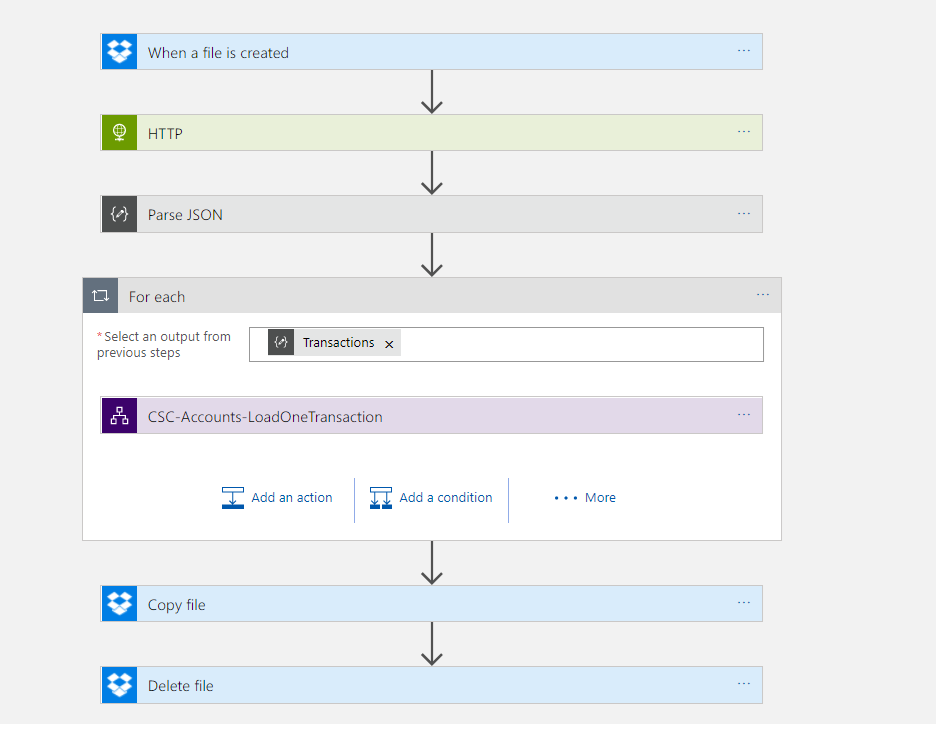

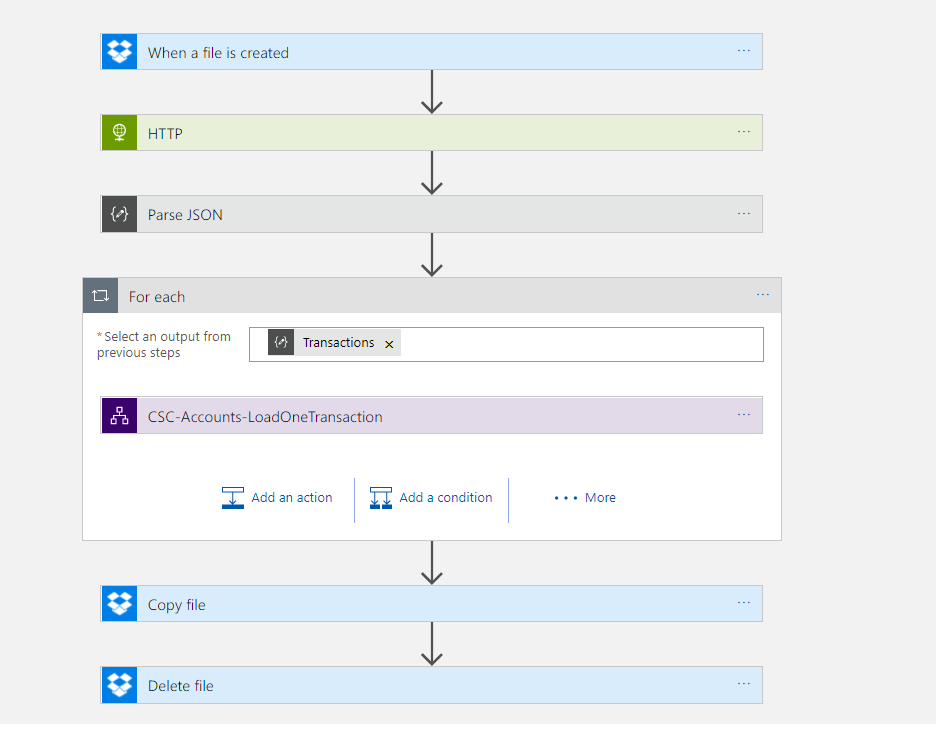

Before I get into the details of the logic apps, the below picture shows what the logic app looks like.

The main bit that is interesting in this Logic App is the parsing of the CSV file. With Logic Apps, if you have plenty of money to spend you can get an Enterprise Integration Account which includes flat file parsing capability. Unfortunately I am a small business so I can’t justify this cost. Instead I took advantage of Azure Functions. In the Logic App I pass the file content to a function, and in the function I process each line from the file and create an object model which is returned as JSON, which makes the rest of the processing much easier.

In the function it was easy for me to use some .net code to do a bit of logic on the data and also to do things like trying to identify the type of transaction.
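The parsing step described above can be sketched roughly as follows. This is only an illustration in Python, not the .NET code used in the actual function. The column names (`Number`, `Date`, `Account`, `Amount`, `Subcategory`, `Memo`), the output field names and the `guess_transaction_type` rule are all assumptions made for the example; the real bank export and the real classification logic may differ.

```python
import csv
import io
import json

def guess_transaction_type(amount: float) -> str:
    """Hypothetical classification rule, purely for illustration."""
    return "Credit" if amount >= 0 else "Debit"

def parse_bank_csv(csv_text: str) -> str:
    """Convert CSV text (with a header row) into a JSON document
    that wraps a list of transaction objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    transactions = []
    for row in reader:
        amount = float(row["Amount"])  # assumed column name
        transactions.append({
            "date": row["Date"],
            "account": row["Account"],
            "amount": amount,
            "memo": row["Memo"],
            "type": guess_transaction_type(amount),
        })
    return json.dumps({"transactions": transactions})
```

The Logic App would then receive a single JSON object with a `transactions` array that it can loop over, instead of having to split and interpret raw CSV lines itself.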

The big positive is that by using the consumption plan for Functions, the cost is again very, very cheap.

One interesting thing to note was that I had some encoding issues calling the function from the Logic App using the CSV data. I didn’t really get to work out the root cause of this, as I could call it fine in Postman, but I think it’s something about how the Logic App and its function connector encapsulate the call to the function. Fortunately it was really easy to work around this because I could just call the function with the HTTP connector instead!

The only other interesting point is I made the loop sequential just to keep processing simple so I didn’t have to worry about concurrency issues.

Azure Function Parse Bank Data

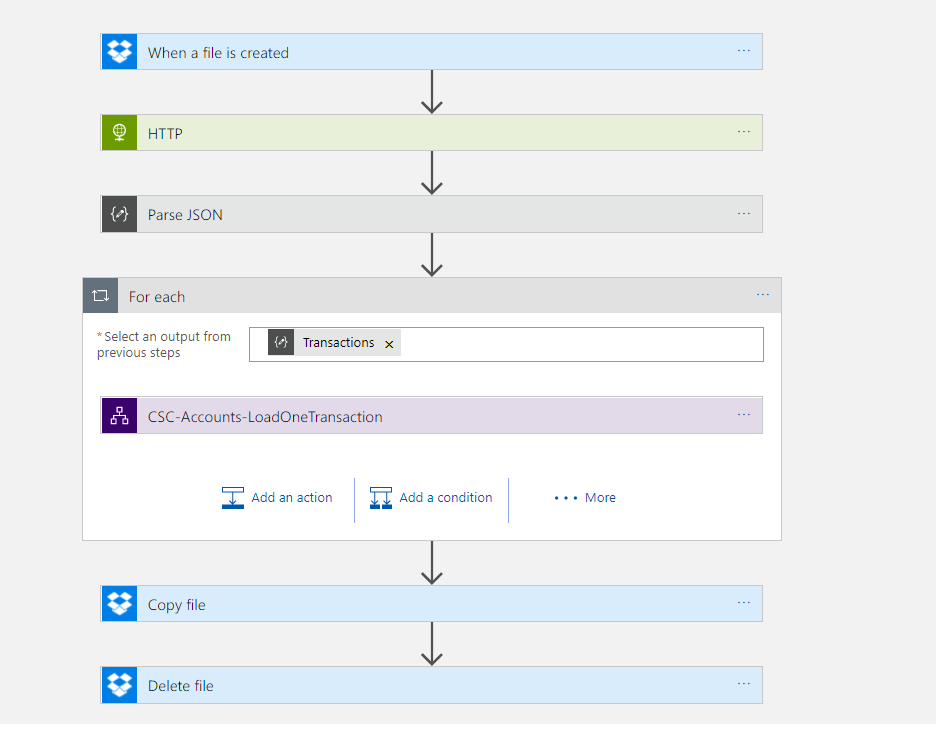

In the Azure Function I chose to tackle the problem of the duplicate-looking transactions. The main job of the function was to convert the CSV data to JSON, but I did a little extra processing to simplify things. One feature I implemented was to add a row key field. This would be used to populate the Title field in the SharePoint list. This means I’d have a unique key to look up any existing records to update.

When calculating the row key I basically used the text from the entire row, which in most cases was unique. As I processed records I checked if there was a transaction which already had that key. If there was, I would add a counter to the end of it, so if there were 3 rows with the same row key I’d add -2 and -3 to the 2nd and 3rd instances of the row to make them unique.

This isn’t the nicest solution in the world but it does work and gets us past the limitation from the Barclays data.
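The row key approach described above can be sketched as below. This is a Python illustration, not the original .NET code; the function name `assign_row_keys` and the choice to use the stripped raw line as the base key are assumptions made for the example.

```python
from collections import Counter

def assign_row_keys(rows):
    """Return (row_key, raw_row) pairs.

    The base key is the full text of the row. The 2nd, 3rd, ...
    occurrences of an identical row get "-2", "-3", ... appended
    so that every key in the file is unique.
    """
    seen = Counter()
    keyed = []
    for raw in rows:
        base = raw.strip()
        seen[base] += 1
        occurrence = seen[base]
        key = base if occurrence == 1 else f"{base}-{occurrence}"
        keyed.append((key, raw))
    return keyed
```

Because the suffix depends only on how many identical rows came earlier in the same file, the same transaction should receive the same key on a later download, provided the bank exports identical rows together and with unchanged text.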

Response Object

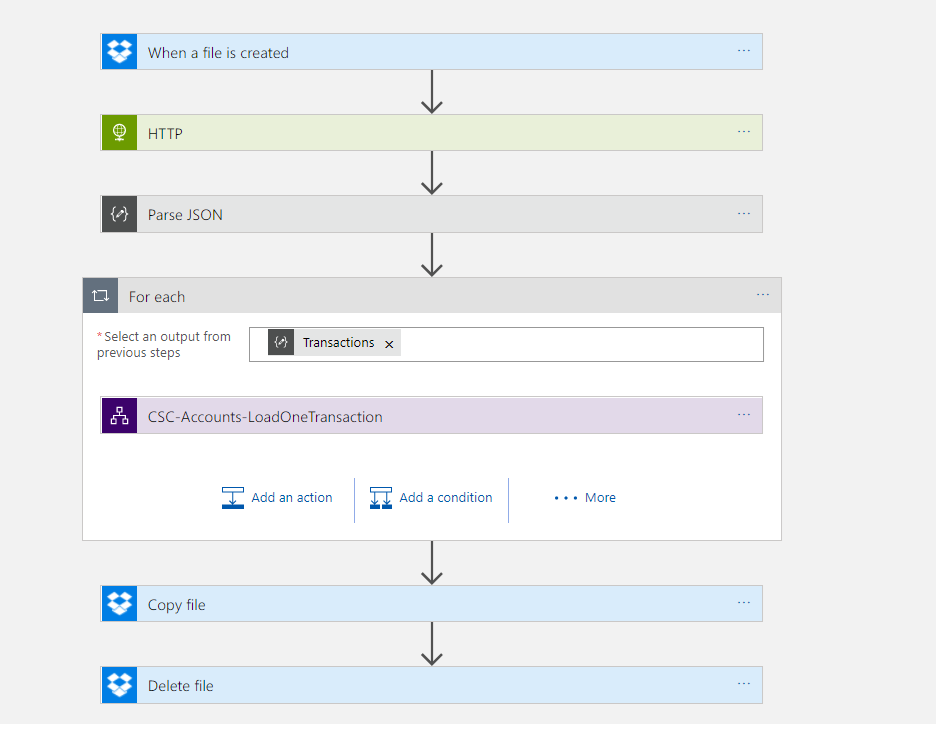

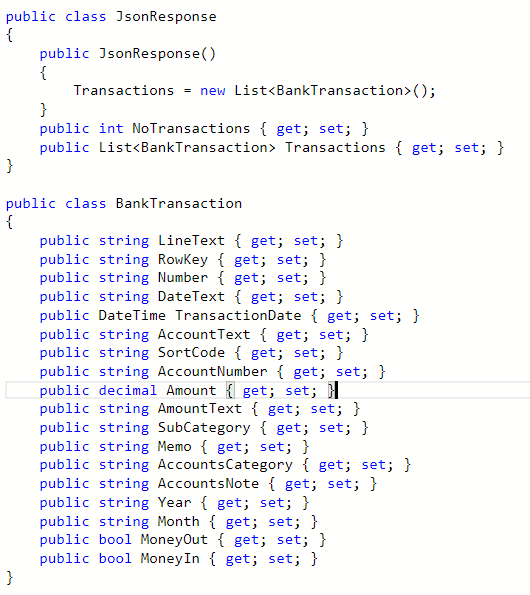

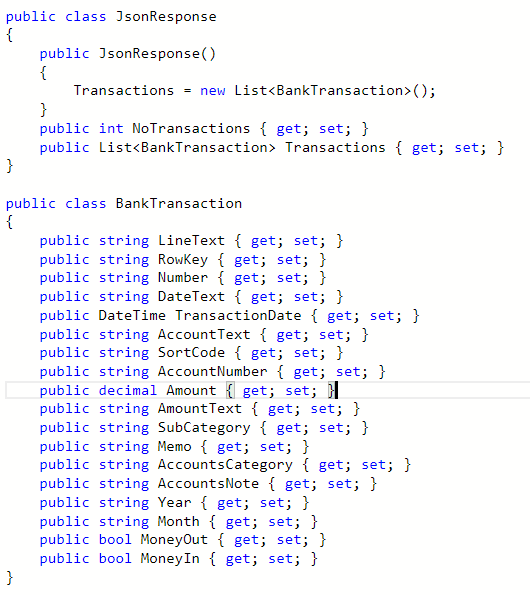

Below is a picture of the response object returned from the function, so you can see it’s just an object wrapping a list of transactions.

Logic App – Load Single Transaction

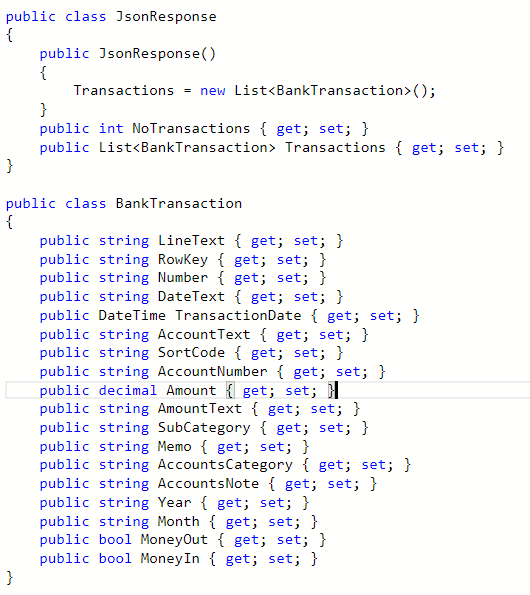

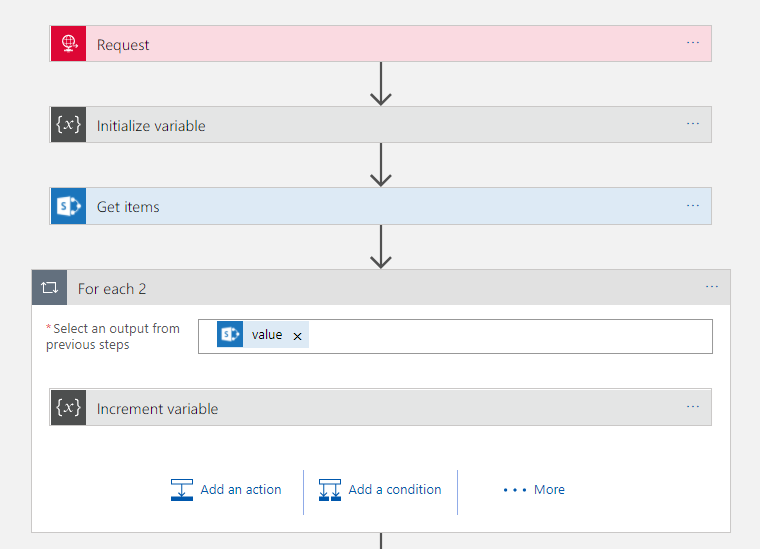

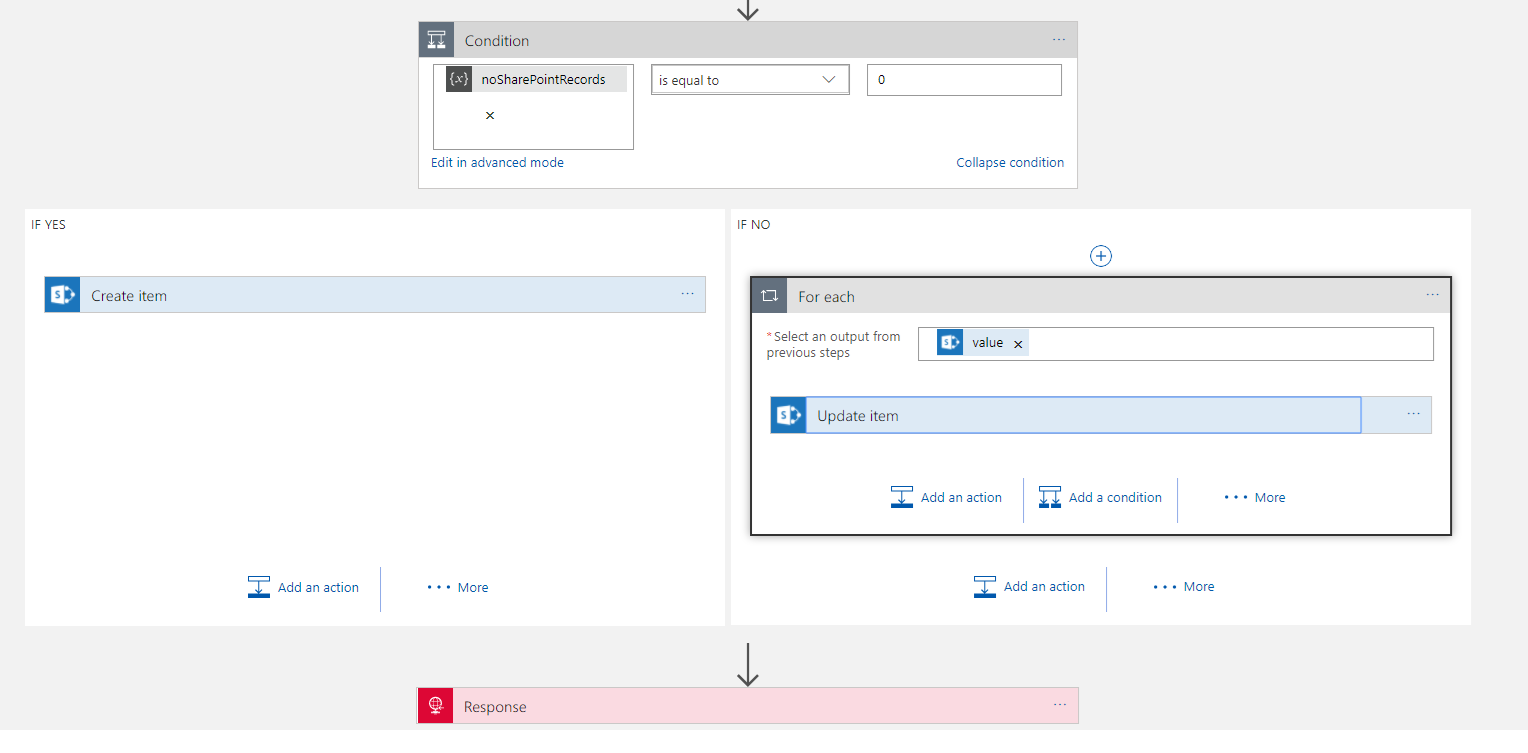

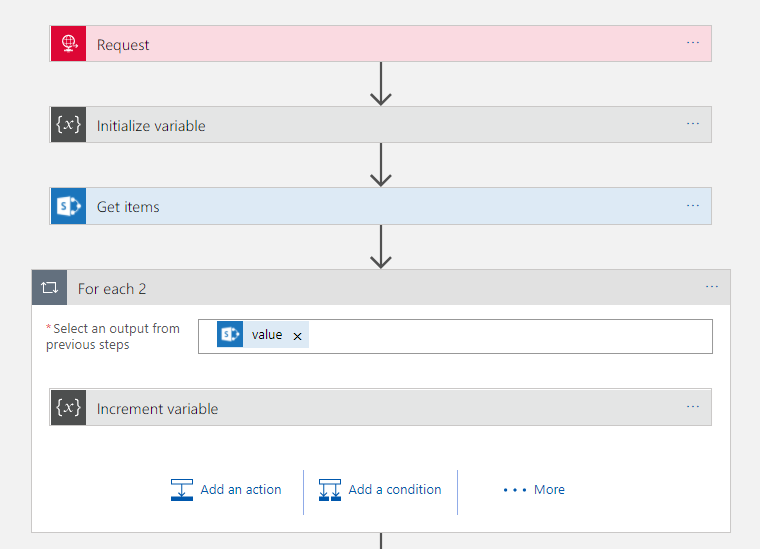

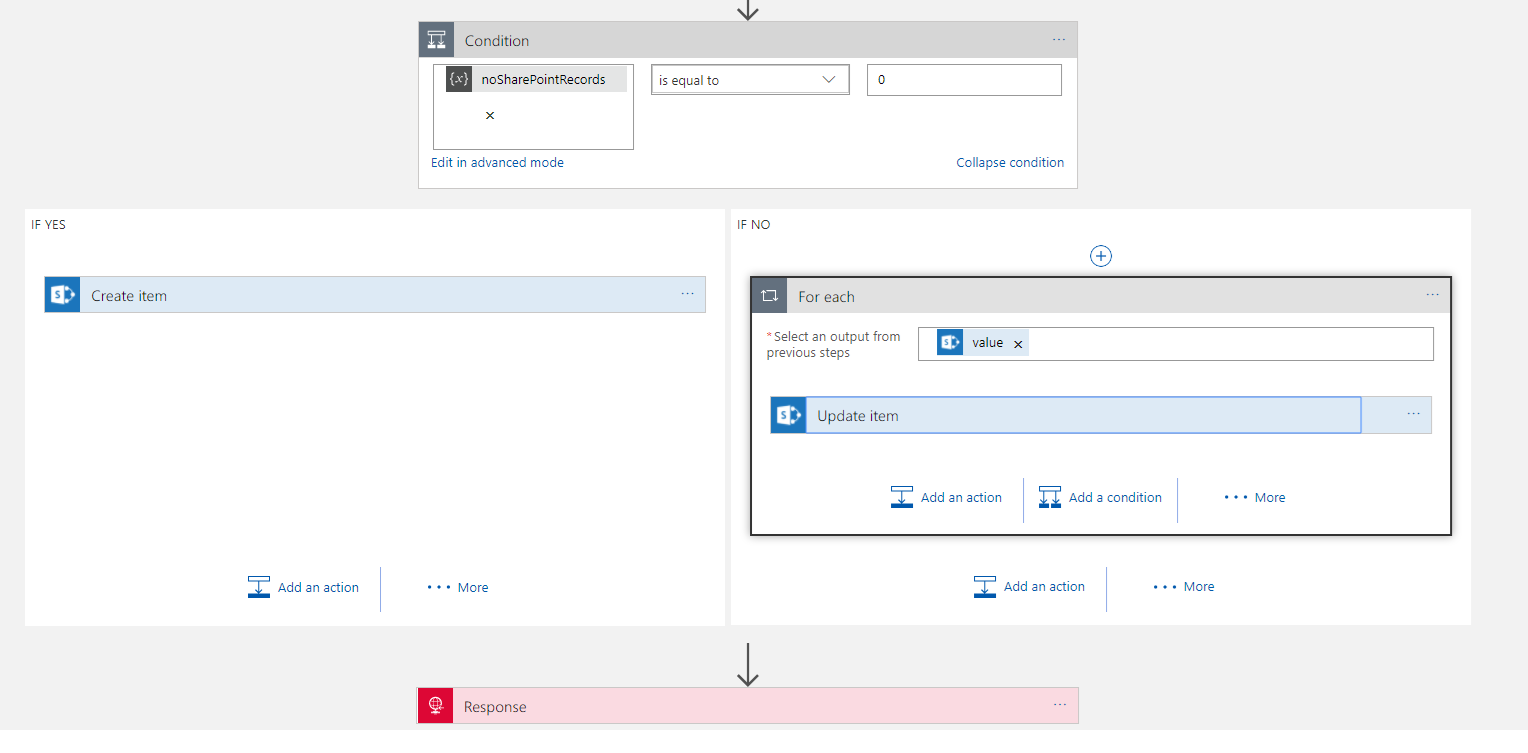

Once I have my data in a nice JSON format, the parent Logic App would loop the records and call the child Logic App for each record. Below is a picture of the child Logic App.

In this next section I am going to talk about how I implemented the solution. Please note that while this works, I did have a chat with Jeff Holland afterwards and he advised me on some optimisations which I am going to implement which will make this work a little nicer. I am going to blog this as a separate post but this is based on me working through how to get it to work with designer only features.

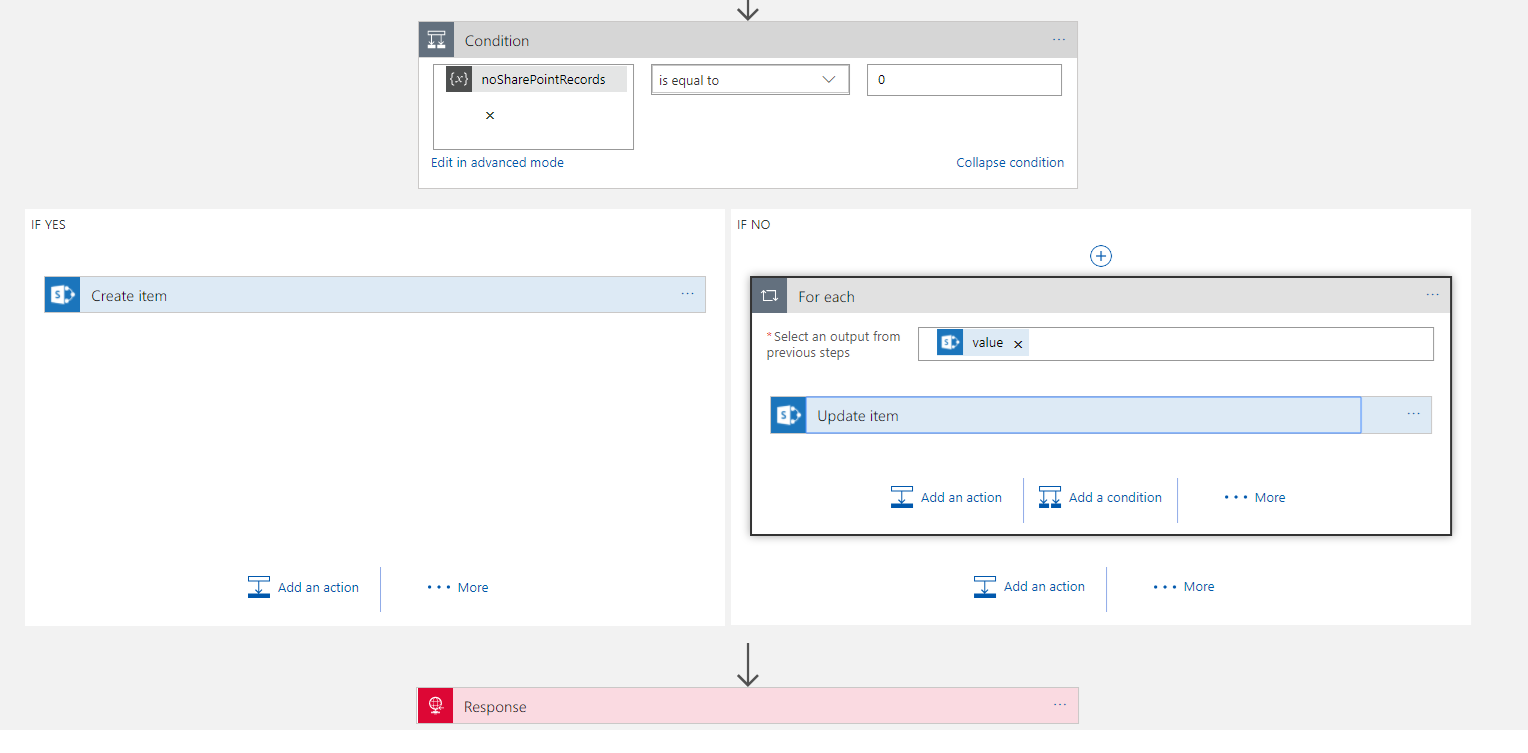

What’s interesting about this Logic App was the hoops I had to jump through to get upsert style functionality. First off I needed to use the SharePoint Get Items action with a query expression set to “Title eq ‘#Row Key#’”. This would take the row key parameter passed in and then get any matches. I also set the maximum records to return to 1, as there would only ever be 1 match.

I also initialized a variable in which I stored the number of records in the array that was returned. In my condition I could check the record count to see if the SharePoint query had found a match, which would direct me to insert or update.

From here the Insert Item action was very straightforward but the Update Item was a little more fiddly. Because the Get Items result was an array I needed to put the Update inside a loop, even though I know there is only 1 row. In the upsert I could use fields from either the input object or the queried item, depending on whether I want to change values or not.
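The control flow of this upsert can be sketched in Python as below. This is only a model of the logic: the SharePoint list is replaced by a plain in-memory list of dictionaries, and `get_items` and `upsert_transaction` are hypothetical stand-ins for the connector actions, not real SharePoint or Logic Apps APIs.

```python
def get_items(sp_list, title, top=1):
    """Stand-in for 'Get Items' filtered by Title, limited to `top` results."""
    matches = [item for item in sp_list if item["Title"] == title]
    return matches[:top]

def upsert_transaction(sp_list, row_key, fields):
    """Insert the transaction if no item has this row key, else update it."""
    matches = get_items(sp_list, row_key, top=1)
    record_count = len(matches)  # the counter variable checked by the condition

    if record_count == 0:
        # No match: equivalent of the Insert Item action.
        sp_list.append({"Title": row_key, **fields})
        return "inserted"

    # Match found: the query result is an array, so the update runs
    # inside a loop even though it holds at most one item.
    for item in matches:
        item.update(fields)
    return "updated"
```

Running the same transaction through twice leaves a single item in the list, which is the behaviour needed when overlapping bank files are loaded.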

At this point I had a working solution which took me about 2-3 hours to implement and test, and I have now migrated my bank transaction processing into Office 365. The Azure upfront cost for the solution was zero and the running cost is about 50 pence each time I process a file.

Summary

As you can see, this integration solution is viable for a small business. The main expense is manpower to develop the solution. I now have a nice sandboxed integration solution in a resource group in my company’s Azure subscription. It’s easy to run and monitor/manage. The great thing is that these kinds of solutions can grow with my business.

If you think about it, this could be a real game changer for some small businesses. When you think about B2B business, often it’s how smoothly and well integrated two businesses can be that is the differentiator between success and failure. Typically big organisations are able to automate these B2B processes, but now with Azure integration a very small business could implement an integration solution on the cloud which could massively disrupt the status quo of how B2B integration works in their sector. When you consider that those smaller businesses also don’t have the slow moving processes and people/politics of big business, this must create so many opportunities for forward thinking SME organisations.

by Sriram Hariharan | Jun 29, 2017 | BizTalk Community Blogs via Syndication

During the course of INTEGRATE 2017, BizTalk360 Founder/CTO Saravana Kumar presented the Partner of the Year (2016) and Product Specialist awards to companies and technical leaders who have showcased and demonstrated expertise with the BizTalk360 product over the last year. We started this tradition in the BizTalk Summit 2015 and INTEGRATE 2016 events and the trend continues.

BizTalk360 Partner of the Year 2016 Awards

The Partner of the Year 2016 awards were bagged by Codit from the Netherlands, Solidsoft Reply from the UK, and Evry from Sweden.

Product Specialist Of The Year 2016 Awards

BizTalk360 recognized the efforts of people who have a proven history of implementing the product over the past year. The program recognizes these exceptional contributions by allowing product specialists early access to products and a forum for providing feedback. This year, we are extremely happy to present this award to 15 people –

- Bart Scheurweghs

- David Grospelier

- Daniel Toomey

- Daniel Wilen

- Maarit Laine

- Eldert Grootenboer

- Eva De Jong

- Joakim Wadskog

- Jordy Maes

- Kent Weare

- Kien Pham

- Maxime Delwaide

- Milen Koychev

- Nicolas Blatter

- Steef-Jan Wiggers

We would like to thank all our Partners and Product Specialists for their efforts towards improving the reach of BizTalk360 to customers.

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as a documentation specialist for different products and domains. Writing is his passion and he believes in the following quote – “As wings are for an aircraft, a technical document is for a product, be it a product document, user guide, or release notes”.

by Gautam | Jun 28, 2017 | BizTalk Community Blogs via Syndication

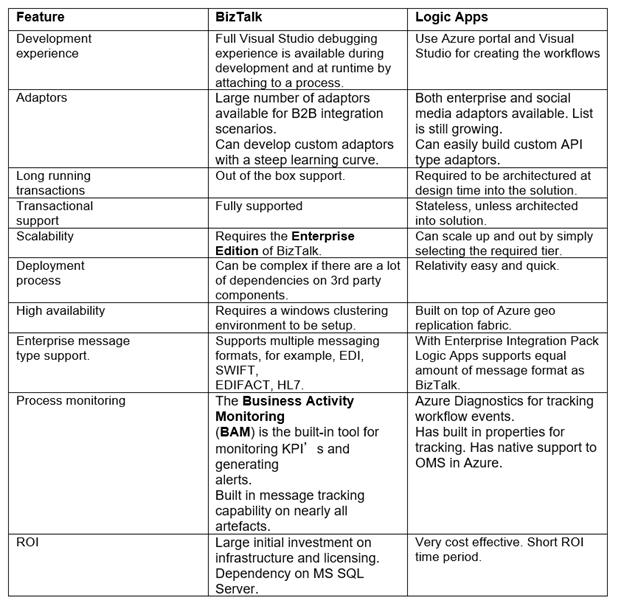

Nowadays, this is a very common and valid question in the BizTalk community, both for existing BizTalk customers and for new ones.

Here is what Tord answered in the open Q&A with the product group during the 100th episode of Integration Monday. Check at around 30:30 in the video.

If your solution needs to communicate with SaaS applications, Azure workloads and cloud business partners (B2B), all in the cloud, then you should use Azure Logic Apps. If you are doing a lot of integration with on-premises processing by communicating with on-premises LOB applications, then BizTalk is a pretty good option. You can use both if you are doing hybrid integration.

So basically, the choice varies from scenario to scenario, depending on your needs and the architecture of your solution.

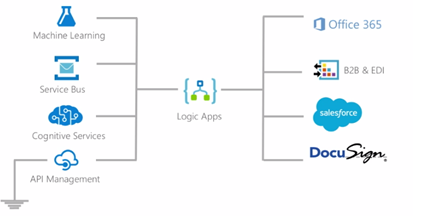

Many enterprises now use a multitude of cloud-based SaaS services, and being able to integrate these services and resources can become complex. This is where the native capability of Logic Apps can help by providing connectors for most enterprise and social services and to orchestrate the business process flows graphically.

If your resources are all based in the cloud, then Logic Apps is a definite candidate to use as an integration engine.

Natively, Logic Apps provides the following key features:

Rapid development: Using the visual designer with drag and drop connectors, you design your workflows without any coding using a top-down design flow. To get started, Microsoft has many templates available in the marketplace that can be used as is, or modified to suit your requirements. There are templates available for Enterprise SaaS services, common integration patterns, Message routing, DevOps, and social media services.

Auditing: Logic Apps have built-in auditing of all management operations, including the date and time when the workflow process was triggered and the duration of the process. Use the trigger history of a Logic App to determine the activity status:

- Skipped: Nothing new was found to initiate the process

- Succeeded: The workflow process was initiated in response to data being available

- Failed: An error occurred due to misconfiguration of the connector

A run history is also available for every trigger event. From this information, you can determine if the workflow process succeeded, failed, cancelled, or is still running.

Role-based access control (RBAC): Using RBAC in the Azure portal, specific components of the workflow can be locked down to specific users. Custom RBAC roles are also possible if none of the built-in roles fulfills your requirements.

Microsoft managed connectors: There are several connectors available from the Azure Marketplace for both enterprise and social services, and the list is continuously growing. The development community also contributes to this growing list of available connectors.

Serverless scaling: Automatic and built in on any tier.

Resiliency: Logic Apps are built on top of Azure’s infrastructure, which provides a high degree of resiliency and disaster recovery.

Security: Logic Apps supports OAuth2, Azure Active Directory, certificate authentication, basic authentication, and IP restriction.

There are also some concerns when working with Logic Apps, shared by the Microsoft IT team at INTEGRATE 2017.

You can also refer to the book Robust Cloud Integration with Azure to understand and get started with integration in the cloud.

When you have resources scattered across the cloud and on premises, you may want to consider BizTalk alongside Logic Apps for this type of hybrid integration.

BizTalk 2016 includes an adapter for Logic Apps. This Logic App adapter is used to integrate Logic Apps with BizTalk sitting on premises. Using the BizTalk 2016 Logic App adapter, on-premises resources can talk directly to a multitude of SaaS platforms available in the cloud.

The days of building monolithic applications are slowly diminishing as more enterprises see the value of consuming SaaS as an alternative to investing large amounts of capex to buy Commercial Off the Shelf (COTS) applications. This is where Logic Apps can play a large part by integrating multiple SaaS solutions together to form a complete solution.

BizTalk Server has been around since 2000, and there have been several new product releases since then. It is a very mature platform with excellent enterprise integration capabilities.

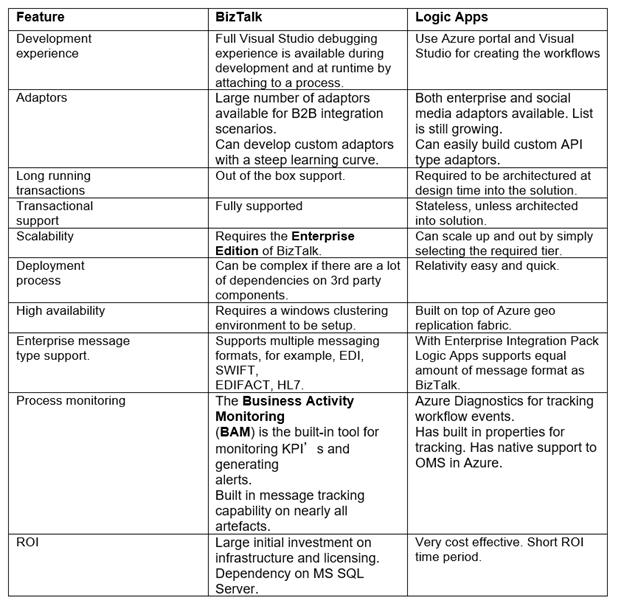

Below is a short comparison matrix between BizTalk and Logic Apps:

Conclusion

The Microsoft integration platform has options for all kinds of customer integration needs.

by Sriram Hariharan | Jun 28, 2017 | BizTalk Community Blogs via Syndication

After a scintillating Day 1 and Day 2 at INTEGRATE 2017, the stage was perfectly set for the last day (Day 3) of the event. Before you proceed further, we recommend that you read the following links –

Quick Links

Session 1 – Rethinking Integration by Nino Crudele

Day 3 at INTEGRATE 2017 started off with the “Brad Pitt of the Integration Community” – Nino Crudele. It was a perfect start to the last day of this premier integration focused conference.

Nino started off his session by thanking his mentor, a fellow MVP, for instilling knowledge about Power BI. This session was based on real experience. Nino shared how he treats the job as his passion, describing three different types of jobs – Bizzy (BizTalk), DEFCON1, and Chicken Way. In this context, the Chicken Way refers to the way in which you actually solve the problem: you can take a direct or an indirect approach.

Nino even had some Chicken Way Red Cards to give away to the community and some reactions to that were –

Then Nino presented the most comical slide of the entire #Integrate2017 event – a question / answer from his 12-year-old daughter about BizTalk.

The above slide shows how people actually perceive the technology. It is therefore imperative to choose the right technology for the specific problem and make the customer happy. Nino also explained what, according to him, are the top technology stacks and mentioned that “BizTalk is SOLID” – a very solid technology platform.

Then Nino gave an example from his customer experience, where the customer was using 15 BizTalk Servers! :O Nino suggested changes to certain approaches in their business process and ways to get real-time performance improvements. The customer was also looking for really fast hybrid integration (point to point) with BizTalk, with real-time monitoring, tracing and so on. Nino suggested a framework built completely in the cloud. This approach was more reliable and scalable, and gave the customer complete control over the messaging system. The solution made use of Logic Apps, Event Hubs, Service Bus, Blob storage and other integration services, which made the customer happy.

The session moved into a cool demo from Nino (real time data visualization in Power BI using custom visualization) which you can get to watch when the videos go Live on the INTEGRATE 2017 website.

Session 2 – Moving to Cloud-Native Integration by Richard Seroter

The second session of the day was from Richard Seroter on Moving to Cloud-Native Integration. Richard started off his talk with the “theory of constraints”, in which the throughput of a process is limited by its constraint (bottleneck). In any software environment, you have to find the constraint that is slowing you down and optimize it. In an organization, there is a chance that “integration might itself be the constraint” that slows down the business.

Therefore, Richard introduces the concept of cloud native integration to connect different systems.

Integration Today

According to Gartner, application-to-application integration is currently the most critical integration scenario, while a few years down the line, cloud service integration will rise to the top. Actual spending on integration platforms is on the rise, with the fastest growth in iPaaS and API Management.

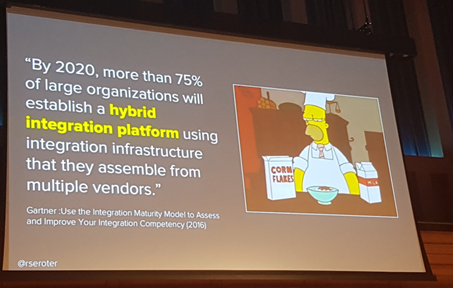

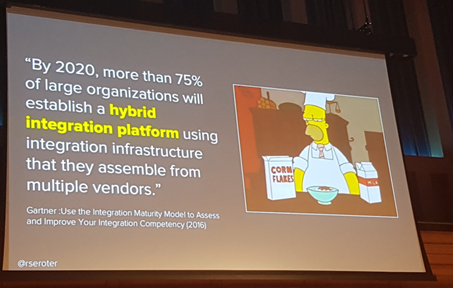

Again, Gartner says that by 2020, 75% of companies will establish a hybrid integration platform using an infrastructure that they assemble from different vendors. By 2021, at least 50% of large companies will have incorporated citizen integrator capabilities into their integration infrastructure.

What is Cloud Native?

Cloud native is basically about “how” I build software!

The following image clearly shows the difference between a traditional enterprise and a cloud native enterprise.

Delivering Cloud Native Integration

- Build a more composable solution that is

- Loosely coupled

- Makes use of choreographed services

- Push more logic to the endpoints

- Offer targeted updates

Richard then jumped into his demos. In the first demo, he used a Logic App as a data pipeline. The Logic App receives a message from a queue, calls a service running in Azure App Service, calls an Azure Function that does some fraud processing, and feeds the result message back to the queue for further processing.

To feed the queue, Richard deployed another Logic App that picks up a file from OneDrive, parses the file as a JSON array and dumps it to the queue that the first Logic App reads from.
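The shape of these two demo flows can be sketched in plain Python. This is only an illustration of the steps described above, not Richard's actual Logic Apps: the in-memory queues stand in for the Azure queue, and `score_order`, `check_fraud`, the fraud rule and the message fields are all hypothetical.

```python
import json
from queue import Queue


def score_order(order: dict) -> dict:
    """Stand-in for the service hosted in Azure App Service (hypothetical)."""
    return {**order, "score": len(order["items"])}


def check_fraud(order: dict) -> dict:
    """Stand-in for the Azure Function doing fraud processing (made-up rule)."""
    return {**order, "suspicious": order["amount"] > 1000}


def feed_queue(file_contents: str, inbound: Queue) -> int:
    """Second flow: parse a file as a JSON array and enqueue each element."""
    records = json.loads(file_contents)
    for record in records:
        inbound.put(record)
    return len(records)


def run_pipeline(inbound: Queue, outbound: Queue) -> None:
    """First flow: dequeue, call the service, call the function, re-queue."""
    while not inbound.empty():
        message = inbound.get()
        outbound.put(check_fraud(score_order(message)))


inbound, outbound = Queue(), Queue()
feed_queue(
    '[{"items": ["a", "b"], "amount": 1500}, {"items": ["c"], "amount": 20}]',
    inbound,
)
run_pipeline(inbound, outbound)
results = [outbound.get() for _ in range(outbound.qsize())]
```

Each step only needs the message it receives, which is what makes the pipeline easy to recompose.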

That’s not all! Richard had a few more demos in store – making BizTalk Server easy, where he used the BizTalk 2016 FP1 Management APIs to create BizTalk artifacts self-service style, and automating Azure via Service Broker.

We recommend watching this session when the video is made available in a week’s time on the INTEGRATE 2017 website.

Session 3 – Overcoming Challenges When Taking Your Logic App into Production

Stephen started off with a key announcement about the readiness of a New Pluralsight Course – “BizTalk Server Administration with BizTalk360“. The course will be made available shortly.

Phase 1 of the session was targeted towards ‘Decision Making‘, phase 2 covered what they did right and wrong, and the last phase offered some important tips.

Decisions

Stephen compared building a .NET parser solution to Logic Apps development. The Logic Apps approach was estimated to finish earlier and to be far cheaper. They even questioned whether Integration Accounts are worth the price ($1000 per month).

What’s Wrong and Right?

- Make design decisions based on the rules of the serverless platform, factoring in the cost per Logic Apps action

- Stephen described that initially he used 2 subscriptions in 2 regions, but this made deployment across regions hard. Therefore, the best practice is to have one subscription in one region

- Solution structure – the solution level maps to a resource group; use one project per Logic App and maintain 3 parameter files, one per environment. To perform deployments, you can create a custom VM.

- Serverless – is AMAZING, but sometimes things break through no fault of your own, and sometimes Microsoft support needs to be called in to fix issues

Tips

- Read the available documentation

- Don’t be afraid of JSON – code view is still needed, especially for new features, although most of them soon become available in the designer and Visual Studio. Always save or check in before switching to JSON.

- Make sure to fully configure your actions, otherwise you cannot save the Logic App

- Name your actions carefully when you create them; names are hard to change afterwards

- Try to use only one MS account

- If you get odd deployment results, close / reopen your browser

- Connections – Live at resource group level. Last deployment wins. Best practices: define all connection parameters in one Logic App. One connection per destination, per resource group.

- Default retries – all actions retry 4 additional times over 20s intervals.

- Control using retry policies

- Resource Group artefacts – contain subscription id, use parameters instead

- For each loop – limited to 100000 loops. Defaults to multiple concurrent loops; can be changed to sequential loops

- Recurrence – singleton

- User permissions (IAM) – multiple roles exist like the Logic App Contributor, and the Logic App Operator
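The default retry behaviour mentioned in the tips above (4 additional attempts at 20 second intervals) can be sketched as a fixed-interval retry loop. This is a minimal illustration under that assumption, not how the Logic Apps runtime is implemented; `run_with_retries` and `flaky` are hypothetical names, and the sleep function is injectable so the demo does not actually wait.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    action: Callable[[], T],
    retries: int = 4,
    interval_seconds: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `action`; on failure retry up to `retries` more times at a fixed interval."""
    attempt = 0
    while True:
        try:
            return action()
        except Exception:
            if attempt >= retries:
                raise  # retries exhausted: surface the last error
            attempt += 1
            sleep(interval_seconds)


# Demo: an action that fails twice before succeeding; sleeping is stubbed out.
calls = {"count": 0}


def flaky() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient failure")
    return "ok"


waits: list[float] = []
result = run_with_retries(flaky, sleep=waits.append)
```

A custom retry policy would correspond to passing different `retries` and `interval_seconds` values here.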

With that, it was time for the attendees to take a break!

After the break, Duncan Barker from the BizTalk360 team took the stage to thank the wonderful team at BizTalk360 for all their effort in making INTEGRATE 2017 a great success!

Session 4 – BizTalk Server Deep Dive into Feature Pack 1

Tord was given a warm welcome with the song “Rise” by Katy Perry. Tord played along with the welcome by joking about what good friends he and Katy Perry are and telling the story behind how she wrote the song for BizTalk. 🙂

Fun aside, Tord started off the session by saying how BizTalk Server 2016 almost got a pink theme for the icons! :O Just hours before the team was to do the final build for the BizTalk Server 2016 Feature Pack 1 release, one of the engineers pointed out the pink stroke on the outside of all icons. The team managed to fix it and ship the release.

But did you know? There is still one tiny pixel of pink somewhere in some icon! If you find it, send Tord an email and he will send you a nice gift!

BizTalk Connector in Logic Apps is now Generally Available with Full Support!!!

The Microsoft IT team has built a first-class project to help you migrate easily to BizTalk Server 2016. You can get your downloadable version of the application from the below link. If migration is what is holding you back, then make use of this application.

With BizTalk Server, you can do so many things! You can take advantage of the cloud through BizTalk Server. Tord walked through the different features that were released as a part of Feature Pack 1 in detail with some live demos.

Session 5 – BizTalk Server Fast & Loud

After a power-packed introduction from Daniel Szweda, who compared Sandro Pereira with Cristiano Ronaldo (who also hails from Portugal), guess what happened! SANDRO PEREIRA forgot to turn on his machine to show his presentation :O The IT admin at Kings Place had to show up 5 – 6 times to get the “problem” solved, and Sandro blamed it on the famous word “Jetlag”, which most speakers invoked during any technical issue 😛 🙂 And... there was a roar when the presentation finally worked for Sandro! Phew … There goes the BizTalk Wiki Ninja, BizTalk Mapper Man, The Stencil Guy into his session.

Sandro started off his session with this slide

Sandro’s session was more towards BizTalk Server optimization and performance. The points discussed in this session were –

SQL Server

- Clients still don’t have BizTalk Jobs running

- In car terminology,

- BizTalk Server is the Chassis

- SQL Server is the Engine

- Hard Drives are the Tires

- Memory is the Battery

- CPU is the Fuel Injector

- Network and Visualization Layer is the Exhaust pipe

- Make sure BizTalk Server and SQL Server Agent jobs are configured and running

- Treat BizTalk databases as a Black box

- Size really matters in BizTalk! Large databases impact performance (Eg., MessageBoxDB, Tracking database)

- Consider dedicating SQL resources to BizTalk Server

- Consider splitting the TempDB into multiple data files for better performance

Networking

- Speed defines everything for this layer

- At a minimum, you need to have 1 logical disk for data files, 1 for transaction log files, and 1 for TempDB data files

- Remove unnecessary network

- Scaling out is not a solution to all problems – sometimes you may also have to scale in to solve a problem!

Session 6 – BizTalk Health Check – What and How?

The last session before lunch was BizTalk Health Check – What and How? by Saffieldin Ali. A BizTalk Health Check is similar to the MOT testing that’s performed on vehicles in the UK. MOT testing is a compulsory test for exhaust and emissions of motor vehicles.

In BizTalk, the health check is performed to –

- Identify symptoms and potential problems before they affect the production environment

- Review critical processes to achieve minimum downtime due to disaster recovery

- Identify any warnings and red flags that may be affecting users

- Understanding of common mistakes made by administrators and developers

- Understand the supportability and best practices

BizTalk Health Check Process

Interviewing

- Operations Interview (1-1 meetings with admins/dev teams to collect operational view of things)

- Knowledge Transfer

Collecting

- Run collection tools (BizTalk Health Monitor etc)

- Collect informal information (say, someone mentions “I did something wrong last week” during an informal discussion)

Analysis and Reporting

- Run and examine analysis tools results

- Write and Present final conclusion

BizTalk Health Check Areas

- Platform configuration for BizTalk Server

- BizTalk Server Configuration

- BizTalk Performance

- Resilience (High Availability)

- SQL Server Configuration for BizTalk Server

- Disaster Recovery

- Security

- BizTalk Application Management and Monitoring

BizTalk Health Check Key Tools

- Microsoft Baseline Security Analyser (MBSA)

- BizTalk Best Practices Analyser

- BizTalk Health Monitor (BHM)

- Performance Analysis of Logs (PAL)

Saffieldin showed how each of the above tools works and how they perform the checks on the BizTalk environment.

It was time for the community to break out for Lunch and some networking before the close of the event in the next couple of hours.

Session 7 – The Hitchhiker’s Guide to Hybrid Connectivity by Dan Toomey

The last leg of #Integrate2017 was something quite significant. All 3 speakers – Daniel Toomey, Wagner Silveira and Martin Abbott – flew into London on some very long flights: Dan and Martin from Australia (about 20 hours) and Wagner from New Zealand (about 30 hours!).

Post lunch, it was time for Dan Toomey from Australia to take the stage to talk about The Hitchhiker’s Guide to Hybrid Connectivity.

Dan started his talk by describing the types of Azure Virtual Network connection –

- Point to Site (P2S) – Something similar to connection when you work from home and connect to corporate network (connect to Citrix/VPN) over the internet

- Site to Site (S2S) – taking an entire network and joining with another network over the internet

- ExpressRoute – something like taking a giant cable (managed by someone else) and connecting your corporate network on that.

VNET Integration for Web/Mobile Apps

- Requires a Standard or Premium App Service Plan

- VNET must be in the same subscription as App Service Plan

- Must have Point to Site enabled

- Must have Dynamic Routing Gateway

VNET with API Management

If you have API Management that is sitting in your Virtual Network with access to your Corporate Network gateway, you will get:

- Added layer of security

- All benefits of API Management (caching, policies, protocol translation [SOAP to REST], Analytics, etc)

Non-Network based Operations

Azure Relay (an alternate approach) – This is a new offering with Azure Service Bus

- WCF Relay

- Hybrid Connections

- Operates at transport level

On-Premises Data Gateway

- Generally available since 4th May 2017

- Acts as a bridge between Azure PaaS and on-prem resources

- Works with connectors for Azure Logic Apps, Power Apps, Flow and Power BI

Daniel wrapped up his talk by talking about the following business scenarios –

- Azure Web/Mobile App to On-Prem

- IaaS Server (VM) to On-Prem

- SaaS Service to On-Prem

- Business to Business

- Service Fabric Cluster to On-Prem

To know more about these scenarios that Dan talked about, please watch the video which will be made available soon.

Session 8 – Unlocking Azure Hybrid Integration with BizTalk Server by Wagner Silveira

In this session, Wagner started off his talk speaking about Why BizTalk + Azure, and what BizTalk brings to Hybrid Integration –

- On-premises adapters

- Azure adapters

- Separation of concerns

- Availability

- For existing users

- Leverage investment into the platform

- Continuity to developers

Wagner talked in detail about the ways in which you can connect to Azure, along with some scenarios –

- Service Bus

- Azure WCF Relay

- App Services/API Management

- Logic Apps

Wagner showed an exciting demo for 2 Line of Business (LoB) systems and finally some tweets coming out of Logic Apps.

Session 9 – From Zero to App in 45 minutes (using PowerApps + Flow) by Martin Abbott

There we were! The last session at #Integrate2017. It is obviously not a great feeling to be the speaker closing what was an amazing 3 days of learning and experience. But Martin did a great job of showing the power of PowerApps and Flow, and how you can build an application in 45 minutes using the combo.

Martin started off his talk talking about Business Application Platform Innovation which is represented in a very nice diagram.

Martin just had 3 slides and it was an action packed session with demo to create an application in under 45 minutes. We recommend you to watch the video which will be available shortly on the event website.

Key Announcement – Global Integration Bootcamp 2018

Martin was one of the organizers of the recently concluded Global Integration Bootcamp event in March 2017. It’s now official that we will have the #GIB event in 2018. The event will happen on 24th March, 2018. You can follow the website http://www.globalintegrationbootcamp.com/ for further updates.

Sentiment Analysis on #Integrate2017

In the Day 1 Recap blog, we had shown some statistics on the sentiment analysis of tweets for hashtag #Integrate2017. Here is one last look at the report at 00:00 (GMT+0530) on June 29, 2017.

And, with that!!! It was curtains down on what has been a fantastic 3 days at INTEGRATE 2017. Well, we are not done just yet! As announced on Day 1 by Saravana Kumar, INTEGRATE 2017 will be back in Redmond, Seattle, USA on October 25-27, 2017. So if you missed attending this event in London, come and join us at Redmond.

We hope you had a great time at INTEGRATE 2017. Until next time, adios!!!

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as documentation specialist for different products and domains. Writing is his passion and he believes in the following quote – “As wings are for an aircraft, a technical document is for a product — be it a product document, user guide, or release notes”. View all posts by Sriram Hariharan

by BizTalk Team | Jun 27, 2017 | BizTalk Community Blogs via Syndication

We are very happy to announce a wonderful tool provided by MSIT. The tool will help in multiple scenarios around migrating your environment, or even taking a backup of your applications.

It comes with some built-in intelligence, such as:

- Connectivity test of source and destination SQL Instance or Server

- Identify BizTalk application sequence

- Retain file share permissions

- Ignore zero KB files

- Ignore files which already exist in destination

- Ignore BizTalk application which already exist in destination

- Ignore Assemblies which already exist in destination

- Backup all artifacts in a folder.

Features Available

- Windows Service

- File Shares (without files) + Permissions

- Project Folders + Config file

- App Pools

- Web Sites

- Website Bindings

- Web Applications + Virtual Directories

- Website IIS Client Certificate mapping

- Local Computer Certificates

- Service Account Certificates

- Hosts

- Host Instances

- Host Settings

- Adapter Handlers

- BizTalk Applications

- Role Links

- Policies + Vocabularies

- Orchestrations

- Port Bindings

- Assemblies

- Parties + Agreements

- BAM Activities

- BAM Views + Permissions

Features Unavailable

- SQL Logins

- SQL Database + User access

- SQL Jobs

- Windows schedule task

- SSO Affiliate Applications

Download the tool here

For a small guide take a look here

by Srinivasa Mahendrakar | Jun 27, 2017 | BizTalk Community Blogs via Syndication

What a day it was at ‘Integrate 2017’ today. For Logic Apps enthusiasts, it was a treat. Did you miss the sessions? Don’t worry, I am going to write about everything that was said about Logic Apps today.

Azure Logic Apps – Microsoft IT journey with Azure Logic Apps – By Divya Swarnkar and Mayank Sharma

Microsoft has a large IT wing to serve its business which is called ‘MSIT’. This team is well known for ‘eating its own dog food’. Mayank and Divya are from MSIT’s integration team. When they started their session by describing the scale of business their team is serving, we were all blown away. Look at the number of business entities they are serving. Around 170 million messages flow through their 175 BizTalk servers serving 1000 plus trading partners across various business entities.

“We are moving all of this Integration to Logic Apps.”

MSIT is modernizing their integration landscape completely. Divya and Mayank made it very clear that they are moving all the BizTalk interfaces to Logic Apps and BizTalk is only going to be used as a proxy to serve existing partner requests. So far, they have delivered three releases.

- In Release 1.0, they moved most of their interfaces relying on X12 and AS2 to Logic Apps.

- In Release 1.5, they moved the interfaces related to EDIFACT to Logic Apps.

- In Release 2.0, they moved many of the XML-oriented interfaces.

These releases helped them achieve the following goals.

- Enable Order to Cash Flow for digital supply chain management.

- Running trade integrations and all customer declaration transactions.

- They became ready to retire “Microsoft BizTalk Services” instances by end of July.

Solution Architecture

They then continued to explain their solution architecture. Below is the slide that they presented. Following are some of the important aspects of their solution architecture.

- Azure API Management: All trading partners send their messages (X12/EDIFACT/XML) through Microsoft’s Gateway store. The Azure API Management service then routes each message to the appropriate logic app.

- Integration Account: The Logic apps they have built, make full use of Integration account artefacts such as Trading Partner Agreements, Certificates, Schemas, Transformations etc.

- On-premises BizTalk: On-premises BizTalk is merely used as a proxy for Line of business applications. This makes sense as they may not want to change all the connections which already exist for Line of Business Applications and also they need to support the continuity of other interfaces. This is the perfect example of how other organizations can start migrating their interfaces to Logic Apps.

- Logic App Flow: The Logic apps make use of typical VETER pipeline which involves AS2 connector, X12 connector, Transformation, Encoding and HTTP connectors as shown below.

- OMS for Diagnostics and Monitoring: Operations Management Suite (OMS) is used to collect diagnostic logs from the Integration Accounts, Logic Apps and Azure Functions which are part of their solution. Once all the diagnostic data is collected, they are able to query it and create nice dashboards for analytics on their interfaces. Currently, Integration Accounts have their own built-in solutions for OMS. Please refer to the video http://www.integrationusergroup.com/business-activity-tracking-monitoring-logic-apps/ to learn about diagnostic logs in Logic Apps and Integration Accounts.

Fall-back and Production Testing Using APIM

They have scenarios where they want to test logic apps in production and also want to be able to fall back to a previous stable version of a logic app. They use APIM to achieve this: APIM is configured with rules to switch between the logic app endpoints.

Disaster Recovery

Business continuity is very important especially for MSIT with the scale of messaging they are handling. In order to achieve the business continuity assurance, they make use of Disaster Recovery feature which comes along with integration account.

The disaster recovery is achieved by creating similar copies of logic apps, integration accounts and azure functions in two different regions. As you can see from the picture they have this replication in both Central US and West US regions. Visit the documentation https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-enterprise-integration-b2b-business-continuity to know more about disaster recovery feature.

Huge confidence boost to customers who are contemplating moving to Logic Apps

Azure Logic Apps – Advanced integration patterns By Jeff Hollan and Derek Li

I am a big fan of Jeff Hollan. When he is on the stage it’s a treat to listen to him. He brings life into technical talks and involves the audience, leaving a lasting impression. Enough of praising him. Jeff Hollan and Derek Li took the stage to talk about advanced integration patterns in Logic Apps.

Internals of Logic Apps Platform

Jeff arrived on the stage with the clear intention of explaining the internal architecture of Logic Apps platform. You might be wondering why we should be knowing about the internals of Logic Apps as it is a PaaS offering and we generally treat them as a black box from the end user perspective. However, he gave three powerful reasons why we should understand the internals.

- There are some published limits for the Logic apps. We need to understand them in order to design enterprise grade solutions.

- Understanding the nature of the workflows

- Internals help us to clearly understand the impact of design on throughput especially when we are working with Long running flows.

- We will be able to leverage the platform as much as possible for concurrency.

- Helps us to understand the structure and behavior of our data

Agenda

The agenda was not just talking about the internal architecture of logic apps but also to talk about Parallel Actions, Exception handling, workflow expressions.

Logic Apps Designer

The Logic Apps designer is apparently a TypeScript/React JS application. All the functionality that we see in the designer is self-contained in this application, which is the main reason they are able to host it in Visual Studio. It uses Swagger to render the inputs and outputs. Also, as we are already aware, it generates the workflow definition in JSON.

Logic Apps Runtime

As we know logic apps will have triggers and actions. When we create a logic app all these will be defined in a JSON file. When we click save button, logic apps runtime handles it as below.

- The runtime engine reads the workflow definition, breaks it down into tasks and identifies their dependencies. A task is not executed until its dependencies have completed.

- It spins up distributed workers which coordinate to complete the execution of the tasks. It was revealing to learn that all the workers are distributed, which makes the logic app more resilient

- The runtime engine ensures that all the tasks inside the flow are executed at least once. Jeff said that in the history of Logic Apps he has not seen any instance where a task was left unexecuted.

- There is no limit on the number of threads executing these tasks and hence there is no overhead of managing active threads.
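The dependency handling described in the list above can be illustrated with a topological sort. This is only a sketch of the idea, not the Logic Apps runtime itself: the action names are hypothetical, and Python's standard `graphlib` module stands in for the engine's scheduler. Actions whose dependencies are all complete are grouped into "waves" that could be handed to parallel workers.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each action lists the actions it must run after.
run_after = {
    "Parse_message": [],
    "Insert_product": ["Parse_message"],
    "Send_notification": ["Parse_message"],
    "Complete_message": ["Insert_product", "Send_notification"],
}


def execution_waves(dependencies: dict[str, list[str]]) -> list[list[str]]:
    """Group actions into waves; every action in a wave can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies are satisfied
        waves.append(ready)
        sorter.done(*ready)  # mark as finished so dependants become ready
    return waves


waves = execution_waves(run_after)
```

Here `Insert_product` and `Send_notification` land in the same wave because neither depends on the other.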

Example logic App

He gave an example of a logic app with a Service Bus trigger that receives a list of products and writes each product to a SQL database.

In this example, his main intention was to show how the runtime identifies the tasks that can be executed. Here, the for each loop tells the runtime that it can spin up parallel tasks to execute the SQL action. The workflow orchestrator then completes the message by calling the Service Bus complete connector and ends the workflow.

Parallel action

Now, given the runtime’s ability to spin up parallel tasks, he showed us how to use parallel actions in a logic app definition.

From the above picture, it is clear that we can add as many parallel actions as we want by just clicking the plus symbol on the branches.

Exception handling

At this point, Derek Li took over the stage to show some geeky stuff. He started off by creating a logic app in which one of the actions fails, and when it fails he sends an email to Jeff. To achieve this, he added a scope containing all the required actions. After the scope, he configured the run after settings for an action. I do not have an exact snapshot from his slide but it was something like below.

With the run after configuration for an action, it is easy to handle error conditions. He also showed how we can set the timeout configuration for an action.

When the timeout expires, we can take some action, again by setting the run after configuration to “has timed out”.
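The run after idea can be sketched as a small status check. The fragment below only mimics the general shape of a workflow definition as a Python dictionary; the action names are hypothetical, this is not Derek's demo, and `should_run` is an illustrative helper rather than part of any Logic Apps SDK. An action runs only when each predecessor finished with one of the statuses it lists.

```python
# Hypothetical fragment shaped like the "actions" part of a workflow definition.
actions = {
    "Process_order_scope": {"type": "Scope", "runAfter": {}},
    "Email_Jeff": {
        "type": "ApiConnection",
        # Run only when the scope failed or timed out.
        "runAfter": {"Process_order_scope": ["Failed", "TimedOut"]},
    },
    "Log_success": {
        "type": "Compose",
        "runAfter": {"Process_order_scope": ["Succeeded"]},
    },
}


def should_run(action_name: str, statuses: dict[str, str]) -> bool:
    """True when every predecessor finished with one of the allowed statuses."""
    run_after = actions[action_name]["runAfter"]
    return all(statuses.get(dep) in allowed for dep, allowed in run_after.items())


after_failure = {"Process_order_scope": "Failed"}
after_timeout = {"Process_order_scope": "TimedOut"}
after_success = {"Process_order_scope": "Succeeded"}
```

With these inputs, `Email_Jeff` is selected after a failure or a timeout, and `Log_success` only after success.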

Workflow expressions

He spoke about important aspects of workflow expressions. Following are the highlights.

- Any input that changes for every run is an expression. He showed some example expressions.

- He explained the difference between different constructs such as “@”, “{}”,”[]” and “()”.

@ marks the start of an expression, {} means a string, [] is used as a JSON path and () contains the parameters of the expression being evaluated. He also showed the order in which the elements of an expression are executed.

Summary

As explained earlier it was a real treat for all the logic app enthusiasts and gave a lot of insights into a logic app platform.

- The first session from Mayank and Divya gave the audience a great level of confidence about going with logic app implementations.

- The session from Jeff and Derek brought an understanding of logic apps internals and patterns.

Author: Srinivasa Mahendrakar

Technical Lead at BizTalk360 UK – I am an Integration consultant with more than 11 years of experience in design and development of On-premises and Cloud based EAI and B2B solutions using Microsoft Technologies. View all posts by Srinivasa Mahendrakar

by Sriram Hariharan | Jun 27, 2017 | BizTalk Community Blogs via Syndication

After an exciting Day 1 at INTEGRATE 2017 with loads of valuable content from the Microsoft Pro Integration team, it was time to get started with Day 2 at INTEGRATE 2017.

Important Links – Recap of Day 1 at INTEGRATE 2017,

Photos from Day 1 at INTEGRATE 2017

Session 1 – Microsoft IT journey with Azure Logic Apps by MSCIT team

Day 2 at INTEGRATE 2017 started off with Duncan Barker of BizTalk360 introducing Mayank Sharma and Divya Swarnkar from the Microsoft IT Team. The key highlights from the session were –

- Integration Landscape at Microsoft has over 1000 Partners, 170M+ Messages per month, 175+ BizTalk Servers, 200+ Line of Business Systems, 1300+ Transforms and a Multi platform that supports BizTalk Server 2016, Azure Logic Apps, and MABS

- Microsoft IT Team showed why the team were motivated to move to Logic Apps –

- Modernization of Integration (Serverless Computing + Managed Services, business agility and accelerated development)

- Manage and Control Costs based on usage

- Business Continuity

- The following image shows where the MSCIT team is placed today in terms of number of releases. Microsoft Azure BizTalk Services will be retired by end of July.

- Microsoft IT team uses Logic App pipeline to process EDI messages coming from partners

- For testing, the Microsoft IT team uses Azure API Management policies to route message flows to parallel pipelines

- The team at Microsoft IT uses Operations Management Suite (OMS) for Logic Apps diagnostics. This was briefly covered earlier by Srinivasa Mahendrakar in one of the Integration Monday sessions – Business Activity tracking and monitoring in Logic Apps. Microsoft IT have migrated all their EDI workloads off of MABS and BizTalk and onto Logic Apps.

- Microsoft IT only uses BizTalk for its adapters to connect to LOB systems, while all processing happens in Logic Apps.

- Finally, the team shared their learnings while working with Logic Apps

- Each Logic App has a published limit – make sure you understand what they are

- Consider the nature of flow you will create with Logic Apps – high throughput or long running workflows

- Leverage the platform for concurrency (SplitOn vs. ForEach)

- Understand the structure and behavior of data (batched vs. non-batched)

- Consider a SxS strategy to enable test in production

- In Logic Apps, your delivery options are ‘at least once’ or ‘at most once’ (not ‘only once’)
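One practical consequence of the last learning above is that, with at least once delivery, a receiver may see the same message more than once. A common way to cope is to make the receiver idempotent. The sketch below assumes every message carries a unique `id` field (an assumption, not something stated in the session) and keeps the seen ids in memory; a real system would need a durable store.

```python
class IdempotentReceiver:
    """Processes each message id once, even if the message is delivered again."""

    def __init__(self) -> None:
        self.seen_ids: set[str] = set()
        self.processed: list[dict] = []

    def handle(self, message: dict) -> bool:
        """Return True if the message was processed, False if it was a duplicate."""
        message_id = message["id"]
        if message_id in self.seen_ids:
            return False
        self.seen_ids.add(message_id)
        self.processed.append(message)
        return True


receiver = IdempotentReceiver()
deliveries = [
    {"id": "order-1", "total": 10},
    {"id": "order-2", "total": 25},
    {"id": "order-1", "total": 10},  # redelivered by an at-least-once channel
]
outcomes = [receiver.handle(m) for m in deliveries]
```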

Jim Harrer was really appreciative and thankful to the Microsoft IT team for making their trip to London to share their experiences.

Session 2 – Azure Logic Apps – Advanced integration patterns

This was one of the most anticipated sessions on Day 2 at INTEGRATE 2017 with Jeff Hollan (Sir Hollywood) and Derek Li talking about “Advanced integration patterns”. The agenda of the session included talks on –

- Logic Apps Architecture

- Parallel Actions

- Exception Handling

- Other “Operation Options”

- Workflow Expressions

The Logic Apps architecture under the hood looks as follows –

An important point to observe is that the ForEach loop in Logic Apps runs the tasks in parallel!

Awesome overview from @jeffhollan @logicappsio on how #LogicApps are executed by the runtime. No thread management needed!!

The Logic Apps designer is basically a TypeScript/React app that uses OpenAPI (Swagger) to render input and output. The Logic Apps designer has the capability to generate Workflow definition (JSON). You can configure the runAfter options via the Logic Apps designer.

This statistic made by Jeff Hollan was probably the highlight of the show

In the history of #LogicApps, there hasn’t been a single run that hasn’t executed at least once.

After a very interesting demo by Derek Li, Jeff Hollan started his talk on Workflow Expressions. An expression is any input that is dynamic (its value changes with every run). Jeff explained the different expression properties in an easy to understand way –

@ – Used to indicate an expression. It can be escaped with @@. Example – @foo()

() – Encapsulates the expression parameters. Example – @foo('Hello World')

{} – “Curly braces means string!!!”. This is the same as @string(). Example – @{add(1,1)}

[] – Used to access properties in JSON objects. Example – @foo('JsonBody')['person']['address']
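
The rules for the @ marker can be summarized in a few lines of Python. This is only an illustrative sketch: the `classify` function is a hypothetical helper, it looks at whole values only (it does not handle an expression embedded in the middle of a longer string), and it does not evaluate anything.

```python
def classify(value):
    """Classify a workflow value as a literal, an expression, or a string expression."""
    if value.startswith("@@"):
        # "@@" escapes the marker: the value is the literal text with one "@".
        return ("literal", value[1:])
    if value.startswith("@{") and value.endswith("}"):
        # Curly braces: the expression result is converted to a string.
        return ("string-expression", value[2:-1])
    if value.startswith("@"):
        # A single "@" marks an expression to be evaluated at run time.
        return ("expression", value[1:])
    return ("literal", value)


examples = [
    classify("@foo('Hello World')"),
    classify("@@foo"),
    classify("@{add(1,1)}"),
    classify("plain text"),
]
```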

This session from Jeff Hollan and Derek Li was well received by the audience at #Integrate2017.

Jeff also mentioned that a feature allowing customers to test expressions in the designer is coming soon!

Session 3 – Enterprise Integration with Logic Apps by Jon Fancey

In this session, Jon Fancey started off his presentation by talking about Batching in Logic Apps and how it works –

- There are basically two Logic Apps – the Sender (the batcher) and the Receiver (the batching Logic App)

- The batcher is aware of the batching Logic App, whereas the batching Logic App is not aware of the batchers (1:n)
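
The relationship between senders and the receiver can be sketched in plain Python as follows. This is not Logic Apps code: the `BatchReceiver` class, the `send` function and the count-based release rule are assumptions made for illustration. The point is that each sender holds a reference to the receiver, while the receiver knows nothing about who is sending.

```python
class BatchReceiver:
    """Collects messages and releases them as a batch once a count is reached."""

    def __init__(self, release_count):
        self.release_count = release_count
        self.pending = []    # messages waiting for the batch to fill up
        self.released = []   # batches that have been released

    def add(self, message):
        self.pending.append(message)
        if len(self.pending) >= self.release_count:
            self.released.append(self.pending)
            self.pending = []


def send(receiver, sender_name, items):
    """A sender knows its receiver; the receiver keeps no list of senders."""
    for item in items:
        receiver.add(f"{sender_name}:{item}")


# Two hypothetical senders feed one receiver that releases every 3 messages.
receiver = BatchReceiver(release_count=3)
send(receiver, "A", [1, 2])
send(receiver, "B", [3, 4])
```

After these calls one batch containing the first three messages has been released, and the fourth message is still waiting for the next batch.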

What’s coming in Batching?

- Batch Flush

- Time based Batch release trigger options

- EDI Batching

Jon Fancey then moved on to the concept of the Integration Account (IA) and mentioned that the VETER pipeline is available as a template in Azure Logic Apps using an Integration Account.

- Integration Account is the core to XML and B2B capabilities

- IA provides partner creation and management

- IA provides XML validation, mapping and flat file conversion

- Provides tracking

Jon listed the Logic Apps enhancements coming soon for working with XML such as:

- XML parameters

- Code and functoids

- Enhancements soon

- Transform output format (XML, HTML, Text)

- BOM handling

Jon showed a very interesting demo of how to transform an XML message with C# and XSLT in Logic Apps. You will have to wait a little longer until the videos are made available on the INTEGRATE 2017 event website 🙂

Disaster Recovery with B2B, and how it works?

In the final section of his presentation, Jon discussed monitoring and tracking of Azure Logic Apps. This part was covered by Srinivasa Mahendrakar in one of his recent Integration Monday sessions.

Jon showed an early preview (mockup) of the OMS Dashboard for Azure Logic Apps that’s coming up soon. With this, you can perform Operational Monitoring for Logic Apps in OMS with a powerful query engine. You can expect this feature to be rolled out mid-July!

That completed the first set of sessions for the morning on Day 2 at INTEGRATE 2017.

Session 4 – Bringing Logic Apps into DevOps with Visual Studio and monitoring by Jeff Hollan/Kevin Lam

Once again, but unfortunately for the last time on stage, it was time for Sir Hollywood Jeff Hollan to rock the stage with his partner Kevin Lam to talk about bringing Logic Apps into DevOps with Visual Studio and monitoring.

The key highlights from the session include –

Visual Studio tooling to manage Logic Apps

- Hosted the Logic App Designer within Visual Studio

- Resource Group Project (same project that manages the ARM projects)

- Cloud Explorer integration

- XML/B2B artifacts

Make sure you have selected the “Cloud Explorer for Visual Studio 2015” and “Azure Logic Apps Tools for Visual Studio” tools in order to be able to use Logic Apps from Visual Studio. The tooling works with Visual Studio 2015 and 2017.

Kevin and Jeff gave a demo of the Visual Studio tooling with a real-time example of using Logic Apps in Visual Studio.

Azure Resource Templates

- You can create Azure Resource Templates that are deployed through Azure Resource Manager.

- Azure Resources can be represented and created via programmatic APIs that are available at http://resources.azure.com. This is a pivot to Azure where you are looking at the API version of your resources.

- Resource templates define a collection of resources to be created

- Templates include –

- Resources that you want to create

- Parameters that you want to pass during deployment (for example)

- Variables (specific calculated values)

- Outputs
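
As a rough illustration of those four sections, the skeleton of a resource template that creates an empty Logic App could look like the following, built here as a Python dictionary and serialized to JSON. The parameter name `logicAppName`, the variable name `location` and the output are hypothetical choices for this sketch, and a real template would carry an actual workflow definition with triggers and actions.

```python
import json

template = {
    "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    # Parameters: values passed in at deployment time (hypothetical name).
    "parameters": {"logicAppName": {"type": "string"}},
    # Variables: values calculated inside the template.
    "variables": {"location": "[resourceGroup().location]"},
    # Resources: what should be created, here a single empty Logic App.
    "resources": [
        {
            "type": "Microsoft.Logic/workflows",
            "apiVersion": "2016-06-01",
            "name": "[parameters('logicAppName')]",
            "location": "[variables('location')]",
            "properties": {
                "definition": {
                    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
                    "contentVersion": "1.0.0.0",
                    "triggers": {},
                    "actions": {},
                    "outputs": {},
                }
            },
        }
    ],
    # Outputs: values returned once the deployment has finished.
    "outputs": {
        "logicAppName": {"type": "string", "value": "[parameters('logicAppName')]"}
    },
}

template_json = json.dumps(template, indent=2)
```

The resulting JSON text is what would be stored in source control and handed to the deployment step.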

Service Principal

With a service principal, you authorize an application that you create and then grant that application access to the resources.

Jeff wrapped up the session with a detailed demo of how the deployment process works. The video, which will be available on the BizTalk360 website in a week’s time, covers the steps to perform a deployment in detail.

This wrapped up the 1.5 days of sessions from Microsoft on core integration technologies and on what is coming from them in the coming months. It was now time for the Integration MVPs to take the stage and show what they’ve done/achieved, or what they can do with the various offerings from Microsoft.

Session 5 – What’s there & what’s coming in BizTalk360 & ServiceBus360 by Saravana Kumar

Saravana was given a “warm” welcome with some nice music and loud applause from the audience! 🙂 Saravana thanked the entire Microsoft team for their presence and effort at INTEGRATE 2017 over the last 1.5 days.

Key Highlights from Saravana’s session

BizTalk360 Updates

- BizTalk Server License Calculator

- Folder Location Monitoring

- Queue Monitoring

- Email Templates

- Throttling Monitoring

- On-Premise + Cloud features

- Azure Logic Apps Management

- Azure Logic Apps Monitoring

- Azure Integration Account

- Azure Service Bus Queues (monitoring)

You can get started with a 14-day FREE TRIAL of BizTalk360 to realize the full blown capabilities of the product.

ServiceBus360

Saravana discussed the challenges with Azure Service Bus and how ServiceBus360 helps to solve the Operations, Monitoring and Analytics issues of Azure Service Bus.

ServiceBus360 pricing starts as low as $15. We wanted to go with a low cost, high volume model for ServiceBus360. You can also try the product for FREE. If you are an INTEGRATE 2017 attendee, we have a special offer for you that you cannot afford to miss.

With that it was time for the attendees to break for lunch on Day 2 at INTEGRATE 2017. Lots more in store over the remaining 1.5 days!

Post Lunch Sessions – Session 6 – Give your Bots connectivity, with Azure Logic Apps by Kent Weare

We’ll take you through a quick recap of the post lunch sessions on Day 2 at INTEGRATE 2017.

Kent Weare started off by talking about his company and how it is coping with the business transformation demands from the government and local bodies in Canada. Kent then showed how the company has grown over the years and how much that will mean to them in terms of the cost of business transformation. The approach they have taken is to move towards “Automating Insight, Artificial Intelligence, Machine Learning, and BOTS”.

Kent then showed why bots are gaining popularity these days – to improve productivity! Bots are very similar to instant messaging (IM), which users are already very familiar with.

Kent then stepped into his demo where the concept was as follows –

Kent wrapped up his session with the following summary for companies to take advantage of the latest technology in store these days.

Session 7 – Empowering the business using Logic Apps by Steef-Jan Wiggers

After Kent Weare, Steef-Jan Wiggers took over the stage to talk about Empowering the business using Logic Apps. This talk from Steef-Jan Wiggers was more from the end user/consumer perspective of using Logic Apps.

Steef took the business case of a company called “Cloud First” that wanted to move to the cloud (and chose Azure). His entire talk was focused on this company, which wanted to migrate to the cloud with minimal customization and a unified landscape. Steef also showed some sentiment around the developer experience with Logic Apps.

Steef showed a demo that calculates the sentiment of #Integrate2017 (something similar to what the folks at BizTalk360 tried and reproduced in the Day 1 Recap blog).

After the end of the demo, Steef talked about the Business Value of Logic Apps –

- Solving business problem first

- Fit for purpose for cloud integration

- Less cost; Faster time to market

Session 8 – Logic App continuous integration and deployment with Visual Studio Team Services

After Steef, Johan Hedberg took the stage to talk about Logic App continuous integration and deployment with Visual Studio Team Services. Johan set the stage for the session by giving an example –

- Pete is a web developer who loves the Azure Portal and has an amazing time to market. Generally, he is fast but has no process.

- Charlotte loves Visual Studio. She wants to manage the Logic App from Visual Studio with source control.

- Bruce is an operations guy. He does not like Pete and Charlotte having direct access to production. He likes to have a process for everything and wants to approve things before they go out.

Therefore, what all 3 of them are missing is a common process/pipeline that covers things such as –

- Development standards

- Process standards

- Security standards

- Deployment standards

- Team communication and culture, and more

Therefore, in this session (and demo), Johan showed how users can set up continuous integration and deployment for Logic Apps with Visual Studio Team Services.

Sessions 9 & 10 – Internet of Things

In the last two sessions of Day 2 at INTEGRATE 2017, Sam Vanhoutte and Mikael Hakansson talked about the Internet of Things (IoT).

Sam Vanhoutte talked about why integration people are forced to build good IoT solutions. He showed the IoT End-to-End value chain with a nice diagrammatic representation.

Then Sam talked about the different points in the Industrial IoT Connectivity challenge. The points are –

- Direct connectivity (feels less secure)

- Cloud gateways (easier to start in a cloud setup)

- Field gateways (feels more secure)

Sam spoke about Azure IoT Edge, the required hardware for Azure IoT Edge and more about flexible business rules for IoT solutions.

Mikael Hakansson picked up his IoT talk where Sam Vanhoutte left off, but then came the fun part of the session. Sandro Pereira stopped Mikael from delivering his presentation and made him wear a green shirt for losing a bet on a football match (well, not sure if Mikael was a part of that bet at all, and his friends unanimously agreed he lost the bet 🙂). So did Steef-Jan Wiggers, and he was wearing a green shirt too!

Mikael started off his talk with “IoT === Integration” and introduced the concept of Microsoft Azure IoT Hub in detail.

- Stand-alone service or as one of the services used in the new Azure IoT Suite

- With Azure IoT Hub, you can connect your devices to Azure:

- Millions of simultaneously connected devices

- Per-device authentication

- High throughput data ingestion

- Variety of communication patterns

- Reliable command and control

Mikael gave a very cool demo on IoT with Azure Functions, coding on stage in his usual calm way. We recommend that you watch the video to see the effort that went into preparing the demo and into actually coding while presenting the session.

End of the Sessions

At the end of the session, it was curtains down on another spectacular day of sessions at INTEGRATE 2017. The team gathered for a lovely photo shoot, courtesy of photographer Tariq Sheikh.

With that we would like to wrap up our exhaustive coverage of Day 2 proceedings at INTEGRATE 2017. Stay tuned for the updates from Day 3. Until then, good night from London!

ICYMI: Recap of Day 1 at INTEGRATE 2017

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as a documentation specialist for different products and domains. Writing is his passion and he believes in the following quote – “As wings are for an aircraft, a technical document is for a product – be it a product document, user guide, or release notes”.

by Lex Hegt | Jun 27, 2017 | BizTalk Community Blogs via Syndication

During the second day of Integrate 2017, Jeff and Kevin did a session on DevOps for Logic Apps. They explained the available tooling in Visual Studio for that purpose:

- Hosted Logic App designer

- Cloud Explorer

- Resource Group Projects

Hosted Logic App designer – First of all, it has been possible for some time to create your Logic Apps from within Visual Studio. This enables you, for example, to use Team Foundation Server or Visual Studio Team Services to check in your Logic Apps code.

Cloud Explorer – Another feature in Visual Studio is the Cloud Explorer which, besides browse and search capabilities for your Logic Apps, offers the following features:

- Edits done in the designer can be published to the Azure portal

- Enable, disable and delete Logic Apps

- Run Logic Apps and view the Run History

- Download a Logic App, which is handy when development started in the portal

After downloading the Logic App, you can add it as an existing item to a Resource Group project, which we will discuss now.

Resource Group projects – Visual Studio comes with the Resource Group project type, which allows you to persist artefacts and configuration for your deployments. Amongst the features are: