by Jeroen | Feb 22, 2017 | BizTalk Community Blogs via Syndication

After rolling out BizTalk 2013R2 CU5 on a development server we experienced the following error when loading the group hub:

“Could not find stored procedure ‘ops_ClearOperationsProgress’”

It looked like something went wrong during the installation of the cumulative update. After investigating the log file, the following SQL error showed up:

To conclude: the installation failed because of insufficient SQL access rights.

(Too bad the installer didn’t check this requirement before starting the installation…)

In the end we were able to fix the issue by taking the following steps:

- Providing the SQL sysadmin role

- Uninstalling the CU5 update

- Restoring the BizTalkMsgBoxDb database to its state prior to the update

- Installing the CU5 update again
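A pre-flight check along the following lines might have caught the missing permission before the installer ran. This is only a sketch: it assumes a DB-API style cursor connected to the SQL Server instance that hosts the BizTalk databases (obtaining one, for example through a driver such as pyodbc, is not shown), and the function name `has_sysadmin` is made up for illustration. `IS_SRVROLEMEMBER` is the built-in T-SQL function that reports server role membership for the current login.

```python
def has_sysadmin(cursor) -> bool:
    """Return True if the current login is a member of the sysadmin server role.

    `cursor` is assumed to be a DB-API 2.0 cursor connected to the SQL Server
    instance that hosts the BizTalk databases (an assumption of this sketch).
    """
    # IS_SRVROLEMEMBER returns 1 (member), 0 (not a member) or NULL (unknown role).
    cursor.execute("SELECT IS_SRVROLEMEMBER('sysadmin')")
    row = cursor.fetchone()
    return row is not None and row[0] == 1
```

Running a check like this with the account that will perform the installation, before starting the update, would turn a half-applied CU into a simple "fix permissions first" message.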

My key takeaways regarding BizTalk updates:

- Always test BizTalk updates before rolling them out!

- Create a backup of your BizTalk databases before installing any update – even for your development environments!

- Read the installer log file!

by Sandro Pereira | Feb 21, 2017 | BizTalk Community Blogs via Syndication

“The risk of changing the name of something people have grown to know and love is too big. For others, the risk of being boxed into something they no longer feel much affinity for is even bigger”

For the last 6 or 7 years, I had my blog hosted on WordPress.com, which is an amazing (for me the best) platform that served my purposes well. However, like everything that is free, it has its limitations… and the two main limitations for me were:

- Design Customization: The customizations that you are able to do in a free WordPress blog are very limited, as is the list of available themes. It was impossible to make the layout and look of my blog exactly how I see it in my head.

- Advertising: The fake, annoying ads that appeared in my blog posts, which I could not control, were annoying both to me and to my readers. The reason here was not to have the option to monetize my blog (which, to be honest, also always helps a little) but especially to get rid of these fake ads.

I have to say, it was an old dream of mine, and some of my friends had already told me that I should redesign my blog and asked me why I didn’t do it…. My response was always the same: I don’t really have time to do it, my web design skills are not the best these days and I don’t really want to spend extra money… but the dream was there that one day I would find a way to do it…

… Well, I’m glad to say that this day has come! Welcome to my “new” blog!

At first glimpse, it looks the same as the old one with a new modern layout/skin. In fact, this blog is a continuation of my former blog hosted on WordPress.com, but this one is actually hosted on my own site so, now, I can do lots of new things with it.

The old blog is redirecting to this new blog and all the old content is here, so you should expect the same content but I hope with better quality and a better user experience.

Nonetheless, this dream would not have come true without the help of my dear friend Saravana Kumar and his amazing team behind BizTalk360 … yes, my blog sponsor!

While I was discussing my dream with him, he not only made himself available to sponsor my blog… but he also said… you do not have to do anything, I have an excellent and very experienced team that is able to do everything necessary to migrate your blog, including redesigning it according to your specifications… and they did it!

After several weeks of work behind the scenes, the BizTalk360 team did an amazing and extraordinary job that I now present to you… During this time, I just had to relax, and only in the last week did I step in and review, one by one, a modest amount of 543 posts.

So, thank you Saravana and BizTalk360 for collaborating with me, for supporting me and for making this dream a reality!

But do not be fooled … This is still my personal blog. I now have a sponsor, but I will continue to be independent and to speak about any topic or tool in an impartial way.

However, the surprises don’t end here … I have some amazing free gifts provided by my sponsor that I will be giving away within a few days: A Free Production Bronze License of BizTalk360 and some Free ServiceBus360 namespaces.

Stay tuned for more details about these gifts!

Once again, THANKS BizTalk360 team for this amazing work and I hope that my readers enjoy this new and more professional layout and that they consider it more appealing with better quality and better user experience.

Author: Sandro Pereira

Sandro Pereira lives in Portugal and works as a consultant at DevScope. In the past years, he has been working on implementing Integration scenarios both on-premises and cloud for various clients, each with different scenarios from a technical point of view, size, and criticality, using Microsoft Azure, Microsoft BizTalk Server and different technologies like AS2, EDI, RosettaNet, SAP, TIBCO etc. He is a regular blogger, international speaker, and technical reviewer of several BizTalk books all focused on Integration. He is also the author of the book “BizTalk Mapping Patterns & Best Practices”. He has been awarded MVP since 2011 for his contributions to the integration community.

by Praveena Jayanarayanan | Feb 20, 2017 | BizTalk Community Blogs via Syndication

The BizTalk360 Support Process

As the product support team, we are customer-facing people. Support forms the backbone of every product developed. At BizTalk360 we follow the mantra of “Exceptional Customer Support”, and this is made possible through our support process.

In our flagship product, BizTalk360, the all-in-one solution for Operating, Monitoring and Analyzing Microsoft BizTalk environments, customer support plays a major role. BizTalk360 support started with traditional channels like emails and calls. Soon our CTO, Saravana Kumar, realized that we should have a proper channel for communicating and recording the issues. This became possible with Freshdesk. That is where the support process starts: a complete rule management system.

Customers raise issues and we track these through the assist portal with Freshdesk. What if an issue is really a bug? Then we have to provide a fix. This is where the Jira software comes in, for internal tracking and for coordinating with the internal technical team. Sometimes we need to check for issues at the customer end. Then we use GoToMeeting for a screen sharing session with the customer. This is the complete pack of the BizTalk360 support process.

The story of BizTalk360 support with Freshdesk

The motto is “Customer happiness, refreshingly easy”, and BizTalk360 support aims for the same. The dashboard is the most significant feature: it gives a clear picture of all the open, overdue and unresolved tickets. We can see our conversations with the customer by clicking on each ticket. This channel has improved the support process.

As a Product support team, we make sure there are no long pending tickets. We have included the customer survey which will rate our support to the customer. The portal takes care of rule management so that the tickets get assigned to the right people. Whenever a customer raises an issue, support team members get a notification email. The ticket gets assigned to the person who will take the responsibility of resolving the issue.

The number of conversations with the customer also matters. We cannot let an issue drag on for a long time, unless the customer is busy and slow to respond. There is an “Add private note” section through which the internal team members are notified about the discussion on the issue raised, in case any technical assistance is required.

We have an escalation process in our support to make sure the customer’s issue is taken care of and resolved in time. There are different levels of support team, namely L1, L2, L3 and L4, in ascending order. When a customer raises a ticket, it gets assigned to the L1 team. This team does the analysis and provides the solution to the customer. If we need any help in resolving the ticket, we escalate it to the L2 technical team, adding our observations and details in the private notes section. If it cannot be resolved by L2, then it’s moved to the L3 team and so on. The L1 team follows up on the escalated tickets and makes sure that they are responded to in time and closed after getting resolved.

Interested to have a look at the BizTalk360 Support Portal?

Leader-board Achievements

The activities of the support team can be monitored through the dashboard of the BizTalk360 Freshdesk. It says who has done what. The rating in the Leaderboard is based on the number of conversations that we handle to resolve the issue and close the ticket. This helps to improve the agent productivity. The different trophies based on the different criteria include:

- Most Valuable Player: Agent with the most overall points for the current month.

- Customer “Wow” Champion: Agent with the maximum Customer Satisfaction points for the current month.

- Sharpshooter: Agent with the highest First Call Resolution (Ticket was solved with only one interaction between agent and customer) points for the current month.

- Speed Racer: Agent with the maximum points for Fast Resolution (Ticket was solved in under an hour) for the current month.

The Leaderboard is reset on the first of every month so everyone can start over with a clean slate.

Recently we added a survey rating for our support. When the survey rating started, my teammate Sivaramakrishnan was leading in all four categories. Our team is really proud of him. Having been with the team for two whole years, he has gained very good knowledge of the functionality of the product.

Behind every brilliant performance there were countless hours of practice and preparation.

– Eric Butterworth

This motivation is required for each and every team member to achieve high customer satisfaction with clear and appropriate resolutions to the issues raised. Of course, we do have some lags when the issue raised by the customer is a rare scenario which we might not have seen before. Then, unfortunately, there can be a large number of interactions, which may frustrate the customer. The customer’s experience or feelings will help to improve business, especially in the areas of Sales and Marketing. Positive experiences get passed along, and even a bad experience turns into a lesson for improvement for our BizTalk360 Support team. For quick resolution we also set up web meetings through GoToMeeting, where we can have a screen sharing session with customers.

The SLAs for the tickets are based on the priority of the tickets raised. For example, for a low priority ticket, the due date is three days. We see to it that we resolve the tickets on time for better customer satisfaction.

The status of a ticket is categorized as Open, Pending, Bug, New Feature Request etc., based on the issue raised and the conversations handled. When we are waiting for a reply from the customer, we set the status to “Waiting on Customer”. The status of the ticket is changed to Closed once the issue is resolved. This is called “refreshingly easy”, where every act refreshes the status of the issue raised. For a New Feature Request, we recommend that the customer add it to our user voice in the feedback portal, where we collect new requirements or enhancements to existing features and take them up for development based on the number of votes received.

The Jira software

When a customer raises an issue and it’s found to be a bug, we track it in our internal tracking system, Jira. This issue tracking software is used by the development and technical support teams to get more work done, and to get it done faster. When we confirm that an issue is a bug, we create a Jira ticket, assign it to the development team and tag the ticket as “bug” in the Assist portal. The development team will fix the issue and add the fix to the upcoming release. We can define the workflow for this. Jira is updated whenever the issue is fixed. We then inform the customer about the fix and close the ticket once the customer has confirmed it.

The GoToMeeting

What if we are not able to reproduce the issue raised by the customer, or we need to know their environment setup? Instead of dragging on through too many conversations, it is better to contact the customer directly. But how? This is where GoToMeeting comes in: online meeting, desktop sharing, and video conferencing software that enables us to meet customers via the internet in real time. This way, the customer is able to show us their issue, and we can either resolve it directly or get the appropriate details regarding the issue.

Before arranging for the meeting, we will confirm the convenient time of the customer and then send the invite. This helps the customer to prepare their environment and even helps us to collect the necessary details. Some customers feel happy when we ask for the call because they feel that it is the faster way to resolve the issue. But some may feel that it’s a waste of time, if we don’t provide a solution or if we ask for some other information.

We get a chance to interact with different types of customers in the call. Some of them patiently listen to us, explain their problem and provide the required details. Others become impatient when we ask them for details. Either way, we make sure that the issue gets resolved as early as possible so that the customer’s confidence is not shaken.

Conclusion

With the different tracking systems along with the Support Assist portal, we are providing exceptional customer support. This can be seen from the customer survey ratings, where we have been rated “Awesome”, making the agents “Customer Wow Champions”. We continue our teamwork in achieving “Maximum Customer Satisfaction”.

Author: Praveena Jayanarayanan

I am working as Senior Support Engineer at BizTalk360. I always believe in team work leading to success because “We all cannot do everything or solve every issue. ‘It’s impossible’. However, if we each simply do our part, make our own contribution, regardless of how small we may think it is…. together it adds up and great things get accomplished.”

by Gautam | Feb 19, 2017 | BizTalk Community Blogs via Syndication

Do you find it difficult to keep up to date on all the frequent updates and announcements in the Microsoft Integration platform?

The Integration weekly update can be your solution. It’s a weekly update on topics related to Integration – enterprise integration, robust & scalable messaging capabilities and Citizen Integration capabilities empowered by the Microsoft platform to deliver value to the business.

If you want to receive these updates weekly, then don’t forget to Subscribe!

On-Premise Integration:

Cloud and Hybrid Integration:

Feedback

I hope this is helpful. Please feel free to let me know your feedback on the Integration weekly series.

by Sriram Hariharan | Feb 17, 2017 | BizTalk Community Blogs via Syndication

The Azure Logic Apps team conducted their monthly webcast earlier this morning – well, not from the same room/venue they normally do! This time it came all the way from Down Under on a bright, sunny Friday morning at Gold Coast, Australia. The entire Microsoft Pro Integration team is at Microsoft Ignite, Australia (#MSAUIgnite) and they have been having a great time talking about Logic Apps, BizTalk, API Management, Azure Service Bus and more.

Before I get into the updates from the team, a huge shout out to Jeff Hollan, Kevin Lam, Jon Fancey, and of course Jim Harrer for bringing in this session live from Australia and trying out a new outdoor experiment of the remote webcast. Well done!

Now on to the updates from the session –

What’s New?

- Header name-values control – Instead of having the JSON block, you can have the name-value pair in the HTTP control. However, if you want to still have the JSON block, you can get it done through the Logic Apps designer window.

- Updates to Portal Management Blade

- Clean ups to Resource blade – Users can now search for a particular run id and jump in directly to that particular run

- You can filter directly on the status (Flow History filter)

- Open a run directly

- Relative URL with custom methods – Say, you have a request trigger and you want to change the URL of the request trigger, this is now possible in the designer. The root name however remains the same but you still can add extensions to it. You can now create a Logic App with a GET and pass the parameters as a query string in the URL which gets passed as a token inside the Logic App.

- Edit Azure Function from Logic App – If you have a Function, you will now see a one-click link from which you can jump directly to your function in the Functions blade, edit it, and come back to the designer. You no longer need to search for your functions in order to edit them.

- Generate JSON schema from sample payload – You can put schemas in two different places – request trigger and parse JSON action. You no longer need to go to a different website to generate the JSON Schema, instead there is a button readily available with which you can generate a JSON Schema with some sample payload. You can later edit the schema as per your requirements. This is definitely a time saving option for developers.
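To make the "generate JSON schema from sample payload" idea concrete, here is a minimal sketch of how a schema can be inferred from one sample document. It is not how the Logic Apps designer implements the button; the function name `infer_schema` and the sample payload are invented for illustration, and the output covers only the basic `type`, `properties` and `items` keywords.

```python
import json


def infer_schema(value):
    """Infer a minimal JSON Schema fragment from a sample JSON value."""
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(val) for key, val in value.items()},
        }
    if isinstance(value, list):
        schema = {"type": "array"}
        if value:
            # Assume all items look like the first one (a simplification).
            schema["items"] = infer_schema(value[0])
        return schema
    if isinstance(value, bool):  # checked before int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if value is None:
        return {"type": "null"}
    return {"type": "string"}


# Hypothetical sample payload, as it might be pasted into a request trigger.
sample = json.loads('{"orderId": 42, "items": [{"sku": "A1", "qty": 2}], "rush": false}')
schema = infer_schema(sample)
print(json.dumps(schema, indent=2))
```

As with the designer feature, a schema generated this way is a starting point that you would still edit by hand, for example to mark properties as required.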

New SaaS Connectors

- Azure Automation – You can now trigger a Runbook directly from your Logic Apps. The action to run a Runbook will wait for the Runbook to complete and you can get the output from the Runbook. You can achieve a lot of Dev-Ops scenarios with this brand new Azure Automation connector.

- Azure Data Lake – Dump your data into the lake easily (LOL!!). Well, that’s exactly what this connector helps developers, data scientists and analysts to do. Store data of any size, shape and speed easily in the Azure Data Lake using the new Azure Data Lake connector.

- LUIS – You can now do BOT related stuff with this connector

- Bing new search

- Basecamp 2

- Infusionsoft

- Pipedrive

- Eventbrite

- Bitbucket

New Operations

SQL on-prem stored procs – You can now get the stored procs from SQL Azure on to your on-premise installation

Improvements to Logic Apps Designer

Jeff showed a demo on the recent improvements to the Logic Apps Designer. We’ll list out some of the cool things that have been added in the last few weeks –

- Improved Logic Apps Designer (Templates) screen – The templates screen definitely sports a new look with a very nice video by Jeff Hollan that shows how you can get started with a Logic App in less than 5 minutes. Yes, Jeff! That deserves an Oscar (wink)! You’ve got to watch the Logic Apps Live webcast video and jump to 7:07 to understand what I mean here.

- Start with a common trigger – You may not want to start from a blank template all the time. So, a new section called “Start with a common trigger” has been introduced (as suggested by a fellow Program Manager at Microsoft Pro Integration Team).

- When creating a new Request/Response Trigger, you can now choose from a list of advanced options – the Method of the request trigger (GET, PUT, POST, PATCH, DELETE) and enter a Relative Path (e.g., /foo/{id}).

Similarly when adding an action for this trigger, the designer is now able to identify that an ‘id’ will actually be passed as a parameter with the URL, in addition to the body, header, path parameters, queries.
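The relative path idea can be illustrated with a small sketch that turns a template such as `/foo/{id}` into a pattern and extracts the parameter from an incoming path. This only mimics the concept; it is not the Logic Apps runtime, and the helper names `compile_relative_path` and `match_path` are made up for this example.

```python
import re


def compile_relative_path(template: str) -> "re.Pattern[str]":
    """Turn a template such as '/foo/{id}' into a regex with named groups."""
    # re.split with one capture group alternates literal text and parameter names.
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pattern += re.escape(part)         # literal path text
        else:
            pattern += f"(?P<{part}>[^/]+)"    # one path segment per parameter
    return re.compile(f"^{pattern}$")


def match_path(template: str, path: str):
    """Return the extracted parameters as a dict, or None if the path does not match."""
    match = compile_relative_path(template).match(path)
    return match.groupdict() if match else None
```

For example, `match_path("/foo/{id}", "/foo/123")` yields a dictionary containing the `id` value, which corresponds to the token the designer offers to later actions.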

What’s in Progress in Azure Logic Apps?

Here’s a look at what more is expected from the Azure Logic Apps team in the coming months –

- Variables – Once implemented, you will be able to declare variables and use them within the Logic App

- Exposing Retries – You will be able to see the action retries, errors & successes and what the results actually were

- Expression evaluation in Run History– Break down of the evaluation of each workflow definition language functions in the Run History so that you can see what/how it actually evaluated

- Convert array as table or CSV action – This is something similar to a daily/weekly digest. For example, you may want to convert a set of tweets into an HTML table and send it as an email.

- Service Bus namespace picker – If you are using the Service Bus connector, the connector will list all the Service Bus namespaces. You can choose the policy that you want and you can easily establish a connection with Service Bus.

- Partner and EDIFACT tiles in OMS

- A new region has been added – Canada!! (Well, looks like Jeff, Kevin, and Jon love Canada a lot!!!)

- Connectors (in the pipeline)

- Oracle DB

- Oracle EBS

- SQL Trigger

- Blob trigger

- Zendesk

- Act!

- Intercom

- FreshBooks

- Lean Kit

- WebMerge

- Inoreader

- Pivotal Tracker

- Paylocity
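The "convert array as table or CSV" item in the list above is easy to picture with a short sketch. The code below is only an illustration of the idea in plain Python, not the upcoming Logic Apps action; the function names and the shape of the input (a list of flat dictionaries with identical keys) are assumptions.

```python
import csv
import html
import io


def rows_to_html_table(rows):
    """Render a list of flat dicts as an HTML table; columns come from the first row."""
    if not rows:
        return "<table></table>"
    columns = list(rows[0].keys())
    head = "".join(f"<th>{html.escape(str(col))}</th>" for col in columns)
    body = "".join(
        "<tr>"
        + "".join(f"<td>{html.escape(str(row.get(col, '')))}</td>" for col in columns)
        + "</tr>"
        for row in rows
    )
    return f"<table><tr>{head}</tr>{body}</table>"


def rows_to_csv(rows):
    """Render the same list of dicts as CSV text with a header line."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

The HTML variant escapes cell values so that text such as a tweet containing `<` does not break the generated email body.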

This was a Special Session of Firsts. Why??

At the halfway mark, there was yet another surprise on the Logic Apps webcast. This one really was a session of firsts!! Jeff, Kevin and Jon had a special guest – Daniel from Perth, Australia, who is one of the early adopters of Logic Apps. Daniel gave a brief idea about the project that he has been working on with Logic Apps. In fact, Daniel’s integration solution spans almost 40 different workflows, and Daniel talked about the best practices for managing such a complex solution. You can watch the webcast between 13:20 and 32:40 to understand more about Daniel’s implementations and the technologies they are using.

Oh, and did I miss one more first? Jeff’s laptop overheated during the course of the webinar (blazing sun on a sunny day), and I feel sorry for the guys, who had to record the continuation with Daniel at a later time.

Performance Improvements

- Service Bus Performance

- Triggers normally use long polling intervals, and a minimum polling interval is about 30 seconds. The Pro Integration team have now added batching to the trigger and the trigger can receive up to 175 messages/trigger.

- The trigger limits have been increased to 3000/minute from the existing 2000/minute (and possibly even up to 6000/minute, as testing is underway)

- Auto complete actions can now handle about 300 messages/second, and this will increase further with more connections and Logic Apps

- Limitless

- Larger messages

- Higher throughput

- Increased limits on foreach and other logical constructs
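The batching mentioned under Service Bus Performance above can be pictured as grouping pending messages into chunks of at most 175 per trigger firing. The sketch below shows only that grouping idea; it is not the connector's implementation, and `batches` and `MAX_BATCH` are names invented here.

```python
from itertools import islice

MAX_BATCH = 175  # per-trigger batch size quoted in the webcast notes above


def batches(messages, size=MAX_BATCH):
    """Yield successive lists of at most `size` messages from any iterable."""
    iterator = iter(messages)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk
```

With 400 pending messages, for instance, this produces three batches, so three trigger firings instead of 400.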

Community Content

Towards the end of the session, the team pointed out the upcoming Global Integration Bootcamp event happening on March 25, 2017 around the world. As of today, the event is happening in 11 different locations – Australia, UK, USA, Belgium, Finland, Norway, The Netherlands, New Zealand, Portugal, India, and Sweden.

Did You Know? BizTalk360 is proud to organize the Global Integration Bootcamp in 2 out of the 11 locations – UK and India.

You can sign up for these events by clicking the following links:

UK – Register Today

India – Register Today

If you are working on logic apps and have something interesting, feel free to share them with the Azure Logic Apps team via email or you can tweet to them at @logicappsio. You can also vote for features that you feel are important and that you’d like to see in logic apps here.

The Logic Apps team are currently running a survey to know how the product/features are useful for you as a user. The team would like to understand your experiences with the product. You can take the survey here.

If you ever wanted to get in touch with the Azure Logic Apps team, here’s how you do it!

You can watch the full video here to get a feel of the new features that were discussed during the webcast.


Previous Updates

In case you missed the earlier updates from the Logic Apps team, take a look at our recap blogs here –

Author: Sriram Hariharan

Sriram Hariharan is the Senior Technical and Content Writer at BizTalk360. He has over 9 years of experience working as documentation specialist for different products and domains. Writing is his passion and he believes in the following quote – “As wings are for an aircraft, a technical document is for a product – be it a product document, user guide, or release notes”.

by Saravana Kumar | Feb 15, 2017 | BizTalk Community Blogs via Syndication

We are very happy to announce the immediate availability of BizTalk360 version 8.3. This is our 48th product release since May 2011. In the version 8.3 release, we focused on bringing the following 6 features –

- Azure Logic Apps Management

- Webhooks Notification Channel

- ESB Portal Dashboard

- EDI Reporting Manager

- EDI Reporting Dashboard

- EDI Functional Acknowledgment Viewer

We have put together a release page showing more details about each feature, links to blog articles and screen shots. You can take a look here : https://www.biztalk360.com/biztalk360-version-8-3-features/

Public Webinars

We are conducting two public webinars on 28th Feb to go through all the features in version 8.3. You can register via the links below.

BizTalk360 version 8.3 Public Webinar (28th Feb – Europe)

https://attendee.gotowebinar.com/register/2736095484018319363

BizTalk360 version 8.3 Public Webinar (28th Feb – US)

https://attendee.gotowebinar.com/register/1090766493005002755

You can read a quick summary of each feature below.

Azure Logic Apps Operations

You can now manage your Azure Logic Apps right inside BizTalk360 user interface. This eliminates the need to log in to the Azure Portal to perform basic operations on the Logic Apps. Users can perform operations such as Enable, Disable, Run Trigger and Delete on the Logic App. In addition to performing the basic operations, users can view the trigger history and run history information in a nice graphical and grid view. We are making further investments in Azure Logic Apps management and monitoring, to give customers a single management console for both BizTalk and the Cloud. Read More.

Webhook Notification Channel

The new webhook notification channel allows you to send structured alert notifications (JSON format) to any REST endpoint whenever there is a monitoring violation. This opens up interesting scenarios like calling an Azure Logic App and doing further processing. Read More.
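On the receiving side, a REST endpoint mostly needs to decode the JSON body and decide what to do with it. The sketch below shows that step only. The field names `alarmName` and `status` are hypothetical placeholders, not the documented BizTalk360 payload schema, and `parse_alert` is a name made up for this example.

```python
import json


def parse_alert(body: bytes) -> dict:
    """Decode a webhook body and pull out the fields this sketch cares about."""
    payload = json.loads(body.decode("utf-8"))
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    # 'alarmName' and 'status' are hypothetical field names used for illustration;
    # check the actual notification payload before relying on them.
    return {
        "alarm": payload.get("alarmName", "<unknown>"),
        "status": payload.get("status", "<unknown>"),
        "raw": payload,
    }
```

Keeping the raw payload alongside the extracted fields makes it easy to forward the full notification to a later processing step.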

ESB Dashboard

The new ESB dashboard allows you to aggregate different ESB reports into a single graphical dashboard that will help business users and support people to visualize the data in a better way. BizTalk360 has 13 different widgets that will help users to understand the ESB integrations better and help to analyze data to improve the performance. The widgets are categorized as –

- Fault code widgets (based on application, service or error type)

- Fault code over time widgets

- Itinerary Widgets

Read More

EDI Reporting Manager

The EDI reporting manager allows you to turn reporting on or off for each agreement in a single click. Performing this task in the BizTalk Admin Console is tedious, especially when there are many parties and agreements. Similarly, administrators can perform bulk enable/disable operations on the NRR configuration. Administrators can add the Host Partner information required to configure the host party for NRR configurations.

EDI Reporting Dashboard

In version 8.3, out of the box, we are providing a rich EDI dashboard to aggregate different EDI transactions that help business users and support persons to visualize the data in a better way. You can also create your own EDI Reporting dashboard with three different widget categories we ship –

- EDI Interchange Aggregation widgets

- EDI Transaction Set aggregation widgets

- AS2 messaging aggregation reports

Read More

BizTalk360 has a few aggregations (as widgets) that are not available in BizTalk Admin Console reports such as Interchange Count by Agreement Name (Top 10), Interchange count by Partner Name (Top 10), Transaction count by ACK Status (Filtered by partner id), AS2 messaging aggregation reports.

EDI Functional Acknowledgement

In a standard B2B scenario, whenever a message (with multiple functional groups) is sent from source to destination, it is quite obvious that the source will expect a technical acknowledgment (TA1) and functional acknowledgment (997) for each functional group. What if the technical acknowledgment is received but not the functional acknowledgment? Taking a look at the status of functional acknowledgment is not an easy job with BizTalk Admin Console. With BizTalk360, administrators can easily view the status of functional acknowledgments within the grid and also set up data monitoring alerts whenever negative functional acknowledgments are received. Read More
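The reconciliation described here boils down to comparing the functional groups that were sent with the 997 acknowledgments that came back. The sketch below illustrates that comparison under simplifying assumptions: groups are identified by their control numbers, each acknowledgment carries a single acknowledgment code, and codes `A` (accepted) and `E` (accepted with errors) count as positive while anything else counts as negative. The function name and the data shapes are invented for illustration and do not reflect how BizTalk360 stores this data.

```python
def reconcile_acks(sent_groups, acks):
    """Classify sent functional groups by the acknowledgment received for each.

    sent_groups: iterable of group control numbers that were sent.
    acks: dict mapping group control number -> acknowledgment code from the 997.
    """
    report = {"accepted": [], "negative": [], "missing": []}
    for group in sent_groups:
        code = acks.get(group)
        if code is None:
            report["missing"].append(group)    # no 997 received for this group yet
        elif code in ("A", "E"):               # accepted / accepted with errors
            report["accepted"].append(group)
        else:
            report["negative"].append(group)   # rejected or otherwise negative
    return report
```

The `missing` and `negative` lists are the ones an administrator would want an alert for.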

Version 8.3 Launch page

https://www.biztalk360.com/biztalk360-version-8-3-features/

Version 8.3 Release Notes

http://assist.biztalk360.com/support/solutions/articles/1000244514

Author: Saravana Kumar

Saravana Kumar is the Founder and CTO of BizTalk360, an enterprise software that acts as an all-in-one solution for better administration, operation, support and monitoring of Microsoft BizTalk Server environments.

by Steef-Jan Wiggers | Feb 14, 2017 | BizTalk Community Blogs via Syndication

BizTalk Server is able to communicate with the outside world by leveraging the Azure Service Bus Relay, Queues and/or Topics/Subscriptions. Queues and Topics/Subscriptions provide indirect, one-way (brokered) messaging, by listening to a Service Bus queue or to a specific subscription in a topic. Through the relay, you can have direct two-way messaging. This capability can help in scenarios where you do not want to expose your ERP system, such as SAP, directly to a cloud-connected system. You might also have invested in a BizTalk solution and would like easy access to the cloud without changing your systems. The other benefit of using the relay is that you do not have to move your BizTalk installation into another zone like the DMZ to be closer to the outside world.

Other means of access to BizTalk can be indirect through the Logic App adapter (new with BizTalk Server 2016) or directly by exposing a BizTalk endpoint (WCF-BasicHttp, WsHttp or WebHttp) in IIS, accessible through reverse proxy. The latter is also possible using the BizTalk WCF Service Publishing Wizard that will extend the reach of the service to the Service Bus.

In this blog post, we’ll discuss securing a BizTalk endpoint that uses the BasicHttpRelay or NetTcpRelay, i.e. a BizTalk endpoint configured with these WCF bindings. Extending an endpoint using the WCF Service Publishing Wizard doesn’t add extra capability, only more complexity: the endpoint is exposed internally through the chosen binding, while for the Service Bus one of the relay bindings has to be selected (see picture below).

To be able to have an endpoint exposed to Azure Service Bus, you will need a namespace in your subscription. Setting up a Service Bus Namespace is straightforward: in the Azure Marketplace, select Service Bus and click Create. Specify a name (this is the namespace), choose a suitable price plan and specify the Resource Group and Location (regional data center).

In the picture above, you can clearly see what each price plan offers in terms of capacity, features and message size. This Azure service and others are very transparent in their costs and capability by vividly showing the options in pricing tiers.

Scenario

Our scenario to explain how to secure a relay configured BizTalk endpoint will be as follows:

- A receive location will be configured with a given address and security details from the Service Bus Namespace

- The endpoint will be hosted through BizTalk and registered in the Service Bus Namespace EnterpriseA

- API Management Instance will have an API that will connect through one of its operations to the relay endpoint

- A client can call the MyAPI order operation

The Service Bus Namespace credentials are abstracted away in the API management and not known to the MyAPI user. The MyAPI user will have to provide an API Key to be able to call the operation Order. The MyAPI can be further secured by applying OAuth 2.0 or OpenID, which we will not discuss in this blog.

Setting up BizTalk Endpoint with Relay binding

BizTalk Server supports the Azure Service Bus through the SB-messaging, WCF-BasicHttpRelay and NetTcpRelay adapters. The relay, as mentioned earlier, supports two-way messaging, and that is the adapter we will use to build our scenario. The configuration of the relay in BizTalk is straightforward. You create a Receive Port, add a Receive Location, select the appropriate binding, i.e. BasicHttpRelay or NetTcpRelay, and configure it.

The key aspects of the configuration are the address and the security settings. The address consists of your Service Bus namespace followed by the standard DNS suffix servicebus.windows.net, with the name of your endpoint at the end. Under security, we specify the security mode, the client security (authentication type) and the connection information, i.e. the SAS key, which can be obtained through the Shared Access Policies tied to our Service Bus namespace.

Once we have configured our adapter and enabled the Receive Location, the endpoint is registered in the Service Bus namespace.

The endpoint is available, however, not visible or discoverable through the Azure Portal. To see if the endpoint is up, we use ServiceBus360 or we could use the Service Bus Explorer.

Provisioning API Management

API Management is an Azure service that can be provisioned through the Azure Portal. The service provides an API gateway, i.e. you can publish APIs to external and internal consumers. You can also provide a gateway to backend services hosted in your data center, like a BizTalk endpoint (hosted in a Receive Location, or actually the Host Instance). The features API Management provides are:

- API documentation and an interactive console (developer portal)

- Throttle, rate limit and quota of APIs

- Monitor API health

- Support modern formats like JSON and REST to existing APIs

- Connect to APIs hosted anywhere on the Internet or on-premises and publish globally

- Gain analytic insights of APIs that are being used

- Management of your service (API Management instance) via the Azure portal, REST API, PowerShell, or Git repository

In this blog post, I will demonstrate some of the features mentioned above in relation to securing BizTalk endpoints.

To create an API Management Service, you can select API Management in the Azure Portal and click Create. Specify a name i.e. that’s the name of your API Management instance, choose a suitable pricing tier, specify the Resource Group, Organization Name, Administrator Email and Location (regional data center).

Again, in the picture above, you can clearly see what each pricing tier offers in terms of capacity, features and scale. Provisioning your API Management instance can take some time (30 minutes or more).

Securing the relay binding with API Management

Our first step is to open the Publisher Portal by clicking Publisher Portal in our API Management service. In the Publisher Portal, we click Add API and specify a Web API name and the Service URL of the relay endpoint we would like to secure. We place our API in the Starter product, an existing default product that limits us to 5 calls/minute up to a maximum of 100 calls/week. For demo purposes, this is sufficient. For more on products, read the Microsoft docs article How to create and publish a product in Azure API Management.

The second step is adding an operation to the API we just defined. This will be a REST type of operation, i.e. a POST on the relay endpoint that is hosted by BizTalk. The endpoint for this operation will be the following: https://enterprisea.servicebus.windows.net/myendpoint/order.

The API and its operation have been defined, and the final step is setting a policy on our operation. Policies in API Management are where a lot of the magic happens, like converting JSON to XML or vice versa, applying CORS, or setting HTTP headers. The latter is what we need in order to set a pre-generated SAS token (using Shared Access Signatures; enter the token in a CDATA section). We could ask the client of our API to set this header, but that would devalue API Management and force the client to provide the SAS token. In that case the client needs to know the Service Bus namespace credentials, which is not what we want. With API Management, we can enforce other authentication strategies or simply let the client use our API and provide only an API key. We will discuss this in the section on testing the endpoint through API Management.

In the policy, we set the Authorization header for accessing our relay endpoint, for which we need a SAS token. The SAS token needs to be generated by us and put into the set-header policy as shown below.

<policies>

<inbound>

<set-header name="Authorization" exists-action="skip">

<value><![CDATA[SharedAccessSignature sr=https%3a%2f%2fenterprisea.servicebus.windows.net&sig=E26706kmlcrFlkdP%2bgcFqalfKRAxzO2Ht%2flz8BhuGaqQ%3d&se=1484788588&skn=RootManageSharedAccessKey]]></value>

<!-- for multiple headers with the same name add additional value elements -->

</set-header>

<base />

</inbound>

<backend>

<base />

</backend>

<outbound>

<base />

</outbound>

<on-error>

<base />

</on-error>

</policies>

The SAS token is valid for a certain period of days or months, so at some point it needs to be refreshed in the policy. Fortunately, you can use a tool from Sandrino Di Mattia to obtain a token for a certain amount of time, i.e. the Time To Live (TTL; see also Protecting your Azure Event Hub using Azure API Management). We will come back to refreshing the token in the considerations.
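For readers who would rather script the token than use a tool, here is a minimal sketch of how a Service Bus SAS token is commonly built: an HMAC-SHA256 signature over the URL-encoded resource URI and the expiry time, signed with the key of a Shared Access Policy. The function name, its parameters and the `now` argument (included only to make the result reproducible) are assumptions for illustration and are not taken from the tool mentioned above.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def generate_sas_token(resource_uri, key_name, key, ttl_seconds, now=None):
    """Build a Service Bus SAS token that expires ttl_seconds after 'now'."""
    issued_at = int(time.time()) if now is None else int(now)
    expiry = issued_at + int(ttl_seconds)

    # The resource URI is URL-encoded before it is signed and sent.
    encoded_uri = urllib.parse.quote_plus(resource_uri)

    # Sign "<encoded uri>\n<expiry>" with the shared access key.
    string_to_sign = "{}\n{}".format(encoded_uri, expiry).encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest))

    return "SharedAccessSignature sr={}&sig={}&se={}&skn={}".format(
        encoded_uri, signature, expiry, key_name
    )
```

Calling `generate_sas_token("https://enterprisea.servicebus.windows.net", "RootManageSharedAccessKey", "<your-key>", 30 * 24 * 3600)` would return a token valid for 30 days, which can then be pasted into the CDATA section of the policy. The key itself stays on your side and never reaches the API consumer.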

Our API is now ready to be tested. It is already published and available, because we placed our API in the Starter Product, which is published and protected by default. Note that a custom product is not published by default.

Testing the endpoint through API Management

Testing an API can easily be done in the Developer Portal of our API Management instance. In the Azure Portal, we click Developer Portal, then click the API menu item (top bar) and select our API. The API has only one operation, which is Order. We try it out and add a header to indicate the content type of the payload, plus our request body (in the format our BizTalk endpoint is expecting).

When we hit Send we will be sending the following over the wire:

POST https://enterprisea.azure-api.net//Order HTTP/1.1

Host: enterprisea.azure-api.net

Ocp-Apim-Trace: true

Ocp-Apim-Subscription-Key: ••••••••••••••••••••••••••••••••

Content-Type: text/xml

<ns0:Request xmlns:ns0="http://EnterpriseA.Schemas.Orders">

<ID>123</ID>

<Description>My Product Description</Description>

<Quantity>1</Quantity>

<Price>100</Price>

</ns0:Request>

We receive the following response:

Response status

202 Accepted

Response latency

6033 ms

Response content

Transfer-Encoding: chunked

Strict-Transport-Security: max-age=31536000

Ocp-Apim-Trace-Location: https://apimgmtstigjqylbzcvt5mdi.blob.core.windows.net/apiinspectorcontainer/LghnyTlQR3qEhFUmXwPlsQ2-24?sv=2015-07-08&sr=b&sig=ewuBua9VTrXtwrrOmMeCdm5uA54uBtqB9FO2CjSVOl8%3D&se=2017-01-19T17%3A21%3A14Z&sp=r&traceId=1d50bac0dc28414a8cc1d46281e03e41

Date: Wed, 18 Jan 2017 17:21:20 GMT

Content-Type: application/xml; charset=utf-8

<ns0:Response xmlns:ns0="http://EnterpriseA.Schemas.Orders">

<ID>123</ID>

<Status>Ok</Status>

<Error>

<Code>0</Code>

<Description>No Error</Description>

</Error>

</ns0:Response>

We can also click on Trace to investigate deeper what happens behind the scenes i.e. beyond the API endpoint https://enterprisea.azure-api.net//Order. When we hit inbound we can inspect the call to the relay endpoint.

{ "configuration":

{ "api":

{ "from": "/",

"to":

{ "scheme": "https",

"host": "enterprisea.servicebus.windows.net",

"port": 443,

"path": "/myendpoint",

"queryString": "",

"query": {},

"isDefaultPort": true } },

"operation":

{ "method": "POST", "uriTemplate": "/Order" },

"user": { "id": "1",

"groups": [ "Administrators", "Developers" ] },

"product": { "id": "587f536c0c2a0e0060060001"

}

}

}

The trace shows some interesting details: the host, i.e. the endpoint of our relay, the port 443 (HTTPS), the path (/myendpoint) and the uriTemplate (/Order).

We can drill down further by clicking Backend in Trace and observing the details there. We see the complete URL (address) to which the payload will be posted.

{

"message": "Request is being forwarded to the backend service.",

"request":

{ "method": "POST",

"url": "https://enterprisea.servicebus.windows.net/myendpoint/Order",

"headers":

[ { "name": "Ocp-Apim-Subscription-Key",

"value": "fd8f172f22bf405283f7796ed90c8eb1" },

{ "name": "Content-Length",

"value": "184" },

{ "name": "Content-Type",

"value": "text/xml" },

{ "name": "Authorization",

"value": "SharedAccessSignature sr=https%3a%2f%2fenterprisea.servicebus.windows.net&sig=E26806kmlcrFydP%2bgcFqalfKRAxzO2Ht%2flz8BhuGaqQ%3d&se=1484788588&skn=RootManageSharedAccessKey" }

,

{ "name": "X-Forwarded-For",

"value": "13.94.139.231"

}

]

}

}

We can also observe the API key, i.e. Ocp-Apim-Subscription-Key, the Content-Type, the header with the SAS token, and an IP address, which is the public virtual IP (VIP) address of our APIM instance where the request was initiated.

Note that if other developers want to test it, they will be required to subscribe to the Product and use a subscription key to access the APIs included in it. We can access it, since we created the Azure API Management and therefore we are administrator.
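A developer who has a subscription key could make the same call from a script instead of the Developer Portal. The sketch below only prepares the request, so nothing is sent until you call `urlopen` yourself. The helper name `build_order_request`, the way the URL is composed and the placeholder key are assumptions for illustration; the headers and payload mirror the request shown earlier.

```python
import urllib.request


def build_order_request(api_base_url, subscription_key, order_xml):
    """Prepare (but do not send) a POST to the Order operation of the API."""
    return urllib.request.Request(
        url=api_base_url.rstrip("/") + "/Order",
        data=order_xml.encode("utf-8"),
        method="POST",
        headers={
            # The API key is the only credential the client needs to know;
            # the SAS token is added by the API Management policy.
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "text/xml",
        },
    )


ORDER_XML = (
    '<ns0:Request xmlns:ns0="http://EnterpriseA.Schemas.Orders">'
    "<ID>123</ID>"
    "<Description>My Product Description</Description>"
    "<Quantity>1</Quantity>"
    "<Price>100</Price>"
    "</ns0:Request>"
)

# Placeholder key: use the key from your own product subscription.
request = build_order_request(
    "https://enterprisea.azure-api.net", "<your-subscription-key>", ORDER_XML
)
# To actually send it: urllib.request.urlopen(request, timeout=30)
```

Keeping the request construction separate from the send makes it easy to inspect the headers first and confirm that no Service Bus credentials are involved on the client side.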

Considerations

BizTalk endpoints configured with the relay extend their reach to Azure and as such provide a new means of access to the outside world. Through the relay, two-way direct messaging can be facilitated with external parties. To further secure the endpoints without sharing the Service Bus credentials, we used API Management, which brings in a few aspects you have to bear in mind.

The first aspect is the availability of the BizTalk endpoints. Their reach can be extended as shown in this blog post, but they depend on the host instance under which the receive location runs. If the host instance is stopped, the endpoint becomes unavailable. You can mitigate this risk by having two instances of the BizTalk host and a Receive Location with the same address, i.e. endpoint, registered in the Azure Service Bus. Then there is the availability of the Service Bus and API Management: both are managed by Microsoft and fall under an SLA.

The second aspect is the quality of service, which has moved from the BizTalk side to API Management. This means that you can implement security, rate limiting, throttling and other service quality aspects at the API Management level by configuring policies. This is a huge time saver, since at the BizTalk level you would, for instance, have to build a custom behavior to support OAuth 2.0.

The third aspect I would like to discuss is the contract of the message you send to the API. The actual contract is abstracted away, as only the endpoint is visible as an operation in the API. Therefore, you still need to agree on the contract and format, i.e. XML or JSON, with your consumers. We have shown XML messages here, yet BizTalk supports JSON natively using the appropriate pipeline components.

The final aspect is the TTL of the token. As you have seen in this blog post, you can create a SAS token whose validity depends on how you set it in the tool. You have to keep track of when the token is about to expire and refresh it in time. Moreover, you will need a certain SLA in place to ensure the service endpoint does not become unavailable.
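Keeping an eye on the expiry can be automated, because the expiry time travels inside the token as the `se` field (seconds since the Unix epoch). The following is a small sketch of such a check; the function names and the idea of a refresh margin are assumptions for illustration, not an API Management feature.

```python
import time
import urllib.parse


def sas_token_expiry(token):
    """Return the expiry (se field) of a SAS token as seconds since the Unix epoch."""
    prefix = "SharedAccessSignature "
    if not token.startswith(prefix):
        raise ValueError("not a SharedAccessSignature token")
    fields = urllib.parse.parse_qs(token[len(prefix):])
    return int(fields["se"][0])


def needs_refresh(token, margin_seconds, now=None):
    """True when the token expires within margin_seconds, or has already expired."""
    current = time.time() if now is None else now
    return sas_token_expiry(token) - current <= margin_seconds
```

A scheduled job could run `needs_refresh(token, 7 * 24 * 3600)` against the token stored in the policy and alert the team a week before the endpoint would stop accepting calls.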

Conclusion

This blog post has provided an overview of how to secure BizTalk endpoints exposed through WCF relay bindings; in particular, we chose WCF-BasicHttpRelay. The NetTcp variant would not have been any different, other than on the protocol level. A BizTalk endpoint can be secured by leveraging the relay and API Management, i.e. we can apply some quality of service aspects through API Management to our BizTalk-hosted endpoint. BizTalk can host the actual service, which can be any type of service.

Using API Management, you can abstract the relay endpoint away from the consumers (clients), whitelist IPs, cache responses, monitor health, support OAuth 2.0, and cover many other aspects that are hard to accomplish with BizTalk itself when it comes to exposing endpoints to Azure. The other benefit is that you do not have to burden the infrastructure guys with moving BizTalk to another zone, opening up extra firewall ports, setting up reverse proxies, and so on (see also the talk by Kent Weare, API Management Part 1 – An Introduction to Azure API Management).

Call to action

If you would like to further explore the technology showcased in this blog post, I suggest exploring the following resources:

Author: Steef-Jan Wiggers

Steef-Jan Wiggers has over 15 years’ experience as a technical lead developer, application architect and consultant, specializing in custom applications, enterprise application integration (BizTalk), Web services and Windows Azure. Steef-Jan is very active in the BizTalk community as a blogger, Wiki author/editor, forum moderator, writer and public speaker in the Netherlands and Europe. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 5 years.

by michaelstephensonuk | Feb 13, 2017 | BizTalk Community Blogs via Syndication

One of my favourite things in Azure is the ability to group stuff into resource groups. Maybe it’s a reflection on myself but I hate looking at messy environments that look like a kid has just emptied their toy box over the floor.

With resource groups you can group related stuff together in a kind of sandbox. There are much more powerful features to resource groups, such as the ability to automate their setup and deployment, but even without considering those, just the grouping of stuff on its own is very handy. Recently I was working with some of the support team on knowledge transfer sessions to help them understand our integration platform. As you would expect when a group has limited experience with cloud, there is an element of fear of the unknown. But when I started explaining how the complex platform was grouped into solutions to make it easier to understand, you could see this was a good first step, rather than showing them a million different components whose relationships they wouldn’t be able to understand.

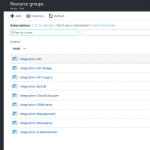

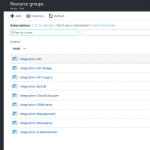

Below is a picture showing some of our resource groups.

In this particular one here is what some of them do:

- Integration-API = This contains app service components which support our API & Microservices architecture

- Integration-API-Bridge = This contains a web job which acts as a bridge between service bus queues and our API

- Integration-API-Legacy = This contains some older API style components which are expected to be deprecated soon

- Integration-BizTalk = This contains some Azure services which support some of our BizTalk applications. Mainly app insights and some storage. Note our BizTalk instance is on-premises in this case

- Integration-CloudDataLayer = We have some shared data which is available and is stored in this resource group, it includes DocDB, Azure Storage and Azure Search

- Integration-CRMEvents = This contains some Web Jobs and Functions which handle events published from Dynamics CRM Online to Azure Service Bus

- Integration-Management = This contains some tools and widgets to help us monitor and manage the platform

- Integration-Messaging = This contains service bus namespaces for relay and brokered messaging

- Integration-ScheduledJobs = This contains Azure Scheduler to help us have a single place for triggering time based integration scenarios

As we use new Azure features it helps to group these into new or existing resource groups so we have a clear way of logically partitioning the integration platform and I think this helps from the maintenance perspective and keeps your total cost of ownership down.

Wonder how other people are grouping their stuff in Azure for integration?

by michaelstephensonuk | Feb 13, 2017 | BizTalk Community Blogs via Syndication

While chatting to Jeff Hollan on Integration Monday this evening, I mentioned some thinking I had been doing around how to articulate the various products within the Microsoft Integration Platform in terms of their codeless vs Serverless nature.

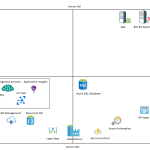

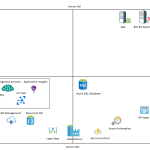

Below is a rough diagram with me brain dumping a rough idea of where each product would be in terms of these two axes.

(Note: This is just my rough approximation of where things could be positioned)

If you look at the definition of Serverless then there are some obvious things like Functions which are very Serverless, but the characteristics of what makes something Serverless or not are a little grey in some areas, so I would argue that it’s possible for one service to be more Serverless than another. Let’s take Azure App Service Web Jobs and Azure Functions. These two services are very similar: they can both be event driven and neither has an actual physical server that you touch, but they have some differences. The Web Job is paid for in increments based on the hosting plan size and number of instances, whereas the Function is paid for per execution. Based on this I think it’s fair to say that a lot of the PaaS services on Azure could fall into a catchment of having Serverless characteristics.

When you consider the codeless elements of the services I have tried to consider both code and configuration elements. It is really a case of how much code I need to write and how complex it is that differentiates one service from another. If we take web apps, either on Azure App Service or IIS, you get a codebase and a lot of code to write to create your app. Compare this with Functions, where you tend to be writing a specific piece of code for a specific job and there are unlikely to be loads of NuGet packages and code not aimed at the function’s specific purpose.

Interesting Comparisons

If you look at the positioning of some things there are some interesting comparisons. Let’s take BizTalk and Logic Apps. I would argue that a typical BizTalk app takes a lot more developer code to create than a Logic App does. I don’t think you could really disagree with that. BizTalk Server also clearly has one of the more complex server dependencies, whereas Logic Apps is very Serverless.

If you compare Flow against Logic Apps you can see they are similar in terms of Serverless(ness). I would argue that for Logic Apps the code and configuration knowledge required is higher and this is reinforced by the target user for each product.

What does this mean?

In reality the position of a product in terms of Serverless or codeless doesn’t really mean that much. It doesn’t necessarily mean one is better than the other, but it can mean that in certain conditions a product with certain characteristics will have benefits. For example, if you are working with a customer who wants to very specifically lock down their SFTP site to 1 or 2 IP addresses accessing it, then you might find that a server-based approach such as BizTalk handles this quite easily, whereas Logic Apps might not support that requirement.

The spectrum also doesn’t consider other factors such as complexity, cost, time to value, scale, etc. When making decisions on product selection don’t forget to include these things too.

How can I use this information?

OK, with the above said, this doesn’t mean that you can’t draw some useful things from considering the Microsoft Integration Stack in terms of Codeless vs Serverless. The main one I would look at is thinking about the people in my organisation and the aims of my organisation and how to make technology choices which are aligned to that. As ever, good architecture decision making is a key factor in a successful integration platform.

Here are some examples:

- If I am working with a company who wants to remove their on premise data centre foot print then I can still use server technologies in IaaS but that company will potentially be more in favour of Serverless and PaaS type solutions as it allows them to reduce the operational overhead of their solutions.

- If I am working with a company who has a lot of developers and wants to share the workload among a wider team and minimize niche skill sets then I would tend to look to solutions such as Functions, Web Jobs, Web Apps, Service Bus. The democratization of integration approach lets your wider team be involved in large portions of your integration developments

- If my customer wants to take that further and empower the Citizen Integrator then Flow and Power Apps are good choices. There is no Server-full equivalent to these in the Microsoft stack

The few things you can certainly draw from the Microsoft stack are:

- There are lots of choices which should suit organisations regardless of their attitude/preferences and reinforces the strength and breadth of the Microsoft Integration Platform through its core services and relationships to other Azure Services.

- Azure has a very strong PaaS platform covering codeless and coding solutions and this means there are lots of choices with Serverless characteristics which will reduce your operational overheads

Would be interesting to hear what others think.

by Lex Hegt | Feb 13, 2017 | BizTalk Community Blogs via Syndication

This is an article in a series of 2 about the BizTalk Deployment Framework (BTDF). This open source framework is often used to create installation packages for BizTalk Applications. More information about this software can be found on CodePlex. With this series of articles, we aim to provide a useful set of hands-on documentation about the framework.

The series consists of the following parts:

- Part 1 – Introduction and basic setup (this article)

  - Introduction

  - BizTalk out-of-the-box capabilities

  - Top reasons to use BTDF

  - Setting up a BTDF-project

  - Conclusion

- Part 2 – Advanced setup

Introduction

In this first part, we will briefly discuss the shortcomings of the deployment packages which can be created with the out-of-the-box capabilities of BizTalk Server and introduce you to the benefits of BTDF. Furthermore, this article will show you how to create a BTDF-project for the deployment of basic BizTalk artifacts like Schemas, Maps and Orchestrations and how to deploy variable settings for other DTAP environments. We will end this article by showing you how to generate distributable MSI packages.

BizTalk out-of-the-box capabilities

Microsoft BizTalk Server comes with capabilities to create deployment packages. However, the MSI packages you create with the out-of-the-box features of BizTalk Server have some shortcomings which lead to deployments that are time consuming and error-prone. The main reasons for this are:

- The packages can only contain URIs of Endpoints (Receive Locations and Send Ports) of a single DTAP environment. So DTAP promotion from the same package is not possible.

- Only deployment of BizTalk artifacts is possible. So if you need, for example, to create and populate database tables, this cannot be automated.

Top reasons to use BTDF

As you might imagine, BTDF eliminates these shortcomings, which is why the framework has been widely adopted. Below you’ll find a list of reasons why you could use this framework:

- Deploy complex BizTalk solutions containing BizTalk artifacts but also for example SSO settings, ESB itineraries and SQL Server scripts, with no human intervention

- Consolidate all of your environment-specific configuration and runtime settings into one, easy-to-use Excel spreadsheet

- Maintain a single binding file that works for all deployment environments

- Make automated deployment a native part of the BizTalk development cycle, then use the same script to deploy to your servers

Setting up a BTDF-project

After you have downloaded and installed BTDF on your Development box, you can start with setting up your BTDF-project. To get that working, you need to take the following steps:

- Add a BTDF project to your BizTalk solution

- Add BizTalk artifacts to the BTDF project

- Deploy from Visual Studio

- Generate a master PortBinding file

- Apply Environment Specific Settings

- Generate a MSI

Add a BTDF project to your BizTalk solution

Firstly, you need to open a BizTalk solution in Visual Studio and perform the following steps:

- Right-click the Solution Explorer and select Add/New Project…

- In the dialog that opens, in the left hand pane, navigate to BizTalk Projects

- In the right pane, select Deployment Framework for BizTalk Project, give the project the name Deployment and click the OK button to continue the creation of the project.

In the screen that follows you can configure which artifacts you want to deploy. Perform the following:

- Double-click on the text True after Deploy Settings into SSO. It will be set to False

- Uncheck the check mark ‘Write properties to the project file only when…’

- Click the Create Project button

After the project has been created, you’ll get a small dialog box, which states that you need to edit the file Deployment.btdfproj to configure your specific deployment requirements. And that’s exactly what we are going to do in a couple of minutes! For now, just click the OK button.

Although the aforementioned project file is shown in the left part of the screen, the Solution Explorer does not yet show the newly created Deployment project. To have the project show up there, you need to manually add the project file and some other files to the solution in a Solution folder.

Perform the following actions to add the Deployment project to the solution:

- Right-click on the Solution Explorer, select Add/New Solution Folder, enter Deployment and hit the Enter key

- Right-click on the Deployment folder, select Add/Existing Item… and in the dialog box that appears double-click the folder Deployment

- Select all the files and click on the Add button

The Deployment project is now added to your BizTalk solution and that project in the Solution Explorer looks like below.

Note: It is important to name that solution folder Deployment, otherwise Visual Studio won’t be able to find the Deployment.btdfproj file.

Add BizTalk artifacts to the BTDF project

Now that we have the Deployment project in place, we are going to add some basic BizTalk artifacts to it. To start, we’ll add the following types of artifacts:

- Schemas

- Maps

- Orchestrations

To keep the Deployment project file nicely organized, we will create an ItemGroup for each type of artifact. As we start with 3 types of artifacts, we’ll end up having 3 ItemGroups. ItemGroups are used to organize the artifacts you want to deploy. An ItemGroup is an MSBuild element, and MSBuild is what BTDF uses under the hood. Besides ItemGroups, you will also find PropertyGroups within the BTDF project file. PropertyGroups are used to organize all kinds of parameters which can be used during deployment. Think, for example, of the name of the project, but also of which kinds of artifacts need to be deployed.

The project file already contains an ItemGroup for deploying schemas. We will use this as a template for our own purposes.

Follow the instructions below to add schemas, maps and orchestrations to the deployment project.

- In the Deployment project file, search for the ItemGroup that is used for deploying Schemas. It contains the text ‘<Schemas Include="YourSchemas.dll">’.

- Replace ‘YourSchemas.dll’ with the name of the DLL which contains your schemas

- The line with the ‘<LocationPath>’ tag must contain the path where the DLL resides

The ItemGroup will now look as follows:

<ItemGroup>

<Schemas Include="Kovai.BTDF.Schemas.dll">

<LocationPath>..\$(ProjectName).Schemas\bin\$(Configuration)</LocationPath>

</Schemas>

</ItemGroup>

Next we’ll prepare deployment of the BizTalk Maps.

- Below the ItemGroup to deploy Schemas, create a new ItemGroup to deploy Maps. To do so, you can copy/paste the XML below:

<ItemGroup>

<!-- Maps are deployed through the Transforms element; the assembly name is assumed from the naming of the other projects -->

<Transforms Include="Kovai.BTDF.Maps.dll">

<LocationPath>..\$(ProjectName).Maps\bin\$(Configuration)</LocationPath>

</Transforms>

</ItemGroup>

Now we are going to prepare deployment of the BizTalk Orchestrations.

- Below the ItemGroup to deploy Maps, create a new ItemGroup to deploy Orchestrations. To do so, you can copy/paste this XML:

<ItemGroup>

<Orchestrations Include="Kovai.BTDF.Orchestrations.dll">

<LocationPath>..\$(ProjectName).Orchestrations\bin\$(Configuration)</LocationPath>

</Orchestrations>

</ItemGroup>

If you have multiple projects containing, for example, orchestrations, you can simply copy/paste the <Orchestrations>…</Orchestrations> block and provide it with the correct Include-parameter and location path. Of course, this works the same way for other types of artifacts like schemas, maps, pipelines etc.
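Once the project file contains several ItemGroups, a quick overview of what will be deployed can help to catch a wrong assembly name or path before deploying. The sketch below is not part of BTDF; it is a hypothetical helper that reads the XML of a Deployment.btdfproj file and lists every ItemGroup entry that has an Include attribute, together with its LocationPath. It assumes the file is plain MSBuild-style XML, with or without an XML namespace.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an XML namespace, e.g. '{http://...}Schemas' becomes 'Schemas'."""
    return tag.rsplit("}", 1)[-1]


def list_artifacts(btdfproj_xml):
    """Return (artifact type, assembly, location path) for each ItemGroup entry."""
    root = ET.fromstring(btdfproj_xml)
    artifacts = []
    for element in root.iter():
        if local_name(element.tag) != "ItemGroup":
            continue
        for item in element:
            include = item.get("Include")
            if include is None:
                continue  # not an artifact entry
            location = ""
            for child in item:
                if local_name(child.tag) == "LocationPath":
                    location = (child.text or "").strip()
            artifacts.append((local_name(item.tag), include.strip(), location))
    return artifacts
```

Reading the file with `open("Deployment.btdfproj", encoding="utf-8").read()` and printing the result of `list_artifacts` gives one line per assembly, which is easy to compare against the projects in the solution.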

Deploy from Visual Studio

We now have deployment for some basic artifacts in place, so let’s deploy the BizTalk solution and see what happens:

- Right-click the solution (in the solution Explorer) and select Build Solution

- Once the solution builds without errors, you can deploy it by clicking the little green arrow in the upper left area of Visual Studio:

If everything runs as expected, the Kovai.BTDF application is created and the schemas, the map and the orchestration are deployed properly, but the deployment ends with the following error:

Could not enlist orchestration ‘Kovai.BTDF.Orchestrations.BizTalk_Orchestration1,Kovai.BTDF.Orchestrations, Version=1.0.0.0, Culture=neutral, PublicKeyToken=72ff5decf00d3ffb’. Could not enlist orchestration ‘Kovai.BTDF.Orchestrations.BizTalk_Orchestration1’. All orchestration ports must be bound and the host must be set.

This is because the orchestration BizTalk_Orchestration1 contains ports which still have to be configured and bound in the BizTalk Administration Console. Let’s do that and afterwards export the Binding file into your Deployment project.

- Open the BizTalk Server Administration Console and navigate to the BizTalk application called Kovai.BTDF

- Right-click the application and click Configure. The following screen appears:

- Select a Host in the designated dropdown box

- Create a Receive Port and a Receive Location (use the FILE adapter) and bind it to rcvPort

- Create a Send Port (use the FILE adapter) and bind it to sndPort

- Click the OK button

- Right-click the application again and click .. and in the dialog box that appears, click Start again. The application should now be started properly

Generate a master PortBinding file

We now have a running BizTalk application which includes bindings between the deployed orchestration and a couple of ports. In the next step we will make these bindings available to our deployment project. To do so, we need to export the bindings.

- Again, right-click the application Kovai.BTDF and now select Export/Bindings…

- Click on the ellipsis button (…), navigate to your Deployment project, select xml, click the OK button and confirm that you want to overwrite the existing file

- Switch to Visual Studio and notice that you are prompted with the following message:

- Click Yes to reload the PortBindingsMaster.xml file

- Open PortBindingsMaster.xml and notice that it contains the bindings you created earlier
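If you want to check quickly which ports and addresses ended up in the exported file, a few lines of script can list them. The snippet below is only a minimal sketch in Python: the sample XML is a heavily trimmed, hypothetical stand-in for a real export (the names ReceivePort1 and ReceiveLocation1 and the In folder are assumptions), and the helper list_endpoints is not part of BTDF or BizTalk.

```python
import xml.etree.ElementTree as ET

# Trimmed, hypothetical stand-in for an exported binding file.
# A real export contains many more elements and attributes.
SAMPLE_BINDINGS = r"""<BindingInfo>
  <SendPortCollection>
    <SendPort Name="SendPort2" IsStatic="true" IsTwoWay="false" BindingOption="1">
      <PrimaryTransport>
        <Address>C:\BizTalk\Kovai.BTDF\Out\%MessageID%.xml</Address>
      </PrimaryTransport>
    </SendPort>
  </SendPortCollection>
  <ReceivePortCollection>
    <ReceivePort Name="ReceivePort1" IsTwoWay="false" BindingOption="1">
      <ReceiveLocations>
        <ReceiveLocation Name="ReceiveLocation1">
          <Address>C:\BizTalk\Kovai.BTDF\In\*.xml</Address>
        </ReceiveLocation>
      </ReceiveLocations>
    </ReceivePort>
  </ReceivePortCollection>
</BindingInfo>"""


def list_endpoints(binding_xml: str) -> dict:
    """Return send port and receive location addresses, keyed by name."""
    root = ET.fromstring(binding_xml)
    send_ports = {
        port.get("Name"): port.findtext("PrimaryTransport/Address")
        for port in root.iter("SendPort")
    }
    receive_locations = {
        location.get("Name"): location.findtext("Address")
        for location in root.iter("ReceiveLocation")
    }
    return {"send_ports": send_ports, "receive_locations": receive_locations}


if __name__ == "__main__":
    for kind, endpoints in list_endpoints(SAMPLE_BINDINGS).items():
        for name, address in endpoints.items():
            print(f"{kind}: {name} -> {address}")
```

To run it against your own export, read PortBindingsMaster.xml from the Deployment project and pass its contents to list_endpoints instead of the sample string.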

Apply Environment Specific Settings

If you only deploy locally, you now have the basics in place. However, in most scenarios you will have to deploy your BizTalk solution(s) to multiple DTAP environments. In that case, the addresses of your Receive Locations and Send Ports will differ between environments. BTDF facilitates this by providing an XML file which can be edited in Microsoft Excel.

Maintaining DTAP environments

That XML file, named SettingsFileGenerator.xml, can contain a column for each DTAP environment to which you have to deploy your BizTalk solution. Below you find a screenshot of what such a file looks like by default.

As you can see, there are columns for multiple environments: Local Development, Shared Development, Test and Production. You are free to rename the existing columns, or to add or remove columns.

Maintaining environment specific settings

In the spreadsheet there are currently two settings defined: SsoAppUserGroup and SsoAppAdminGroup. You can add more settings in the first column and give them values for the different environments in the following columns. If the value is the same for two or more environments, you can enter it in the column Default Values, as this value will be used whenever no value is entered for an environment.

The screenshot below shows a Settings file that now contains values for the addresses of the Receive Location and the Send Port. As you can see, there are Default values entered and the columns for Local Development and Shared Development are left empty, so while deploying to those environments, the Default values will be used.
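The fallback rule can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not BTDF's own code: the dictionary stands in for the spreadsheet, the server names and paths are hypothetical, and resolve is a made-up helper.

```python
from typing import Dict

# Hypothetical stand-in for one row of SettingsFileGenerator.xml.
# Column names follow the article; all values are assumptions.
SETTINGS: Dict[str, Dict[str, str]] = {
    "SP_Address": {
        "Default Values": "C:\\BizTalk\\Kovai.BTDF\\Out\\",
        "Local Development": "",   # left empty, so the default applies
        "Shared Development": "",  # left empty, so the default applies
        "Test": "\\\\testserver\\Kovai.BTDF\\Out\\",
        "Production": "\\\\prodserver\\Kovai.BTDF\\Out\\",
    },
}


def resolve(settings: Dict[str, Dict[str, str]], environment: str) -> Dict[str, str]:
    """Pick the environment value per setting, falling back to the default."""
    resolved = {}
    for name, columns in settings.items():
        # An empty or missing cell means: use the Default Values column.
        resolved[name] = columns.get(environment) or columns.get("Default Values", "")
    return resolved


if __name__ == "__main__":
    for env in ("Local Development", "Test"):
        print(env, "->", resolve(SETTINGS, env)["SP_Address"])
```

Keeping the shared value in a single default column means that a new environment only needs entries for the settings that actually differ.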

To use a variable from the settings file, for example SP_Address, in the PortBindingsMaster, open the PortBindingsMaster.xml file, navigate to the Send Port which should use the address and replace the current address with ${SP_Address}.

Before the change, that part of the PortBindingsMaster.xml file looks like this:

<SendPort Name="SendPort2" IsStatic="true" IsTwoWay="false" BindingOption="1">

...

<PrimaryTransport>

<Address>C:\BizTalk\Kovai.BTDF\Out\%MessageID%.xml</Address>

...

</SendPort>

After the change, the XML looks like this:

<SendPort Name="SendPort2" IsStatic="true" IsTwoWay="false" BindingOption="1">

...

<PrimaryTransport>

<Address>${SP_Address}%MessageID%.xml</Address>

...

</SendPort>
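BTDF performs this substitution itself at deployment time. Purely to illustrate what happens to a ${...} token, here is a minimal Python sketch; the function substitute and the example folder are assumptions for illustration, not the mechanism BTDF uses internally.

```python
import re

# Matches tokens of the form ${Name}.
TOKEN = re.compile(r"\$\{(\w+)\}")


def substitute(template: str, values: dict) -> str:
    """Replace every ${Name} token with its value; fail on unknown names."""

    def replace(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value for setting {name!r}")
        # A function replacement is inserted literally, so the
        # backslashes in Windows paths are left untouched.
        return values[name]

    return TOKEN.sub(replace, template)


if __name__ == "__main__":
    line = "<Address>${SP_Address}%MessageID%.xml</Address>"
    # Hypothetical value for the Local Development environment.
    print(substitute(line, {"SP_Address": "C:\\BizTalk\\Kovai.BTDF\\Out\\"}))
```

Note that the value ends with a backslash, because the file name macro %MessageID%.xml follows the token directly. Raising an error for an unknown name is a deliberate choice in this sketch, so that a typo in a token does not end up silently in a binding.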

Generate an MSI

So far, we have only deployed through Visual Studio. When we have to deploy our BizTalk solutions to non-development servers, we do that by installing MSIs, and BTDF is able to generate these as well. Besides the BizTalk artifacts which need to be deployed, these MSIs can also contain custom components, binding files for your entire DTAP environment and all kinds of custom scripts, which can be started during deployment.

Generating an MSI is done from Visual Studio. Choose one of the options below:

- Click the button below in the toolbar

- In the Visual Studio menu, select Tools/Deployment Framework for BizTalk/Build Server Deploy MSI

Both options will provide you with an MSI which you can use to deploy the BizTalk application throughout your DTAP environment.

Conclusion

In this first article we have seen how the BizTalk Deployment Framework differs from the out-of-the-box capabilities of BizTalk Server and described the benefits of BTDF. We’ve also set up a simple deployment project in an existing BizTalk solution and shown how you can add some basic BizTalk artifacts to that project. Furthermore, we have seen how to define environment-specific settings, such as Receive Location addresses, and how they are deployed to multiple environments. Finally, we’ve deployed from Visual Studio and generated an MSI based on the BTDF project.

Explore best practices for deploying BizTalk Server 2016 from Sandro Pereira’s Whitepaper.

In the next article, we’ll get familiar with, amongst others, Custom Targets and Conditions, and see how we can run third-party executables to automatically deploy non-standard artifacts like SQL Server scripts or even automatically create BizTalk360 alerts from within your deployment project!

Check out the 2nd article of the series here.

Author: Lex Hegt

Lex Hegt has worked in the IT sector for more than 25 years, mainly in roles as developer and administrator. He has worked with BizTalk since BizTalk Server 2004. Currently he is a Technical Lead at BizTalk360.