by Gautam | Jan 19, 2017 | BizTalk Community Blogs via Syndication

The Microsoft Tech Summit series kicked off today in Chicago with keynotes, technical training sessions and hands-on labs to help attendees build and develop their cloud skills.

There were also deep dive sessions covering a range of topics across Microsoft Azure and the hybrid platform including security, networking, data, storage, identity, mobile, cloud infrastructure, management, DevOps, app platform, productivity, collaboration and more.

The Microsoft Tech Summit offers free, two-day technical training for IT professionals and developers, delivered by the experts who build the cloud services across Microsoft Azure, Office 365, and Windows 10.

Here is what the agenda looks like.

You can also find a city near you and register for the event.

Here’s a list of the currently published Tech Summit events around the globe:

- Amsterdam, March 23 – 24

- Bangalore, March 16 – 17

- Birmingham, March 27 – 28

- Chicago, January 19 – 20

- Copenhagen, March 30 – 31

- Frankfurt, February 9 – 10

- Johannesburg, February 6 – 7

- Milan, March 20 – 21

- Seoul, April 27 – 28

- Singapore, March 13 – 14

- Washington D.C., March 6 – 7


Another free event is coming up for the integration community: the Global Integration Bootcamp.

This event is driven by user groups and communities around the world, backed by Microsoft, for anyone who wants to learn more about Microsoft’s integration story. This full-day boot camp will deep-dive into Microsoft’s integration stack with hands-on sessions and labs, delivered by experts and community leaders.

In this Boot Camp, the main focus will be on:

- BizTalk 2016 – BizTalk Server 2016, what’s new, and using the new Logic Apps adapter

- Logic Apps – Creating Logic Apps using commonly used connectors

- Service Bus – Building reliable and scalable cloud messaging and hybrid integration solutions

- Enterprise Integration Pack – Using the Enterprise Integration Pack (EIP) with Logic Apps

- API Management – How does API Management help you organize your APIs, and how does it increase security?

- On-Premises Gateway – Connecting to on-premises resources using the On-Premises Gateway

- Hybrid Integration – Hybrid integrations using BizTalk Server and Logic Apps

- Microsoft Flow – Learn to compose flows with Microsoft Flow

If you are interested in taking part or in hosting the event at your location, you can reach out to the organizers with your details.

Organizers

by Gautam | Jan 19, 2017 | BizTalk Community Blogs via Syndication

This week, during a discovery session with one of our new customers and members of the Azure Black Belt team, we learned about a new storage type called Archive Storage, which is still in development.

It is also mentioned in the Microsoft Cloud Platform roadmap documentation which provides a snapshot of what Microsoft is working on in their Cloud Platform business. You can use the document to find out which cloud services are

- recently made generally available

- released into public preview

- still in development and testing

- no longer being developed

According to the documentation:

Azure Archive Storage is very low-cost cloud storage for data that is archived and very rarely accessed, with retrieval times measured in hours. It can be useful for archive data such as medical reports, compliance documents and Exchange emails, which are rarely accessed but need to be stored for many years.

Currently, Azure Storage offers two storage tiers for Blob storage: Hot and Cool.

Azure hot storage tier – is optimized for storing data that is accessed frequently.

Azure cool storage tier – is optimized for storing data that is infrequently accessed and long-lived.

Azure Archive Storage is different from Hot and Cool because it is, in a way, offline data storage. That is why, when Archive Storage data needs to be accessed, it first has to be brought back online, with retrieval times measured in hours. It will also be cheaper than Cool storage, at almost half the price.

With Archive Storage there will be an option to apply a policy that moves data from the Hot or Cool tier to Archive. For example, you can have an automated, policy-based process that moves any data that is one year old to Archive Storage.
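The age-based policy described above boils down to a simple rule. The sketch below is a hypothetical illustration only: the `BlobTier` enum, the `ArchivePolicy.TargetTier` method and the 365-day threshold are my own assumptions, not an Azure API (Archive Storage was still in development at the time of writing).

```csharp
using System;

// Hypothetical tiers mirroring Hot, Cool and the upcoming Archive option (not an Azure SDK type).
public enum BlobTier { Hot, Cool, Archive }

public static class ArchivePolicy
{
    // Assumed threshold: data that is one year old or older moves to Archive.
    public static readonly TimeSpan ArchiveAfter = TimeSpan.FromDays(365);

    // Returns the tier a blob should be in, based on when it was last modified.
    // Blobs younger than the threshold keep their current Hot or Cool tier.
    public static BlobTier TargetTier(BlobTier current, DateTime lastModifiedUtc, DateTime nowUtc)
    {
        if (current == BlobTier.Archive)
            return BlobTier.Archive; // already archived, nothing to move
        return nowUtc - lastModifiedUtc >= ArchiveAfter ? BlobTier.Archive : current;
    }
}
```

A real policy would run this check on a schedule over all blobs in a container; the point here is only that the decision depends on the blob's age and nothing else.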

I hope the Archive Storage tier will give enterprise customers an option to store archival data in Azure in the most cost-effective way.

by Sandro Pereira | Jan 17, 2017 | BizTalk Community Blogs via Syndication

While researching my last post on the BizTalk360 blog, Thinking outside the box (or not): How to create “Global C# function” to be reused inside a map?, I encountered several errors while playing around with maps to find an approach that would let me create the concept of a global function. Some of these errors were:

“Inline Script Error: must declare a body because it is not marked abstract, extern, or partial”

“Inline Script Error: ; expected”

or

“Inline Script Error: Type ‘BizTalkMapper.FunctoidInlineScripts’ already defines a member called ‘FunctionName’ with the same parameter types”

Causes

The cause of this problem is that you do not correctly declare the body of the Inline C# Function.

Or, if you are trying to reuse an existing inline C# function, you are not doing it properly.

To reuse Inline C# Functions these are the rules that you need to follow:

- If all of the Scripting Functoids are in the same grid page: in the first Scripting Functoid, linked from the source to the destination, we have to specify the function body, and in the following functoids we only need the function declaration (no body).

- If the Scripting Functoids are in different grid pages: the Scripting Functoid that specifies the function body needs to be on the leftmost grid page, and the remaining Scripting Functoids (with only the function declaration) on the other grid pages to the right. In other words, counting the grid pages from left to right, if the Scripting Functoid that specifies the function body is on the second grid page, the remaining functoids with only the declaration cannot be placed on the first grid page; they can only be placed on the second grid page or later.

Solution

To solve this issue you have two options: follow the rules described above (BizTalk Mapper tips and tricks: How to reuse Scripting Functoids with Inline C# inside the same map), or implement the concept of a global C# function described in my post Thinking outside the box (or not): How to create “Global C# function” to be reused inside a map? In summary:

- Add a Grid page to your map and rename it to “GlobalFunctions”

- Set this grid as the first grid page of your map (important step)

- Drag-and-Drop a Scripting Functoid to the “GlobalFunctions” grid page and place the C# code

- Do not link any inputs to these Scripting Functoids, and don’t map (link) them to any element in the destination schema.

- Double-click each Scripting Functoid added to the “GlobalFunctions” grid page and set the expected input values as empty constant values (by default, no inputs exist)

- Now you can use these functions in other grid pages using only the function declaration
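As a concrete illustration of the declaration-only rule, assume a hypothetical inline function named `ConcatNames` (my own example, not from the original post). The Scripting Functoid on the “GlobalFunctions” grid page holds the complete function:

```csharp
public string ConcatNames(string firstName, string lastName)
{
    // Full body: declared once, in the functoid on the leftmost "GlobalFunctions" grid page
    return (firstName + " " + lastName).Trim();
}
```

Every other Scripting Functoid that reuses it, on any grid page to the right, contains only the declaration, with no body:

```csharp
public string ConcatNames(string firstName, string lastName);
```

If the declaration-only functoid ends up to the left of the one with the body, or the body is pasted into two functoids, you get the “must declare a body” or “already defines a member” errors listed above.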

Author: Sandro Pereira

Sandro Pereira lives in Portugal and works as a consultant at DevScope. In recent years, he has been implementing integration scenarios, both on-premises and in the cloud, for various clients, each with different scenarios in terms of technical requirements, size, and criticality, using Microsoft Azure, Microsoft BizTalk Server and technologies like AS2, EDI, RosettaNet, SAP, TIBCO, etc. He is a regular blogger, international speaker, and technical reviewer of several BizTalk books, all focused on integration. He is also the author of the book “BizTalk Mapping Patterns & Best Practices”. He has been a Microsoft MVP since 2011 for his contributions to the integration community.

by Gautam | Jan 9, 2017 | BizTalk Community Blogs via Syndication

Microsoft Cognitive Services provides a set of powerful intelligence APIs. These APIs can be integrated into your app, on the platform of your choice, to tap into an ever-growing collection of powerful artificial intelligence algorithms for vision, speech, language, knowledge and search.

Integrating Cognitive Services into an application provides the app with the ability to SEE, RECOGNIZE, HEAR and even understand the SENTIMENT of your text.

In this blog post, I experiment with the Text Analytics API in a Logic App. The API is a suite of text analytics services, built with Azure Machine Learning, that evaluates the sentiment and topics of text to understand what users want.

For Sentiment analysis the API returns a numeric score between 0 and 1. Scores close to 1 indicate positive sentiment and scores close to 0 indicate negative sentiment. For Key phrase extraction the API returns a list of strings denoting the key talking points in the input text.
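The API only returns the number, so any code consuming the score has to decide where "positive" and "negative" begin. The sketch below maps a score to a label; the `SentimentLabel` helper and the cut-off points of 0.4 and 0.6 are my own assumptions, not something defined by the Text Analytics API.

```csharp
using System;

public static class SentimentLabel
{
    // Assumed cut-off points; tune these for your own data.
    private const double NegativeAtOrBelow = 0.4;
    private const double PositiveAtOrAbove = 0.6;

    // Maps a Text Analytics sentiment score (close to 0 = negative, close to 1 = positive) to a label.
    public static string FromScore(double score)
    {
        if (double.IsNaN(score) || score < 0.0 || score > 1.0)
            throw new ArgumentOutOfRangeException(nameof(score), "Score must be between 0 and 1.");
        if (score >= PositiveAtOrAbove) return "positive";
        if (score <= NegativeAtOrBelow) return "negative";
        return "neutral";
    }
}
```

Keeping a neutral band around 0.5 avoids flipping the label on texts the service is unsure about.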

Cognitive Service account for the Text Analytics APIs

To build a Logic App that uses the Text Analytics API, you first need to sign up for the Text Analytics service.

- Log in to the Azure Portal with a valid MSDN subscription and search for Cognitive Services APIs.

- Create a Cognitive Service account by providing the details as shown below.

- Make sure Text Analytics is selected as the ‘API type’ and select the free plan (free tier, 5,000 transactions per month).

- Complete the other fields and create your account.

- After you sign up for Text Analytics, find your API key. Copy the primary key, as you will need it in the Logic App.

Logic App to detect sentiment and extract key phrases

Logic Apps is a cloud-based service that you can use to create workflows that run in the cloud. It provides a way to connect your applications, data and SaaS services using a rich set of connectors. If you are new to Logic Apps, please refer to the Azure documentation for further details.

Now let’s create a Logic App to detect sentiment and extract key phrases from user’s text using the Text Analytic API.

Go to New > Enterprise Integration and select Logic App as shown below.

Create a Logic App by providing the details as shown below.

Once the deployment succeeds, we can start editing our Logic App.

To open it, browse to All Resources > [Name of your Logic App] in the left-hand menu.

Clicking your Logic App opens the Logic Apps Designer. The welcome screen offers many ready-to-use templates; choose a blank template.

In the Logic App designer, a search box lets you look for the available Microsoft-managed connectors and APIs. Select Request from the list; it acts as the trigger for your Logic App and receives incoming requests.

Now we need to define a request body JSON Schema and the designer will generate tokens to parse and pass data from the trigger through the workflow.

We can use a tool like jsonschema.net to generate a JSON schema from a sample body payload.

JSON schema for the above payload looks like below

Now use this JSON schema in the Request trigger body as shown below

The next step is to look for the Cognitive Services API connector in the managed API list.

Select Detect Sentiment, provide a connection name and the Cognitive Services account key that we copied in the previous section, and click Create.

Now provide the Text value for the Detect Sentiment API from the “text” field of the Request trigger, as shown below.

The next step is to add a Cognitive Services connector for Key Phrases, the same way we did for Detect Sentiment.

Now we use the Compose and Response actions to send an HTTP response with the sentiment and key phrase analysis.

This is how I composed the response: a simple new JSON message using the “key phrase” and “score” values from the Key Phrases and Detect Sentiment APIs.

You can also use code view to compose the response message as shown below.

And finally use the output of compose action to send the HTTP response.

Here is what the complete workflow looks like.

Quick and easy! Once you save the workflow, the Request trigger at the top will show the URL for this particular Logic App.

Now let’s invoke this Logic App from one of my favorite API testing tools, Postman.

I submitted the sample JSON message to the endpoint with the following text: “I had a wonderful experience! Azure cognitive services are amazing.”

Sure enough, I got back the key phrases and the sentiment score.
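Outside Postman, the same call can be made from code. The sketch below is a hypothetical C# client (the `SentimentLogicAppClient` class is my own, and it assumes .NET Core 3.0 or later for System.Text.Json): it builds the `{"text": ...}` body that the Request trigger expects and posts it to the trigger URL copied from the designer. It returns the raw JSON response rather than parsing it, because the exact response shape depends on how you set up the Compose action.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

public static class SentimentLogicAppClient
{
    // Builds the request body for the Request trigger: a JSON object with a single "text" property.
    public static string BuildRequestBody(string text) =>
        JsonSerializer.Serialize(new { text });

    // Posts the text to the Logic App's Request trigger URL and returns the raw JSON response.
    // logicAppUrl is the URL shown on the Request trigger after saving the workflow.
    public static async Task<string> AnalyzeAsync(HttpClient client, string logicAppUrl, string text)
    {
        using var content = new StringContent(BuildRequestBody(text), Encoding.UTF8, "application/json");
        using HttpResponseMessage response = await client.PostAsync(logicAppUrl, content);
        response.EnsureSuccessStatusCode(); // surface 4xx/5xx from the Logic App as an exception
        return await response.Content.ReadAsStringAsync();
    }
}
```

Passing the `HttpClient` in, rather than creating one per call, lets the caller reuse a single instance across requests.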

Conclusion

Clearly, Microsoft’s Cognitive Services are easy to use in your app on the platform of your choice. The Text Analytics API is just one of many artificial intelligence APIs provided by Microsoft. I expect this new platform to mature in the coming months and many different types of apps to leverage this technology.

References:

https://docs.microsoft.com/en-us/azure/cognitive-services/cognitive-services-text-analytics-quick-start

https://social.technet.microsoft.com/wiki/contents/articles/36074.logic-apps-with-azure-cognitive-service.aspx

by Mark Brimble | Jan 9, 2017 | BizTalk Community Blogs via Syndication

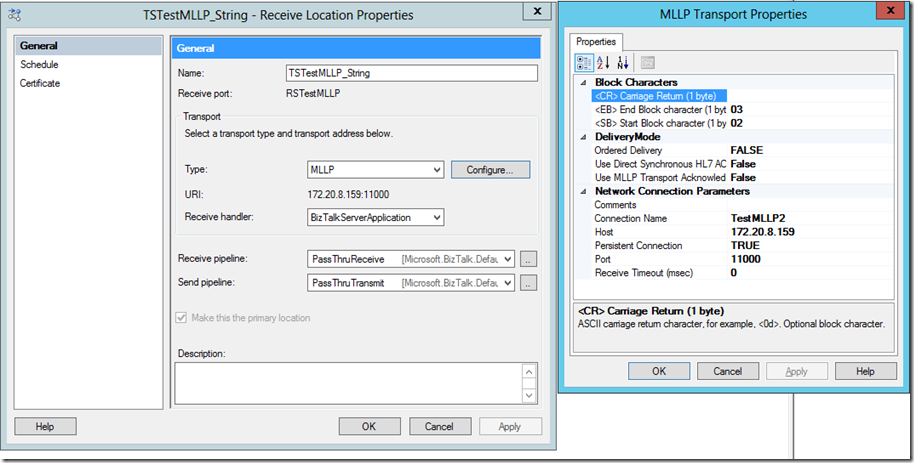

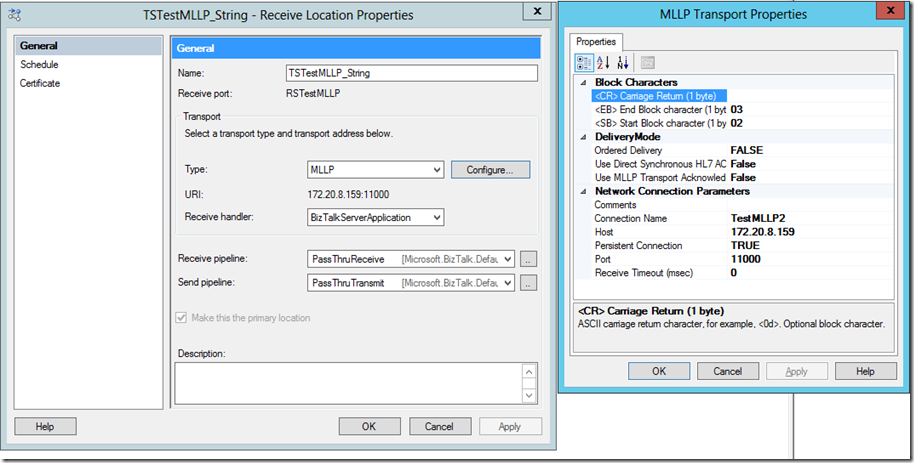

I have been doing a proof of concept where an application connects to BizTalk Server over a TCP/IP stream connection: the application actively connects, and BizTalk Server listens for connections.

I have previously written a custom adapter called the GVCLC Adapter, which actively connects to a vending machine. It was based on the Acme.Wcf.Lob.Socket Adapter example by Michael Stephenson. Another alternative that was considered was the Codeplex TCP/IP adapter. When I met Michael for the first time in Sydney two years ago, I thanked him for his original post, and he asked me why I hadn’t used the BizTalk MLLP adapter instead. In this post I examine whether the MLLP adapter can be used to connect to a socket.

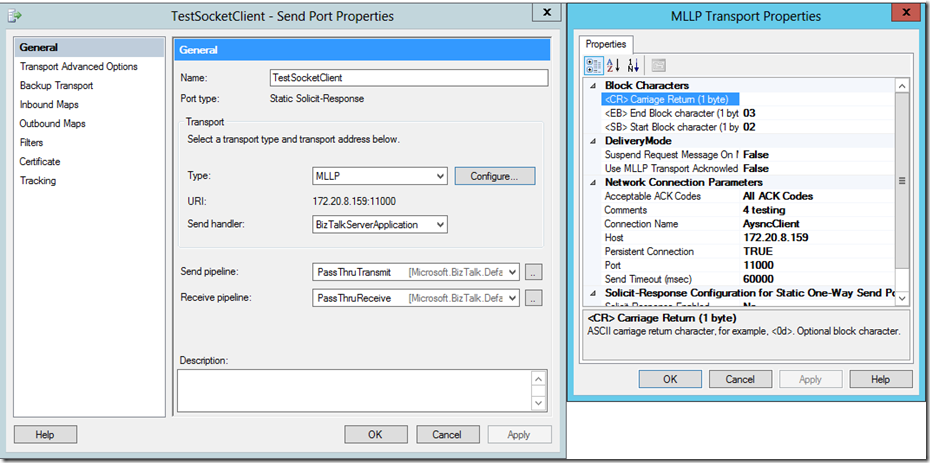

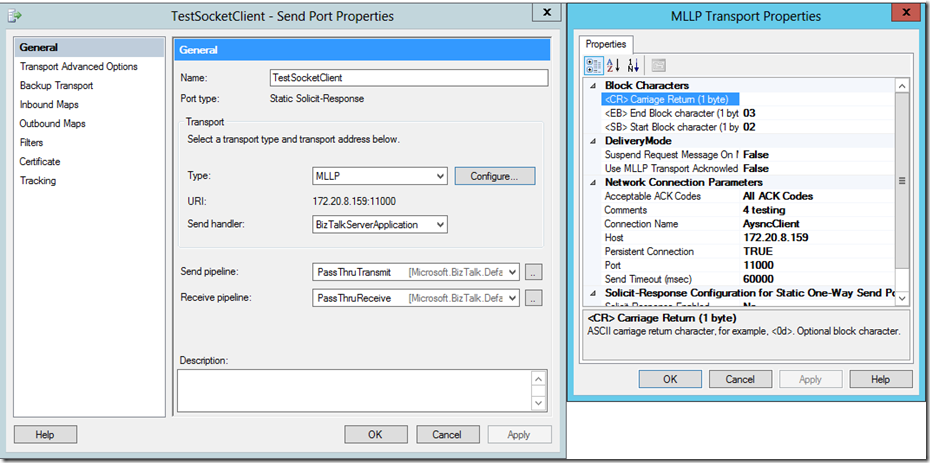

The BizTalk MLLP adapter is part of the BizTalk HL7 Accelerator, which is usually used with healthcare systems. At its core, the MLLP adapter is a socket adapter, as the receive and send port configurations below show. Can it be used for non-healthcare systems?

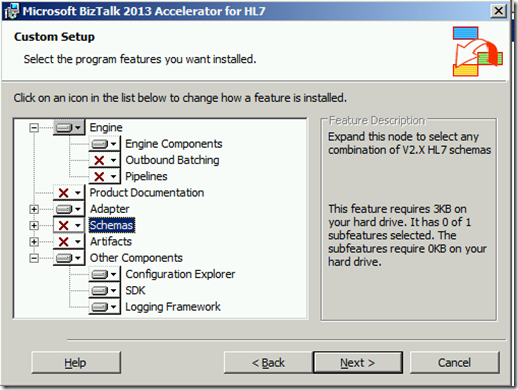

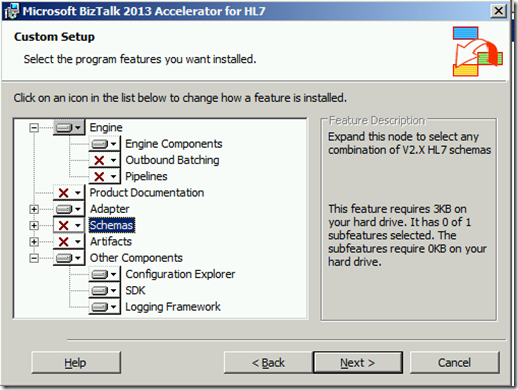

First, I installed the BizTalk 2013 HL7 Accelerator with the minimal options needed to run the MLLP adapter. I ran into issues because it cannot be installed once all the latest Windows Updates have been applied, but there is a workaround.

Once the MLLP adapter had been installed, I set up a receive location as shown above. Note that I am not using any of the HL7 pipelines or the carriage return character, and that I am using custom start and end characters. Next, I created an asynchronous socket test client very similar to the Microsoft example here and modified it to send a heartbeat, adding the following lines in the appropriate places:

```csharp
// Start (STX), end (ETX) and enquiry/heartbeat (ENQ) characters, as hex strings
protected const string STX = "02";
protected const string ETX = "03";
protected const string ENQ = "05";

// ...

// Send test data to the remote device.
// Send(client, "This is a test<EOF>");
data = (char)Convert.ToInt32(STX, 16) + data + (char)Convert.ToInt32(ENQ, 16) + (char)Convert.ToInt32(ETX, 16);
```

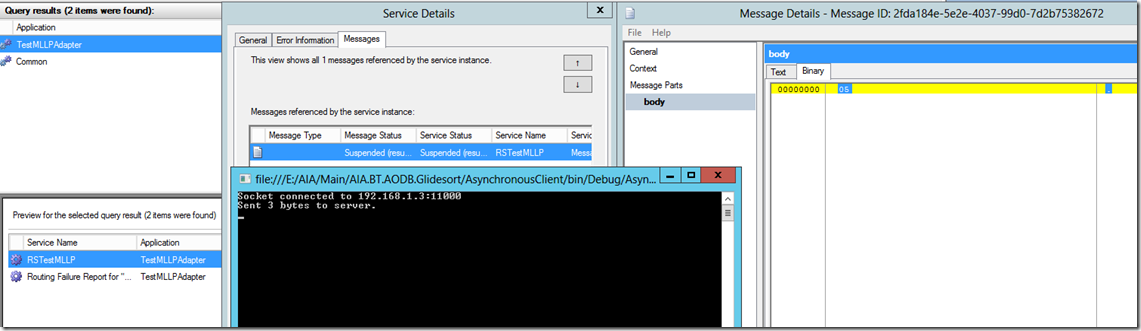

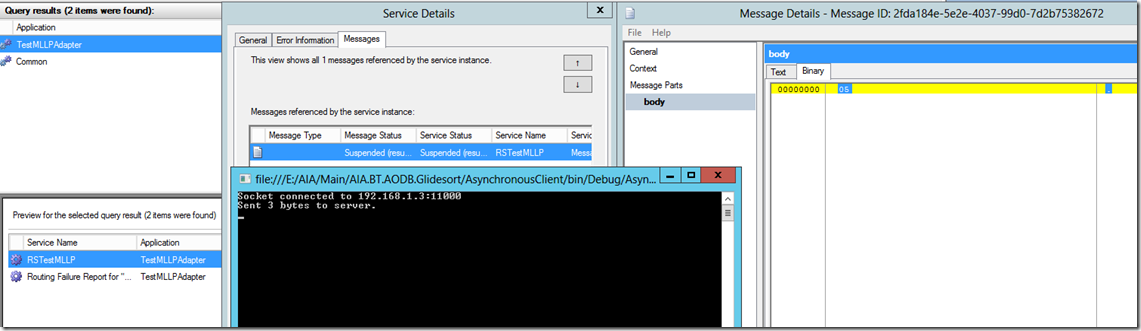

After changing the host to 192.168.1.3, enabling the receive location and starting the socket test client, an ENQ message is received in BizTalk. This proves that the MLLP adapter can accept a message from a socket client even when it is not an HL7 message.
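Because TCP delivers a byte stream rather than discrete messages, whatever listens on the socket has to find the start and end characters to cut out each message; that is the job the MLLP receive location does here. Below is a minimal sketch of that deframing logic, assuming the STX (0x02) and ETX (0x03) characters used in this post. The `FrameReader` class is hypothetical and is not how the MLLP adapter is implemented internally.

```csharp
using System;
using System.Collections.Generic;

public sealed class FrameReader
{
    private readonly byte _start;
    private readonly byte _end;
    private readonly List<byte> _current = new List<byte>();
    private bool _inFrame;

    public FrameReader(byte start = 0x02, byte end = 0x03)
    {
        _start = start;
        _end = end;
    }

    // Feeds the first `count` bytes of a chunk read from the socket and returns every message it completes.
    // Bytes outside a start/end pair are ignored; a partial frame is kept until the next chunk arrives.
    public List<byte[]> Feed(byte[] chunk, int count)
    {
        var messages = new List<byte[]>();
        for (int i = 0; i < count; i++)
        {
            byte b = chunk[i];
            if (b == _start)
            {
                _current.Clear(); // a start character always begins a fresh frame
                _inFrame = true;
            }
            else if (b == _end && _inFrame)
            {
                messages.Add(_current.ToArray());
                _current.Clear();
                _inFrame = false;
            }
            else if (_inFrame)
            {
                _current.Add(b);
            }
        }
        return messages;
    }
}
```

Fed the test client's `<STX><ENQ><ETX>`, even when split across two reads, it yields a single one-byte ENQ message, which matches what arrived in BizTalk.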

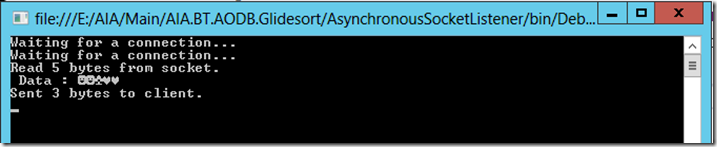

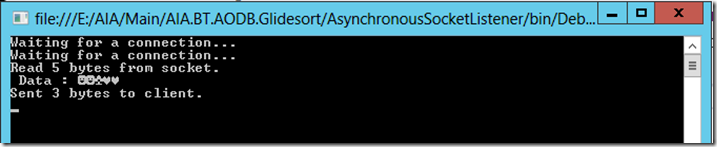

Next, I set up a file port to send a heartbeat message to an MLLP send adapter configured to send to 192.168.1.3, as shown above. The heartbeat message was `<STX><ENQ><ETX>`. I created a socket server similar to the Microsoft example here and modified it by adding the following lines:

```csharp
// Start (STX), end (ETX) and acknowledgement (ACK) characters, as hex strings
protected const string STX = "02";
protected const string ETX = "03";
protected const string ACK = "06";

// ...

// Echo the data back to the client.
// Send(handler, content);

// Send an ACK message back to the client
data = (char)Convert.ToInt32(STX, 16) + data + (char)Convert.ToInt32(ACK, 16) + (char)Convert.ToInt32(ETX, 16);
```

When I dropped the file into the file receive port, it was sent to the MLLP adapter and then on to the socket server, as shown by the printout below. There are five bytes because the configured adapter also adds an extra STX and ETX character to the message.
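The five-byte count is easy to reproduce: the heartbeat file already contains `<STX><ENQ><ETX>` (3 bytes), and the send adapter wraps it in its configured start and end characters (2 more bytes). A minimal sketch of that wrapping, using a hypothetical `Framing.Frame` helper that mirrors the behaviour described above rather than the adapter's actual code:

```csharp
using System;

public static class Framing
{
    // Wraps a payload in a start and an end character, as the configured MLLP send adapter does here.
    public static byte[] Frame(byte[] payload, byte start = 0x02, byte end = 0x03)
    {
        var framed = new byte[payload.Length + 2];
        framed[0] = start;
        Array.Copy(payload, 0, framed, 1, payload.Length);
        framed[framed.Length - 1] = end;
        return framed;
    }
}
```

Framing the heartbeat `{ 0x02, 0x05, 0x03 }` produces `{ 0x02, 0x02, 0x05, 0x03, 0x03 }`: five bytes, with the doubled STX and ETX seen in the printout.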

This proves that an MLLP receive adapter can consume messages from a socket client and that an MLLP send adapter can send to a socket server.

We have shown that the MLLP adapter can be used instead of the Codeplex TCP/IP adapter or the Acme socket adapter.

…but this is not the end for me. My application wants to be the socket client, with BizTalk Server sending a message or heartbeat and the application replying with an ACK, i.e.:

- Application –> connects to BizTalk Server

- BizTalk Server –> sends a message or heartbeat to the application

- Application –> sends an ACK message to BizTalk Server
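For reference, the application side of that exchange could look roughly like the sketch below: it connects, waits for one framed message and answers it with `<STX><ACK><ETX>`. The `HeartbeatClient` class, its host/port parameters and the choice to acknowledge every frame are hypothetical; this covers only the application side and does not remove the need for a custom adapter on the BizTalk side.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Sockets;
using System.Threading.Tasks;

public static class HeartbeatClient
{
    private const byte STX = 0x02, ETX = 0x03, ENQ = 0x05, ACK = 0x06;

    // True when a deframed payload is the single-byte ENQ heartbeat.
    public static bool IsHeartbeat(byte[] payload) => payload.Length == 1 && payload[0] == ENQ;

    // Connects to the listener (BizTalk Server in the target design), waits for one framed
    // message, replies with <STX><ACK><ETX> and returns the payload without STX/ETX.
    public static async Task<byte[]> ConnectAndAcknowledgeOnceAsync(string host, int port)
    {
        using var client = new TcpClient();
        await client.ConnectAsync(host, port);
        NetworkStream stream = client.GetStream();

        var payload = new List<byte>();
        bool inFrame = false;
        var buffer = new byte[1]; // one byte at a time keeps the framing logic simple
        while (true)
        {
            int read = await stream.ReadAsync(buffer, 0, 1);
            if (read == 0) throw new InvalidOperationException("Connection closed before a full frame arrived.");
            byte b = buffer[0];
            if (b == STX) { payload.Clear(); inFrame = true; }
            else if (b == ETX && inFrame) break;
            else if (inFrame) payload.Add(b);
        }

        byte[] ack = { STX, ACK, ETX };
        await stream.WriteAsync(ack, 0, ack.Length);
        return payload.ToArray();
    }
}
```

A real client would loop to handle many heartbeats and read in larger chunks; a single exchange is enough to show the sequence.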

I think I will have to create a custom adapter to do this.

In summary, for the basic case, the MLLP adapter can be used instead of the Codeplex TCP/IP adapter or the Acme socket adapter.

by Steef-Jan Wiggers | Jan 6, 2017 | BizTalk Community Blogs via Syndication

I have said it in the past, I say it now, and I will keep saying it tomorrow: integration is relevant, even in this day and age of digitalization. Integration professionals will play an essential role in providing connectivity between systems, devices and services. Integration drives digitalization forward by connecting everything with anything.

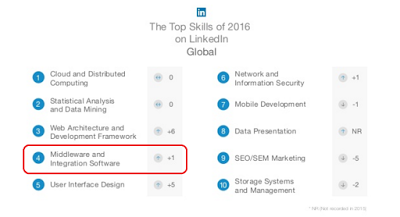

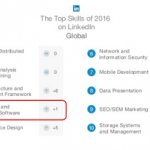

Integration skills are still in demand. A LinkedIn study of the top 10 skills placed integration and middleware in the top 5, alongside cloud, data science, UX and web. The expectation is that 2017 will be similar.

Our toolbox is expanding from on-premises tooling to cloud services: from WCF, BizTalk Server and MSMQ to Logic Apps, API Management and Service Bus. Formats range from flat file, EDI and XML to JSON, there are several protocols, both open and proprietary, and tooling ranges from mappers to BizTalk360. It can be challenging, yet it is much more exciting. My conclusion: more options, more fun, at least in my view.

In February, I will be travelling to Australia to meet up with my buddies Mick and Rene, and Rene is organizing a Meetup in Sydney on the 20th of February. Since I am kind of in the neighborhood, I have decided to visit my friends (Mark and others) in New Zealand too. Hence, I will speak in Auckland on the 14th of February and in Melbourne, where Bill Chesnut resides. That makes three Meetups at which I will speak during my stay down under.

I will be travelling around with Eldert, and our plans look like this:

• Saturday 11-2 – Monday 13-2: Sydney

• Tuesday 14-2 – Wednesday 15-2: Auckland (Meetup)

• Thursday 16-2 – Friday 17-2: Gold Coast (Eldert attends Ignite, Gold Coast)

• Saturday 18-2: Brisbane

• Sunday 19-2 – Monday 20-2: Sydney (Meetup)

• Tuesday 21-2 – Thursday 23-2: Melbourne (Meetup)

• Friday 24-2 – Monday 27-2: Sydney

Hope to see some of you there!

And that’s not all. The following month, on March 25th, there will be the Global Integration Bootcamp, and IntegrationMondays run from the 9th of January until the end of April. Finally, there will be Integrate 2017 in the UK, and TUGAIT with an integration track in May.

So there you go: a tremendous community effort in the coming months!

Author: Steef-Jan Wiggers

Steef-Jan Wiggers is all in on Microsoft Azure, Integration, and Data Science. He has over 15 years’ experience in a wide variety of scenarios such as custom .NET solution development, overseeing large enterprise integrations, building web services, managing projects, designing web services, experimenting with data, SQL Server database administration, and consulting. Steef-Jan loves challenges in the Microsoft playing field, combining them with his domain knowledge in energy, utility, banking, insurance, health care, agriculture, (local) government, bio-sciences, retail, travel and logistics. He is very active in the community as a blogger, TechNet Wiki author, book author, and global public speaker. For these efforts, Microsoft has recognized him as a Microsoft MVP for the past 6 years.

by community-syndication | Jan 6, 2017 | BizTalk Community Blogs via Syndication

Here are two incredible facts: my blog received 318,576 views last year, with the United States as the top country, followed by India, the United Kingdom and Sweden (in 2016 there were 50 new posts, bringing the blog’s total to 534 posts). And I had the opportunity, as a speaker, […]

Blog Post by: Sandro Pereira

by stephen-w-thomas | Jan 5, 2017 | Stephen's BizTalk and Integration Blog

Are you working with Azure Logic Apps inside Visual Studio, and have you seen an error like this after you deploy?

The API connection ‘/subscriptions/{Subscription ID}/resourceGroups/{Resource Group Name}/providers/Microsoft.Web/connections/sql’ is a connection under a managed API. Connections under managed APIs can be used if and only if the API host is a managed API host. Either omit the ‘host.api’ input property, or verify that the specified runtime URL matches the regional managed API host endpoint ‘https://logic-apis-westus.azure-apim.net/’.

What I have found is that a Logic App gets a little sticky to a region: it seems to prefer the region you set when you first created it. Most of the shapes inside a Logic App are internal API calls to Microsoft-hosted services, which look like this in the JSON:

```json
"host": {
  "api": {
    "runtimeUrl": "https://logic-apis-eastus.azure-apim.net/apim/sql"
  },
  "connection": {
    "name": "@parameters('$connections')['sql']['connectionId']"
  }
}
```

As you can see, eastus is set in the runtimeUrl of the internal API call to the SQL API. When this is deployed to another region, Visual Studio currently does not replace this value with the correct region.

So what happens when you deploy to another region? Well, these values are sent as-is.

If you run the Logic App, you will get an error message like the one above.

The fix is simple: once you deploy the Logic App into a new region, open it in the web portal and save it. You do not have to do anything else. This will adjust the runtimeUrl values to the correct region.
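If you would rather correct the definition before deploying than re-save it in the portal, a text substitution on the runtimeUrl host is one option. This is my own hypothetical alternative, not the fix described above and not a Visual Studio feature; it assumes every managed API host in the file follows the `logic-apis-{region}.azure-apim.net` pattern seen in the error message and JSON above.

```csharp
using System;
using System.Text.RegularExpressions;

public static class RuntimeUrlFixer
{
    // Matches a regional managed API host, e.g. "logic-apis-eastus.azure-apim.net".
    private static readonly Regex RegionalHost =
        new Regex(@"logic-apis-[a-z0-9]+\.azure-apim\.net", RegexOptions.IgnoreCase);

    // Rewrites every regional managed API host in a Logic App definition to the target region.
    public static string SetRegion(string definitionJson, string region) =>
        RegionalHost.Replace(definitionJson, $"logic-apis-{region.ToLowerInvariant()}.azure-apim.net");
}
```

Re-saving in the portal remains the simpler fix; a substitution like this only makes sense when the same definition is deployed to several regions from a build script.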

Happy Logic Apping!

by Nino Crudele | Jan 3, 2017 | BizTalk Community Blogs via Syndication

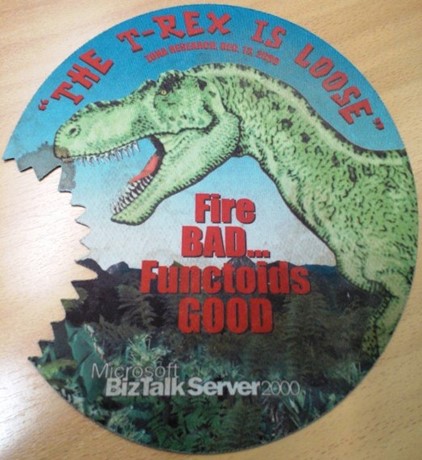

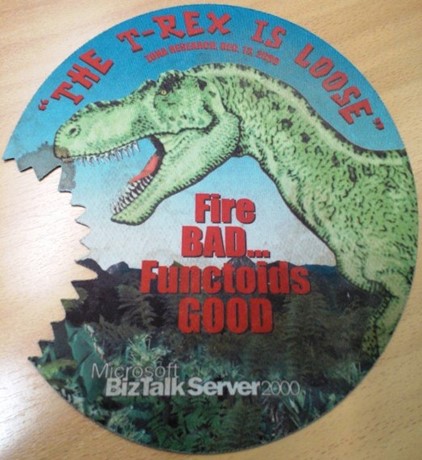

Why do people start comparing BizTalk Server to a T-Rex?

A long time ago, the Microsoft marketing team created this mousepad on the occasion of BizTalk’s 12th birthday.

And my dear friend Sandro produced the fantastic sticker below.

Some time later, people started comparing BizTalk Server to a T-Rex. Honestly, I don’t remember the exact reasons why, but I would like to give my opinion about it.

Why a T-Rex?

Over the last year, I took on many engagements in the UK around BizTalk Server (assessments, mentoring, development, migration, optimization and more), and I heard many theories about it. Let’s go through some of them:

Because BizTalk is old. Well, in that case I’d rather say mature, which is a very good quality for a product: it grew from the 2004 version up to the 2013 R2 version, and over these last 10 years the community has built more material, tools and documentation than many other products can claim.

Because BizTalk is big and monolithic. I think this is just a point of view; BizTalk can be very smart. Most of the time I saw architects drive their solutions in a monolithic way, and usually the problem was a lack of knowledge about BizTalk Server.

Because BizTalk is complicated. Well, at this point Forrest Gump would say “complicated is as complicated does”. During my assessments and mentoring I see so many overcomplicated solutions that could be solved in a very easy way. There are many reasons for that: sometimes we lack the knowledge, other times we don’t want to face the technology and we choose what I like to call the “chicken way”.

Because now we have the cloud. Well, in part I can agree with that but, believe it or not, we still have on-premises systems too: companies still use hardware and still integrate on-premises applications, and integrating systems productively to send data into the cloud efficiently and reliably is very complicated to do. At the moment, BizTalk is still number one at it.

Because BizTalk is expensive. The BizTalk license depends on the number of processors we need to run our solution and achieve the number of messages per second we need to process. This is the main dilemma but, in my opinion, it is quite easy to solve, and this is my simple development theory:

The number of messages we are able to achieve is inversely proportional to the number of bad practices we put into our solution.

Many people ask me this question: Nino, what do you think is the future of BizTalk Server?

I don’t like to speak about the future; I have seen many frameworks come up and disappear after one or two years.

I like to consider the present, and I think BizTalk is a solid product with tons of features, able to cover and support any integration scenario very well.

In my opinion, the main problem is how we approach this technology.

Companies often think of BizTalk as a single product that should cover every aspect of a solution, and this is deeply wrong.

I like to use many technologies together and combine them in the best way but, most importantly, to use the right technology for each specific task.

In my opinion, when we look at a technology we need to weigh all the pros and cons, and we must use the pros properly to avoid the cons.

BizTalk is easily extensible, and we can compensate for any cons very easily.

Below are some of my best personal tips, drawn from my experience in the field:

If you are not comfortable with or sure about BizTalk Server, call an expert: in one or two days they will be able to show you the right way. This is the most important and best tip; I have seen many people blame BizTalk Server instead of their own lack of knowledge.

Use a naming convention that guides your solution properly. I don’t like to reuse the same one, because every solution needs its own structure to be well organized. Believe me, the naming convention is everything in a BizTalk solution.

Use orchestrations only when you need a strict pattern between processes. Orchestrations are the most expensive resource in BizTalk Server; if I need to use one, I use it for this specific reason only.

If I need to use an orchestration, I like to simplify it by using code instead of many BizTalk shapes. I like to use external libraries in my orchestrations; it is simpler than creating tons of shapes and persistence points in the orchestration.

Often we don’t need to use an adapter from an orchestration, which costs system resources: for example, we may need to retrieve data from a database or call another service without needing reliable delivery.

Control your persistence points: we can control them using atomic scopes and .NET code. I like to have only the persistence points I need to recover my process.

Everything can be extensible and reusable: when I start a new project I normally use my templates, and I like to provide my templates to the customer.

I avoid the MessageBox where I need real-time performance. I use two different techniques for that: one is Redis cache, the other is RPC.

One of the big features of BizTalk Server is the ability to reuse artefacts separately and outside the BizTalk engine; this way I can easily achieve real-time performance in a BizTalk Server process.

Often we can use Standard edition instead of Enterprise edition. Standard edition has two main limitations: we can’t have multiple MessageBoxes, and we can’t have multiple BizTalk nodes in the same group.

If the DTS (Down Time Service) is acceptable, I like to use a couple of Standard editions; with a proper configuration and a virtual server environment I can achieve a very good high-availability plan while saving costs.

I always use BAM and implement a good notification and logging system. BizTalk Server is the middleware and, believe me, for any issue you have in production, people will blame BizTalk. In that case, good metrics to manage and quickly troubleshoot any possible issue will make your life great.

Do performance testing with mock services first and with the real external services afterwards. This way we can measure the real performance of our BizTalk solution. I have seen many companies waste a lot of money trying to optimize a BizTalk process when it was the external service that needed optimizing.

To conclude, when I look at BizTalk Server I don’t see a T-Rex.

BizTalk reminds me more of a beautiful woman like Jessica Rabbit: full of qualities but, like any woman, sometimes she plays up; we only need to know how to live together with her 😉

Author: Nino Crudele

Nino has deep knowledge and experience delivering world-class integration solutions using all Microsoft Azure stacks and Microsoft BizTalk Server, and he has delivered world-class integration solutions using and integrating many different technologies such as AS2, EDI, RosettaNet, HL7, RFID and SWIFT.

by community-syndication | Jan 1, 2017 | BizTalk Community Blogs via Syndication

And the most anticipated email arrived once again on 1st January; thanks, Microsoft, for another wonderful start to the New Year. Once again, I’m delighted to share that I was renewed as a Microsoft Azure MVP (Microsoft Most Valuable Professional). This is my 7th straight year in the MVP Program, an amazing journey and experience that […]

Blog Post by: Sandro Pereira