by Bill Chesnut | Dec 12, 2016 | BizTalk Community Blogs via Syndication

If you are interested in learning about Azure API Management, Azure Functions, API Apps and Azure Logic Apps in a hands-on, one-day training course, we have just what you are looking for. The course will be offered on the following dates and locations:

Whether you are looking to move some of your on-premises integration to the cloud or to start from scratch in the cloud, this course will give you the information you need to get started. This course is intended for developers who are responsible for developing cloud-based integration solutions and includes hands-on labs for API Management, Azure Functions and Logic Apps.

A complete syllabus and prerequisites can be found at the registration link above, or contact SixPivot here.

The post Cloud Integration Training–January 2017 appeared first on biztalkbill.

by community-syndication | Dec 12, 2016 | BizTalk Community Blogs via Syndication

First of all, happy 16th birthday, BizTalk Server! For those who don’t remember, the first version of BizTalk Server was released on 12/12/2000. Congratulations!! Continuing with the topic of my last posts, “Errors and Warnings, Causes and Solutions”, we will talk about an error that I faced today using the BizTalk Server WCF […]

Blog Post by: Sandro Pereira

by stephen-w-thomas | Dec 7, 2016 | Stephen's BizTalk and Integration Blog

I have been working a lot with Azure Logic Apps over the past month. Since I am new to Logic Apps, I often run into silly issues that turn out to be trivial to fix. This is one of them.

I was working with the HTTP REST API shape to call a custom API; specifically, I was trying to call the Azure REST API to perform an action on another Logic App (but more on that later).

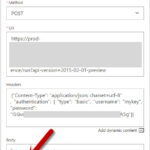

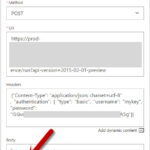

I was setting Content Type and Authorization inside the Headers field as shown below:

I kept receiving this error:

Unable to process template language expressions in action ‘HTTP’ inputs at line ‘1’ and column ‘1234’: ‘Error reading string. Unexpected token: StartObject. Path ‘headers.authentication’.’.

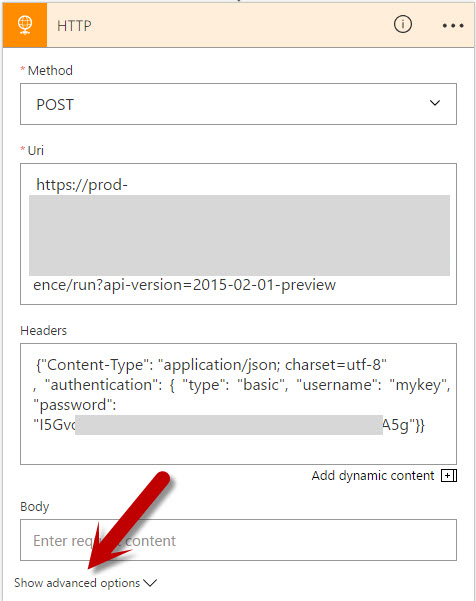

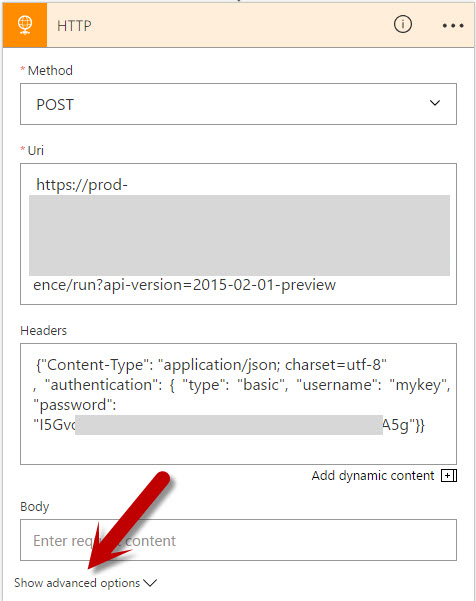

The fix was super simple. I had not expanded Show Advanced Options for this shape. Once it is expanded, you can see that Authorization is broken out from the other Headers. I moved the Authorization section from the Headers to there and it worked as expected!

So, note to self: if something does not work as expected, try expanding the Advanced Options section of the shape to see if that helps.
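The error message hints at why this happens: header values appear to be read as plain strings, so an object nested under `headers` fails with "Unexpected token: StartObject. Path 'headers.authentication'". Authentication instead lives in its own `authentication` property next to `headers` in the action's `inputs`, which is roughly what the Advanced Options section writes. The Python sketch below builds both shapes as dictionaries and flags the broken one; the URI and credential values are placeholders, and `ActiveDirectoryOAuth` is just one of the authentication types the HTTP action accepts.

```python
import json

def non_string_headers(inputs):
    """Return the names of header entries whose values are not plain strings.

    Header values appear to be parsed as strings, so an object nested
    under "headers" (such as an authentication block) matches the
    "Unexpected token: StartObject. Path 'headers.authentication'" error.
    """
    headers = inputs.get("headers", {})
    return [name for name, value in headers.items() if not isinstance(value, str)]

# Placeholder credentials for illustration only.
AUTH = {
    "type": "ActiveDirectoryOAuth",
    "tenant": "<tenant-id>",
    "audience": "https://management.azure.com/",
    "clientId": "<client-id>",
    "secret": "<client-secret>",
}
URI = "https://management.azure.com/<resource-path>"

# What went wrong: the authentication object placed inside "headers".
broken_inputs = {
    "method": "POST",
    "uri": URI,
    "headers": {"Content-Type": "application/json", "authentication": AUTH},
}

# What worked: "authentication" moved out of "headers" to sit beside it.
fixed_inputs = {
    "method": "POST",
    "uri": URI,
    "headers": {"Content-Type": "application/json"},
    "authentication": AUTH,
}

print(non_string_headers(broken_inputs))  # ['authentication']
print(non_string_headers(fixed_inputs))   # []
print(json.dumps({"HTTP": {"type": "Http", "inputs": fixed_inputs}}, indent=2))
```

Only `Content-Type` remains under `headers` in the working version; everything related to credentials moves to the sibling property.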

by Eldert Grootenboer | Dec 6, 2016 | BizTalk Community Blogs via Syndication

In this post, I will show how we can use Visual Studio to write Azure Functions and use these in our Logic Apps. Azure Functions, which went GA on November 15th, are a great way to write small pieces of code that can then be used from various places, such as being triggered by an HTTP request or a message on Service Bus, and they integrate easily with other Azure services like Storage, Service Bus, DocumentDB and more. We can also use our Azure Functions from Logic Apps, which gives us powerful integrations and workflows, using the out-of-the-box Logic Apps connectors and actions and placing our custom code in reusable Functions.

Previously, our main option to write Azure Functions was by using the online editor, which can be found in the portal.

However, most developers are used to developing in Visual Studio, with its great debugging abilities and easy integration with source control. Luckily, earlier this month the preview of Visual Studio Tools for Azure Functions was announced, giving us the ability to write Functions from our beloved IDE. For this post I used a machine with Visual Studio 2015 installed, along with Microsoft Web Developer Tools and the Azure 2.9.6 .NET SDK.

With these in place, our first step is to install Visual Studio Tools for Azure Functions.

Now that the tooling is installed, we can create a new Azure Functions project.

Our project will now be created, and we can start adding functions to it. To do so, right-click the project, choose Add, and then choose New Azure Function…, which loads a dialog where we can select what type of function we want to create. To use an Azure Function from a Logic App, we need to create a Generic Webhook.

For each function we add to our project, a folder will be created with its contents, including a run.csx where we will do our coding, and a function.json file containing the configuration for our Function.

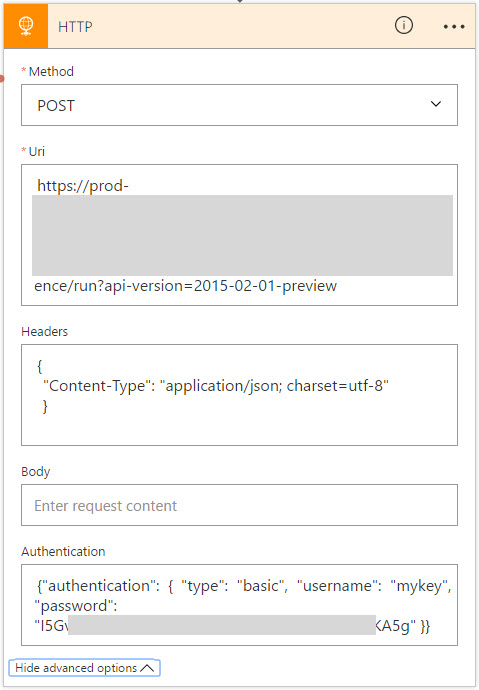

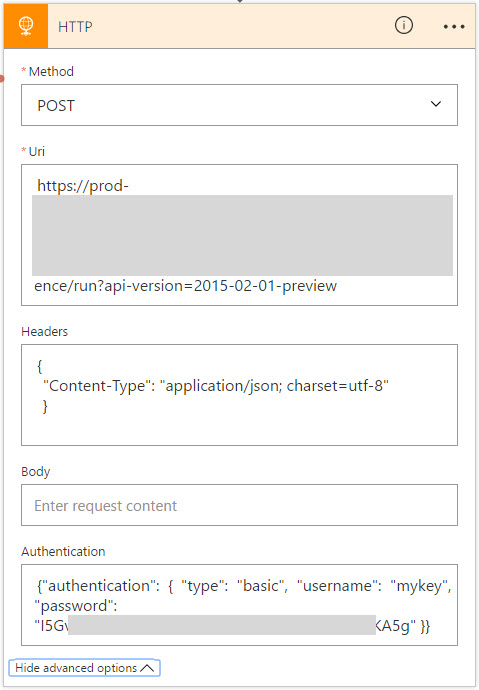

For this sample, let’s write some code that receives an order JSON message and returns a message describing the order, accessing the subnodes of the JSON message.

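```csharp
#r "Newtonsoft.Json"

using System;
using System.Text;
using System.Net;
using Newtonsoft.Json;

// Generic Webhook function: reads an order JSON message and returns a
// short description of the customer and the ordered products.
public static async Task<object> Run(HttpRequestMessage req, TraceWriter log)
{
    // Read the raw request body and parse it into a dynamic JSON object.
    string jsonContent = await req.Content.ReadAsStringAsync();
    dynamic data = JsonConvert.DeserializeObject(jsonContent);

    var toReturn = new StringBuilder($"Company name is {data.Order.Customer.Company}.");

    // Append one sentence per ordered product.
    foreach (var product in data.Order.Products)
    {
        toReturn.Append($" Ordered {product.Amount} of {product.ProductName}.");
    }

    // Convert to a string; serializing the StringBuilder object itself would
    // produce a JSON object describing the builder rather than the text.
    return req.CreateResponse(HttpStatusCode.OK, new { greeting = toReturn.ToString() });
}
```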

As you can see, by using the dynamic keyword, we can easily access the JSON nodes.
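To see what the function returns for a given payload, here is a rough Python equivalent of the traversal in run.csx, run against a hypothetical sample order. The field names (`Order`, `Customer.Company`, `Products`, `Amount`, `ProductName`) are the ones the C# code reads; the sample values are invented for illustration, and this is not part of the Function itself.

```python
import json

# Hypothetical sample payload shaped like the one run.csx expects.
SAMPLE_ORDER = """
{
  "Order": {
    "Customer": {"Company": "Contoso"},
    "Products": [
      {"ProductName": "Widget", "Amount": 3},
      {"ProductName": "Gadget", "Amount": 1}
    ]
  }
}
"""

def describe_order(json_content):
    """Build the same greeting text as the C# function from raw JSON."""
    data = json.loads(json_content)
    order = data["Order"]
    parts = [f"Company name is {order['Customer']['Company']}."]
    # One sentence per product, mirroring the foreach loop in run.csx.
    for product in order["Products"]:
        parts.append(f" Ordered {product['Amount']} of {product['ProductName']}.")
    return {"greeting": "".join(parts)}

print(describe_order(SAMPLE_ORDER))
# {'greeting': 'Company name is Contoso. Ordered 3 of Widget. Ordered 1 of Gadget.'}
```

Posting the same sample body to the Function should give a `greeting` with the same text, assuming the payload matches the expected shape.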

Debugging

Now, one of the strengths of Visual Studio is its great debugging capabilities, and we can now debug our Function locally as well. The first time you start your Function, you will get a popup to download the Azure Functions CLI tools; accept this and wait for the tools to be downloaded and installed.

Once the tools are installed, a local instance of your Function is started and, because we created a Generic Webhook Function, you are given an endpoint to which you can post your message.

Using a tool like Postman, we can now call our function, and debug our code, a great addition for more complex functions!
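If you would rather script the call than use Postman, a request like the one below does the same job. It is a sketch that assumes the local host listens on `http://localhost:7071` and that the function is named `OrderFunction`; both are assumptions, so use the endpoint the CLI prints when it starts.

```python
import json
import urllib.request

# Assumed local endpoint; copy the real one from the Functions CLI output.
LOCAL_URL = "http://localhost:7071/api/OrderFunction"

def build_request(url, payload):
    """Prepare a JSON POST for a Generic Webhook function (not sent yet)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )

def call_function(url, payload, timeout=10):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the Function running under the debugger, `call_function(LOCAL_URL, order)` sends the order and any breakpoints in run.csx are hit just as they would be from Postman.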

Deployment

Once we are happy with how our Function is working, we can deploy it to our Azure account. To do this, right-click the project and choose Publish…, which shows a dialog where we can choose to publish to a Microsoft Azure App Service. Here we can select an existing App Service or create a new one.

Update any settings if needed and finally click Publish; your Function will be published to your Azure account and will be available for your applications. If you make any changes to your Function, just publish the updated function and it will overwrite your existing code.

Now that we have published our Function, let’s create a Logic App that uses it. Again, you could do this from the portal, but luckily these days it is also possible to create Logic Apps from Visual Studio. Go to Tools and choose Extensions and Updates. Here we download and install the Azure Logic Apps Tools for Visual Studio extension.

Now that we have our extension installed, we can create a Logic App project using the Azure Resource Group template.

This will show a dialog with various Azure templates; select the Logic App template.

Now right-click the LogicApp.json file, choose Open With Logic App Designer, and select an account and resource group for your Logic App.

This will load the template chooser, where we can use a predefined template or create a blank Logic App, which is what we will do here. This opens the designer we know from the portal, where we can create our Logic App. We will use an HTTP request as our trigger. If you want, you can specify a JSON schema for your message, which can easily be created with http://jsonschema.net/. Now let’s add an action that uses our Function by clicking New step and Add an action. Use the arrow to show more options, and choose Show Azure Functions in the same region. Now select the function we created earlier.

For this example we will just pass in the body we received in our Logic App, but of course you can make some serious integrations and flows with this using all the possibilities which Logic Apps provide. Finally, we will send back the output from our function as the response.
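Behind the designer, the workflow is stored as JSON inside LogicApp.json. The sketch below builds a stripped-down version of that workflow definition as a Python dictionary: a Request trigger, a Function action that passes the trigger body through, and a Response action that returns the function's output. The action name `OrderFunction` and the function resource ID are hypothetical, and the template Visual Studio generates typically wraps this definition in additional parameters and resources.

```python
import json

# Hypothetical resource ID of the function published earlier.
FUNCTION_ID = ("/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
               "/providers/Microsoft.Web/sites/<function-app>/functions/OrderFunction")

def build_definition(function_id):
    """Return a minimal Logic App workflow definition as a dict."""
    return {
        "$schema": "https://schema.management.azure.com/providers/"
                   "Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
        "contentVersion": "1.0.0.0",
        "triggers": {
            # HTTP request trigger; a JSON schema for the body can go here.
            "manual": {"type": "Request", "kind": "Http", "inputs": {"schema": {}}},
        },
        "actions": {
            "OrderFunction": {
                "type": "Function",
                # Pass the body received by the Logic App straight through.
                "inputs": {"function": {"id": function_id}, "body": "@triggerBody()"},
                "runAfter": {},
            },
            "Response": {
                "type": "Response",
                "inputs": {"statusCode": 200, "body": "@body('OrderFunction')"},
                # Respond only after the function call has succeeded.
                "runAfter": {"OrderFunction": ["Succeeded"]},
            },
        },
        "outputs": {},
    }

print(json.dumps(build_definition(FUNCTION_ID), indent=2))
```

The `runAfter` entry is what chains the Response to the Function action; the designer fills it in for you when you add steps in order.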

Deployment

Right-click your project, choose Deploy, and select the resource group you selected earlier. Edit your parameters, which will include any connection settings for resources used in your Logic App as well. Once all your properties have been set, click OK to start the deployment.

Note that if you get the error “The term ‘Get-AzureRmEnvironment’ is not recognized as the name of a cmdlet, function, script file, or operable program.”, you might have to restart your computer for the scripts to be loaded correctly.

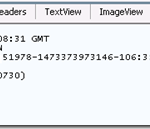

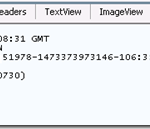

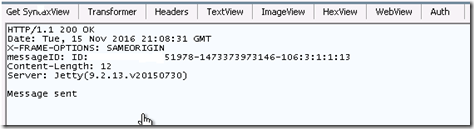

Now that your logic app is deployed, you can use Postman on your Logic App endpoint, and you will see the output from your Azure Function.

The code with this post can be found here.

by Dan Toomey | Dec 6, 2016 | BizTalk Community Blogs via Syndication

You may have noticed that I haven’t been too active on the social media / blogging front of late. It certainly isn’t because there isn’t much to write about…especially when you consider the release of BizTalk Server 2016 (including the Logic Apps Adapter), the General Availability of Azure Functions, and many other integration events leading up to these! And for those on the certification path, there’s news of the refresh of the Azure exams as well.

In fact, it is that very last item that accounts for a good deal of my scarcity in the blogging world of late. My employer is keen for as many of us as possible to earn the Microsoft Certified Solutions Expert (MCSE) accreditation in Azure. I’ve already passed the first of three required exams, MS 70-532 Developing Microsoft Azure Solutions, after several weeks of after-hours study (hours that might have been spent blogging). That accomplishment has earned me this nice little badge:

I’m now currently studying for the next exam, MS 70-533 Implementing Microsoft Azure Infrastructure Solutions. Passing this exam will earn me a Microsoft Certified Solutions Associate (MCSA) qualification in Cloud Platform. However, it won’t stop there as I’ll need to pass one more exam – MS 70-534 Architecting Microsoft Azure Solutions in order to attain the coveted MCSE in Cloud Platform and Infrastructure. All I can say is that I’ll be doing a lot of studying over the Christmas holidays…

Aside from studying for exams, I’ve also been heavily tasked at work as Mexia has had a profoundly successful sales year in 2016, which translates into an overload of work! No wonder we’re heavily recruiting right now, looking for those “unicorns” that can help us remain the best integration consultancy in Australia. There has been a fair amount of travel lately, and as Mexia’s only Microsoft Certified Trainer (MCT) I will continue to deliver courses in BizTalk Server Development, BizTalk Server Administration, BizTalk360, and Azure Readiness. That means many more hours preparing all of that content.

But it hasn’t stopped me from speaking, at least not entirely. Aside from regular presentations at the Brisbane Azure User Group (including this one on Microsoft Flow), I’ve also been a guest presenter at Xamarin Dev Days in Brisbane where I talked about Connected and Disconnected Apps with Azure Mobile Apps.

Looking forward to writing posts more regularly again after this exam crunch is over. There are a lot of exciting things happening in the integration world right now!

by Mark Brimble | Dec 4, 2016 | BizTalk Community Blogs via Syndication

This blog post describes how we can publish a message using BizTalk Server and the Apache ActiveMQ REST API. In a previous post I wrote about consuming a message from an AMQ; in this article I show how to do it the other way around.

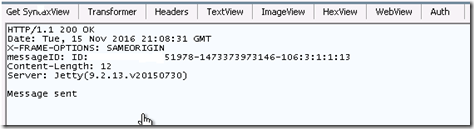

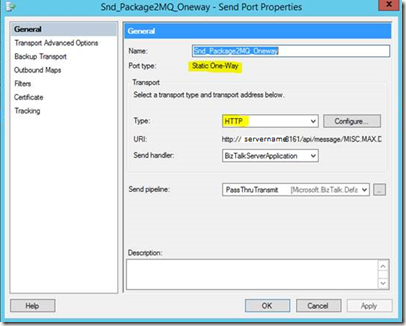

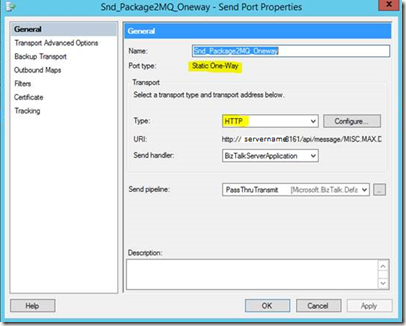

To begin with, we tried to use a BizTalk WCF-WebHTTP adapter to POST a message to the AMQ with a request URL like http://servername:8161/api/message/MISC.MAX.DATA?type=queue&clientId=misc_data_biztalk&message_type=7222&message_version=2. We were pleased to see the message added to the queue with the correct properties. Unfortunately, we got a transmission failure like “System.ServiceModel.ProtocolException: An HTTP Content-Type header is required for SOAP messaging and none was found” in the BizTalk Group Hub, even though the message was added to the queue successfully. When we tried the request using Fiddler, it showed that the response does not contain a Content-Type header.

Getting a transmission failure each time BizTalk posts a message is not acceptable, so we searched for a solution. We created a custom behaviour to add a Content-Type to the header of the response, but the WCF-WebHTTP adapter transmission failure occurs before the message gets to that point. Next, we tried to configure the AMQ to add a content type, but could not find the correct “config” to edit.

Finally, we decided to use the BizTalk HTTP adapter instead of the WCF-WebHTTP adapter to POST the message to the AMQ. The HTTP adapter is a very old adapter, and I reasoned that it might not be as picky about what is in the header of the response. It was pleasing to find that the HTTP adapter did POST a message to the AMQ without a transmission failure. This is a good example of where the HTTP adapter can do something that the more modern WCF-WebHTTP adapter cannot. Thanks to Deepa Kamalanathan for proving all this.
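The two halves of the problem can be sketched outside BizTalk. First, the request URL carries the destination in the path and the client ID and message properties in the query string. Second, a client that judges the POST by its status code alone, which is roughly how the HTTP adapter behaved here, is unaffected by a response that has no Content-Type. This Python sketch is not how either BizTalk adapter is implemented; the helper names are hypothetical, and sending the body as `text/xml` is an assumption.

```python
import urllib.request
from urllib.parse import urlencode

def amq_message_url(host, destination, dest_type="queue", port=8161, **properties):
    """Build an ActiveMQ REST API message URL like the one used above.

    The destination goes in the path; "type" and any extra keyword
    arguments (clientId, custom message properties, ...) go in the
    query string in the order given.
    """
    query = urlencode({"type": dest_type, **properties})
    return f"http://{host}:{port}/api/message/{destination}?{query}"

def post_message(url, body, content_type="text/xml", timeout=30):
    """POST a message body and return (status, response Content-Type).

    Success is judged by the status code alone; a response without a
    Content-Type header is reported as None instead of being treated
    as a protocol error.
    """
    request = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": content_type}
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses, so a
    # normal return means the broker accepted the request.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.headers.get("Content-Type")

url = amq_message_url(
    "servername", "MISC.MAX.DATA",
    clientId="misc_data_biztalk", message_type=7222, message_version=2,
)
print(url)
# http://servername:8161/api/message/MISC.MAX.DATA?type=queue&clientId=misc_data_biztalk&message_type=7222&message_version=2
```

Calling `post_message(url, b"<message/>")` against a broker that omits the Content-Type returns `(200, None)` rather than raising, which is the tolerance the WCF-WebHTTP adapter lacked.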

In summary, we have now shown that you can consume messages from an Apache AMQ with the BizTalk WebHTTP adapter and that you can publish messages to the AMQ with the HTTP adapter. One question remains: what throughput can be achieved using these adapters?

by community-syndication | Dec 4, 2016 | BizTalk Community Blogs via Syndication

In this blog post we will discuss an Azure service called Azure Functions. This service recently (on the 15th of November) went GA (Generally Available) and provides the capability to run small pieces of code in Azure. With Functions, you do not have to deal much with infrastructure; that is the responsibility of Azure or an application (see Azure Functions Overview). The functionality you

by BizTalk Team | Dec 1, 2016 | BizTalk Community Blogs via Syndication

Today, we have the pleasure of announcing the general availability (GA) of BizTalk Server 2016 – our tenth release. You can read the full announcement on the Microsoft Hybrid Blog here.

Alongside this momentous occasion, we are also GA’ing today the BizTalk Server 2016 Logic Apps adapter – see here. This new adapter enables you to call Logic Apps from BizTalk Server 2016, giving you easy access from BizTalk applications to our vast array of cloud connectors in Azure and truly enabling the hybrid cloud. To understand more about this adapter see here.

And that’s not all! We’re also shipping today the GA of our new BizTalk Server 2016 updated System Center Operations Manager (SCOM) management pack as well – get that here.

This new management pack includes the following enhancements:

– Support for both SCOM 2016 and SCOM 2012 R2

– Discovery of multiple versions of BizTalk Server

– Fixed the issue with the discovery of the installed BizTalk Server version

– Show BizTalk .NET performance counters for localized languages

We hope you enjoy the new features and, as always, give us your feedback. Today is a great day to be a BizTalker!

by bchesnut | Dec 1, 2016 | BizTalk Community Blogs via Syndication

Today marks several milestones in the history of BizTalk: it was originally released around this time in 2000, and the latest version has gone into General Availability.

There is a long list of what is new in BizTalk Server 2016 (the complete list can be found here); I will highlight the ones that will be most significant to most existing BizTalk Server customers:

- SQL Server 2016 AlwaysOn Availability Groups – no more SQL log shipping for DR

- Support for Visual Studio 2015, Windows Server 2016, SQL Server 2016 and Office 2016

- Re-engineered SFTP adapter which will support more SFTP servers

- Shared Access Signature support for WCF Bindings and Service Bus Messaging Adapter

Of all the items in the What’s New list, the most significant is the SQL Server 2016 AlwaysOn Availability Groups support. In my career with BizTalk, the biggest challenge at most customers was setting up a proper DR environment. Since the release of AlwaysOn Availability Groups in SQL Server 2012, most customers’ DBAs have asked why we can’t use AlwaysOn Availability Groups, and I had to explain that they did not support MSDTC transactions, which BizTalk uses between databases (on both single and multiple SQL Servers) for transactional support.

So what has changed to make it supported now? Heaps: there have been changes to Windows Server 2016 and SQL Server 2016 that now allow MSDTC transactions to be supported by AlwaysOn Availability Groups. There are a few very specific details that need to be followed when setting this up for BizTalk. The most notable is that BizTalk requires at least 4 SQL instances for its databases, because the current implementation of MSDTC supported by AlwaysOn Availability Groups does not support transactions within the same SQL instance, which BizTalk uses extensively.

If you are interested in talking about how the new features and changes in BizTalk Server 2016 can help your existing BizTalk Infrastructure, please contact me.

by Rob Callaway | Dec 1, 2016 | BizTalk Community Blogs via Syndication

Introduction

This blog is usually reserved for technical posts and QuickLearn Training announcements, but something came across my Facebook feed a while back and I’ve found myself revisiting it in my mind over and over, so I have some thoughts / predictions / musings that I want to express.

Some Background

I’ve been training people how to be BizTalk Server developers and administrators since 2005. That’s a pretty long time; and in that time I’ve hit the job market looking for a new position on only a couple of occasions because I really love my job.

But I know that I’m one of the lucky ones. There are plenty of people out there looking to advance their careers. Others hate the company they work for. Plenty of people feel stuck in dead-end positions. And there are definitely a few looking to completely start over.

What’s the Point?

This brings me to my point. If any of that sounds like you, or someone you know, check out LinkedIn’s “Top Skills That Can Get You Hired in 2017” blog post (this is the thing that I saw on my Facebook feed). In it they list the top 10 skills based on the jobs listed on LinkedIn in 2016.

Of course, as someone who specializes in integration, I was pleased as punch to see Middleware and Integration Software in the #4 spot globally. Furthermore, Cloud and Distributed Computing is in the #1 spot (not surprising).

Naturally I couldn’t help but think of Logic Apps since it’s the convergence of those two categories. Logic Apps are in a position to change the game for a lot of organizations and people. I think we’re going to see a dramatic increase in the number of organizations / development teams looking for “cloud” developers with an integration background.

Don’t Tell Me BizTalk Is Dead, Because It’s Not

Just because that flashy new cloud-based integration platform comes rolling down the street doesn’t mean I’ve forgotten about BizTalk Server (my first love). Microsoft has increased their investment in BizTalk Server over the past 2 years, and just released BizTalk Server 2016 (I’m still waiting for Nick Hauenstein to start writing about all the new features). In the past year, Microsoft has changed its tune regarding Azure.

The new buzzword is Hybrid. I don’t want to dismiss that as a buzzword though. Hybrid (or more specifically, Hybrid Integration) is blending new Azure or cloud-based systems with existing on-premises systems. No one is going to abandon all of their on-premises investments overnight to adopt a cloud platform. The companies that are moving to the cloud are doing so slowly and deliberately one system / project at a time. No one is saying “Pack everything up Ted, we’re moving to the cloud.” Instead cloud services are used for new development.

As more workloads start running in the cloud, organizations need skilled people to connect those cloud services to data and services that live on-premises. BizTalk Server is a prime candidate to be your hybrid integration platform. Gartner estimates that by 2020, 75% of large organizations will have a hybrid integration platform. Those companies are going to need savvy integration professionals to build those platforms.

We Live in a Connected World

Our world seems to get more connected day-by-day. Mobile apps and IoT (Internet of Things) have changed the way people live their lives and neither is fading away any time soon. Oh yeah, I almost forgot to mention that in the LinkedIn article, Mobile Development holds the #7 spot.

That Gartner report I referenced a second ago states that 70% of mobile app development costs are related to integration and that integration represents 50% of the cost in IoT solutions. You know that all these systems don’t magically connect to each other. Someone has to build those connections, and that someone could be you.

Becoming a Unicorn

That sea-change the cloud was supposed to bring… it’s here. Companies have started adopting cloud technologies and they aren’t going to stop. As integration professionals, we are in a unique position to capitalize on this change. But with the demand as high as it is, you’re going to have to stand out. If your skills included integration (on-premises and cloud) + cloud development + mobile development, you’d be poised to land some of the most coveted jobs.

I didn’t intend for this to be a sales pitch, but if you need help getting there, QuickLearn Training can help you out. Our courses on BizTalk Server (updated for BizTalk Server 2016 starting in January 2017) and our Cloud-Based Integration Using Azure Logic Apps course will equip you with the deep skills you need to become the elusive unicorn that companies are looking for.

On the other side of the coin, if you’re looking to get some unicorns on your team, they are hard to find and will come at a cost. Honestly, you’re probably better off making your own unicorn. Time and again I hear from customers about horror stories where they hired someone who wasn’t a good fit. Or the consultant they contracted with disappeared and now they are stuck without support. I genuinely think the best option for most teams or organizations is to find the person you want and then help them gain the skills you need.

I’m not boasting when I say that I’ve had more than a handful of students tell me that my course(s) helped them find a direction for their career; if anything it is a rather humbling experience to realize that you have played a role in changing their lives. As a trainer, I love that my job is to make other people’s lives better, and I’d like to help make yours better too.

I know that I speak for everyone here at QuickLearn Training when I say, make 2017 awesome by becoming a unicorn!