by community-syndication | Mar 31, 2010 | BizTalk Community Blogs via Syndication

I have been working with Windows Server AppFabric caching lately and have found it very impressive. The more I work with it, the more areas I see where it can be used. One thing that becomes evident as soon as you start using it is that much of the setup and configuration is done through PowerShell cmdlets.

I am putting together an application that needs to create and pre-populate the cache. I knew the cache could be created directly from PowerShell, but I wanted to call the PowerShell cmdlets from .NET. So, let’s look first at what is needed to create the cache from PowerShell, and then at the code to call the cmdlets from .NET.

This assumes that AppFabric has been installed and configured.

Open a PowerShell command window. The first thing that we are going to do is to import two modules and then we can start issuing commands. First, type “Import-Module DistributedCacheAdministration” (without the quotes) and hit enter. This will import the cache administration cmdlets. Next type “Import-Module DistributedCacheConfiguration” and hit enter. This will import the cache configuration cmdlets.

The next command brings the current machine’s cluster configuration into the PowerShell session context. Type “Use-CacheCluster” and hit enter. We have now entered all that we need to start interacting with the cache. The first thing to do is ensure that the cache service is running. Type “Get-CacheHost” and hit enter, then check whether the Service Status shows UP. If it shows DOWN, issue the following commands to start it. Type “$hostinfo = Get-CacheHost” and hit enter. Then type “Start-CacheHost $hostinfo.HostName $hostinfo.PortNo” and hit enter.

At this point we are ready to create the cache using the New-Cache cmdlet. Type “New-Cache <your cache name here>” and hit enter. This will create a cache with your name that is ready to use.

If you want to see the configuration for your newly created cache type in “Get-CacheConfig <your cache name here>” and hit enter. You will see the following configuration attributes and their settings.

CacheName : MyCache

TimeToLive : 10 mins

CacheType : Partitioned

Secondaries : 0

IsExpirable : False

EvictionType : LRU

NotificationsEnabled : False
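The interactive steps above can also be collected into a single script. The sketch below, in Python, only assembles the PowerShell text from the cmdlets named in this post. The function names `build_new_cache_script` and `run_script`, and the cache-name check, are illustrative assumptions rather than anything AppFabric prescribes.

```python
import re
import subprocess

# Module and cmdlet names are the ones used in the walkthrough above.
MODULES = ("DistributedCacheAdministration", "DistributedCacheConfiguration")


def build_new_cache_script(cache_name):
    """Return the PowerShell commands that create a cache and show its config."""
    # Assumption: restrict names to letters, digits and underscores so the
    # value can be placed in the script without quoting. This is a safety
    # check for this sketch, not a documented AppFabric naming rule.
    if not re.fullmatch(r"[A-Za-z0-9_]+", cache_name):
        raise ValueError(f"unsupported cache name: {cache_name!r}")
    lines = [f"Import-Module {module}" for module in MODULES]
    lines.append("Use-CacheCluster")
    lines.append(f"New-Cache {cache_name}")
    lines.append(f"Get-CacheConfig {cache_name}")
    return "\n".join(lines)


def run_script(script):
    """Run the script with Windows PowerShell (needs a machine with AppFabric)."""
    subprocess.run(["powershell", "-NoProfile", "-Command", script], check=True)


if __name__ == "__main__":
    print(build_new_cache_script("MyCache"))
```

Calling `run_script(build_new_cache_script("MyCache"))` on a configured cache host would then perform the same steps as typing them by hand.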

To do all of this in code we need to add three references to our project: the System.Management.Automation assembly, plus the Microsoft.ApplicationServer.Caching.Core and Microsoft.ApplicationServer.Caching.Client assemblies. The two caching assemblies can be found in the Windows\System32\AppFabric directory.

To set up the environment in .NET to issue PowerShell commands we start with the InitialSessionState class, which describes the initial configuration (including imported modules) of a PowerShell runspace. A runspace is the operating environment in which you create pipelines that run the cmdlets.

So, just as above, we start by importing the DistributedCacheAdministration and DistributedCacheConfiguration modules. This is done in the source code below with the state.ImportPSModule method. Once the session state is set up, we call RunspaceFactory to create a new runspace. We then call its Open method and can start creating commands through a pipeline.

In the code below we create two pipelines. The first runs our Use-CacheCluster command, which takes no arguments and returns nothing. The second runs the New-Cache command. This one takes a set of arguments, so we create it a bit differently. First, we create a Command object and set a parameter on it for each argument we want to pass in. In this example I am providing a name and telling the caching system that I don’t want the cache items to expire. Next, we pass the Command object to the pipeline and, lastly, call the Invoke method as shown in the code below:

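using System;
using System.Collections.Generic;
using System.Management.Automation;
using System.Management.Automation.Runspaces;
using Microsoft.ApplicationServer.Caching;

private void CreateCache(string cacheName)
{
    // The endpoint can also be kept in a config file.
    var config = new DataCacheFactoryConfiguration();
    config.Servers = new List<DataCacheServerEndpoint>
    {
        new DataCacheServerEndpoint(Environment.MachineName, 22233)
    };
    // Kept from the original listing; the factory is not used to create the cache.
    DataCacheFactory dcf = new DataCacheFactory(config);

    // Import both cache modules into the session, as in the PowerShell steps above.
    var state = InitialSessionState.CreateDefault();
    state.ImportPSModule(new string[] { "DistributedCacheAdministration", "DistributedCacheConfiguration" });
    state.ThrowOnRunspaceOpenError = true;

    using (var rs = RunspaceFactory.CreateRunspace(state))
    {
        rs.Open();

        // First pipeline: bring the cluster configuration into the session.
        using (var pipe = rs.CreatePipeline())
        {
            pipe.Commands.Add(new Command("Use-CacheCluster"));
            pipe.Invoke();
        }

        // Second pipeline: create the cache with non-expiring items.
        // Parameter names are as in the original post; check Get-Help New-Cache
        // for the names your AppFabric version expects.
        using (var pipe = rs.CreatePipeline())
        {
            var cmd = new Command("New-Cache");
            cmd.Parameters.Add(new CommandParameter("Name", cacheName));
            cmd.Parameters.Add(new CommandParameter("Expirable", false));
            pipe.Commands.Add(cmd);
            var output = pipe.Invoke();
        }
    }
}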

We now have a cache created for us from .NET code.

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

Keeping the raging “iCant do a thing; iCant fwd a txt msg or voice mail” iPhone debate going, the new Windows Phone 7 tools are here in all their Silverlight glory.

Imagine being able to play a FLASH movie on the phone! Shock horror.

So grab the next big thing and look out, phone world... the way phones were meant to be 😉

Windows Phone Developer Tools

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

This is the sixteenth in a series of blog posts I’m doing on the upcoming VS 2010 and .NET 4 release.

Today’s post is the first of a few blog posts I’ll be doing that talk about some of the important changes we’ve made to make Web Forms in ASP.NET 4 generate clean, standards-compliant, CSS-friendly markup. Today I’ll cover the work we are doing to provide better control over the “ID” attributes rendered by server controls to the client.

[In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu]

Clean, Standards-Based, CSS-Friendly Markup

One of the common complaints developers have often had with ASP.NET Web Forms is that when using server controls they don’t have the ability to easily generate clean, CSS-friendly output and markup. Some of the specific complaints with previous ASP.NET releases include:

- Auto-generated ID attributes within HTML make it hard to write JavaScript and style with CSS

- Use of tables instead of semantic markup for certain controls (in particular the asp:menu control) makes styling ugly

- Some controls render inline style properties even if no style property on the control has been set

- ViewState can often be bigger than ideal

ASP.NET 4 provides better support for building standards-compliant pages out of the box. The built-in <asp:> server controls with ASP.NET 4 now generate cleaner markup and support CSS styling – and help address all of the above issues.

Markup Compatibility When Upgrading Existing ASP.NET Web Forms Applications

A common question people often ask when hearing about the cleaner markup coming with ASP.NET 4 is “Great – but what about my existing applications? Will these changes/improvements break things when I upgrade?”

To help ensure that we don’t break assumptions around markup and styling with existing ASP.NET Web Forms applications, we’ve enabled a configuration flag, controlRenderingCompatibilityVersion, within web.config that lets you decide if you want to use the new cleaner markup approach that is the default with new ASP.NET 4 applications, or for compatibility reasons render the same markup that previous versions of ASP.NET used. The flag is an attribute of the <pages> element, for example <pages controlRenderingCompatibilityVersion="3.5" />.

When the controlRenderingCompatibilityVersion flag is set to “3.5” your application and server controls will by default render output using the same markup generation used with VS 2008 and .NET 3.5. When the controlRenderingCompatibilityVersion flag is set to “4.0” your application and server controls will strictly adhere to the XHTML 1.1 specification, have cleaner client IDs, render with semantic correctness in mind, and have extraneous inline styles removed.

This flag defaults to 4.0 for all new ASP.NET Web Forms applications built using ASP.NET 4. Any previous application that is upgraded using VS 2010 will have the controlRenderingCompatibilityVersion flag automatically set to 3.5 by the upgrade wizard to ensure backwards compatibility. You can then optionally change it (either at the application level, or scope it within the web.config file to be on a per page or directory level) if you move your pages to use CSS and take advantage of the new markup rendering.

Today’s Cleaner Markup Topic: Client IDs

The ability to have clean, predictable, ID attributes on rendered HTML elements is something developers have long asked for with Web Forms (ID values like “ctl00_ContentPlaceholder1_ListView1_ctrl0_Label1” are not very popular). Having control over the ID values rendered helps make it much easier to write client-side JavaScript against the output, makes it easier to style elements using CSS, and on large pages can help reduce the overall size of the markup generated.

New ClientIDMode Property on Controls

ASP.NET 4 supports a new ClientIDMode property on the Control base class. The ClientIDMode property indicates how controls should generate client ID values when they render. The ClientIDMode property supports four possible values:

- AutoID: Renders the output as in .NET 3.5 (auto-generated IDs which will still render prefixes like ctl00 for compatibility)

- Predictable (default): Trims any “ctl00” ID string and, for a list/container control, concatenates child IDs (example: id="ParentControl_ChildControl")

- Static: Hands over full ID naming control to the developer; whatever they set as the ID of the control is what is rendered (example: id="JustMyId")

- Inherit: Tells the control to defer to the naming behavior mode of the parent container control

The ClientIDMode property can be set directly on individual controls, for example <asp:Label ID="Message" ClientIDMode="Static" runat="server" /> (or on container controls, in which case the controls within them will by default inherit the setting).

Or it can be specified at a page or usercontrol level using the <%@ Page %> or <%@ Control %> directives, for example <%@ Page ClientIDMode="Predictable" %>, in which case controls within the pages/usercontrols inherit the setting (and can optionally override it).

Or it can be set within the web.config file of an application, for example <pages clientIDMode="Predictable" /> under <system.web>, in which case pages within the application inherit the setting (and can optionally override it).

This gives you the flexibility to customize/override the naming behavior however you want.

Example: Using the ClientIDMode property to control the IDs of Non-List Controls

Let’s take a look at how we can use the new ClientIDMode property to control the rendering of “ID” elements within a page. To help illustrate this we can create a simple page called “SingleControlExample.aspx” that is based on a master-page called “Site.Master”, and which has a single <asp:label> control with an ID of “Message” that is contained within an <asp:content> container control called “MainContent”:

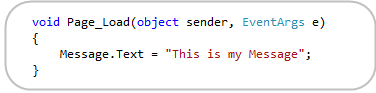

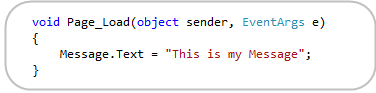

Within our code-behind we’ll then add some simple code like below to dynamically populate the Label’s Text property at runtime:

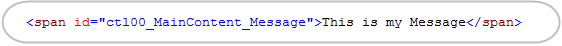

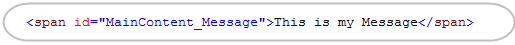

If we were running this application using ASP.NET 3.5 (or had our ASP.NET 4 application configured to run using 3.5 rendering or ClientIDMode=AutoID), then the label sent down to the client would be a <span> element with id="ctl00_MainContent_Message".

This ID is unique (which is good), but rather ugly because of the “ctl00” prefix (which is bad).

Markup Rendering when using ASP.NET 4 and the ClientIDMode is set to “Predictable”

With ASP.NET 4, server controls by default now render their IDs using ClientIDMode=”Predictable”. This helps ensure that ID values are still unique and don’t conflict on a page, but at the same time it makes the IDs less verbose and more predictable. This means that our <asp:label> control above will by default now render with id="MainContent_Message" in ASP.NET 4.

Notice that the “ctl00” prefix is gone. Because the “Message” control is embedded within a “MainContent” container control, by default its ID will be prefixed “MainContent_Message” to avoid potential collisions with other controls elsewhere within the page.

Markup Rendering when using ASP.NET 4 and the ClientIDMode is set to “Static”

Sometimes you don’t want your ID values to be nested hierarchically, though, and instead just want the ID rendered to be whatever value you set it as. To enable this you can now use ClientIDMode=Static, in which case the ID rendered will be exactly the same as what you set on the server-side on your control. With ASP.NET 4 the label above will then render with id="Message".

This option now gives you the ability to completely control the client ID values sent down by controls.
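To make the difference between the modes concrete, here is a deliberately simplified model in Python. It is not ASP.NET's actual algorithm: the `Control` class, the `naming_container` flag and the rule "drop any ancestor ID that starts with ctl" are assumptions made for illustration, and the "ctl00" master-page ID is the auto-generated prefix discussed above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Control:
    id: str
    mode: str = "Inherit"              # AutoID | Predictable | Static | Inherit
    parent: Optional["Control"] = None
    naming_container: bool = False


def effective_mode(control, page_default="Predictable"):
    """Walk up the tree until a control states a mode other than Inherit."""
    node = control
    while node is not None:
        if node.mode != "Inherit":
            return node.mode
        node = node.parent
    return page_default


def client_id(control, page_default="Predictable"):
    """Compute the rendered id under this toy model."""
    mode = effective_mode(control, page_default)
    if mode == "Static":
        return control.id
    # Collect the IDs of enclosing naming containers, outermost first.
    prefixes = []
    node = control.parent
    while node is not None:
        if node.naming_container:
            prefixes.append(node.id)
        node = node.parent
    prefixes.reverse()
    if mode == "Predictable":
        # Simplification: treat any "ctl..." ancestor ID as auto-generated.
        prefixes = [p for p in prefixes if not p.startswith("ctl")]
    return "_".join(prefixes + [control.id])


def sample_label(mode):
    """The Message label inside MainContent inside an unnamed master page."""
    master = Control("ctl00", naming_container=True)
    content = Control("MainContent", parent=master, naming_container=True)
    return Control("Message", mode=mode, parent=content)


for mode in ("AutoID", "Predictable", "Static", "Inherit"):
    print(mode, "->", client_id(sample_label(mode)))
```

Under these assumptions AutoID yields ctl00_MainContent_Message, Predictable and Inherit yield MainContent_Message, and Static yields Message, matching the three cases described above.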

Example: Using the ClientIDMode property to control the IDs of Data-Bound List Controls

Data-bound list/grid controls have historically been the hardest to use/style when it comes to working with Web Forms’ automatically generated IDs. Let’s now take a look at a scenario where we’ll customize the IDs rendered using a ListView control with ASP.NET 4.

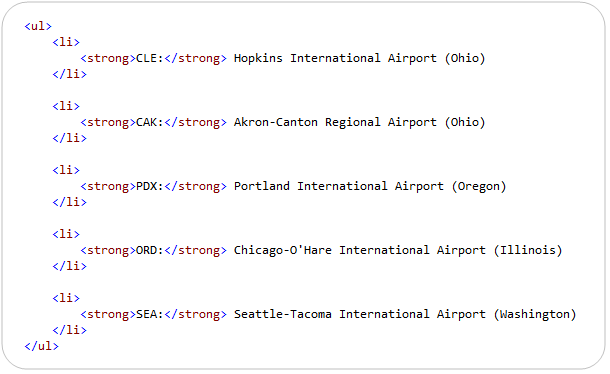

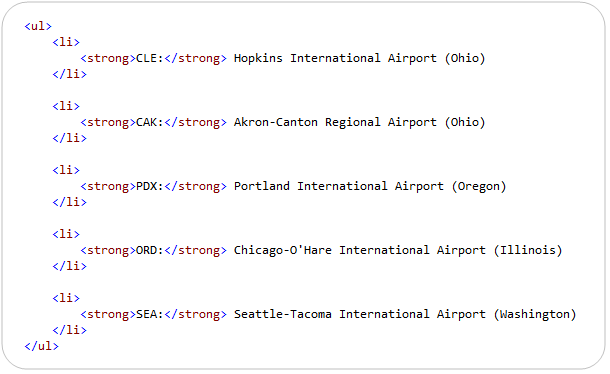

The code snippet below is an example of a ListView control that displays the contents of a data-bound collection – in this case, airports:

We can then write code like below within our code-behind to dynamically databind a list of airports to the ListView above:

At runtime this will then by default generate a <ul> list of airports like below. Note that because the <ul> and <li> elements in the ListView’s template are not server controls, no IDs are rendered in our markup:

Adding Client IDs to Each Row Item

Now, let’s say that we wanted to add client IDs to the output so that we can programmatically access each <li> via JavaScript. We want these IDs to be unique, predictable, and identifiable.

A first approach would be to mark each <li> element within the template as being a server control (by giving it a runat=server attribute) and by giving each one an id of “airport”:

By default ASP.NET 4 will now render clean IDs like below (no ctl00-like IDs are rendered):

Using the ClientIDRowSuffix Property

Our template above now generates unique IDs for each <li> element, but if we are going to access them programmatically on the client using JavaScript we might want to instead have the IDs contain the airport code within them to make them easier to reference. The good news is that we can easily do this by taking advantage of the new ClientIDRowSuffix property on databound controls in ASP.NET 4 to better control the IDs of our individual row elements.

To do this, we’ll set the ClientIDRowSuffix property to “Code” on our ListView control. This tells the ListView to use the databound “Code” property from our Airport class when generating the ID:

And now instead of having row suffixes like “1”, “2”, and “3”, we’ll instead have the Airport.Code value embedded within the IDs (e.g. _CLE, _CAK, _PDX, etc.):

You can use this ClientIDRowSuffix approach with other databound controls like the GridView as well. It is useful anytime you want to program row elements on the client – and use clean/identified IDs to easily reference them from JavaScript code.
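The effect of ClientIDRowSuffix can be sketched in a few lines of Python. This is only a model of the naming idea: `row_client_ids` is a hypothetical helper, the starting index of the sequential suffix is an assumption, the "City" field is sample data, and real rendered IDs would also carry the prefixes of the enclosing containers.

```python
def row_client_ids(base_id, rows, row_suffix=None, start=0):
    """Return one id per row: base_id plus an index or a data-key suffix."""
    ids = []
    for index, row in enumerate(rows, start=start):
        # With a row suffix configured, use that field of the bound item;
        # otherwise fall back to a sequential number.
        suffix = row[row_suffix] if row_suffix else index
        ids.append(f"{base_id}_{suffix}")
    return ids


# Sample data for illustration; the codes are the ones mentioned above.
airports = [
    {"Code": "CLE", "City": "Cleveland"},
    {"Code": "CAK", "City": "Akron-Canton"},
    {"Code": "PDX", "City": "Portland"},
]

print(row_client_ids("airport", airports))
print(row_client_ids("airport", airports, row_suffix="Code"))
```

The second call produces IDs ending in _CLE, _CAK and _PDX, which a script can look up directly from an airport code instead of relying on row order.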

Summary

ASP.NET 4 enables you to generate much cleaner HTML markup from server controls and from within your Web Forms applications.

In today’s post I covered how you can now easily control the client ID values that are rendered by server controls. In upcoming posts I’ll cover some of the other markup improvements that are also coming with the ASP.NET 4 release.

Hope this helps,

Scott

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

BizTalk Best Practice Analyser is released and available for download.

Download: BizTalkBPA V1.2

As always, another very handy tool is the Message Box Viewer (currently V10), which provides some very detailed information as well.

Download: Message Box Viewer (MBV)

Enjoy your day,

Mick.

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

I’ve been noticing some oddities in how Visual Studio 2010 RC handles Custom ClassificationFormatDefinitions when multiple extensions are interacting together. If it hasn’t been fixed already, then I guess it’s already too late for it to matter, but I still wanted to bring it up just to know if it’s just me that’s been noticing […]

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

It's a common scenario: there is a sudden surge in your business, and the number of transactions increases drastically for a period of time. Modern business solutions are not standalone applications. In a SOA/BPM scenario there will be "n" layers (applications) working together to form a business solution. So, during such a surge, if a single layer (application) malfunctions, it's going to put the whole end-to-end process in trouble.

We were hit with a similar situation recently.

Quick overview of our overall solution:

Our top-level, oversimplified architecture looks like this:

In the above picture, the BPM workflow solution and the Business Service (a composite service calling multiple underlying integration services) were built with Microsoft BizTalk Server. In our case the main problem area is the database and concurrency. Business users basically perform two sets of operations:

1. Validate Applications, and

2. Commit Applications

They scan applications and feed them into the system; the system runs its full set of validation rules and gives the result back. If there are errors, the business users correct them and feed the application back into the system, and they cycle through this loop until all the errors are resolved. Finally, after resolving all the errors, they perform the commit operation. Due to the complexity of the solution, if things go wrong during commit, it ends up as a manual (time-consuming!!) operation to complete the job. It's more efficient if we reduce the amount of manual processing.

During validate cycles the calls to the database are purely read-only, and even at very high volume levels there are no serious issues (also because a lot of information is cached in memory). But the commit calls persist information into the database, and some of the hot tables have a few million records and can't deal with simultaneous requests. The biggest problem is that if the database gets into trouble it affects everybody: as shown in the picture, there are external applications, such as web sites, that rely on the availability of the database. They get affected as well.

Overview of the Business Services architecture

The picture below shows the architecture of our Business Services layer. Its responsibility is to provide a usable, abstract, composite business services layer for the end consumers, so they don't need to understand the interaction logic with the underlying systems. It takes care of things like the order (sequencing) in which the underlying integration services are called, error handling across the various calls, suppressing duplicate errors returned from different calls, and providing a single unified contract to interact with.

Now getting to the technical bits...

The sub-orchestrations are designed mainly for modularity and are called using the "Call Orchestration" shape from the two main orchestrations, "Validate" and "Commit". All the orchestrations are hosted in a single BizTalk host, with one host instance per server (we have 6 BizTalk servers, which host other BizTalk applications as well).

The important thing to note here is that all the orchestrations need to live within a single host due to their tight coupling.

This is how they are tightly coupled:

1. The called sub-orchestrations need to live in the same host as the calling orchestrations ("Validate" and "Commit"), since you can't specify a host for sub-orchestrations (an orchestration started using a Call Orchestration shape runs under the same host/host instance as the calling orchestration), and

2. Both the top-level orchestrations share the sub-orchestrations.

So the only option is to configure and run all the orchestrations in a single host. The other interesting factor here is that the send ports are also not aware of the "Validate"/"Commit" distinction. There is only one send port per endpoint, which handles both validate and commit calls. This is again a constraint created by the way the sub-orchestrations are designed: there is only one logical port, mapped to one physical port, for both the "Validate" and "Commit" operations.

This is not a problem in the majority of cases. But as I mentioned earlier in this post, in our case multiple concurrent "validate" calls are absolutely fine; you can thrash the system with such calls (thanks to improved in-memory cache stores). When it comes to "commit", however, the underlying database suffers seriously under concurrent calls. This just shows the importance of understanding the limitations of the underlying systems, and the importance of non-functional SLA requirements, when designing a SOA/BPM system that relies on diverse applications in the organisation. There is nothing wrong with this application; it was designed for easy maintenance, without operational constraints in mind.

I can think of two different approaches that would have allowed us to separate the "Validate" and "Commit" operations and set different throttling conditions for each:

1. Not reusing the sub-orchestrations, in which case "Validate" and "Commit" can live in two different hosts with completely isolated throttling settings.

2. Not reusing the same send port for both "Validate" and "Commit", which would have let us control it at the send port level by configuring different hosts, with different throttling settings, for the send ports.

BizTalk Server is designed for high throughput, and it's going to push messages to the underlying systems (and databases) as quickly as it can. It does provide throttling: it detects that underlying systems are suffering and tries to hold back. But the throttling kicks in too late for us; by then the system has already gone into an unrecoverable state.

Production environment Constraints

First of all, there are some very restrictive conditions for us to work with:

1. The environment is frozen. The next few days are our core business days of the year and we are not allowed to make changes (serious financial implications!!).

2. Time is against us. We need a working solution ASAP.

3. We don't want to slow down the validate process, as this would keep the business users idle. In fact, a lot of temporary business users are hired for this period.

Understanding the pattern and requirement:

First we identified the pattern under which the underlying database suffers. We need to process roughly 700 applications per hour. The database is very happy, and capable of processing this volume at only 60% resource utilization, if we feed it at a consistent rate (roughly 1 application every 5 seconds). But if we accidentally receive 100 applications concurrently, this brings the database to a standstill and blocks all further calls. Resource utilization will be nearly 100% and it will take a while for the database to recover. By that time, hundreds of applications will already have failed and been routed to manual processing.
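The pacing figures quoted above follow from simple arithmetic, sketched here in Python. The function names are illustrative only, and the calculation assumes the same steady target rate holds while a burst is being spread out.

```python
def steady_interval_seconds(applications_per_hour):
    """Seconds between submissions when the hourly volume is spread evenly."""
    return 3600.0 / applications_per_hour


def drain_time_seconds(backlog, applications_per_hour):
    """Time needed to feed a backlog to the database at the steady rate."""
    return backlog * steady_interval_seconds(applications_per_hour)


# 700 applications per hour works out to one roughly every 5 seconds.
print(round(steady_interval_seconds(700), 2))        # 5.14
# A burst of 100 applications, paced at that rate, takes under 9 minutes.
print(round(drain_time_seconds(100, 700) / 60, 1))   # 8.6
```

In other words, the same 100 applications that stall the database when they arrive together could be absorbed in a few minutes if they were released one at a time.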

Secondly, we should have the ability to stop and start processing commit requests in a very controlled way (as soon as we start seeing issues on the database). The issues might be triggered by other external applications using the same database; for example, some parts of the external web sites hit the database via the integration services.

So, it all comes down to:

1. Parking all the "Commit" (and only "Commit") applications on the Business Services side in the BizTalk MessageBox database, creating a queuing effect. The validate applications should flow through without any hold.

2. Releasing "Commit" applications in a controlled way to the downstream integration services (and into the database), with the ability to start, stop, increase volume, decrease volume, and submit manually, all at runtime with minimal risk.

Finally, the solution:

This is the solution I came up with:

1. Stop the top-level "Commit" orchestration via the BizTalk Administration Console. This solves our first issue of parking the commit applications in the BizTalk Business Services layer. All the commit orchestration instances will be suspended in a resumable state and reside in the BizTalk MessageBox.

2. Use BizTalk's ability to resume the suspended instances. The key thing to note here is that you don't need to start your orchestration to resume suspended (resumable) instances. You only need to make sure the orchestration is in the enlisted state.

This solves the issue of being able to submit commit applications manually in a controlled way. But there are quite a few challenges here:

1. It's a daunting task to sit and do this job manually for hours.

2. There is a high possibility of human error. Note that in the above picture you are only one click away from terminating the instances.

3. There is a possibility of one more human error: you might resume a completely wrong instance in the wrong tab.

Bear in mind, it's a production environment.

Tool to resume suspended (resumable) instances using WMI

All I had to do now was automate the manual task of resuming suspended orchestration instances in a controlled way. I knocked this tool together in a couple of hours using BizTalk Server's WMI support for interacting with the BizTalk environment.

It's a very simple tool. This is how it works:

1. You select the required orchestration and press refresh. It shows how many instances are waiting in the suspended-resumable state (for this particular orchestration).

2. Then you configure how many instances to submit each time (e.g. Number of Instances to submit = 1).

3. Then you decide whether to submit manually (by selecting the "Manual" option and clicking "Resume Instances"), or

4. You can configure it to submit at periodic intervals, e.g. 1 every 3 seconds (by selecting the "Timed" option, configuring the time in milliseconds and clicking "Resume Instances").

5. Once you have started a timed submission, instances are submitted at periodic intervals, and you can stop them at any time by clicking "Stop Timer".
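The control flow of such a tool can be sketched as follows, in Python. This is not the tool's actual code, which is not shown in the post: `ReleaseController` and its parameters are hypothetical names, and the `resume` callback stands in for whatever call (WMI in the author's case) actually resumes one suspended instance.

```python
import time
from collections import deque


class ReleaseController:
    """Releases queued instance IDs in small batches, manually or on a timer."""

    def __init__(self, suspended_ids, resume, batch_size=1,
                 interval_seconds=3.0, sleep=time.sleep):
        self.queue = deque(suspended_ids)   # instances waiting to be resumed
        self.resume = resume                # hypothetical: resumes one instance
        self.batch_size = batch_size
        self.interval_seconds = interval_seconds
        self.sleep = sleep                  # injectable so tests need not wait

    def pending(self):
        """Number of instances still parked (the 'refresh' count)."""
        return len(self.queue)

    def release_batch(self):
        """'Manual' mode: resume up to batch_size instances once."""
        released = []
        while self.queue and len(released) < self.batch_size:
            instance_id = self.queue.popleft()
            self.resume(instance_id)
            released.append(instance_id)
        return released

    def run_timed(self, should_stop=lambda: False):
        """'Timed' mode: release a batch per interval until empty or stopped."""
        released = 0
        while self.queue and not should_stop():
            released += len(self.release_batch())
            if self.queue:
                self.sleep(self.interval_seconds)
        return released
```

With `batch_size=1` and `interval_seconds=3.0` this mirrors the "1 every 3 seconds" setting, and the `should_stop` callable plays the role of the "Stop Timer" button.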

I'm certainly not recommending this as a long-term solution, but it helped us get out of the situation. This solution requires constant human monitoring, which is OK for our peak window (a few days) but not sustainable throughout the year. I do believe something similar needs to be built into the product to support scenarios like this, which look very common for businesses.

Difficulties in understanding BizTalk Throttling mechanism:

Anyone who has worked with BizTalk long enough will agree that throttling the BizTalk environment is not for the faint-hearted, simply because of the sheer number of tuning parameters BizTalk exposes, and because not every setting is clear to everyone. Here are a few of the parameters you can tune:

1. Registry settings to alter min/max IO and worker threads for host instances (you need to do this on each machine).

2. Host throttling settings for each host, which affect all the host instances of that host. You will see a few options for threads here.

3. The adm_ServiceClass table in the BizTalk management database, where you configure the polling interval and threads for EPM, XLANG etc., which affects the whole environment.

4. Individual adapter settings, which also have an effect to a certain extent. Example: the polling interval in the SQL and MQSeries adapters.

After talking to many people, it's very clear to me that they simply try various combinations and finally settle on one that works for their requirement. Most of the time they don't really know the implications, which is bad.

This complexity also creates a constraint: it's nearly impractical to tune these settings in production if your business requirements change every day. Example: in our case, we know the volume we are going to process at the beginning of each day (when all applications are received in the post). So we need to set up our system for that daily volume. If it's 500 applications, we don't need to do anything; the system will cope automatically. If it's 3000 applications, we need to modify certain settings. BizTalk Server is a self-tuning engine and caters automatically for varying situations the majority of the time. But the problem is that BizTalk can't be aware of the capabilities of all its underlying systems!!

Throttling based on system resources or functional requirements?

BizTalk has a very good throttling mechanism (I'm only saying it's a bit difficult to understand 🙂), but the throttling is based purely on the system resources (for example CPU utilization, memory utilization, thread allocation, DB connections, spool depth, etc.) of the BizTalk environment. We need to keep in mind that BizTalk is a middleware product and depends heavily on the performance and ability of the external systems to work efficiently.

BizTalk does of course provide message publishing throttling, so it slows down automatically when it sees that the underlying system is suffering and its host queue length is building up. But it's a system decision based on parameters. In some scenarios (like ours) it's already too late by the time the system starts throttling.

While researching a solution to this problem, I noticed a lot of people in the same boat. They were trying to slow down the BizTalk environment to cope with the capabilities of the underlying systems in various ways: building a controller orchestration, adjusting the MaxWorkerThreads setting to control the number of running orchestrations, enabling ordered delivery on the send port, etc.

Suggestion to BizTalk Server Product Team:

At the moment, with the current version of BizTalk (2009 R2 or 2010!!), there is no separate mechanism for message processing throttling between orchestrations (business processes) and other subscribers (e.g. send ports). In some scenarios business processes may be driven by various external factors and need to be controlled in a much more fine-grained way. Also, as explained in this post, there may be a requirement to change the throttling behaviour in production, at runtime, quite frequently and for varying reasons. Here I'm going to put forward my fictitious idea 🙂

On the Orchestration Properties window, add a new tab called "Controlled", which may look like this:

All the instances that are waiting to be processed can have a status such as "Ready to Run (Controlled)". Administrators will still have the ability to terminate the instances if they are not required.

The context menu can be extended a bit so that the administrator can easily start/stop processing in a controlled or normal way.

With this approach we don't need to restart host instances; the configuration changes should take effect on the fly.

Nandri

-Saravana

by community-syndication | Mar 30, 2010 | BizTalk Community Blogs via Syndication

BizTalk and SSO were running smoothly before I installed VS2010 on my machine. I have a 64-bit machine with Windows Server 2008 R2 and BTS 2009 installed. When I installed VS2010 my SSO service was stopped, and when I tried to start it I got the error below --------------------------- Services --------------------------- Windows could not start […]

by community-syndication | Mar 29, 2010 | BizTalk Community Blogs via Syndication

OrchProfiler has now been updated to support 64-bit systems. Actually, this has been available for quite some time in the Planned releases tab of the CodePlex space, but I’ve taken the opportunity to give it a quick test drive, update the version numbers etc. and make the new release available.

To use this, you […]

by community-syndication | Mar 29, 2010 | BizTalk Community Blogs via Syndication

The other day this landed in my inbox. Being on the TAP program and posting various pieces of feedback, I’ve been updated that BizTalk 2010 is the only name to remember. It keeps in line with VSNET2010 etc., so anything with a 2010 after its name *should* work with each other: SharePoint 2010 etc.

So far I’ve been playing with the early bits and I’m liking what I’m seeing: copy and paste functoids in a map!!! (For those of you who don’t know the pain... it’s pain, let me tell you.)

So here’s the official blurb.

Well done BizTalk Team! Working hard!

——————————————

BizTalk Server 2010 Name Change Q&A

Q: Why was the original name for the release BizTalk Server 2009 R2?

BizTalk Server 2009 R2 was planned to be a focused release to deliver support for Windows Server 2008 R2, SQL Server 2008 R2 and Visual Studio 2010. Aligning BizTalk releases to core server platform releases is very important for our customers. Hence our original plan was to name the release as BizTalk Server 2009 R2.

Q: Why did Microsoft decide to change the name for BizTalk Server 2009 R2 to BizTalk Server 2010?

Over the past year we got a lot of feedback from our key customers and decided to incorporate a few key asks from our customers in this release. Based on the customer value we are delivering and the positive feedback we are getting from our early adopter customers, we feel the release has transitioned from a minor release (BizTalk Server 2009 R2) to a major release (BizTalk Server 2010).

Following is a list of key capabilities we have added to the release:

1. Enhanced trading partner management that will enable our customers to manage complex B2B relationships with ease

2. Increase productivity through enhanced BizTalk Mapper. These enhancements are critical in increasing productivity in both EAI and B2B solutions, and a favorite feature of our customers.

3. Enable secure data transfer across business partners with FTPS adapter

4. Updated adapters for SAP 7, Oracle eBusiness Suite 12.1, SharePoint 2010 and SQL Server 2008 R2

5. Improved and simplified management with updated System Center management pack

6. Simplified management through single dashboard which enables IT Pros to backup and restore BizTalk configuration

7. Enhanced performance tuning capabilities at Host and Host Instance level

8. Continued innovation in RFID space with out of box event filtering and delivery of RFID events

Q: Is there any additional benefit to customers with the name change to BizTalk Server 2010?

In addition to all the great value the release provides, customers will benefit from the support window being reset to 10 years (5 years mainstream and 5 years extended support). This highlights Microsoft’s long-term commitment to the BizTalk Server product.